GLTF and GLB format basics, part 2

This article continues the overview of the basics of the GLTF and GLB formats. The first part covered why the format was created, as well as the building blocks of a GLTF file and their attributes: Scene, Node, Buffer, BufferView, Accessor and Mesh. In this part, we look at Material, Texture, Animations, Skin and Camera, and finish building a minimal valid GLTF file.

Material and Texture

Materials and textures are inextricably linked to the mesh. If necessary, the mesh can be animated. The material stores information about how the model will be rendered by the engine. GLTF defines materials using a common set of parameters based on Physically Based Rendering (PBR). The PBR model produces a “physically correct” appearance of an object under different lighting conditions, because the shading model works with the “physical” properties of the surface. There are several ways to describe PBR. The most common is the metallic-roughness model, which GLTF uses by default. You can also use the specular-glossiness model, but only through a separate extension. The main attributes of the material are as follows:

- name - the name of the material.

- baseColorFactor / baseColorTexture - stores color information. The Factor attribute holds the color as numeric RGBA values; the Texture attribute holds a reference to an entry in the textures array.

- metallicFactor - stores Metallic information

- roughnessFactor - stores Roughness information

- doubleSided - true or false (the default); indicates whether the mesh is rendered on both sides or only on the front side.

    "materials": [
        {
            "pbrMetallicRoughness": {
                "baseColorTexture": { "index": 0 },
                "metallicFactor": 0.0,
                "roughnessFactor": 0.800000011920929
            },
            "name": "Nightshade_MAT",
            "doubleSided": true
        }
    ],

Metallic, or “metalness”. This parameter describes how closely the reflectivity of the surface resembles that of a real metal, i.e. how much light is reflected from the surface. The value ranges from 0 to 1, where 0 is a dielectric and 1 is a pure metal.

Roughness. This attribute indicates how “rough” the surface is, which affects how light scatters from it. The value ranges from 0 to 1, where 0 is a perfectly smooth surface and 1 is a completely rough surface with blurred, dull reflections.
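The attributes above are all optional, so a loader has to fall back to defaults when they are missing. Below is a minimal sketch in Python of how that could look. The function name resolve_material is hypothetical and not part of any library; the default values (white base color, metallic 1, roughness 1, single-sided) are the ones the GLTF 2.0 specification assigns.

```python
import json

def resolve_material(material: dict) -> dict:
    """Return the material's metallic-roughness parameters with defaults filled in.

    Hypothetical helper for illustration; defaults follow the GLTF 2.0 spec.
    """
    pbr = material.get("pbrMetallicRoughness", {})
    return {
        "name": material.get("name"),
        # RGBA factor; defaults to opaque white.
        "baseColorFactor": pbr.get("baseColorFactor", [1.0, 1.0, 1.0, 1.0]),
        # Index into the top-level textures array, or None if no texture is set.
        "baseColorTexture": pbr.get("baseColorTexture", {}).get("index"),
        "metallicFactor": pbr.get("metallicFactor", 1.0),
        "roughnessFactor": pbr.get("roughnessFactor", 1.0),
        "doubleSided": material.get("doubleSided", False),
    }

# The material from this article, parsed from JSON.
example = json.loads("""
{
    "pbrMetallicRoughness": {
        "baseColorTexture": { "index": 0 },
        "metallicFactor": 0.0,
        "roughnessFactor": 0.800000011920929
    },
    "name": "Nightshade_MAT",
    "doubleSided": true
}
""")

resolved = resolve_material(example)
print(resolved)
```

Note that an empty material is still valid: resolve_material({}) describes a white, fully metallic, fully rough, single-sided surface.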

Texture is an object that stores texture maps. These maps make the model look realistic. They define the appearance of the model and give it properties such as metalness, roughness, natural darkening from the environment (ambient occlusion), and even glow (emission). Textures are described by three top-level arrays: textures, samplers and images. Each entry in textures uses indexes to refer to a sampler and an image. The most important object is the image, since it stores the location of the map; a texture refers to it through the source attribute. The picture may be an external file (for example, "uri": "duckCM.png") or embedded in the GLTF through a buffer ("bufferView": 14, "mimeType": "image/jpeg"). A sampler is an object that defines the filtering and wrapping parameters, which correspond to GL types.
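The chain texture, then source, then image can be followed with a few dictionary lookups. The sketch below is only an illustration: locate_image is a hypothetical helper, and the two small documents are made up from the attribute values quoted above. It assumes every texture has a source, which the format does not strictly require.

```python
def locate_image(gltf: dict, texture_index: int) -> dict:
    """Follow textures[i].source to the image and report where its data lives.

    Hypothetical helper; assumes the texture defines "source".
    """
    texture = gltf["textures"][texture_index]
    image = gltf["images"][texture["source"]]
    if "uri" in image:
        # External file (or data URI) referenced by the image.
        return {"kind": "uri", "uri": image["uri"]}
    # Otherwise the image bytes sit in a buffer and are read via a bufferView.
    return {
        "kind": "bufferView",
        "bufferView": image["bufferView"],
        "mimeType": image["mimeType"],
    }

# Image stored as a separate file next to the .gltf.
external = {
    "textures": [{"sampler": 0, "source": 0}],
    "images": [{"uri": "duckCM.png"}],
}

# Image embedded in a buffer.
embedded = {
    "textures": [{"sampler": 0, "source": 0}],
    "images": [{"bufferView": 1, "mimeType": "image/jpeg"}],
}

print(locate_image(external, 0))
print(locate_image(embedded, 0))
```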

Our triangle example has no textures, so here is JSON from another model I worked with. In that file the textures were written to the buffer, so they are read from the buffer through a BufferView:

    "textures": [
        { "sampler": 0, "source": 0 }
    ],
    "images": [
        { "bufferView": 1, "mimeType": "image/jpeg" }
    ],

Animations

GLTF supports articulated, skinned and morph target animations using key frames. The key frame data is stored in buffers, and animations refer to it through accessors. GLTF 2.0 defines only how animations are stored; it does not define any run-time behavior such as playback order, autostart, loops or timeline mapping. All animations are stored in the animations array. Each animation is defined as a set of channels (the channels attribute) and a set of samplers (the samplers attribute), which specify the accessors holding the key frame data and the interpolation method.

The main attributes of the Animations object are as follows:

- name - the name of the animation (if any)

- channels - an array that connects the output values of the animation key frames to a specific node in the hierarchy.

- sampler - an attribute of a channel: the index of the entry in the samplers array of the animation that supplies the key frame data.

- target - an object that determines which node (Node object) is animated, using the node attribute, and which property of that node is animated, using the path attribute: translation, rotation, scale or weights. Properties that are not animated keep their values during the animation. If node is not defined, the channel should be ignored.

- samplers - defines input and output pairs: input is a set of scalar floating-point values representing linear time in seconds, and output is the set of values of the animated property at those times. All values (input / output) are stored in a buffer and accessed via accessors. The interpolation attribute sets how values are interpolated between keys: LINEAR (the default), STEP or CUBICSPLINE.
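To make the input / output pairing concrete, here is a small sketch of how a player might evaluate a sampler with LINEAR interpolation. The function sample_linear and the key frame values are made up for illustration; in a real file both lists would be read from the buffer through the accessors named in input and output. The sketch covers vector properties such as translation and scale only; rotations are quaternions and need spherical interpolation instead.

```python
from bisect import bisect_right

def sample_linear(times, values, t):
    """Evaluate key frames at time t (seconds) with linear interpolation.

    times  - sorted key frame times (the sampler's "input")
    values - one tuple per key frame (the sampler's "output")
    Times outside the key frame range are clamped to the first/last key.
    """
    if t <= times[0]:
        return tuple(values[0])
    if t >= times[-1]:
        return tuple(values[-1])
    # Index of the key frame at or just before t.
    i = bisect_right(times, t) - 1
    t0, t1 = times[i], times[i + 1]
    u = (t - t0) / (t1 - t0)  # 0 at the previous key, 1 at the next key
    return tuple(a + u * (b - a) for a, b in zip(values[i], values[i + 1]))

# Hypothetical translation track: three key frames one second apart.
key_times = [0.0, 1.0, 2.0]
key_translations = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (2.0, 4.0, 0.0)]

print(sample_linear(key_times, key_translations, 0.5))  # halfway to the second key
print(sample_linear(key_times, key_translations, 1.5))  # halfway to the third key
```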

The simplest GLTF file has no animations, so this example is taken from another file:

    "animations": [
        {
            "name": "Animate all properties of one node with different samplers",
            "channels": [
                { "sampler": 0, "target": { "node": 1, "path": "rotation" } },
                { "sampler": 1, "target": { "node": 1, "path": "scale" } },
                { "sampler": 2, "target": { "node": 1, "path": "translation" } }
            ],
            "samplers": [
                { "input": 4, "interpolation": "LINEAR", "output": 5 },
                { "input": 4, "interpolation": "LINEAR", "output": 6 },
                { "input": 4, "interpolation": "LINEAR", "output": 7 }
            ]
        }
    ]

Skin

Skinning information, also known as bone animation, is stored in the skins array. Each skin is defined by the inverseBindMatrices attribute, which refers to an accessor with the inverse bind matrix (IBM) data used to bring coordinates into the same space as each joint, and by the joints array, which lists the indices of the nodes used as joints to animate the skin. The order of the joints is defined in the skin.joints array and must match the order of the inverseBindMatrices data. The skeleton attribute points to a Node object that is the common root of the joint hierarchy, or a direct or indirect parent of the common root.

An example of using the skin object (not in the example with a triangle):

    "skins": [
        {
            "name": "skin_0",
            "inverseBindMatrices": 0,
            "joints": [ 1, 2 ],
            "skeleton": 1
        }
    ]

Key attributes:

- name - the name of the skin

- inverseBindMatrices - the index of the accessor that stores the inverse bind matrices

- joints - an array of indices of the nodes used as joints

- skeleton - the index of the node used as the "root" joint, from which the skeleton of the model begins
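Because joints and inverseBindMatrices must line up, it can be useful to check a skin before using it. The following sketch shows one way to do that. The function check_skin and the small test document are hypothetical; the rules it checks (joints are valid node indices, the accessor has type MAT4 and holds at least one matrix per joint) follow the GLTF 2.0 specification.

```python
def check_skin(gltf: dict, skin_index: int) -> list:
    """Return a list of human-readable problems found in one skin (empty if none).

    Hypothetical helper for illustration, not a full validator.
    """
    problems = []
    skin = gltf["skins"][skin_index]
    joints = skin["joints"]
    node_count = len(gltf.get("nodes", []))

    # Every joint must be the index of an existing node.
    for joint in joints:
        if not 0 <= joint < node_count:
            problems.append(f"joint {joint} is not a valid node index")

    # inverseBindMatrices is optional; when present it must provide
    # one 4x4 matrix per joint, in the same order as skin.joints.
    if "inverseBindMatrices" in skin:
        accessor = gltf["accessors"][skin["inverseBindMatrices"]]
        if accessor["type"] != "MAT4":
            problems.append("inverseBindMatrices accessor is not MAT4")
        if accessor["count"] < len(joints):
            problems.append("fewer inverse bind matrices than joints")

    return problems

# Made-up document around the skin from this article: three nodes, two joints.
doc = {
    "nodes": [{"skin": 0, "mesh": 0}, {"children": [2]}, {}],
    "accessors": [{"type": "MAT4", "componentType": 5126, "count": 2}],
    "skins": [{"name": "skin_0", "inverseBindMatrices": 0,
               "joints": [1, 2], "skeleton": 1}],
}

print(check_skin(doc, 0))  # an empty list means no problems were found
```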

Camera

The camera defines the projection matrix, which transforms coordinates from view space to clip space. Put more simply, cameras define the view (viewing angle, direction of “sight”, etc.) that the user sees when the model is loaded.

The projection can be perspective or orthographic. Cameras are attached to Node objects and can therefore have transformations. The camera is defined so that the local +X axis points to the right, the lens looks in the direction of the local -Z axis, and the top of the camera is aligned with the local +Y axis. If no transformation is specified, the camera is at the origin. Cameras are stored in the cameras array. Each of them defines a type attribute, which designates the type of projection (perspective or orthographic), and a perspective or orthographic attribute, named after the type, which holds the detailed parameters. Depending on whether the zfar attribute is present, cameras of the “perspective” type use a finite or an infinite projection.

An example of a perspective camera in JSON (not part of the minimal valid GLTF file with the triangle):

    "cameras": [
        {
            "name": "Infinite perspective camera",
            "type": "perspective",
            "perspective": {
                "aspectRatio": 1.5,
                "yfov": 0.660593,
                "znear": 0.01
            }
        }
    ]

The main attributes of the Camera object:

- name - the name of the camera

- type - camera type, perspective or orthographic.

- perspective / orthographic - an attribute containing the details of the corresponding type value

- aspectRatio - the aspect ratio (width to height) of the field of view

- yfov - the angle of the vertical field of view (fov) in radians

- zfar - distance to the far clipping plane

- znear - distance to the near clipping plane

- extras - application specific data
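The perspective attributes map directly onto a projection matrix. The sketch below builds it the way the GLTF 2.0 specification describes, switching between the finite and infinite form depending on zfar. The function name perspective_matrix is hypothetical, the matrix is returned as a list of rows, and aspectRatio is treated as required here, although in a real file it may be omitted and taken from the viewport.

```python
import math

def perspective_matrix(yfov, znear, aspect_ratio, zfar=None):
    """Build a 4x4 perspective projection matrix as a list of rows.

    yfov is the vertical field of view in radians. When zfar is None,
    the infinite projection is used, as for a camera without "zfar".
    """
    f = 1.0 / math.tan(0.5 * yfov)
    if zfar is None:
        # Infinite projection: the far plane is pushed to infinity.
        m22 = -1.0
        m23 = -2.0 * znear
    else:
        # Finite projection between the near and far clipping planes.
        m22 = (zfar + znear) / (znear - zfar)
        m23 = 2.0 * zfar * znear / (znear - zfar)
    return [
        [f / aspect_ratio, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, m22, m23],
        [0.0, 0.0, -1.0, 0.0],
    ]

# The camera from this article has no zfar, so the projection is infinite.
infinite = perspective_matrix(yfov=0.660593, znear=0.01, aspect_ratio=1.5)

# The same camera with a far plane at 100 units (made-up value).
finite = perspective_matrix(yfov=0.660593, znear=0.01, aspect_ratio=1.5, zfar=100.0)

for row in infinite:
    print(row)
```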

Minimum valid GLTF file

At the beginning of the article I wrote that we would assemble a minimal GLTF file containing one triangle. The JSON with an embedded buffer is given below. Just copy it into a text file and change the file extension to .gltf. To view the 3D asset in the file, you can use any viewer that supports GLTF.

    {
        "scenes": [ { "nodes": [ 0 ] } ],
        "nodes": [ { "mesh": 0 } ],
        "meshes": [
            {
                "primitives": [
                    { "attributes": { "POSITION": 1 }, "indices": 0 }
                ]
            }
        ],
        "buffers": [
            {
                "uri": "data:application/octet-stream;base64,AAABAAIAAAAAAAAAAAAAAAAAAAAAAIA/AAAAAAAAAAAAAAAAAACAPwAAAAA=",
                "byteLength": 44
            }
        ],
        "bufferViews": [
            { "buffer": 0, "byteOffset": 0, "byteLength": 6, "target": 34963 },
            { "buffer": 0, "byteOffset": 8, "byteLength": 36, "target": 34962 }
        ],
        "accessors": [
            {
                "bufferView": 0,
                "byteOffset": 0,
                "componentType": 5123,
                "count": 3,
                "type": "SCALAR",
                "max": [ 2 ],
                "min": [ 0 ]
            },
            {
                "bufferView": 1,
                "byteOffset": 0,
                "componentType": 5126,
                "count": 3,
                "type": "VEC3",
                "max": [ 1.0, 1.0, 0.0 ],
                "min": [ 0.0, 0.0, 0.0 ]
            }
        ],
        "asset": { "version": "2.0" }
    }

What is the result?

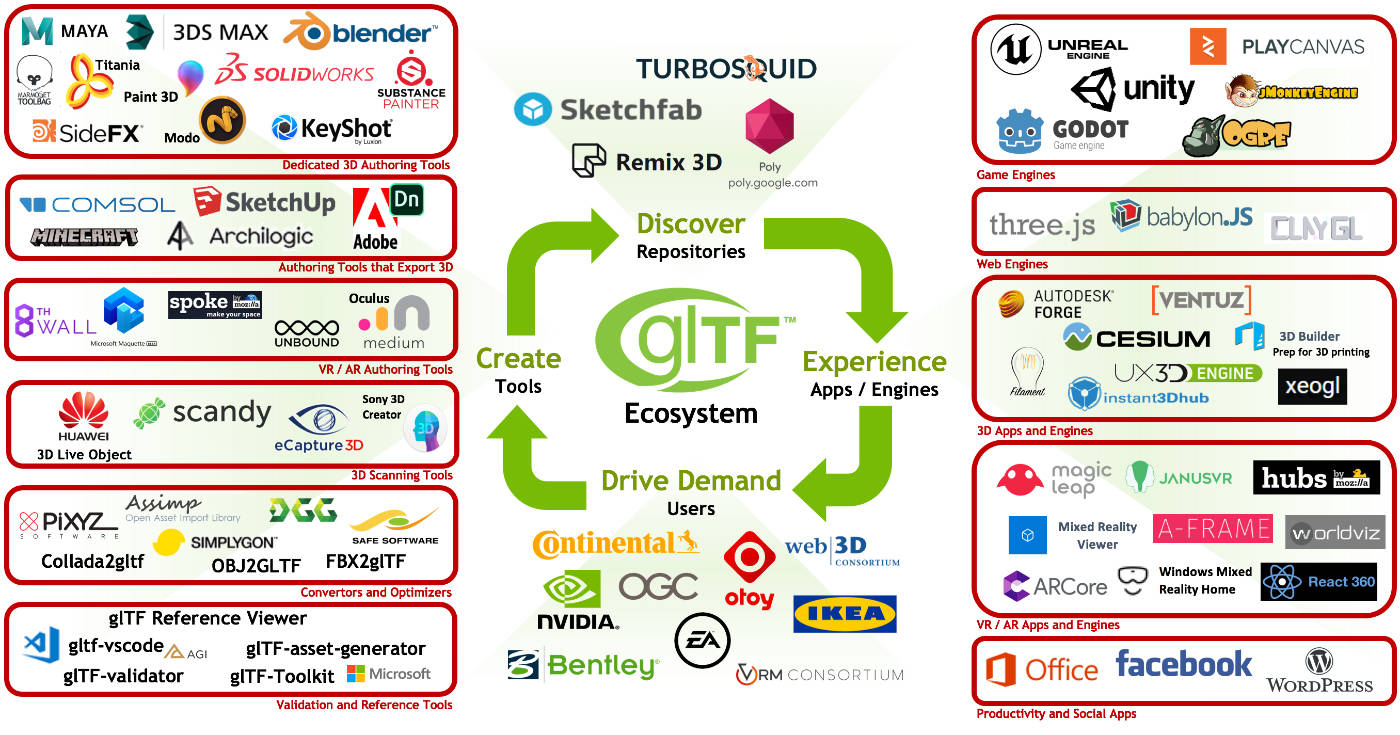
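To see where the triangle comes from, the embedded buffer of the file can be decoded by hand. The sketch below is one way to do it with the Python standard library. The offsets and lengths are copied from the bufferViews of the file: three unsigned 16-bit indices (componentType 5123) at byte 0, and three VEC3 positions of 32-bit floats (componentType 5126) at byte 8. The variable names are my own.

```python
import base64
import struct

# The data URI from the "buffers" entry of the minimal file.
URI = ("data:application/octet-stream;base64,"
       "AAABAAIAAAAAAAAAAAAAAAAAAAAAAIA/AAAAAAAAAAAAAAAAAACAPwAAAAA=")

# Everything after the first comma is the base64 payload.
data = base64.b64decode(URI.split(",", 1)[1])

# bufferView 0: byteOffset 0, three little-endian unsigned shorts.
indices = struct.unpack_from("<3H", data, 0)

# bufferView 1: byteOffset 8, nine little-endian floats = three XYZ positions.
floats = struct.unpack_from("<9f", data, 8)
positions = [floats[i:i + 3] for i in range(0, 9, 3)]

print(len(data))   # 44, matching "byteLength" of the buffer
print(indices)     # (0, 1, 2)
print(positions)   # [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
```

The two bytes between the index data and the positions are padding, so that the float data starts at an offset that is a multiple of 4. The decoded positions also agree with the min and max values declared in the second accessor.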

In conclusion, I want to note the growing popularity of the GLTF and GLB formats: many companies are already actively using them, and others are moving in that direction. The ease of using the format on Facebook (3D posts and, more recently, 3D Photos), the active use of GLB in Oculus Home, and a number of innovations announced at GDC 2019 all contribute greatly to its popularization. Light weight, fast rendering, ease of use, backing by the Khronos Group and standardization of the format are the main advantages that, I am sure, will eventually do their work in promoting it further!


Source: https://habr.com/ru/post/448298/
