Some aspects of monitoring MS SQL Server. Recommendations for setting trace flags

Foreword

Quite often, users, developers and administrators of the MS SQL Server DBMS face problems with the performance of the DB or the DBMS as a whole, therefore monitoring of MS SQL Server is very relevant.

This article is an addition to the article Using Zabbix to monitor the MS SQL Server database and it will cover some aspects of MS SQL Server monitoring, in particular: how to quickly determine what resources are missing, as well as recommendations on setting trace flags.

For the following scripts to work, you need to create an inf scheme in the correct database as follows:

')

Creating inf schema

use <_>; go create schema inf; Method of detecting a shortage of RAM

The first indicator of a shortage of RAM is the case when an instance of MS SQL Server eats up all the RAM allocated to it.

To do this, create the following inf.vRAM view:

Creating the inf.vRAM view

CREATE view [inf].[vRAM] as select a.[TotalAvailOSRam_Mb] -- , a.[RAM_Avail_Percent] -- , a.[Server_physical_memory_Mb] -- , a.[SQL_server_committed_target_Mb] -- MS SQL Server , a.[SQL_server_physical_memory_in_use_Mb] -- MS SQL Server , a.[SQL_RAM_Avail_Percent] -- MS SQL Server MS SQL Server , a.[StateMemorySQL] -- MS SQL Server , a.[SQL_RAM_Reserve_Percent] -- MS SQL Server -- , (case when a.[RAM_Avail_Percent]<10 and a.[RAM_Avail_Percent]>5 and a.[TotalAvailOSRam_Mb]<8192 then 'Warning' when a.[RAM_Avail_Percent]<=5 and a.[TotalAvailOSRam_Mb]<2048 then 'Danger' else 'Normal' end) as [StateMemoryServer] from ( select cast(a0.available_physical_memory_kb/1024.0 as int) as TotalAvailOSRam_Mb , cast((a0.available_physical_memory_kb/casT(a0.total_physical_memory_kb as float))*100 as numeric(5,2)) as [RAM_Avail_Percent] , a0.system_low_memory_signal_state , ceiling(b.physical_memory_kb/1024.0) as [Server_physical_memory_Mb] , ceiling(b.committed_target_kb/1024.0) as [SQL_server_committed_target_Mb] , ceiling(a.physical_memory_in_use_kb/1024.0) as [SQL_server_physical_memory_in_use_Mb] , cast(((b.committed_target_kb-a.physical_memory_in_use_kb)/casT(b.committed_target_kb as float))*100 as numeric(5,2)) as [SQL_RAM_Avail_Percent] , cast((b.committed_target_kb/casT(a0.total_physical_memory_kb as float))*100 as numeric(5,2)) as [SQL_RAM_Reserve_Percent] , (case when (ceiling(b.committed_target_kb/1024.0)-1024)<ceiling(a.physical_memory_in_use_kb/1024.0) then 'Warning' else 'Normal' end) as [StateMemorySQL] from sys.dm_os_sys_memory as a0 cross join sys.dm_os_process_memory as a cross join sys.dm_os_sys_info as b cross join sys.dm_os_sys_memory as v ) as a; Then you can determine that the MS SQL Server instance consumes all the memory allocated to it by the following query:

select SQL_server_physical_memory_in_use_Mb, SQL_server_committed_target_Mb from [inf].[vRAM]; If the SQL_server_physical_memory_in_use_Mb indicator is constantly not less than SQL_server_committed_target_Mb, then it is necessary to check the statistics of expectations.

To determine the lack of RAM through the statistics of expectations, create an inf.vWaits view:

Creating the inf.vWaits view

CREATE view [inf].[vWaits] as WITH [Waits] AS (SELECT [wait_type], -- [wait_time_ms] / 1000.0 AS [WaitS],-- . signal_wait_time_ms ([wait_time_ms] - [signal_wait_time_ms]) / 1000.0 AS [ResourceS],-- signal_wait_time_ms [signal_wait_time_ms] / 1000.0 AS [SignalS],-- [waiting_tasks_count] AS [WaitCount],-- . 100.0 * [wait_time_ms] / SUM ([wait_time_ms]) OVER() AS [Percentage], ROW_NUMBER() OVER(ORDER BY [wait_time_ms] DESC) AS [RowNum] FROM sys.dm_os_wait_stats WHERE [waiting_tasks_count]>0 and [wait_type] NOT IN ( N'BROKER_EVENTHANDLER', N'BROKER_RECEIVE_WAITFOR', N'BROKER_TASK_STOP', N'BROKER_TO_FLUSH', N'BROKER_TRANSMITTER', N'CHECKPOINT_QUEUE', N'CHKPT', N'CLR_AUTO_EVENT', N'CLR_MANUAL_EVENT', N'CLR_SEMAPHORE', N'DBMIRROR_DBM_EVENT', N'DBMIRROR_EVENTS_QUEUE', N'DBMIRROR_WORKER_QUEUE', N'DBMIRRORING_CMD', N'DIRTY_PAGE_POLL', N'DISPATCHER_QUEUE_SEMAPHORE', N'EXECSYNC', N'FSAGENT', N'FT_IFTS_SCHEDULER_IDLE_WAIT', N'FT_IFTSHC_MUTEX', N'HADR_CLUSAPI_CALL', N'HADR_FILESTREAM_IOMGR_IOCOMPLETION', N'HADR_LOGCAPTURE_WAIT', N'HADR_NOTIFICATION_DEQUEUE', N'HADR_TIMER_TASK', N'HADR_WORK_QUEUE', N'KSOURCE_WAKEUP', N'LAZYWRITER_SLEEP', N'LOGMGR_QUEUE', N'ONDEMAND_TASK_QUEUE', N'PWAIT_ALL_COMPONENTS_INITIALIZED', N'QDS_PERSIST_TASK_MAIN_LOOP_SLEEP', N'QDS_CLEANUP_STALE_QUERIES_TASK_MAIN_LOOP_SLEEP', N'REQUEST_FOR_DEADLOCK_SEARCH', N'RESOURCE_QUEUE', N'SERVER_IDLE_CHECK', N'SLEEP_BPOOL_FLUSH', N'SLEEP_DBSTARTUP', N'SLEEP_DCOMSTARTUP', N'SLEEP_MASTERDBREADY', N'SLEEP_MASTERMDREADY', N'SLEEP_MASTERUPGRADED', N'SLEEP_MSDBSTARTUP', N'SLEEP_SYSTEMTASK', N'SLEEP_TASK', N'SLEEP_TEMPDBSTARTUP', N'SNI_HTTP_ACCEPT', N'SP_SERVER_DIAGNOSTICS_SLEEP', N'SQLTRACE_BUFFER_FLUSH', N'SQLTRACE_INCREMENTAL_FLUSH_SLEEP', N'SQLTRACE_WAIT_ENTRIES', N'WAIT_FOR_RESULTS', N'WAITFOR', N'WAITFOR_TASKSHUTDOWN', N'WAIT_XTP_HOST_WAIT', N'WAIT_XTP_OFFLINE_CKPT_NEW_LOG', N'WAIT_XTP_CKPT_CLOSE', N'XE_DISPATCHER_JOIN', N'XE_DISPATCHER_WAIT', N'XE_TIMER_EVENT') ) , ress as ( SELECT [W1].[wait_type] AS [WaitType], CAST ([W1].[WaitS] AS DECIMAL (16, 2)) AS [Wait_S],-- . signal_wait_time_ms CAST ([W1].[ResourceS] AS DECIMAL (16, 2)) AS [Resource_S],-- signal_wait_time_ms CAST ([W1].[SignalS] AS DECIMAL (16, 2)) AS [Signal_S],-- [W1].[WaitCount] AS [WaitCount],-- . CAST ([W1].[Percentage] AS DECIMAL (5, 2)) AS [Percentage], CAST (([W1].[WaitS] / [W1].[WaitCount]) AS DECIMAL (16, 4)) AS [AvgWait_S], CAST (([W1].[ResourceS] / [W1].[WaitCount]) AS DECIMAL (16, 4)) AS [AvgRes_S], CAST (([W1].[SignalS] / [W1].[WaitCount]) AS DECIMAL (16, 4)) AS [AvgSig_S] FROM [Waits] AS [W1] INNER JOIN [Waits] AS [W2] ON [W2].[RowNum] <= [W1].[RowNum] GROUP BY [W1].[RowNum], [W1].[wait_type], [W1].[WaitS], [W1].[ResourceS], [W1].[SignalS], [W1].[WaitCount], [W1].[Percentage] HAVING SUM ([W2].[Percentage]) - [W1].[Percentage] < 95 -- percentage threshold ) SELECT [WaitType] ,MAX([Wait_S]) as [Wait_S] ,MAX([Resource_S]) as [Resource_S] ,MAX([Signal_S]) as [Signal_S] ,MAX([WaitCount]) as [WaitCount] ,MAX([Percentage]) as [Percentage] ,MAX([AvgWait_S]) as [AvgWait_S] ,MAX([AvgRes_S]) as [AvgRes_S] ,MAX([AvgSig_S]) as [AvgSig_S] FROM ress group by [WaitType]; In this case, you can determine the lack of RAM by the following query:

SELECT [Percentage] ,[AvgWait_S] FROM [inf].[vWaits] where [WaitType] in ( 'PAGEIOLATCH_XX', 'RESOURCE_SEMAPHORE', 'RESOURCE_SEMAPHORE_QUERY_COMPILE' ); Here you need to pay attention to the indicators Percentage and AvgWait_S. If they are significant in their entirety, then there is a very high probability that the RAM is not enough for the MS SQL Server instance. Essential values are determined individually for each system. However, you can start with the following indicator: Percentage> = 1 and AvgWait_S> = 0.005.

To output indicators to the monitoring system (for example, Zabbix), you can create the following two queries:

- How many percent of RAM expectations are occupied (sum for all such types of expectations):

select coalesce(sum([Percentage]), 0.00) as [Percentage] from [inf].[vWaits] where [WaitType] in ( 'PAGEIOLATCH_XX', 'RESOURCE_SEMAPHORE', 'RESOURCE_SEMAPHORE_QUERY_COMPILE' ); - how many milliseconds occupy the RAM expectation types (maximum value of all average delays for all such expectation types):

select coalesce(max([AvgWait_S])*1000, 0.00) as [AvgWait_MS] from [inf].[vWaits] where [WaitType] in ( 'PAGEIOLATCH_XX', 'RESOURCE_SEMAPHORE', 'RESOURCE_SEMAPHORE_QUERY_COMPILE' );

Based on the dynamics of the values obtained for these two indicators, it can be concluded that there is enough RAM for an instance of MS SQL Server.

Method for detecting excessive load on the CPU

To detect processor shortages, it suffices to use the sys.dm_os_schedulers system view. Here, if the runnable_tasks_count indicator is constantly greater than 1, then there is a high probability that the number of cores is not enough for the MS SQL Server instance.

To output the indicator to the monitoring system (for example, Zabbix), you can create the following query:

select max([runnable_tasks_count]) as [runnable_tasks_count] from sys.dm_os_schedulers where scheduler_id<255; Based on the dynamics of the obtained values for this indicator, it can be concluded whether the CPU time (the number of CPU cores) is sufficient for an instance of MS SQL Server.

However, it is important to keep in mind the fact that the queries themselves can request several threads at once. And sometimes the optimizer cannot correctly estimate the complexity of the query itself. Then the request may be allocated too many threads that are currently not can be processed simultaneously. And this also causes the type of wait associated with a shortage of CPU time, and the queue growth for schedulers that use specific CPU cores, te the runnable_tasks_count indicator will grow in such conditions.

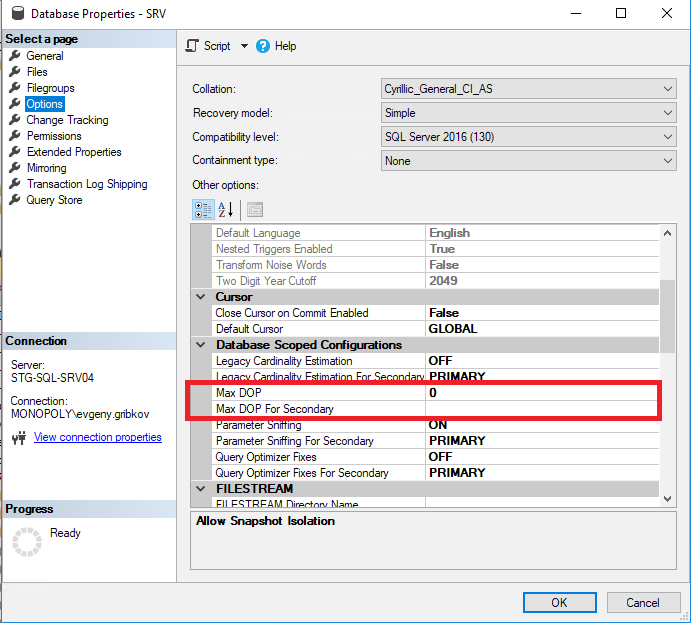

In this case, before increasing the number of CPU cores, you must correctly configure the concurrency properties of the MS SQL Server instance itself, and from the 2016 version you must correctly configure the concurrency properties of the required databases:

Here you should pay attention to the following parameters:

- Max Degree of Parallelism-sets the maximum number of streams that can be allocated to each request (the default is 0-restriction only by the operating system itself and by the edition of MS SQL Server)

- Cost Threshold for Parallelism — estimated cost of parallelism (default is 5)

- Max DOP-sets the maximum number of threads that can be allocated to each query at the database level (but no more than the value of the Max Degree of Parallelism property) (the default is 0-limiting only by the operating system itself and MS SQL Server editors as well as a restriction on the “Max Degree of Parallelism” property of the entire instance of MS SQL Server)

It is impossible to give an equally good recipe for all cases, that is, you need to analyze heavy queries.

From my own experience, I recommend the following algorithm of actions for OLTP systems for setting the properties of parallelism:

- first prohibit parallelism by setting the entire instance of Max Degree of Parallelism to 1

- analyze the most difficult requests and select the optimal number of threads for them

- set Max Degree of Parallelism to the selected optimal number of streams obtained from item 2, as well as for specific databases set Max DOP value obtained from item 2 for each database

- analyze the most difficult requests and identify the negative effect of multithreading. If it is, then increase the cost threshold for parallelism.

For systems such as 1C, Microsoft CRM and Microsoft NAV, in most cases, the prohibition of multithreading will be suitable.

Also, if there is a Standard edition, in most cases a multithreading ban will be appropriate in view of the fact that this edition is limited in the number of CPU cores.

For OLAP systems, the algorithm described above is not suitable.

From my own experience, I recommend the following algorithm of actions for OLAP-systems for setting the properties of parallelism:

- analyze the most difficult requests and select the optimal number of threads for them

- set Max Degree of Parallelism to the selected optimal number of flows obtained from item 1, and also for specific databases set Max DOP value obtained from item 1 for each database

- analyze the most difficult requests and identify the negative effect of parallelism limitation. If it is, then either lower the value of Cost Threshold for Parallelism, or repeat steps 1-2 of this algorithm

T e for OLTP systems we go from single-threading to multithreading, and for OLAP-systems, on the contrary, we go from multi-threading to single-threading. In this way, it is possible to select the optimal concurrency settings for both a specific database and the entire instance of MS SQL Server.

It is also important to understand that the settings of the properties of parallelism need to be changed over time, based on the results of monitoring the performance of MS SQL Server.

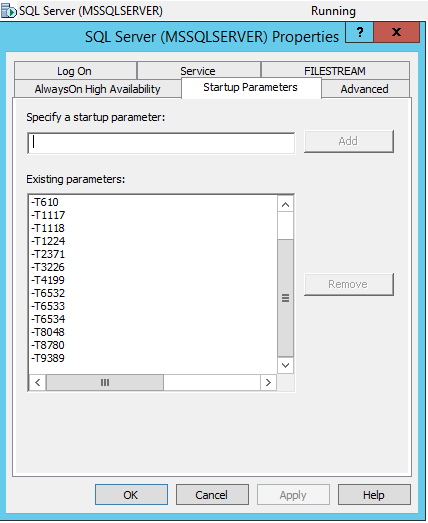

Recommendations for setting trace flags

From my own experience and the experience of my colleagues for optimal performance, I recommend setting the following trace flags at the start level of the MS SQL Server service for the 2008-2016 version:

- 610 - Reducing logging inserts in indexed tables. Can help with inserts into tables with a large number of records and multiple transactions, with frequent long waits for WRITELOG to change in indexes

- 1117 - If a file in the filegroup meets the auto-zoom threshold requirements, all files in the filegroup increase.

- 1118 — Makes all objects be located in different extents (ban on mixed extents), minimizing the need to scan an SGAM page, which is used to track mixed extents.

- 1224 - Disables lock escalation based on the number of locks. However, excessive memory usage may enable lock escalation.

- 2371 - Changes the threshold for fixed automatic update statistics on the threshold for dynamic automatic update statistics. It is important to update query plans for large tables, where incorrectly determining the number of records leads to erroneous execution plans.

- 3226 - Suppresses backup success messages in the error log

- 4199 - Includes changes to the query optimizer released in update rollup packages and SQL Server update packages.

- 6532-6534 - Includes improving the performance of query operations with spatial data types

- 8048 - Converts NUMA partitioned memory objects to CPU partitioned.

- 8780 - Includes additional time allocation for scheduling a request. Some requests without this flag may be rejected because they do not have a request plan (a very rare error)

- 9389 — Enables additional dynamic temporarily-provided memory buffer for batch mode operators, which allows the batch mode operator to request additional memory and avoid data transfer to tempdb if additional memory is available.

Also, before the 2016 version, it is useful to include the trace flag 2301, which includes the optimization of the extended decision support and thereby helps in choosing more accurate query plans. However, starting from version 2016, it often has a negative effect in a fairly long total query execution time.

Also for systems in which there are a lot of indexes (for example, for 1C databases), I recommend including the trace flag 2330, which disables the collection on the use of indexes, which generally has a positive effect on the system.

More details about the trace flags can be found here .

For the link above, it is also important to consider the versions and assemblies of MS SQL Server, since for newer versions, some trace flags are enabled by default or have no effect.

You can enable and disable the trace flag using the DBCC TRACEON and DBCC TRACEOFF commands, respectively. See here for more details.

You can get the status of the trace flags using the DBCC TRACESTATUS command: more .

In order for the trace flags to be included in the autostart of the MS SQL Server service, you need to go to the SQL Server Configuration Manager and add the following trace flags through -T in the service properties:

Results

This article has examined some aspects of monitoring MS SQL Server, with which you can quickly identify the lack of RAM and free CPU time, as well as a number of other less obvious problems. The most commonly used trace flags were considered.

Sources

» SQL Server Wait Statistics

" SQL Server wait statistics or please tell me where it hurts

» System view sys.dm_os_schedulers

» Using Zabbix to monitor MS SQL Server database

SQL Lifestyle

» Trace Flags

" Sql.ru

Source: https://habr.com/ru/post/448044/

All Articles