Linux Virtual File Systems: Why Do They Need It and How Do They Work? Part 2

Hello everyone, we are sharing with you the second part of the publication “Virtual file systems in Linux: why are they needed and how do they work?” The first part can be read here . Recall that this series of publications is timed to the launch of the new thread in the course “Linux Administrator” , which starts very soon.

How to monitor VFS using eBPF and bcc tools

The easiest way to understand how the kernel handles

')

Fortunately, using eBPF is fairly easy using the bcc tools, which are available as packages from a common Linux distribution and documented in detail by Bernard Gregg . The

To get at least a cursory idea of what work VFS is doing on a running system, try

Let's take a more trivial example and see what happens when we insert a USB flash drive into a computer and the system detects it.

In the example above,

When a USB drive is inserted, a kernel

So what's the point of the events file? Using cscope to search for __device_add_disk () , indicates that it calls

Read-only root file systems make embedded devices possible.

Of course, no one turns off the server or your computer, pulling the plug from the outlet. But why? And all because mounted file systems on physical storage devices may have deferred entries, and data structures that record their state may not be synchronized with entries in the repository. When this happens, system owners have to wait for the next boot to run the

However, we all know that many IoT devices, as well as routers, thermostats and cars are now running Linux. Many of these devices have virtually no user interface, and there is no way to turn them off cleanly. Imagine starting a car with a low battery when the power of the control device on Linux constantly jumps up and down. How is it that the system boots without a long

Creating

Bound and overlaid mounts, their use by containers

Running the

Based on the description of the overlaid and bound mount, no one is surprised that Linux containers actively use them. Let's observe what happens when we use systemd-nspawn to launch a container using the

Calling

Let's see what happened:

Running

Here,

Conclusion

Understanding the internal structure of Linux can seem an impossible task, since the kernel itself contains a huge amount of code, leaving aside Linux user-space applications and system call interfaces in C libraries, such as

make kernel research easier than ever.

Friends, write whether this article was useful to you? Perhaps you have any comments or comments? And those who are interested in the course "Administrator Linux", we invite you to the open day , which will be held on April 18.

First part.

How to monitor VFS using eBPF and bcc tools

The easiest way to understand how the kernel handles

sysfs files is to look at it in practice, and the easiest way to watch ARM64 is to use eBPF. eBPF (short for Berkeley Packet Filter) consists of a virtual machine running in the kernel , which privileged users can query from the command line. Kernel sources tell the reader what the kernel can do; running eBPF tools on a booted system shows what the kernel actually does.')

Fortunately, using eBPF is fairly easy using the bcc tools, which are available as packages from a common Linux distribution and documented in detail by Bernard Gregg . The

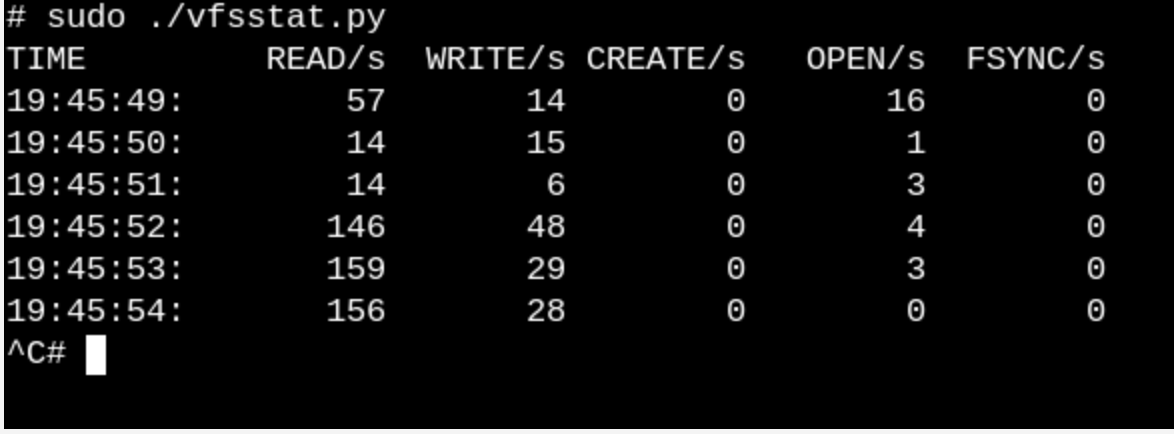

bcc tools are Python scripts with small insertions of C code, which means that anyone familiar with both languages can easily modify them. In bcc/tools there are 80 Python scripts, which means that most likely the developer or system administrator will be able to choose something suitable for solving the problem.To get at least a cursory idea of what work VFS is doing on a running system, try

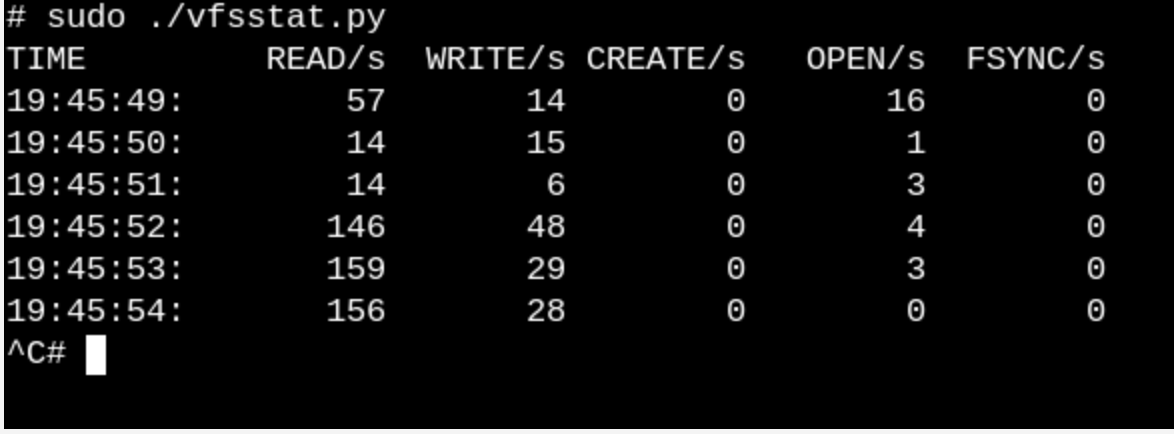

vfscount or vfsstat . This will show, say, that dozens of calls to vfs_open() and “his friends” occur literally every second.

vfsstat.py is a Python script, with C code inserts, that simply counts VFS function calls.Let's take a more trivial example and see what happens when we insert a USB flash drive into a computer and the system detects it.

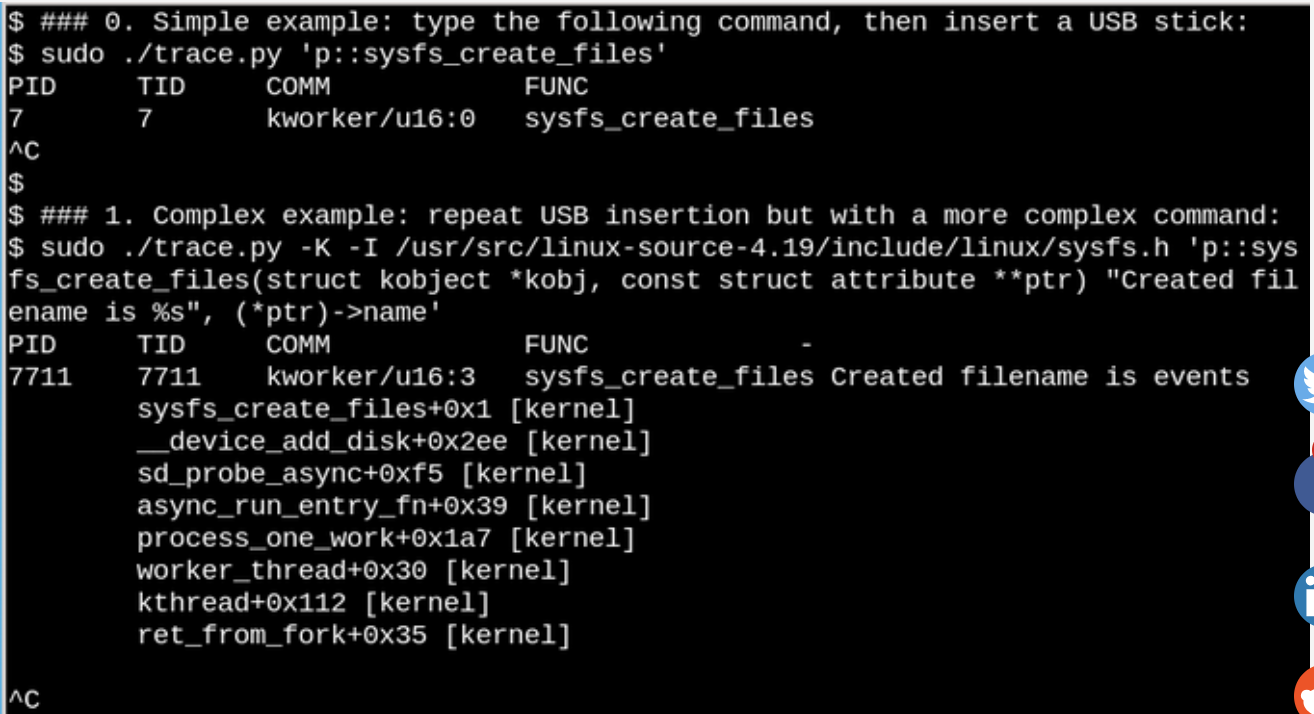

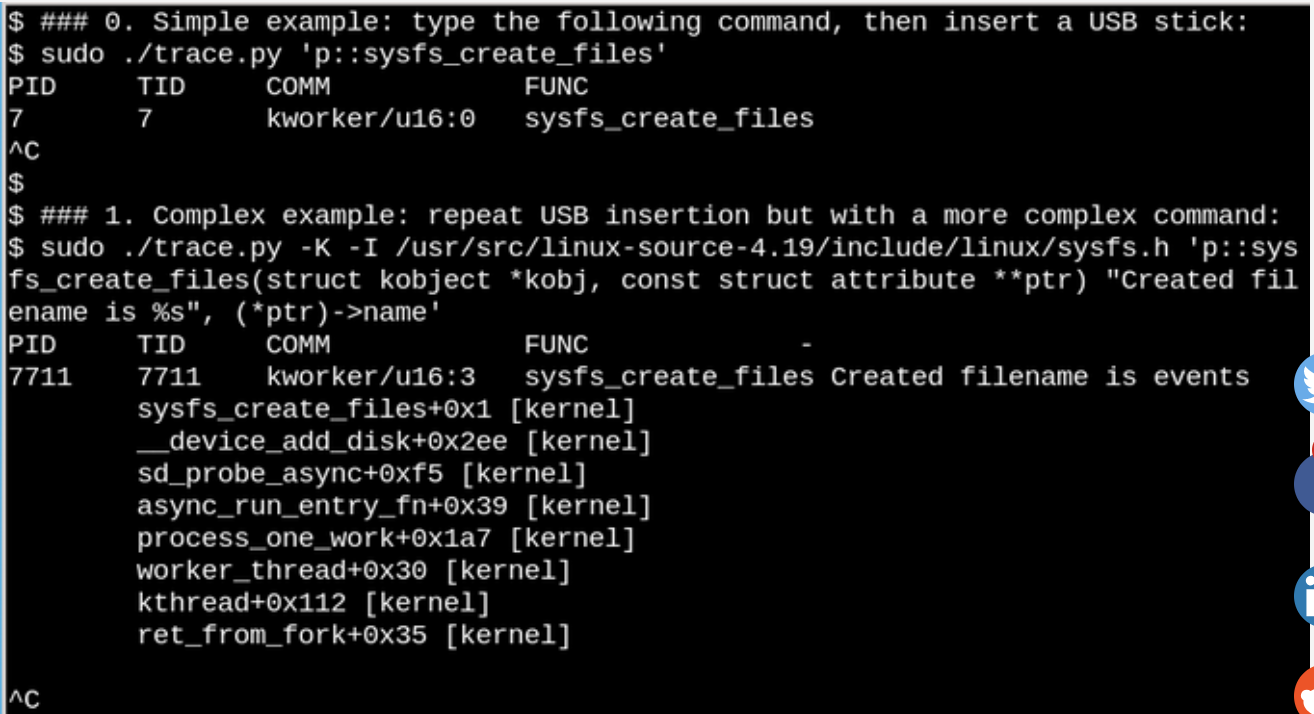

With eBPF, you can see what happens in /sys when a USB flash drive is inserted. Here is a simple and complex example.In the example above,

bcc trace.py tool displays a message when the sysfs_create_files() command is sysfs_create_files() . We see that sysfs_create_files() was launched using the kworker stream in response to the fact that the flash drive was inserted, but what file was created? The second example shows the power of eBPF. Here, trace.py displays a kernel trace.py (kernel backtrace) (option -K) and the name of the file that was created by sysfs_create_files() . Insertion in single statements is C code that includes an easily recognizable format string provided by the Python script that runs the LLVM just-in-time compiler . This line it compiles and executes in a virtual machine inside the kernel. The full signature of the sysfs_create_files () function must be reproduced in the second command so that the format string can refer to one of the parameters. Errors in this C code fragment lead to recognizable C compiler errors. For example, if the -l option is omitted, you will see “Failed to compile BPF text.” Developers who are familiar with C and Python will find bcc tools easy to expand and modify.When a USB drive is inserted, a kernel

kworker will show that PID 7711 is the kworker stream that created the «events» file in sysfs . Accordingly, a call with sysfs_remove_files() will show that deleting the drive resulted in deleting the events file, which corresponds to the general concept of reference counting. At the same time, viewing sysfs_create_link () from eBPF during insertion of a USB drive will show that at least 48 symbolic links have been created.So what's the point of the events file? Using cscope to search for __device_add_disk () , indicates that it calls

disk_add_events () , and either "media_change" or "eject_request" can be written to the event file. Here, the kernel block layer informs userspace about the appearance and extraction of the “disk”. Notice how informative this research method is on the example of inserting a USB drive compared to trying to figure out how everything works, exclusively from source.Read-only root file systems make embedded devices possible.

Of course, no one turns off the server or your computer, pulling the plug from the outlet. But why? And all because mounted file systems on physical storage devices may have deferred entries, and data structures that record their state may not be synchronized with entries in the repository. When this happens, system owners have to wait for the next boot to run the

fsck filesystem-recovery utility and, in the worst case, lose data.However, we all know that many IoT devices, as well as routers, thermostats and cars are now running Linux. Many of these devices have virtually no user interface, and there is no way to turn them off cleanly. Imagine starting a car with a low battery when the power of the control device on Linux constantly jumps up and down. How is it that the system boots without a long

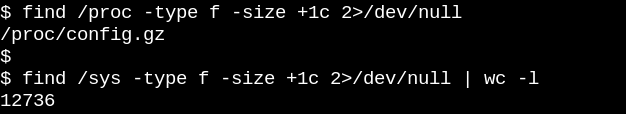

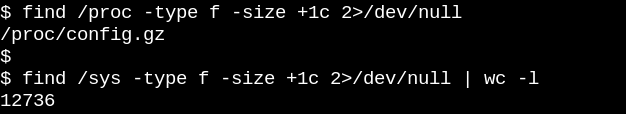

fsck , when the engine finally starts working? And the answer is simple. Embedded devices rely on a read-only root filesystem (abbreviated as ro-rootfs (read-only root file system)).ro-rootfs offer many benefits that are less obvious than genuine. One advantage is that malware cannot write to /usr or /lib if no Linux process can write there. Another is that the largely immutable file system is crucial for field support for remote devices, since support personnel use local systems that are nominally identical to systems in the field. Perhaps the most important (but also the most insidious) advantage is that ro-rootfs makes developers decide which system objects will be unchanged, even at the system design stage. Working with ro-rootfs can be inconvenient and painful, as is often the case with const variables in programming languages, but their advantages easily pay for the additional overhead.Creating

rootfs only rootfs requires some extra effort for developers of embedded systems, and this is where VFS comes into play. Linux requires that the files in /var be writable, and, in addition, many popular applications that run embedded systems will try to create configuration dot-files in $HOME . One solution for configuration files in the home directory is usually their pre-generation and build into rootfs . For /var one of the possible approaches is to mount it in a separate section that is writable, while it is mounted / read-only. Another popular alternative is to use bind or overlay mounts.Bound and overlaid mounts, their use by containers

Running the

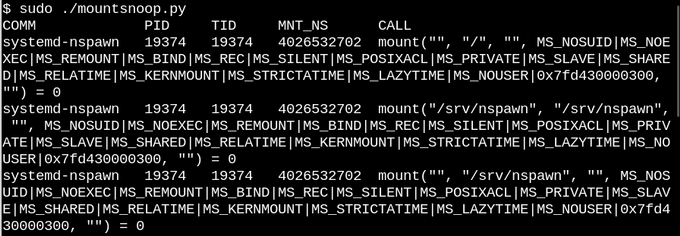

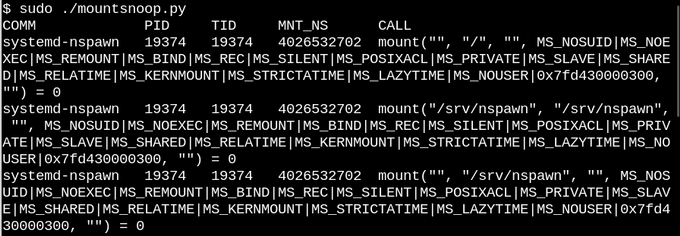

man mount command is the best way to learn about mountable and overlayed mountings, which give developers and system administrators the ability to create a file system along one path and then provide it to applications in another. For embedded systems, this means the ability to store files in /var on a read-only flash drive, but overlaying or linking the mounting of the path from tmpfs to /var when loading will allow applications to write scrawl notes there. The next time you turn on, changes to /var will be lost. The overlay mount creates a union between tmpfs and the underlying file system and allows you to make modifications to existing files in ro-tootf while the associated mount can make new empty tmpfs folders visible as available for writing to ro-rootfs paths. While overlayfs is the proper ( proper ) file system type, the associated mount is implemented in the VFS namespace .Based on the description of the overlaid and bound mount, no one is surprised that Linux containers actively use them. Let's observe what happens when we use systemd-nspawn to launch a container using the

bcc mountsnoop tool.

Calling

system-nspawn starts the container while mountsnoop.py .Let's see what happened:

Running

mountsnoop while the container is “loading” indicates that the runtime environment of the container is strongly dependent on the mount being linked (Only the beginning of the long output is displayed).Here,

systemd-nspawn provides the selected files in the host's procfs and sysfs container as paths in its rootfs . In addition to the MS_BIND flag, which establishes a binding mount, some other flags in the mounted system define the relationship between changes in the host and container namespaces. For example, a bound mount can either skip changes to /proc and /sys to a container, or hide them depending on the call.Conclusion

Understanding the internal structure of Linux can seem an impossible task, since the kernel itself contains a huge amount of code, leaving aside Linux user-space applications and system call interfaces in C libraries, such as

glibc . One way to make progress is to read the source code of a single kernel subsystem, with a focus on understanding system calls and headers facing user space, as well as the main internal interfaces of the kernel, for example, the file_operations table. File operations provide the principle of “everything is a file”, so their management is especially nice. The C kernel source files in the fs/ top-level directory represent the implementation of virtual file systems, which are a shell layer that provides wide and relatively simple compatibility of popular file systems and storage devices. Linux binding and overlapping mounts are the magic of VFS, which makes it possible to create containers and root-only file systems for reading. Combined with source code learning, the eBPF kernel tool and its bcc interfacemake kernel research easier than ever.

Friends, write whether this article was useful to you? Perhaps you have any comments or comments? And those who are interested in the course "Administrator Linux", we invite you to the open day , which will be held on April 18.

First part.

Source: https://habr.com/ru/post/447748/

All Articles