How to separate the frontend and backend while maintaining mutual understanding

How do you change the architecture of a monolithic product to speed up its development, and how do you split one team into several while keeping their work consistent? For us, the answer to both questions was the creation of a new API. Below is a detailed account of how we arrived at that decision and an overview of the technologies we chose, but first, a small lyrical digression.

Several years ago I read in a scientific article that a full education takes more and more time, and that in the near future acquiring the necessary knowledge will take eighty years of life. In IT, apparently, that future has already arrived.

I was lucky to start programming in the years when programmers were not yet divided into backend and frontend, and when words like “prototype”, “product manager”, “UX” and “QA” were not heard. The world was simpler, the trees were taller and greener, the air was cleaner, and children played in the courtyards where cars are now parked. However much I would like to return to that time, I must admit that all this is not a supervillain's scheme but the natural evolution of society. Yes, society could have developed differently, but, as we know, history does not tolerate the subjunctive mood.

Prehistory

BILLmanager appeared in exactly those times, when there was no strict division into specializations. It had a coherent architecture, could control user behavior, and could even be extended with plugins. Time passed, the team developed the product, and everything seemed fine, but strange things began to happen. For example, a programmer who worked on business logic would lay out forms badly, making them inconvenient and hard to understand. Or adding seemingly simple functionality took several weeks: the architectural modules were tightly coupled, so changing one meant adjusting another.

Convenience, ergonomics and the overall development of the product could be forgotten altogether when the application crashed with an unknown error. A programmer used to manage to work in several directions at once, but as the product and the requirements for it grew, this became impossible. The developer saw the whole picture and understood that if a function did not work correctly and reliably, then forms, buttons, tests and promotion would not help. So he put everything else aside and sat down to fix the ill-fated bug. He accomplished his small feat, which nobody appreciated (there was simply no energy left to present it properly to the client), but the function started to work. It is precisely so that these small feats reach customers that the team now includes people responsible for different areas: frontend and backend, testing, design, support, promotion.

But this was only the first step. The team changed, while the product architecture remained tightly coupled. Because of this, we could not develop the application at the required pace: changing the interface meant changing backend logic, even though the structure of the data itself often stayed the same. Something had to be done about all this.

Frontend and backend

Becoming a professional in everything takes a long time and costs a lot, so the modern world of application programmers is divided, for the most part, into frontend and backend.

Everything seems clear here: we hire frontend programmers, who will be responsible for the user interface, and the backend developers can finally focus on business logic, data models and other engineering matters. Meanwhile, backend developers, frontend developers, testers and designers remain in one team (after all, they build a common product and simply focus on different parts of it). Being in one team means sharing one information space and, preferably, one physical space; discussing new features together and reviewing finished ones; coordinating work on a big task.

For some abstract new project this would be enough, but we already had a written application, and the volume of planned work and its deadlines clearly showed that one team would not cope. A basketball team has five players, a football team has 11, and we had about 30. That did not fit the ideal scrum team of five to nine people. We had to split up, but how could we stay connected? To get moving, we had to solve both architectural and organizational problems.

“We’ll do everything in one project, it will be more convenient,” they said...

Architecture

When a product becomes outdated, it seems logical to abandon it and write a new one. This is a good solution if you can predict the timeline and it suits everyone. But in our case, even under ideal conditions, developing a new product would take years. In addition, the nature of the application is such that switching from the old product to a completely different new one would be extremely difficult. Backward compatibility is very important for our customers, and without it they will refuse to move to the new version. Developing from scratch is therefore questionable in this case. So we decided to modernize the architecture of the existing product while preserving maximum backward compatibility.

Our application is a monolith whose interface was built on the server side. The frontend only carried out the instructions it received from the server. In other words, it was the backend, not the frontend, that was responsible for the user interface. Architecturally, the frontend and backend worked as a single unit, so changing one meant changing the other. And that is not the worst part. Much worse, it was impossible to develop the user interface without deep knowledge of what was happening on the server.

We had to separate the frontend and backend into independent software applications: only then could we develop them at the required pace and volume. But how do you run two projects in parallel and change their structure when they depend heavily on each other?

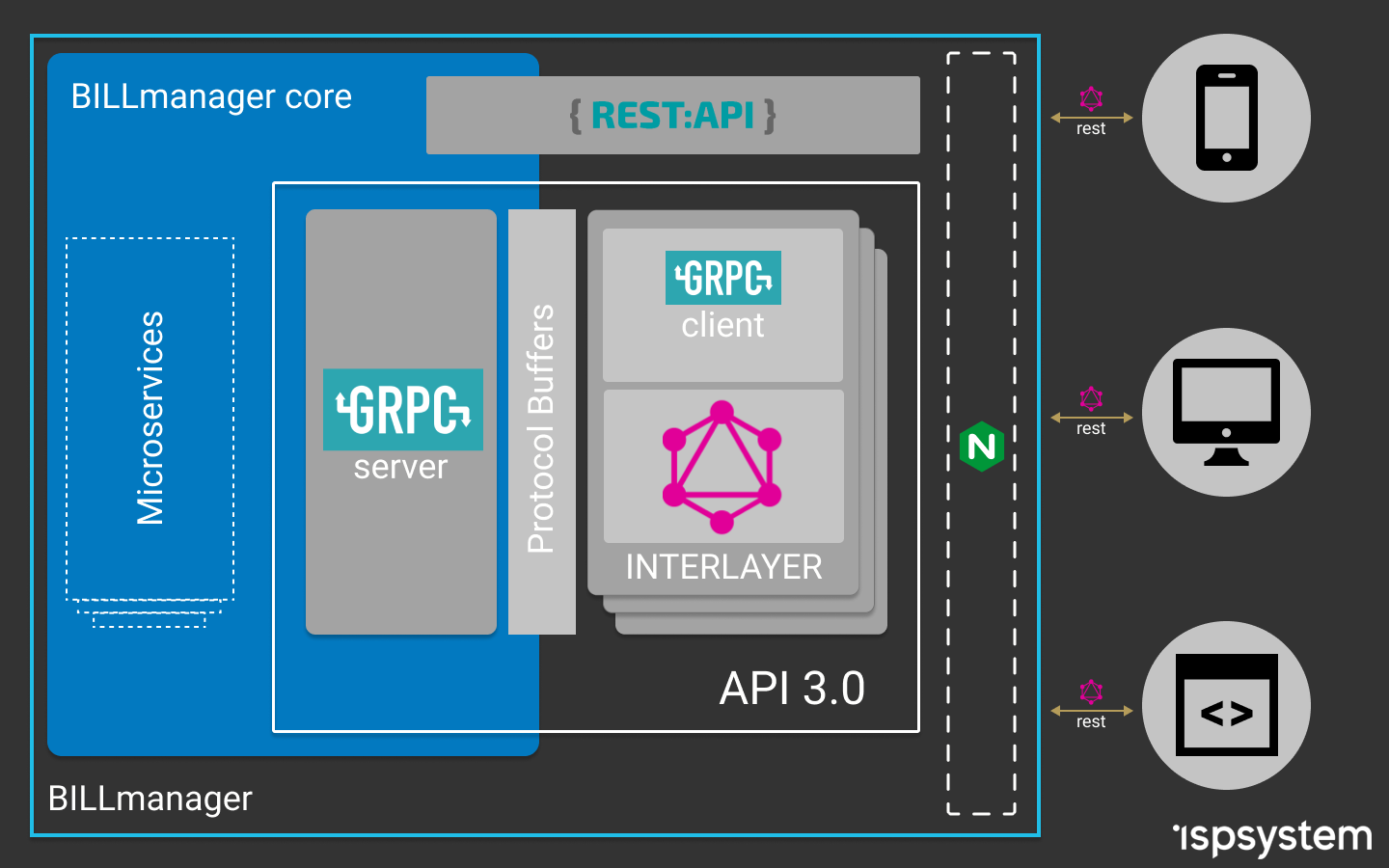

The solution was an additional system: an intermediate layer. The idea of the layer is extremely simple: it must coordinate the work of the backend and the frontend and absorb all the extra costs. For example, when the payment function is decomposed on the backend, the layer combines the data so that nothing needs to change on the frontend. Or, to show all the services a user has ordered on the dashboard, we aggregate the data in the layer rather than write an additional backend function.
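The dashboard case can be sketched roughly as follows. This is only an illustration of aggregating in the layer: the types `OrderedService`, `Invoice` and `DashboardItem` and the function `buildDashboard` are hypothetical names, not part of the real product.

```typescript
// Hypothetical shapes returned by two separate backend functions.
interface OrderedService {
  id: number;
  userId: number;
  name: string;
}

interface Invoice {
  serviceId: number;
  amountDue: number;
}

// Hypothetical shape the dashboard consumes. It can stay stable even if
// the backend later splits or merges its own functions.
interface DashboardItem {
  serviceId: number;
  name: string;
  amountDue: number;
}

// The layer joins the two backend results, so the backend does not need
// a dedicated "dashboard" function and the frontend sees one response.
function buildDashboard(
  userId: number,
  services: OrderedService[],
  invoices: Invoice[]
): DashboardItem[] {
  return services
    .filter((service) => service.userId === userId)
    .map((service) => ({
      serviceId: service.id,
      name: service.name,
      // Sum everything still owed for this service.
      amountDue: invoices
        .filter((invoice) => invoice.serviceId === service.id)
        .reduce((sum, invoice) => sum + invoice.amountDue, 0),
    }));
}
```

In a real layer the two input arrays would come from backend calls; here they are plain arguments so the aggregation itself is easy to see and test.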

In addition, the layer was supposed to add certainty about what can be called on the server and what will be returned as a result. We also wanted operations to be requested without knowledge of the internal structure of the functions that perform them.

Increasing stability by dividing areas of responsibility.

Communications

Because of the strong dependency between the frontend and the backend, work could not be done in parallel, which slowed down both parts of the team. By splitting one large project into several at the software level, we gained freedom of action in each, but we still needed to keep the work consistent.

Some will say that consistency is achieved by improving soft skills. Yes, they need to be developed, but they are not a panacea. Look at road traffic: there, too, it matters that drivers are polite, know how to steer around unexpected obstacles and help each other in difficult situations. But without traffic rules, even with the best communication, we would get accidents at every intersection and risk not arriving on time.

We needed rules that would be hard to break: rules that, as they say, are easier to follow than to break. Introducing any laws brings not only benefits but also overhead, and we really did not want to slow down the main work by drawing everyone into the process. So we created a coordination group, and later a team, whose purpose was to create the conditions for successful development of the different parts of the product. It set up the interfaces that allow the different projects to work as one, the very rules that are easier to follow than to break.

We call this team “API”, although the technical implementation of the new API is only a small part of its job. Just as common parts of code are extracted into a separate function, the API team handles the issues common to the product teams. This is where our frontend and backend connect, so the members of this team need to understand the specifics of each area.

Perhaps “API” is not the most fitting name for the team; something about architecture or large-scale vision would suit it better, but I think this detail does not change the essence.

API

An interface for accessing functions on the server existed in our original application, but to the consumer it looked chaotic. Separating the frontend and backend required more certainty.

The goals for the new API grew out of the everyday difficulties of implementing new product and design ideas. We needed:

- Loose coupling of system components, so that the backend and the frontend can be developed in parallel.

- High scalability, so that the new API does not get in the way of adding functionality.

- Stability and consistency.

The search for an API solution did not start from the backend, as is usually the case. On the contrary, we started from what the users of the API need.

The most widespread option is REST APIs of every kind. In recent years, description models have been added to them through tools like Swagger, but it is important to understand that this is still the same REST. Its main advantage, which is also its main drawback, is that its rules are purely descriptive. That is, nothing prevents the author of such an API from deviating from REST principles when implementing individual parts.

Another common solution is GraphQL. It is not perfect either, but unlike REST, a GraphQL API is not just a descriptive model but a set of real rules: the server validates every query against the schema.

Above, I mentioned a system that was supposed to coordinate the work of the frontend and backend. The intermediate layer is exactly that middle tier. Having considered the possible options for working with the server, we settled on GraphQL as the API for the frontend. But since the backend was written in C++, implementing a GraphQL server turned out to be a nontrivial task. I will not describe here all the difficulties that arose and the tricks we resorted to in order to overcome them; none of it produced a real result. We looked at the problem from another angle and decided that simplicity is the key to success. So we settled on proven solutions: a separate Node.js server with Express.js and Apollo Server.

Next, we had to decide how to access the backend API. First we considered exposing a REST API, then we tried using C++ addons for Node.js. In the end we realized that none of this suited us, and after a detailed analysis we chose an API based on gRPC services for the backend.

By bringing together the experience gained with C++, TypeScript, GraphQL and gRPC, we arrived at an application architecture that lets us develop the backend and frontend flexibly while still building a single software product.

The result is a scheme in which the frontend communicates with the intermediate server using GraphQL queries (it knows what to ask for and what it will receive in response). In its resolvers, the GraphQL server calls the API functions of the gRPC server; the two communicate using Protobuf schemas. The gRPC-based API server knows which microservice to take data from, or which one to pass the incoming request to. The microservices themselves are also built on gRPC, which provides fast request processing, typed data and the ability to develop them in different programming languages.

The general scheme of work after the architecture change
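The request path described above can be sketched in TypeScript. This is a simplified illustration, not our production code: `UserServiceClient`, `GetUserRequest`, `UserReply` and the `user` query are hypothetical names, and the gRPC client is replaced by an in-memory stand-in so the example runs without any libraries. In a real setup the message types and the client stub would be generated from the Protobuf schemas.

```typescript
// Hypothetical message types standing in for Protobuf-generated ones.
interface GetUserRequest {
  id: number;
}

interface UserReply {
  id: number;
  email: string;
}

// Hypothetical stand-in for a generated gRPC client stub.
interface UserServiceClient {
  getUser(request: GetUserRequest): Promise<UserReply>;
}

// The GraphQL schema the frontend sees, kept here as SDL text.
const typeDefs = `
  type User {
    id: Int!
    email: String!
  }
  type Query {
    user(id: Int!): User
  }
`;

// Per-request context that gives resolvers access to the gRPC client.
interface Context {
  users: UserServiceClient;
}

// Resolver map using the (parent, args, context) signature that GraphQL
// servers such as Apollo Server expect. The resolver only translates the
// GraphQL arguments into a gRPC request and returns the reply.
const resolvers = {
  Query: {
    user: (
      _parent: unknown,
      args: { id: number },
      context: Context
    ): Promise<UserReply> => context.users.getUser({ id: args.id }),
  },
};

// In-memory fake that plays the role of the microservice behind gRPC.
const fakeUsers: UserServiceClient = {
  getUser: (request) =>
    Promise.resolve({
      id: request.id,
      email: "user" + request.id + "@example.com",
    }),
};
```

The resolver knows nothing about how the service stores users, and the frontend knows nothing about gRPC; each side depends only on the schema it shares with its neighbor.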

This approach has a number of drawbacks, the main one being the additional work of setting up and agreeing on schemas, as well as writing auxiliary functions. But these costs will pay off as the number of API users grows.

Result

We have followed an evolutionary path of product and team development. Whether we have succeeded or the idea has turned out to be a failure is probably too early to judge, but we can sum up the interim results. What we have now:

- The frontend is responsible for presentation, and the backend is responsible for data.

- The frontend has kept its flexibility in requesting and receiving data. The interface knows what it can ask the server for and what the responses should look like.

- On the backend, it became possible to change code with confidence that the user interface will keep working. It also became possible to move to a microservice architecture without redoing the whole frontend.

- The frontend can now use mock data when the backend is not yet ready.

- Creating schemas jointly eliminated the interaction problems that arose when teams understood the same task differently. The number of iterations spent reworking data formats has decreased: we follow the principle “measure seven times, cut once”.

- Work within a sprint can now be planned in parallel.

- To implement individual microservices, we can now hire developers who are not familiar with C++.
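The point about mock data can be illustrated with a small sketch. It assumes the frontend depends only on an agreed contract; `Service`, `ServiceSource`, `mockSource` and `activeServiceNames` are hypothetical names chosen for the example.

```typescript
// Hypothetical data shape agreed between the teams.
interface Service {
  id: number;
  name: string;
  status: "active" | "suspended";
}

// The contract the frontend depends on. A real implementation would
// query the API; a mock returns fixed data.
interface ServiceSource {
  listServices(): Promise<Service[]>;
}

// Fixed sample data used while the backend is not ready.
const mockServices: Service[] = [
  { id: 1, name: "VPS Start", status: "active" },
  { id: 2, name: "Domain example.com", status: "suspended" },
];

const mockSource: ServiceSource = {
  listServices: () => Promise.resolve(mockServices),
};

// Frontend logic written against the contract. It works the same way
// with the mock and with a real source.
function activeServiceNames(source: ServiceSource): Promise<string[]> {
  return source.listServices().then((services) =>
    services
      .filter((service) => service.status === "active")
      .map((service) => service.name)
  );
}
```

Once the backend is ready, only the object passed as `source` changes; `activeServiceNames` and everything built on top of it stays as it is.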

Of all this, I would call the main achievement the ability to develop the team and the project deliberately. I think we managed to create conditions in which each team member can build up their competencies more purposefully, focus on their tasks and not scatter their attention. Everyone is required to work only in their own area, and this is now possible with high engagement and without constant context switching. It is impossible to become a professional in everything, but we no longer need to.

This article is an overview and quite general. Its goal was to show the path and results of complex research into how to change the architecture, from a technical point of view, in order to continue product development, and to demonstrate the organizational difficulties of dividing a team into coordinated parts.

Here I have only touched on the issues of team and cross-team work on one product, the choice of API technology (REST vs GraphQL), connecting Node.js applications with C++, and so on. Each of these topics deserves a separate article, and if you are interested, we will write them.


Source: https://habr.com/ru/post/447650/
