The evolution of CI in the mobile development team

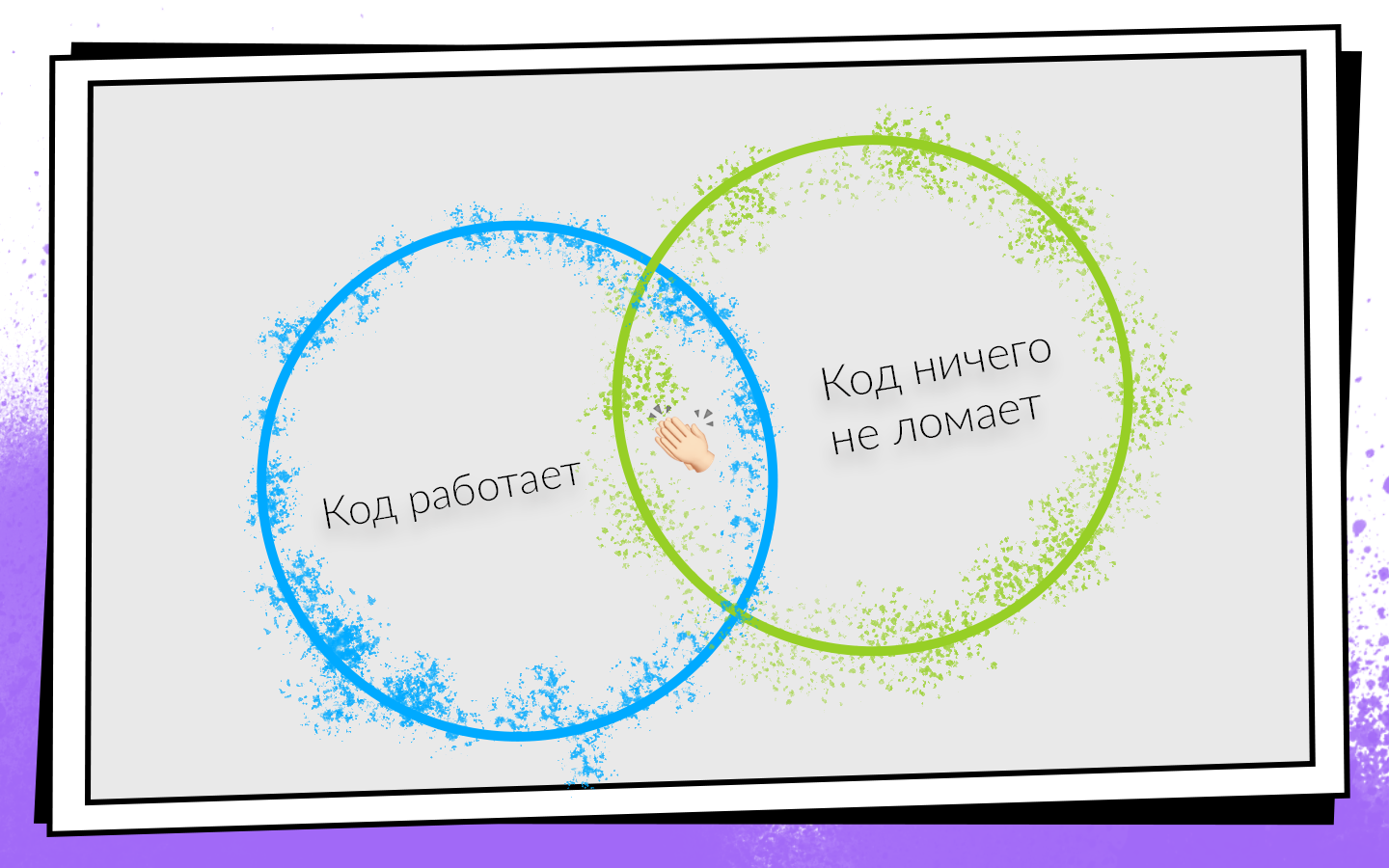

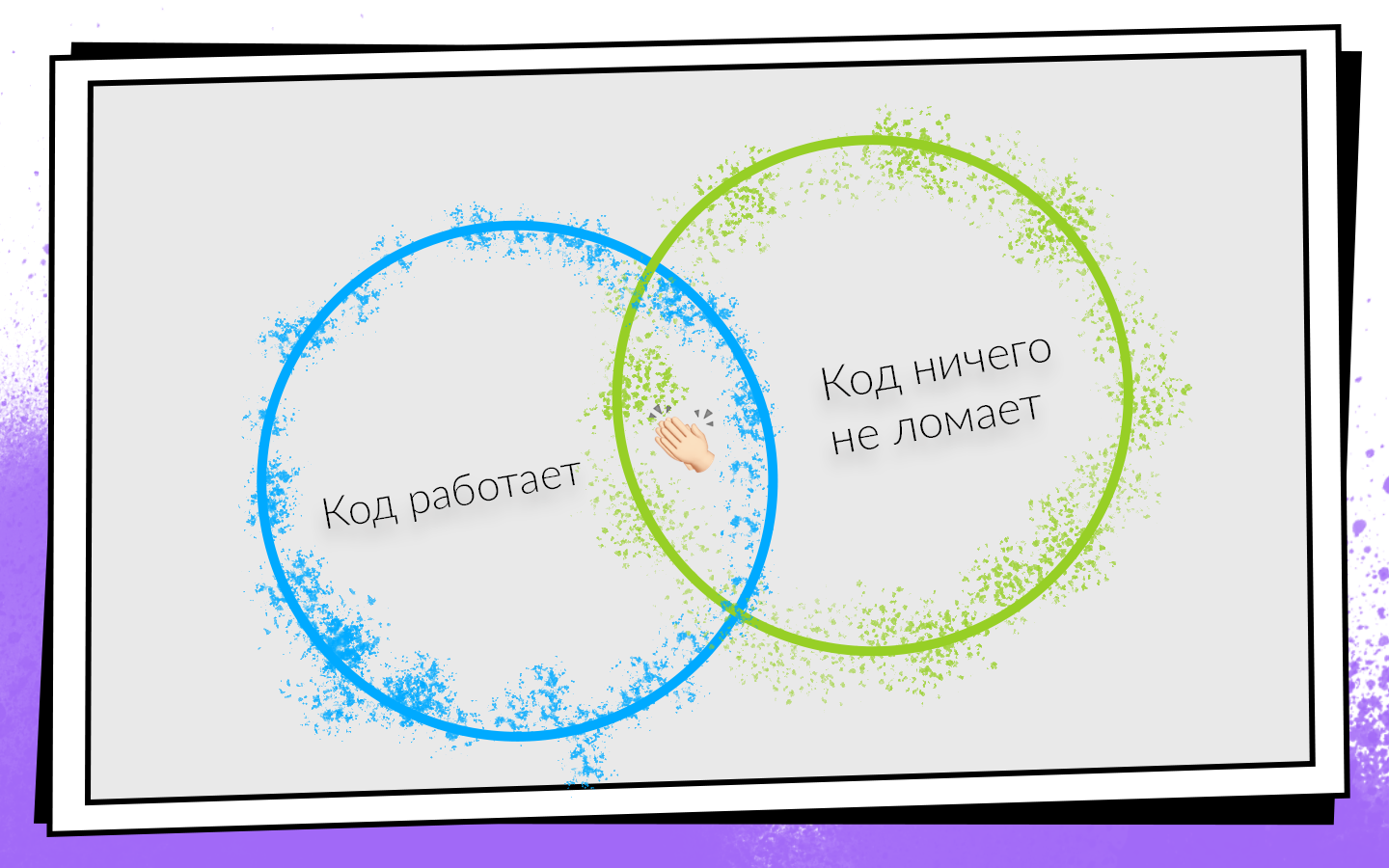

Today, most software products are developed in teams. The conditions for successful team development can be represented as a simple scheme.

Having written the code, you need to make sure that it:

- Works.

- Breaks nothing, including the code that your colleagues wrote.

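If both conditions are met, you are on the road to success. Continuous Integration was invented to make these conditions easy to check and to keep you on that profitable path.

CI is a workflow in which you integrate your code into the common product code as often as possible. And you don't just integrate it: you also constantly check that everything works. Since you need to check a lot and often, it is worth thinking about automation. You could check everything by hand, but it isn't worth it, and here's why:

- People are expensive. An hour of any programmer's work costs more than an hour of any server's work.

- People make mistakes. So situations arise where someone runs tests on the wrong branch or builds the wrong commit for testers.

- People are lazy. Now and then, when I finish a task, I think: "What is there to check? I wrote two lines, everything works fine!" I suspect some of you have such thoughts from time to time too. But you should always check.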

Nikolay Nesterov (nnesterov) took part in every stage of the evolution of CI/CD for the Avito Android app. Here he explains how Continuous Integration was introduced and developed in Avito's mobile development team. He also covers how the team went from 0 to 450 builds a day, and how the build machines came to spend 200 hours a day building.

The story uses the Android team as its example, but most of the approaches apply to iOS as well.

Once upon a time, the Avito Android team was a single person. By definition, he needed nothing from Continuous Integration: there was no one to integrate with.

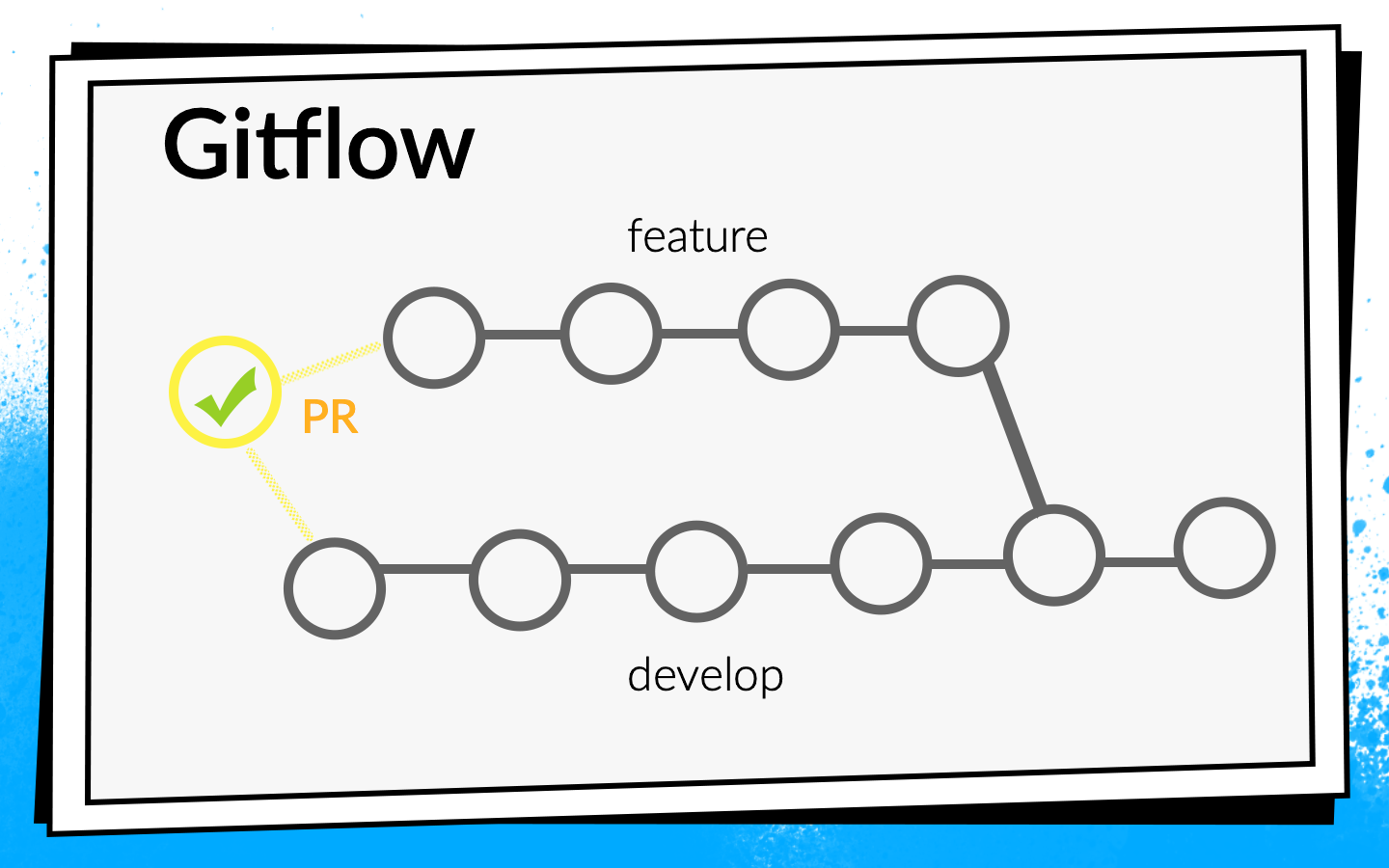

But the application grew, more and more new tasks appeared, and the team grew accordingly. At some point it was time to formalize the code integration process, and we decided to use Git flow.

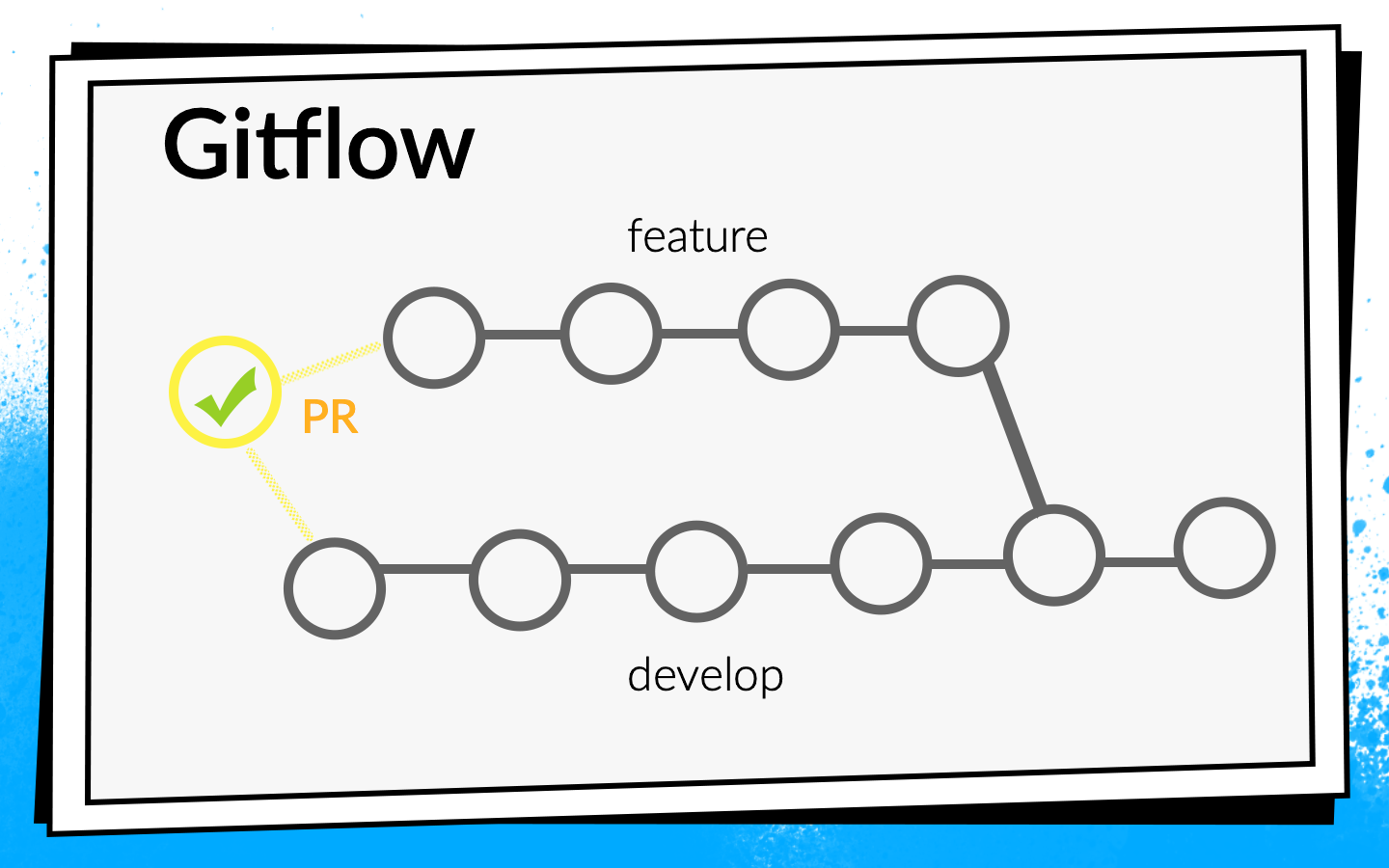

The concept of Git flow is well known. The project has one common develop branch, and for each new feature developers cut a separate branch, commit to it and push. When they want to merge their code into develop, they open a pull request. To share knowledge and discuss approaches, we introduced code review: colleagues must check and approve each other's code.

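Checks

Reviewing code by eye is great, but not enough. So we introduced automatic checks:

- First, we check that the APK builds.

- Lots of JUnit tests.

- We measure code coverage, since we run tests anyway.

To understand where to run these checks, let's look at the development process at Avito.

It can be represented schematically as follows:

- The developer writes code on their laptop. Integration checks can run right here, either as a commit hook or simply in the background.

- Once the developer has pushed the code, they open a pull request. To get the code into develop, it has to pass code review and collect the required number of approvals. Checks and builds can be enabled here: until all builds succeed, the pull request cannot be merged.

- After the pull request is merged and the code is in develop, you can pick a convenient time and run as many checks as you like, for example at night, when all the servers are free.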

Nobody liked running checks on their laptop. When developers finish a feature, they want to push it quickly and open a pull request. If some long checks start at that moment, it is not only unpleasant but also slows development down: while the laptop is busy checking something, you can't really work on it.

We really liked running checks at night, because there is plenty of time and plenty of servers, so you can go all out. Unfortunately, once the feature code is in develop, the developer has much less motivation to fix the errors CI found. Looking at the morning report of all the errors found, I occasionally caught myself thinking that I would fix them sometime later, because there was a cool new task in Jira that I really wanted to start on.

If the checks block the pull request, motivation is sufficient: until the builds go green, the code won't get into develop, which means the task isn't done.

As a result, we chose this strategy. At night we run the largest possible set of checks. The most critical of them, and above all the fastest, run on every pull request. But we don't stop there: in parallel, we work on making checks faster so that we can move them from the nightly run to pull requests.

At that time all our builds were fairly fast, so we simply made three checks blocking for pull requests: the APK build, the JUnit tests and the code coverage calculation. We turned this on, thought it over, and dropped code coverage, because we decided we didn't need it.

It took us two days to set up the basic CI. Here and below, time estimates are approximate and are given for scale.

After that, we began to think further: are we checking correctly? Are we running builds on pull requests correctly?

We ran the build on the last commit of the branch the pull request was opened from. But checking that commit only shows that the code the developer wrote works. It does not prove that they didn't break anything. What actually needs checking is the state of the develop branch after the feature has been merged into it.

To do this, we wrote a simple bash script, premerge.sh. After `set -e`, it runs just two commands: `git fetch origin develop` and `git merge origin/develop`. All the latest changes from develop are pulled in and merged into the current branch. We made premerge.sh the first step of every build and started checking exactly what we wanted: integration.

It took three days to pin down the problem, find a solution and write this script.

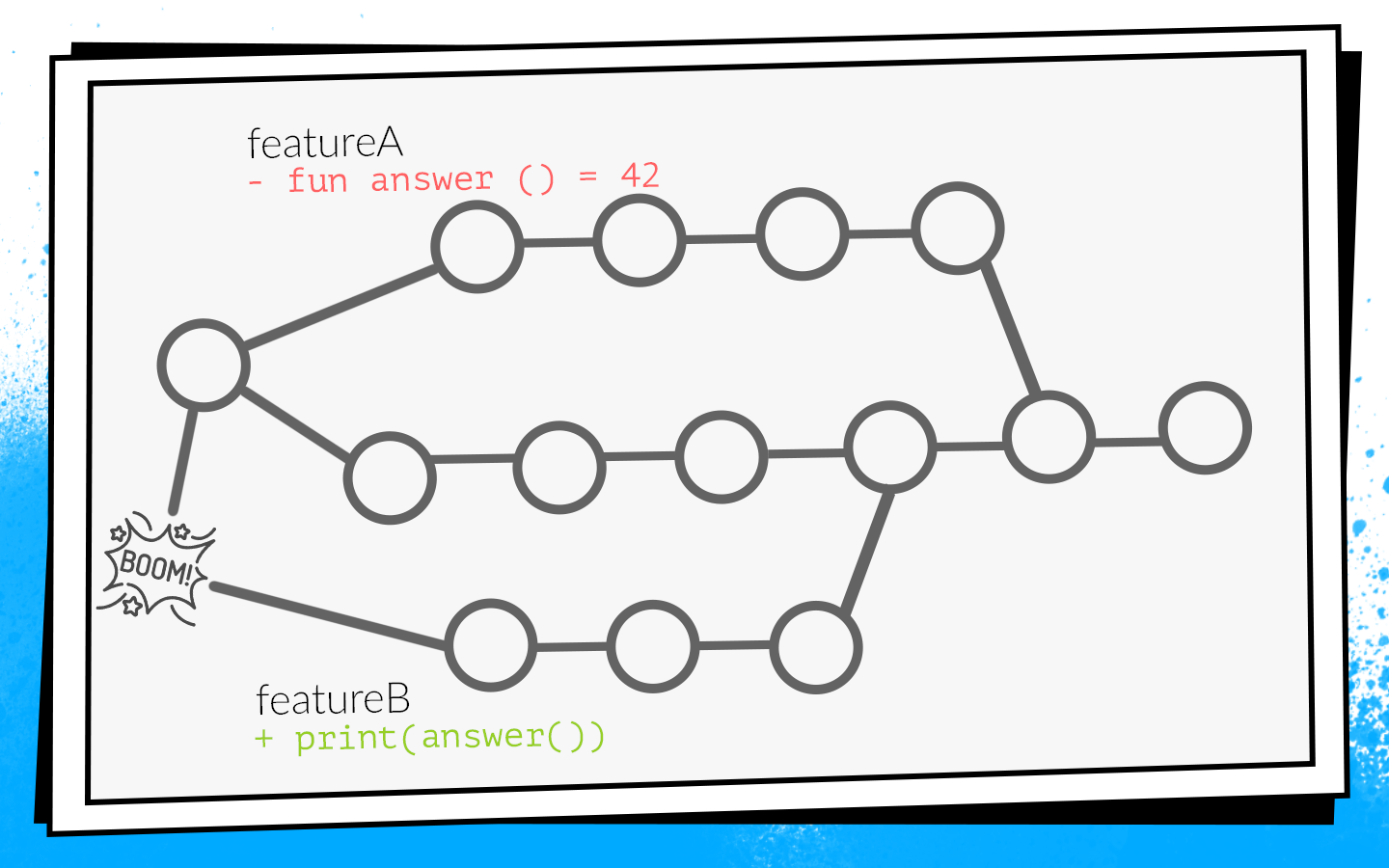

The application kept developing, more and more tasks appeared, the team grew, and premerge.sh sometimes started letting us down. Conflicting changes that broke the build were getting into develop.

An example of how this happens:

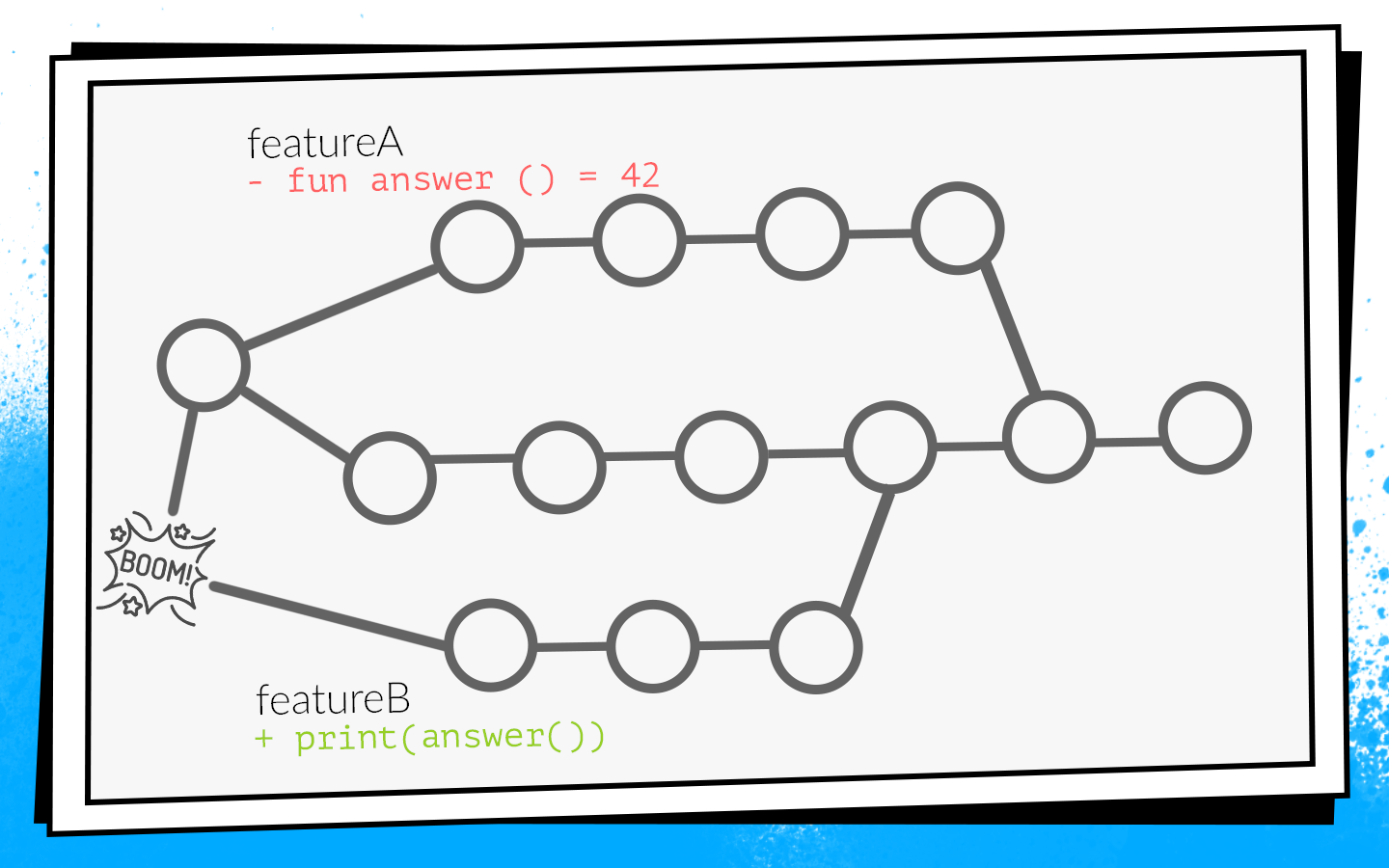

Two developers start working on features A and B at the same time. The developer of feature A finds an unused function `answer()` in the project and, like a good boy scout, removes it. Meanwhile, the developer of feature B adds a new call to this function in their branch.

Both developers finish their work and open pull requests at the same time. The builds start, and premerge.sh checks both pull requests against the fresh state of develop: all checks are green. Then the pull request for feature A is merged, then the one for feature B... Boom! Develop breaks, because the develop code now calls a function that no longer exists.
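The failure is easy to reproduce in a toy model. The Python sketch below is a hypothetical simplification, not Avito's tooling. A codebase is reduced to the set of functions it defines and the set of functions it calls, and it "compiles" when every call has a definition. Each pull request is checked against the same snapshot of develop, as premerge.sh does.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Codebase:
    defined: frozenset[str]  # functions that exist in the code
    called: frozenset[str]   # functions that something calls

    def compiles(self) -> bool:
        # Toy "compiler": every called function must still be defined.
        return self.called <= self.defined


@dataclass(frozen=True)
class Change:
    removes: frozenset[str] = frozenset()
    adds_calls: frozenset[str] = frozenset()

    def apply(self, base: Codebase) -> Codebase:
        return Codebase(
            defined=base.defined - self.removes,
            called=base.called | self.adds_calls,
        )


develop = Codebase(defined=frozenset({"main", "answer"}), called=frozenset({"main"}))
feature_a = Change(removes=frozenset({"answer"}))     # removes the "unused" answer()
feature_b = Change(adds_calls=frozenset({"answer"}))  # adds a new call to answer()

# premerge.sh-style checks: each PR is merged onto the same develop snapshot.
a_is_green = feature_a.apply(develop).compiles()
b_is_green = feature_b.apply(develop).compiles()

# Both PRs are then merged one after the other.
develop_after_both = feature_b.apply(feature_a.apply(develop))
print(a_is_green, b_is_green, develop_after_both.compiles())  # True True False
```

Each check is correct for the snapshot it sees. The breakage only exists in a state that no build ever checked.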

When develop doesn't build, it is a local catastrophe: the whole team can't build anything or hand anything over for testing.

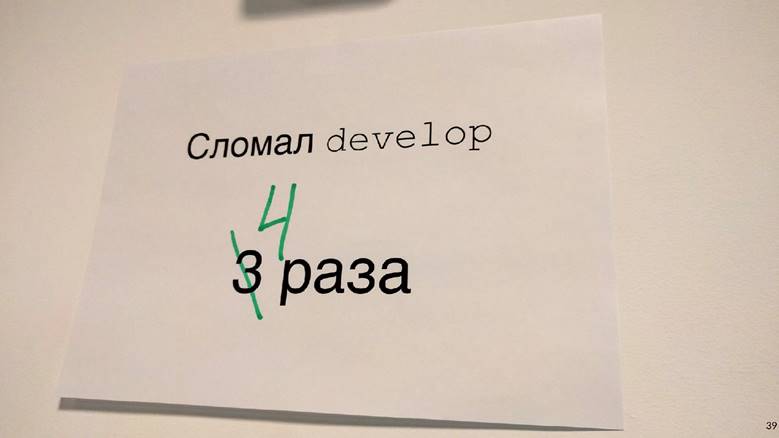

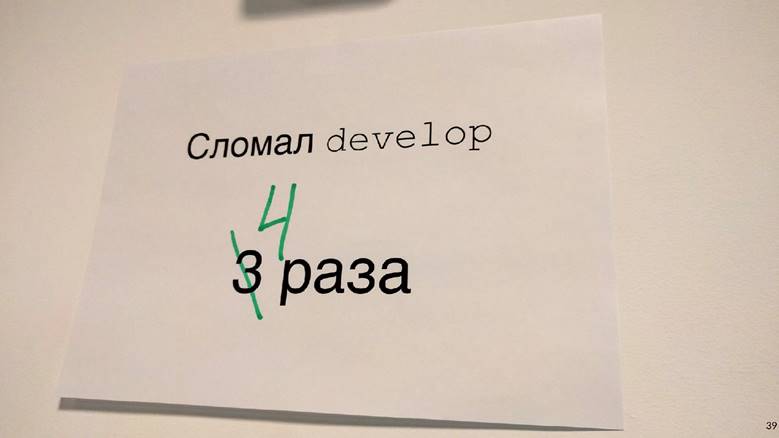

It so happened that I mostly worked on infrastructure tasks: analytics, networking, databases. That is, I wrote the functions and classes that other developers use. Because of this, I very often ended up in such situations. I even kept this picture around for a while.

This didn't suit us, so we started working out how to prevent it.

How not to break develop

The first option: rebuild all open pull requests every time develop is updated. If, in our example, the pull request with feature A gets into develop first, the pull request with feature B is rebuilt, and its checks fail with a compilation error.

To estimate how much time this takes, consider the example with two PRs. We open two PRs: two builds, two test runs. After the first PR is merged into develop, the second has to be rebuilt. In total, two PRs require three check runs: 2 + 1 = 3.

In principle, that's fine. But we looked at the statistics, and the typical situation in our team was 10 open PRs. The number of check runs is then the sum of an arithmetic progression: 10 + 9 + ... + 1 = 55. That is, merging 10 PRs takes 55 builds. And that is the ideal case, in which all checks pass on the first try and nobody opens another pull request while those ten are being processed.
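These numbers form a triangular sum, 1 + 2 + ... + n = n(n + 1)/2, so the cost grows quadratically with the number of open PRs. A minimal Python sketch, assuming the ideal case described above:

```python
def runs_to_merge(open_prs: int) -> int:
    """Check runs needed to merge `open_prs` pull requests when every merge into
    develop forces all remaining open PRs to rebuild.

    Ideal case only: every run is green and no new PRs are opened meanwhile.
    """
    # n runs in the first round, n - 1 after the first merge, ..., 1 for the last PR.
    return sum(range(1, open_prs + 1))


def runs_closed_form(open_prs: int) -> int:
    # The same triangular number in closed form: n(n + 1) / 2.
    return open_prs * (open_prs + 1) // 2


for n in (2, 10, 20):
    print(n, runs_to_merge(n), runs_closed_form(n))
```

Doubling the queue from 10 to 20 open PRs raises the cost from 55 to 210 runs, almost four times as many.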

Imagine being a developer who has to be the first to click "merge", because if a neighbor does it first, you have to wait until all the builds are rerun... No, that won't work: it would seriously slow development down.

The second option: build the pull request after code review. That is, open the pull request, collect the required number of approvals from colleagues, fix what's needed, and only then launch the builds. If they succeed, the pull request is merged into develop. There are no extra restarts in this case, but feedback becomes much slower. As a developer, when I open a pull request I want to see right away whether it builds. If a test fails, for example, I need to fix it quickly. With deferred builds, feedback slows down, and with it the whole development process. That didn't suit us either.

In the end, only the third option remained: reinventing the wheel. All our code is stored in a Bitbucket Server repository, so we had to write a plugin for Bitbucket.

This plugin overrides the pull request merge mechanism. The start is standard: the PR is opened, all builds run and code review passes. But once code review has passed and the developer clicks "merge", the plugin checks which state of develop the checks ran against. If develop has been updated since the builds ran, the plugin won't let the pull request into the main branch. Instead, it simply restarts the builds against the fresh develop.
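The plugin's source is not shown in the article. The following Python sketch only models the decision it makes when someone clicks "merge"; the class, the function and the commit ids are all hypothetical. It also reproduces the flaw the author acknowledges: builds are restarted only once.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    approved: bool
    checks_green: bool
    checked_against: str     # develop commit the latest checks were run on
    restarted: bool = False  # the plugin restarts the builds only once


def on_merge(pr: PullRequest, develop_head: str) -> str:
    """Hypothetical decision logic for a merge gate like the one described above."""
    if not (pr.approved and pr.checks_green):
        return "blocked"
    if pr.checked_against == develop_head:
        return "merge"
    if pr.restarted:
        # The known gap: develop moved again during the single restart,
        # but the PR is merged anyway.
        return "merge"
    # Checks ran on a stale develop: rerun them once on the current head.
    pr.restarted = True
    pr.checks_green = False
    pr.checked_against = develop_head
    return "restart checks"


pr = PullRequest(approved=True, checks_green=True, checked_against="c1")
first = on_merge(pr, develop_head="c2")   # develop moved since the checks ran
pr.checks_green = True                    # the rerun on c2 came back green
second = on_merge(pr, develop_head="c3")  # develop moved again during the rerun
print(first, "->", second)
```

Allowing more restarts would close the gap, but every restart is another full check run. That is the trade-off discussed below.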

In our example with conflicting changes, these builds fail with a compilation error. The developer of feature B then has to fix the code and restart the checks, after which the plugin merges the pull request automatically.

Before we introduced this plugin, we averaged 2.7 check runs per pull request. With the plugin, it was 3.6. That suited us.

It is worth noting that the plugin has a flaw: it restarts the build only once. So there is still a small window through which conflicting changes can get into develop. But the probability is low, and we accepted this trade-off between the number of runs and the probability of a breakage. In two years it happened only once, so the trade-off was probably justified.

It took us two weeks to write the first version of the Bitbucket plugin.

New checks

Meanwhile, our team kept growing, and new checks were added.

We thought: why fix mistakes if they can be prevented? So we introduced static code analysis. We started with lint, which ships with the Android SDK. But at that time it couldn't handle Kotlin code at all, and 75% of our application was already written in Kotlin. So we added the built-in Android Studio inspections on top of lint.

This required quite a hack: we took Android Studio, packed it into Docker and ran it on CI with a virtual display, so that it thought it was running on a real laptop. But it worked.

Around the same time, we started writing a lot of instrumentation tests and introduced screenshot testing. A reference screenshot is generated for a separate small view. The test then takes a screenshot of the view and compares it with the reference pixel by pixel. A mismatch means the layout has shifted somewhere or something is wrong with the styles.
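The pixel-by-pixel comparison itself is simple. Here is a minimal Python sketch, assuming both screenshots are already decoded into rows of RGB tuples. Decoding the image files is left out, and the tiny 2x2 "screenshots" are made up for illustration.

```python
Pixel = tuple[int, int, int]  # (R, G, B)
Image = list[list[Pixel]]     # rows of pixels


def diff_pixels(reference: Image, actual: Image) -> list[tuple[int, int]]:
    """Return the (x, y) coordinates where the screenshot differs from the reference."""
    if len(reference) != len(actual) or any(
        len(ref_row) != len(act_row) for ref_row, act_row in zip(reference, actual)
    ):
        # A different size is itself a failure: the view's layout changed.
        raise ValueError("screenshot size differs from the reference")
    return [
        (x, y)
        for y, (ref_row, act_row) in enumerate(zip(reference, actual))
        for x, (ref_px, act_px) in enumerate(zip(ref_row, act_row))
        if ref_px != act_px
    ]


WHITE, RED = (255, 255, 255), (255, 0, 0)
reference = [[WHITE, WHITE], [WHITE, RED]]
actual = [[WHITE, WHITE], [RED, RED]]  # one pixel drifted
print(diff_pixels(reference, actual))  # [(0, 1)]
```

An exact comparison like this flags every single-pixel difference. That strictness makes the approach precise, but it is also why such tests can be brittle.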

But instrumentation tests and screenshot tests have to run on devices, either emulators or real ones. With many tests running often, you need a whole farm. Building one ourselves was too hard, so we found a ready-made option: Firebase Test Lab.

Firebase Test Lab

We chose it because Firebase is a Google product, so it should be reliable and is unlikely ever to be shut down. The prices were affordable: $5 per hour of a real device and $1 per hour of an emulator.

It took about three weeks to integrate Firebase Test Lab into our CI.

But the team kept growing, and Firebase, unfortunately, began to let us down. At that time it had no SLA. Sometimes Firebase waited until enough test devices were free instead of starting the tests right away, as we wanted. Waiting in the queue took up to half an hour, which is a very long time. Instrumentation tests ran on every PR, so the delays slowed development down badly, and on top of that a hefty bill arrived every month. In the end, we decided to abandon Firebase and build an in-house solution, since the team had grown large enough.

We took Docker, packed emulators into it, and wrote a simple Python program. At the right moment, it brings up the right number of emulators of the right version, and it stops them when they are no longer needed. And, of course, there are a couple of bash scripts: where would we be without them?
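The article does not show that Python program. Here is a minimal sketch of the core idea, under stated assumptions: a pool that scales the number of running emulators for each Android API level up or down to a requested count. The `start` and `stop` callbacks are hypothetical stand-ins for whatever actually launches and kills the Docker containers.

```python
from collections import defaultdict
from typing import Callable


class EmulatorPool:
    """Keeps the requested number of emulators running for each API level."""

    def __init__(self, start: Callable[[int], str], stop: Callable[[str], None]) -> None:
        self._start = start  # hypothetical: launches an emulator container, returns its id
        self._stop = stop    # hypothetical: stops the container with that id
        self._running: dict[int, list[str]] = defaultdict(list)

    def scale(self, api_level: int, wanted: int) -> None:
        emulators = self._running[api_level]
        while len(emulators) < wanted:
            emulators.append(self._start(api_level))
        while len(emulators) > wanted:
            self._stop(emulators.pop())

    def running(self, api_level: int) -> int:
        return len(self._running[api_level])


# Demo with fake callbacks instead of real containers.
started: list[str] = []
stopped: list[str] = []


def fake_start(api_level: int) -> str:
    emulator_id = f"emulator-{api_level}-{len(started)}"
    started.append(emulator_id)
    return emulator_id


pool = EmulatorPool(start=fake_start, stop=stopped.append)
pool.scale(api_level=28, wanted=3)  # a test run needs three API 28 emulators
pool.scale(api_level=28, wanted=1)  # the run is over: keep one, stop the rest
print(pool.running(28), stopped)
```

Injecting the start and stop actions keeps the scaling logic testable without Docker. It also keeps that logic independent of how emulators are actually hosted.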

It took five weeks to build our own test environment.

As a result, every pull request got an extensive list of checks that blocked merging:

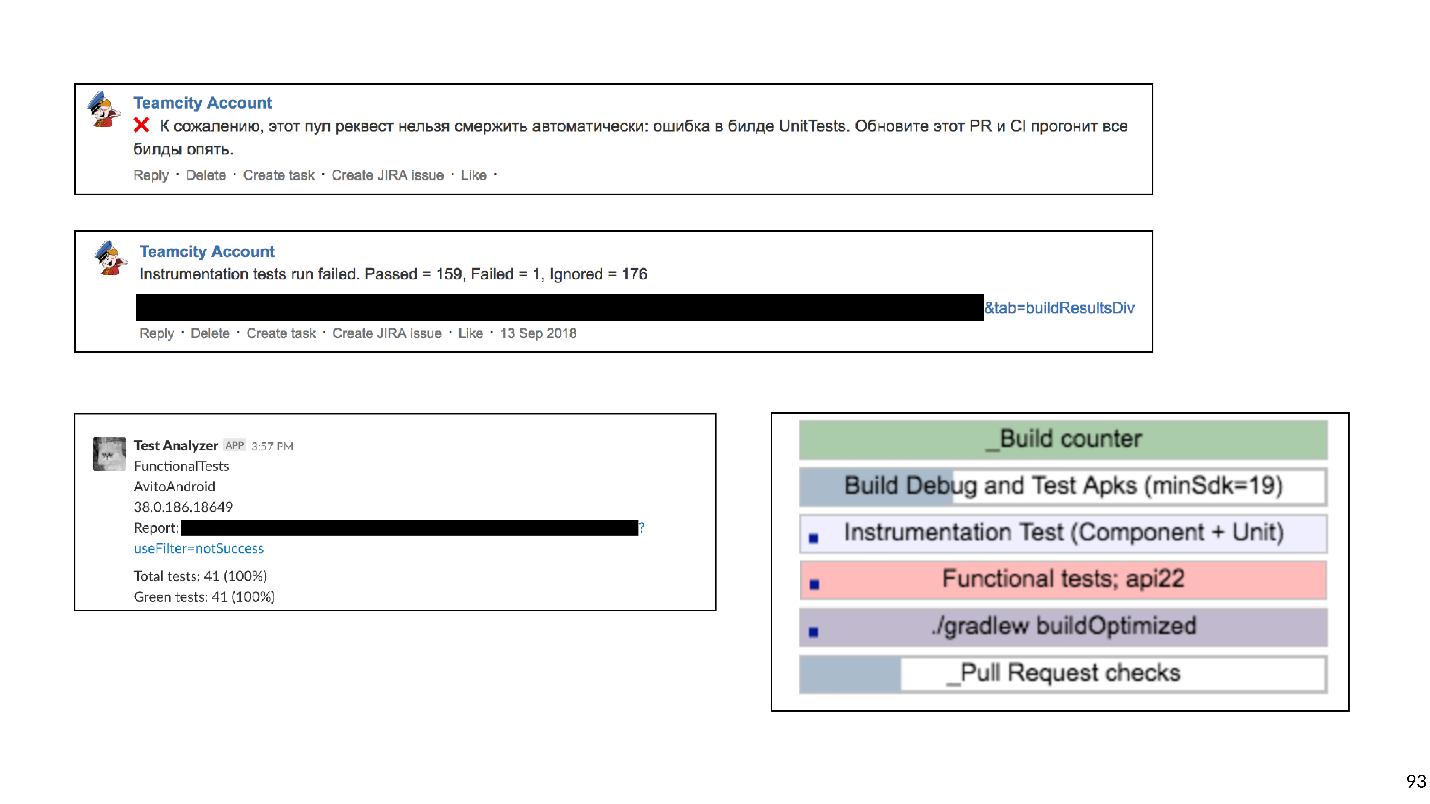

This prevented many potential breakages. Technically everything worked, but developers complained that they had to wait too long for the results.

How long is too long? We loaded data from Bitbucket and TeamCity into an analytics system and found that the average waiting time was 45 minutes. That is, a developer who opens a pull request waits 45 minutes on average for the build results. In my opinion, that is a lot: you can't work like that.
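The metric itself is a plain average. Here is a minimal Python sketch. It assumes that "waiting time" is measured from opening the pull request until its last blocking build finishes; the article does not define it more precisely. The sample data is made up.

```python
from datetime import datetime, timedelta


def average_wait(events: list[tuple[datetime, datetime]]) -> timedelta:
    """Average time from opening a PR until its last blocking build finished.

    `events` holds (pr_opened, last_build_finished) pairs. Exporting them from
    Bitbucket and TeamCity is outside this sketch.
    """
    if not events:
        raise ValueError("no pull requests to average over")
    waits = [finished - opened for opened, finished in events]
    return sum(waits, timedelta()) / len(waits)


# Illustrative data only, not Avito's numbers.
t0 = datetime(2019, 1, 1, 10, 0)
sample = [
    (t0, t0 + timedelta(minutes=30)),
    (t0, t0 + timedelta(minutes=60)),
]
print(average_wait(sample))  # 0:45:00
```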

Of course, we decided to speed up all our builds.

Seeing that builds often sat in a queue, we first bought more hardware: scaling out is the simplest option. The builds stopped queuing, but the waiting time dropped only slightly, because some of the checks themselves took a very long time.

Our Continuous Integration could catch these types of errors and problems.

Looking at this list, we realized that only the first two items are critical: those are the problems we want to catch first. Layout bugs are found at the design-review stage and are easy to fix afterwards. Technical debt requires a separate process and planning, so we decided not to check for it on pull requests.

Based on this classification, we reshuffled the entire list of checks. We crossed out lint and moved it to a nightly run, just so that it reports how many problems the project has. We agreed to deal with technical debt separately, and dropped the Android Studio inspections completely. Running Android Studio in Docker for inspections sounds interesting, but it is a lot of trouble to support: every Android Studio update turns into a fight with obscure bugs. Screenshot tests were also hard to maintain, because the library wasn't very stable and produced false positives. We removed screenshot tests from the list of checks.

As a result, we were left with:

Without the heavy checks, everything got better. But there is always room for improvement!

Our application was already split into about 150 Gradle modules. Gradle remote cache usually works well in such cases, so we decided to try it.

Gradle remote cache is a service that caches build artifacts for individual tasks in individual modules. Instead of actually compiling the code, Gradle asks the remote cache over HTTP whether someone has already run this task. If so, it simply downloads the result.
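The mechanism can be modelled in a few lines of Python. This is a simplified sketch, not Gradle's actual algorithm. The cache key is a hash of the task path and the task's inputs, and a dictionary stands in for the HTTP cache service. Gradle's real keys take many more inputs into account, and the task path used here is illustrative.

```python
import hashlib
from typing import Callable


def cache_key(task_path: str, inputs: dict[str, bytes]) -> str:
    """Hash the task path plus every named input in a stable order."""
    h = hashlib.sha256(task_path.encode())
    for name in sorted(inputs):
        h.update(hashlib.sha256(name.encode()).digest())
        h.update(hashlib.sha256(inputs[name]).digest())
    return h.hexdigest()


def run_with_cache(
    task_path: str,
    inputs: dict[str, bytes],
    execute: Callable[[], bytes],
    remote_cache: dict[str, bytes],
) -> tuple[bytes, bool]:
    """Return (task output, cache hit?)."""
    key = cache_key(task_path, inputs)
    if key in remote_cache:
        return remote_cache[key], True  # hit: download instead of compiling
    output = execute()                  # miss: do the real work...
    remote_cache[key] = output          # ...and upload it for other build agents
    return output, False


remote_cache: dict[str, bytes] = {}
compilations = 0


def compile_module() -> bytes:
    global compilations
    compilations += 1
    return b"compiled classes"


sources = {"src/Main.kt": b"fun main() = println(42)"}
_, first_hit = run_with_cache(":feature:compileDebugKotlin", sources, compile_module, remote_cache)
_, second_hit = run_with_cache(":feature:compileDebugKotlin", sources, compile_module, remote_cache)
print(first_hit, second_hit, compilations)  # False True 1
```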

Running Gradle remote cache is easy, because Gradle provides a Docker image. We managed it in three hours.

All we had to do was start the Docker container and add one line to the project. But although you can get it running quickly, making it work well takes a lot of time.

Below is a chart of cache misses.

At the very beginning, the cache miss rate was about 65%. Within three weeks we brought it down to 20%. It turned out that the tasks in the Android application build had odd transitive dependencies that made Gradle miss the cache.
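The article does not say which transitive dependencies caused the misses. One way this can happen is shown in the hypothetical Python example below. Suppose an upstream module's inputs include something that changes on every build; here that is an invented build number. Then every downstream key changes too, so nothing is ever reused, even when no source file changed.

```python
import hashlib


def task_key(own_inputs: bytes, upstream_keys: list[str]) -> str:
    """Simplified cache key: a task's own inputs plus the keys of what it depends on."""
    h = hashlib.sha256(own_inputs)
    for key in upstream_keys:
        h.update(key.encode())
    return h.hexdigest()


def build_keys(build_number: int, leak_build_number: bool) -> tuple[str, str]:
    """Keys for a hypothetical :core module and a :feature module that depends on it."""
    core_inputs = b"core sources"
    if leak_build_number:
        # Hypothetical volatile input, e.g. a generated file stamped with the build number.
        core_inputs += str(build_number).encode()
    core = task_key(core_inputs, [])
    feature = task_key(b"feature sources", [core])
    return core, feature


# Same sources, two consecutive builds.
print(build_keys(1, leak_build_number=True) == build_keys(2, leak_build_number=True))    # False
print(build_keys(1, leak_build_number=False) == build_keys(2, leak_build_number=False))  # True
```

Fixing this kind of miss means finding the volatile input upstream. The downstream module that keeps missing the cache has nothing wrong with it.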

Connecting the cache sped up the build considerably. But besides the build there are also the instrumentation tests, and they take a long time. Perhaps not every test needs to run on every pull request. To find out, we use impact analysis.

On a pull request, we take the git diff and find the modified Gradle modules.

It makes sense to run only the instrumentation tests that check the modified modules and all modules that depend on them. There is no point in running tests for unrelated modules: their code hasn't changed, so nothing there can break.
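A minimal Python sketch of this selection step follows. It assumes that each Gradle module lives in its own directory and that the module dependency graph is already known; how it is exported from Gradle is out of scope here. Module names and file paths are hypothetical. Changed files are mapped to modules, and the graph is then walked backwards to collect every module that depends on them, directly or transitively.

```python
from collections import deque
from typing import Optional


def module_of(path: str, modules: list[str]) -> Optional[str]:
    """The module whose directory contains `path` (longest match wins)."""
    matches = [m for m in modules if path.startswith(m + "/")]
    return max(matches, key=len, default=None)


def affected_modules(changed_files: list[str], depends_on: dict[str, set[str]]) -> set[str]:
    # Invert the graph: module -> modules that depend on it.
    dependents: dict[str, set[str]] = {m: set() for m in depends_on}
    for module, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(module)

    changed = {m for f in changed_files if (m := module_of(f, list(dependents)))}
    affected = set(changed)
    queue = deque(changed)
    while queue:  # breadth-first walk over reverse dependencies
        for user in dependents.get(queue.popleft(), set()):
            if user not in affected:
                affected.add(user)
                queue.append(user)
    return affected


graph = {
    "app": {"feature/search", "feature/profile"},
    "feature/search": {"core/network"},
    "feature/profile": {"core/ui"},
    "core/network": set(),
    "core/ui": set(),
}
# e.g. the file list from `git diff --name-only origin/develop...HEAD`
diff = ["core/network/src/main/kotlin/Api.kt"]
print(sorted(affected_modules(diff, graph)))
```

A change in `core/network` pulls in `feature/search` and `app`, but not `feature/profile`, whose tests can be skipped.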

With instrumentation tests things are not that simple, because they must live in the top-level application module. We used heuristics based on bytecode analysis to work out which module each test belongs to.
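The article does not spell out the heuristic. Below is a minimal Python sketch of one plausible version. It assumes two things are already available: the classes each test references, extracted from its bytecode (that part is not shown), and a class-to-module map. A test is attributed to every non-app module whose classes it references, and it runs only if one of those modules is in the affected set, which is hard-coded here. All names are hypothetical.

```python
def modules_under_test(referenced: set[str], class_to_module: dict[str, str]) -> set[str]:
    """Modules whose classes a test touches. The top-level app module is ignored,
    because every instrumentation test lives there."""
    return {
        class_to_module[cls]
        for cls in referenced
        if cls in class_to_module and class_to_module[cls] != "app"
    }


def select_tests(
    tests: dict[str, set[str]],
    class_to_module: dict[str, str],
    affected: set[str],
) -> list[str]:
    return sorted(
        name
        for name, referenced in tests.items()
        if modules_under_test(referenced, class_to_module) & affected
    )


class_to_module = {
    "com.example.search.SearchFragment": "feature/search",
    "com.example.network.Api": "core/network",
    "com.example.profile.ProfileFragment": "feature/profile",
    "com.example.app.MainActivity": "app",
}
tests = {
    "SearchScreenTest": {"com.example.search.SearchFragment", "com.example.app.MainActivity"},
    "ProfileScreenTest": {"com.example.profile.ProfileFragment", "com.example.app.MainActivity"},
}
affected = {"core/network", "feature/search", "app"}
print(select_tests(tests, class_to_module, affected))  # ['SearchScreenTest']
```

Ignoring the app module is a deliberate choice in this sketch. The app depends on every other module, so it is almost always affected, and counting it would select every test on every pull request.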

It took about eight weeks to rework the instrumentation tests so that they check only the affected modules.

These measures to speed up the checks worked. We went from 45 minutes to about 15. A quarter of an hour of waiting for a build is acceptable.

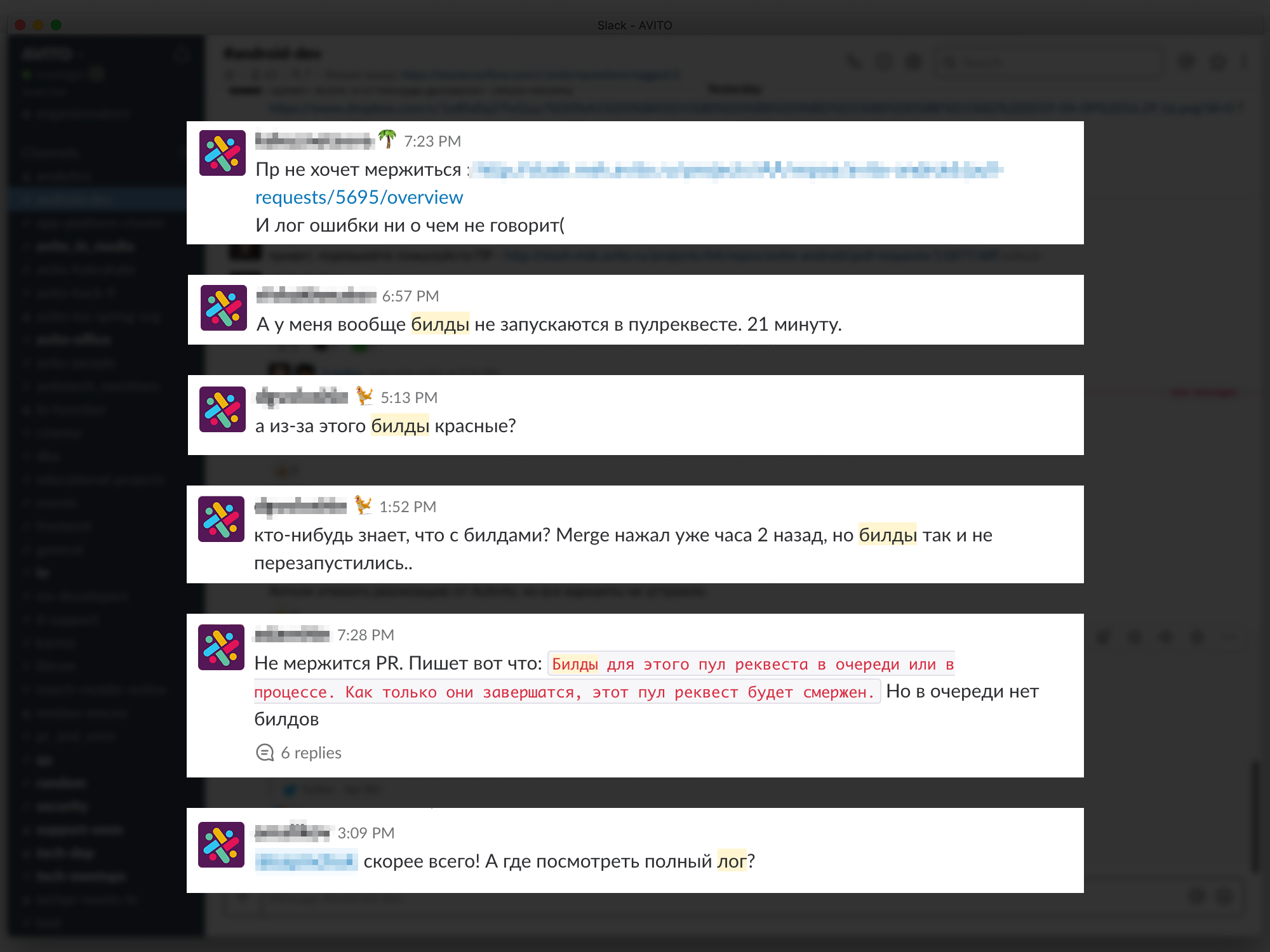

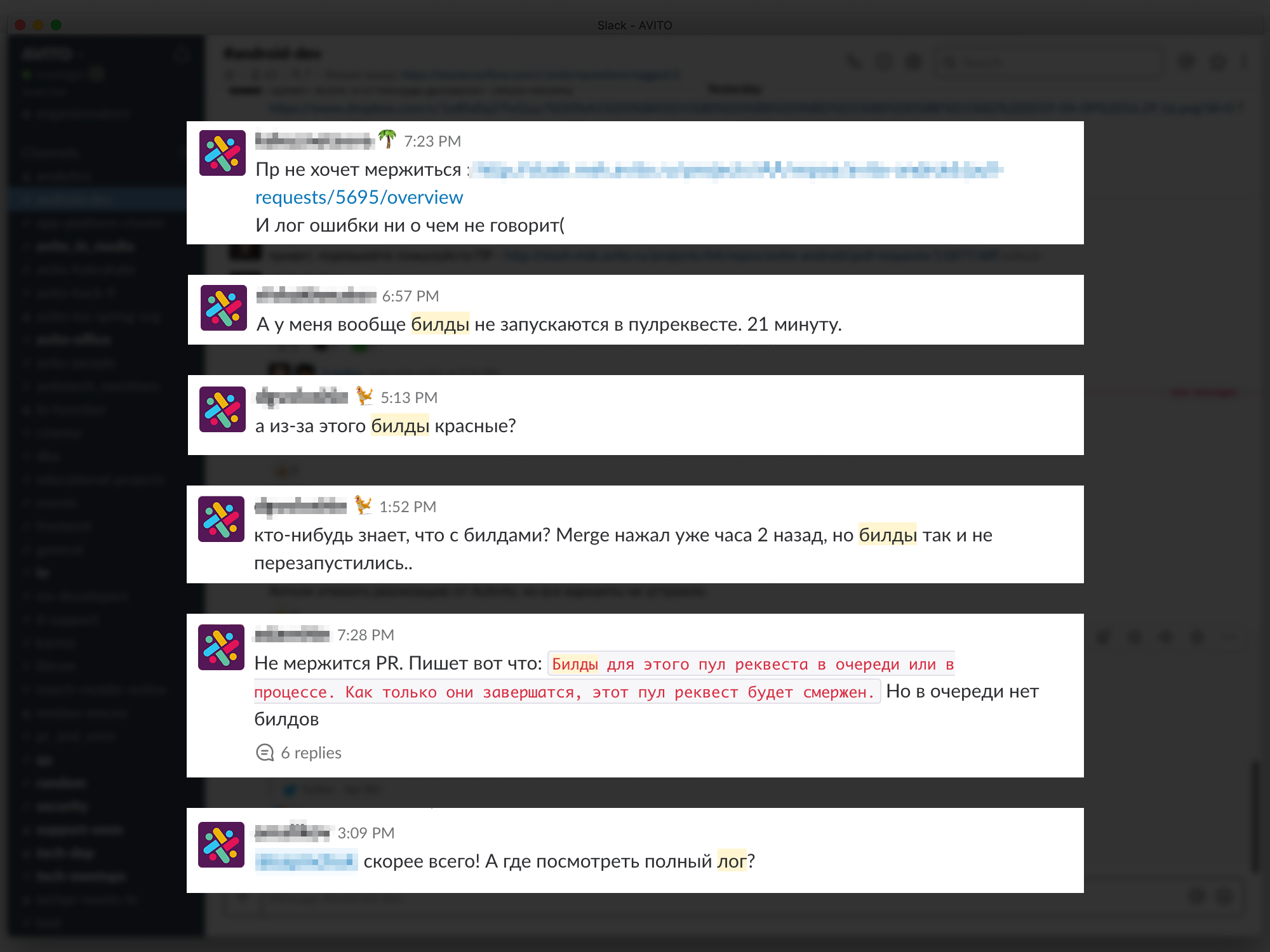

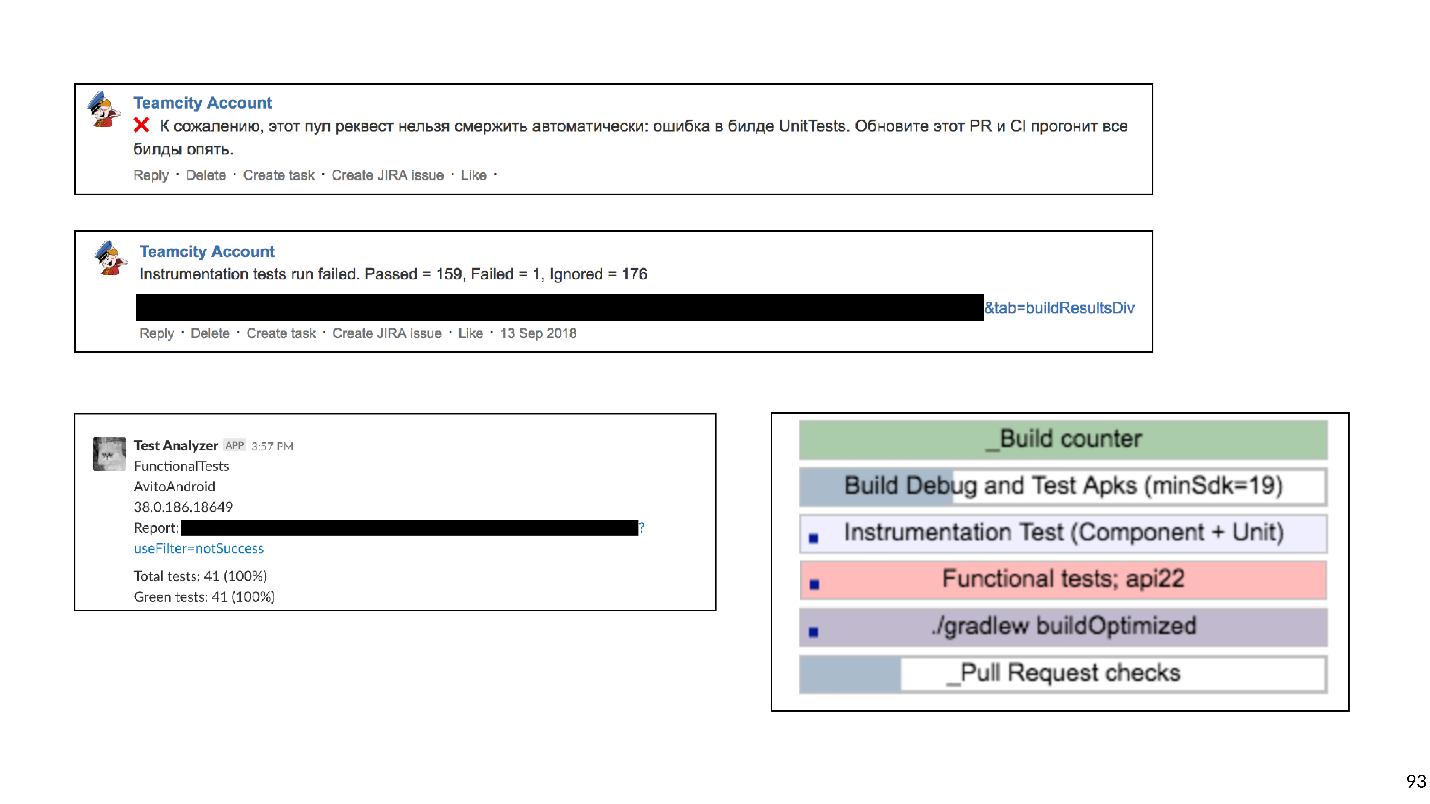

But then developers started complaining that it wasn't clear which builds were running, where to find the log, why a build was red, which test had failed, and so on.

Feedback problems slow development down, so we tried to provide the clearest and most detailed information about each PR and build. We started with Bitbucket comments on the PR saying which build had failed and why, and we sent targeted messages to Slack. Eventually we built a PR dashboard page. It lists all the builds currently running and their status: queued, running, failed or completed. You can click a build to go to its log.
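Here is a minimal Python sketch of how a dashboard like this might roll individual build statuses up into a single PR state. The enum values mirror the four statuses listed above; the build names and the summary format are hypothetical.

```python
from enum import Enum


class BuildStatus(Enum):
    QUEUED = "queued"
    RUNNING = "running"
    FAILED = "failed"
    COMPLETED = "completed"


def pr_state(builds: dict[str, BuildStatus]) -> str:
    """One-line PR summary: name the failed builds first, since that is what a developer needs."""
    failed = sorted(name for name, status in builds.items() if status is BuildStatus.FAILED)
    if failed:
        return "red: " + ", ".join(failed)
    if any(status in (BuildStatus.QUEUED, BuildStatus.RUNNING) for status in builds.values()):
        return "in progress"
    return "green"


builds = {
    "assemble": BuildStatus.COMPLETED,
    "unit-tests": BuildStatus.FAILED,
    "ui-tests": BuildStatus.RUNNING,
}
print(pr_state(builds))  # red: unit-tests
```

The sketch reports a failure as soon as one build fails, without waiting for the rest to finish. That keeps the feedback fast.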

We spent six weeks on detailed feedback.

Now for the most recent history. Having solved the feedback problem, we moved to a new level and decided to build our own emulator farm. When there are many tests and emulators, they are hard to manage. As a result, all our emulators moved to a k8s cluster with flexible resource management.

In addition, there are other plans.

So, we have traced the history of Continuous Integration at Avito. Now I'd like to give some advice based on our experience.

If I could only give one piece of advice, it would be this:

Bash is a very flexible and powerful tool: writing scripts in it is convenient and fast. But it is easy to fall into a trap with it, and we, unfortunately, did.

It all started with simple scripts that ran on our build machines:

But, as you know, everything evolves over time and becomes more complicated. Let's run one script from another, let's pass some parameters to it... In the end, I had to write a function that determines the current bash nesting level so that it could substitute the right quotes and get everything to run.
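The quoting problem is easy to underestimate. The small Python illustration below is not the author's bash function; it uses the standard library's `shlex.quote`. Each extra level of `bash -c` nesting re-escapes every quote added by the previous level, so the command string grows with every level and quickly becomes impossible to maintain by hand.

```python
import shlex


def nest(command: str, levels: int) -> str:
    """Wrap `command` in `bash -c ...` `levels` times, quoting it correctly at each level."""
    for _ in range(levels):
        command = "bash -c " + shlex.quote(command)
    return command


base = "echo 'hello world'"
for depth in range(4):
    wrapped = nest(base, depth)
    print(depth, len(wrapped), wrapped)
```

Here the library does the escaping. Hand-written bash scripts have to get the same bookkeeping right manually, at every level.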

You can imagine how much effort goes into developing such scripts. I advise you not to fall into this trap.

What can bash be replaced with?

We chose the second option, and now we are systematically deleting all our bash scripts and writing lots of custom Gradle tasks.

Tip #2: store your infrastructure in code.

It is convenient when the Continuous Integration configuration is stored not in the Jenkins or TeamCity UI, but as text files right in the project repository. This gives you versioning, so it is easy to roll back or to build the code on another branch.

Scripts can be stored in the project. But what about the environment?

Tip #3: Docker can help with the environment.

It will definitely help Android developers. Unfortunately, there is nothing like it for iOS yet.

Here is an example of a simple Dockerfile containing the JDK and the Android SDK:

Write this Dockerfile and build an image, and you get a container in which you can build the application and run JUnit tests. (A secret: you don't have to write it yourself, you can grab a ready-made one from GitHub.)

There are two main reasons this makes sense: scalability and reproducibility. With Docker, you can quickly spin up a dozen build agents with exactly the same environment as the previous one. This makes life easier for CI engineers. Putting the android-sdk into Docker is quite simple. Emulators are a bit harder and take some effort (or, again, a ready-made image from GitHub).

Tip #4: don't forget that checks exist not for their own sake, but for people.

Developers need fast and, above all, clear feedback: what they broke, which test failed, where to find the build log.

Tip #5: be pragmatic when developing Continuous Integration.

Understand clearly which types of errors you want to prevent, and how much you are willing to spend on them in resources, time and machine time. Checks that take too long can, for example, be moved to a nightly run. Checks that catch unimportant errors can be dropped entirely.

Tip #6: use ready-made tools.

Many companies now provide cloud CI.

For small teams this is a good solution. You don't have to maintain anything: just pay some money, and you can build your application and even run instrumentation tests.

Tip #7: for a large team, in-house solutions pay off.

But sooner or later, as the team grows, in-house solutions become more cost-effective. There is one caveat with them. Economics has a law of diminishing returns: in any project, each successive improvement is harder to achieve and requires more and more investment.

Economics describes our whole life, Continuous Integration included. I plotted the effort spent on each stage of our Continuous Integration development.

You can see that each improvement is harder than the last. The graph shows that Continuous Integration should be developed in step with the growth of the team. For a team of two, spending 50 days building an internal emulator farm is a questionable idea. But for a large team, not investing in Continuous Integration at all is also a bad idea, because integration problems, fixing communication and so on will take even longer.

We started by saying that automation is needed because people are expensive, make mistakes and are lazy. But automation is also written by people, so all the same problems apply to it.

But I have statistics: errors are caught in 20% of builds.


Having written the code, you need to make sure that it:

')

- Works.

- Nothing breaks, including the code that your colleagues wrote.

If both conditions are met, then you are on your way to success. In order to easily check these conditions and not turn away from a profitable path, we invented Continuous Integration.

CI is a workflow where you integrate your code into the common product code as often as possible. And not just integrating, but also constantly checking that everything works. Since you need to check a lot and often, you should think about automation. You can check everything on hand-crafted, but not worth it, and here's why.

- People are dear . The hour of work of any programmer is more expensive than the hour of work of any server.

- People are wrong . Therefore, there may be situations when they run tests on the wrong branch or have collected the wrong commit for testers.

- People are lazy . Periodically, when I finish a task, I have a thought: “What is there to check? I wrote two lines - everything works fine! ”I think some of you also have such thoughts sometimes come to mind. But you should always check.

How to implement and develop Continuous Integration in the Avito mobile development team, how from 0 to 450 assemblies per day reached, and that build machines collect 200 hours a day, says Nikolay Nesterov ( nnesterov ) - participant of all evolutionary changes in the CI / CD Android application .

The story is based on the example of the Android-team, but most approaches are applicable on iOS too.

Once upon a time in the Android team Avito worked one person. By definition, it didn’t need anything from Continuous Integration: it wasn’t anyone to integrate with.

But the application grew, there appeared more and more new tasks, respectively, the team grew. At some point, it is time to formalize the code integration process more formally. It was decided to use Git flow.

The concept of Git flow is well known: the project has one common branch to develop, and for each new feature, the developers cut a separate branch, commit to it, push, and when they want to infuse their code into the develop branch, open pull request. To share knowledge and discuss approaches, we introduced a code review, that is, colleagues must verify and confirm each other’s code.

Checks

Watching the code with your eyes is cool, but not enough. Therefore, automatic checks are introduced.

- First check the assembly of the ARC .

- Many junit tests .

- We consider code coverage , since we run tests.

To understand how to run these checks, let's look at the development process in Avito.

It can be schematically represented as follows:

- The developer writes code on his laptop. You can run integration checks right here - either a commit hook, or just drive checks in the background.

- After the developer has run the code, it opens the pull request. To get its code in the develop branch, you need to go through code review and collect the required number of confirmations. You can enable checks and builds here: while not all builds are successful, pull request cannot be merged.

- After the pull request is merged and the code has gotten into develop, you can choose a convenient time: for example, at night, when all servers are free, and drive checks as much as you like.

Nobody liked to run checks on his laptop. When the developer has finished the feature, he wants to quickly push it and open the pull request. If at this moment some long checks are launched, it is not only not very pleasant, but also slows down the development: while the laptop checks something, it is impossible to work normally on it.

We really liked to run checks at night, because there is a lot of time and servers, you can roam. But, unfortunately, when the feature code got into develop, the developer has much less motivation to fix the errors that CI found. I occasionally caught myself thinking when I looked at the morning report on all the errors found, which I will fix them sometime later, because now in Jira there is a cool new task that I really want to start doing.

If the checks block the pull request, then the motivation is enough, because until the builds go green, the code will not end up in develop, which means that the task will not be completed.

As a result, we chose this strategy: at night we drive the largest possible set of checks, and the most critical of them, and most importantly, the fastest, run on pull request. But we don’t stop there - in parallel, we optimize the speed at which checks pass so that they can be transferred from night mode to check request.

At that time, all of our assemblies were fairly fast, so we simply turned on the blocker to pull request the build of the ARC, Junit tests and calculation of code coverage. We turned it on, thought it over, and abandoned code coverage, because we thought that we didn't need it.

It took us two days to complete the setup of the base CI (hereinafter, a temporary estimate is approximate, needed for scale).

After that, we began to think further - are we checking correctly? Do we run builds correctly on pull request?

We ran the build on the last commit of the branch with which pull request is open. But checking this commit can only show that the code that the developer wrote is working. But they do not prove that he did not break anything. In fact, you need to check the state of the develop branch after the feature has been merged into it.

To do this, we wrote a simple bash script premerge.sh:

#!/usr/bin/env bash set -e git fetch origin develop git merge origin/develop Here, all the latest changes from develop are simply pulled up and merged into the current branch. We added the premerge.sh script with the first step of all the builds and began to check exactly what we want, that is, integration .

It took three days to localize the problem, find a solution and write this script.

The application developed, more and more tasks appeared, the team grew, and premerge.sh sometimes began to fail us. In develop penetrated conflicting changes that broke the assembly.

An example of how this happens:

Two developers start to cut features A and B at the same time. Developer feature A discovers an unused function

answer() in the project and, like a good boy scout, removes it. In this case, the developer features B in his branch adds a new call to this function.Developers finish work and at the same time open pull request. Builds are started, premerge.sh checks both pull requests for a new state of develop - all checks are green. After that, pull request features A, merge pull request features B ... Boom! Develop breaks because in the develop code there is a call to a non-existent function.

When not going to develop, this is a local catastrophe . The whole team can not collect and give anything for testing.

It so happened that I most often dealt with infrastructural tasks: analytics, network, databases. That is, I wrote those functions and classes that other developers use. Because of this, I very often got into such situations. I even had such a picture at one time.

Since this did not suit us, we began to work out options for how to prevent it.

How not to break develop

The first option: rebuild all pull request when developing develop. If in our example pull request with feature A first gets into develop, pull request feature B is rebuilt, and, accordingly, checks fail due to a compilation error.

To understand how much time it will take, consider the two PR example. Open two PRs: two builds, two test runs. After the first PR is injected into develop, the second must be reassembled. In total, two PR runs take three runs of checks: 2 + 1 = 3.

Basically, fine. But we looked at the statistics, and the typical situation in our team was 10 open PRs, and then the number of checks is the sum of the progression: 10 + 9 + ... + 1 = 55. That is, to accept 10 PR, you need to reassemble 55 times. And this is in an ideal situation, when all the checks pass the first time, when no one opens the additional pull request while this ten is processed.

Imagine yourself as a developer who needs to have time to click on the “merge” button first, because if a neighbor does it, you will have to wait until all the assemblies are redone ... No, that won't work, it will seriously slow down development.

The second possible way: collect the pull request after the code review. That is, open the pull request, collect the required number of apruvas from colleagues, fix what you need, then launch the builds. If they are successful, pull request merges with develop. In this case, there are no additional restarts, but the feedback is greatly slowed down. I, as a developer, opening a pull request, just want to see if he is going. For example, if a test fell, you need to quickly fix it. In the case of a deferred assembly, the feedback slows down, and hence the entire development. It did not suit us either.

As a result, there was only the third option - the bike . All our code, all our sources are stored in a repository in a Bitbucket server. Accordingly, we had to develop a plugin for Bitbucket.

This plugin overrides the pull request merge mechanism. The beginning is standard: PR opens, all assemblies run, code review passes. But after the code review has been passed, and the developer decides to click on “merge”, the plugin checks for which state of develop the checks were run. If after the develops build has been updated, the plugin will not allow such a pull request to be inserted into the main branch. It will simply restart the builds for a relatively fresh develop.

In our example with conflicting changes, such builds will not work due to a compilation error. Accordingly, the developer of the feature B will have to correct the code, restart the checks, then the plugin will automatically apply pull request.

Before the introduction of this plug-in, we had an average of 2.7 start-up checks per pull request. With the plugin was 3.6 launch. It suited us.

It is worth noting that this plugin has a flaw: it restarts the build only once. That is, there is still a small window through which conflicting changes can get into develop. But the probability of this is low, and we agreed on this compromise between the number of launches and the probability of a breakdown. For two years, fired only once, therefore, probably not in vain.

It took us two weeks to write the first version of the plug-in for Bitbucket.

New checks

Meanwhile, our team kept growing, and we added new checks.

We thought: why fix mistakes if they can be prevented? So we introduced static code analysis. We started with Lint, which ships with the Android SDK. But at that time it could not handle Kotlin code at all, and 75% of our application was already written in Kotlin. So we added the built-in Android Studio inspections on top of Lint.

To do this, we had to resort to a serious hack: we packed Android Studio into Docker and ran it on CI with a virtual display so that it believed it was running on a real laptop. But it worked.

Around the same time, we started writing a lot of instrumentation tests and introduced screenshot testing. In screenshot testing, a reference screenshot is generated for a single small view; the test then takes a screenshot of that view and compares it with the reference pixel by pixel. If they differ, the layout has shifted somewhere or something is wrong with the styles.
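A minimal sketch of the pixel-by-pixel comparison, assuming the screenshots have already been decoded into rows of RGB tuples (a real setup would decode PNG files with an image library). The function names are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B)
Image = List[List[Pixel]]      # a list of rows, each a list of pixels


def diff_pixels(reference: Image, actual: Image) -> List[Tuple[int, int]]:
    """Return the (x, y) coordinates of every pixel that differs from the reference."""
    if len(reference) != len(actual) or any(
        len(ref_row) != len(act_row) for ref_row, act_row in zip(reference, actual)
    ):
        raise ValueError("screenshot size differs from the reference")
    return [
        (x, y)
        for y, (ref_row, act_row) in enumerate(zip(reference, actual))
        for x, (ref_px, act_px) in enumerate(zip(ref_row, act_row))
        if ref_px != act_px
    ]


def screenshot_matches(reference: Image, actual: Image) -> bool:
    """A screenshot test passes only if the sizes match and no pixel differs."""
    try:
        return not diff_pixels(reference, actual)
    except ValueError:
        return False
```

Exact equality is the simplest rule; note that it treats even a one-pixel rendering difference as a failure.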

But instrumentation tests and screenshot tests have to run on devices, either emulators or real ones. Since there are many tests and they run often, you need a whole device farm. Building a farm ourselves was too hard, so we found a ready-made option: Firebase Test Lab.

Firebase Test Lab

We chose it because Firebase is a Google product, so it should be reliable and is unlikely ever to be shut down. Prices were affordable: $5 per hour of real-device time and $1 per hour of emulator time.

It took about three weeks to integrate Firebase Test Lab into our CI.

But the team kept growing, and Firebase, unfortunately, began to let us down. At that time it had no SLA. Sometimes Firebase made us wait until enough test devices became free instead of starting our tests right away, as we wanted. Waiting in the queue took up to half an hour, which is a very long time. Instrumentation tests ran on every PR, so these delays slowed development down a lot, and on top of that a hefty bill arrived every month. In the end, we decided to abandon Firebase and build our own in-house solution, since the team had grown large enough.

Docker + Python + bash

We took Docker, packed emulators into it, and wrote a simple Python program that brings up the right number of emulators of the right version at the right moment and shuts them down when they are no longer needed. And, of course, a couple of bash scripts: where would we be without them?
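The Python program itself is not shown in the article. As a hypothetical sketch, its core decision (how many emulators of each Android API level to start or stop) could look like the function below; actually launching and stopping the Docker containers is left out.

```python
from typing import Dict, List, Tuple


def plan_emulators(wanted: Dict[int, int], running: Dict[int, int]) -> List[Tuple[str, int, int]]:
    """Compare desired and running emulator counts per Android API level.

    Returns (action, api_level, count) tuples, where action is "start" or
    "stop". API levels that already have the right count are omitted.
    """
    actions: List[Tuple[str, int, int]] = []
    for api in sorted(set(wanted) | set(running)):
        delta = wanted.get(api, 0) - running.get(api, 0)
        if delta > 0:
            actions.append(("start", api, delta))
        elif delta < 0:
            actions.append(("stop", api, -delta))
    return actions
```

Keeping the planning step a pure function makes it easy to test without any emulators running; only the thin layer that executes the actions needs Docker.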

It took five weeks to build our own test environment.

As a result, each pull request had an extensive list of checks, all of which blocked merging:

- Building the APK;

- JUnit tests;

- Lint;

- Android Studio checks;

- Instrumentation tests;

- Screenshot tests.

This prevented many possible breakages. Technically everything worked, but developers complained that they had to wait too long for results.

How long is too long? We loaded data from Bitbucket and TeamCity into our analytics system and found that the average waiting time was 45 minutes. That is, a developer who opens a pull request waits, on average, 45 minutes for build results. In my opinion, that is a lot; you cannot work like that.
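One way to compute such a figure: for each pull request, take the time from triggering the checks until the last build finishes, then average over pull requests. The sketch assumes the Bitbucket and TeamCity data has already been reduced to that shape; the record layout and function names are hypothetical.

```python
from datetime import datetime
from statistics import mean
from typing import List, Tuple

# One record per pull request: when its checks were triggered and when each build finished.
PrRecord = Tuple[datetime, List[datetime]]


def wait_minutes(triggered: datetime, finished: List[datetime]) -> float:
    """A developer waits until the *last* build of the PR has finished."""
    return (max(finished) - triggered).total_seconds() / 60


def average_wait_minutes(records: List[PrRecord]) -> float:
    """Mean waiting time over all pull requests, in minutes."""
    return mean(wait_minutes(triggered, finished) for triggered, finished in records)
```

Using the latest finish time rather than the sum of build durations reflects that builds run in parallel, and the developer is blocked until the slowest one reports.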

Of course, we decided to speed up all our builds.

Speeding up

Seeing that builds often waited in the queue, we first bought more hardware: scaling out is the simplest approach. Builds stopped queuing, but the waiting time decreased only slightly, because some of the checks themselves took a very long time to run.

Removing checks that take too long

Our Continuous Integration could catch the following types of errors and problems:

- Build failure. CI can catch a compilation error when something fails to build because of conflicting changes. As I said, after that nobody can build anything, development stalls, and everyone gets nervous.

- Behavior bug. For example, the application builds, but when you tap a button it crashes, or the button does not respond at all. This is bad because such a bug can reach the user.

- Layout bug. For example, the button works, but it is shifted 10 pixels to the left.

- Growth of technical debt.

Looking at this list, we realized that only the first two points are critical. We want to catch such problems first. Bugs in the layout are detected at the design-review stage and then easily fixed. Working with technical debt requires a separate process and planning, so we decided not to check it for a pull request.

Based on this classification, we reshuffled the entire list of checks. We crossed out Lint and moved it to a nightly run, just so that it produces a report on how many problems there are in the project. We agreed to work on technical debt separately and dropped the Android Studio inspections completely. Running Android Studio in Docker to perform inspections sounds interesting, but it causes a lot of maintenance trouble: every Android Studio update turns into a fight with obscure bugs. Screenshot tests were also hard to maintain, because the library was not very stable and produced false positives. We removed screenshot tests from the list of checks.

As a result, we still have:

- Building the APK;

- JUnit tests;

- Instrumentation tests.
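The triage above can be written down as data: each check is tagged with the kind of problem it mainly catches, and only checks that catch critical problems stay blocking on the PR. The tags below are our reading of the text, and all names are hypothetical.

```python
from typing import Dict, List

# Which problem categories from the list above are critical enough to block a PR.
CRITICAL = {"build_failure": True, "behavior_bug": True,
            "layout_bug": False, "tech_debt": False}

# The main kind of problem each check catches (illustrative tagging).
CHECKS: Dict[str, str] = {
    "apk_build": "build_failure",
    "junit_tests": "behavior_bug",
    "instrumentation_tests": "behavior_bug",
    "lint": "tech_debt",
    "android_studio_inspections": "tech_debt",
    "screenshot_tests": "layout_bug",
}


def split_checks(checks: Dict[str, str]) -> Dict[str, List[str]]:
    """Split checks into PR-blocking ones and ones taken off the PR."""
    result: Dict[str, List[str]] = {"pr_blocking": [], "off_pr": []}
    for name, category in checks.items():
        bucket = "pr_blocking" if CRITICAL[category] else "off_pr"
        result[bucket].append(name)
    return result
```

What happens to the off-PR checks is a separate decision: in our case Lint went to a nightly run, while the Android Studio inspections and screenshot tests were dropped.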

Gradle remote cache

Without heavy checks everything became better. But there is no limit to perfection!

Our application had already been split into about 150 Gradle modules. In such cases the Gradle remote cache usually works well, so we decided to try it.

Gradle remote cache is a service that can cache build artifacts for individual tasks in individual modules. Instead of actually compiling the code, Gradle queries the remote cache over HTTP to ask whether someone has already executed this task. If so, it simply downloads the result.
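Conceptually, the remote cache maps a key derived from a task's inputs to that task's outputs. The sketch below is a simplified model, not Gradle's actual cache-key algorithm: `FakeRemoteCache` is a hypothetical in-memory stand-in for the HTTP service, and the task path and inputs in the test are made up.

```python
import hashlib
from typing import Callable, Dict


def cache_key(task_path: str, inputs: Dict[str, bytes]) -> str:
    """Hash the task's identity and all of its inputs into one key."""
    h = hashlib.sha256(task_path.encode())
    for name in sorted(inputs):            # sorted: the key must not depend on dict order
        h.update(name.encode())
        h.update(hashlib.sha256(inputs[name]).digest())
    return h.hexdigest()


class FakeRemoteCache:
    """In-memory stand-in for the HTTP cache: lookup plays GET, store plays PUT."""

    def __init__(self) -> None:
        self.entries: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0


def run_task(cache: FakeRemoteCache, task_path: str, inputs: Dict[str, bytes],
             execute: Callable[[], bytes]) -> bytes:
    key = cache_key(task_path, inputs)
    if key in cache.entries:               # someone already ran this exact task
        cache.hits += 1
        return cache.entries[key]
    cache.misses += 1                      # miss: do the real work, then share the result
    output = execute()
    cache.entries[key] = output
    return output
```

Any input that differs between machines or builds, even one pulled in transitively, changes the key and turns the lookup into a miss.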

Starting Gradle remote cache is easy because Gradle provides a Docker image. We managed to do it in three hours.

All we had to do was start the Docker container and add one line to the project. But although you can get it running quickly, making it work well takes a lot of time.

Below is a cache misses chart.

At the very beginning, the cache miss rate was about 65%. Within three weeks, we brought it down to 20%. It turned out that the tasks in our Android build had strange transitive dependencies that caused Gradle to miss the cache.

Connecting the cache sped up the build considerably. But besides the build, there are still instrumentation tests, and they take a long time to run. Perhaps not every test needs to run on every pull request. To find out, we use impact analysis.

Impact analysis

For each pull request, we take the git diff and find the modified Gradle modules.

It makes sense to run only the instrumentation tests that check the modified modules and all the modules that depend on them. There is no point in running tests for unrelated modules: their code has not changed, so nothing there can break.
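A minimal sketch of the module-level part of impact analysis. It assumes the changed file paths come from something like `git diff --name-only develop...HEAD`, and that the module directories and the module dependency graph are supplied as plain dictionaries (in a real build they would be read from the Gradle project model). All names are hypothetical.

```python
from collections import deque
from typing import Dict, Iterable, Set


def changed_modules(changed_files: Iterable[str], module_dirs: Dict[str, str]) -> Set[str]:
    """Map changed file paths to the Gradle module that owns each of them."""
    result: Set[str] = set()
    for path in changed_files:
        owners = [m for m, root in module_dirs.items()
                  if path.startswith(root.rstrip("/") + "/")]
        if owners:  # the deepest (longest) matching directory wins for nested modules
            result.add(max(owners, key=lambda m: len(module_dirs[m])))
    return result


def affected_modules(changed: Set[str], deps: Dict[str, Set[str]]) -> Set[str]:
    """Changed modules plus every module that depends on them, directly or transitively."""
    dependents: Dict[str, Set[str]] = {}
    for module, module_deps in deps.items():   # invert "A depends on B" into "B is used by A"
        for dep in module_deps:
            dependents.setdefault(dep, set()).add(module)
    affected = set(changed)
    queue = deque(changed)
    while queue:                                # breadth-first walk up the dependency graph
        for user in dependents.get(queue.popleft(), set()):
            if user not in affected:
                affected.add(user)
                queue.append(user)
    return affected
```

Files outside any module (such as a top-level README) map to nothing here; a real implementation would have to decide whether such changes, for example to build scripts, should trigger everything.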

With instrumentation tests, things are not that simple, because they must live in the top-level application module. We used bytecode-analysis heuristics to work out which module each test belongs to.
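Once impact analysis has produced the set of affected modules, selecting instrumentation tests is a filter over a test-to-module mapping. In the article that mapping comes from bytecode-analysis heuristics; here it is simply passed in as a dictionary, and keeping tests with unknown ownership is our own conservative assumption. The function name is hypothetical.

```python
from typing import Dict, List, Optional, Set


def select_tests(test_owner: Dict[str, Optional[str]], affected: Set[str]) -> List[str]:
    """Return the instrumentation tests worth running for this pull request.

    `test_owner` maps each test class to the module it was attributed to,
    or None if the heuristics could not decide.
    """
    return sorted(
        test for test, module in test_owner.items()
        # Unattributed tests are kept: running an extra test is cheaper than missing a bug.
        if module is None or module in affected
    )
```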

It took about eight weeks to rework how instrumentation tests run so that only the tests for the affected modules are executed.

Our measures to speed up the checks worked: we went from 45 minutes down to about 15. A quarter of an hour of waiting for a build is acceptable.

But then developers started complaining that it was unclear which builds were running, where to find the log, why a build was red, which test had failed, and so on.

Feedback problems slow down development, so we tried to provide the clearest and most detailed information possible about every PR and build. We started with Bitbucket comments on the PR saying which build failed and why, and with targeted Slack messages. Eventually, we built a PR dashboard: a page listing all builds currently running and their statuses (queued, started, failed, or completed). You can click a build to open its log.
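A sketch of the kind of per-PR summary such a dashboard or PR comment can show, using the four statuses mentioned above. `BuildStatus`, `pr_summary`, and the `ci.example` log URLs are hypothetical, not Avito's actual tooling.

```python
from enum import Enum
from typing import Dict


class BuildStatus(Enum):
    QUEUED = "queued"
    STARTED = "started"
    FAILED = "failed"
    COMPLETED = "completed"


# Failures first: that is what the developer needs to see immediately.
DISPLAY_ORDER = [BuildStatus.FAILED, BuildStatus.STARTED, BuildStatus.QUEUED, BuildStatus.COMPLETED]


def pr_summary(builds: Dict[str, BuildStatus], log_url: Dict[str, str]) -> str:
    """Render one line per build, grouped by status, each with a link to its log."""
    lines = []
    for status in DISPLAY_ORDER:
        for name in sorted(n for n, s in builds.items() if s is status):
            lines.append(f"[{status.value}] {name}: {log_url[name]}")
    return "\n".join(lines)
```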

Building detailed feedback took six weeks.

Plans

Now to our most recent history. Having solved the feedback problem, we moved to a new level: we decided to build our own emulator farm. When there are many tests and emulators, they are hard to manage. As a result, all our emulators moved to a k8s cluster with flexible resource management.

We have other plans as well:

- Return Lint (and other static analysis). We are already working in this direction.

- Run all end-to-end tests on all SDK versions as a blocking check on every PR.

So we have traced the history of Continuous Integration at Avito. Now I want to give some advice based on our experience.

Tips

If I could only give one piece of advice, it would be this:

Please be careful with shell scripts!

Bash is a very flexible and powerful tool; writing scripts in it is convenient and fast. But it is easy to fall into a trap with it, and unfortunately we did.

It all started with simple scripts that were run on our build machines:

```bash
#!/usr/bin/env bash
./gradlew assembleDebug
```

But, as you know, everything evolves over time and gets more complicated: let's run one script from another, let's pass some parameters to it. In the end, I had to write a function that determined which level of bash nesting we were at, so that it could substitute the right quotes and get everything to run.

You can imagine how much effort goes into developing scripts like that. I advise you not to fall into this trap.

What can be replaced?

- Any scripting language. Python or Kotlin Script are more convenient, because that is programming rather than scripting.

- Or describe all the build logic as custom Gradle tasks for your project.

We chose the second option, and we are now systematically deleting all our bash scripts and writing lots of custom Gradle tasks.

Tip #2: keep your infrastructure in code.

It is convenient when the Continuous Integration configuration is stored not in the Jenkins or TeamCity UI, but as text files directly in the project repository. This gives you versioning: it is easy to roll back or to build the code on another branch.

Scripts can be stored in the project. But what about the environment?

Tip #3: Docker can help with the environment.

It will definitely help Android developers; unfortunately, iOS does not have this option yet.

Here is an example of a simple Dockerfile that contains the JDK and the Android SDK:

```dockerfile
FROM openjdk:8

ENV SDK_URL="https://dl.google.com/android/repository/sdk-tools-linux-3859397.zip" \
    ANDROID_HOME="/usr/local/android-sdk" \
    ANDROID_VERSION=26 \
    ANDROID_BUILD_TOOLS_VERSION=26.0.2

# Download Android SDK
RUN mkdir "$ANDROID_HOME" .android \
    && cd "$ANDROID_HOME" \
    && curl -o sdk.zip $SDK_URL \
    && unzip sdk.zip \
    && rm sdk.zip \
    && yes | $ANDROID_HOME/tools/bin/sdkmanager --licenses

# Install Android Build Tool and Libraries
RUN $ANDROID_HOME/tools/bin/sdkmanager --update
RUN $ANDROID_HOME/tools/bin/sdkmanager "build-tools;${ANDROID_BUILD_TOOLS_VERSION}" \
    "platforms;android-${ANDROID_VERSION}" \
    "platform-tools"

RUN mkdir /application
WORKDIR /application
```

Once you have written this Dockerfile (a little secret: you don't even have to write it, you can grab a ready-made one from GitHub) and built an image, you get an environment in which you can build the application and run JUnit tests.

There are two main reasons this makes sense: scalability and repeatability. With Docker, you can quickly bring up a dozen build agents with exactly the same environment as the existing one. This makes life much easier for CI engineers. Packing the android-sdk into Docker is quite simple; emulators are a bit harder and take some effort (or, again, you can download a ready-made setup from GitHub).

Tip #4: do not forget that checks exist not for their own sake, but for people.

Developers need fast and, above all, clear feedback: what they broke, which test failed, where to find the build log.

Tip #5: be pragmatic when developing Continuous Integration.

Clearly understand which types of errors you want to prevent and how much you are willing to spend on it in resources, time, and machine time. Checks that take too long can, for example, be moved to a nightly run. And checks that catch only minor errors can be dropped completely.

Tip #6: use ready-made tools.

Many companies now provide cloud CI.

For small teams, this is a good solution. There is nothing to maintain: you just pay some money, build your application, and even run instrumentation tests.

Tip #7: for a large team, in-house solutions pay off.

But sooner or later, as the team grows, in-house solutions become more cost-effective. There is one caveat with them. Economics has the law of diminishing returns: in any project, each subsequent improvement is harder to achieve and requires more and more investment.

Economics describes all of life, including Continuous Integration. I plotted the labor costs for each stage of our Continuous Integration development.

You can see that each improvement is harder to achieve than the last. Looking at this chart, it becomes clear that Continuous Integration should be developed in step with the growth of the team. For a team of two, spending 50 days building an in-house emulator farm is a questionable idea. At the same time, for a large team, not working on Continuous Integration at all is also a bad idea, because integration problems, fixing communication, and so on will take even more time.

We started from the premise that automation is needed because people are expensive, make mistakes, and are lazy. But automation is also built by people, so all the same problems apply to it.

- Automation is expensive. Remember the labor-cost chart.

- People make mistakes in automation too.

- Sometimes people are too lazy to automate, because everything already works as it is. Why improve anything else, why all this Continuous Integration?

But I have statistics: errors are caught in 20% of builds.



Source: https://habr.com/ru/post/447608/
