Splunk Universal Forwarder in Docker as a system log collector

Splunk is one of the most recognizable commercial products for collecting and analyzing logs. Even though it is no longer sold in Russia, that is no reason not to write a how-to about it.

Task: collect system logs from a Docker host into Splunk without changing the host configuration.

I'd like to start with the official approach, which looks rather odd for Docker.

The official image is published on Docker Hub as `splunk/universalforwarder`.

Here is what the official instructions give us:

1. Pull the image:

```shell
$ docker pull splunk/universalforwarder:latest
```

2. Start the container with the required parameters:

```shell
$ docker run -d -p 9997:9997 -e 'SPLUNK_START_ARGS=--accept-license' -e 'SPLUNK_PASSWORD=<password>' splunk/universalforwarder:latest
```

3. Open a shell inside the container:

```shell
docker exec -it <container-id> /bin/bash
```

Next, we are asked to configure the container after it has started:

```shell
./splunk add forward-server <host name or ip address>:<listening port>
./splunk add monitor /var/log
./splunk restart
```

Wait. What?

But the surprises don't end there. If you run a container from the official image interactively, you will see the following.

A small disappointment:

```
$ docker run -it -p 9997:9997 -e 'SPLUNK_START_ARGS=--accept-license' -e 'SPLUNK_PASSWORD=password' splunk/universalforwarder:latest

PLAY [Run default Splunk provisioning] *****************************************
Tuesday 09 April 2019 13:40:38 +0000 (0:00:00.096) 0:00:00.096 *********

TASK [Gathering Facts] *********************************************************
ok: [localhost]
Tuesday 09 April 2019 13:40:39 +0000 (0:00:01.520) 0:00:01.616 *********

TASK [Get actual hostname] *****************************************************
changed: [localhost]
Tuesday 09 April 2019 13:40:40 +0000 (0:00:00.599) 0:00:02.215 *********
Tuesday 09 April 2019 13:40:40 +0000 (0:00:00.054) 0:00:02.270 *********

TASK [set_fact] ****************************************************************
ok: [localhost]
Tuesday 09 April 2019 13:40:40 +0000 (0:00:00.075) 0:00:02.346 *********
Tuesday 09 April 2019 13:40:40 +0000 (0:00:00.067) 0:00:02.413 *********
Tuesday 09 April 2019 13:40:40 +0000 (0:00:00.060) 0:00:02.473 *********
Tuesday 09 April 2019 13:40:40 +0000 (0:00:00.051) 0:00:02.525 *********
Tuesday 09 April 2019 13:40:40 +0000 (0:00:00.056) 0:00:02.582 *********
Tuesday 09 April 2019 13:40:41 +0000 (0:00:00.216) 0:00:02.798 *********
included: /opt/ansible/roles/splunk_common/tasks/change_splunk_directory_owner.yml for localhost
Tuesday 09 April 2019 13:40:41 +0000 (0:00:00.087) 0:00:02.886 *********

TASK [splunk_common : Update Splunk directory owner] ***************************
ok: [localhost]
Tuesday 09 April 2019 13:40:41 +0000 (0:00:00.324) 0:00:03.210 *********
included: /opt/ansible/roles/splunk_common/tasks/get_facts.yml for localhost
Tuesday 09 April 2019 13:40:41 +0000 (0:00:00.094) 0:00:03.305 *********
...
```

Great. The image doesn't even contain the artifact, i.e. the forwarder distribution itself. So every time the container starts, it spends time downloading the binary archive, unpacking it and configuring it.

So what about the Docker way and all that?

No, thanks. We'll take a different route: what if we do all of this at image build time? Let's go!

To keep things short, here is the final image right away:

Dockerfile

```dockerfile
FROM centos:7

# Environment used by Splunk and by the scripts
ENV SPLUNK_HOME /splunkforwarder
ENV SPLUNK_ROLE splunk_heavy_forwarder
ENV SPLUNK_PASSWORD changeme
ENV SPLUNK_START_ARGS --accept-license

# Tools:
# wget - downloads the archives below
# expect - answers Splunk's interactive first-start prompts (see first_start.sh)
# jq - command-line JSON processor
RUN yum install -y epel-release \
    && yum install -y wget expect jq

# Download the Universal Forwarder and the static Docker client, unpack both and delete the archives
RUN wget -O splunkforwarder-7.2.4-8a94541dcfac-Linux-x86_64.tgz 'https://www.splunk.com/bin/splunk/DownloadActivityServlet?architecture=x86_64&platform=linux&version=7.2.4&product=universalforwarder&filename=splunkforwarder-7.2.4-8a94541dcfac-Linux-x86_64.tgz&wget=true' \
    && wget -O docker-18.09.3.tgz 'https://download.docker.com/linux/static/stable/x86_64/docker-18.09.3.tgz' \
    && tar -xvf splunkforwarder-7.2.4-8a94541dcfac-Linux-x86_64.tgz \
    && tar -xvf docker-18.09.3.tgz \
    && rm -f splunkforwarder-7.2.4-8a94541dcfac-Linux-x86_64.tgz \
    && rm -f docker-18.09.3.tgz

# Copy the configs (inputs.conf, props.conf), the docker-stats shell scripts,
# splunkclouduf.spl and first_start.sh; all of them are in the source repository
COPY [ "inputs.conf", "docker-stats/props.conf", "/splunkforwarder/etc/system/local/" ]
COPY [ "docker-stats/docker_events.sh", "docker-stats/docker_inspect.sh", "docker-stats/docker_stats.sh", "docker-stats/docker_top.sh", "/splunkforwarder/bin/scripts/" ]
COPY splunkclouduf.spl /splunkclouduf.spl
COPY first_start.sh /splunkforwarder/bin/

# Create the splunk user, run the first start, install the credentials package and restart
RUN chmod +x /splunkforwarder/bin/scripts/*.sh \
    && groupadd -r splunk \
    && useradd -r -m -g splunk splunk \
    && echo "%sudo ALL=NOPASSWD:ALL" >> /etc/sudoers \
    && chown -R splunk:splunk $SPLUNK_HOME \
    && /splunkforwarder/bin/first_start.sh \
    && /splunkforwarder/bin/splunk install app /splunkclouduf.spl -auth admin:changeme \
    && /splunkforwarder/bin/splunk restart

# Entrypoint and healthcheck scripts
COPY [ "init/entrypoint.sh", "init/checkstate.sh", "/sbin/" ]

# Keep configuration and runtime data in volumes
VOLUME [ "/splunkforwarder/etc", "/splunkforwarder/var" ]

HEALTHCHECK --interval=30s --timeout=30s --start-period=3m --retries=5 CMD /sbin/checkstate.sh || exit 1

ENTRYPOINT [ "/sbin/entrypoint.sh" ]
CMD [ "start-service" ]
```

So, what is inside `first_start.sh`?

```
#!/usr/bin/expect -f
# Answer the interactive prompts of Splunk's very first start
set timeout -1
spawn /splunkforwarder/bin/splunk start --accept-license
expect "Please enter an administrator username: "
send -- "admin\r"
expect "Please enter a new password: "
send -- "changeme\r"
expect "Please confirm new password: "
send -- "changeme\r"
expect eof
```

On first start, Splunk asks you to set an administrator username and password, but these credentials are used only for running administrative commands on this particular installation, i.e. inside the container. In our case we simply want to start the container and have everything work, with the logs flowing like water. Of course, this is hardcoded, but I haven't found any other way.
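For what it's worth, Splunk 7.1 and later (the image uses 7.2.4) also document a `user-seed.conf` file for creating the admin user without prompts. The bash sketch below assumes that mechanism behaves as documented for this forwarder version; the function name `write_user_seed` is hypothetical and this variant has not been tested against the image above:

```shell
# write_user_seed SPLUNK_HOME USER PASSWORD (hypothetical helper)
# Writes $SPLUNK_HOME/etc/system/local/user-seed.conf with a [user_info] stanza.
# Assumption: Splunk 7.1+ reads this file only on a first start, before any admin
# user exists, and creates the admin account from it.
write_user_seed() {
  local home="$1" user="$2" password="$3"
  local dir="$home/etc/system/local"
  mkdir -p "$dir" || return 1
  # Restrictive permissions: the file holds a plaintext password
  (umask 077 && printf '[user_info]\nUSERNAME = %s\nPASSWORD = %s\n' \
    "$user" "$password" > "$dir/user-seed.conf")
}
```

In the Dockerfile this would replace the `expect` step: call `write_user_seed /splunkforwarder admin changeme`, then run `/splunkforwarder/bin/splunk start --accept-license --answer-yes --no-prompt`. The credentials are still hardcoded, just without scripting the prompts.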

Next, the build runs:

```shell
/splunkforwarder/bin/splunk install app /splunkclouduf.spl -auth admin:changeme
```

`splunkclouduf.spl` is the Splunk Universal Forwarder credentials package, which can be downloaded from the web interface.

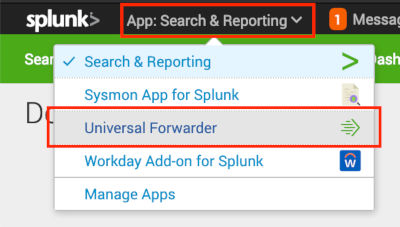

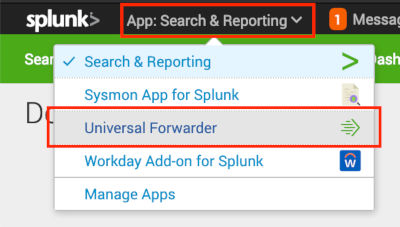

Where to click to download (in pictures)

It is an ordinary archive that you can unpack. Inside are the certificates and password for connecting to our Splunk Cloud, plus an `outputs.conf` listing our input instances. The file stays valid until you reinstall Splunk or, for an on-premise installation, add an input node. So there is nothing wrong with baking it into the container.
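To see what exactly gets baked into the image, you can list the package before building. A minimal bash sketch, assuming the `.spl` file is a gzip-compressed tar archive (the usual packaging for Splunk apps); the helper names `list_spl_contents` and `find_outputs_conf` are hypothetical:

```shell
# list_spl_contents FILE: print every path stored in a .spl package
list_spl_contents() {
  local spl="$1"
  if [ ! -f "$spl" ]; then
    echo "list_spl_contents: no such file: $spl" >&2
    return 1
  fi
  # .spl is assumed to be a tar.gz, so tar can list it directly
  tar -tzf "$spl"
}

# find_outputs_conf FILE: print the path(s) of outputs.conf inside the package
find_outputs_conf() {
  list_spl_contents "$1" | grep -E '(^|/)outputs\.conf$'
}
```

For example, `find_outputs_conf splunkclouduf.spl` shows where the `outputs.conf` with the input instances lives, and `tar -xzf` on the same file unpacks it for a closer look.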

And finally, the restart. Yes, Splunk has to be restarted for the changes to take effect.

In `inputs.conf` we list the logs we want to send to Splunk. You don't have to bake this file into the image if, for example, you deliver configs with Puppet. The main thing is that the forwarder must see the configs when the daemon starts; otherwise you will have to run `./splunk restart`.
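For illustration, here is a bash sketch that writes a hypothetical `inputs.conf` template. The `@index@` and `@hostname@` placeholders are the ones `entrypoint.sh` substitutes at startup; the monitored files, the `_os`/`_docker` index suffixes, the sourcetypes and the interval are assumptions, not the author's actual file:

```shell
# write_inputs_template DIR (hypothetical helper): write an example inputs.conf into DIR.
# The heredoc is quoted ('EOF') so $SPLUNK_HOME stays literal; Splunk expands it itself.
write_inputs_template() {
  local dir="$1"
  mkdir -p "$dir" || return 1
  cat > "$dir/inputs.conf" <<'EOF'
[default]
host = @hostname@

[monitor:///var/log/messages]
index = @index@_os
sourcetype = syslog

[monitor:///var/log/secure]
index = @index@_os
sourcetype = linux_secure

[script://$SPLUNK_HOME/bin/scripts/docker_stats.sh]
index = @index@_docker
interval = 30
sourcetype = docker_stats
EOF
}
```

The `monitor://` stanzas read the host's `/var/log`, which is mounted into the container, and the `script://` stanza points at one of the scripts the Dockerfile copies into `/splunkforwarder/bin/scripts/`.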

What about the docker-stats scripts? There is an old solution by outcoldman on GitHub; I took the scripts from there and adapted them to work with current versions of Docker (CE 17.*) and Splunk (7.*).
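The idea behind such scripts is simple: Splunk runs a scripted input on an interval and indexes whatever it prints to stdout. The bash sketch below is a hypothetical, minimal input in that spirit, not outcoldman's code. It assumes the static client unpacked by the Dockerfile lives at `/docker/docker` (override via `DOCKER_BIN`) and that the host's `docker.sock` is mounted:

```shell
# Path to the Docker client; an assumption based on where the Dockerfile unpacks it
DOCKER_BIN="${DOCKER_BIN:-/docker/docker}"

# emit_docker_stats (hypothetical): print one timestamped key=value line per running container
emit_docker_stats() {
  local now
  now="$(date -u +%Y-%m-%dT%H:%M:%SZ)"
  # --no-stream takes a single sample instead of refreshing continuously
  "$DOCKER_BIN" stats --no-stream \
    --format 'container_id={{.ID}} name={{.Name}} cpu={{.CPUPerc}} mem_perc={{.MemPerc}}' |
    while IFS= read -r line; do
      printf '%s %s\n' "$now" "$line"
    done
}
```

Key=value output keeps field extraction in Splunk trivial; fields with spaces (such as `.MemUsage`) are left out on purpose so they don't break that format.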

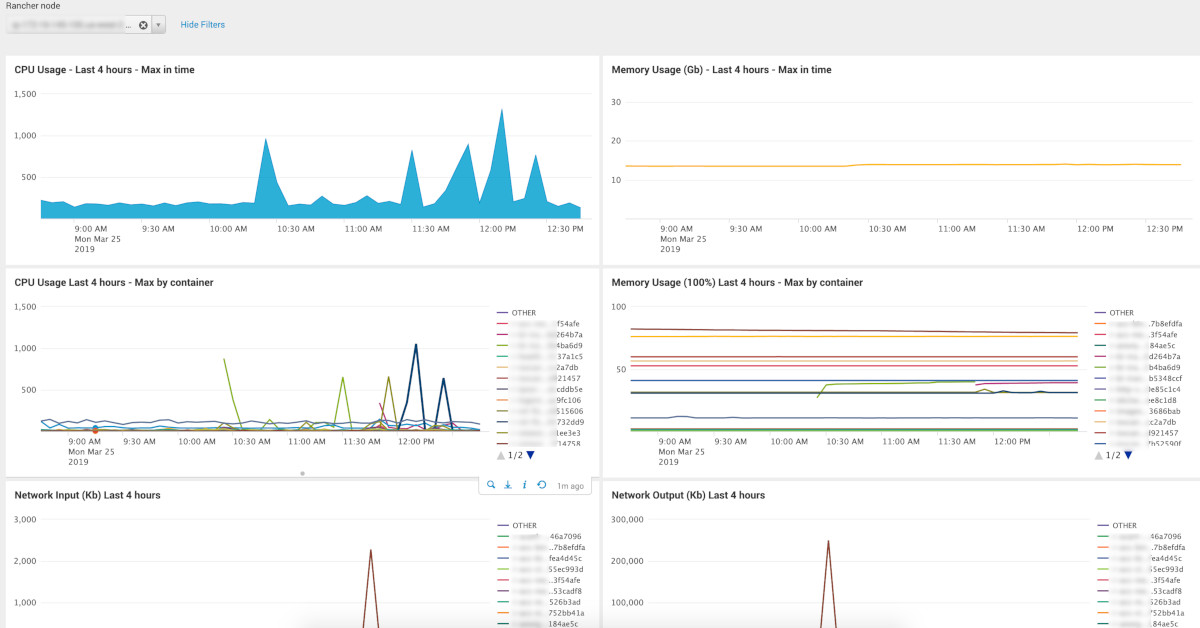

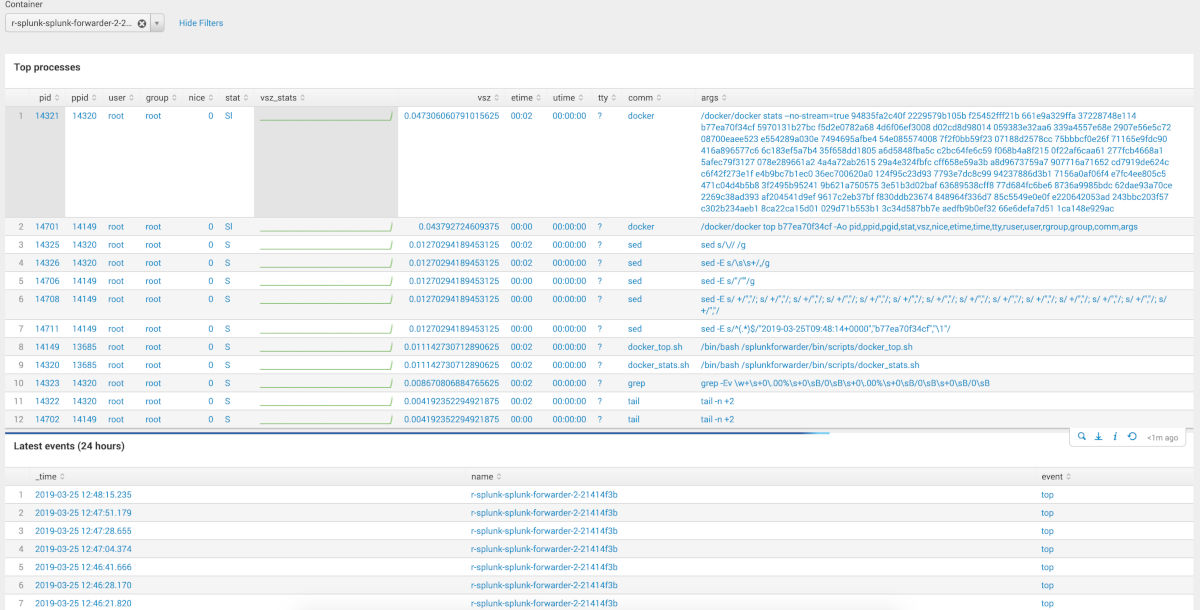

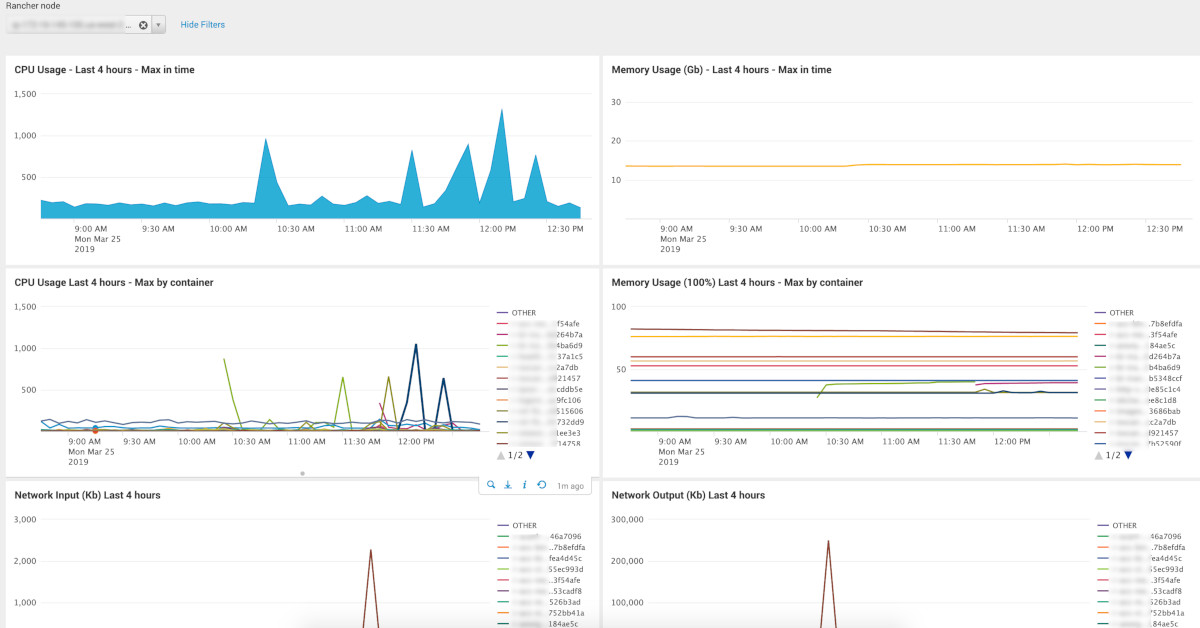

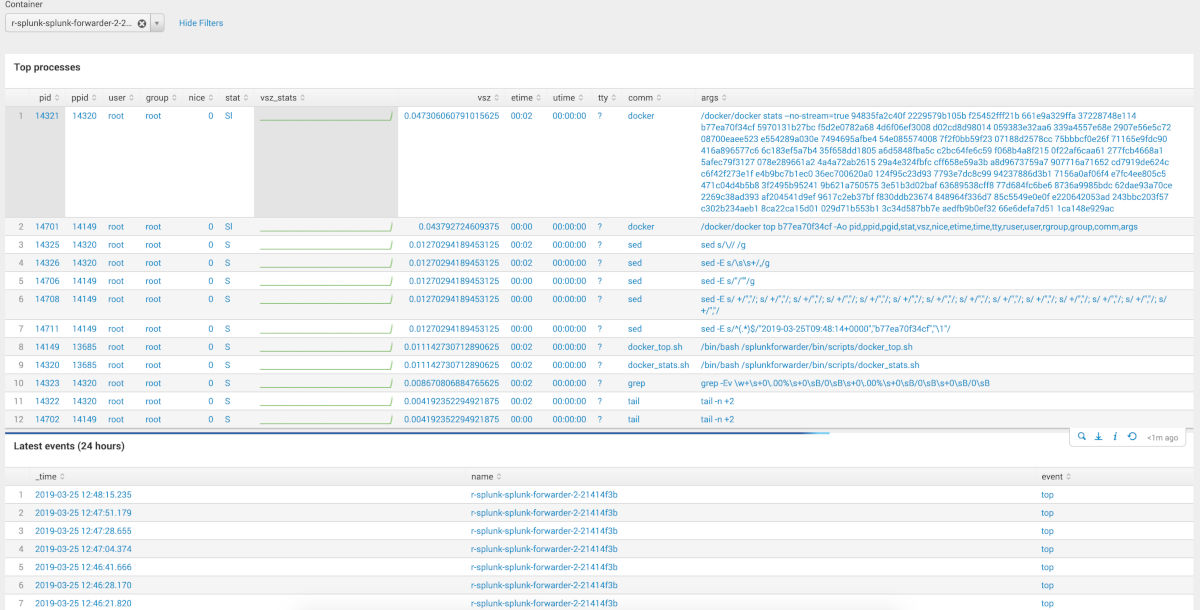

With the data obtained, you can build such

Dashboards: (a couple of pictures)

The dashboards' source code is in the repository linked at the end of the article. Note that they have two select fields: 1) the index (found by a mask) and 2) the host/container. You will most likely need to adjust the index mask to match the index names you use.

Finally, I want to draw attention to the `start()` function in `entrypoint.sh`:

```shell
start() {
  # teardown and watch_for_failure are defined elsewhere in entrypoint.sh (not shown)
  trap teardown EXIT
  # The environment name is mandatory: it becomes the prefix of the index names
  if [ -z "$SPLUNK_INDEX" ]; then
    echo "'SPLUNK_INDEX' env variable is empty or not defined. Should be 'dev' or 'prd'." >&2
    exit 1
  else
    sed -e "s/@index@/$SPLUNK_INDEX/" -i "${SPLUNK_HOME}/etc/system/local/inputs.conf"
  fi
  # Use the hostname from /etc/hostname (mounted from the host) instead of the container ID
  sed -e "s/@hostname@/$(cat /etc/hostname)/" -i "${SPLUNK_HOME}/etc/system/local/inputs.conf"
  # Record the container state
  echo 'starting' > /tmp/splunk-container.state
  "${SPLUNK_HOME}/bin/splunk" start
  watch_for_failure
}
```

In my case, each environment and each individual entity, whether it is an application in a container or the host machine itself, gets its own index, so search speed does not suffer when a lot of data accumulates. Indexes are named by a simple rule: `<environment_name>_<service/application/etc>`. Therefore, to keep the container universal, before starting the daemon itself we use `sed` to replace the placeholder with the environment name. The environment name is passed in through an environment variable. Sounds funny, doesn't it?
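A hypothetical, self-contained variant of the same substitution can be handy for testing the template outside the container. Unlike `start()`, the made-up `render_inputs_conf` function enforces the 'dev'/'prd' values that the error message asks for, replaces every occurrence on a line, and avoids `sed -i`, whose syntax differs between GNU and BSD sed:

```shell
# render_inputs_conf FILE ENV HOSTNAME (hypothetical): fill the @index@/@hostname@ placeholders
render_inputs_conf() {
  local file="$1" env="$2" host="$3"
  # Only the two environments mentioned in entrypoint.sh are accepted
  case "$env" in
    dev|prd) ;;
    *)
      echo "render_inputs_conf: environment must be 'dev' or 'prd', got '$env'" >&2
      return 1
      ;;
  esac
  local tmp
  tmp="$(mktemp)" || return 1
  # Write to a temp file first, then copy back so the original file keeps its inode and mode
  sed -e "s/@index@/${env}/g" -e "s/@hostname@/${host}/g" "$file" > "$tmp" &&
    cat "$tmp" > "$file"
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

Inside the container it would take the place of the two `sed` calls, e.g. `render_inputs_conf "${SPLUNK_HOME}/etc/system/local/inputs.conf" "$SPLUNK_INDEX" "$(cat /etc/hostname)"`. A rejected value leaves the file untouched, so the placeholders are still there for the next attempt.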

It is also worth noting that, for some reason, Splunk ignores Docker's `hostname` parameter: it stubbornly keeps sending logs with the container ID in the `host` field. As a workaround, you can mount `/etc/hostname` from the host machine and substitute it at startup, the same way as the index names.

`docker-compose.yml` example:

```yaml
version: '2'
services:
  splunk-forwarder:
    image: "${IMAGE_REPO}/docker-stats-splunk-forwarder:${IMAGE_VERSION}"
    environment:
      SPLUNK_INDEX: ${ENVIRONMENT}
    volumes:
      - /etc/hostname:/etc/hostname:ro
      - /var/log:/var/log
      - /var/run/docker.sock:/var/run/docker.sock:ro
```

Summary

Granted, the solution is not perfect and certainly not universal, since there is a lot of hardcoding. But anyone can use it as a basis to build their own image and push it to a private artifact repository, if, like me, you happen to need a Splunk Forwarder in Docker.

References:

The solution from this article

The outcoldman solution that inspired reusing part of its functionality

Universal Forwarder configuration documentation

Source: https://habr.com/ru/post/447402/
