Instant design

People learn architecture from old books that were written for Java. The books are good, but they give a solution to the tasks of that time with the tools of that time. Time has changed, C # already looks more like a light Scala than Java, and there are few good new books.

In this article we will look at the criteria for good code and bad code, how and what to measure. We will see an overview of typical tasks and approaches, analyze the pros and cons. At the end there will be recommendations and best practices for designing web applications.

This article is a transcript of my report from the conference DotNext 2018 Moscow. In addition to the text, under the cut there is a video and a link to the slides.

')

As you, probably, could guess, today I will talk about the development of corporate software, namely, how to structure modern web applications:

We formulate the criteria. I really don't like it when the talk about design is conducted in the style of "my kung fu is stronger than your kung fu." Business has, in principle, one specific criterion, which is called "money." Everyone knows that time is money, so these two components are most often the most important.

So, the criteria. In principle, business most often asks us "as many features as possible per unit of time", but with one nuance - these features should work. And the first stage at which it may break is the review code. That is, it seems, the programmer said: "I will do it in three hours." Three hours have passed, the review has come to the code, and the team leader says: "Oh, no, redo it." There are three more - and how many iterations the review code has passed, you need to multiply three hours by that.

The next moment is the return from the acceptance test phase. Same. If the feature does not work, then it is not done, these three hours stretch for a week, two - well, as usual there. The last criterion is the number of regressions and bugs, which nevertheless, despite testing and acceptance, were passed in production. This is also very bad. There is one problem with this criterion. It is difficult to track, because the connection between the fact that we have launched something into the repository, and the fact that after two weeks something broke, it can be difficult to track. But, nevertheless, it is possible.

Long ago, when programmers were just starting to write programs, there was still no architecture, and everyone did everything the way they like.

Therefore, such an architectural style was obtained. We call it “noodles”, abroad they say “spaghetti code”. Everything is connected with everything: we change something at point A - at point B it breaks, to understand what is connected with what is absolutely impossible. Naturally, the programmers quickly realized that this would not work, and we needed to do some kind of structure, and decided that some layers would help us. Now, if you imagine that minced meat is a code, and lasagna are such layers, here is an illustration of a layered architecture. The stuffing was still stuffing, but now the stuffing from layer No. 1 cannot just go and talk to the stuffing from layer No. 2. We gave the code some form: even in the picture you can see that the lasagna is more framed.

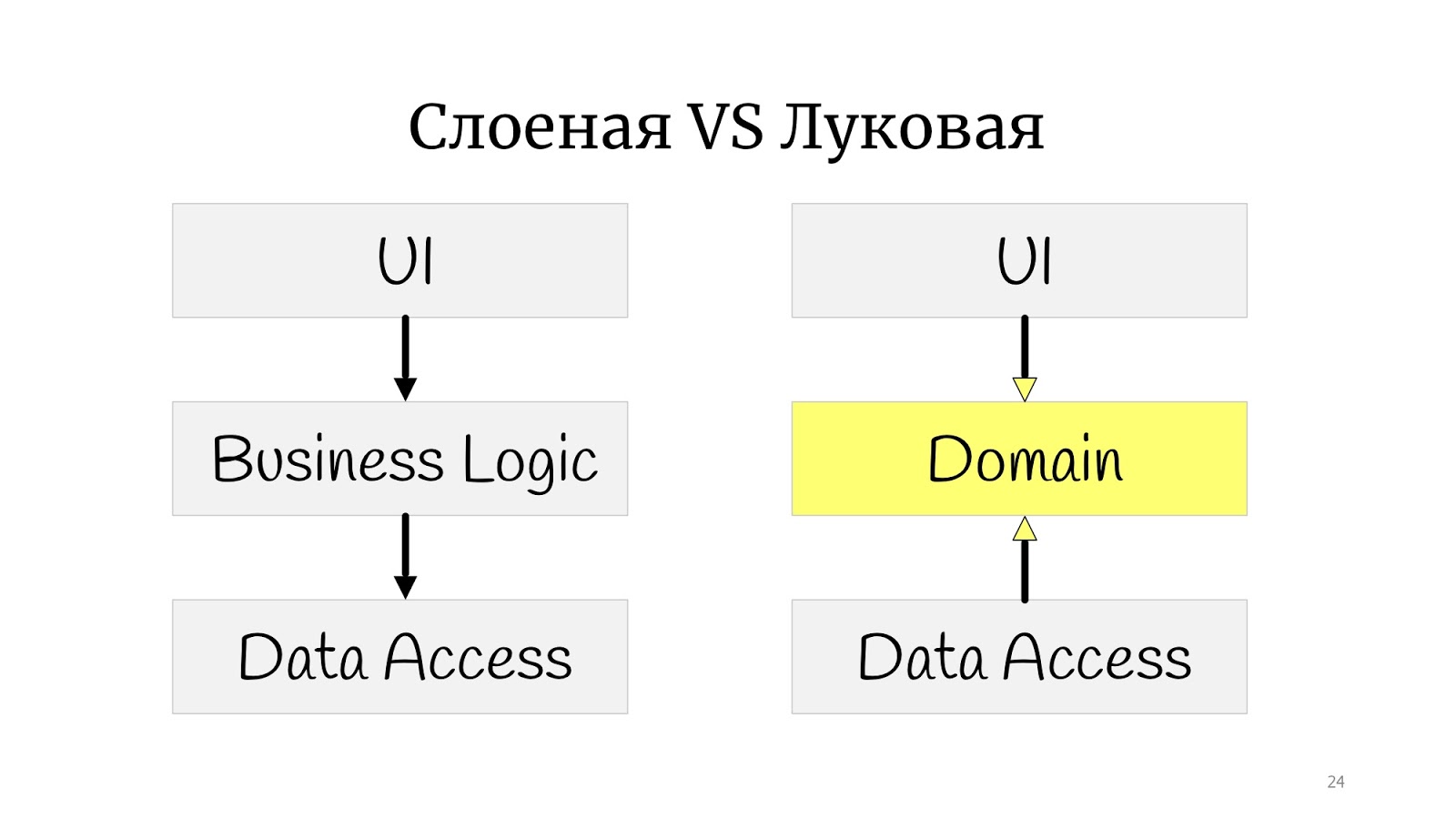

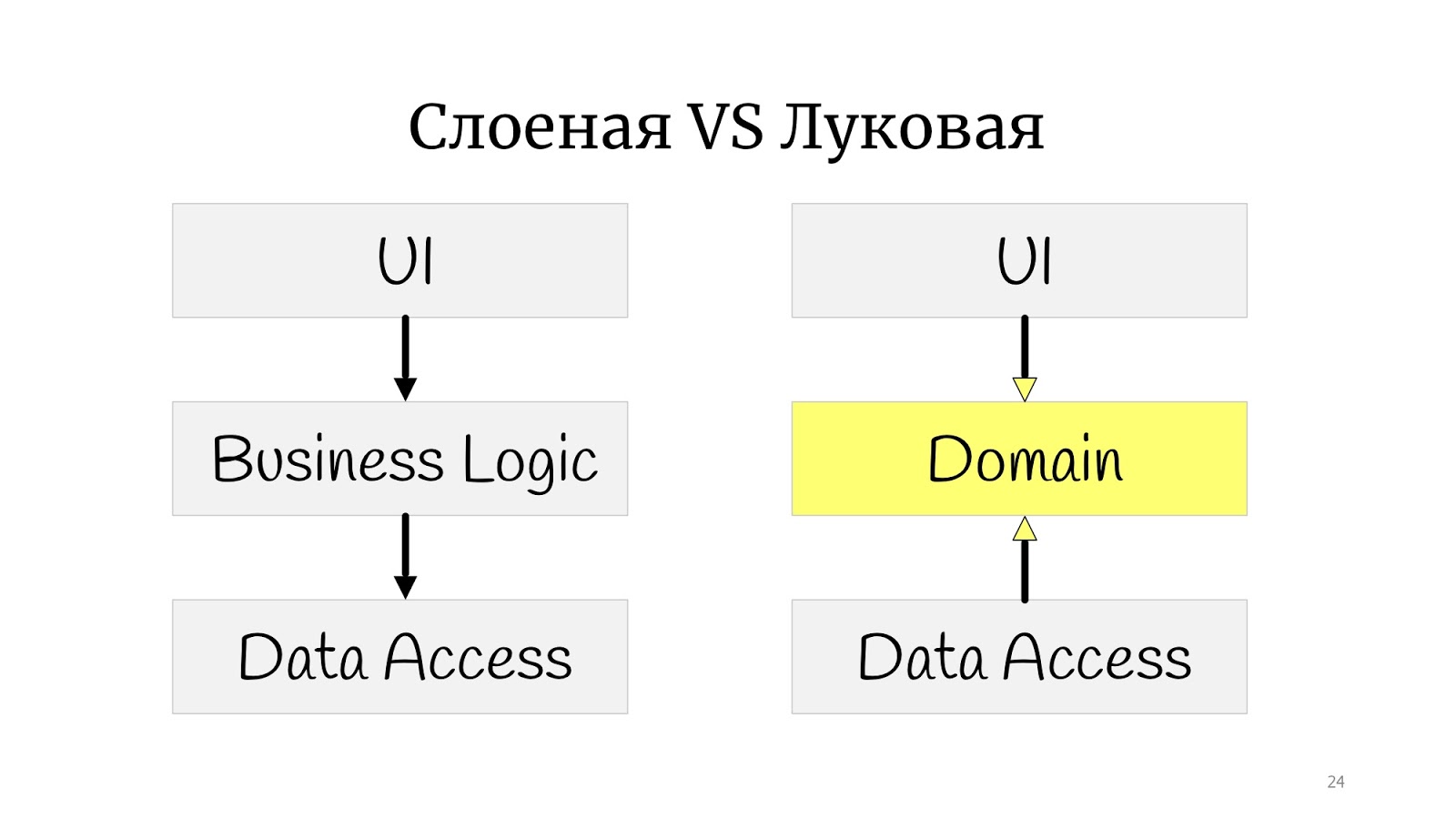

Everyone is familiar with the classical layered architecture : there is a UI, there is a business logic and there is a Data Access layer. There are still all sorts of services, facades and layers, named after the architect who left the company, there can be an unlimited number of them.

The next stage was the so-called onion architecture . It would seem a huge difference: before that there was a square, and then there were circles. It seems that is completely different.

Not really. The only difference is that somewhere at this time the principles of SOLID were formulated, and it turned out that in classical onion there is a problem with dependency inversion, because the abstract domain code for some reason depends on the implementation, on Data Access, therefore Data Access decided to deploy , and made Data Access dependent on the domain.

I practiced drawing and drawing onion architecture here, but not classically with “little rings”. I got something between a polygon and circles. I did this to simply show that if you came across the words “onion,” “hexagonal,” or “ports and adapters,” they are all the same. The point is that the domain in the center, it is wrapped in services, they can be domain or application-services, as you like. And the outside world in the form of UI, tests and infrastructure, where the DAL moved - they communicate with the domain through this service layer.

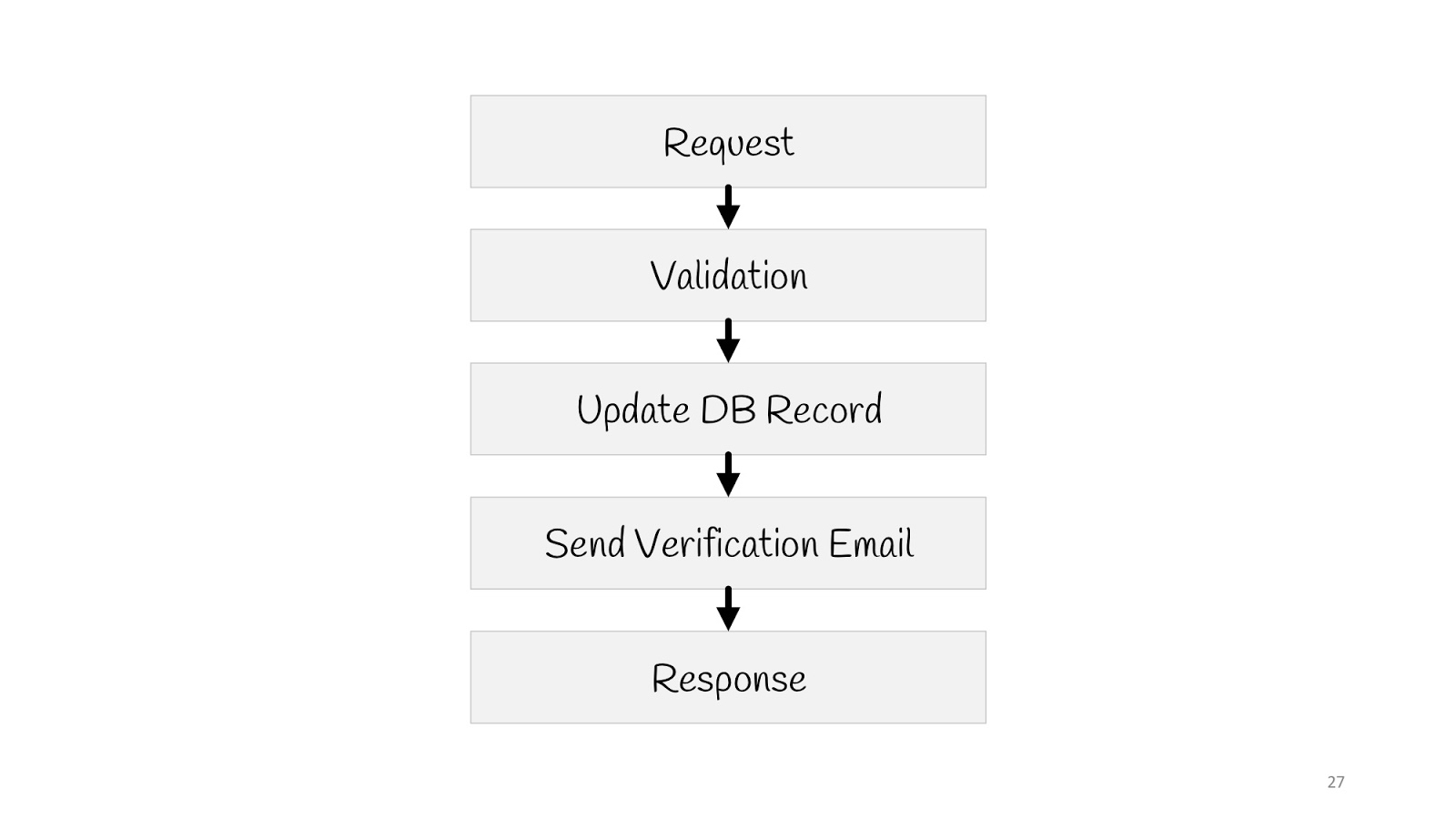

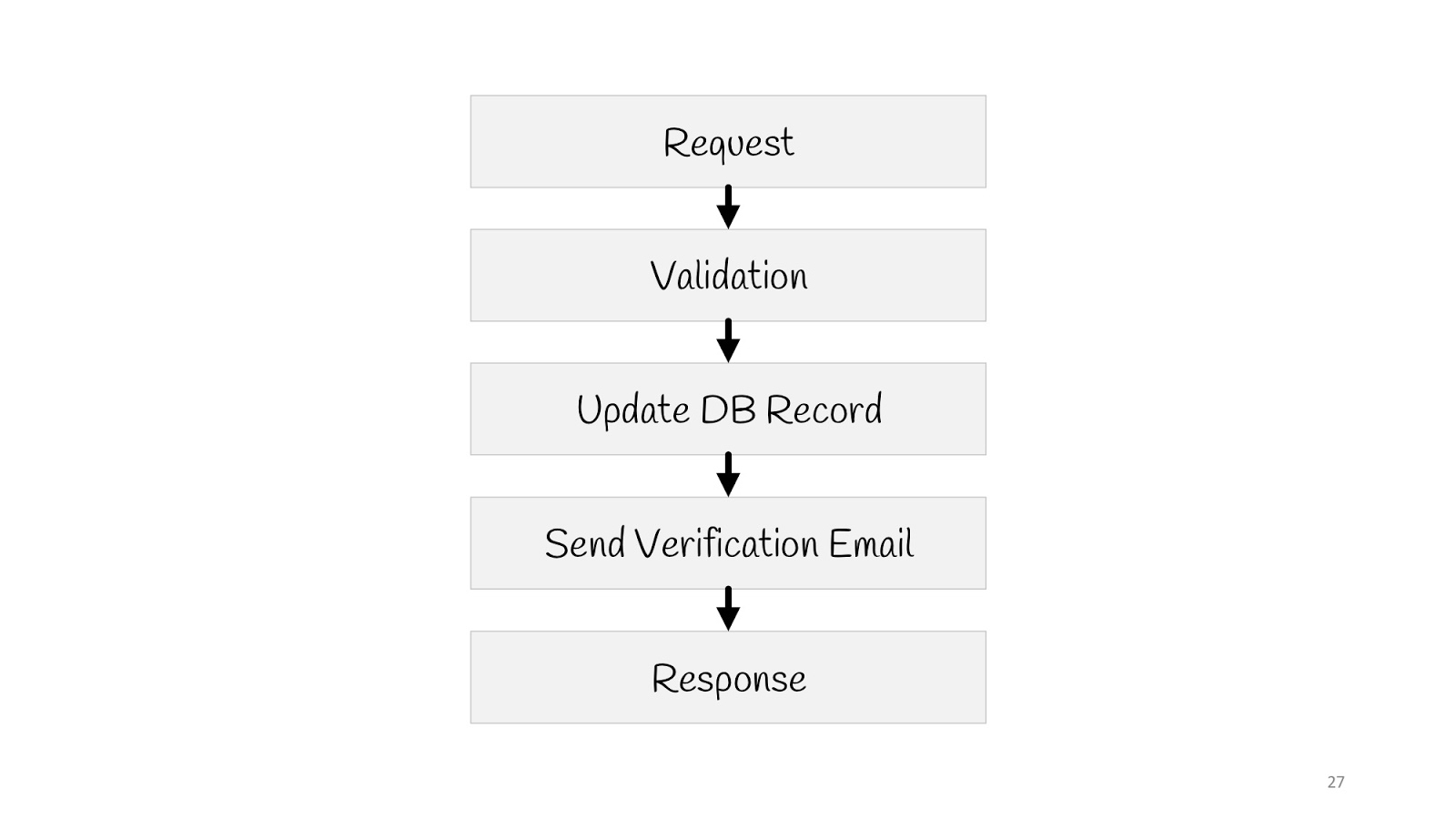

Let's see how in such a paradigm will look like a simple use case - update the user's email.

We need to send a request, perform a validation, update the value in the database, send a notification to the new email: “Everything is fine, you changed the email, we know everything is fine”, and to answer the browser “200” everything is okay.

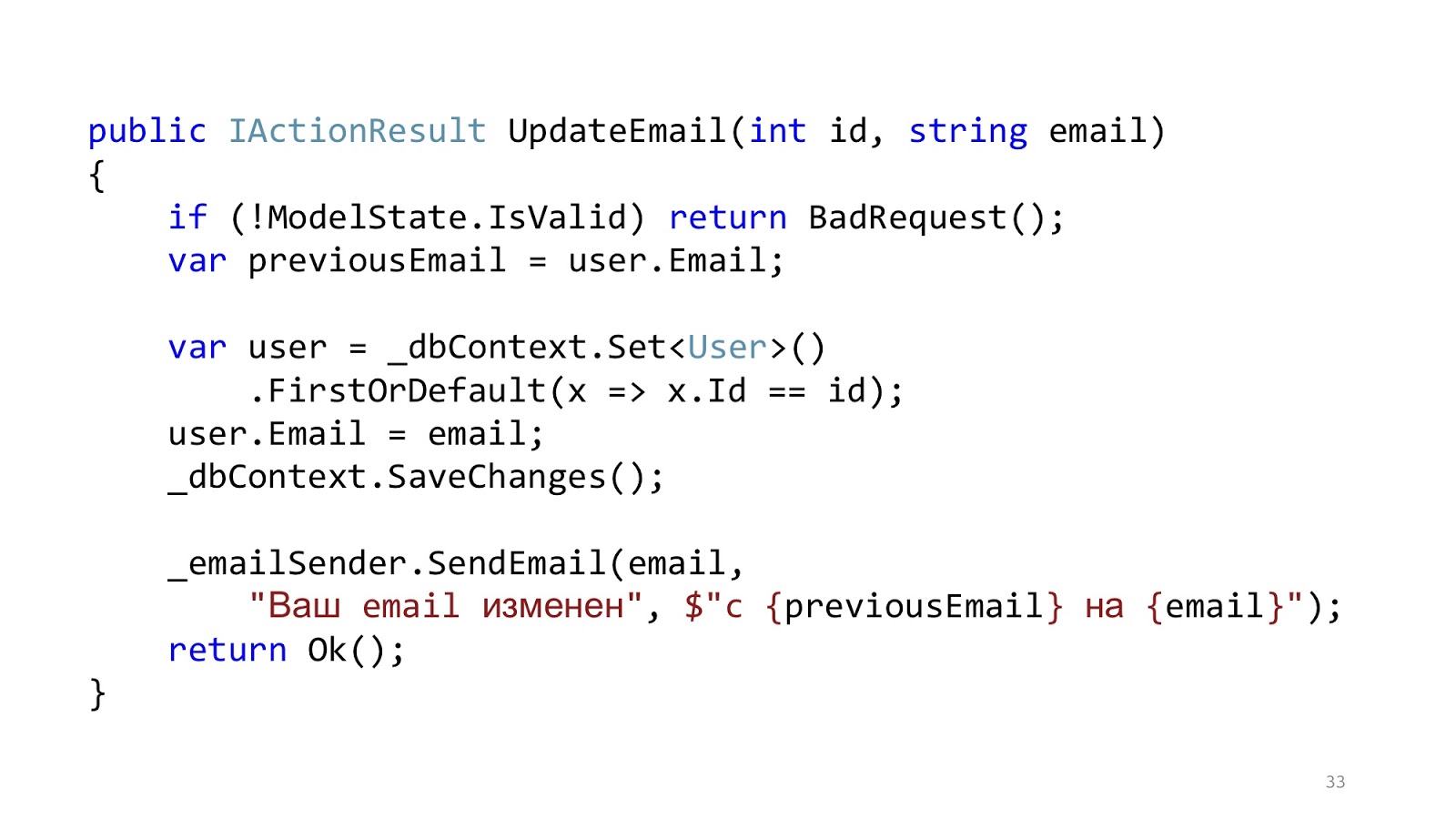

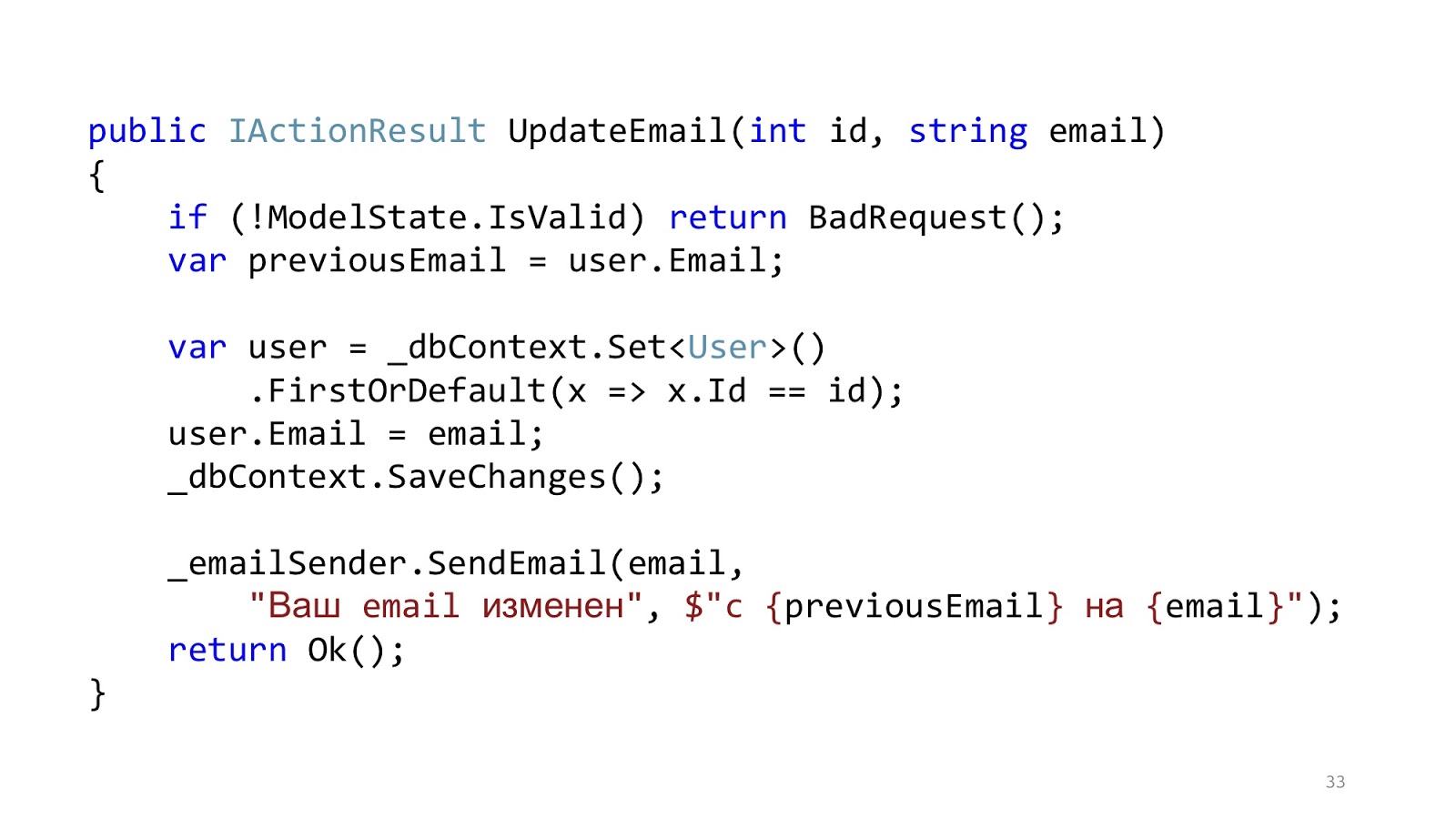

The code might look something like this. Here we have standard ASP.NET MVC validation, there is an ORM to read and update data, and there is some email-sender that sends a notification. It seems like everything is fine, yes? One nuance - in an ideal world.

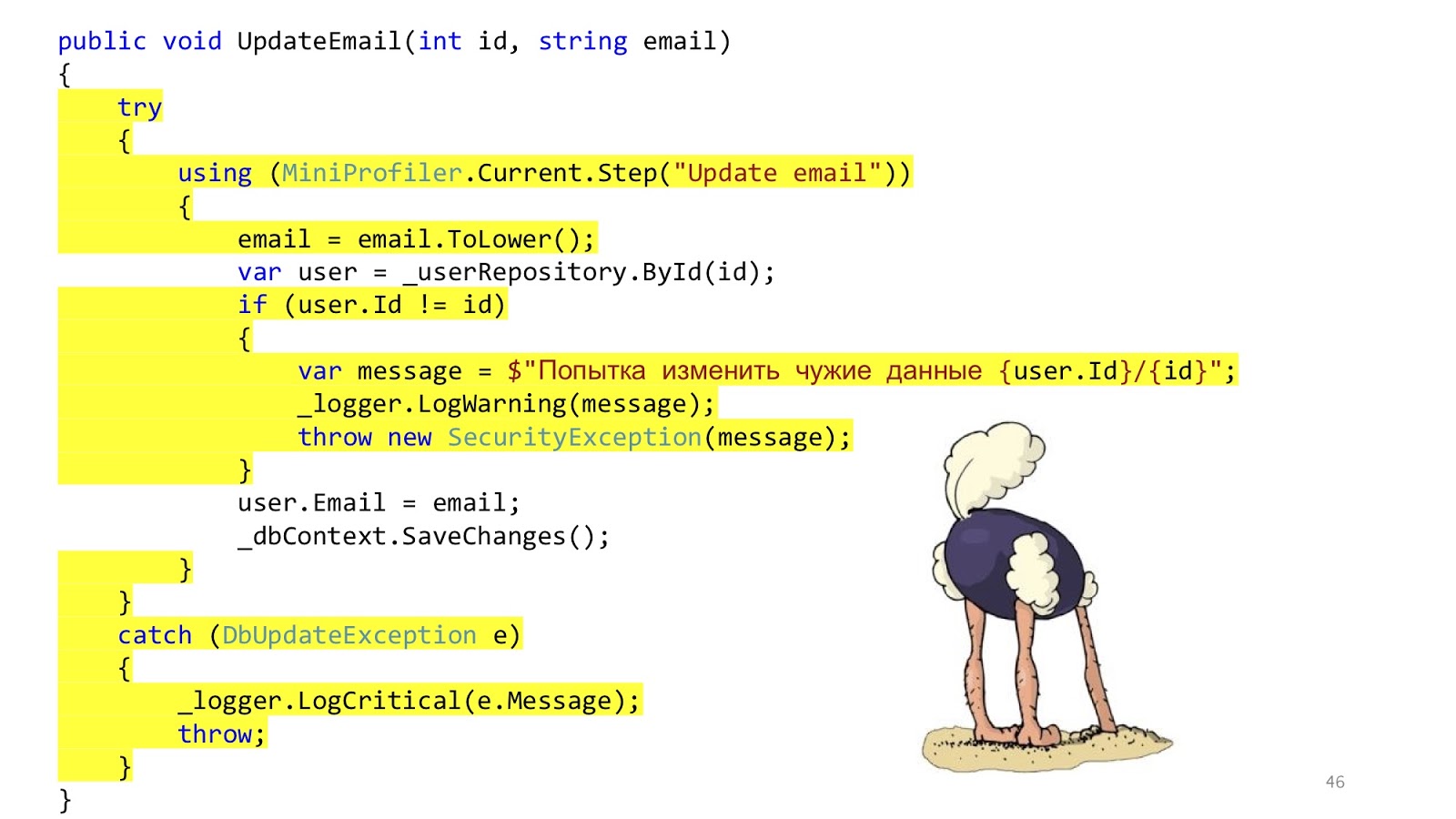

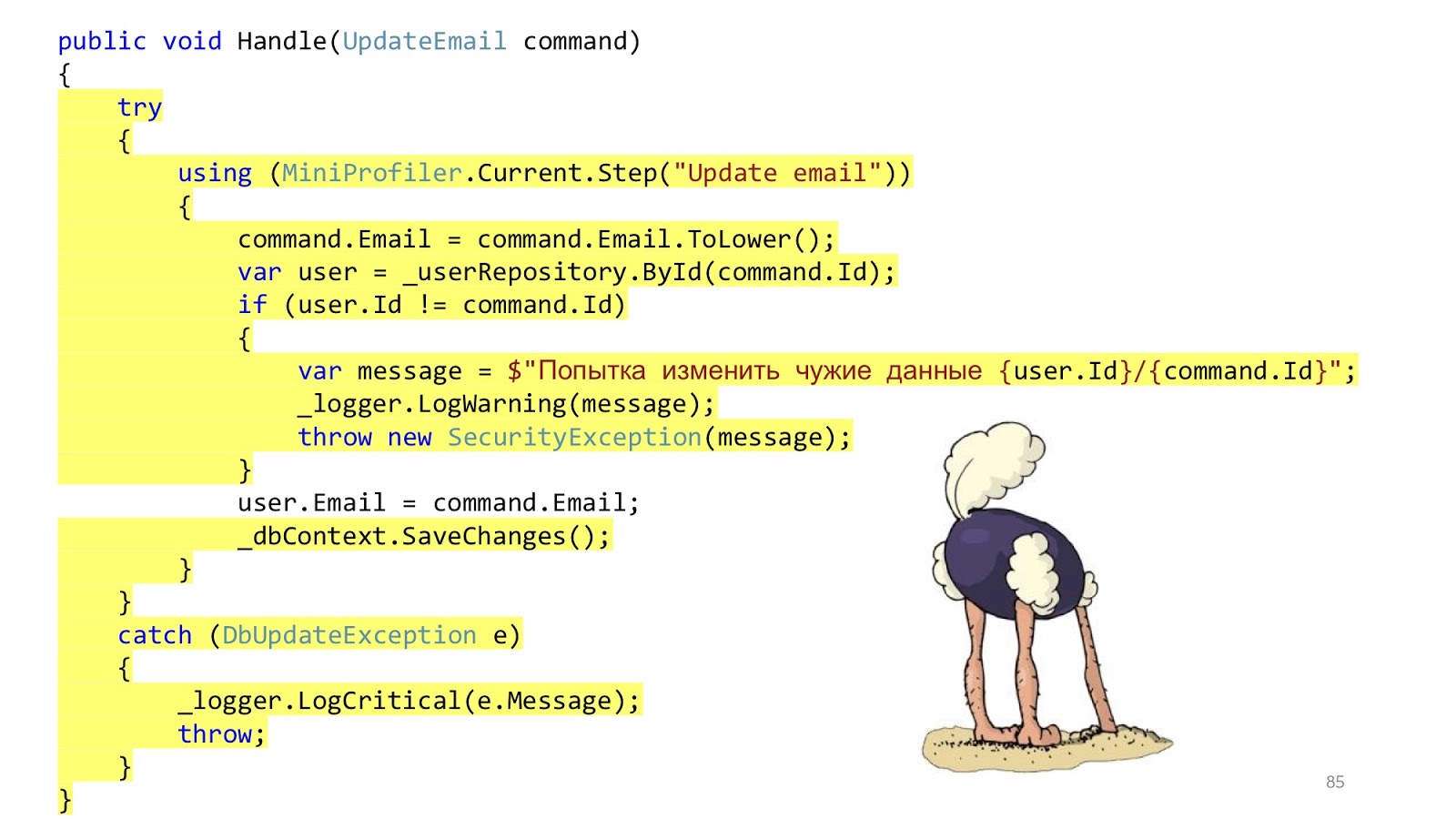

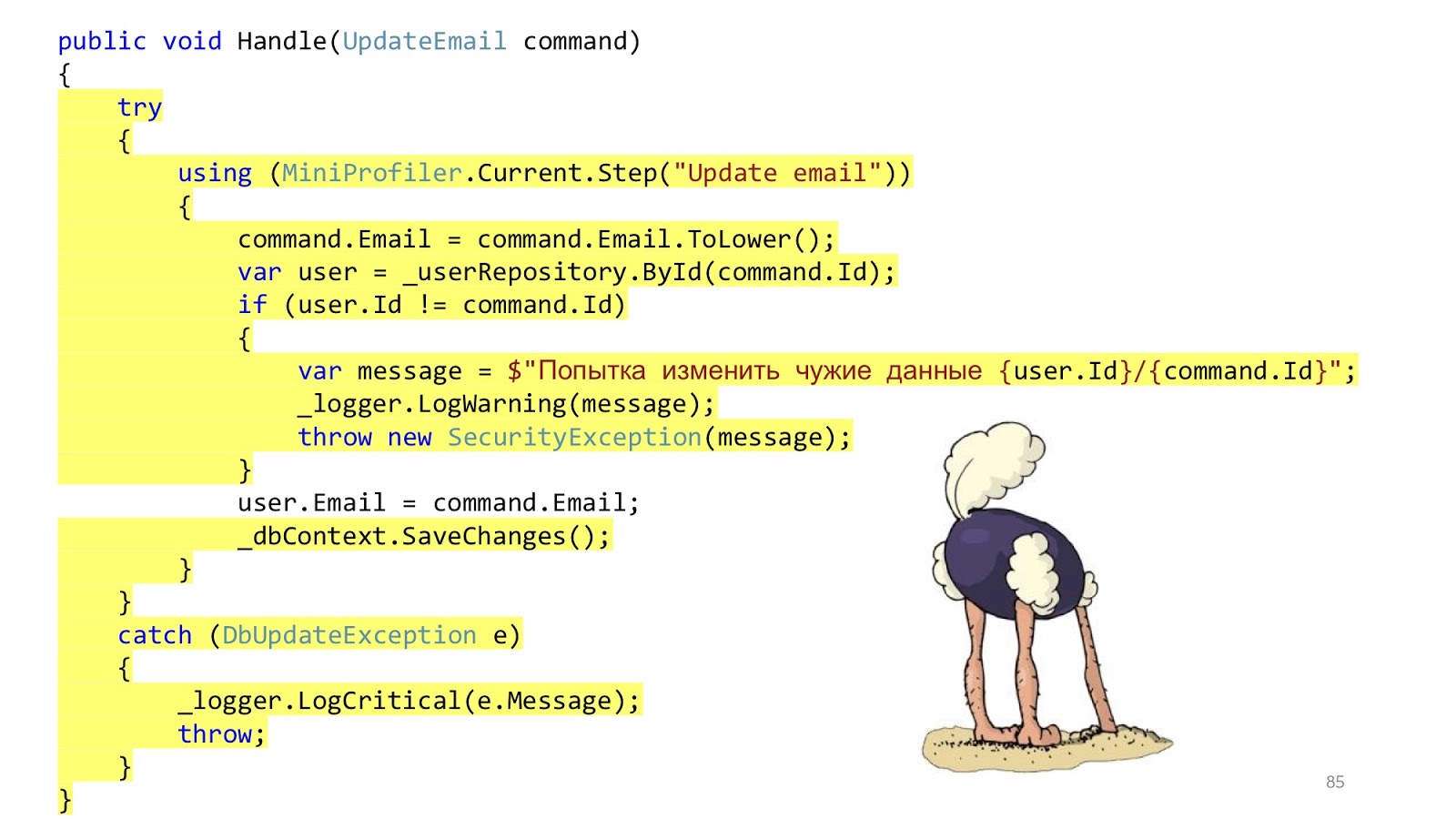

In the real world, the situation is slightly different. The point is to add authorization, error checking, formatting, logging and profiling. All this has nothing to do with our use case, but it should be. And that little piece of code has become big and scary: with a lot of nesting, with a lot of code, with the fact that it is hard to read, and most importantly, the infrastructure code is larger than the domain code.

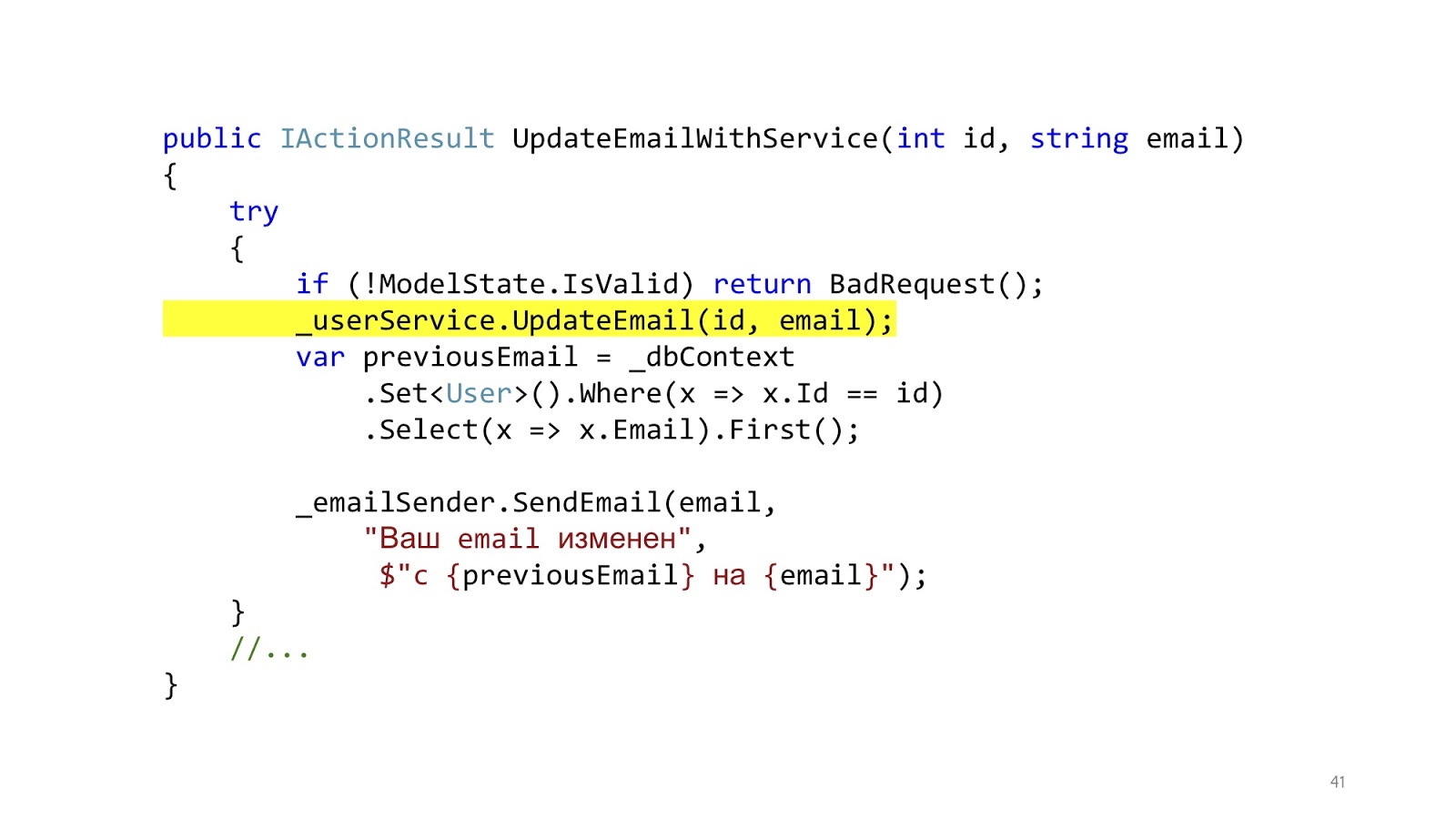

“Where are the services?” You will say. I wrote all the logic in the controllers. Of course, this is a problem, now I will add services, and everything will be fine.

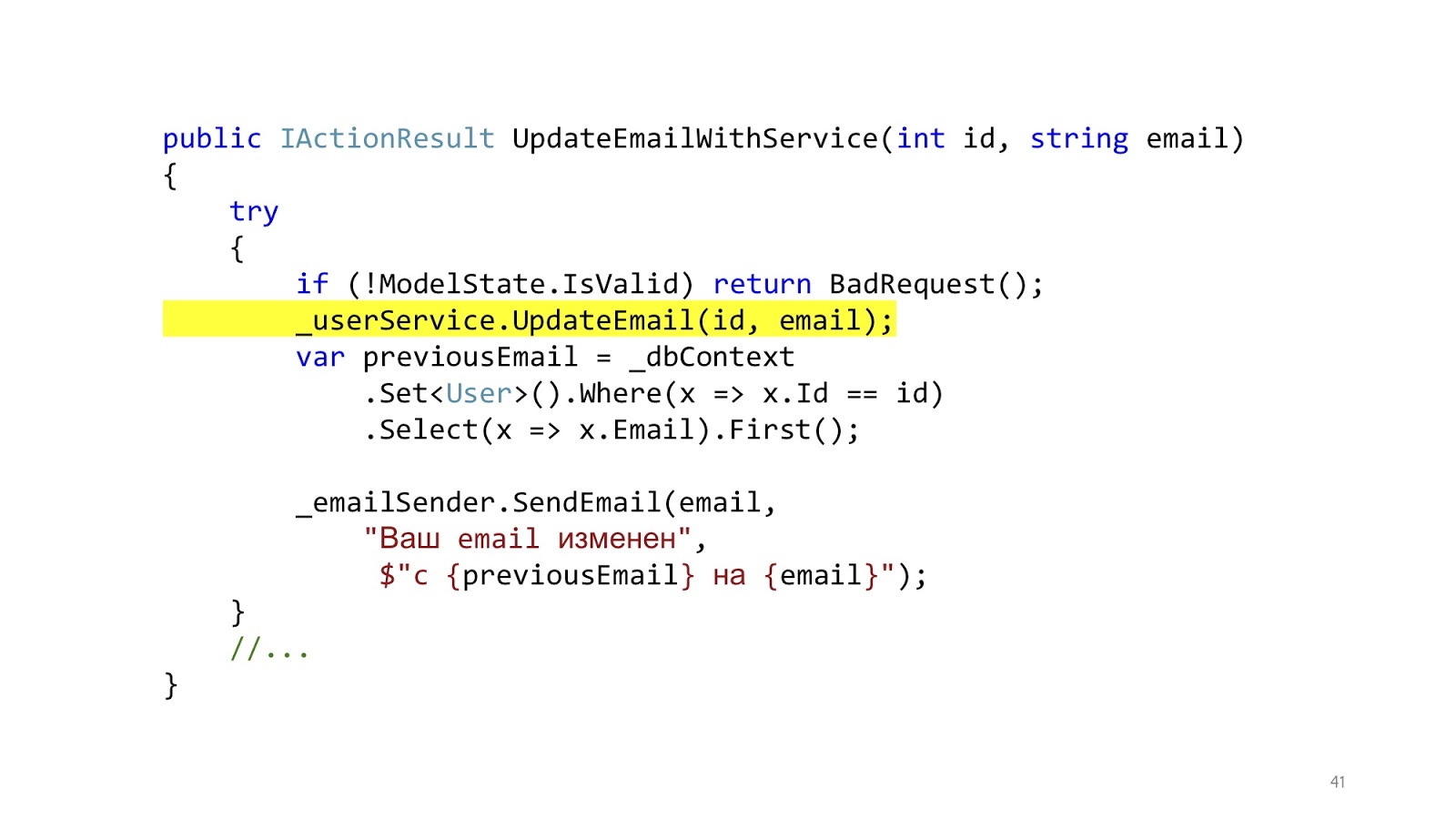

We add services, and it really gets better, because instead of a big footcloth, we got one small beautiful line.

Got better? It has become! And now we can reuse this method in different controllers. The result is obvious. Let's look at the implementation of this method.

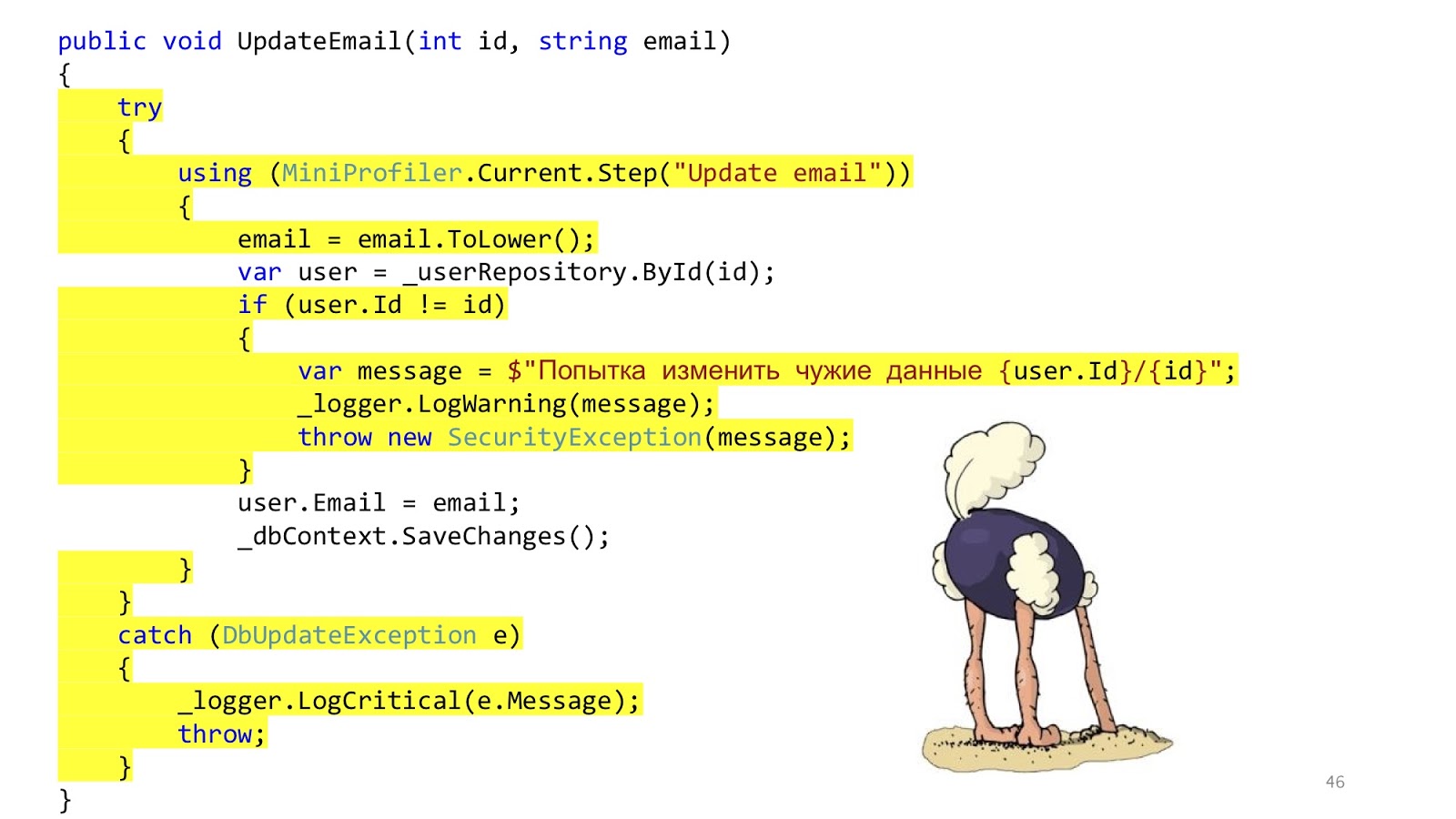

But here everything is not so good. This code has not gone away. All the same, we just transferred to the services. We decided not to solve the problem, but simply disguise it and move it to another place. That's all.

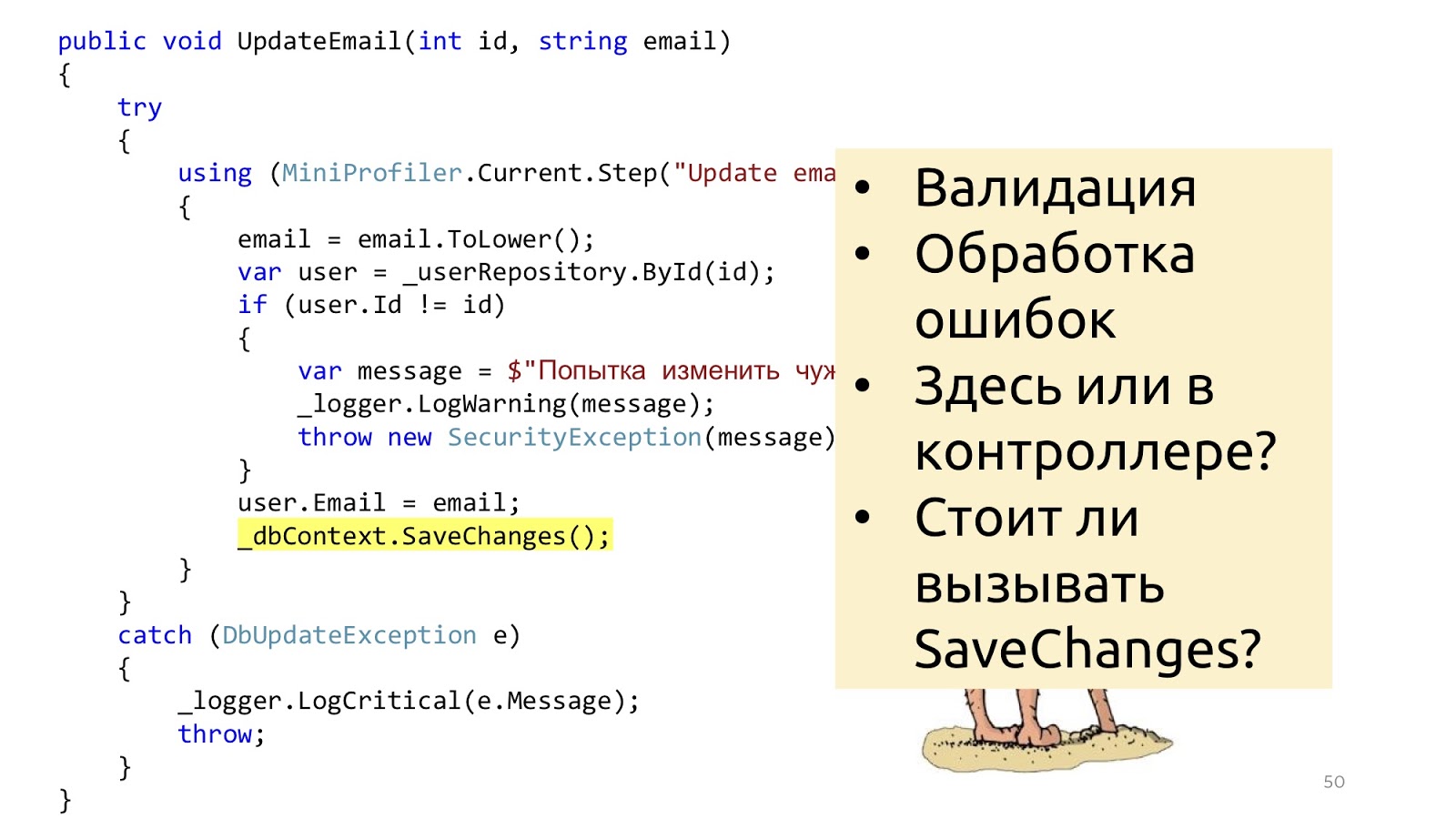

In addition, there are some other issues. Should we do validation in the controller or here? Well, sort of, in the controller. And if you need to go to the database and see what such an ID is or that there is no other user with such an email? Hmm, well, then in the service. But error handling here? This error handling is probably here, and the error handling that will be answered by the browser in the controller. And the SaveChanges method, is it in service or do I need to transfer it to the controller? It can be both so and so, because if the service is called one, it is more logical to call in the service, and if you have three controller methods in the controller that need to be called, then you need to call it outside of these services so that there is one transaction. These are the thoughts that suggest that maybe the layers do not solve any problems.

And this idea occurred to more than one person. If you google, at least three of these honorable husbands write about the same thing. From top to bottom: Stephen .NET Junkie (unfortunately, I don’t know his last name, because it doesn’t appear anywhere on the Internet) by IoC container Simple Injector . Next, Jimmy Bogard - the author of AutoMapper . And below is Scott Vlashin, the author of the site “F # for fun and profit” .

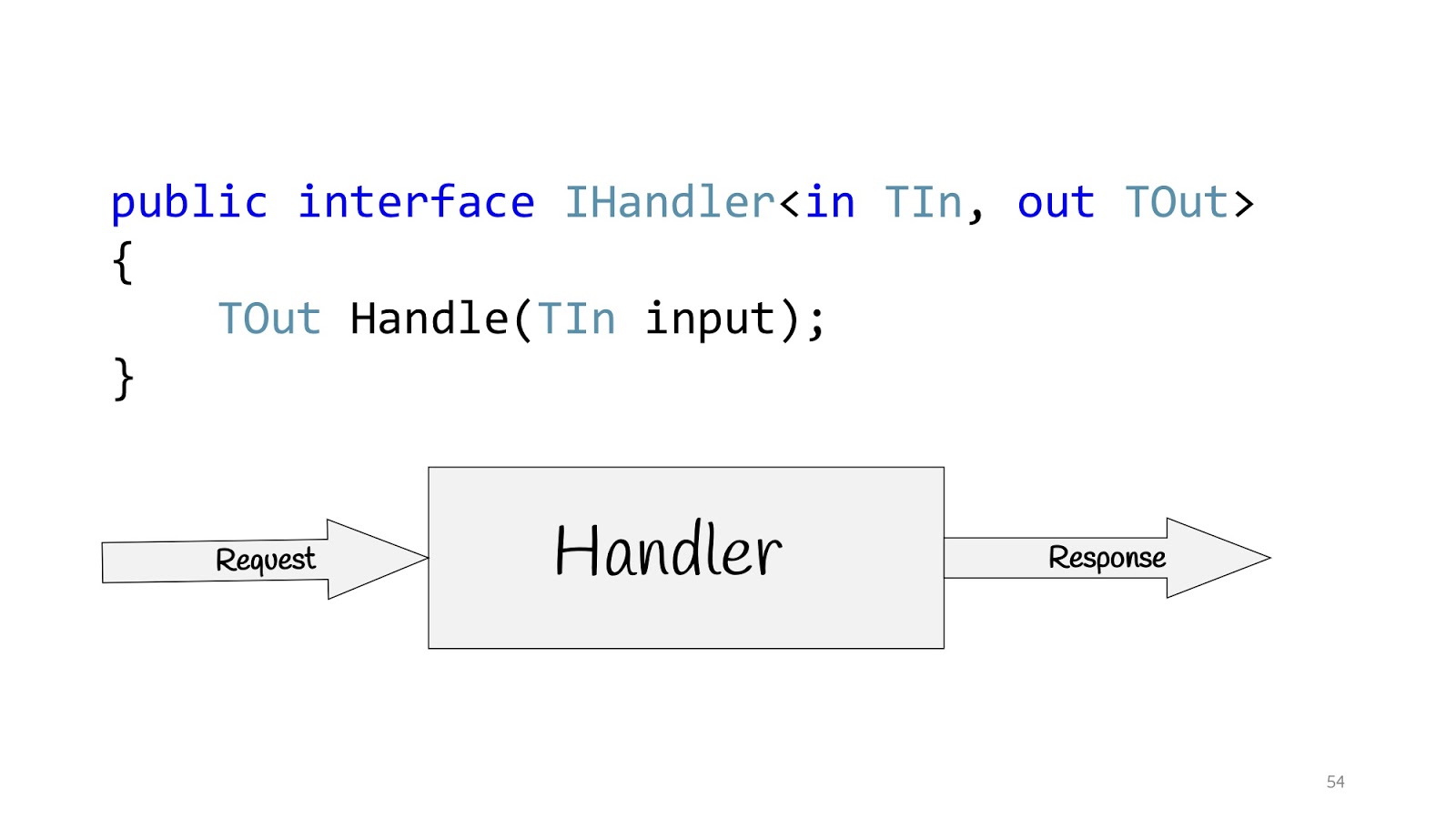

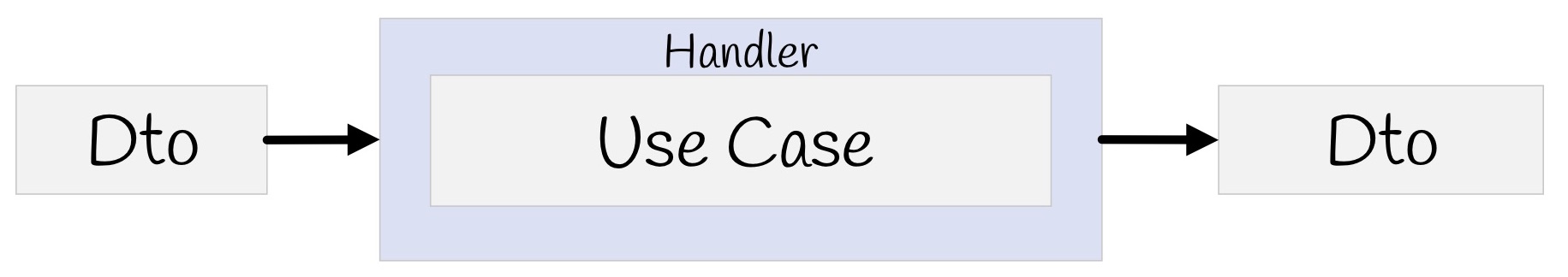

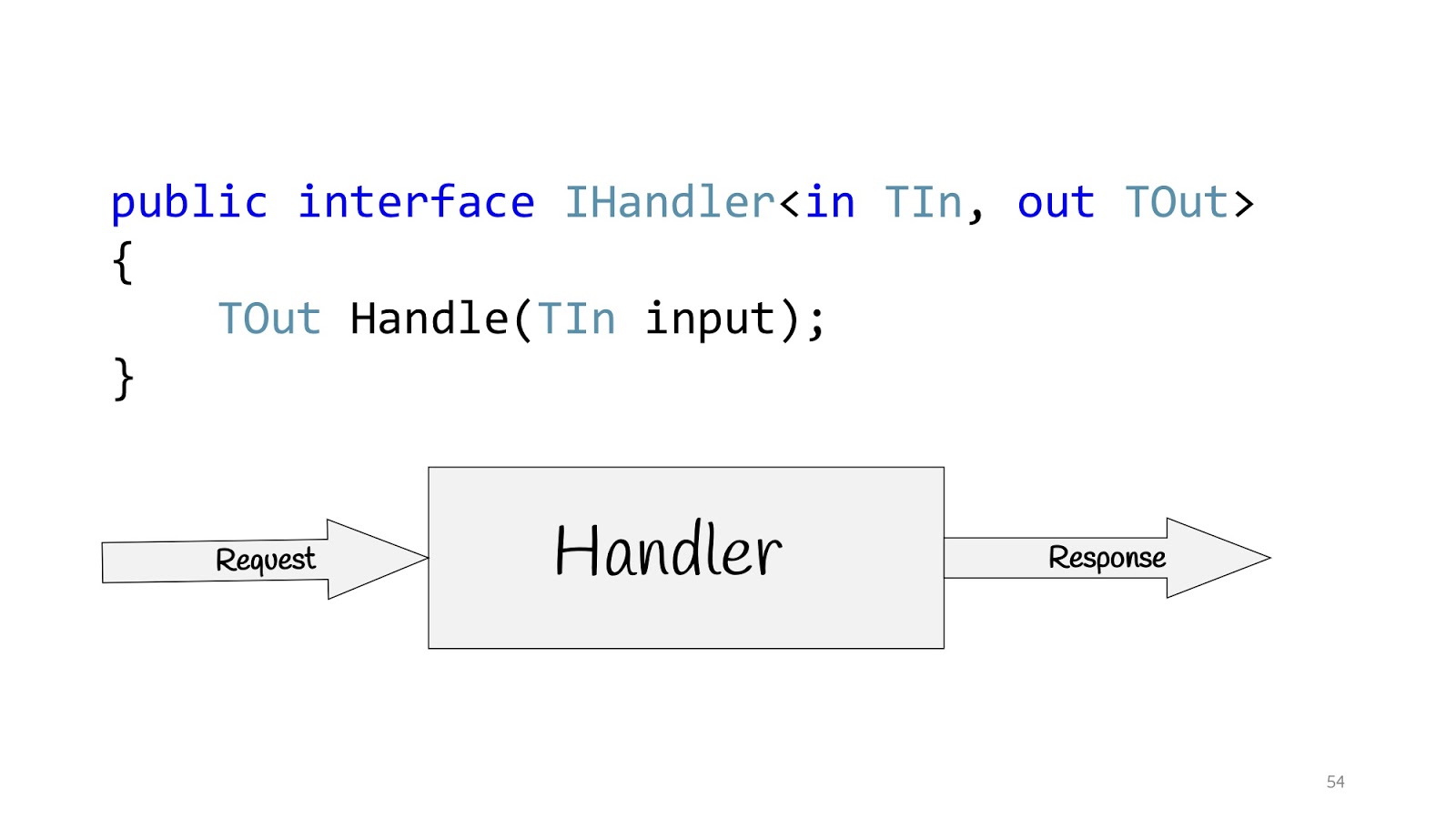

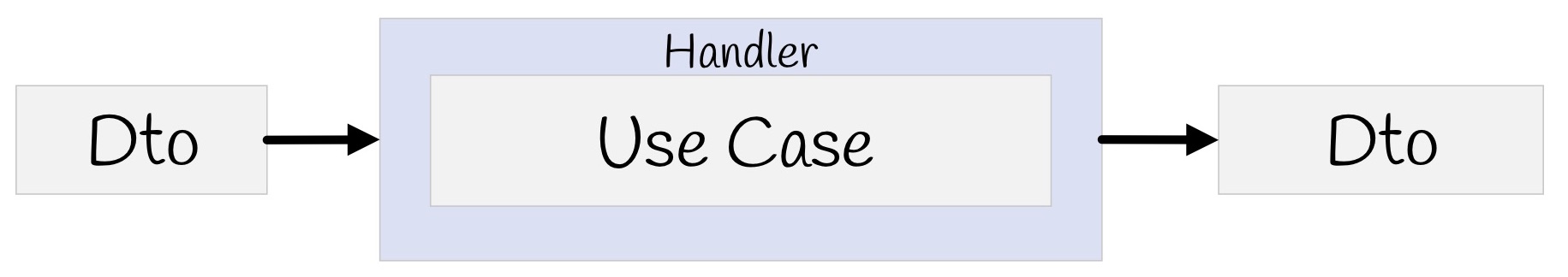

All these people talk about the same thing and offer to build applications not on the basis of layers, but on the basis of use options, that is, the requirements for which the business asks us. Accordingly, the use case in C # can be defined using the IHandler interface. It has input values, output values, and there is the method itself that actually executes this use case.

And inside this method there can be either a domain model, or any denormalized model for reading, maybe with the help of Dapper or with the help of Elastic Search, if you need to look for something, and maybe you have Legacy -system with stored procedures - no problem, as well as network requests - well, in general, anything you might need. But if there are no layers, how to be?

First, let's get rid of UserService. Remove the method and create a class. And also remove, and remove again. And then take and remove the class.

Let's think, are these classes equivalent or not? The GetUser class returns data and does not change anything on the server. This, for example, about the request "Give me the user ID." The UpdateEmail and BanUser classes return the result of the operation and change state. For example, when we say to the server: “Please change the state, you need to change something here.”

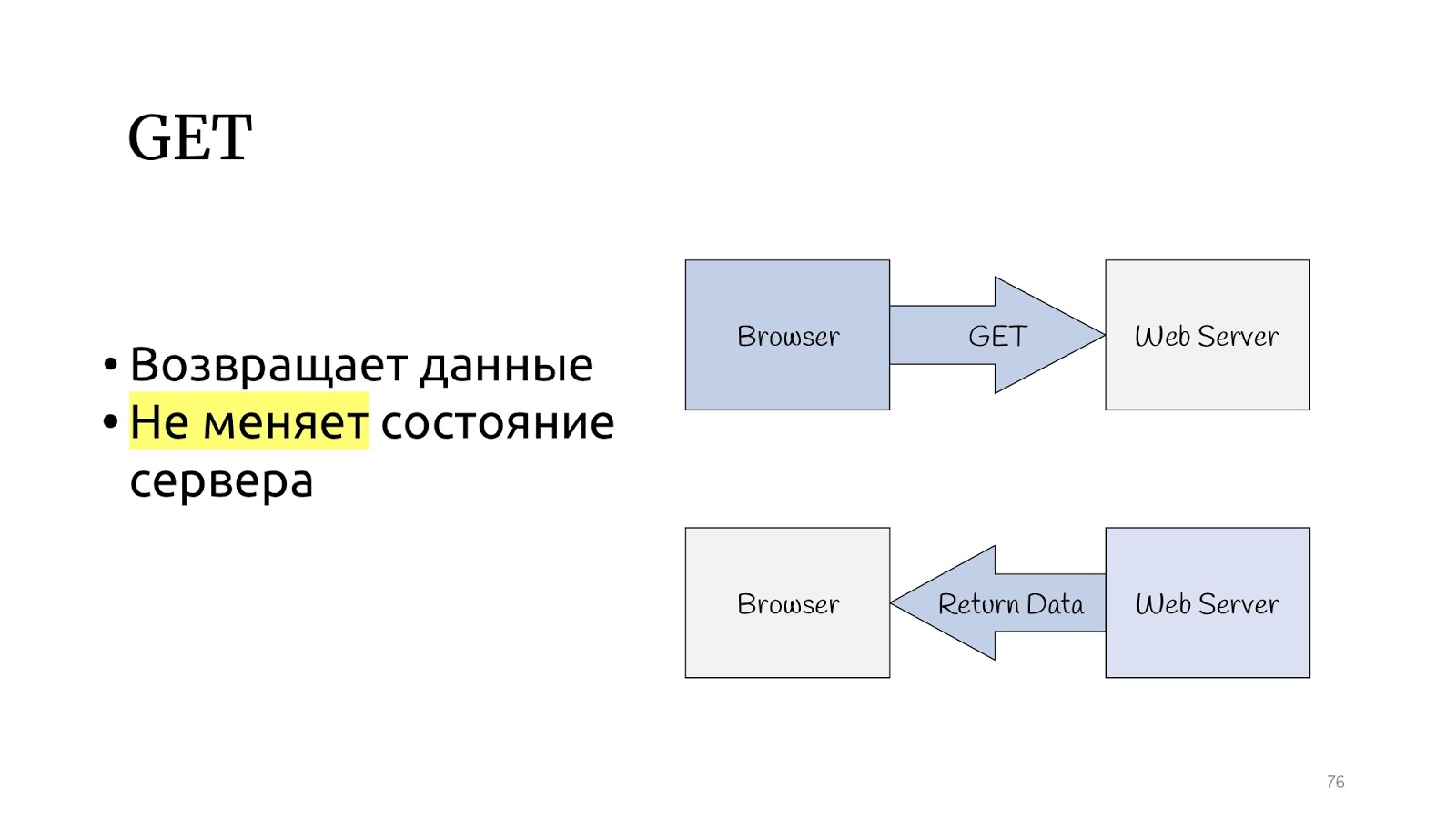

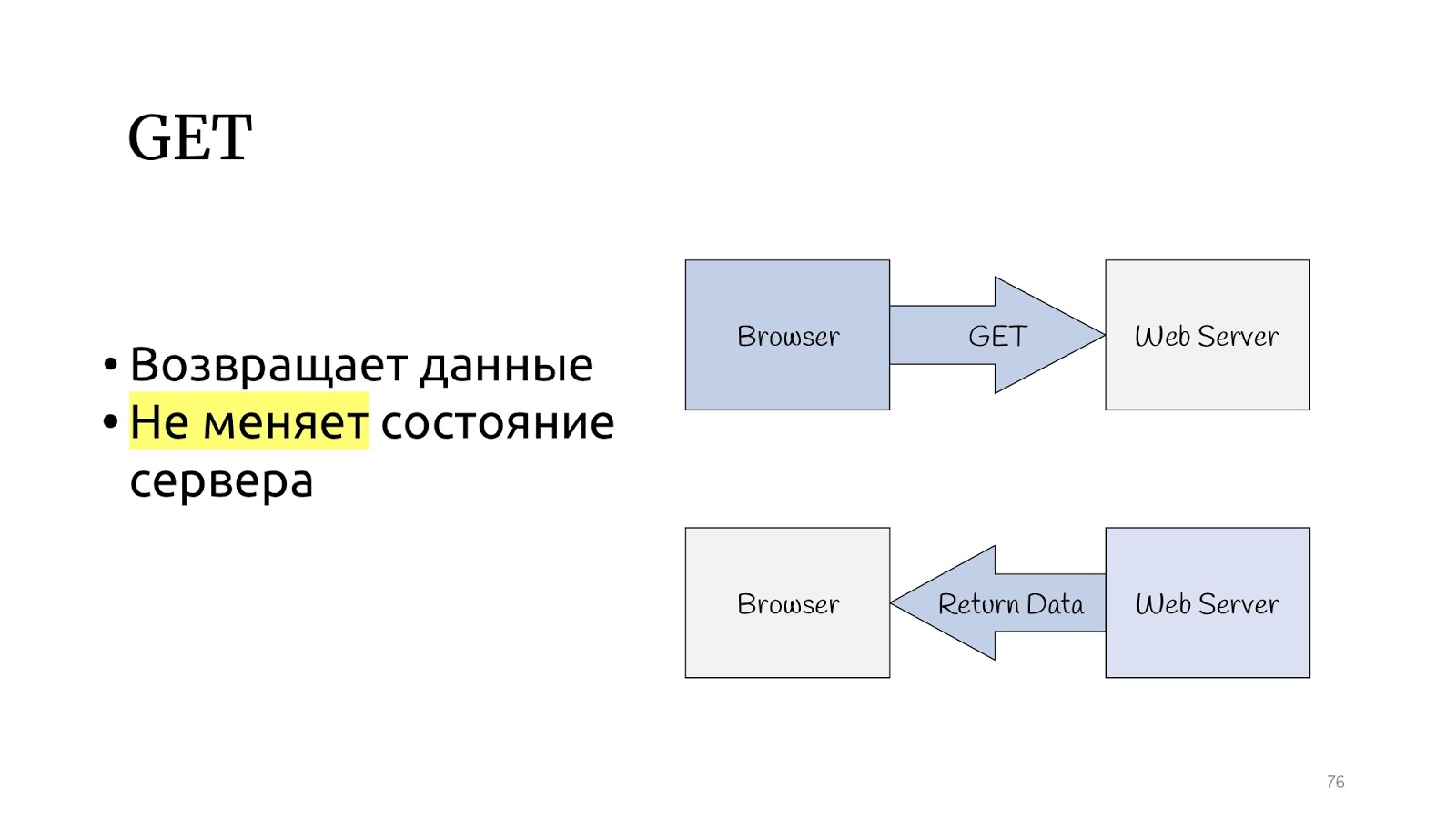

Look at the HTTP protocol. There is a GET method that, according to the HTTP protocol specification, should return data and not change the state of the server.

And there are other methods that can change the state of the server and return the result of the operation.

The CQRS paradigm seems to have been specially created for the HTTP protocol. Query is GET operations, and commands are PUT, POST, DELETE — you don’t need to invent anything.

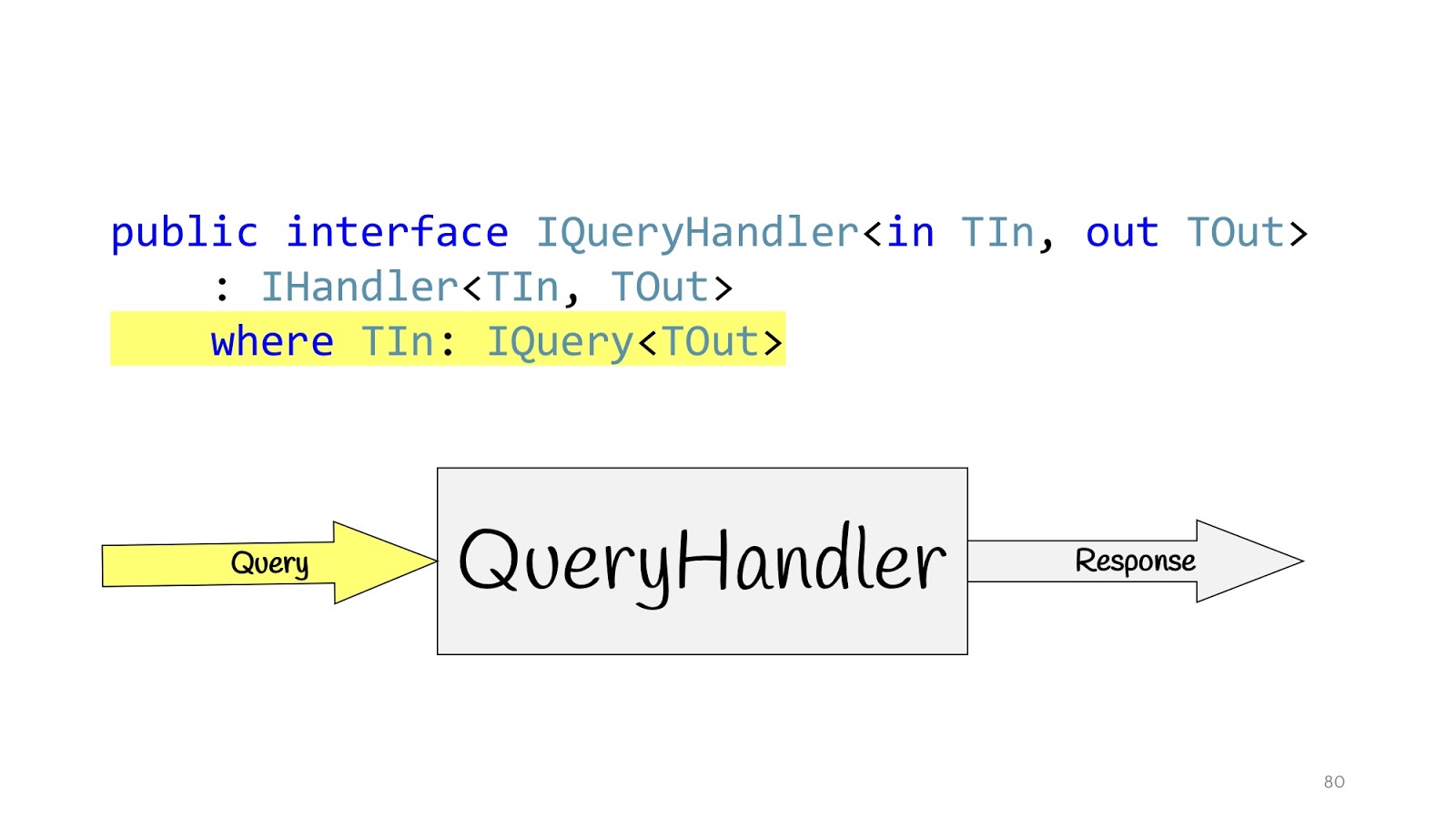

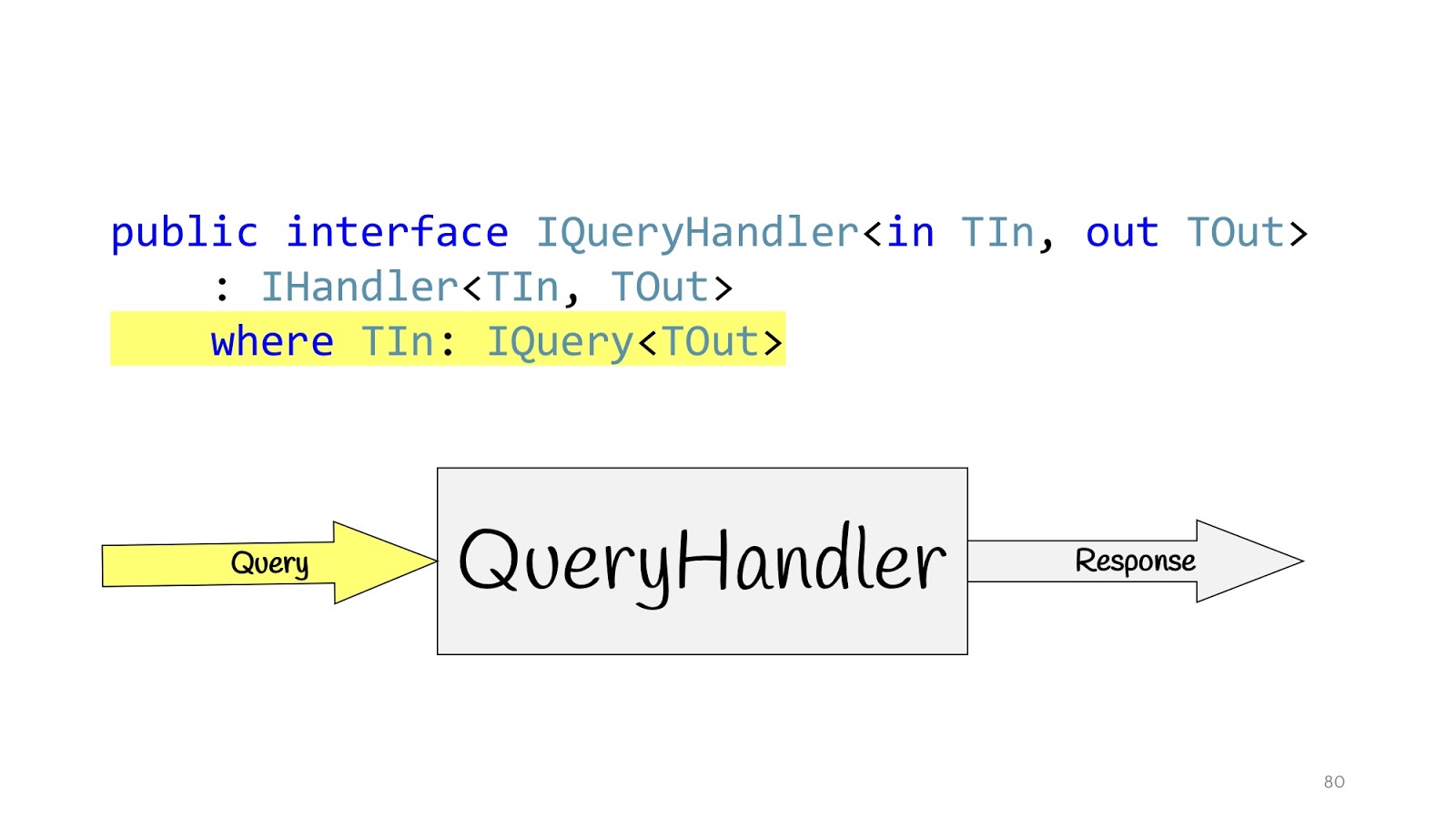

We will define our Handler and define additional interfaces. IQueryHandler, which differs only in that we hang constraint that the type of input values is IQuery. IQuery is a marker interface, there is nothing in it except for this generic. We need a generic in order to place constraint in the QueryHandler, and now, when declaring the QueryHandler, we cannot transfer there not Query, but passing the Query object there, we know its return value. This is convenient if you have some interfaces, so that you do not look for their implementation in the code, and again not to make a mess of it. You write IQueryHandler, write the implementation there, and you cannot substitute another type of return value in TOut. It just won't compile. Thus, it is immediately apparent which input values correspond to which input data.

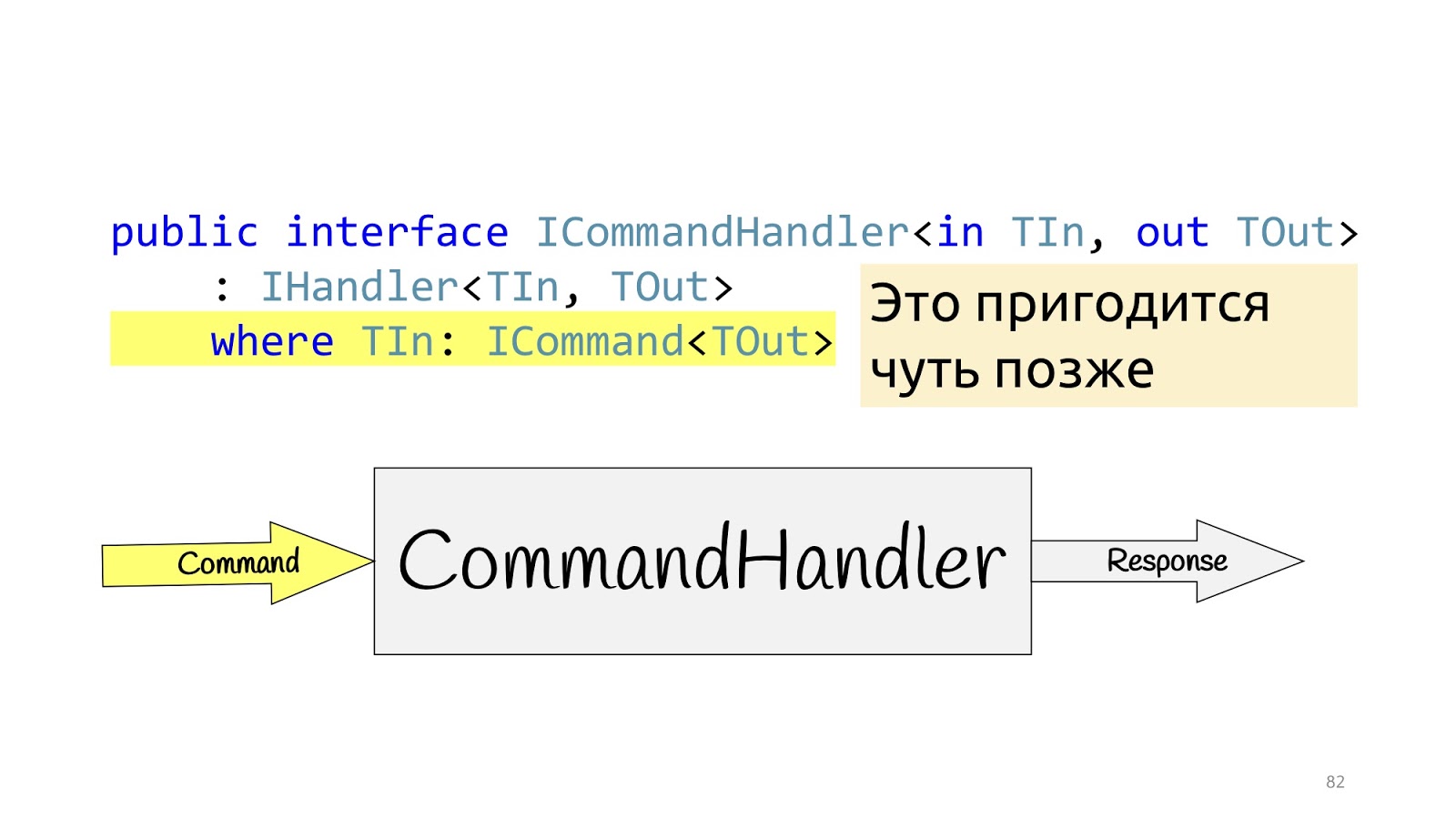

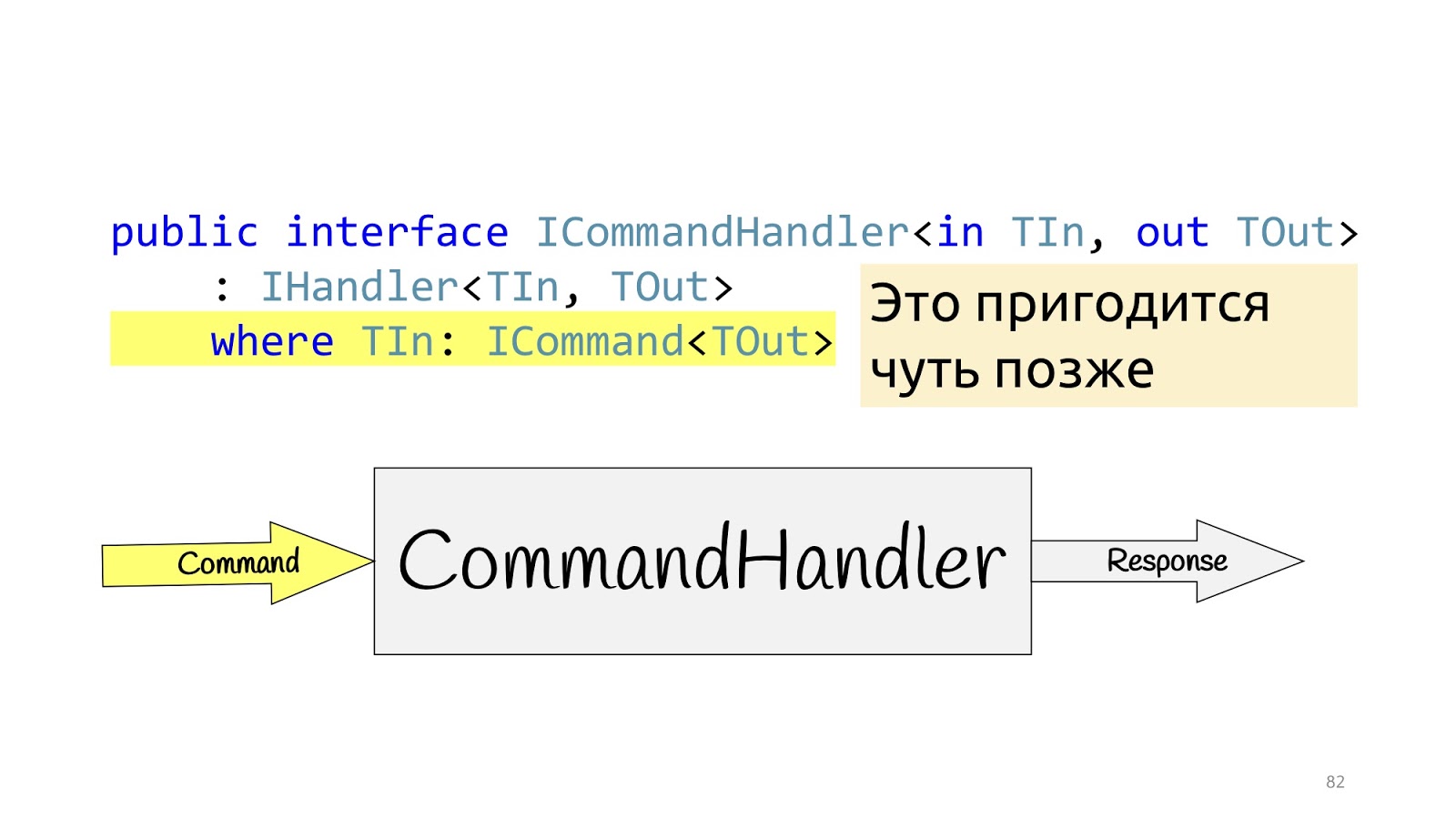

Absolutely the same situation for the CommandHandler with one exception: this generic will be needed for one more trick, which we will see a little further.

Handlers, we announced what kind of implementation they have?

Any problem, yes? It seems that something did not help.

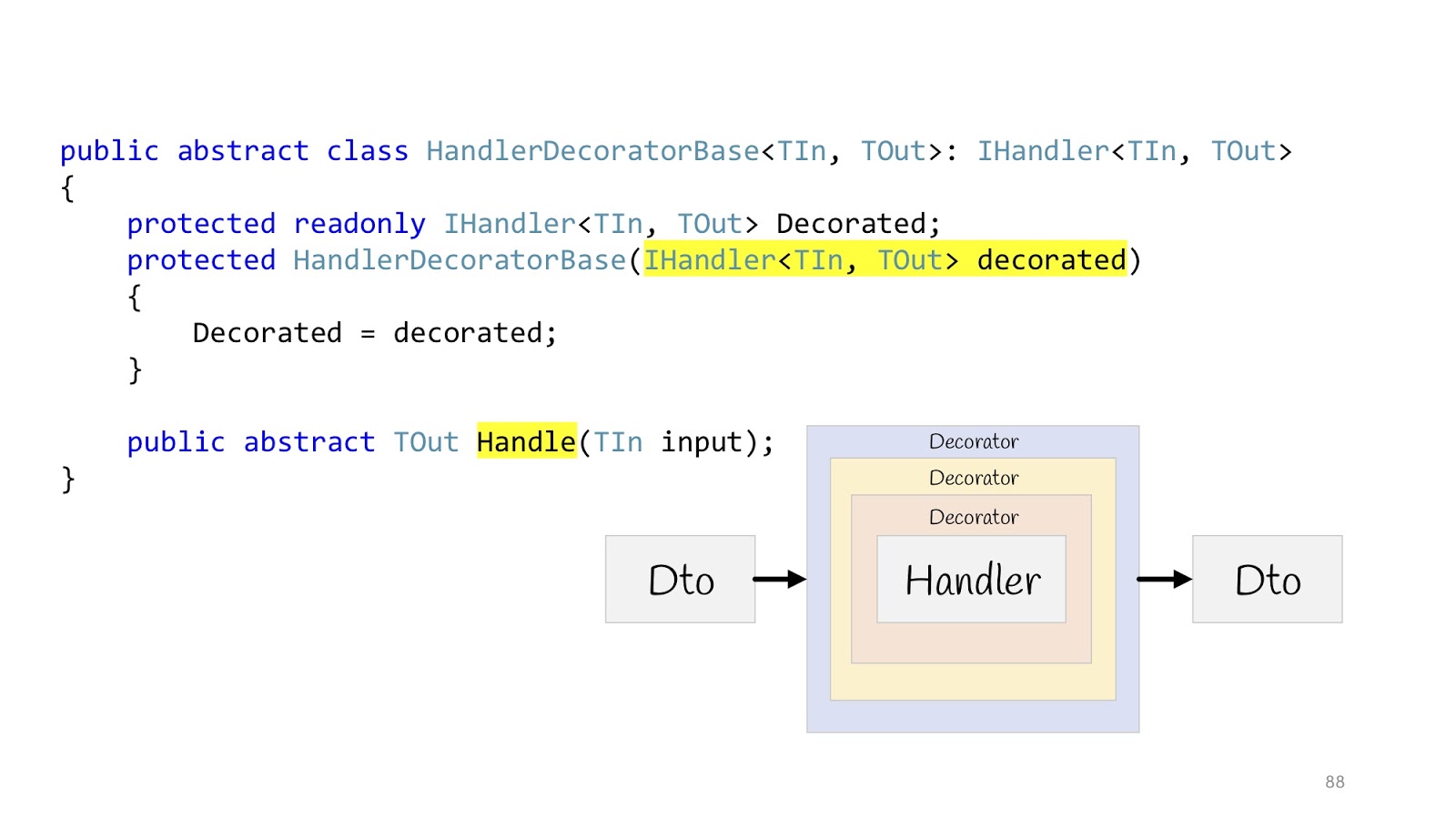

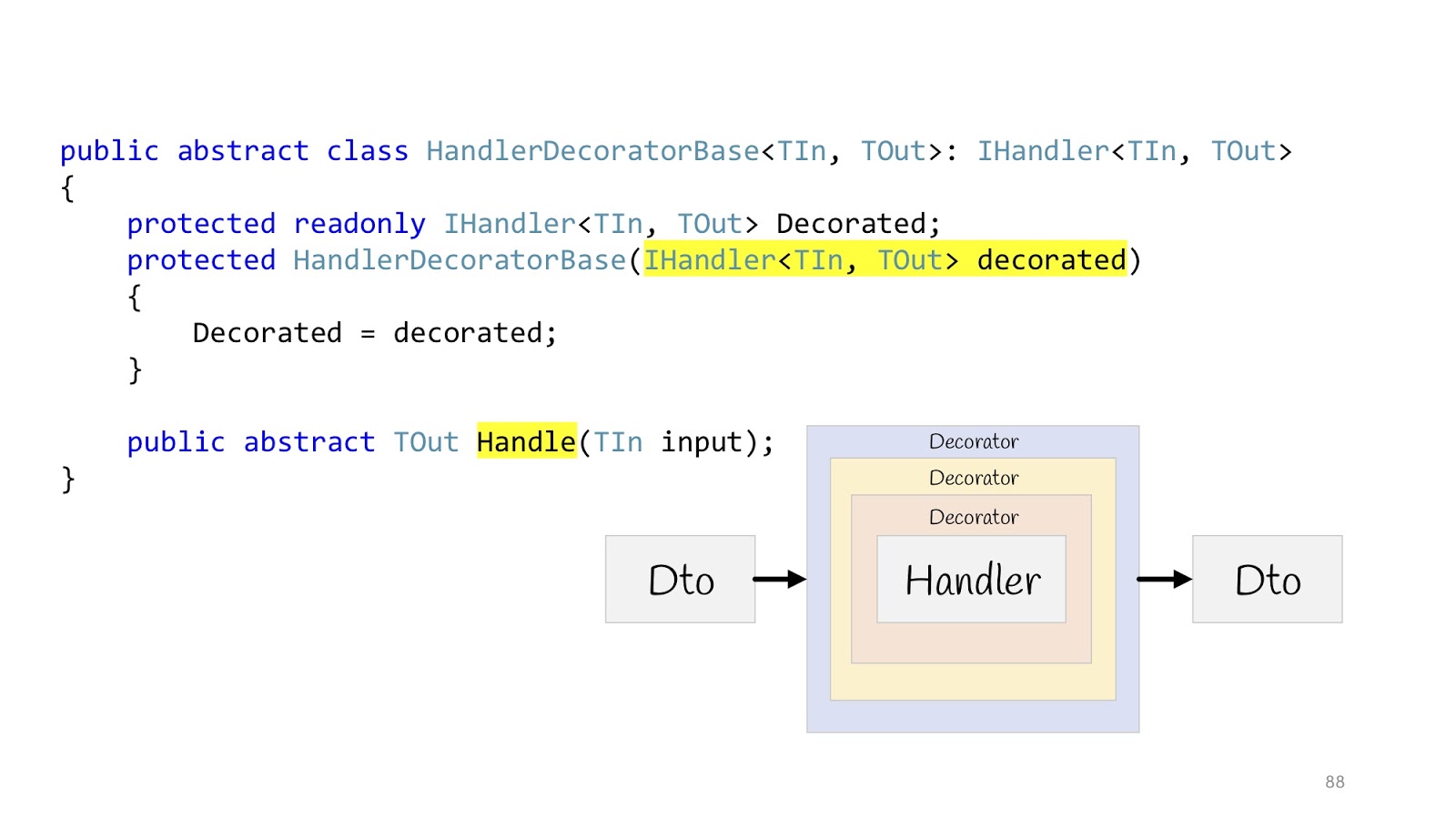

But it did not help, because we are still in the middle of the road, we still need to refine a little, and this time we will need to use the decorator pattern, namely, its remarkable layout feature. The decorator can be wrapped in the decorator, wrapped in the decorator, wrapped in the decorator - continue until you get bored.

Then everything will look like this: there is an input Dto, it enters the first decorator, the second, the third, then we go into the Handler and also exit it, go through all the decorators and return Dto in the browser. We declare an abstract base class in order to later inherit, the body of Handler is passed to the constructor, and we declare the abstract method Handle, in which the additional logic of the decorators will be hung.

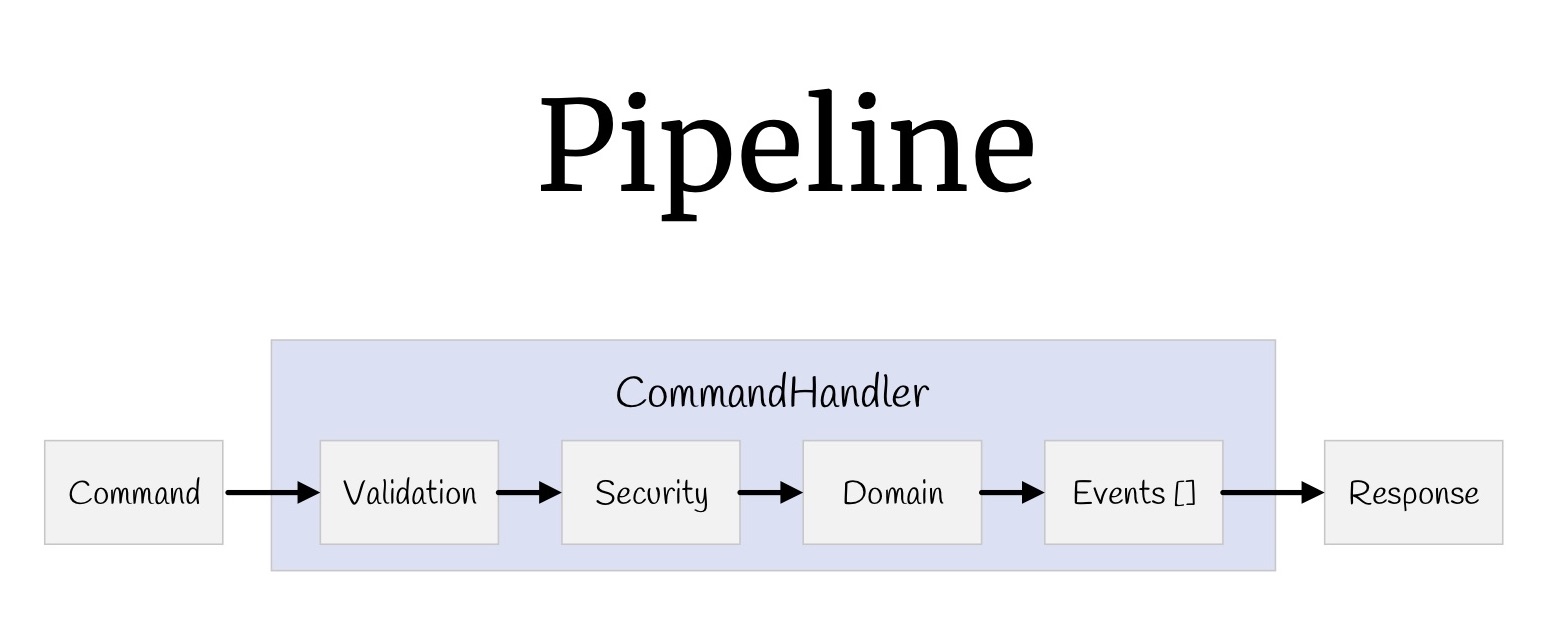

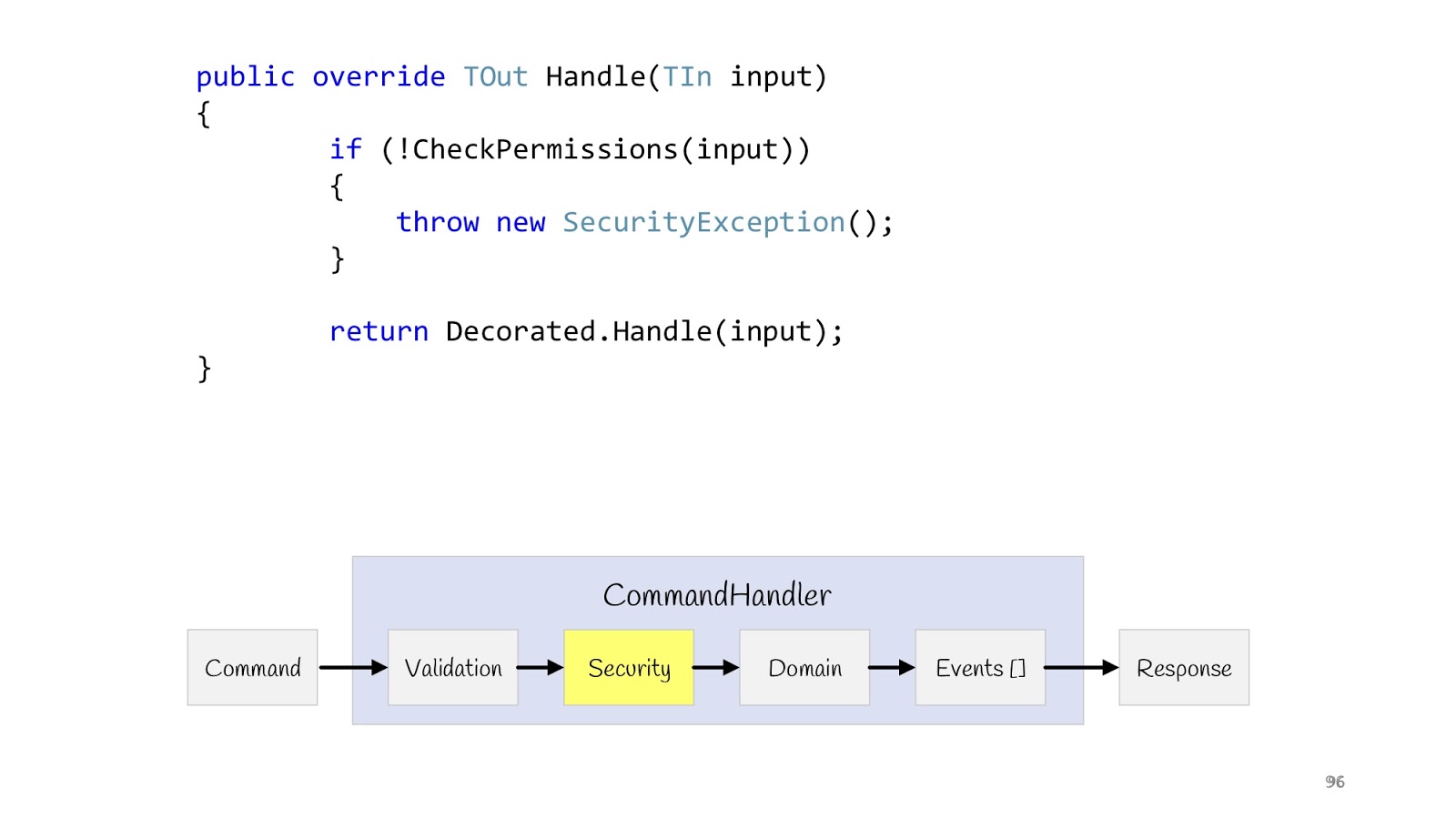

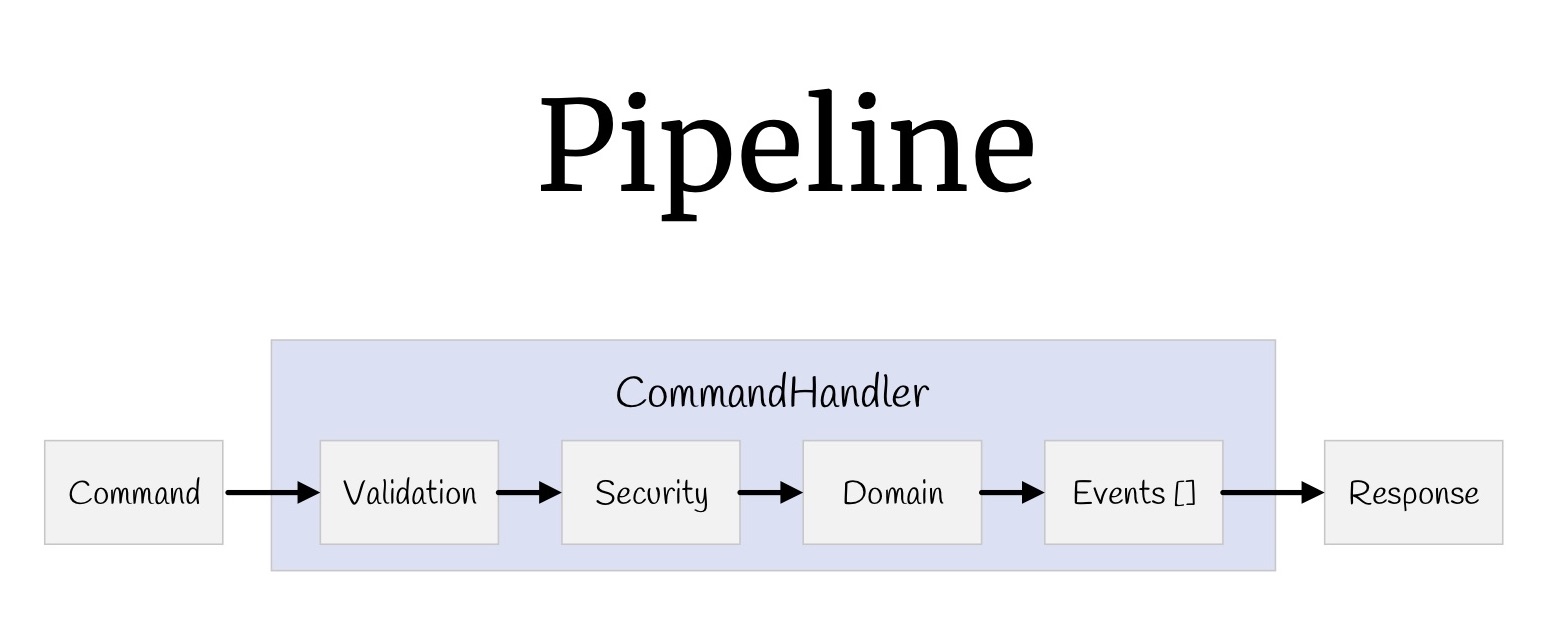

Now with the help of decorators you can build a pipeline. Let's start with the teams. What did we have? Input values, validation, access control, the logic itself, some events that occur as a result of this logic, and return values.

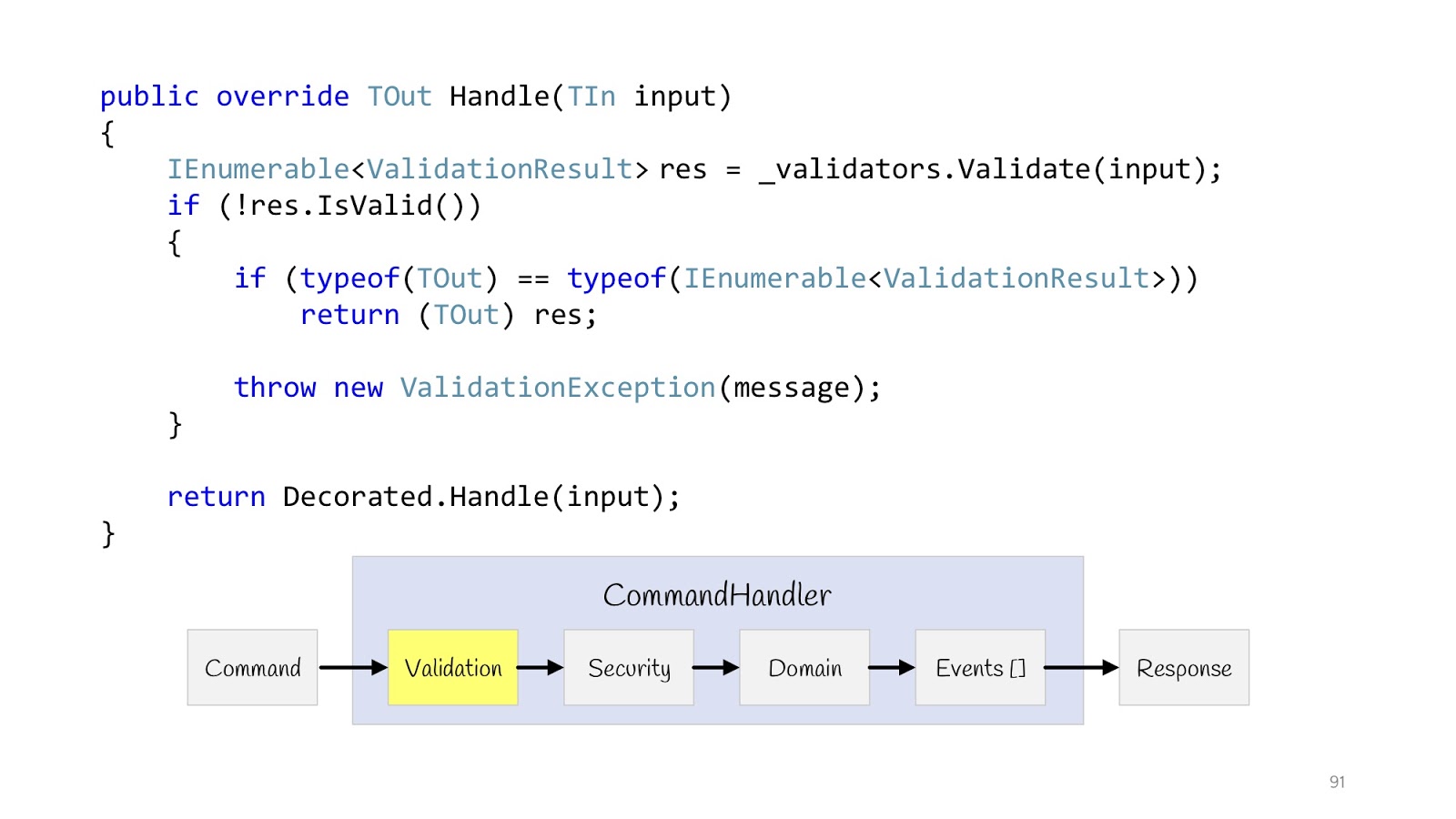

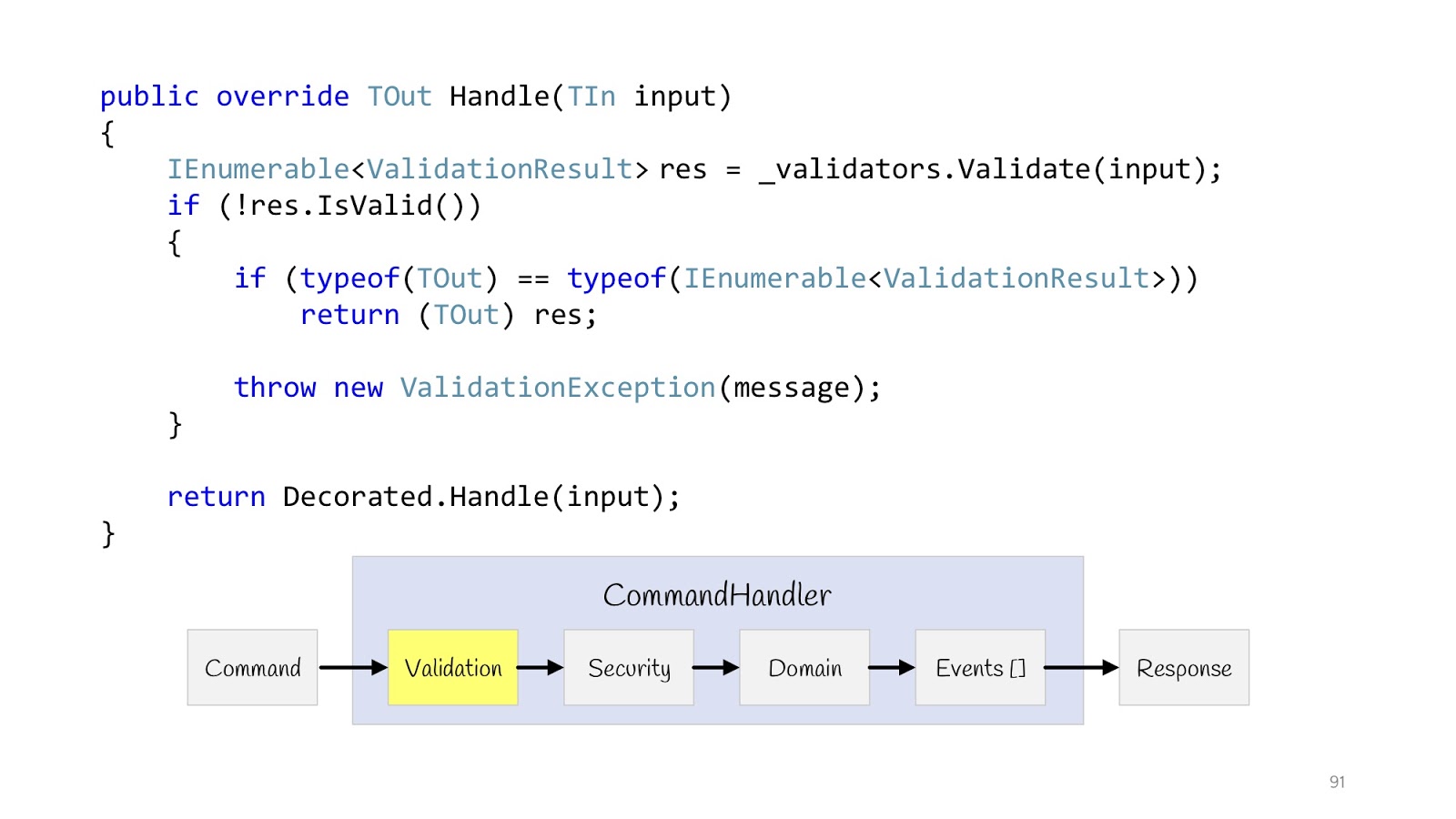

Let's start with validation. We announce the decorator. IEnumerable comes from the constructor of this validator of type T validators. We execute them all, check if validation fails and the return type is

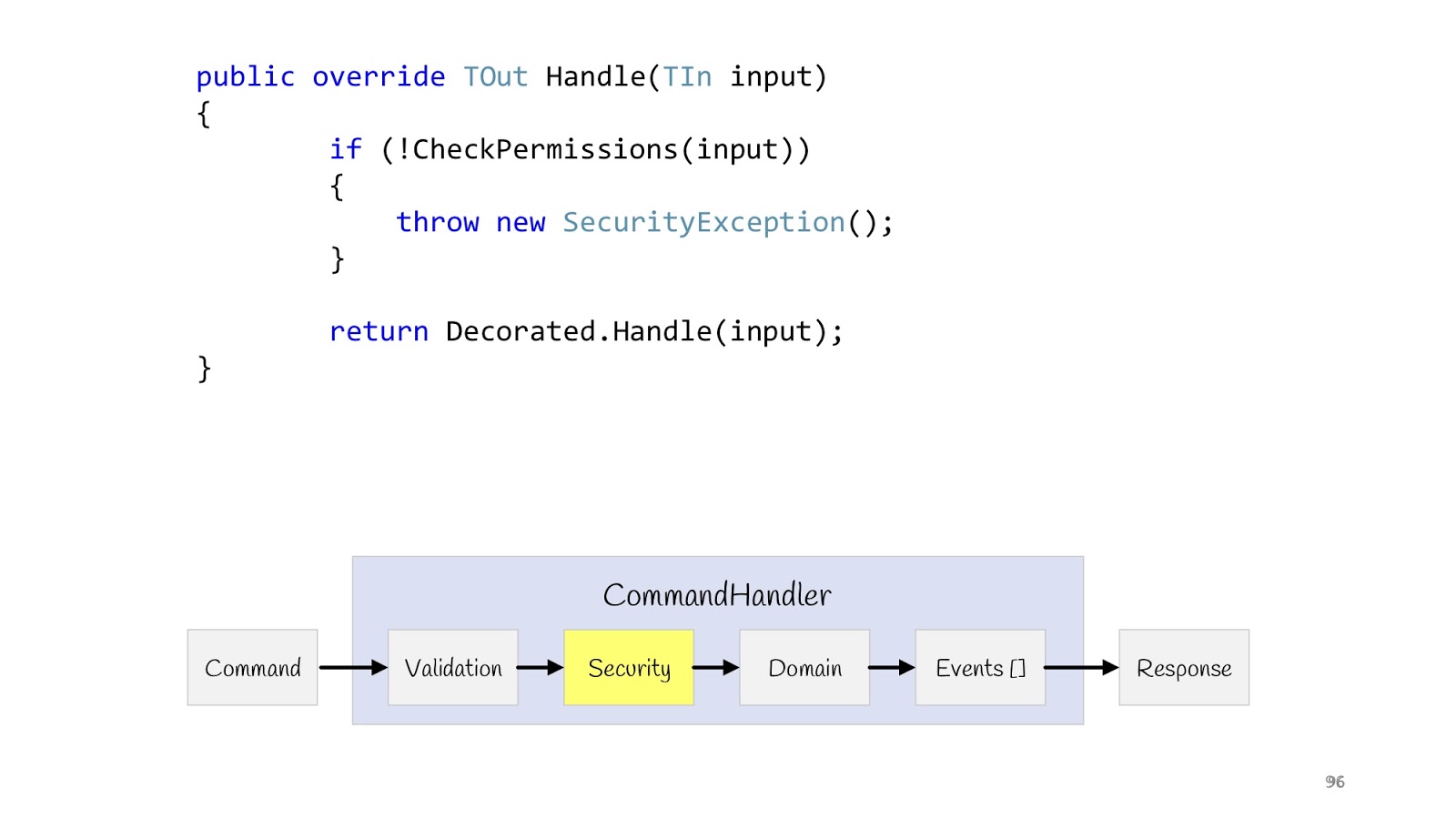

The next step is Security. Also we declare the decorator, we do the CheckPermission method, we check. If suddenly something went wrong, everything, we do not continue. Now, after we have carried out all the checks and are sure that everything is fine, we can execute our logic.

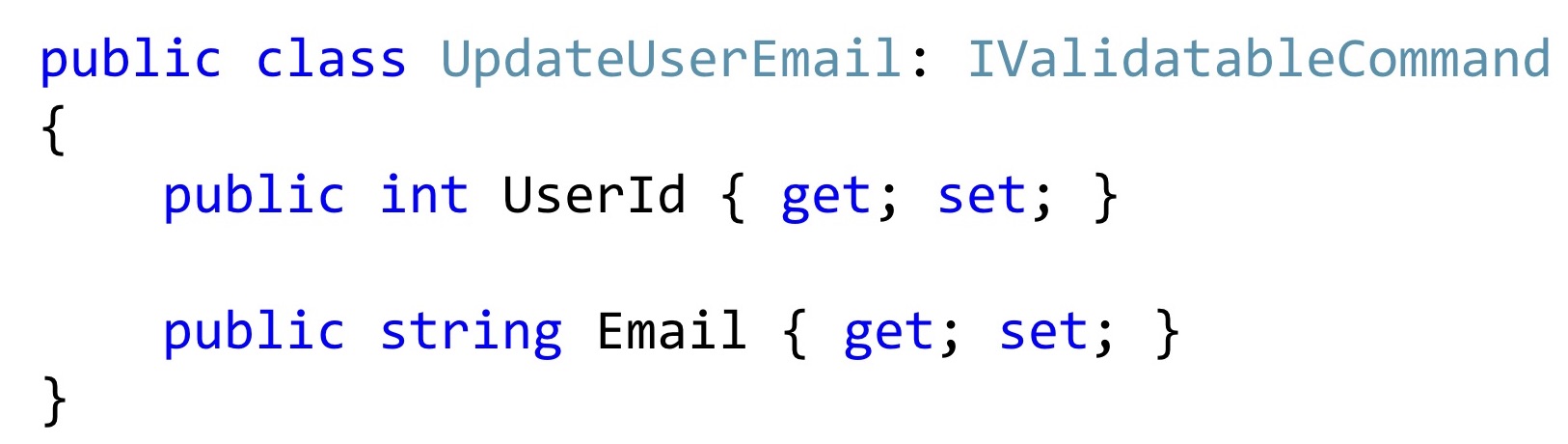

Before showing the implementation of logic, I want to start a little bit earlier, namely, with the input values that come there.

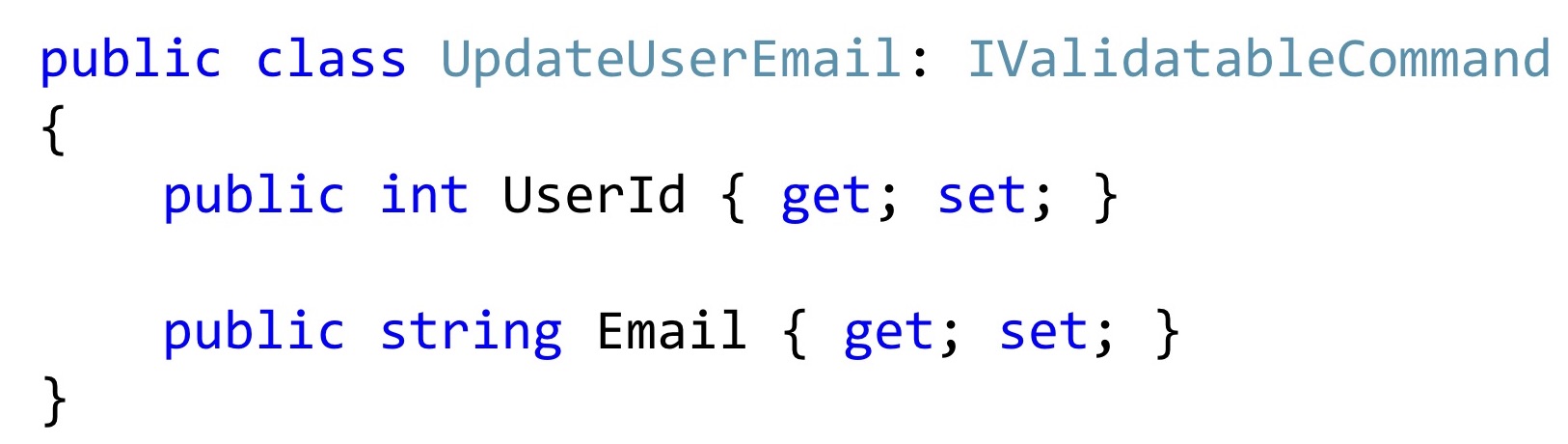

Now, if we single out such a class this way, then most often it can look something like this. At least this is the code that I see in my daily work.

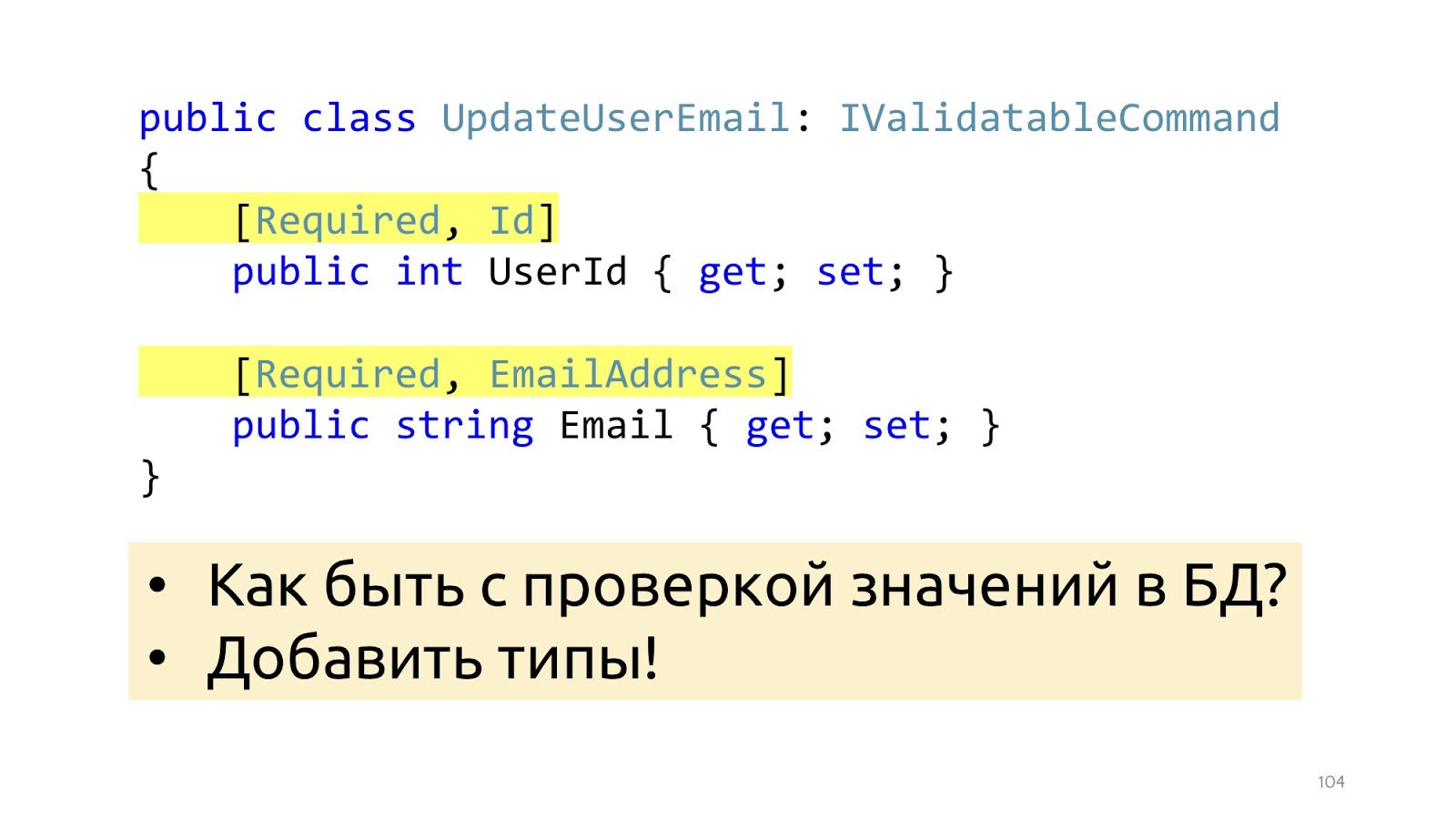

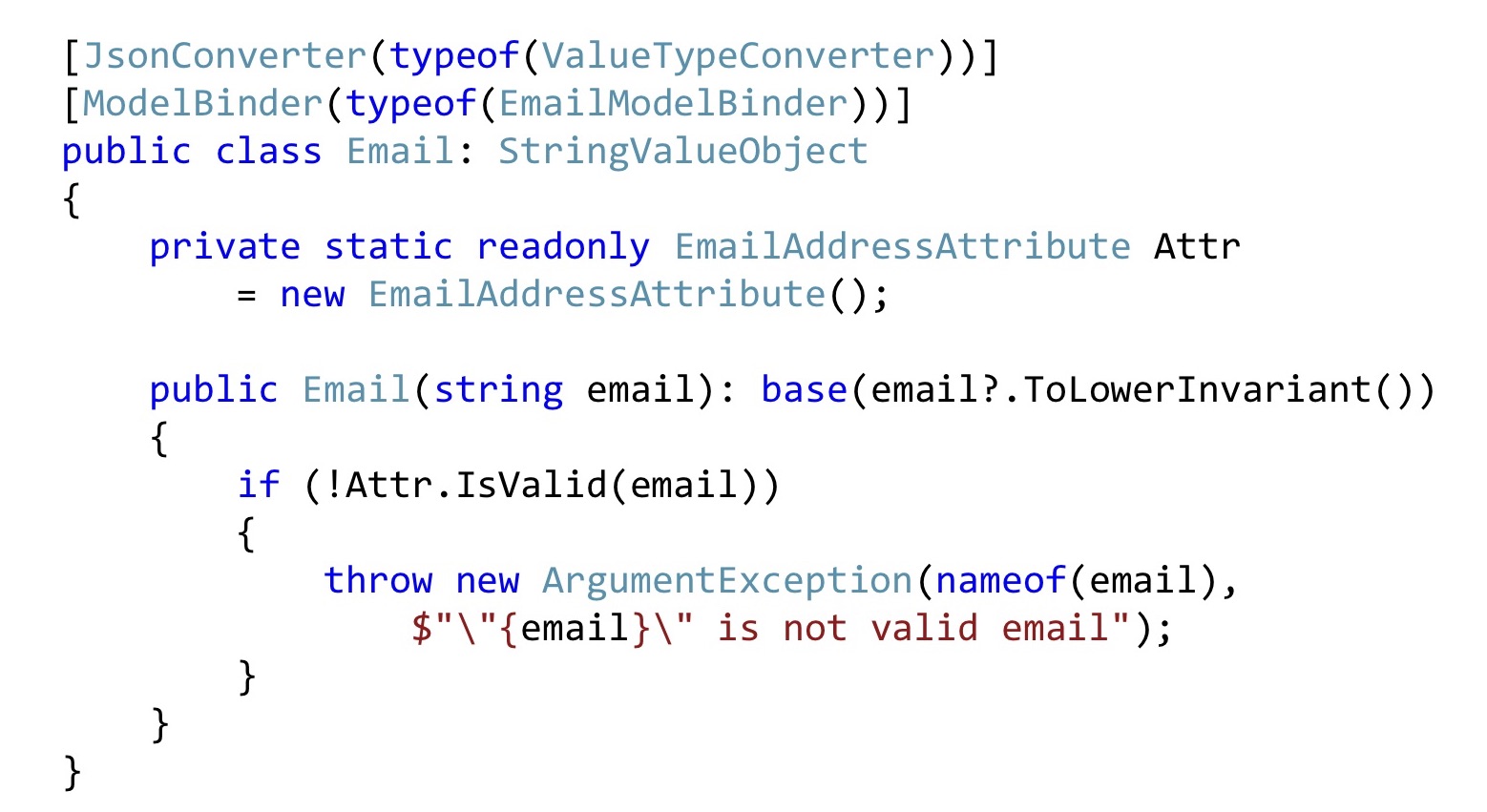

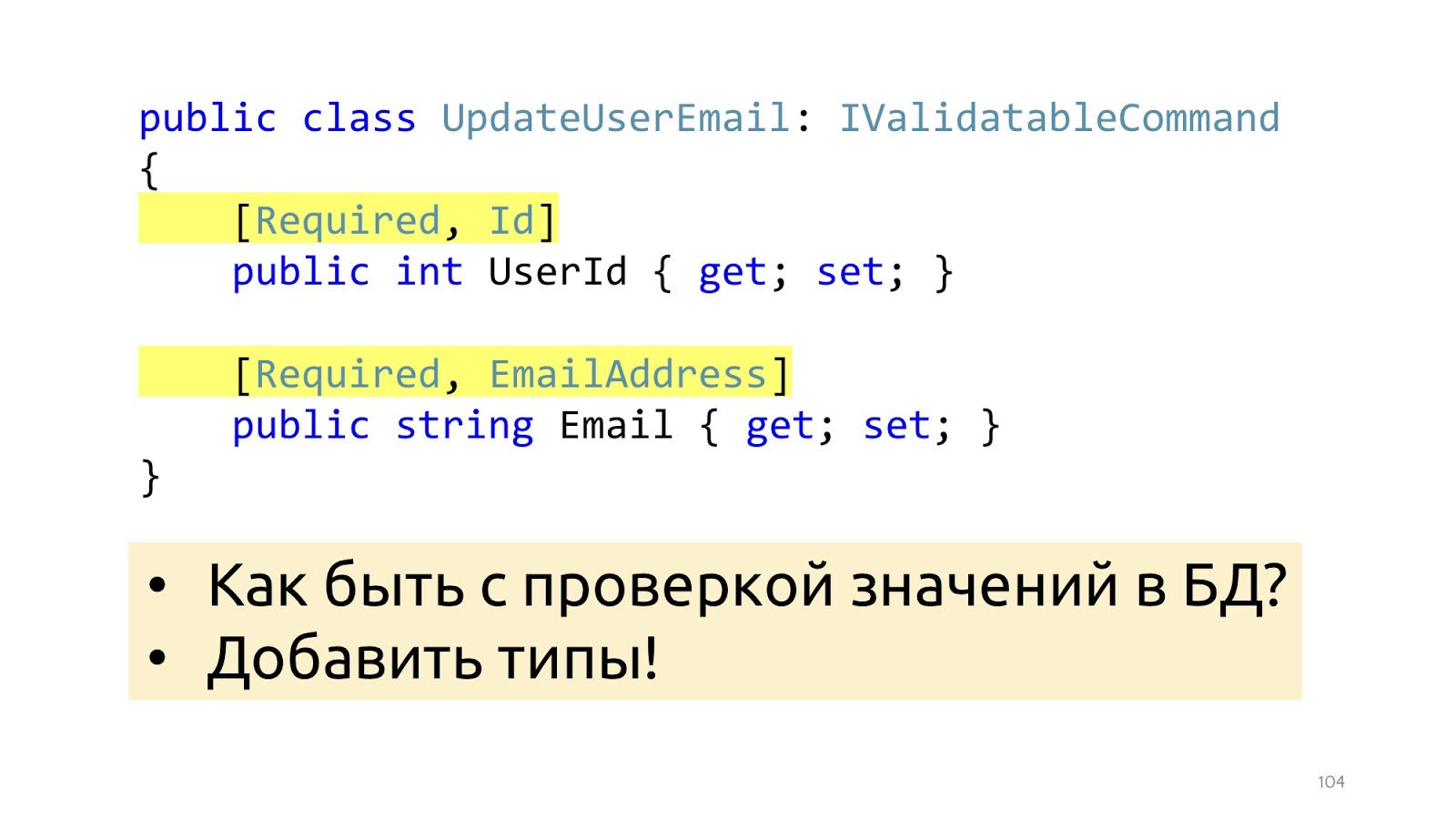

In order for validation to work, we add here some attributes that tell us what kind of validation it is. This will help in terms of data structure, but will not help with such validation as checking values in the database. Here, just EmailAddress, it is not clear how, where to check how to use these attributes in order to go to the database. Instead of attributes, you can go to special types, then this problem will be solved.

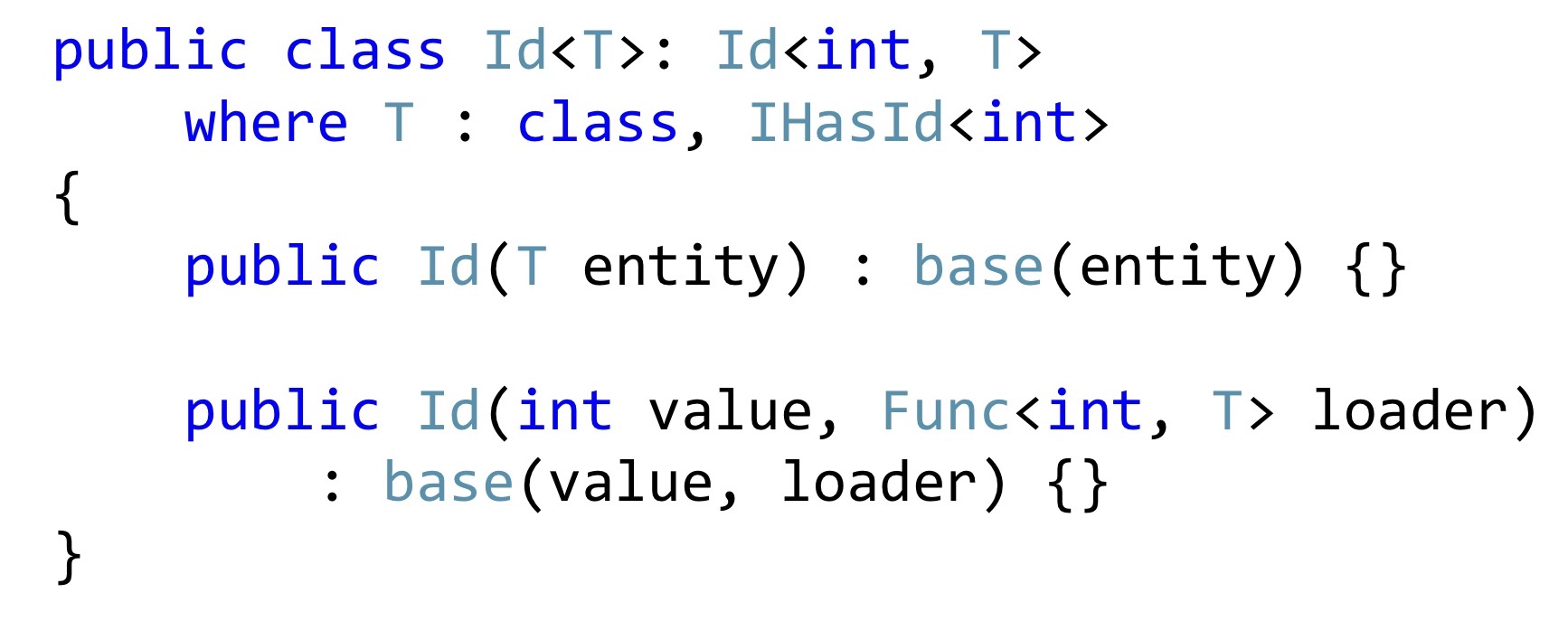

Instead of the primitive

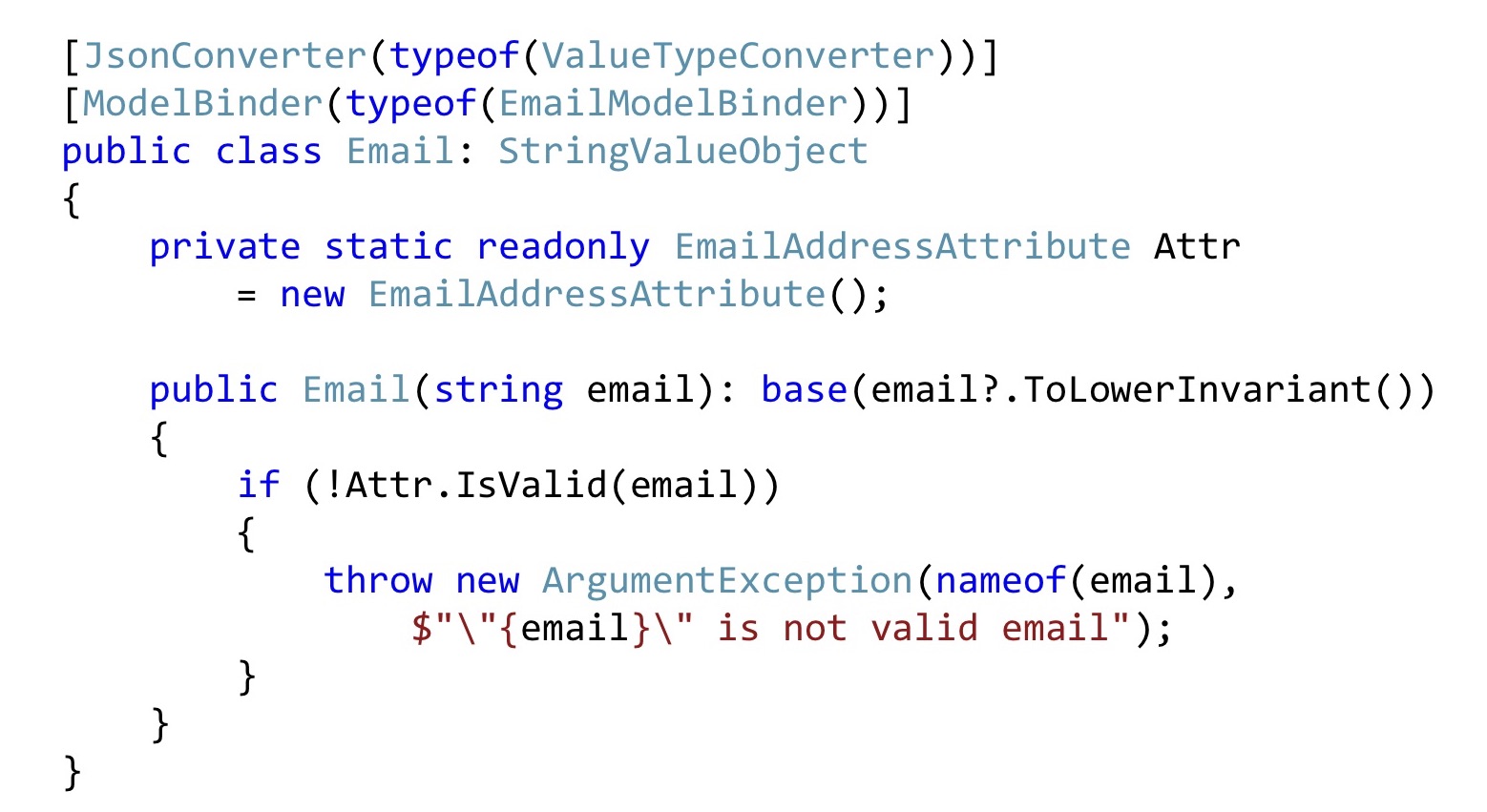

We do the same with Email. Let's convert all Emails to the bottom line so that everything looks the same to us. Next we take the Email attribute, declare it as static for compatibility with ASP.NET validation and here we just call it. That is, so too can be done. In order for the ASP.NET infrastructure to pick up all this, you will have to slightly change the serialization and / or ModelBinding. The code is not very much there, it is relatively simple, so I will not stop there.

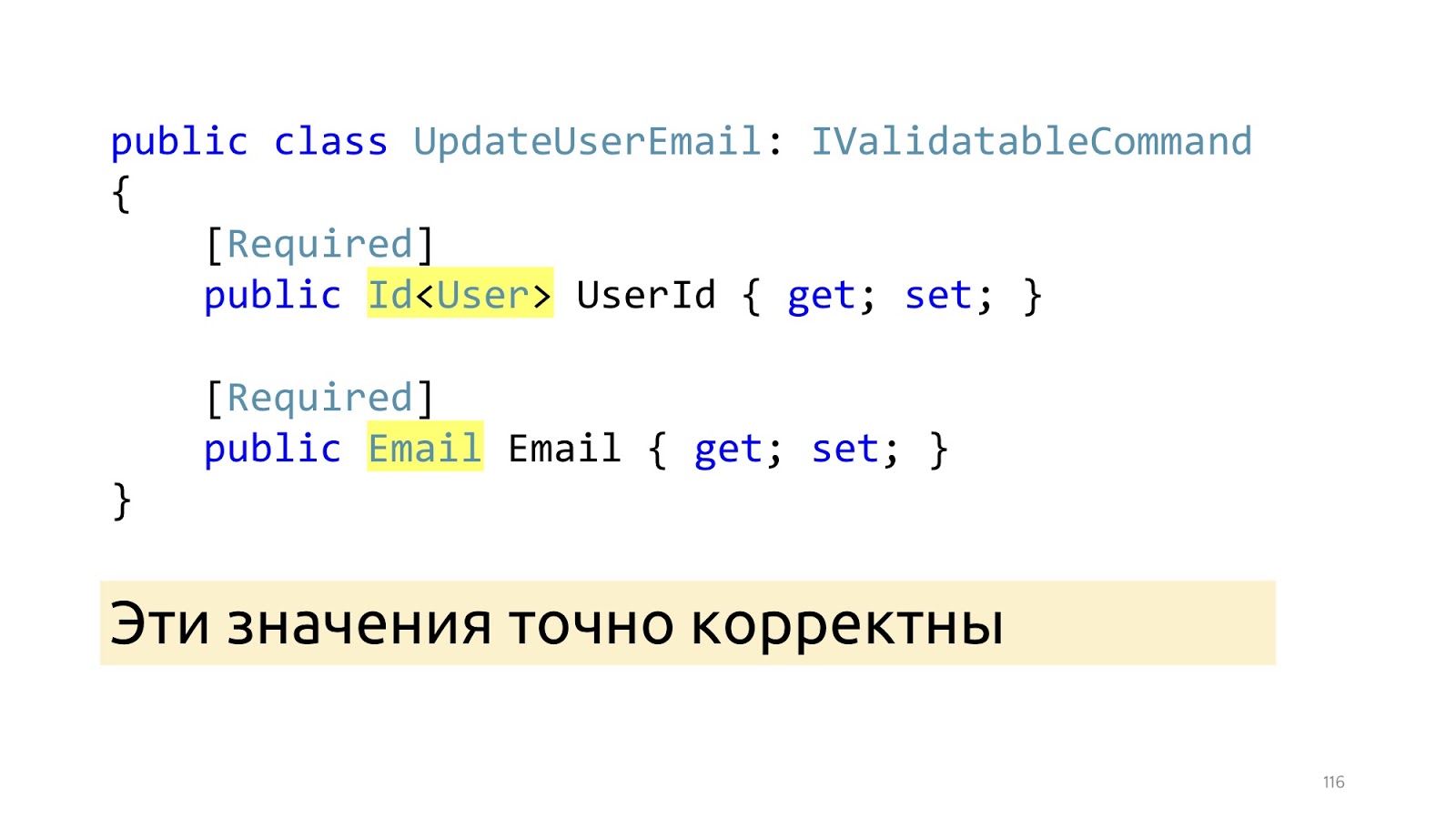

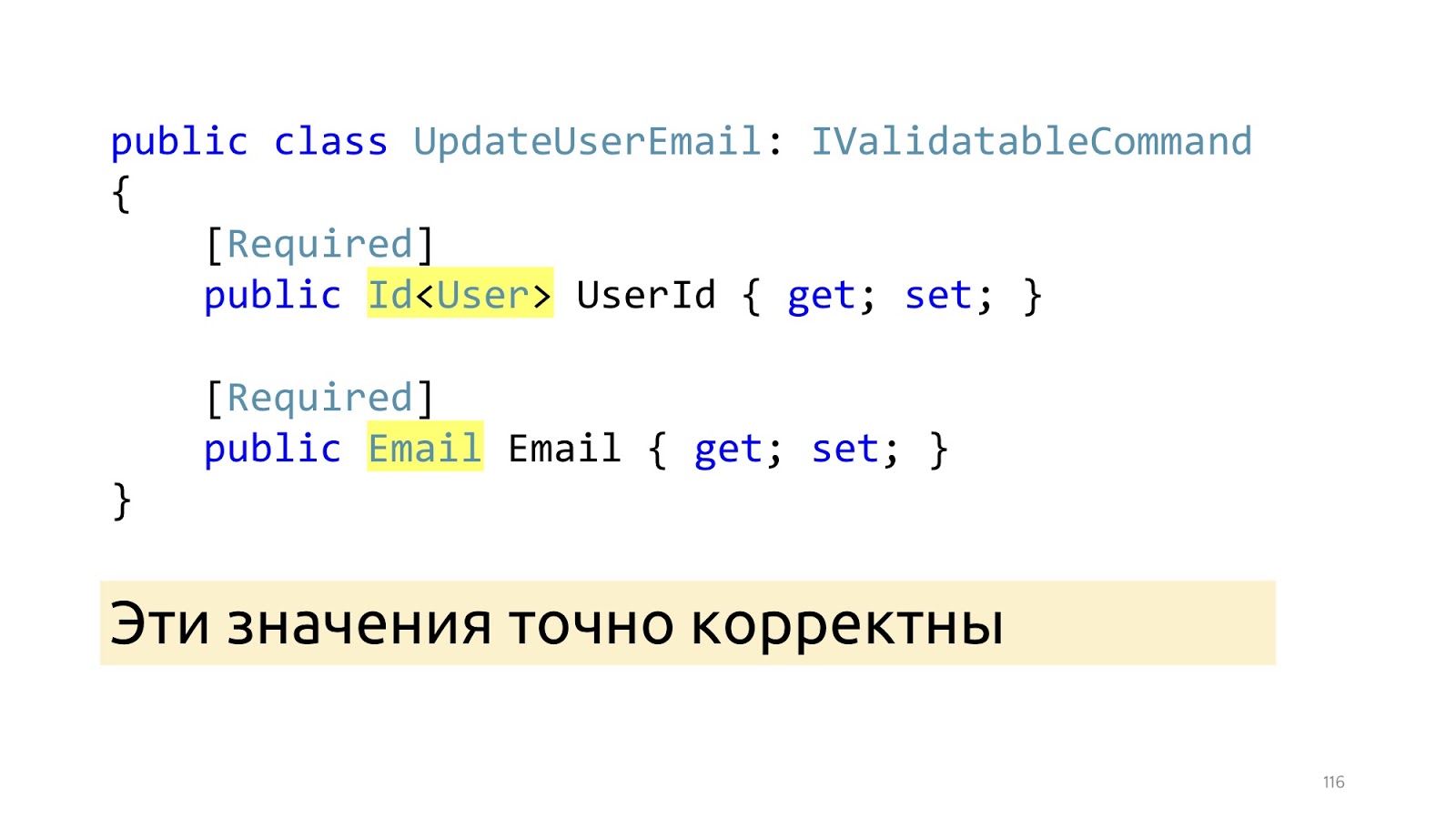

After these changes, instead of primitive types, we have specialized types here: Id and Email. And after these ModelBinder and updated deserializer have worked, we know for sure that these values are correct, including the values in the database. "Invariants"

The next point on which I would like to dwell is the state of invariants in the class, because quite often the anemic model is used, in which there is just a class, many getters-setters, it is completely incomprehensible how they should work together. We work with complex business logic, so it is important for us that the code be self-documenting. Instead, it is better to declare a real constructor with an empty ORM, you can declare it protected so that programmers in their application code cannot call it, and ORM can. Here we are no longer sending a primitive type, but an Email type, it is already exactly correct, if it is null, we still throw Exception. You can use any Fody, PostSharp, but soon C # 8 comes out. Accordingly, there will be a Non-nullable reference type, and it is better to wait for its support in the language. The next moment, if we want to change the name and surname, most likely we want to change them together, so there must be an appropriate public method that changes them together.

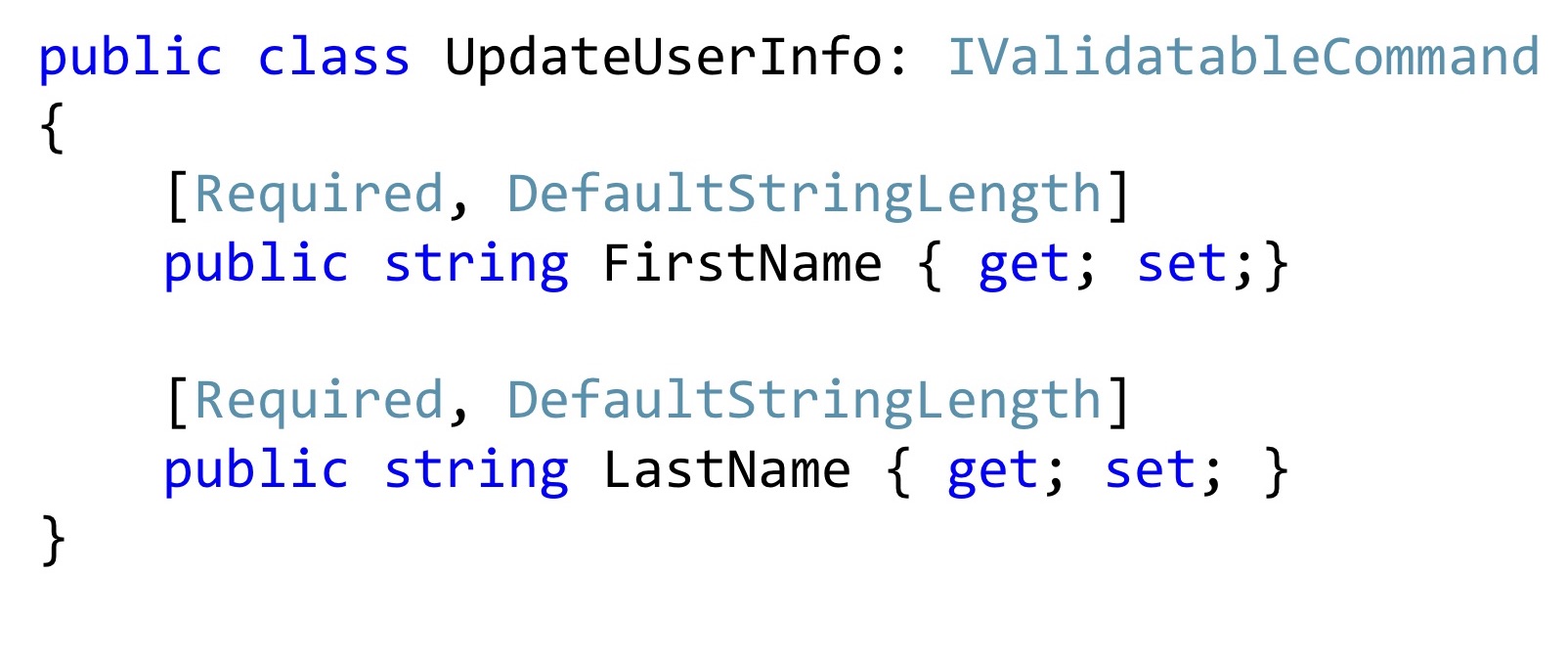

In this public method, we also verify that the length of these strings corresponds to what we use in the database. And if something is wrong, then stop execution. Here I use the same trick. I declare a special attribute and just call it in the application code.

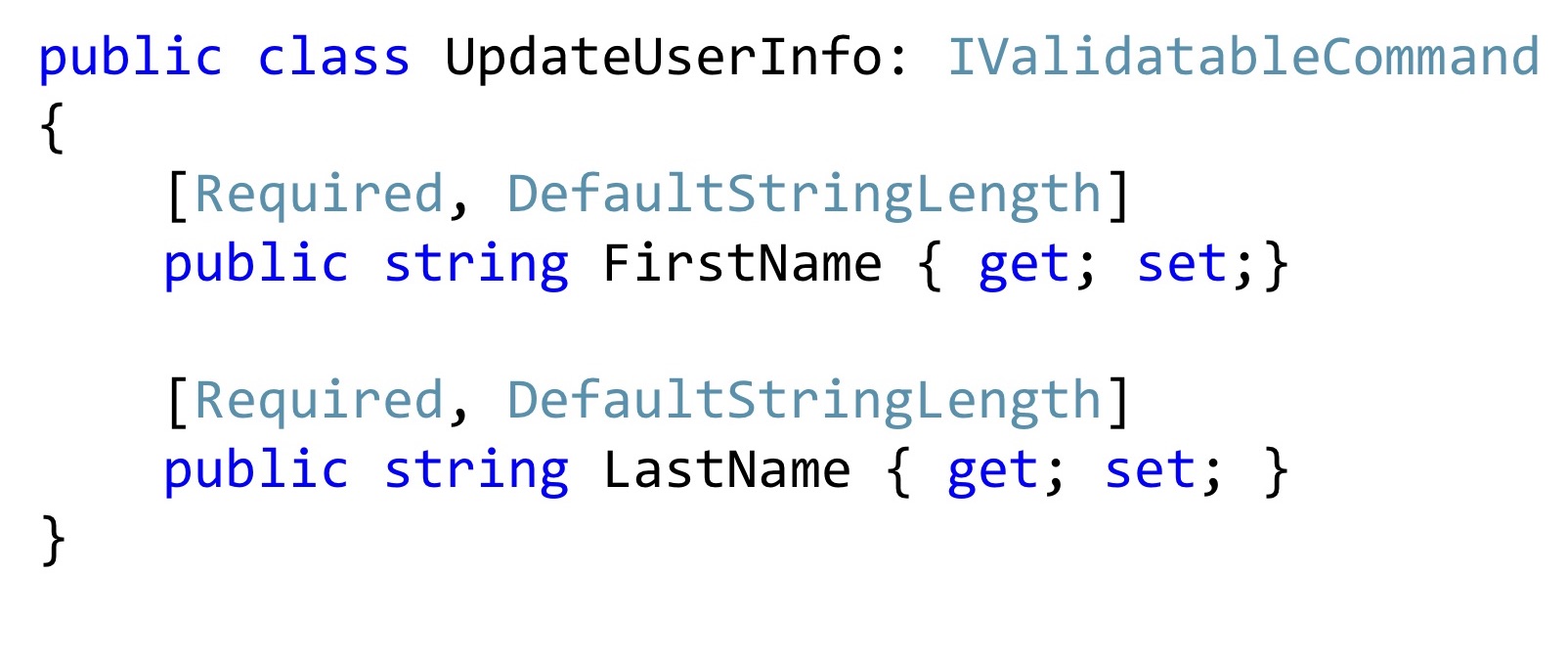

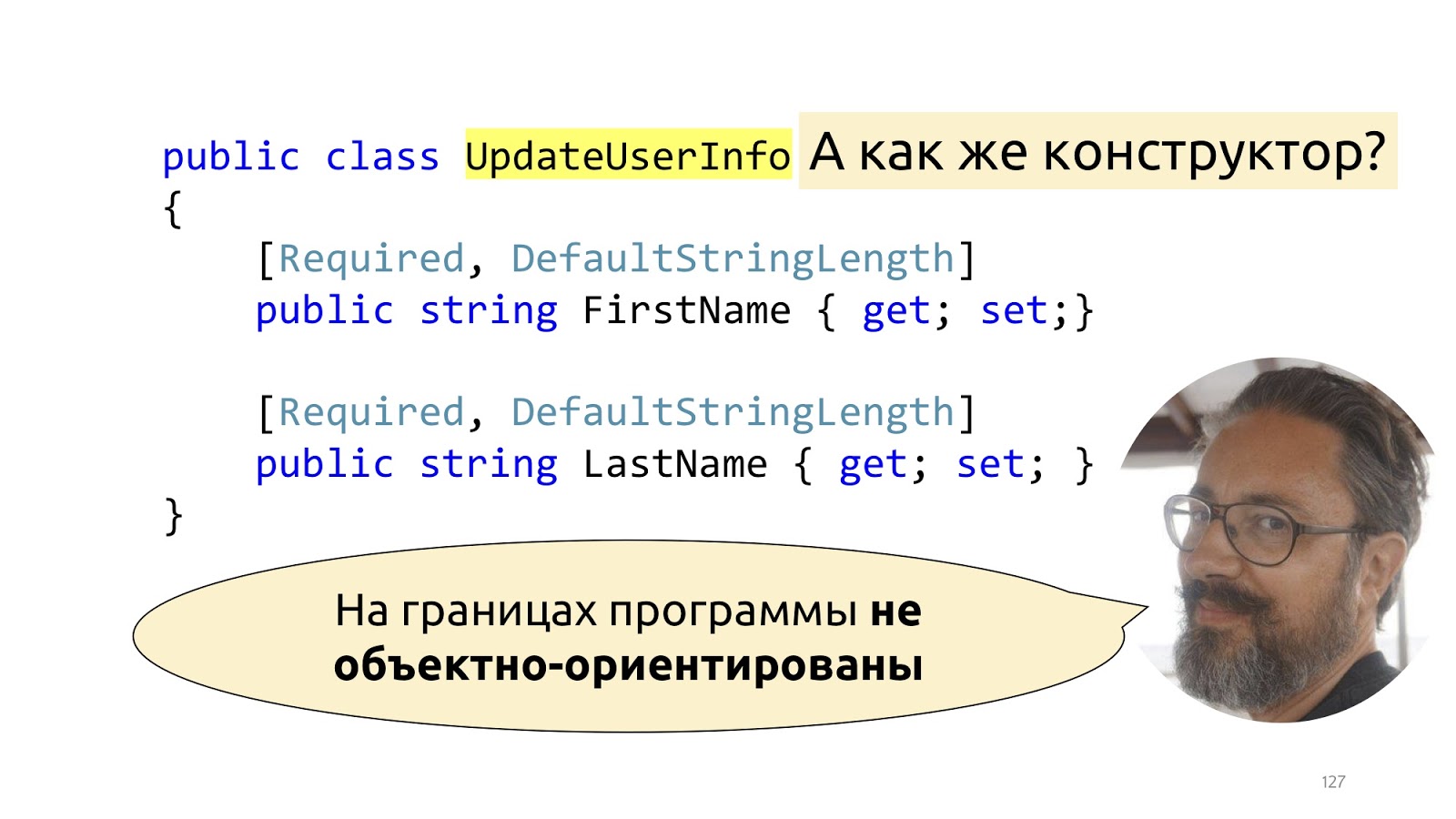

Moreover, such attributes can be reused in Dto. Now, if I want to change the name and surname, I may have such a command to change. Is it worth it to add a special constructor here? It seems to be worth it. It will become better, no one will change these values, will not break them, they will be exactly the right ones.

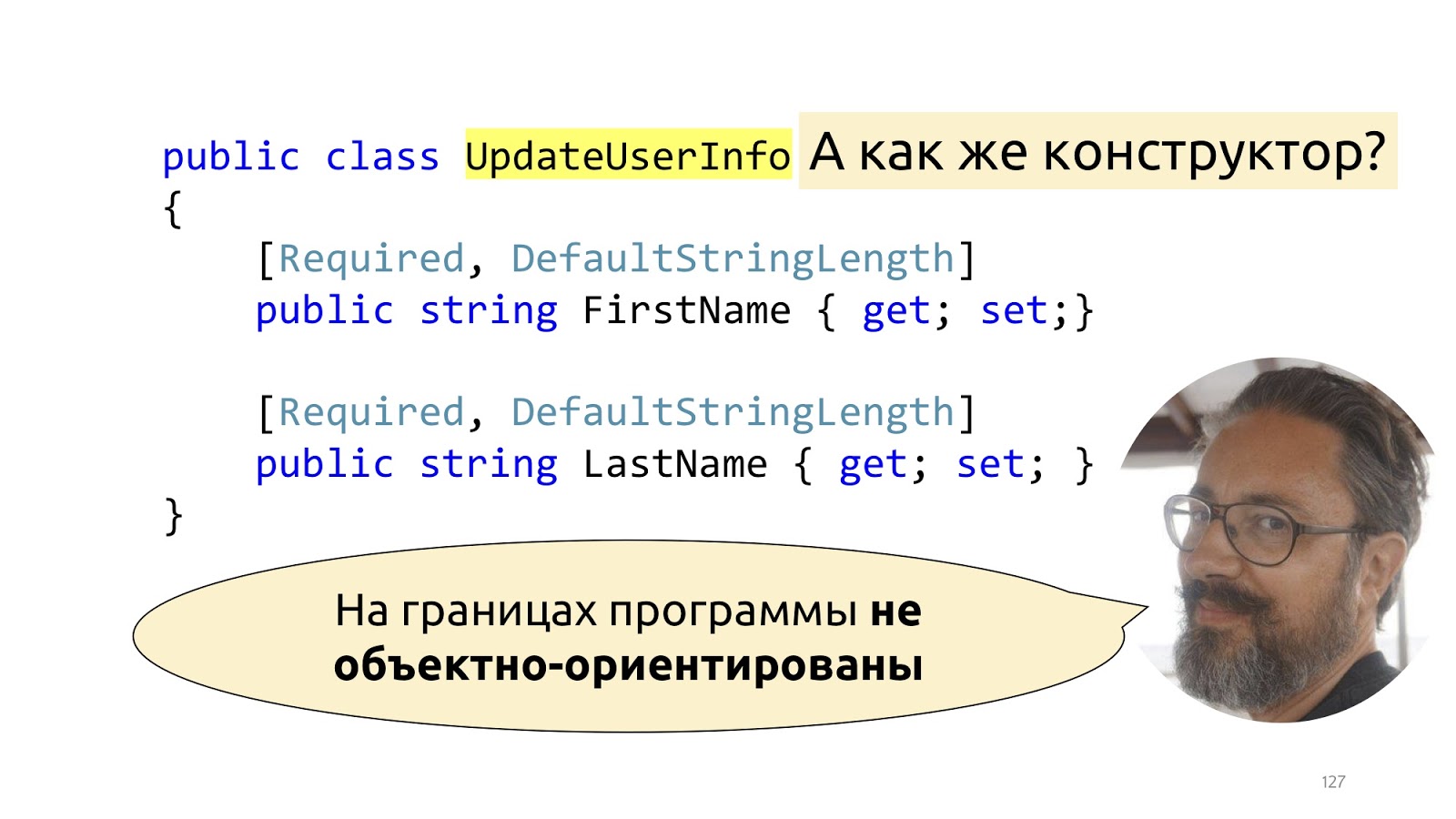

Not really, really. The fact is that Dto is not really objects at all. This is such a dictionary, in which we shove deserialized data. That is, they pretend to be objects, of course, but they have only one responsibility - to be serialized and deserialized. If we try to deal with this structure, we will begin to declare some ModelBinder with designers, something to do, this is incredibly tedious, and, most importantly, it will break with new outputs of new frameworks. All this is well described by Mark Simon in the article “On the boundaries of the program are not object-oriented,” if you are interested, read his post, it’s all described in detail.

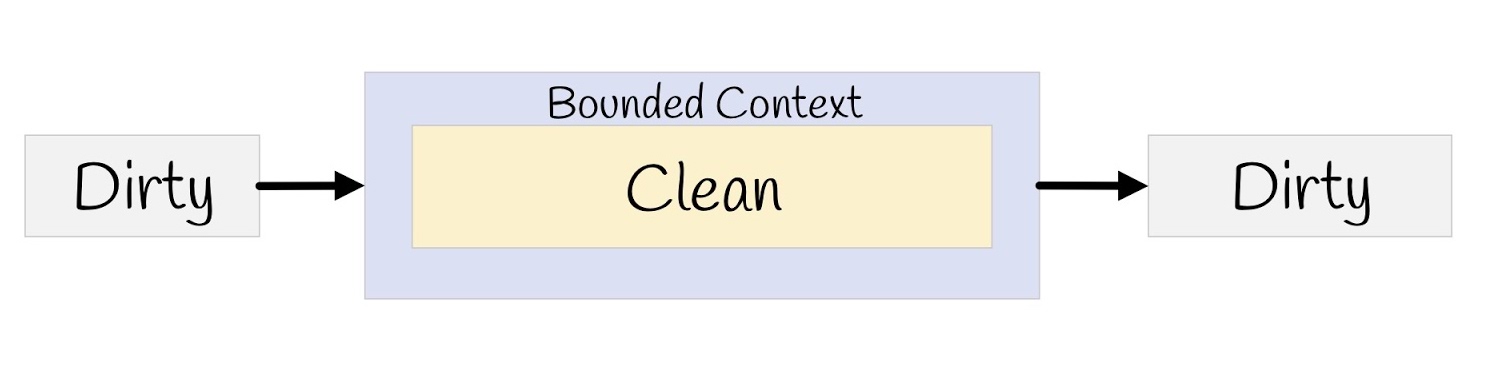

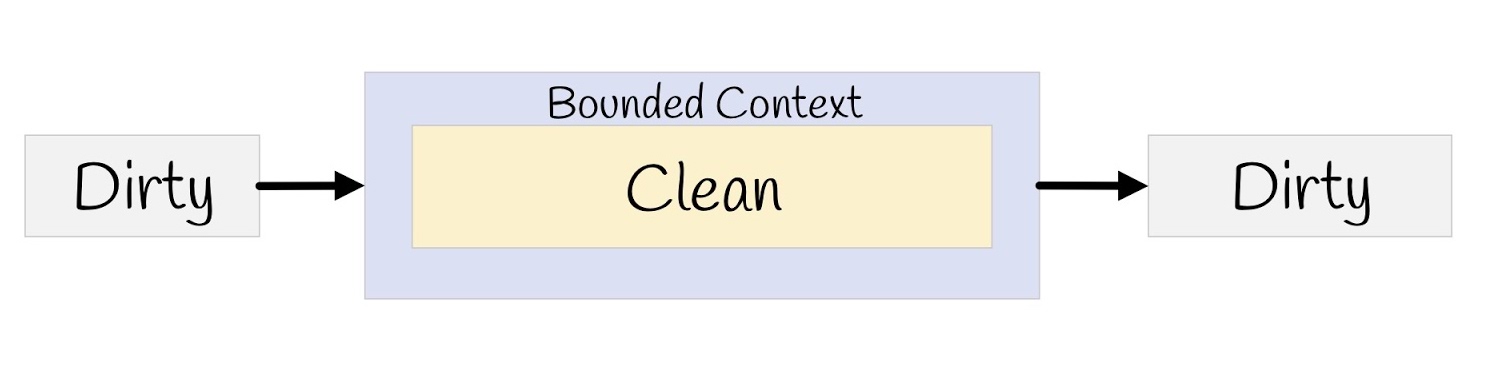

In short, we have a dirty outside world, we put in the input of the check, convert it to our clean model, and then transfer it all back to serialization, to the browser, again to the dirty outside world.

After all these changes have been made, what will Hander look like?

I wrote two lines here in order to make it easier to read, but in general can be written in one. The data is exactly correct, because we have a type system, there is validation, that is, reinforced concrete correct data, we do not need to check them again. This user also exists, there is no other user with such busy email, everything can be done. However, there is still no call to the SaveChanges method, there is no notification, and there are no logs and profilers, right? Moving on.

Domain events.

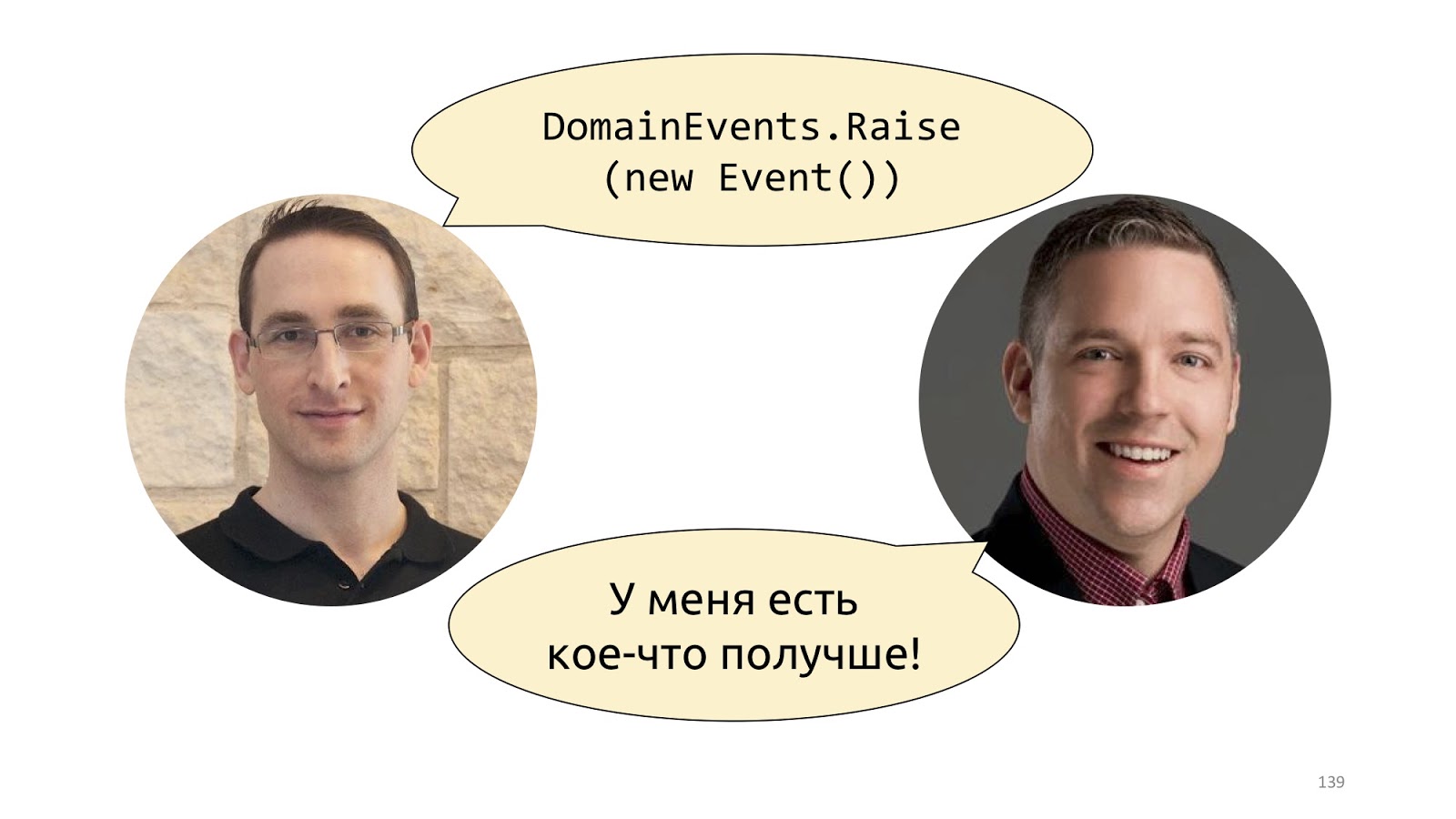

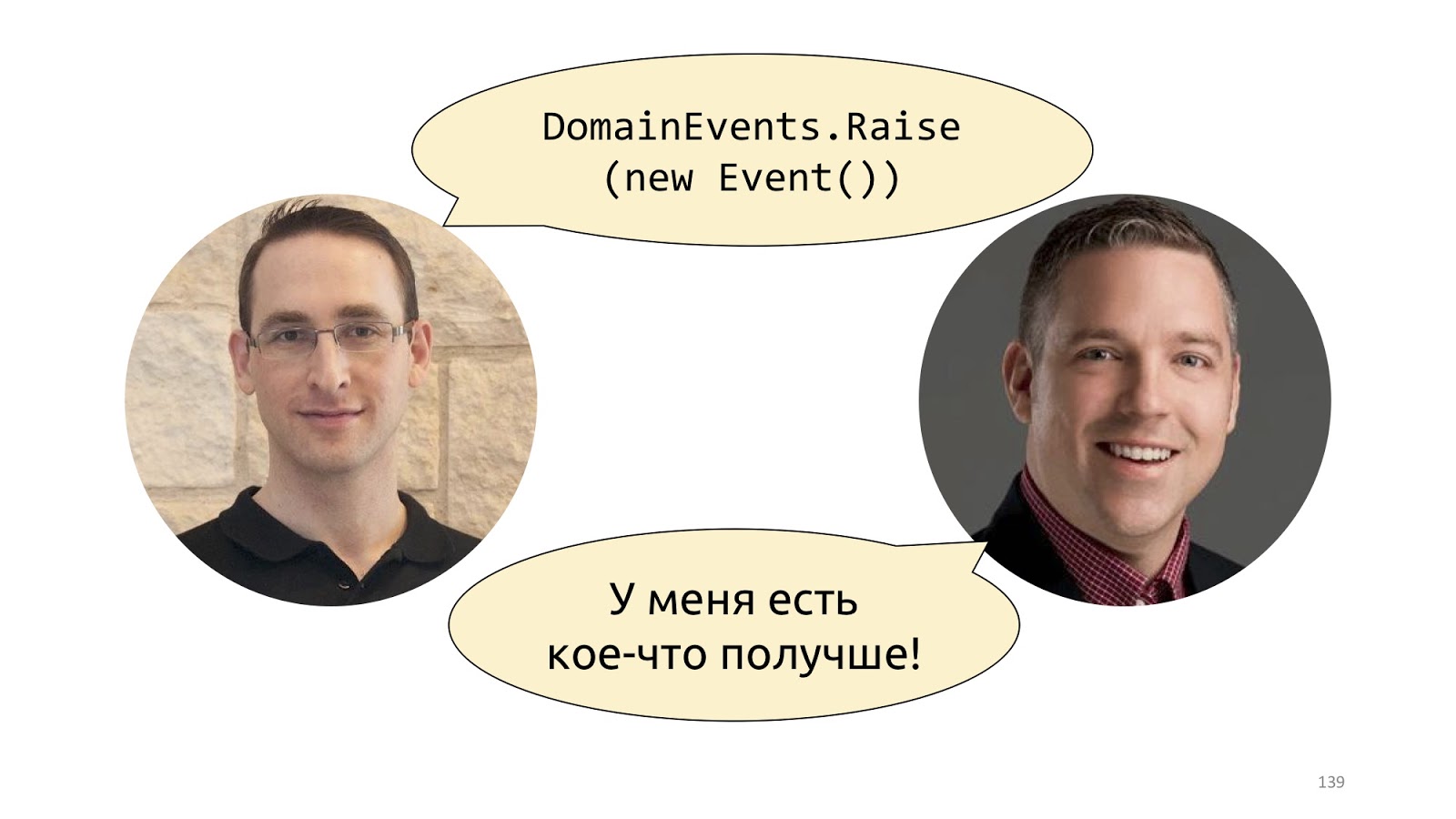

Perhaps, for the first time, Udi Dahan popularized this concept in his post “Domain Events - Salvation” . There he proposes to simply declare a static class with the Raise method and throw out such events. A little later, Jimmy Bogard proposed a better implementation, and it is called “A better domain events events” .

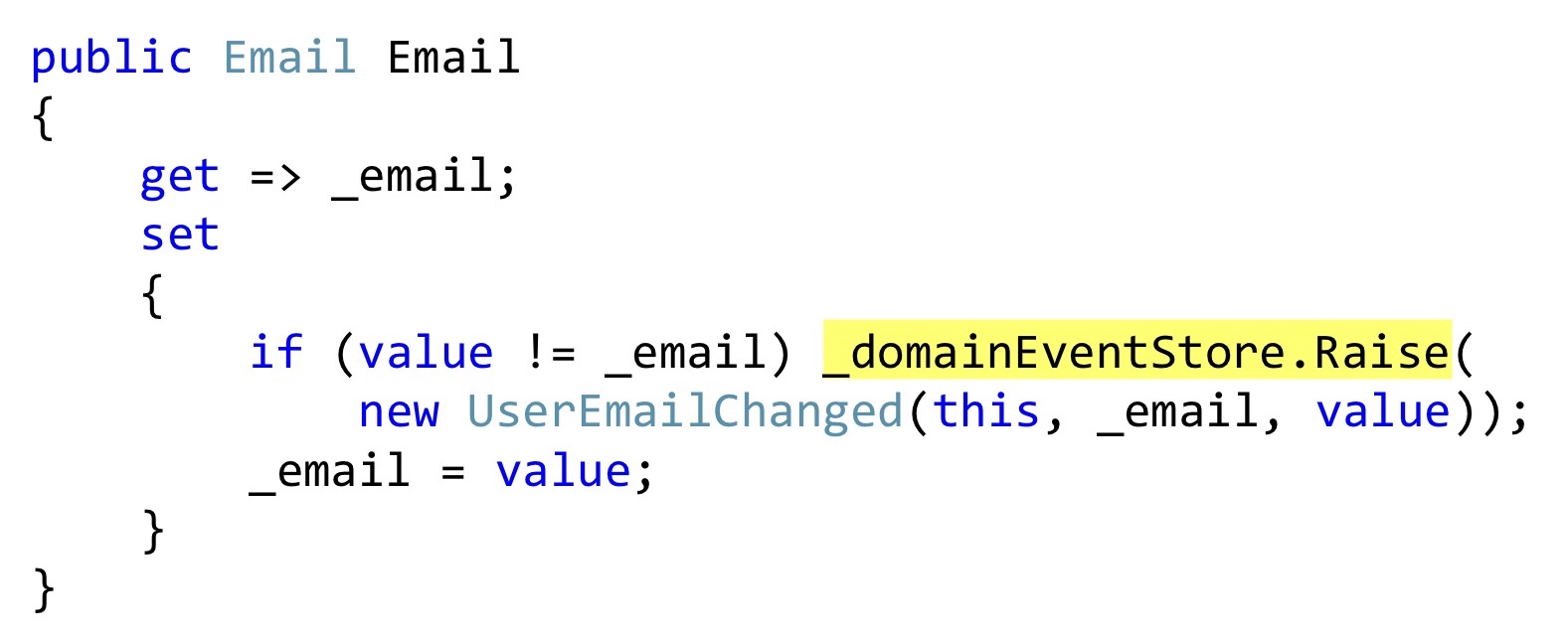

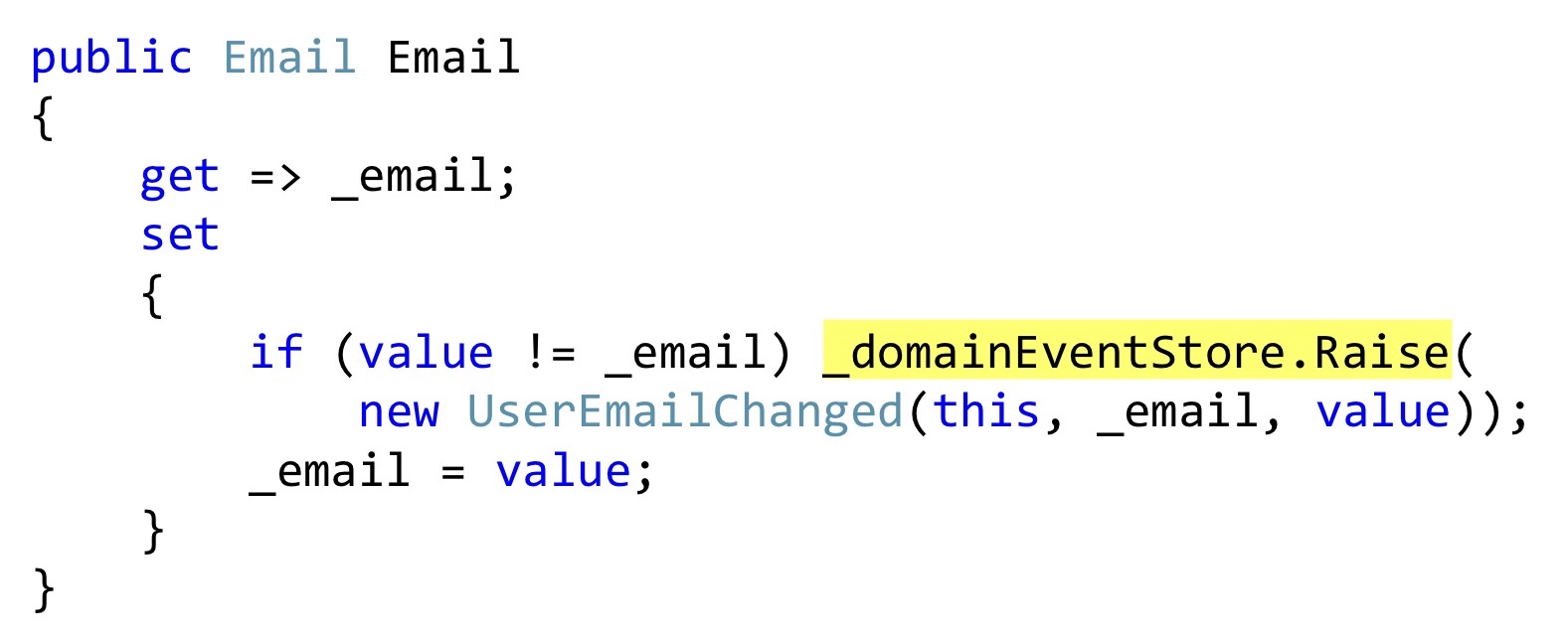

I will show the serialization of Bogard with one small change, but important. Instead of throwing out events, we can declare some list, and in those places where some reaction should take place, right inside the entity, save these events. In this case, this

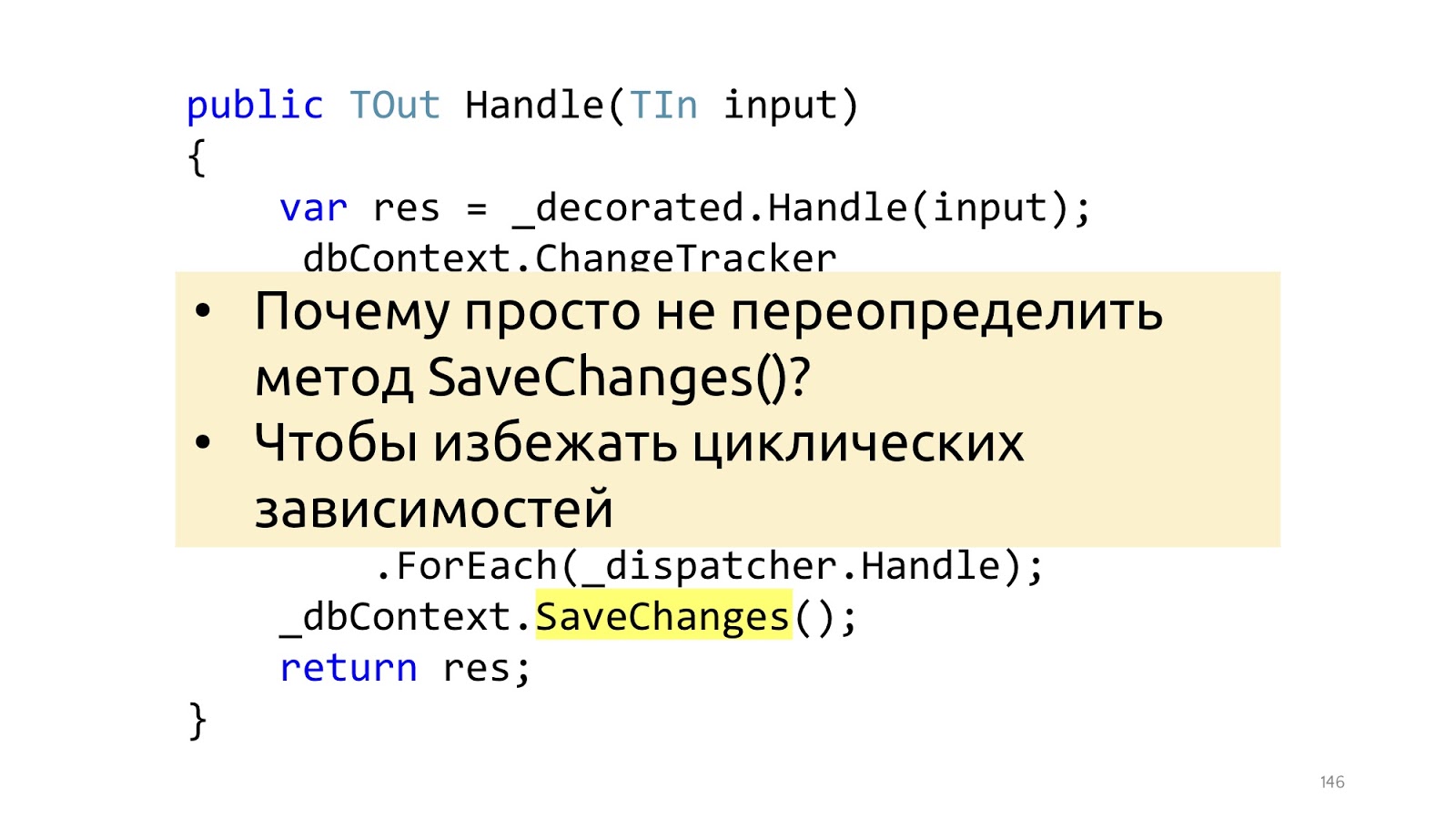

Further, at that moment, when we call the SaveChanges method, we take a ChangeTracker, see if there are any entities that implement the interface, whether they have domain events. And if there is, let's take all these domain events and send them to some dispatcher who knows what to do with them.

The implementation of this dispatcher is a topic for a separate conversation, there are some difficulties with multiple dispatch in C #, but this is also done. With this approach, there is another unobvious advantage. Now, if we have two developers, one can write code that changes this very email, and the other can make a notification module. They are absolutely not connected with each other, they write different code, they are connected only at the level of this domain event of the same Dto class. The first developer simply throws out this class at some point, the second one reacts to it and knows that it needs to be sent to email, SMS, push notifications to the phone and all the other million notifications, taking into account all the preferences of users, which usually are.

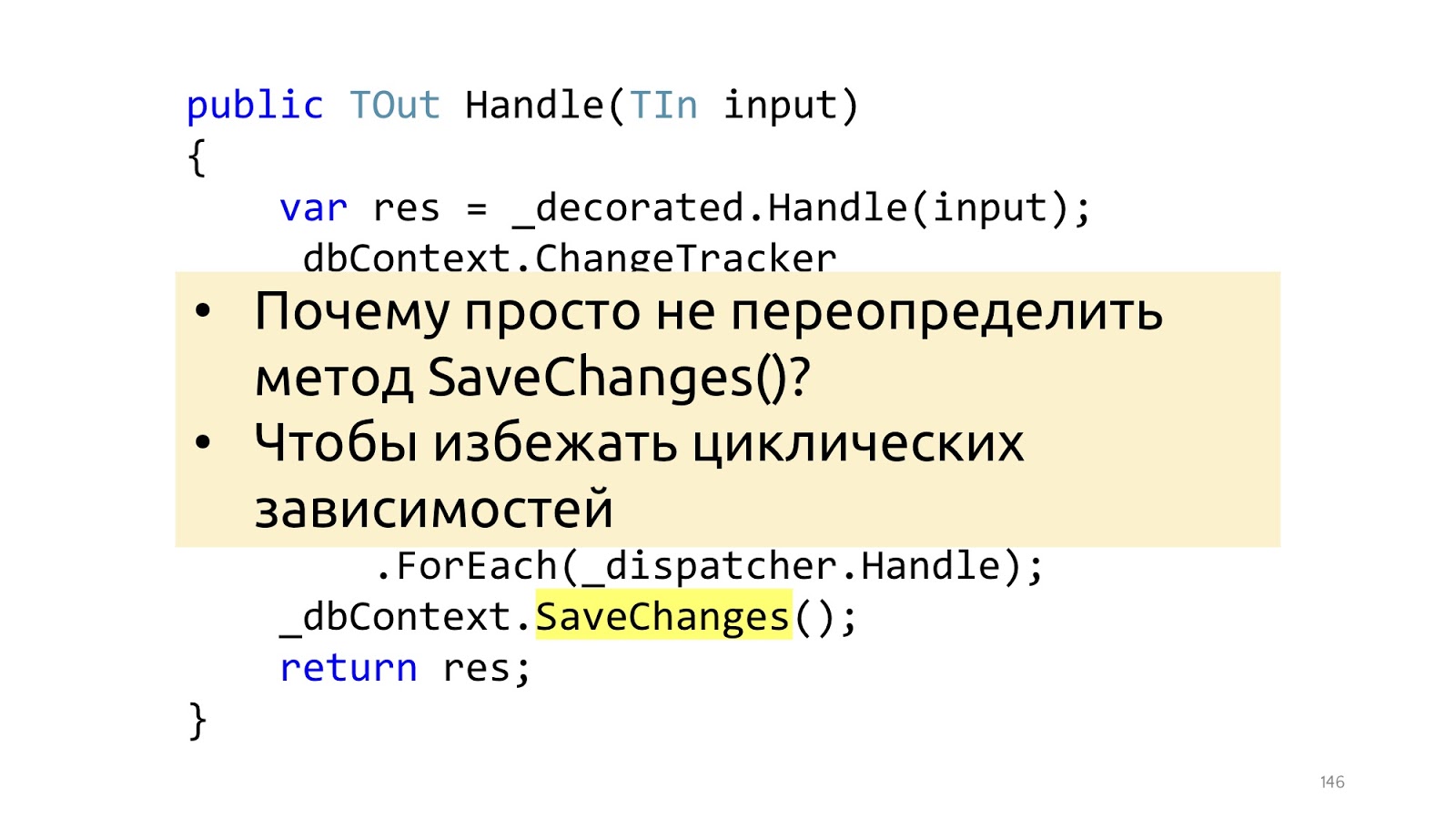

Here is the smallest, but important note. The Jimmy article uses an overload of the SaveChanges method, and it’s better not to. And to do it better in the decorator, because if we overload the SaveChanges method and we needed dbContext in the Handler, we will get circular dependencies. You can work with this, but the solutions are slightly less convenient and a little less beautiful. Therefore, if the pipeline is built on decorators, then it makes no sense to do it differently.

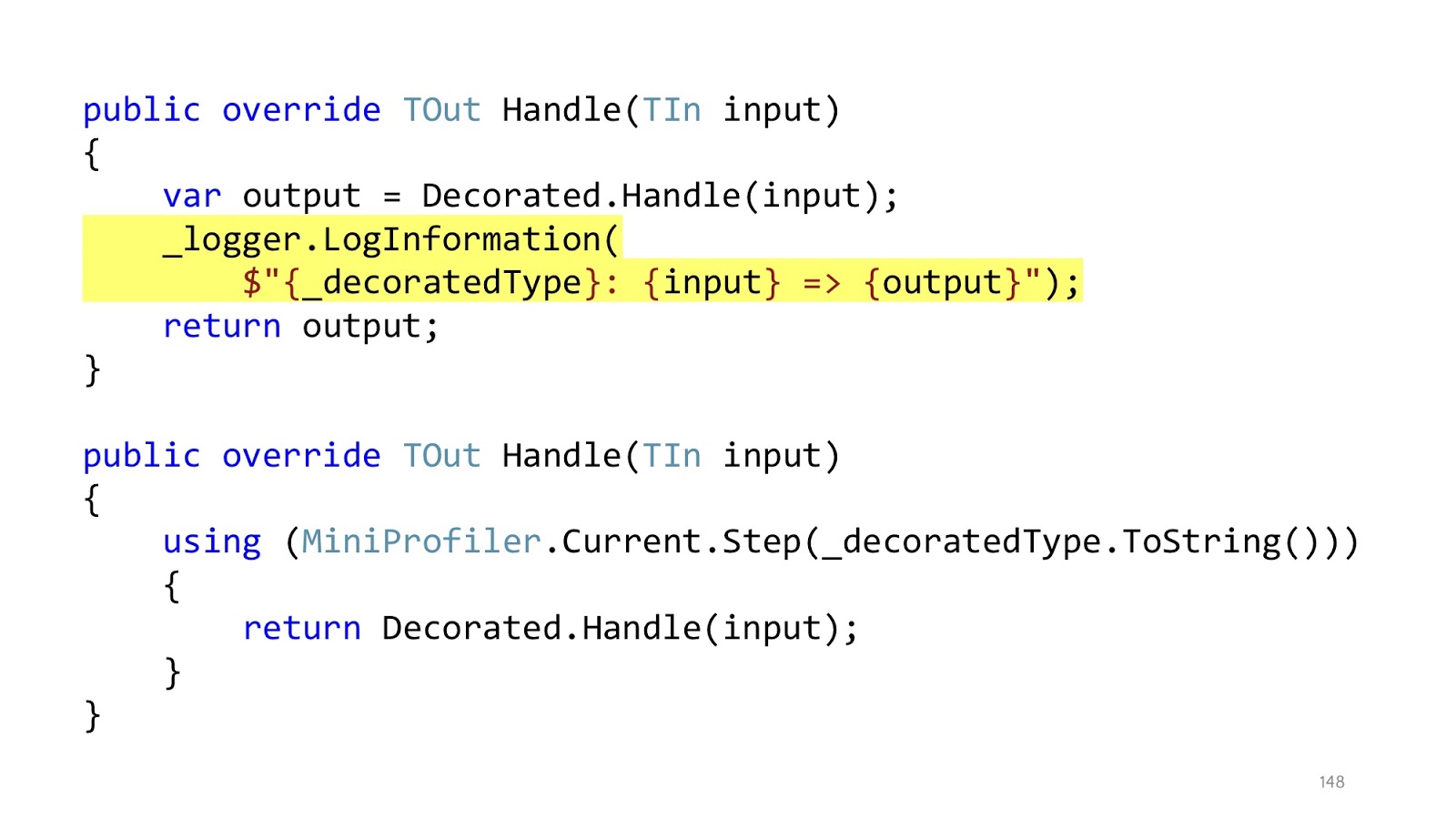

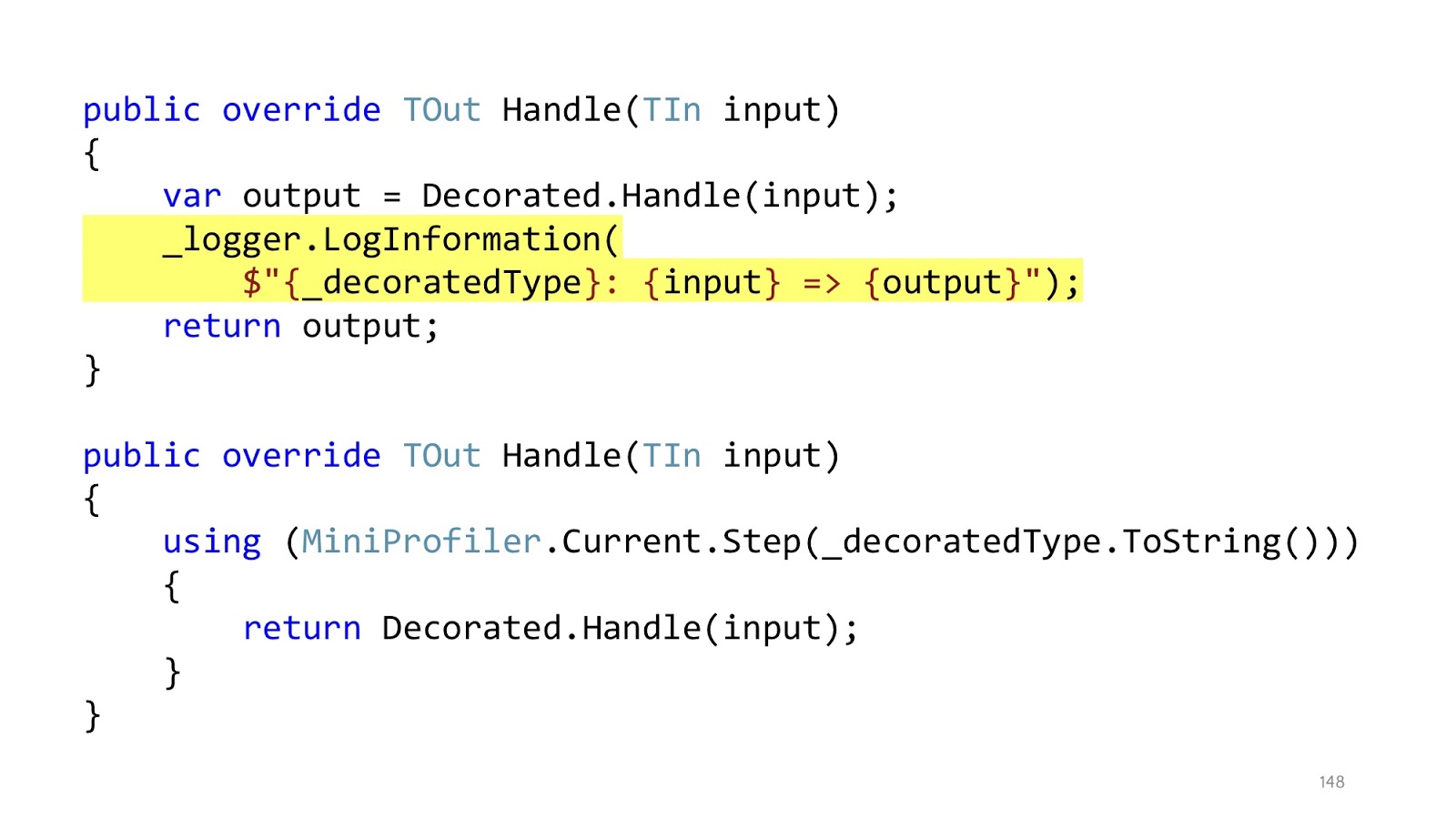

The nesting of the code remained, but in the original example we had first using MiniProfiler, then try catch, then if. In total, there were three levels of nesting, now each of this level of nesting is in its decorator. And inside the decorator, who is responsible for profiling, we have only one level of nesting, the code reads perfectly. In addition, it is clear that in these decorators only one responsibility. If the decorator is responsible for logging, then he will only log, if for profiling, respectively, only profiling, everything else is in other places.

After the whole pipeline has completed, we just have to take Dto and send further to the browser, serialize JSON.

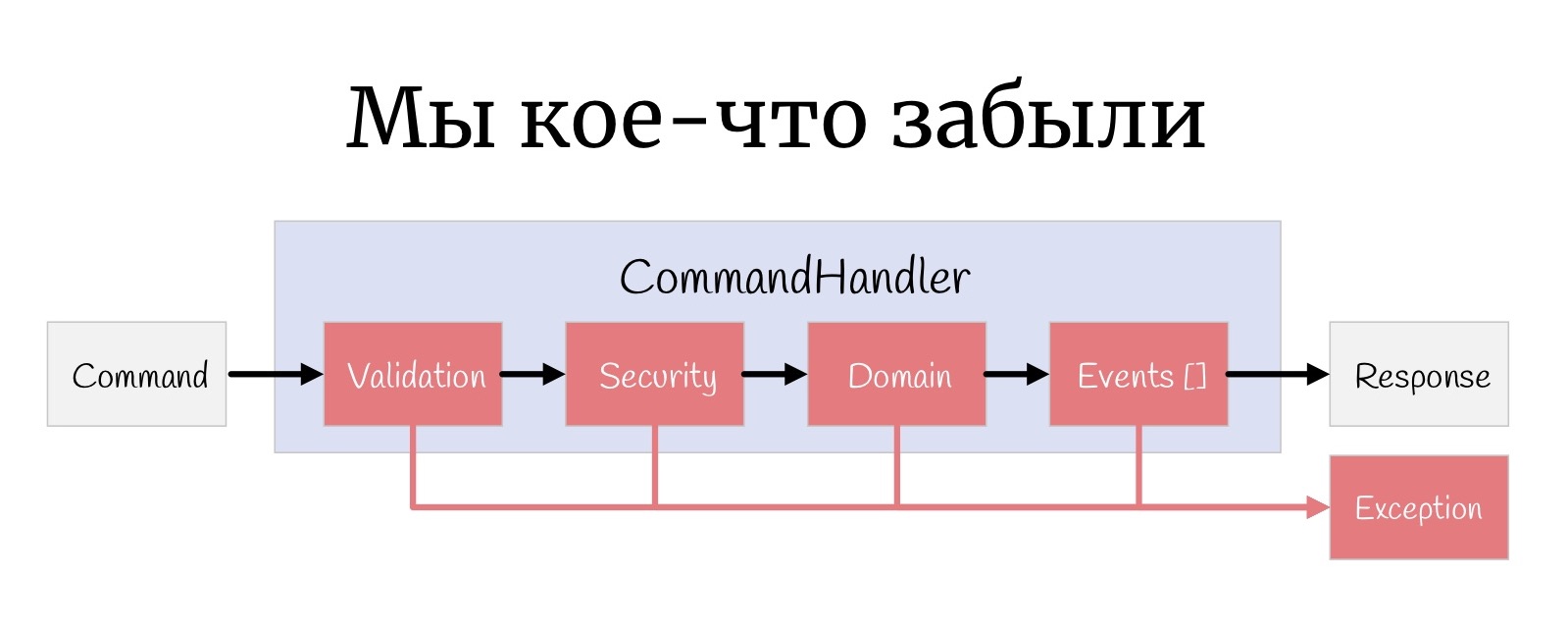

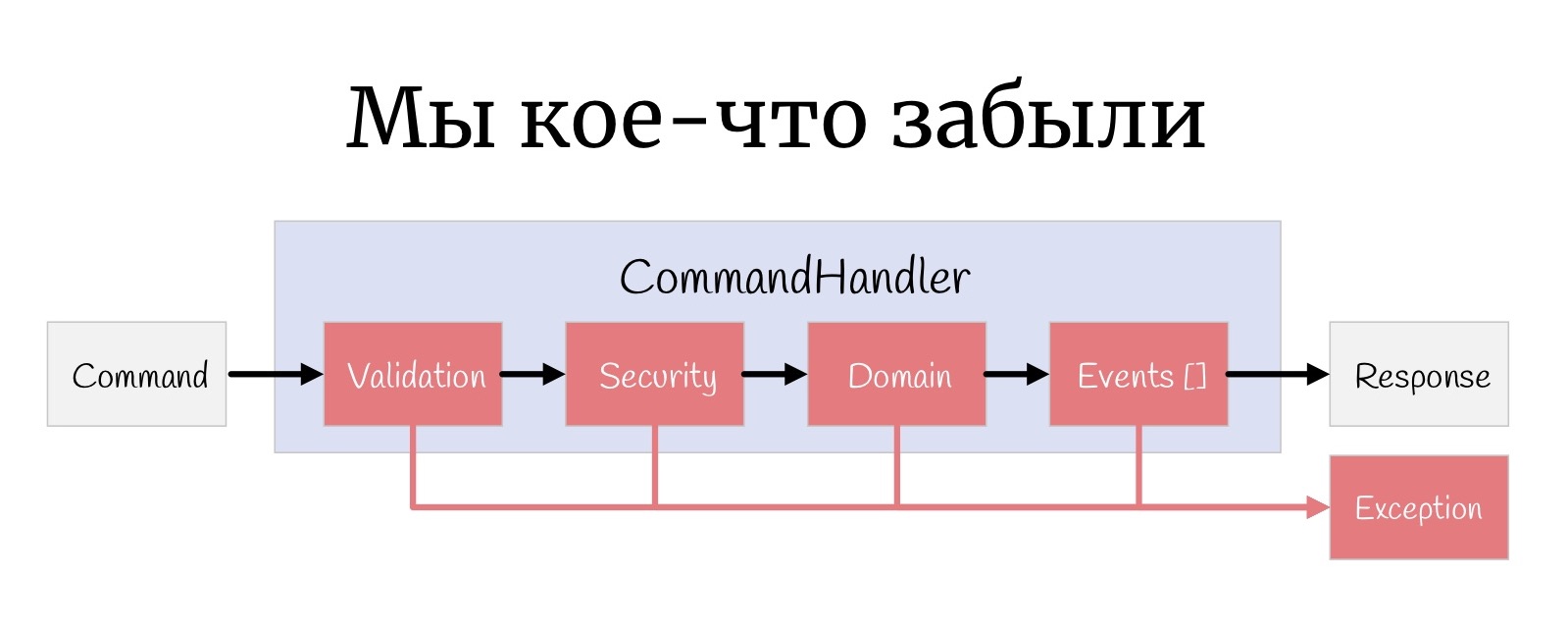

But one more small-small such thing that is sometimes forgotten: Exception can happen here at every stage, and in general it is necessary to process them somehow.

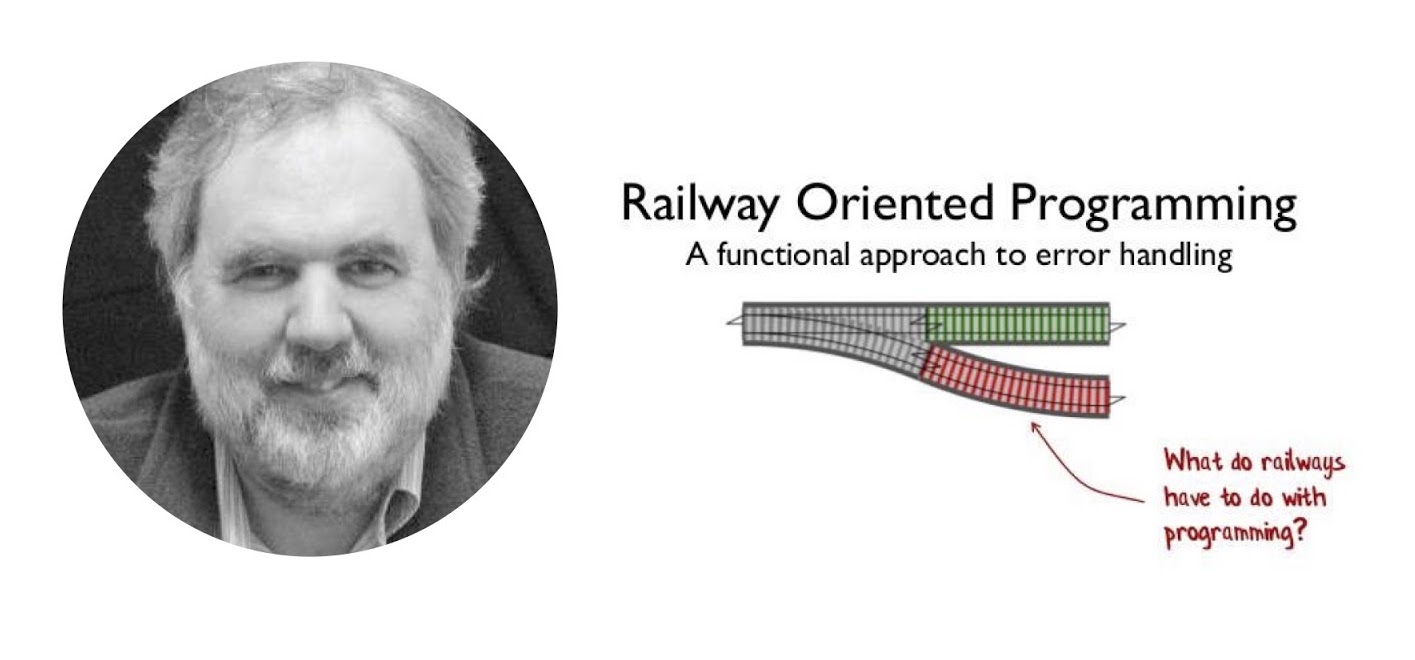

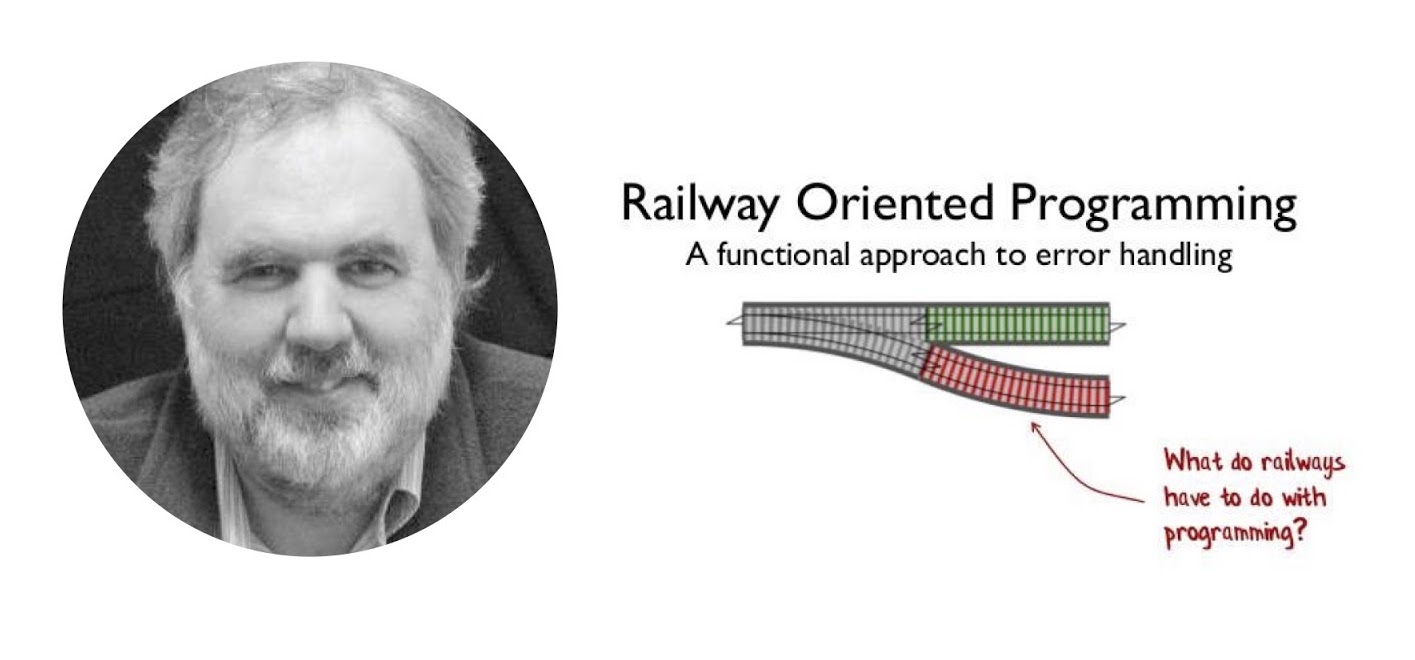

I can not fail to mention here again Scott Vlashin and his report “Railway oriented programming” . Why? The original report is entirely devoted to working with errors in the F # language, how to organize the flow a little differently, and why such an approach might be preferable to using Exception. In F #, this really works very well, because F # is a functional language, and Scott uses the capabilities of a functional language.

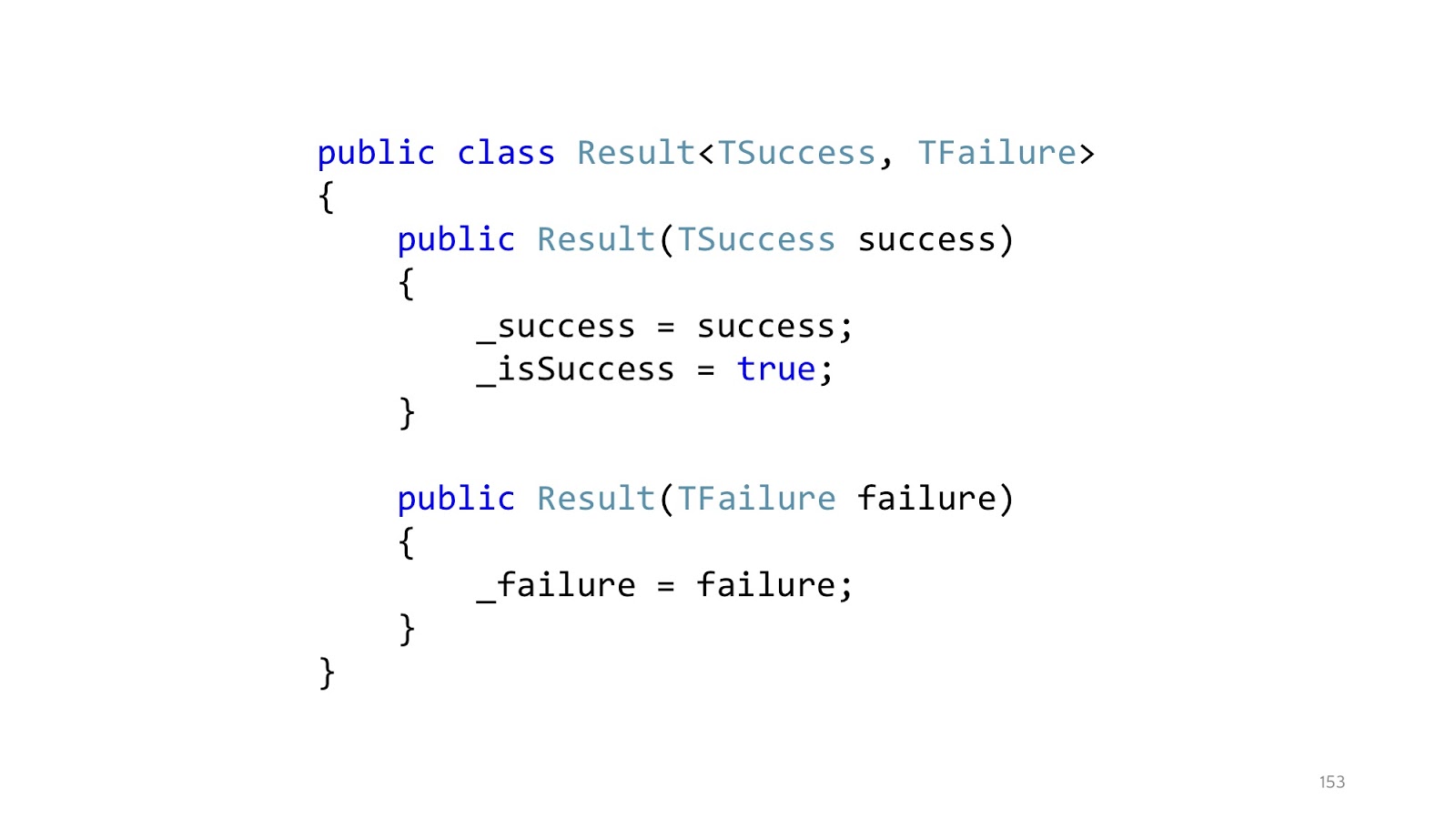

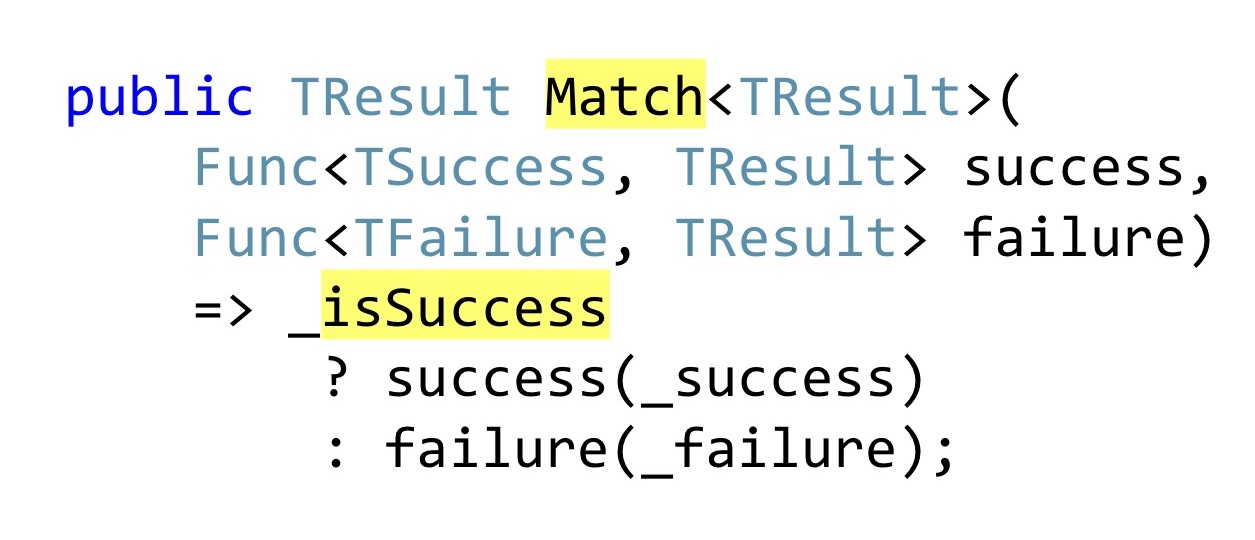

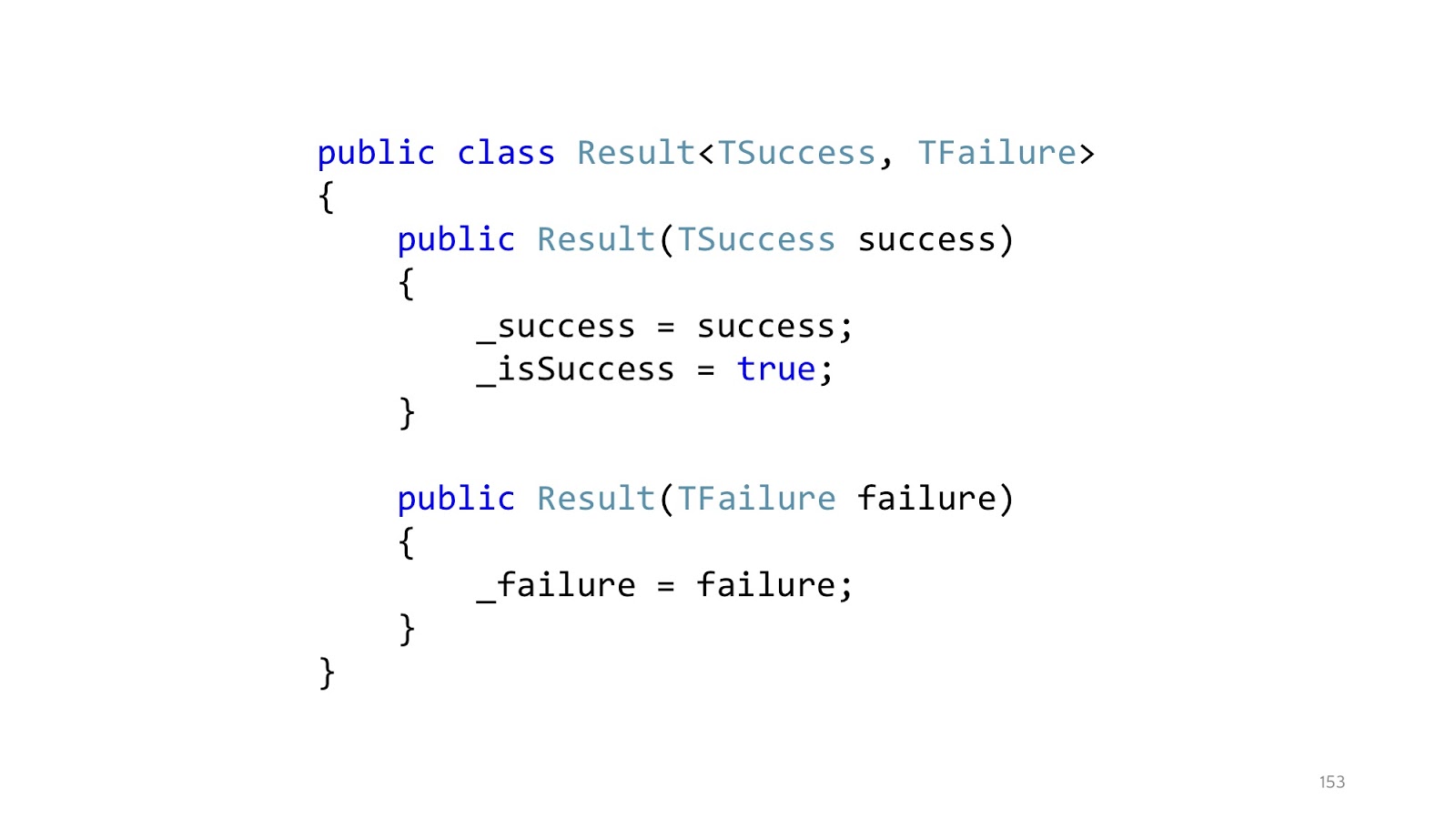

Since, probably, most of you still write in C #, then if you write an analogue in C # , then this approach will look something like this. Instead of throwing exceptions, we declare a Result class that has a successful branch and an unsuccessful branch. Accordingly, two designers. A class can only be in one state. This class is a special case of a type-association, discriminated union from F #, but rewritten in C #, because there is no built-in support in C #.

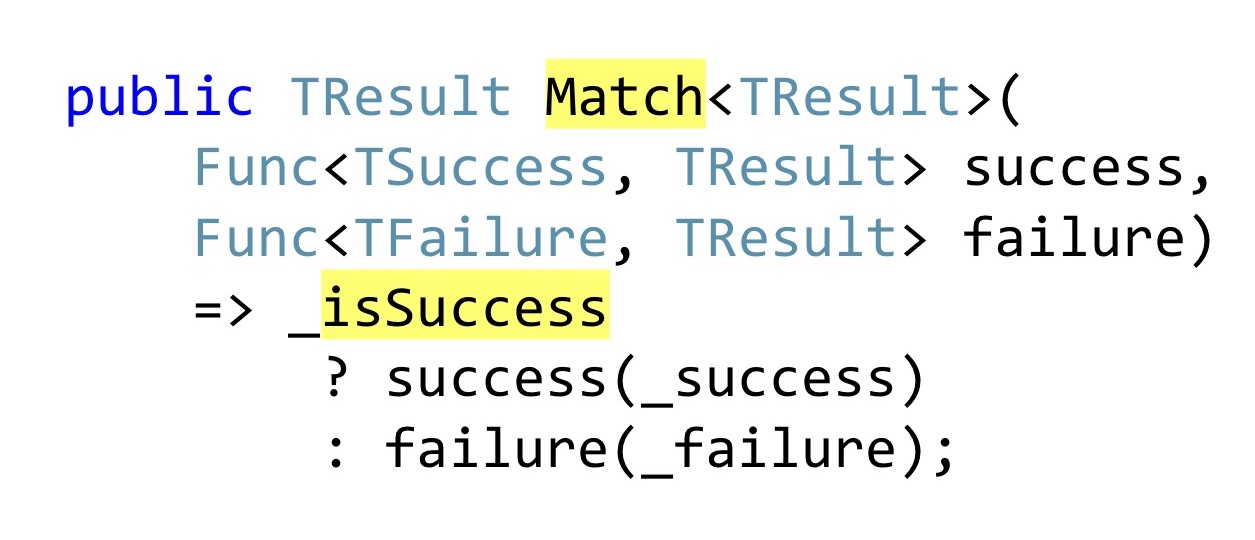

Instead of declaring public getters, which someone may not check for null in the code, Pattern Matching is used. Again, in F # it would be embedded in the Pattern Matching language, in C # you have to write a separate method, to which we will pass one function, which knows what to do with a successful result of the operation, how to transform it further along the chain, and what is wrong. That is, no matter which branch worked for us, we have to drop it to one return result. In F #, this all works very well, because there is a functional composition, well, everything else that I have already listed. In .NET, this works somewhat worse, because once you have more than one Result, and many - and almost every method can fail for one reason or another - almost all of your resulting function types become Result, and you need them as something to combine.

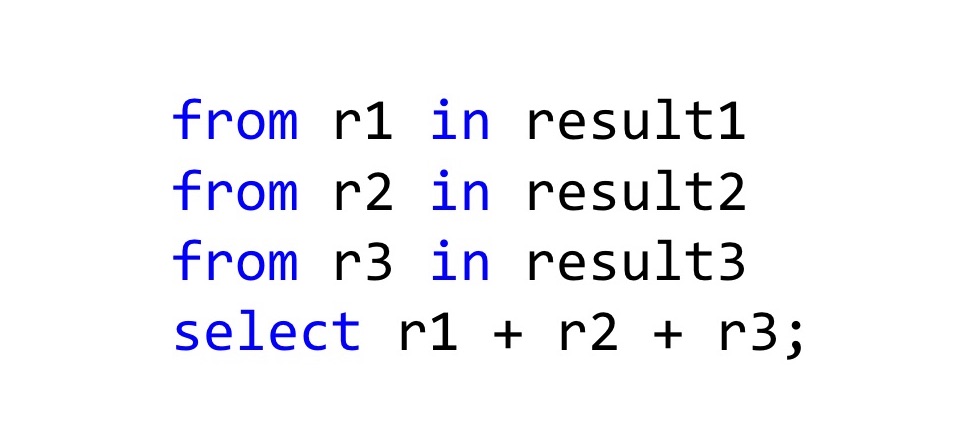

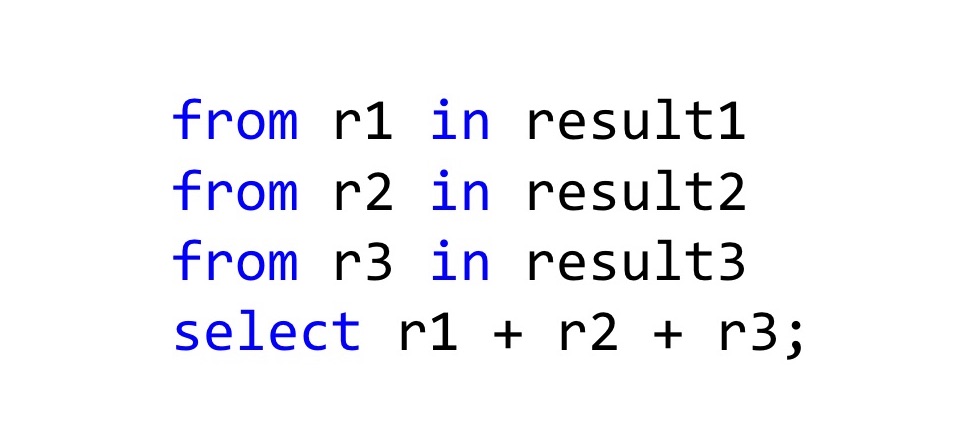

The easiest way to combine them is to use LINQ , because in general LINQ does not only work with IEnumerable, if you define the SelectMany and Select methods correctly, then the C # compiler will see what you can use for these types of LINQ syntax. In general, tracing paper is obtained from Haskell do-notation or from the same Computation Expressions in F #. How should this be read? Here we have three results of the operation, and if everything is good there in all three cases, then take these results r1 + r2 + r3 and add. The type of the resulting value will also be Result, but the new Result, which we declare in Select. In general, this is even a working approach, if not for one thing.

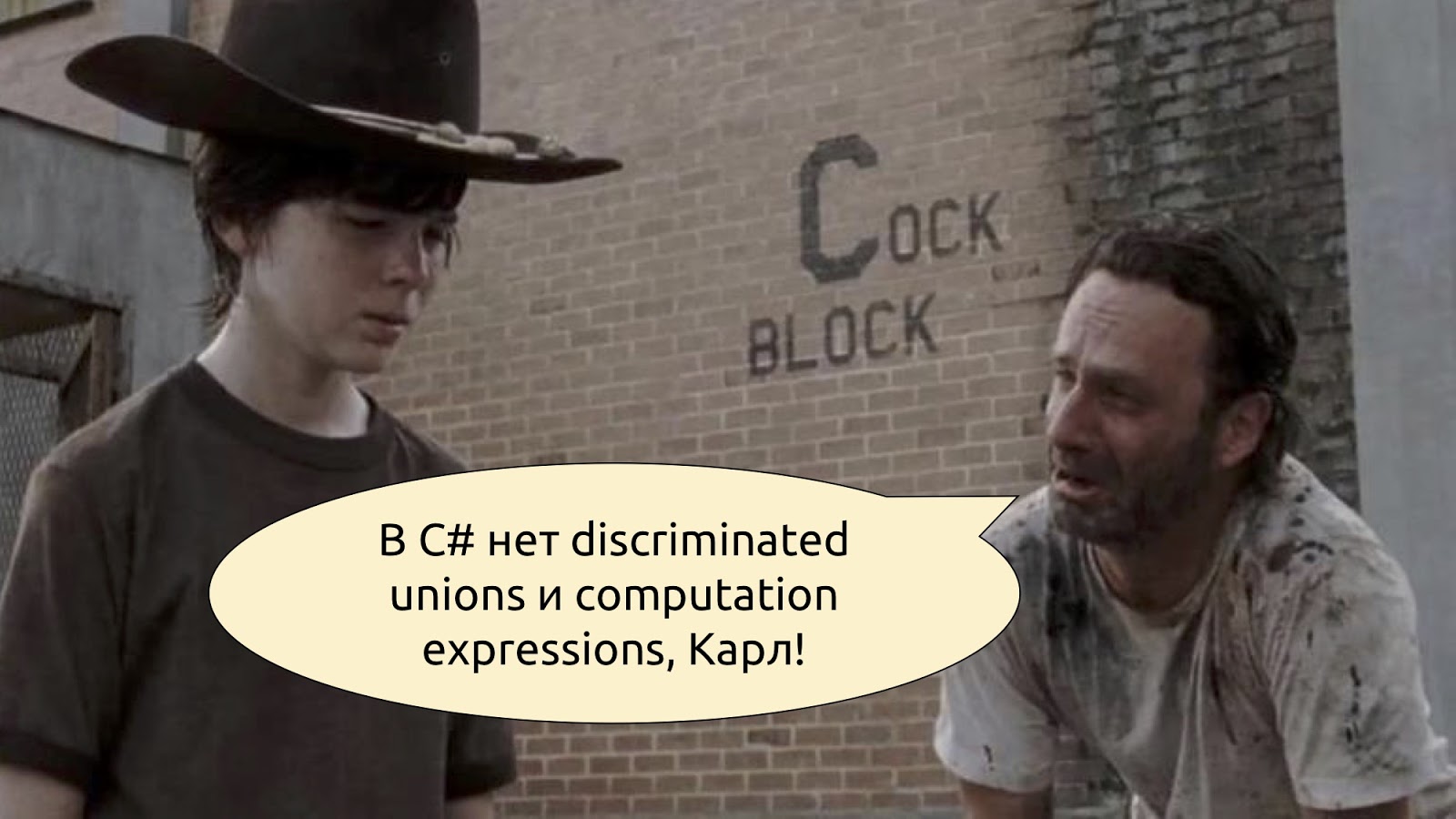

For all other developers, as soon as you start writing such C # code, you start to look something like this. “These are bad scary Exceptions, don't write them! They are evil! It is better to write code that no one understands and cannot debug! ”

C # is not F #, it is somewhat different, there are no different concepts on the basis of which this is done, and when we are trying to pull an owl on the globe, it turns out, to put it mildly, it is unusual.

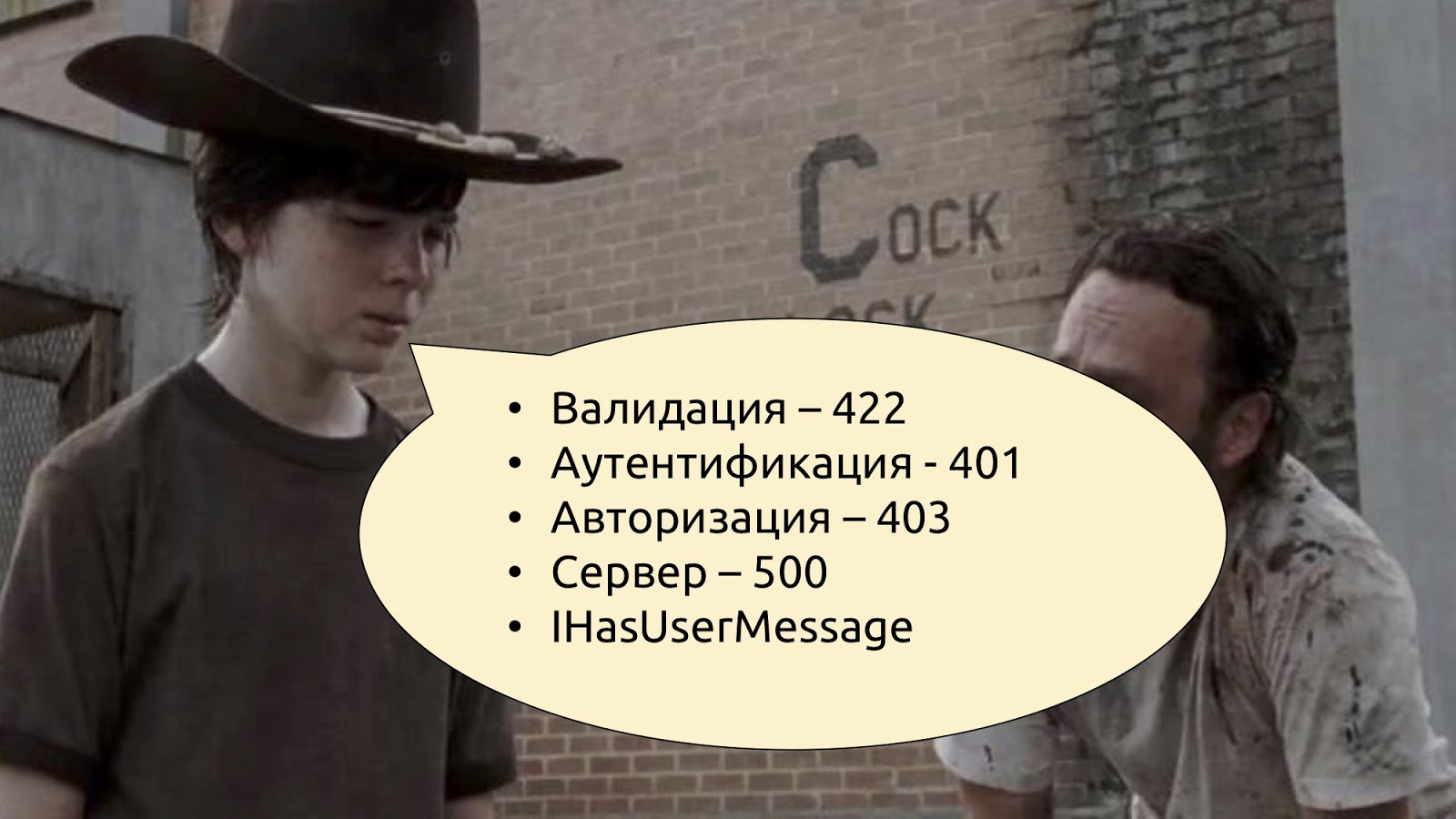

Instead, you can use the built-in normal tools that are documented, that everyone knows and that will not cause developers cognitive dissonance. ASP.NET has global Handler Exceptions.

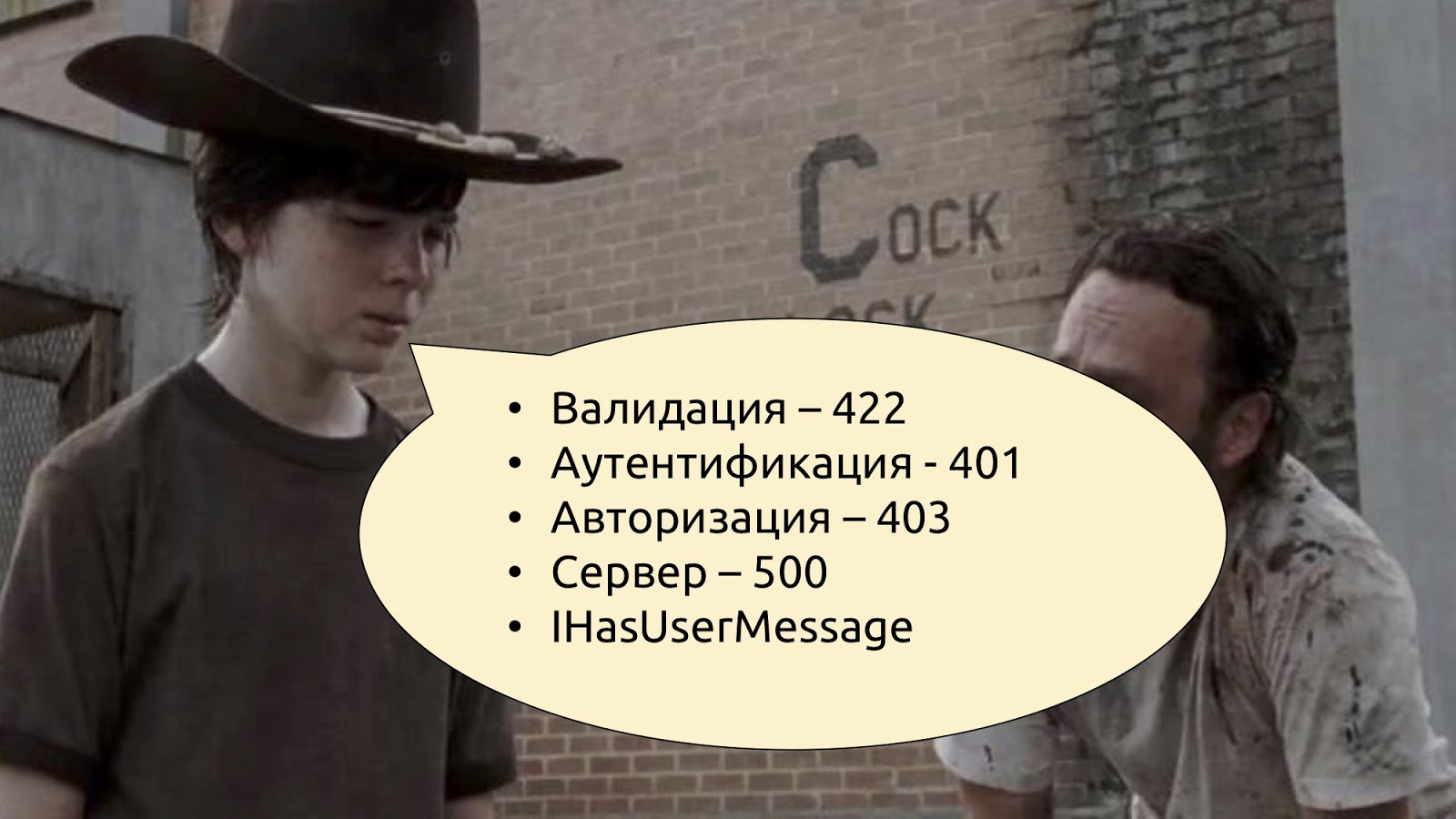

We know that if there are any problems with validation, you need to return code 400 or 422 (Unprocessable Entity). If the problem is with authentication and authorization, there are 401 and 403. If something went wrong, then something went wrong. And if something went wrong and you want to tell the user exactly what, define your Exception type, say it is IHasUserMessage, declare Message getter on this interface and just check: if this interface is implemented, then you can take the message from Exception and pass it to the JSON user. If this interface is not implemented, it means that there is some kind of system error there, and we will simply say to the users that something went wrong, we are already engaged, we all know - well, as usual.

With this we finish with the teams and see what we have in the Read-stack. As for the request, validation, response, it’s about the same thing, we will not stop separately. There may be an additional cache here, but in general, there are also no major problems with the cache.

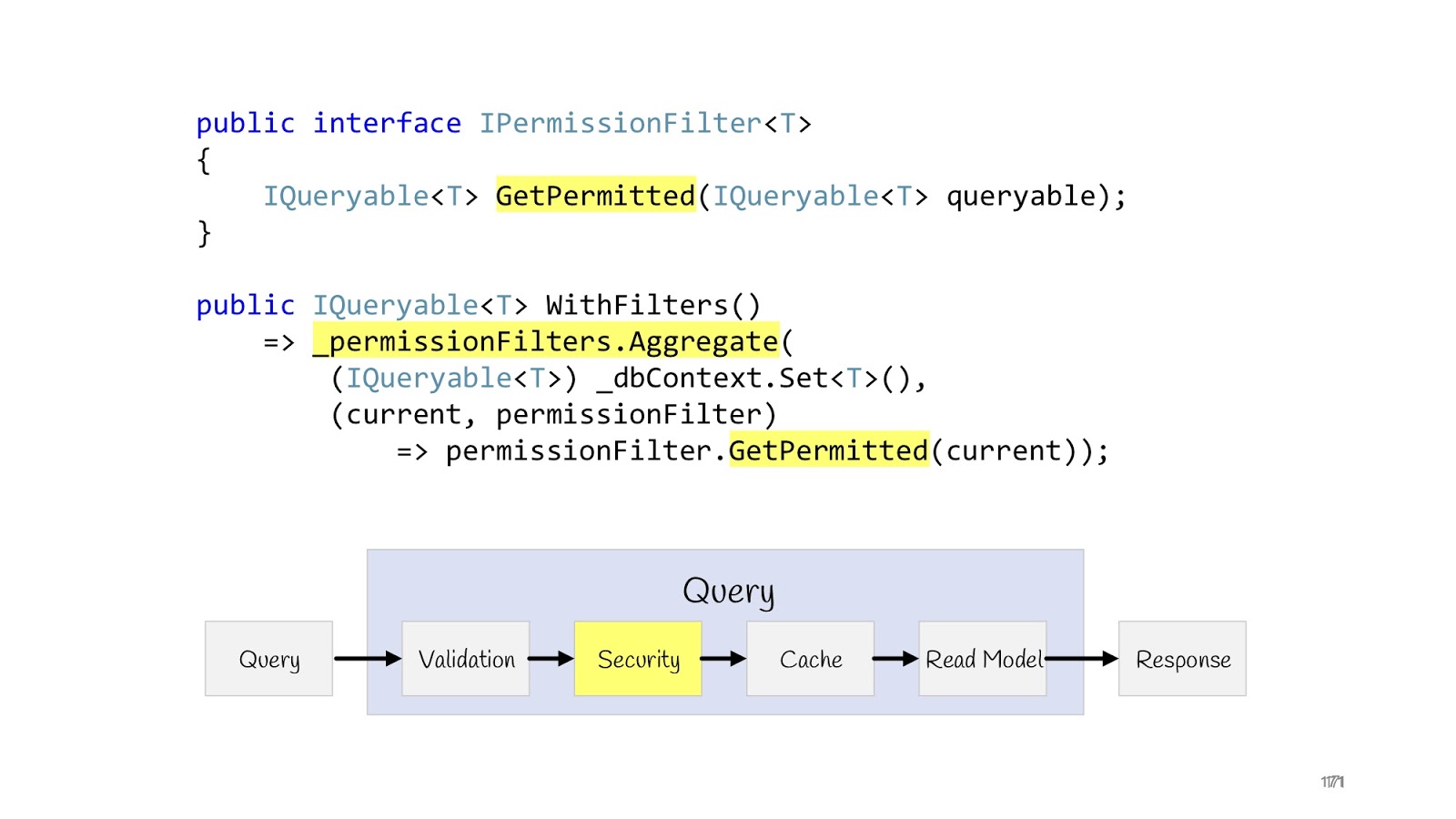

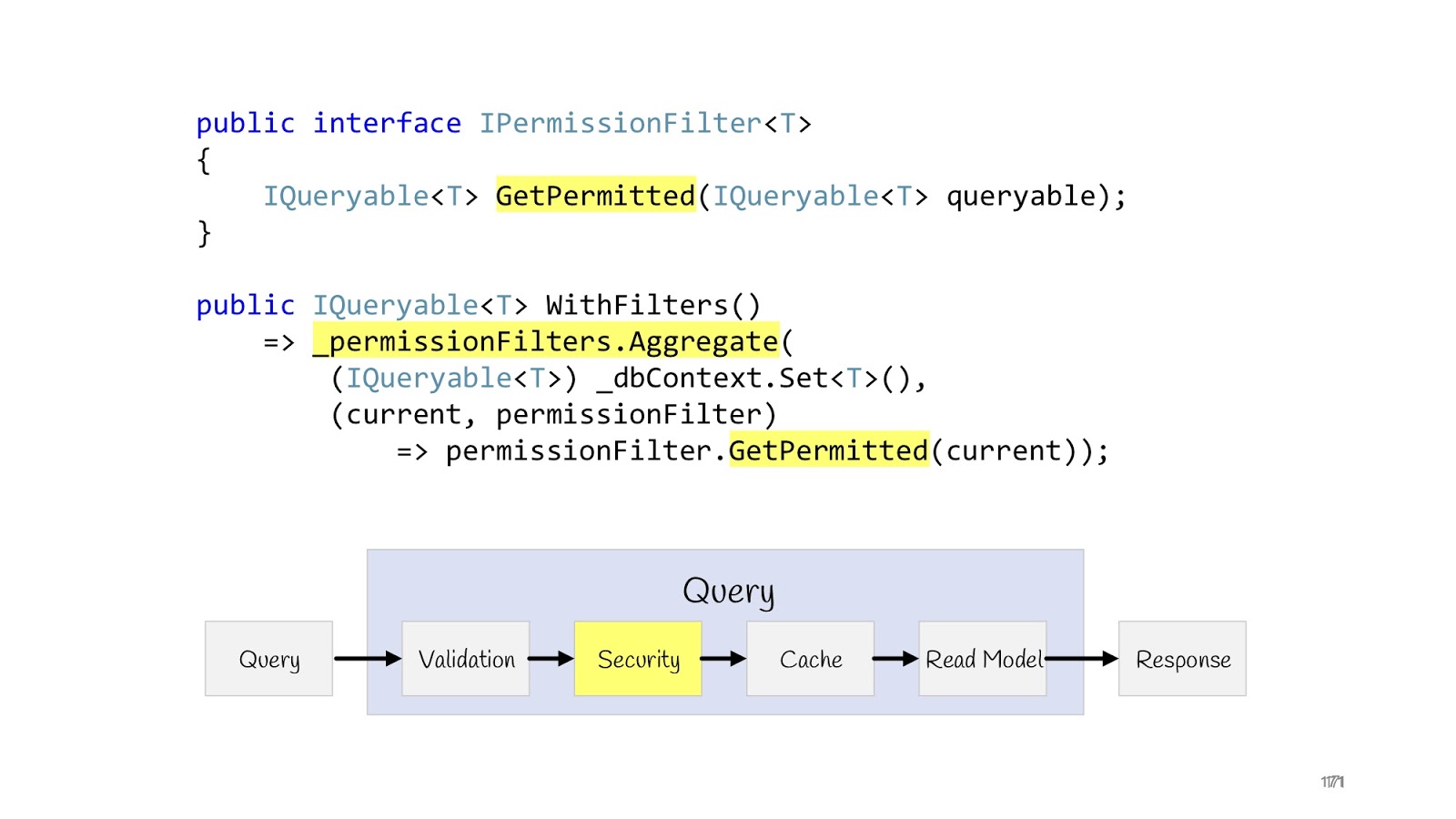

We’ll look better at security checks. There may also be the same Security decorator, which checks whether this query can be made or not:

But there is another case where we print not one record, but print lists, and some users have to display a complete list (for example, some superadminators), and other users we have to display limited lists, the third - limited software. to another, well, and as is often the case in corporate applications, access rights can be extremely sophisticated, so you need to be sure that these lists are not crawled by data that these users do not intend.

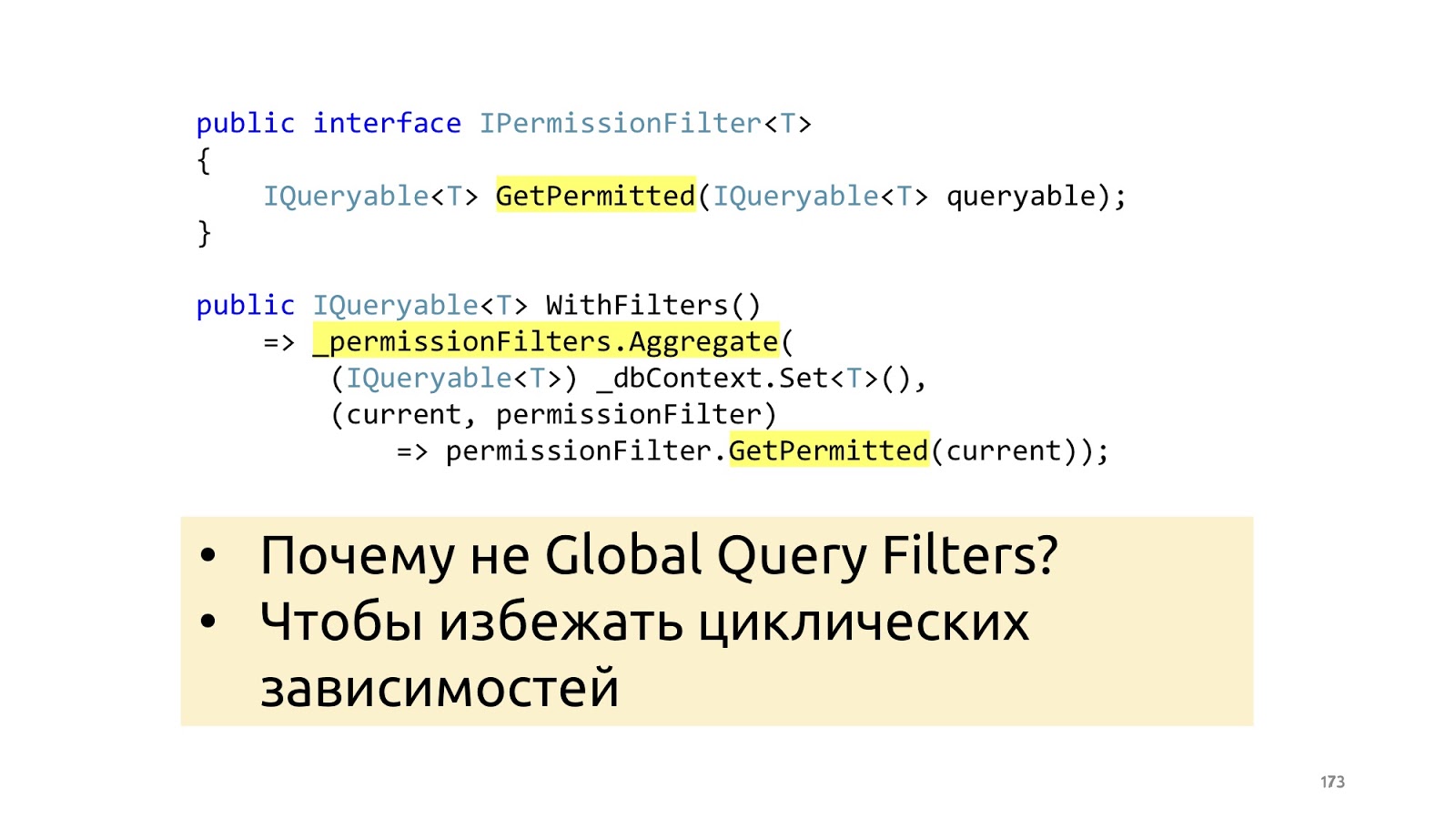

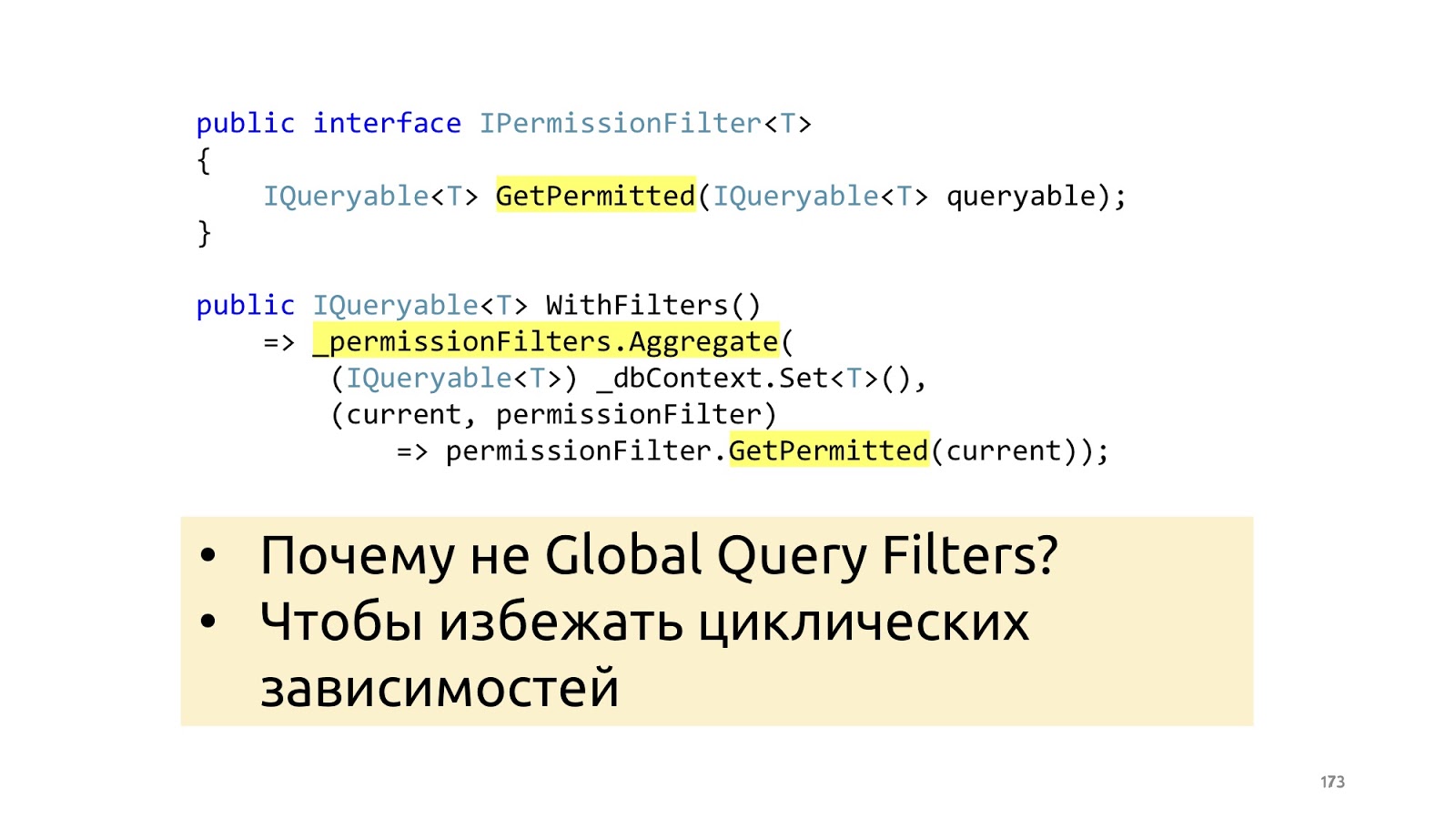

The problem is solved quite simply . We can add an interface (IPermissionFilter) to which the original queryable comes in and returns a queryable. The difference is that by that queryable, which is returned, we have already hung up additional where clauses, checked the current user and said: “Here, return to this user only the data that ...” - and then all your logic related to permission'ami . Again, if you have two programmers, one programmer goes to write permissions, he knows that he just needs to write a lot of permissionFilters and check that they work correctly for all entities. And other programmers do not know anything about permission'y, in the list just always pass the correct data, that's all. Because they receive at the entrance no longer the original queryable from dbContext, but limited by filters. This permissionFilter also has a layout property, we can add and apply all permissionFilters. As a result, we obtain the resulting permissionFilter, which will limit the selection of data to the maximum, taking into account all the conditions that are suitable for this entity.

Why not do it with built-in ORM tools, for example, Global Filters in entity framework? Again, in order not to dwell on all sorts of cyclical dependencies and not to drag in the context any additional story about your business layer.

It remains to look at the model of reading. In the CQRS paradigm, the domain model in the reading stack is not used; instead, we simply immediately form the Dto that the browser needs at the moment.

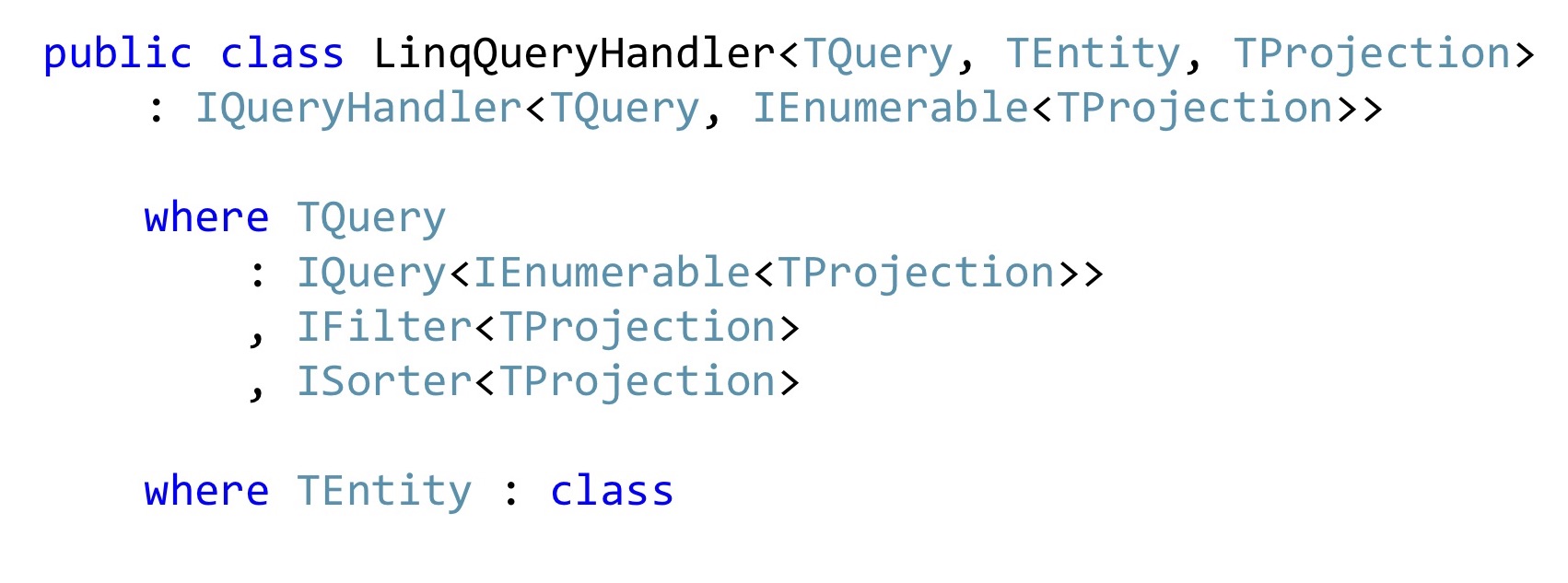

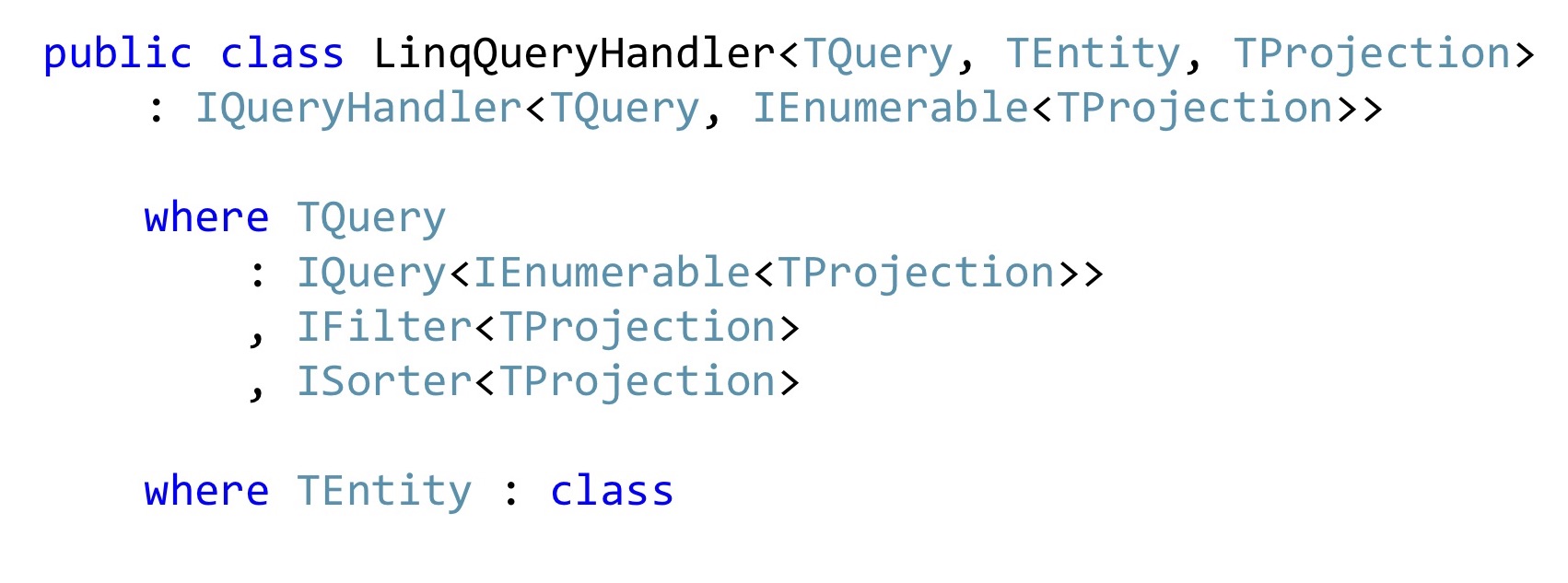

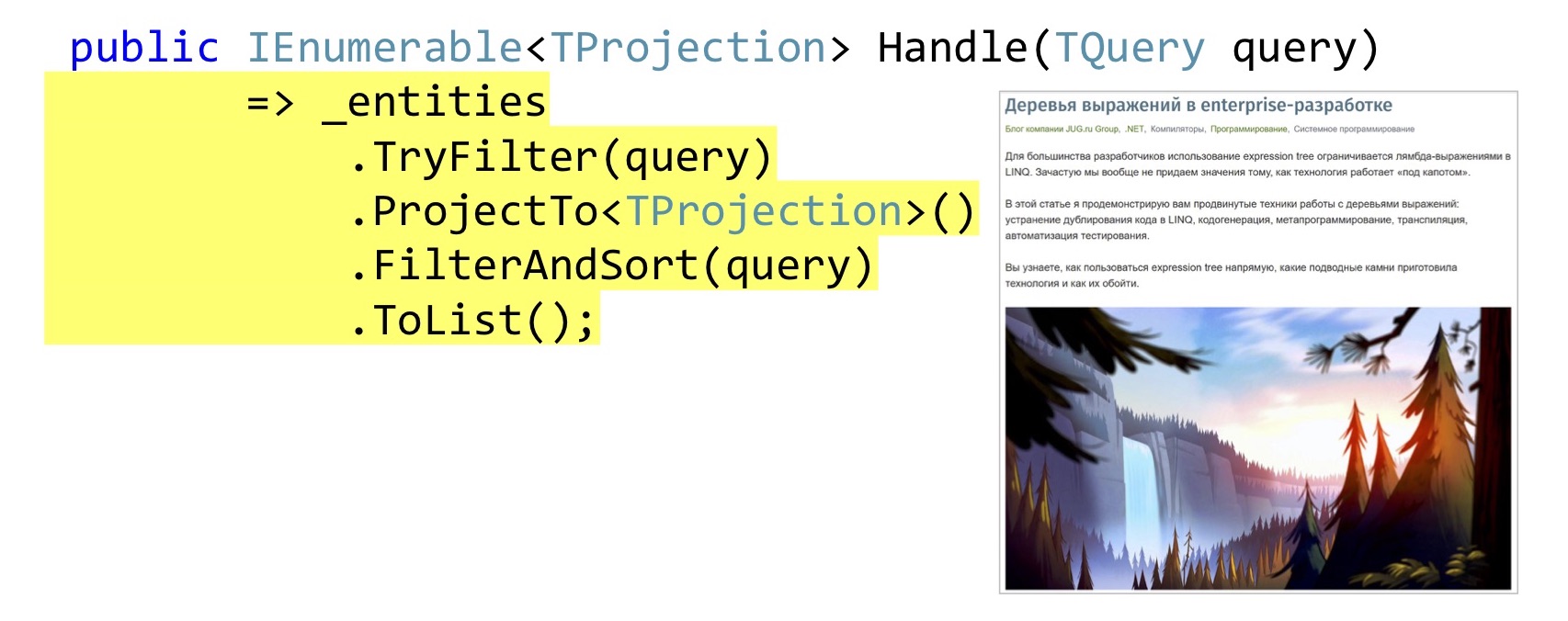

If we are writing in C #, then most likely we are using LINQ, if there are not only some monstrous performance requirements, and if they are, then you may not have a corporate application. In general, this task can be solved once and for all with LinqQueryHandler. There is a pretty scary constraint on a generic: this is Query, which returns a list of projections, and it can still filter these projections and sort these projections. It also works only with some types of entities and knows how to convert these entities to projections and return the list of such projections already in the form of Dto to the browser.

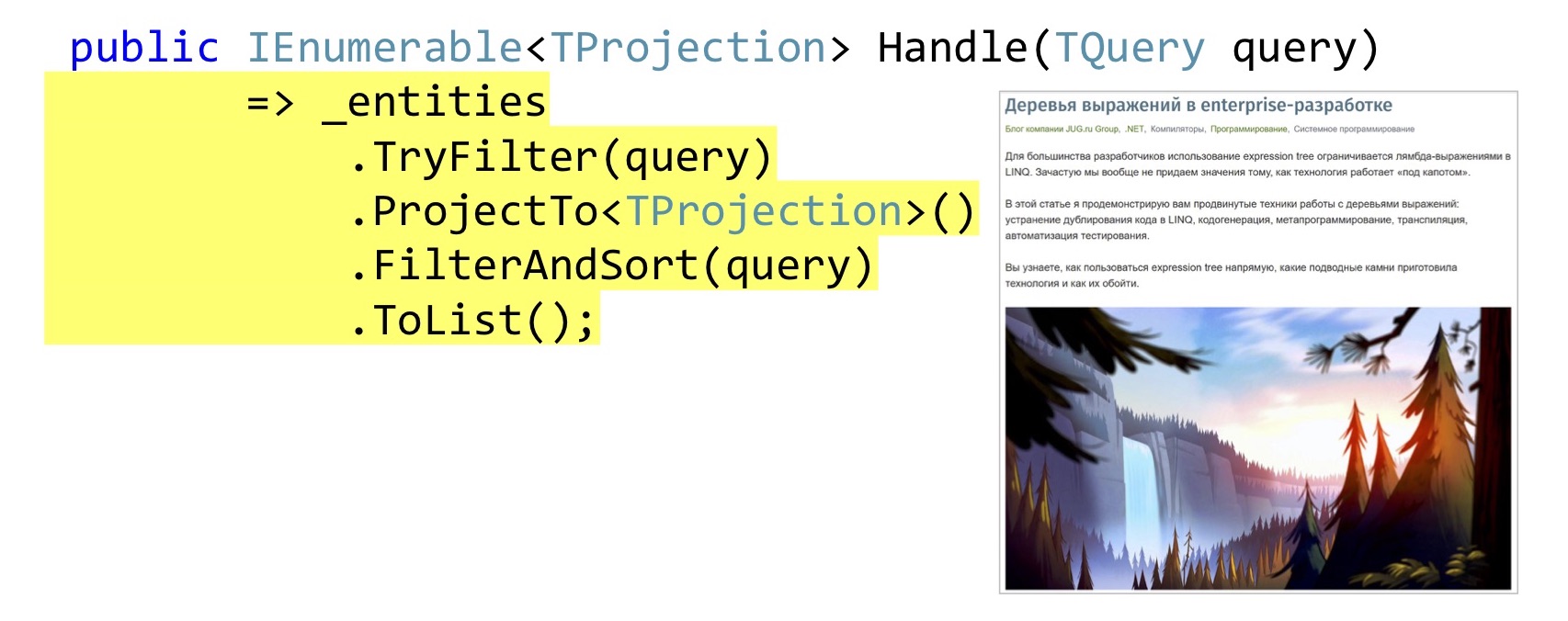

The implementation of the Handle method can be quite simple. Just in case, let's check if this TQuery filter implements the initial entity. Further we do a projection, it is AutoMapper's queryable extension. If someone still does not know, AutoMapper can build projections in LINQ, that is, those who will build the Select method, and not map it in memory.

Then we apply filtering, sorting and give it all to the browser. , DotNext, , , , , , expression' , .

Moving on. , DotNext', — SQL. Select , , , queryable- .

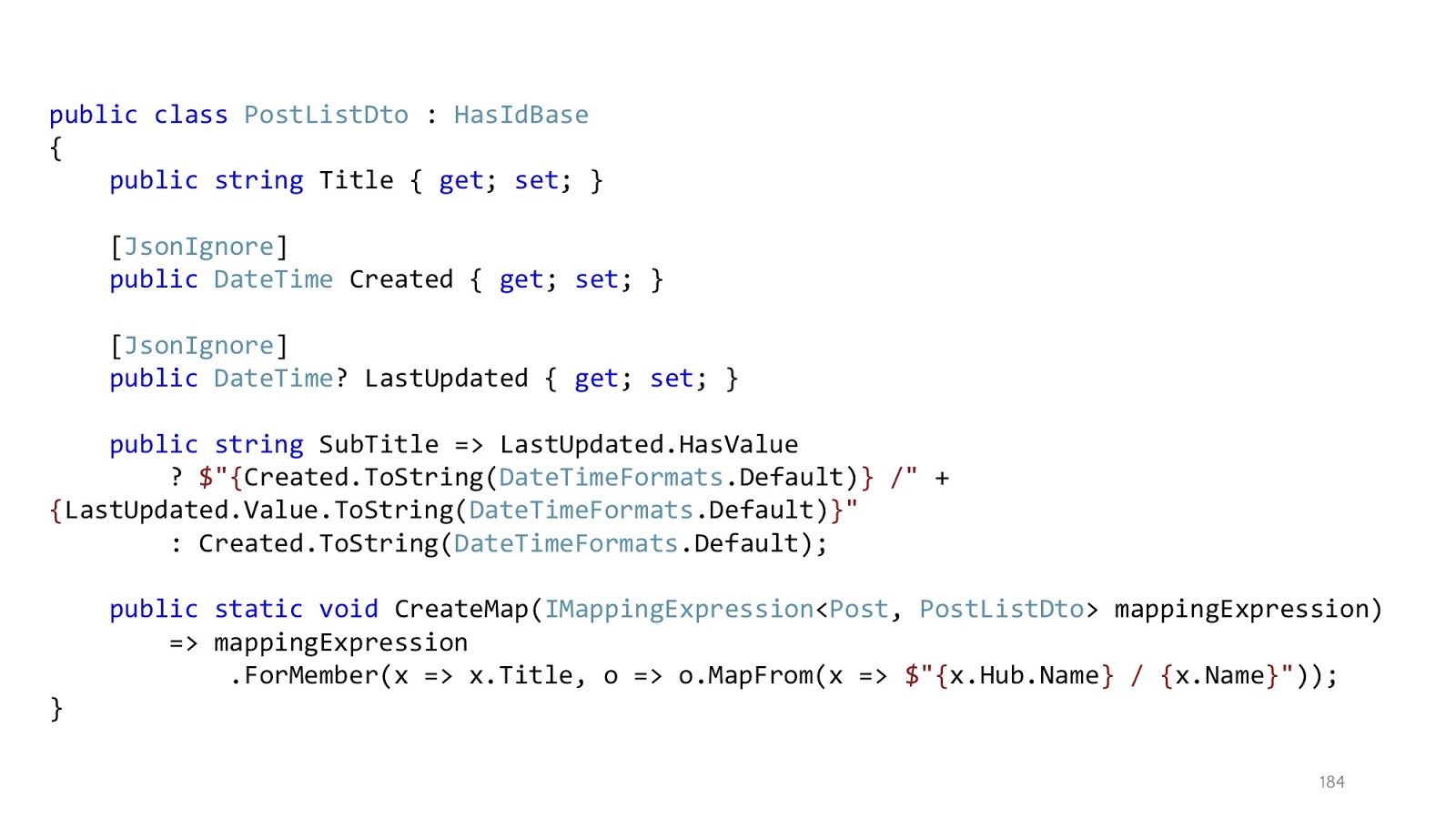

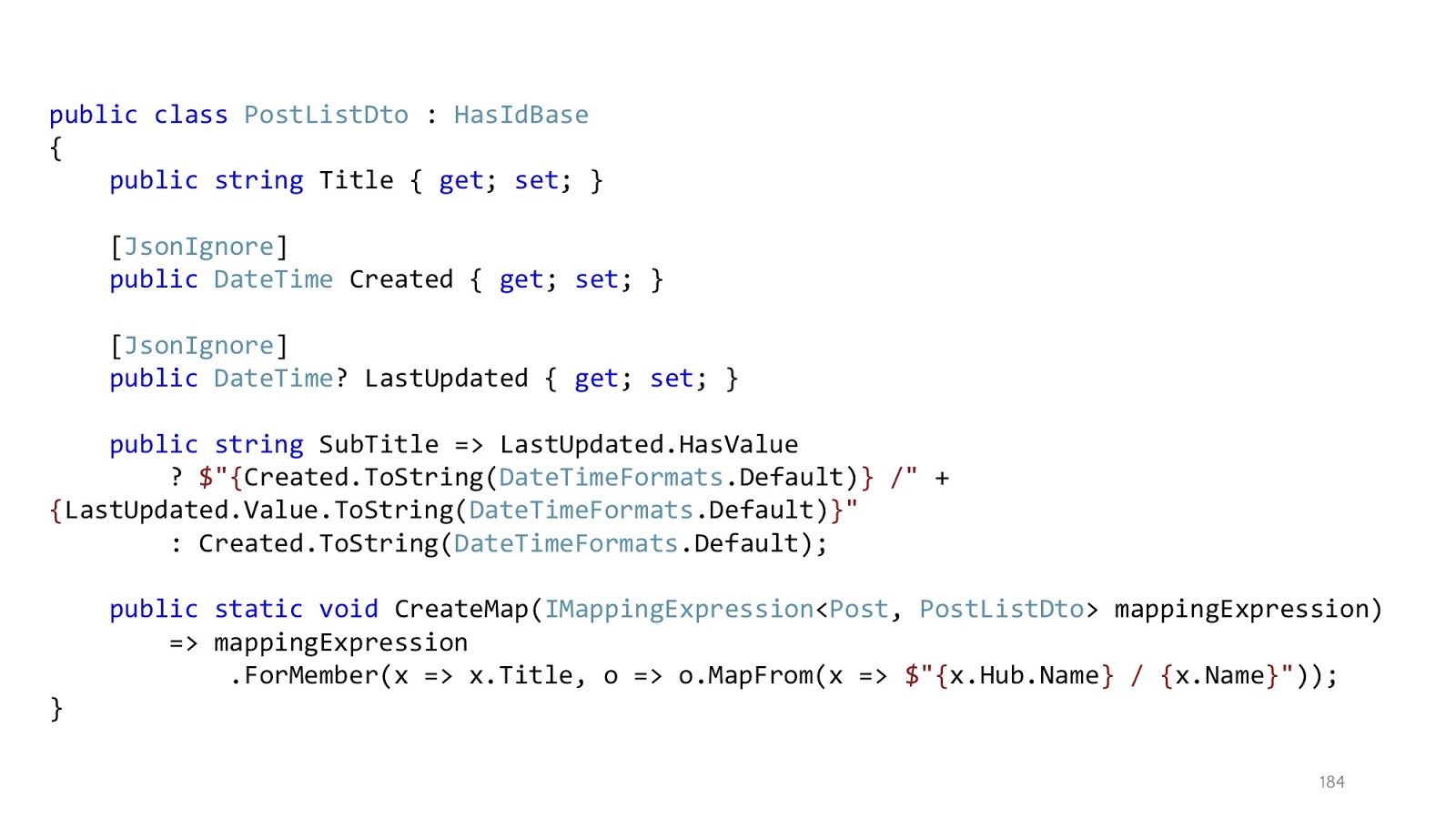

, . , Title, Title , . , . SubTitle, , , - , queryable- . , .

, . , , . , , . «JsonIgnore», . , , Dto. , , . JSON, , Created LastUpdated , SubTitle — , . , , , , , . , - .

. , -, , . , pipeline, . — , , . , SaveChanges, Query SaveChanges. , , , NuGet, .

. , - , , . , , , , , — . , , : « », — . .

, ?

- . .

, , , . MediatR , . , , — , MediatR pipeline behaviour. , Request/Response, RequestHandler' . Simple Injector, — .

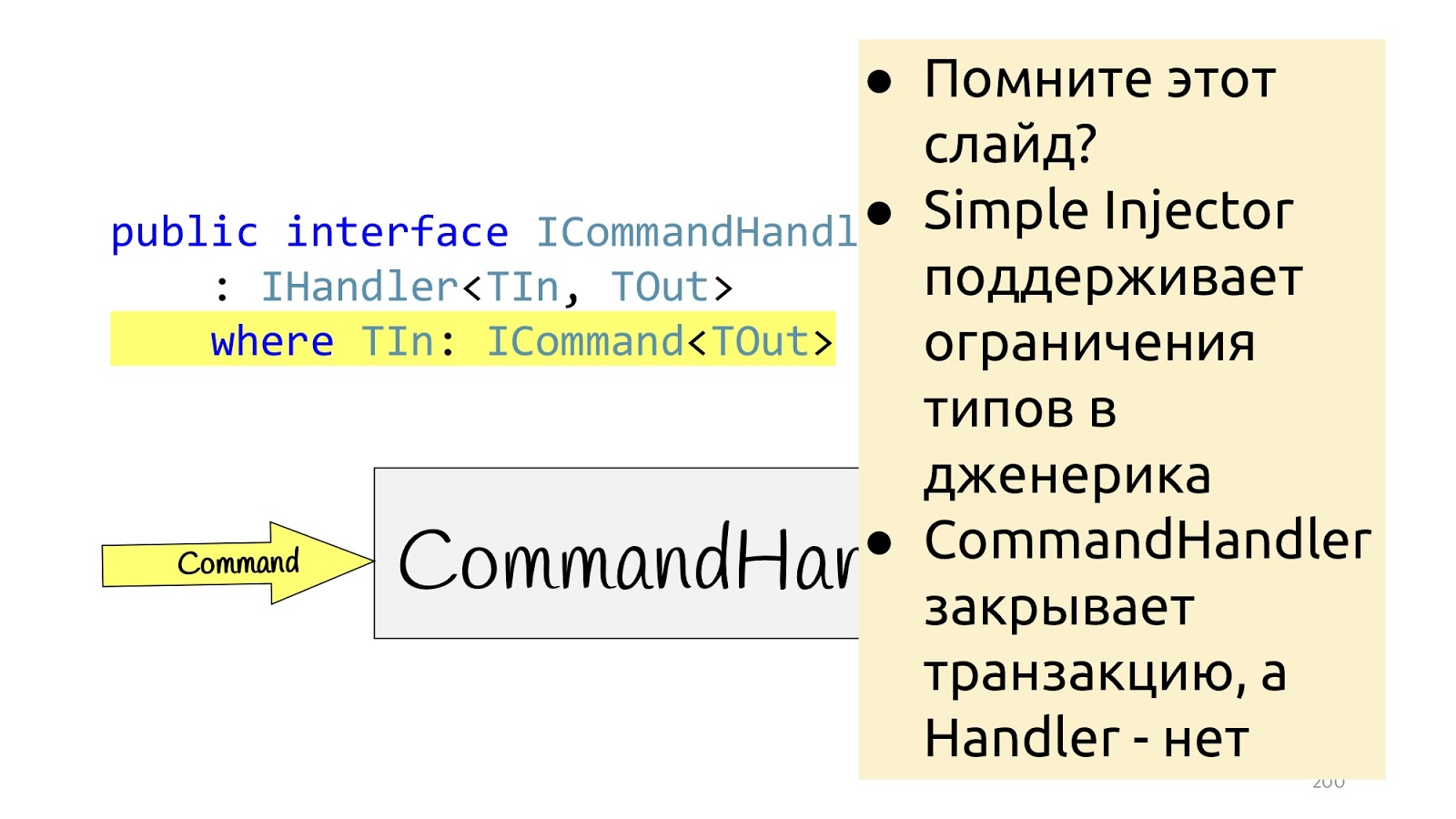

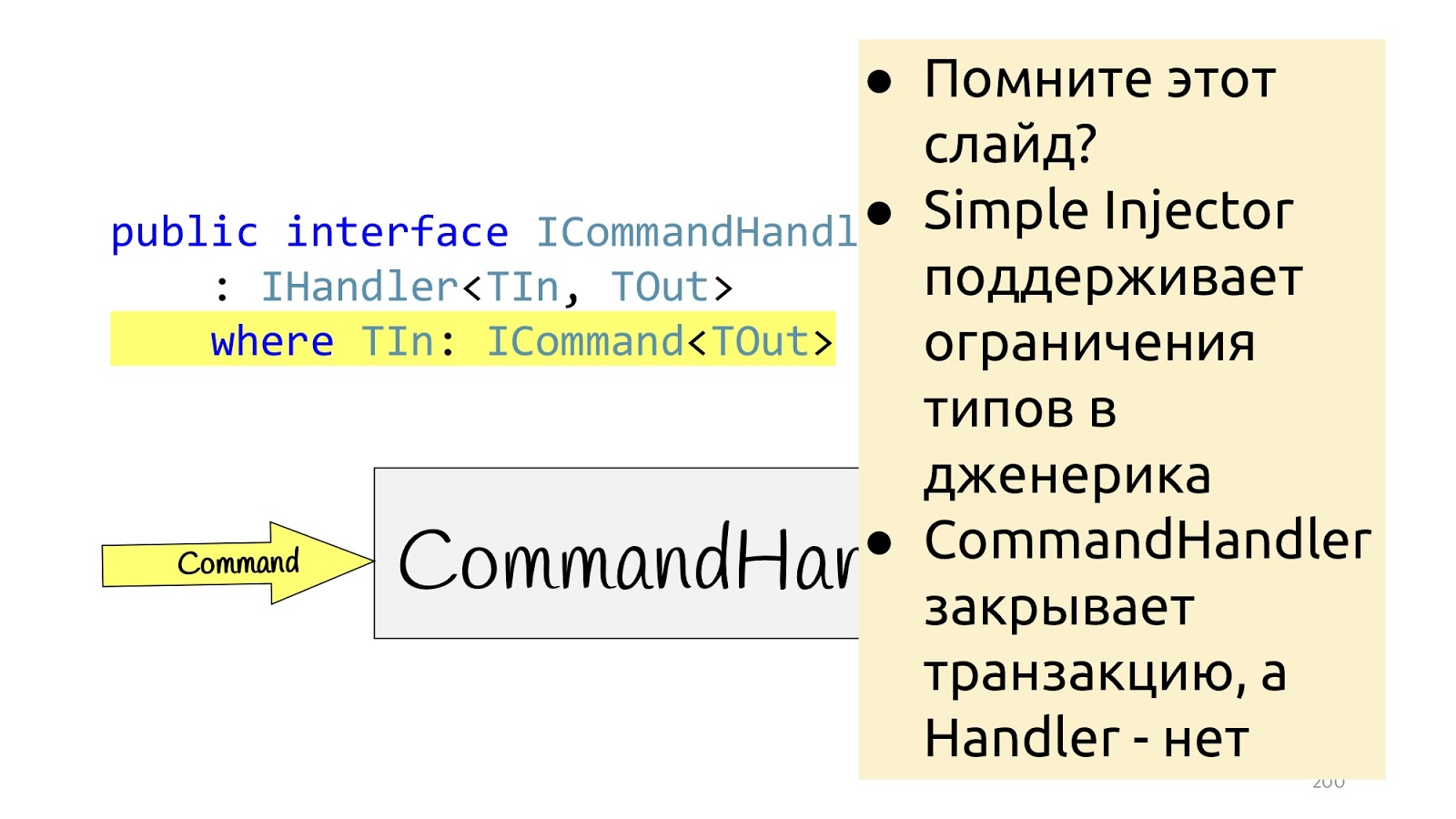

, , , , TIn: ICommand.

Simple Injector' constraint' . , , , constraint', Handler', constraint. , constraint ICommand, SaveChanges constraint' ICommand, Simple Injector , constraint' , Handler'. , , , .

? Simple Injector MeriatR — , , Autofac', -, , , . , .

, «».

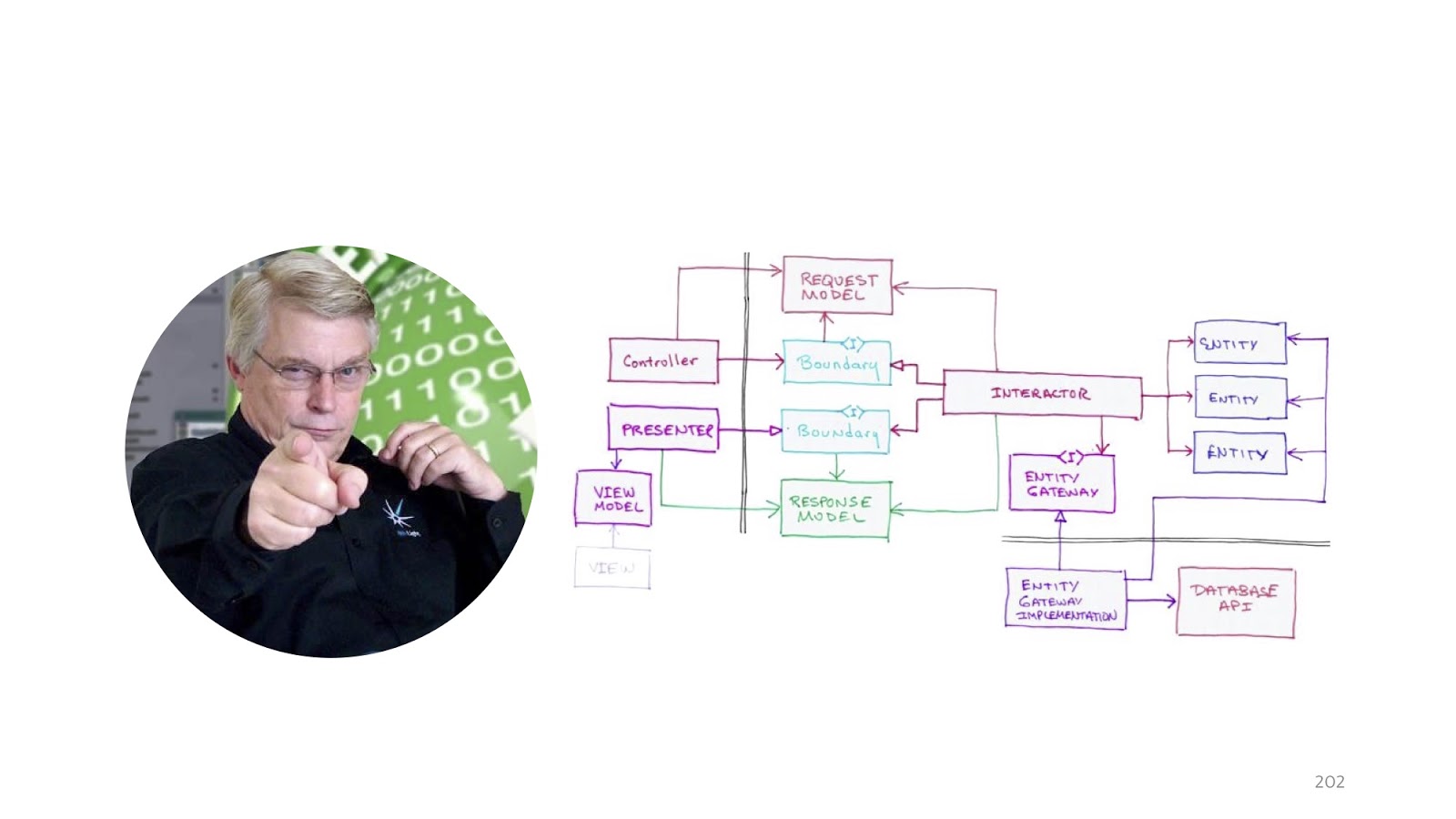

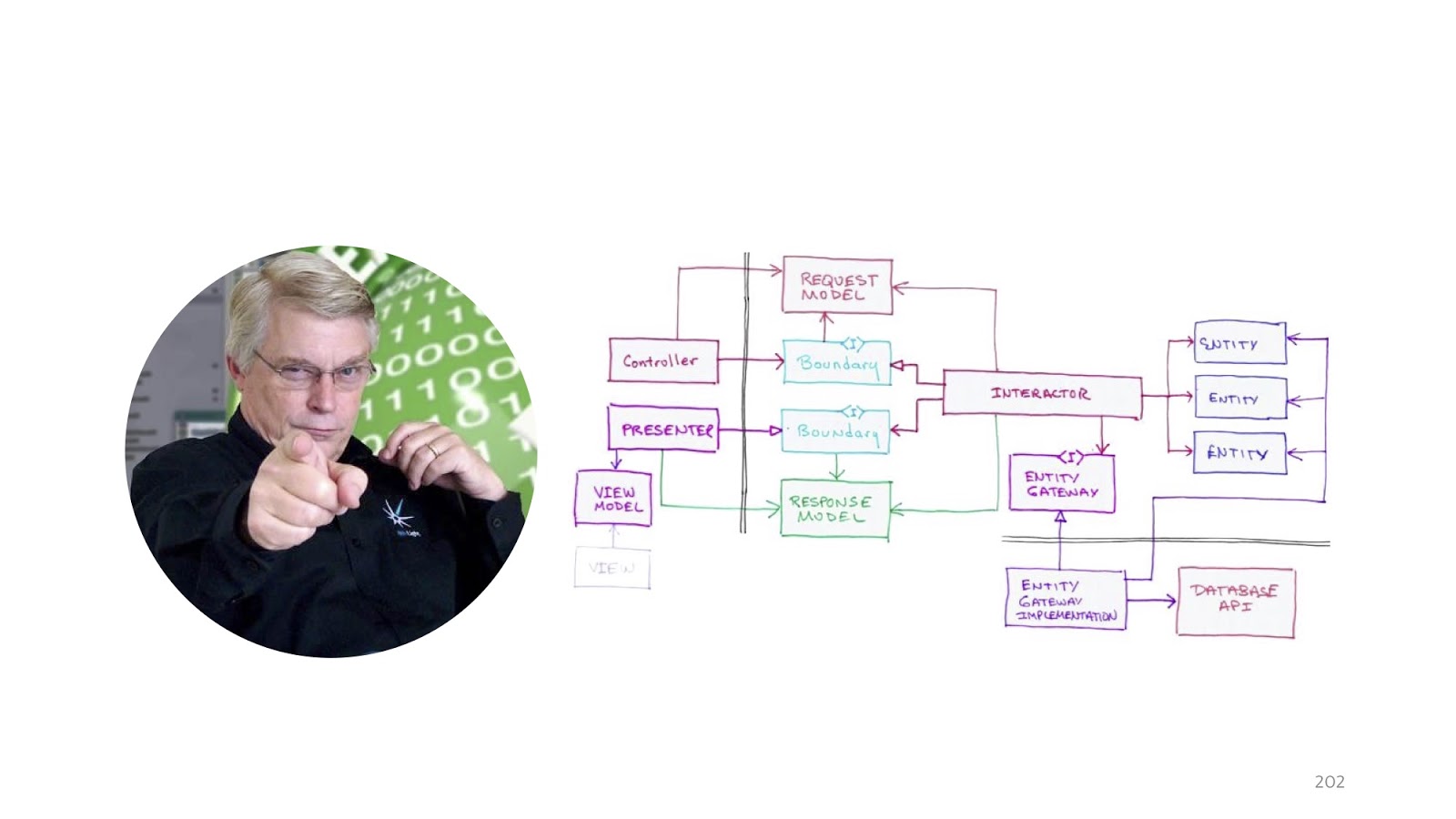

, «Clean architecture». .

- - , MVC, , .

, , , Angular, , , , . , : « — MVC-», : « Features, : , Blog - Import, - ».

, , , , MVC-, , - , . MVC . , , — . .

- , - -, .

-, , . , . , - , User Service, pull request', , User Service , . , - , - , . - , .

. , . , , , . , , , , , , , - . , ( , ), , «Delete»: , , . .

— «», , , , . , : , , , . , . , , . , , .

: . « », : , , . , , , , , , , . , . , - pull request , — , — - , . VCS : - , ? , - , , .

, , , . : . , . , , , , . , , , . , , . « », , . , , — , , .

: , - , . . - , , , , . - , - , , , , . .

. , IHandler . .

IHandler ICommandHandler IQueryHandler , . , , . , CommandHandler, CommandHandler', .

Why is that? , Query , Query — . , , , Hander, CommandHandler QueryHandler, - use case, .

— , , , , : , .

, . , . , -.

C# 8, nullable reference type . , , , , .

ChangeTracker' ORM.

Exception' — , F#, C#. , - , - , . , , Exception', , LINQ, , , , , , Dapper - , , , .NET.

, LINQ, , permission' — . , , - , , . , — .

. :

— . . — «Domain Modeling Made Functional», F#, F#, , , , , . C# , , Exception'.

, , — «Entity Framework Core In Action». , Entity Framework, , DDD ORM, , ORM DDD .

In this article we will look at the criteria for good code and bad code, how and what to measure. We will see an overview of typical tasks and approaches, analyze the pros and cons. At the end there will be recommendations and best practices for designing web applications.

This article is a transcript of my report from the conference DotNext 2018 Moscow. In addition to the text, under the cut there is a video and a link to the slides.

')

Slides and report page on the site .Briefly about me: I am from Kazan, I work in the “Hightech Group” company. We develop business software. Recently, I have been teaching a course called Corporate Software Development at the Kazan Federal University. From time to time I still write articles on Habr about engineering practices, about developing corporate software.

As you, probably, could guess, today I will talk about the development of corporate software, namely, how to structure modern web applications:

- criteria

- a brief history of the development of architectural thought (what was, what has become, what problems exist);

- review of the flaws of the classical puff architecture

- decision

- step-by-step analysis of sales without immersion in details

- totals.

Criteria

We formulate the criteria. I really don't like it when the talk about design is conducted in the style of "my kung fu is stronger than your kung fu." Business has, in principle, one specific criterion, which is called "money." Everyone knows that time is money, so these two components are most often the most important.

So, the criteria. In principle, business most often asks us "as many features as possible per unit of time", but with one nuance - these features should work. And the first stage at which it may break is the review code. That is, it seems, the programmer said: "I will do it in three hours." Three hours have passed, the review has come to the code, and the team leader says: "Oh, no, redo it." There are three more - and how many iterations the review code has passed, you need to multiply three hours by that.

The next moment is the return from the acceptance test phase. Same. If the feature does not work, then it is not done, these three hours stretch for a week, two - well, as usual there. The last criterion is the number of regressions and bugs, which nevertheless, despite testing and acceptance, were passed in production. This is also very bad. There is one problem with this criterion. It is difficult to track, because the connection between the fact that we have launched something into the repository, and the fact that after two weeks something broke, it can be difficult to track. But, nevertheless, it is possible.

Architecture development

Long ago, when programmers were just starting to write programs, there was still no architecture, and everyone did everything the way they like.

Therefore, such an architectural style was obtained. We call it “noodles”, abroad they say “spaghetti code”. Everything is connected with everything: we change something at point A - at point B it breaks, to understand what is connected with what is absolutely impossible. Naturally, the programmers quickly realized that this would not work, and we needed to do some kind of structure, and decided that some layers would help us. Now, if you imagine that minced meat is a code, and lasagna are such layers, here is an illustration of a layered architecture. The stuffing was still stuffing, but now the stuffing from layer No. 1 cannot just go and talk to the stuffing from layer No. 2. We gave the code some form: even in the picture you can see that the lasagna is more framed.

Everyone is familiar with the classical layered architecture : there is a UI, there is a business logic and there is a Data Access layer. There are still all sorts of services, facades and layers, named after the architect who left the company, there can be an unlimited number of them.

The next stage was the so-called onion architecture . It would seem a huge difference: before that there was a square, and then there were circles. It seems that is completely different.

Not really. The only difference is that somewhere at this time the principles of SOLID were formulated, and it turned out that in classical onion there is a problem with dependency inversion, because the abstract domain code for some reason depends on the implementation, on Data Access, therefore Data Access decided to deploy , and made Data Access dependent on the domain.

I practiced drawing and drawing onion architecture here, but not classically with “little rings”. I got something between a polygon and circles. I did this to simply show that if you came across the words “onion,” “hexagonal,” or “ports and adapters,” they are all the same. The point is that the domain in the center, it is wrapped in services, they can be domain or application-services, as you like. And the outside world in the form of UI, tests and infrastructure, where the DAL moved - they communicate with the domain through this service layer.

A simple example. Email update

Let's see how in such a paradigm will look like a simple use case - update the user's email.

We need to send a request, perform a validation, update the value in the database, send a notification to the new email: “Everything is fine, you changed the email, we know everything is fine”, and to answer the browser “200” everything is okay.

The code might look something like this. Here we have standard ASP.NET MVC validation, there is an ORM to read and update data, and there is some email-sender that sends a notification. It seems like everything is fine, yes? One nuance - in an ideal world.

In the real world, the situation is slightly different. The point is to add authorization, error checking, formatting, logging and profiling. All this has nothing to do with our use case, but it should be. And that little piece of code has become big and scary: with a lot of nesting, with a lot of code, with the fact that it is hard to read, and most importantly, the infrastructure code is larger than the domain code.

“Where are the services?” You will say. I wrote all the logic in the controllers. Of course, this is a problem, now I will add services, and everything will be fine.

We add services, and it really gets better, because instead of a big footcloth, we got one small beautiful line.

Got better? It has become! And now we can reuse this method in different controllers. The result is obvious. Let's look at the implementation of this method.

But here everything is not so good. This code has not gone away. All the same, we just transferred to the services. We decided not to solve the problem, but simply disguise it and move it to another place. That's all.

In addition, there are some other issues. Should we do validation in the controller or here? Well, sort of, in the controller. And if you need to go to the database and see what such an ID is or that there is no other user with such an email? Hmm, well, then in the service. But error handling here? This error handling is probably here, and the error handling that will be answered by the browser in the controller. And the SaveChanges method, is it in service or do I need to transfer it to the controller? It can be both so and so, because if the service is called one, it is more logical to call in the service, and if you have three controller methods in the controller that need to be called, then you need to call it outside of these services so that there is one transaction. These are the thoughts that suggest that maybe the layers do not solve any problems.

And this idea occurred to more than one person. If you google, at least three of these honorable husbands write about the same thing. From top to bottom: Stephen .NET Junkie (unfortunately, I don’t know his last name, because it doesn’t appear anywhere on the Internet) by IoC container Simple Injector . Next, Jimmy Bogard - the author of AutoMapper . And below is Scott Vlashin, the author of the site “F # for fun and profit” .

All these people talk about the same thing and offer to build applications not on the basis of layers, but on the basis of use options, that is, the requirements for which the business asks us. Accordingly, the use case in C # can be defined using the IHandler interface. It has input values, output values, and there is the method itself that actually executes this use case.

And inside this method there can be either a domain model, or any denormalized model for reading, maybe with the help of Dapper or with the help of Elastic Search, if you need to look for something, and maybe you have Legacy -system with stored procedures - no problem, as well as network requests - well, in general, anything you might need. But if there are no layers, how to be?

First, let's get rid of UserService. Remove the method and create a class. And also remove, and remove again. And then take and remove the class.

Let's think, are these classes equivalent or not? The GetUser class returns data and does not change anything on the server. This, for example, about the request "Give me the user ID." The UpdateEmail and BanUser classes return the result of the operation and change state. For example, when we say to the server: “Please change the state, you need to change something here.”

Look at the HTTP protocol. There is a GET method that, according to the HTTP protocol specification, should return data and not change the state of the server.

And there are other methods that can change the state of the server and return the result of the operation.

The CQRS paradigm seems to have been specially created for the HTTP protocol. Query is GET operations, and commands are PUT, POST, DELETE — you don’t need to invent anything.

We will define our Handler and define additional interfaces. IQueryHandler, which differs only in that we hang constraint that the type of input values is IQuery. IQuery is a marker interface, there is nothing in it except for this generic. We need a generic in order to place constraint in the QueryHandler, and now, when declaring the QueryHandler, we cannot transfer there not Query, but passing the Query object there, we know its return value. This is convenient if you have some interfaces, so that you do not look for their implementation in the code, and again not to make a mess of it. You write IQueryHandler, write the implementation there, and you cannot substitute another type of return value in TOut. It just won't compile. Thus, it is immediately apparent which input values correspond to which input data.

Absolutely the same situation for the CommandHandler with one exception: this generic will be needed for one more trick, which we will see a little further.

Handler implementation

Handlers, we announced what kind of implementation they have?

Any problem, yes? It seems that something did not help.

Decorators rush to the rescue

But it did not help, because we are still in the middle of the road, we still need to refine a little, and this time we will need to use the decorator pattern, namely, its remarkable layout feature. The decorator can be wrapped in the decorator, wrapped in the decorator, wrapped in the decorator - continue until you get bored.

Then everything will look like this: there is an input Dto, it enters the first decorator, the second, the third, then we go into the Handler and also exit it, go through all the decorators and return Dto in the browser. We declare an abstract base class in order to later inherit, the body of Handler is passed to the constructor, and we declare the abstract method Handle, in which the additional logic of the decorators will be hung.

Now with the help of decorators you can build a pipeline. Let's start with the teams. What did we have? Input values, validation, access control, the logic itself, some events that occur as a result of this logic, and return values.

Let's start with validation. We announce the decorator. IEnumerable comes from the constructor of this validator of type T validators. We execute them all, check if validation fails and the return type is

IEnumerable<validationresult> , then we can return it because the types are the same. And if this is some other Hander, well then you have to throw Exception, because there is no result here, the type of the other return value.

The next step is Security. Also we declare the decorator, we do the CheckPermission method, we check. If suddenly something went wrong, everything, we do not continue. Now, after we have carried out all the checks and are sure that everything is fine, we can execute our logic.

Obsession with primitives

Before showing the implementation of logic, I want to start a little bit earlier, namely, with the input values that come there.

Now, if we single out such a class this way, then most often it can look something like this. At least this is the code that I see in my daily work.

In order for validation to work, we add here some attributes that tell us what kind of validation it is. This will help in terms of data structure, but will not help with such validation as checking values in the database. Here, just EmailAddress, it is not clear how, where to check how to use these attributes in order to go to the database. Instead of attributes, you can go to special types, then this problem will be solved.

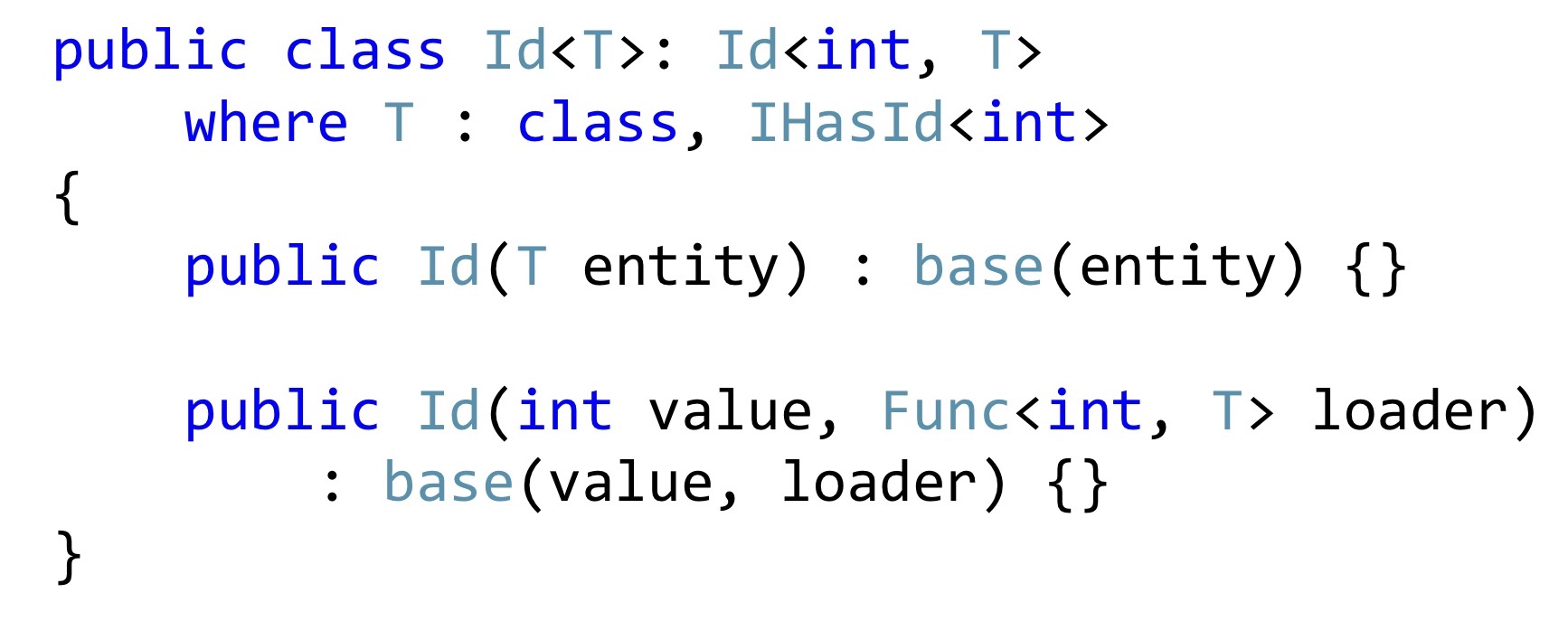

Instead of the primitive

int declare such a type Id, which has a generic, that this is a certain entity with an int key. And in the constructor, we either pass this entity, or pass it to Id, but at the same time we have to pass a function that, by Id, can take and return, check, null is there or not null.

We do the same with Email. Let's convert all Emails to the bottom line so that everything looks the same to us. Next we take the Email attribute, declare it as static for compatibility with ASP.NET validation and here we just call it. That is, so too can be done. In order for the ASP.NET infrastructure to pick up all this, you will have to slightly change the serialization and / or ModelBinding. The code is not very much there, it is relatively simple, so I will not stop there.

After these changes, instead of primitive types, we have specialized types here: Id and Email. And after these ModelBinder and updated deserializer have worked, we know for sure that these values are correct, including the values in the database. "Invariants"

The next point on which I would like to dwell is the state of invariants in the class, because quite often the anemic model is used, in which there is just a class, many getters-setters, it is completely incomprehensible how they should work together. We work with complex business logic, so it is important for us that the code be self-documenting. Instead, it is better to declare a real constructor with an empty ORM, you can declare it protected so that programmers in their application code cannot call it, and ORM can. Here we are no longer sending a primitive type, but an Email type, it is already exactly correct, if it is null, we still throw Exception. You can use any Fody, PostSharp, but soon C # 8 comes out. Accordingly, there will be a Non-nullable reference type, and it is better to wait for its support in the language. The next moment, if we want to change the name and surname, most likely we want to change them together, so there must be an appropriate public method that changes them together.

In this public method, we also verify that the length of these strings corresponds to what we use in the database. And if something is wrong, then stop execution. Here I use the same trick. I declare a special attribute and just call it in the application code.

Moreover, such attributes can be reused in Dto. Now, if I want to change the name and surname, I may have such a command to change. Is it worth it to add a special constructor here? It seems to be worth it. It will become better, no one will change these values, will not break them, they will be exactly the right ones.

Not really, really. The fact is that Dto is not really objects at all. This is such a dictionary, in which we shove deserialized data. That is, they pretend to be objects, of course, but they have only one responsibility - to be serialized and deserialized. If we try to deal with this structure, we will begin to declare some ModelBinder with designers, something to do, this is incredibly tedious, and, most importantly, it will break with new outputs of new frameworks. All this is well described by Mark Simon in the article “On the boundaries of the program are not object-oriented,” if you are interested, read his post, it’s all described in detail.

In short, we have a dirty outside world, we put in the input of the check, convert it to our clean model, and then transfer it all back to serialization, to the browser, again to the dirty outside world.

Handler

After all these changes have been made, what will Hander look like?

I wrote two lines here in order to make it easier to read, but in general can be written in one. The data is exactly correct, because we have a type system, there is validation, that is, reinforced concrete correct data, we do not need to check them again. This user also exists, there is no other user with such busy email, everything can be done. However, there is still no call to the SaveChanges method, there is no notification, and there are no logs and profilers, right? Moving on.

Events

Domain events.

Perhaps, for the first time, Udi Dahan popularized this concept in his post “Domain Events - Salvation” . There he proposes to simply declare a static class with the Raise method and throw out such events. A little later, Jimmy Bogard proposed a better implementation, and it is called “A better domain events events” .

I will show the serialization of Bogard with one small change, but important. Instead of throwing out events, we can declare some list, and in those places where some reaction should take place, right inside the entity, save these events. In this case, this

email getter is also a User class, and this class, this property, does not pretend to be a property with auto wipers and setters, but really adds something to this. That is, it is a real encapsulation, not a profanation. When we change, we check that the email is different and throw away the event. This event is not going anywhere yet; we only have it in the internal list of entities.

Further, at that moment, when we call the SaveChanges method, we take a ChangeTracker, see if there are any entities that implement the interface, whether they have domain events. And if there is, let's take all these domain events and send them to some dispatcher who knows what to do with them.

The implementation of this dispatcher is a topic for a separate conversation, there are some difficulties with multiple dispatch in C #, but this is also done. With this approach, there is another unobvious advantage. Now, if we have two developers, one can write code that changes this very email, and the other can make a notification module. They are absolutely not connected with each other, they write different code, they are connected only at the level of this domain event of the same Dto class. The first developer simply throws out this class at some point, the second one reacts to it and knows that it needs to be sent to email, SMS, push notifications to the phone and all the other million notifications, taking into account all the preferences of users, which usually are.

Here is the smallest, but important note. The Jimmy article uses an overload of the SaveChanges method, and it’s better not to. And to do it better in the decorator, because if we overload the SaveChanges method and we needed dbContext in the Handler, we will get circular dependencies. You can work with this, but the solutions are slightly less convenient and a little less beautiful. Therefore, if the pipeline is built on decorators, then it makes no sense to do it differently.

Logging and profiling

The nesting of the code remained, but in the original example we had first using MiniProfiler, then try catch, then if. In total, there were three levels of nesting, now each of this level of nesting is in its decorator. And inside the decorator, who is responsible for profiling, we have only one level of nesting, the code reads perfectly. In addition, it is clear that in these decorators only one responsibility. If the decorator is responsible for logging, then he will only log, if for profiling, respectively, only profiling, everything else is in other places.

Response

After the whole pipeline has completed, we just have to take Dto and send further to the browser, serialize JSON.

But one more small-small such thing that is sometimes forgotten: Exception can happen here at every stage, and in general it is necessary to process them somehow.

I can not fail to mention here again Scott Vlashin and his report “Railway oriented programming” . Why? The original report is entirely devoted to working with errors in the F # language, how to organize the flow a little differently, and why such an approach might be preferable to using Exception. In F #, this really works very well, because F # is a functional language, and Scott uses the capabilities of a functional language.

Since, probably, most of you still write in C #, then if you write an analogue in C # , then this approach will look something like this. Instead of throwing exceptions, we declare a Result class that has a successful branch and an unsuccessful branch. Accordingly, two designers. A class can only be in one state. This class is a special case of a type-association, discriminated union from F #, but rewritten in C #, because there is no built-in support in C #.

Instead of declaring public getters, which someone may not check for null in the code, Pattern Matching is used. Again, in F # it would be embedded in the Pattern Matching language, in C # you have to write a separate method, to which we will pass one function, which knows what to do with a successful result of the operation, how to transform it further along the chain, and what is wrong. That is, no matter which branch worked for us, we have to drop it to one return result. In F #, this all works very well, because there is a functional composition, well, everything else that I have already listed. In .NET, this works somewhat worse, because once you have more than one Result, and many - and almost every method can fail for one reason or another - almost all of your resulting function types become Result, and you need them as something to combine.

The easiest way to combine them is to use LINQ , because in general LINQ does not only work with IEnumerable, if you define the SelectMany and Select methods correctly, then the C # compiler will see what you can use for these types of LINQ syntax. In general, tracing paper is obtained from Haskell do-notation or from the same Computation Expressions in F #. How should this be read? Here we have three results of the operation, and if everything is good there in all three cases, then take these results r1 + r2 + r3 and add. The type of the resulting value will also be Result, but the new Result, which we declare in Select. In general, this is even a working approach, if not for one thing.

For all other developers, as soon as you start writing such C # code, you start to look something like this. “These are bad scary Exceptions, don't write them! They are evil! It is better to write code that no one understands and cannot debug! ”

C # is not F #, it is somewhat different, there are no different concepts on the basis of which this is done, and when we are trying to pull an owl on the globe, it turns out, to put it mildly, it is unusual.

Instead, you can use the built-in normal tools that are documented, that everyone knows and that will not cause developers cognitive dissonance. ASP.NET has global Handler Exceptions.

We know that if there are any problems with validation, you need to return code 400 or 422 (Unprocessable Entity). If the problem is with authentication and authorization, there are 401 and 403. If something went wrong, then something went wrong. And if something went wrong and you want to tell the user exactly what, define your Exception type, say it is IHasUserMessage, declare Message getter on this interface and just check: if this interface is implemented, then you can take the message from Exception and pass it to the JSON user. If this interface is not implemented, it means that there is some kind of system error there, and we will simply say to the users that something went wrong, we are already engaged, we all know - well, as usual.

Query Pipeline

With this we finish with the teams and see what we have in the Read-stack. As for the request, validation, response, it’s about the same thing, we will not stop separately. There may be an additional cache here, but in general, there are also no major problems with the cache.

Security

We’ll look better at security checks. There may also be the same Security decorator, which checks whether this query can be made or not:

But there is another case where we print not one record, but print lists, and some users have to display a complete list (for example, some superadminators), and other users we have to display limited lists, the third - limited software. to another, well, and as is often the case in corporate applications, access rights can be extremely sophisticated, so you need to be sure that these lists are not crawled by data that these users do not intend.

The problem is solved quite simply . We can add an interface (IPermissionFilter) to which the original queryable comes in and returns a queryable. The difference is that by that queryable, which is returned, we have already hung up additional where clauses, checked the current user and said: “Here, return to this user only the data that ...” - and then all your logic related to permission'ami . Again, if you have two programmers, one programmer goes to write permissions, he knows that he just needs to write a lot of permissionFilters and check that they work correctly for all entities. And other programmers do not know anything about permission'y, in the list just always pass the correct data, that's all. Because they receive at the entrance no longer the original queryable from dbContext, but limited by filters. This permissionFilter also has a layout property, we can add and apply all permissionFilters. As a result, we obtain the resulting permissionFilter, which will limit the selection of data to the maximum, taking into account all the conditions that are suitable for this entity.

Why not do it with built-in ORM tools, for example, Global Filters in entity framework? Again, in order not to dwell on all sorts of cyclical dependencies and not to drag in the context any additional story about your business layer.

Query Pipeline. Read Model

It remains to look at the model of reading. In the CQRS paradigm, the domain model in the reading stack is not used; instead, we simply immediately form the Dto that the browser needs at the moment.

If we are writing in C #, then most likely we are using LINQ, if there are not only some monstrous performance requirements, and if they are, then you may not have a corporate application. In general, this task can be solved once and for all with LinqQueryHandler. There is a pretty scary constraint on a generic: this is Query, which returns a list of projections, and it can still filter these projections and sort these projections. It also works only with some types of entities and knows how to convert these entities to projections and return the list of such projections already in the form of Dto to the browser.

The implementation of the Handle method can be quite simple. Just in case, let's check if this TQuery filter implements the initial entity. Further we do a projection, it is AutoMapper's queryable extension. If someone still does not know, AutoMapper can build projections in LINQ, that is, those who will build the Select method, and not map it in memory.

Then we apply filtering, sorting and give it all to the browser. , DotNext, , , , , , expression' , .

Moving on. , DotNext', — SQL. Select , , , queryable- .

, . , Title, Title , . , . SubTitle, , , - , queryable- . , .

, . , , . , , . «JsonIgnore», . , , Dto. , , . JSON, , Created LastUpdated , SubTitle — , . , , , , , . , - .

. , -, , . , pipeline, . — , , . , SaveChanges, Query SaveChanges. , , , NuGet, .

. , - , , . , , , , , — . , , : « », — . .

, ?

- . .

, , , . MediatR , . , , — , MediatR pipeline behaviour. , Request/Response, RequestHandler' . Simple Injector, — .

, , , , TIn: ICommand.

Simple Injector' constraint' . , , , constraint', Handler', constraint. , constraint ICommand, SaveChanges constraint' ICommand, Simple Injector , constraint' , Handler'. , , , .

? Simple Injector MeriatR — , , Autofac', -, , , . , .

,

, «».

, «Clean architecture». .

- - , MVC, , .

, , , Angular, , , , . , : « — MVC-», : « Features, : , Blog - Import, - ».

, , , , MVC-, , - , . MVC . , , — . .

- , - -, .

-, , . , . , - , User Service, pull request', , User Service , . , - , - , . - , .

. , . , , , . , , , , , , , - . , ( , ), , «Delete»: , , . .

— «», , , , . , : , , , . , . , , . , , .

: . « », : , , . , , , , , , , . , . , - pull request , — , — - , . VCS : - , ? , - , , .

, , , . : . , . , , , , . , , , . , , . « », , . , , — , , .

: , - , . . - , , , , . - , - , , , , . .

. , IHandler . .

IHandler ICommandHandler IQueryHandler , . , , . , CommandHandler, CommandHandler', .

Why is that? , Query , Query — . , , , Hander, CommandHandler QueryHandler, - use case, .

— , , , , : , .

, . , . , -.

C# 8, nullable reference type . , , , , .

ChangeTracker' ORM.

Exception' — , F#, C#. , - , - , . , , Exception', , LINQ, , , , , , Dapper - , , , .NET.

, LINQ, , permission' — . , , - , , . , — .

. :

- Vertical Slices

- www.youtube.com/watch?v=SUiWfhAhgQw

- www.cuttingedge.it/blogs/steven/pivot/entry.php?id=91

- cuttingedge.it/blogs/steven/pivot/entry.php?id=92

- Domain Events

- udidahan.com/2009/06/14/domain-events-salvation

- lostechies.com/jimmybogard/2014/05/13/a-better-domain-events-pattern

- enterprisecraftsmanship.com/2017/10/03/domain-events-simple-and-reliable-solution

- DDD: habr.com/post/334126

- ROP

- LINQ Expressions:

- Clean Architecture: www.youtube.com/watch?v=JEeEic-c0D4

— . . — «Domain Modeling Made Functional», F#, F#, , , , , . C# , , Exception'.

, , — «Entity Framework Core In Action». , Entity Framework, , DDD ORM, , ORM DDD .

Minute advertising. 15-16 2019 .NET- DotNext Piter, . , .

Source: https://habr.com/ru/post/447308/

All Articles