Operating Systems: Three Easy Pieces. Part 2: Abstraction: The Process (Translation)

Introduction to operating systems

Hi, Habr! I'd like to present a series of translated articles based on a book I find interesting: OSTEP. The material takes a fairly in-depth look at how Unix-like operating systems work, namely processes, various schedulers, memory, and the other components that make up a modern OS. The original of all the materials is available here. Please note that this is an amateur, fairly free translation, but I hope I have preserved the general meaning.

Laboratory work on this subject can be found here:

- original: pages.cs.wisc.edu/~remzi/OSTEP/Homework/homework.html

- original: github.com/remzi-arpacidusseau/ostep-code

- my personal adaptation: github.com/bykvaadm/OS/tree/master/ostep

Other parts:

- Part 1: Intro

- Part 2: Abstraction: the process

- Part 3: Introduction to the Process API

- Part 4: Scheduler Introduction

You can also find me on my Telegram channel =)

Consider the most fundamental abstraction the OS provides to users: the process. The definition of a process is quite simple: it is a running program. The program itself is a lifeless thing: it just sits on disk, a set of instructions and perhaps some static data, waiting to be launched. It is the OS that takes these bytes and gets them running, turning the program into something useful.

Users often want to run more than one program at a time: a browser, a game, a media player, a text editor, and so on, all on the same laptop. In fact, a typical system may appear to run tens or even hundreds of processes at the same time. This makes the system easier to use: you never have to worry about whether a CPU is free, you just run your programs.

Hence the problem: how can the OS provide the illusion of many CPUs? How does it create the illusion of a nearly endless supply of CPUs when only one physical CPU (or a few) is available?

The OS creates this illusion by virtualizing the CPU. By running one process, then stopping it and running another, and so on, the OS can maintain the illusion that many virtual CPUs exist when in fact there is only one physical CPU (or a few). This basic technique is called time sharing of the CPU. It allows users to run as many concurrent processes as they like. The cost is performance: if several processes share the CPU, each of them runs more slowly.

To implement CPU virtualization, and especially to implement it well, the OS needs both low-level machinery and high-level intelligence. The low-level machinery is called mechanisms: low-level methods or protocols that implement a needed piece of functionality. An example is the context switch, which lets the OS stop running one program and start running another on a given CPU. This time-sharing mechanism is used by all modern operating systems.

On top of these mechanisms sits some of the intelligence of the OS, in the form of policies. A policy is an algorithm for making some kind of decision within the operating system. For example, given a number of programs that could run on a CPU, which one should the OS run first? A scheduling policy makes this decision, likely using historical information (which program has run the most over the last minute?), knowledge of the workload (what types of programs are being run?), and performance metrics (is the system optimizing for interactive performance or for throughput?).
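To make the split between mechanism and policy more concrete, here is a minimal sketch in Python. It is a toy model, not how a real kernel is written: the function `time_share` plays the role of the mechanism (run a job for one time slice, then switch), while the two policy functions decide which job goes next. All names here (`time_share`, `head_of_queue_policy`, `shortest_remaining_policy`) are made up for illustration.

```python
from typing import Callable, Dict, List

# A policy looks at the ready jobs and the remaining work and picks one name.
Policy = Callable[[List[str], Dict[str, int]], str]


def head_of_queue_policy(ready: List[str], remaining: Dict[str, int]) -> str:
    """Pick whoever has waited longest (this yields round-robin behaviour)."""
    return ready[0]


def shortest_remaining_policy(ready: List[str], remaining: Dict[str, int]) -> str:
    """Pick the job with the least work left."""
    return min(ready, key=lambda name: remaining[name])


def time_share(jobs: Dict[str, int], policy: Policy, time_slice: int = 1) -> List[str]:
    """Mechanism: share one CPU between jobs, one time slice at a time.

    `jobs` maps a job name to the CPU ticks it needs.
    Returns the timeline: which job held the CPU during each tick.
    """
    remaining = dict(jobs)
    ready = list(jobs)          # jobs waiting for the CPU, in arrival order
    timeline: List[str] = []
    while ready:
        name = policy(ready, remaining)       # policy: WHICH job runs next
        ready.remove(name)                    # mechanism: switch it in
        ran = min(time_slice, remaining[name])
        remaining[name] -= ran
        timeline.extend([name] * ran)
        if remaining[name] > 0:
            ready.append(name)                # switch it out, back of the queue
    return timeline


if __name__ == "__main__":
    jobs = {"A": 2, "B": 1, "C": 2}
    print(time_share(jobs, head_of_queue_policy))
    print(time_share(jobs, shortest_remaining_policy))
```

The same mechanism produces different timelines depending only on the policy passed in, which is the point of keeping the two apart.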

Abstraction: the process

The abstraction the OS provides for a running program is what we call a process. As said before, a process is simply a running program. At any instant, we can summarize a process by taking an inventory of the different pieces of the system it accesses or affects during its execution.

To understand what constitutes a process, we have to understand its machine state: what a program can read or update while it is running. At any given time, which parts of the machine are important to the execution of this program?

One obvious component of the machine state that makes up a process is its memory. Instructions lie in memory, and the data the program reads and writes sits in memory as well. Thus the memory that the process can address (called its address space) is part of the process.

Registers are also part of the process's machine state. Many instructions explicitly read or update registers, so registers are clearly important to the execution of the process.

Note that some special registers also form part of this machine state. For example, the instruction pointer (IP), also called the program counter (PC), tells us which instruction of the program will execute next. Similarly, a stack pointer and the associated frame pointer are used to manage the stack for function parameters, local variables, and return addresses.

Finally, programs often access persistent storage devices too. Such I/O information might include a list of the files the process currently has open.

Process API

To get a better sense of what a process is, let's look at the kinds of calls that must be included in any operating system interface. These APIs are available, in some form, on any modern OS.

● Create: The OS must include some method for creating new processes. When you type a command into the terminal or double-click an application icon, the OS is invoked to create a new process that runs the specified program.

● Delete: Since there is an interface for creating processes, the OS must also provide one for destroying them forcefully. Many processes run and exit by themselves when they are done. When they don't, the user may wish to kill them, so an interface for halting a runaway process is quite useful.

● Wait: Sometimes it is useful to wait for a process to stop running, so some kind of waiting interface is often provided.

● Misc Control (miscellaneous control): Besides killing or waiting for a process, other controls are sometimes possible. For example, most operating systems provide a way to suspend a process (stop it from running for a while) and then resume it (continue running it).

● Status: There are usually interfaces for getting status information about a process as well, such as how long it has been running or what state it is in.
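As a rough illustration of these categories, the sketch below uses Python's standard `subprocess` module, which wraps the process-creation calls of the underlying OS. It is only one possible mapping: `Popen` stands in for Create, `communicate()`/`wait()` for Wait, `poll()` for Status, and `kill()` for Delete. The helper name `demo_process_api` and the child commands are made up for this example.

```python
import subprocess
import sys


def demo_process_api():
    """Walk through create / wait / status / delete with child Python processes."""
    # Create: ask the OS to start a new process running a small program.
    quick = subprocess.Popen(
        [sys.executable, "-c", "print('hello from child')"],
        stdout=subprocess.PIPE,
        text=True,
    )
    # Wait: block until the child finishes, collecting what it printed.
    output, _ = quick.communicate()
    exit_code = quick.returncode

    # Create a second, long-running child that would sleep for a minute.
    sleeper = subprocess.Popen(
        [sys.executable, "-c", "import time; time.sleep(60)"]
    )
    # Status: poll() returns None while the process is still running.
    still_running = sleeper.poll() is None
    # Delete: forcefully terminate the child, then wait so it is cleaned up.
    sleeper.kill()
    sleeper.wait()

    return output.strip(), exit_code, still_running, sleeper.returncode


if __name__ == "__main__":
    print(demo_process_api())
```

The return code of the killed child is platform dependent (on UNIX-like systems it is reported as a negative signal number), so the sketch only relies on it being non-zero. Suspending and resuming a process is not shown here; on UNIX-like systems it is typically done with the SIGSTOP and SIGCONT signals.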

Process creation: details

One mystery we should unmask a bit is how programs are transformed into processes. Specifically, how does the OS get a program up and running? How does process creation actually work?

First, the OS must load the program's code and any static data into memory, into the address space of the process. Programs initially reside on a disk or solid-state drive in some kind of executable format. Loading a program and its static data into memory therefore requires the OS to read those bytes from disk and place them somewhere in memory.

In early operating systems, loading was done eagerly, that is, the whole program was loaded into memory before it started running. Modern OSs perform loading lazily: they load pieces of code or data only as the program needs them during execution.
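The difference between eager and lazy loading can be sketched with a toy model. This is not how an OS loader actually works (real systems rely on paging hardware); the class `LazyImage`, the helper `load_eagerly`, and the tiny page size are all assumptions made up for the illustration.

```python
class LazyImage:
    """A program image whose pages are copied from 'disk' only on first use."""

    def __init__(self, on_disk: bytes, page_size: int = 4096):
        self.on_disk = on_disk      # stands in for the executable file on disk
        self.page_size = page_size
        self.loaded = {}            # page number -> bytes already in "memory"

    def read_byte(self, address: int) -> int:
        page, offset = divmod(address, self.page_size)
        if page not in self.loaded:
            # First touch of this page: bring it in from disk now.
            start = page * self.page_size
            self.loaded[page] = self.on_disk[start:start + self.page_size]
        return self.loaded[page][offset]


def load_eagerly(on_disk: bytes, page_size: int = 4096) -> LazyImage:
    """Eager loading: touch every page before the program starts running."""
    image = LazyImage(on_disk, page_size)
    page_count = -(-len(on_disk) // page_size)   # ceiling division
    for page in range(page_count):
        image.read_byte(page * page_size)
    return image


if __name__ == "__main__":
    program = bytes(range(16))
    lazy = LazyImage(program, page_size=4)
    lazy.read_byte(9)
    print(sorted(lazy.loaded))                               # only the touched page
    print(sorted(load_eagerly(program, page_size=4).loaded)) # every page
```

With lazy loading, a program that touches only a small part of its code pays the loading cost only for that part.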

Once the code and static data are loaded into memory, the OS needs to do a few more things before running the process. Some memory must be allocated for the program's stack. Programs use the stack for local variables, function parameters, and return addresses. The OS allocates this memory and gives it to the process. The OS will also likely initialize the stack with arguments; specifically, it fills in the parameters of the main() function, i.e., argc and the argv array.

The OS may also allocate some memory for the program's heap. Programs use the heap for explicitly requested, dynamically allocated data. They request such space by calling malloc() and free it explicitly by calling free(). The heap is needed for data structures such as linked lists, hash tables, trees, and others. The heap is small at first; as the program runs and requests more memory through the malloc() library API, the OS may get involved and allocate more memory to the process to help satisfy such calls.

The OS will also perform some other initialization tasks, particularly those related to I/O. For example, on UNIX systems each process has three open file descriptors by default: standard input, standard output, and standard error. These descriptors let programs easily read input from the terminal and print output to the screen.

Thus, by loading the code and static data into memory, creating and initializing a stack, and doing other work related to I/O setup, the OS sets the stage for program execution. One last task remains: starting the program at its entry point, namely main(). By jumping to the main() routine, the OS transfers control of the CPU to the newly created process, and the program begins to run.
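Two of the setup steps above are easy to observe from inside a freshly created process: the argument vector and the three standard file descriptors. The sketch below starts a child Python interpreter and has it report what it sees. Python exposes the arguments as `sys.argv` rather than C's argc/argv, and the helper name `inspect_new_process` and the `CHILD` snippet are made up for this example.

```python
import json
import subprocess
import sys

# Code run inside the child: report its arguments and its standard descriptors.
CHILD = (
    "import json, sys\n"
    "print(json.dumps({\n"
    "    'argc': len(sys.argv),\n"
    "    'argv': sys.argv[1:],\n"
    "    'fds': [sys.stdin.fileno(), sys.stdout.fileno(), sys.stderr.fileno()],\n"
    "}))\n"
)


def inspect_new_process(args):
    """Start a child process with the given arguments and return its report."""
    result = subprocess.run(
        [sys.executable, "-c", CHILD, *args],
        stdin=subprocess.DEVNULL,    # the child still gets a descriptor 0
        stdout=subprocess.PIPE,      # descriptor 1, captured by the parent
        stderr=subprocess.PIPE,      # descriptor 2
        text=True,
        check=True,
    )
    return json.loads(result.stdout)


if __name__ == "__main__":
    print(inspect_new_process(["one", "two"]))
```

The child counts three entries in its argument vector because the first slot holds the program name (here the interpreter reports it as `-c`), followed by the two arguments that were passed.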

Process state

Now that we have some idea of what a process is and how it is created, let's list the states a process can be in. In a simplified view, a process can be in one of three states:

● Running: In the running state, a process is running on a processor. This means it is executing instructions.

● Ready: In the ready state, a process is ready to run, but for some reason the OS has chosen not to run it at this moment.

● Blocked: In the blocked state, a process has performed some operation that makes it not ready to run until some other event takes place. A common example: when a process initiates an I/O request, it becomes blocked, and some other process can use the processor.

These states can be drawn as a graph. As the picture shows, a process can be moved between the READY and RUNNING states at the discretion of the OS. Being moved from READY to RUNNING means the process has been scheduled; being moved from RUNNING to READY means it has been descheduled. Once a process becomes BLOCKED, for example by initiating an I/O operation, the OS keeps it in that state until some event occurs, such as the completion of the I/O. At that point the process moves to the READY state again, and possibly straight to RUNNING, if the OS so decides.
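The transitions described above fit in a small lookup table. The sketch below is just one way to write them down; the event names (`scheduled`, `descheduled`, `io_start`, `io_done`) are labels chosen for this example, not terms from any real OS interface.

```python
from enum import Enum


class State(Enum):
    READY = "ready"
    RUNNING = "running"
    BLOCKED = "blocked"


# (current state, event) -> next state; anything not listed is not allowed.
TRANSITIONS = {
    (State.READY, "scheduled"): State.RUNNING,
    (State.RUNNING, "descheduled"): State.READY,
    (State.RUNNING, "io_start"): State.BLOCKED,
    (State.BLOCKED, "io_done"): State.READY,
}


def next_state(state: State, event: str) -> State:
    """Return the state a process moves to, or raise if the move is illegal."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"{event!r} is not valid in state {state.name}") from None


if __name__ == "__main__":
    state = State.READY
    for event in ["scheduled", "io_start", "io_done", "scheduled"]:
        state = next_state(state, event)
        print(event, "->", state.name)
```

Note that a blocked process never moves directly to RUNNING in this table: it first becomes READY, and only the scheduler can move it on from there.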

Let's look at an example of how two processes move through these states. To begin with, imagine that both processes are running and each uses only the CPU. In this case, their states will look like this.

In the next example, the first process issues an I/O request after running for a while and moves to the BLOCKED state, giving the other process a chance to run (Fig. 1.4). The OS sees that process 0 is not using the CPU and starts running process 1. While process 1 is running, the I/O completes and process 0 is moved back to READY. Finally, process 1 finishes, after which process 0 runs and then completes as well.
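The second scenario can be replayed with a small tick-by-tick simulation. This is a sketch under several assumptions that are not in the text: each process is a non-empty list of "cpu" and "io" instructions, issuing an I/O takes one tick on the CPU, the I/O itself lasts `io_ticks` ticks, and the OS keeps running the current process when an I/O completes instead of switching back immediately. The function name `simulate` and the instruction lists are made up for the example.

```python
def simulate(programs, io_ticks=3):
    """Return, for every tick, a tuple with the state of each process."""
    n = len(programs)
    pc = [0] * n                  # index of the next instruction per process
    state = ["READY"] * n
    wake_at = [None] * n          # tick at which a pending I/O completes
    current = None                # process that currently holds the CPU
    trace = []
    tick = 0
    while any(s != "DONE" for s in state):
        tick += 1
        # I/O completions make blocked processes ready again.
        for p in range(n):
            if state[p] == "BLOCKED" and wake_at[p] == tick:
                state[p] = "READY"
        # If the CPU is idle, schedule the first ready process.
        if current is None:
            ready = [p for p in range(n) if state[p] == "READY"]
            if ready:
                current = ready[0]
                state[current] = "RUNNING"
        trace.append(tuple(state))
        # Execute one instruction of the running process, if there is one.
        if current is not None:
            op = programs[current][pc[current]]
            pc[current] += 1
            if pc[current] == len(programs[current]):
                state[current] = "DONE"       # last instruction finished
                current = None
            elif op == "io":
                state[current] = "BLOCKED"    # wait for the I/O to complete
                wake_at[current] = tick + io_ticks + 1
                current = None
    return trace


if __name__ == "__main__":
    process0 = ["cpu", "cpu", "io", "cpu", "cpu"]
    process1 = ["cpu"] * 5
    for t, states in enumerate(simulate([process0, process1]), start=1):
        print(t, *states)
```

With these inputs, process 0 runs for three ticks, is blocked while process 1 runs, becomes ready again while process 1 is still running, and only gets the CPU back after process 1 is done.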

Data structure

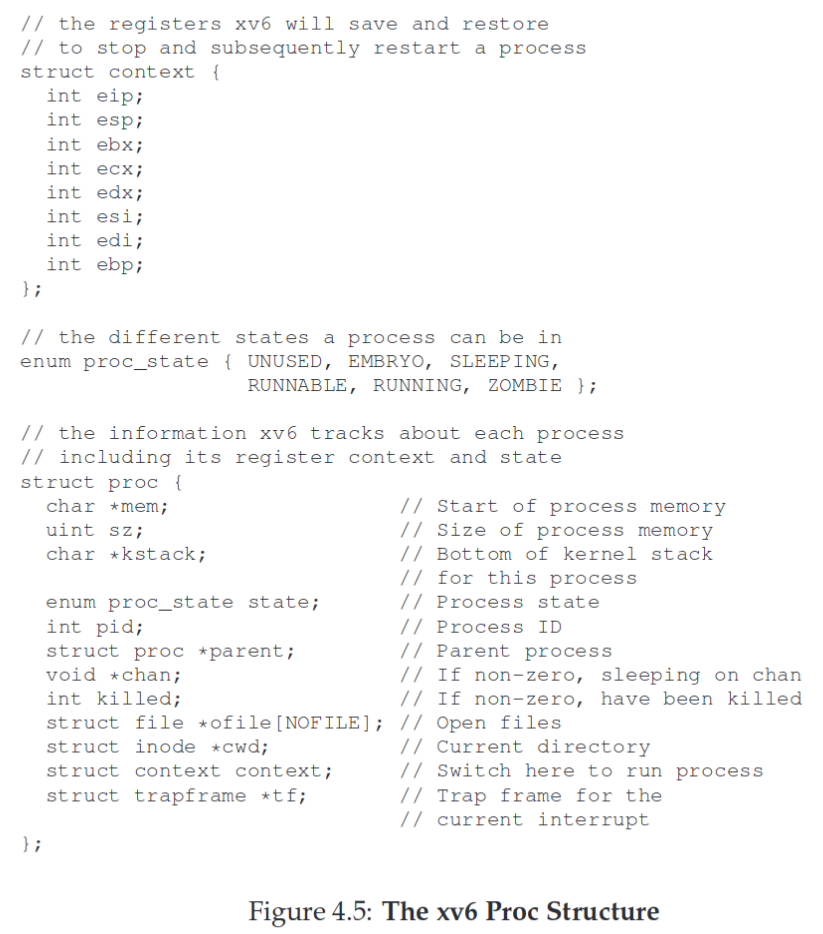

The OS is itself a program, and like any program it has some key data structures that track various relevant pieces of information. To track the state of each process, for example, the OS will likely keep some kind of process list for all processes that are ready, plus some additional information to track which process is currently running. The OS must also track blocked processes in some way: when an I/O event completes, the OS should wake the correct process and make it ready to run again.

For example, the OS must keep track of the processor registers of a stopped process. When a process is stopped, its registers are saved to a memory location that the OS keeps for that process (its register context); by restoring these values into the physical registers, the OS can resume running the process.
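A per-process record with a saved register context might be sketched as follows. Real kernels define such structures in C and save many more fields; the classes `RegisterContext`, `PCB`, and `CPU`, their field names, and the `context_switch` function are simplified stand-ins invented for this illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class RegisterContext:
    """Saved copy of the registers of a process that is not running."""
    pc: int = 0                               # instruction pointer
    sp: int = 0                               # stack pointer
    general: Dict[str, int] = field(default_factory=dict)


@dataclass
class PCB:
    """One entry of the process list."""
    pid: int
    state: str = "READY"
    context: RegisterContext = field(default_factory=RegisterContext)
    open_files: List[int] = field(default_factory=lambda: [0, 1, 2])


@dataclass
class CPU:
    """The physical registers (only one process can use them at a time)."""
    pc: int = 0
    sp: int = 0
    general: Dict[str, int] = field(default_factory=dict)


def context_switch(cpu: CPU, old: PCB, new: PCB) -> None:
    """Save the registers of `old`, then restore the registers of `new`."""
    old.context = RegisterContext(cpu.pc, cpu.sp, dict(cpu.general))
    old.state = "READY"
    cpu.pc, cpu.sp = new.context.pc, new.context.sp
    cpu.general = dict(new.context.general)
    new.state = "RUNNING"


if __name__ == "__main__":
    cpu = CPU(pc=100, sp=5000, general={"ax": 1})
    a = PCB(pid=1, state="RUNNING")
    b = PCB(pid=2, context=RegisterContext(pc=200, sp=9000, general={"ax": 7}))
    context_switch(cpu, a, b)
    print(a, cpu, sep="\n")
```

After the switch, the CPU holds the values saved for the second process, and the first process can later be resumed exactly where it stopped by switching back.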

Besides ready, blocked, and running, a process can be in some other states. Sometimes a system has an initial (INIT) state that the process is in while it is being created. A process can also be placed in a FINAL state, where it has exited but has not yet been cleaned up. On UNIX systems this is called the zombie state. This state is useful because it lets the parent process examine the return code of the child and see whether it finished successfully: by convention, UNIX programs return 0 on success and a non-zero value otherwise, and programmers can define additional exit codes to signal different problems. When finished, the parent makes one final call, such as wait(), to wait for the child to complete and to tell the OS that it can clean up any data structures that referred to the now-finished process.
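On a UNIX-like system this life cycle can be observed directly. The sketch below uses Python's `os.fork`, `os._exit`, and `os.waitpid`, which are thin wrappers over the corresponding system calls; it will not run on Windows. The helper name `run_child_and_reap` is made up for the example.

```python
import os


def run_child_and_reap(exit_code: int):
    """Fork a child that exits at once, then collect its exit code."""
    pid = os.fork()
    if pid == 0:
        # Child: exit immediately with the requested code.
        os._exit(exit_code)
    # Parent: from the moment the child exits until waitpid() returns,
    # the child is a zombie; the OS keeps its exit status for us.
    reaped_pid, status = os.waitpid(pid, 0)
    if reaped_pid == pid and os.WIFEXITED(status):
        return os.WEXITSTATUS(status)
    return None


if __name__ == "__main__":
    print(run_child_and_reap(0))   # child reported success
    print(run_child_and_reap(3))   # child reported an error code
```

Once `waitpid` has returned, the OS is free to discard what it still remembered about the child.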

Key points of the lecture:

● The process is the main OS abstraction of a running program. At any point in time, a process can be described by its state: the contents of memory in its address space, the contents of the CPU registers (including the instruction pointer and stack pointer, among others), and information about I/O, such as open files that can be read or written.

● The process API consists of calls that programs can make related to processes. Typically these include creation, destruction, and other useful calls.

● A process exists in one of several states, including running, ready, and blocked. Various events, such as being scheduled, being descheduled, or waiting for I/O to complete, move a process from one state to another.

● The process list contains information about all the processes in the system. Each entry is sometimes called a process control block (PCB), which is really just a structure that contains information about a specific process.

Source: https://habr.com/ru/post/446866/
