Kubernetes tips & tricks: on local development and Telepresence

We are increasingly asked about developing microservices in Kubernetes. Developers, especially those working with interpreted languages, want to quickly fix code in their favorite IDE and see the result without waiting for a build / deployment, simply by pressing F5. With a monolithic application, it was enough to bring up a database and a web server locally (in Docker, VirtualBox ...) and then immediately enjoy development. With monoliths being cut into microservices, the arrival of Kubernetes and the appearance of dependencies between services, everything became a bit more complicated. The more microservices there are, the more problems arise. To enjoy development again, you need to bring up more than one or two Docker containers, and sometimes more than a dozen ... In general, all this can take a lot of time, since the environment also has to be kept up to date.

At different times we tried different solutions to this problem. I will start with the accumulated workarounds, or simply “crutches”.


1. Crutches

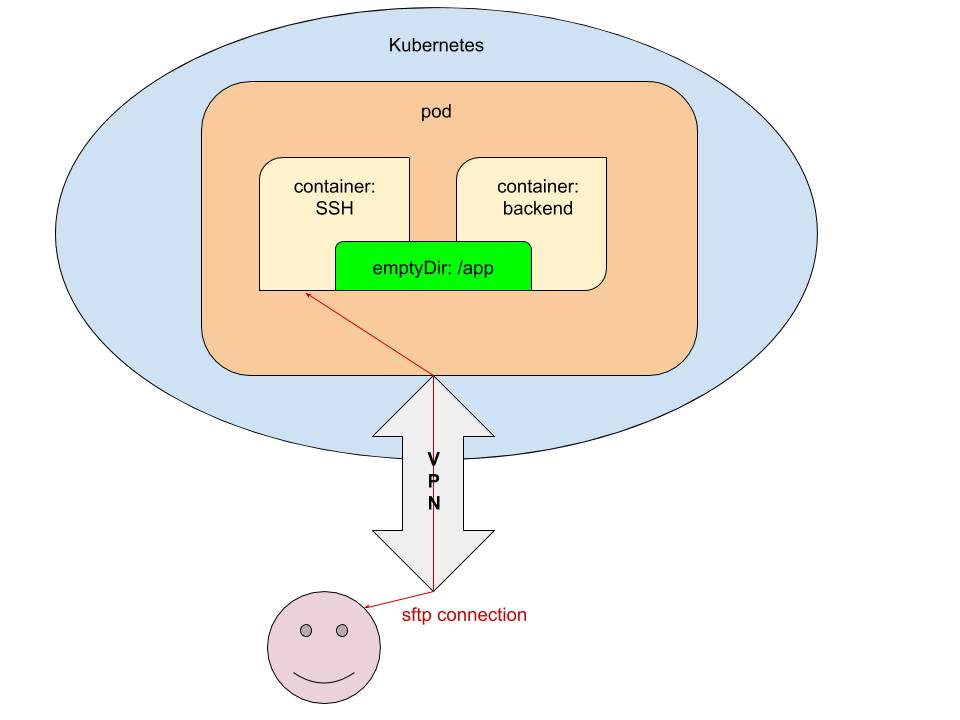

Most IDEs have the ability to edit code directly on the server using FTP / SFTP. This path is very obvious and we immediately decided to use it. The essence of it is as follows:

- In the pod of a development environment (dev / review), an additional container with SSH access is launched, and the public SSH key of the developer who commits / deploys the application is forwarded to it.

- At the init stage (in the prepare-app container), we copy the code to an emptyDir volume so that both the application container and the SSH server container have access to it.

For a better understanding of the technical implementation of such a scheme, I will cite fragments of the involved YAML configurations in Kubernetes.

Configurations

1.1. values.yaml

    ssh_pub_key:
      vasya.pupkin: <ssh public key in base64>

Here, vasya.pupkin is the value of the ${GITLAB_USER_LOGIN} variable.

1.2. deployment.yaml

    ...
    {{ if eq .Values.global.debug "yes" }}
          volumes:
          - name: ssh-pub-key
            secret:
              defaultMode: 0600
              secretName: {{ .Chart.Name }}-ssh-pub-key
          - name: app-data
            emptyDir: {}
          initContainers:
          - name: prepare-app
    {{ tuple "backend" . | include "werf_container_image" | indent 8 }}
            volumeMounts:
            - name: app-data
              mountPath: /app-data
            command: ["bash", "-c", "cp -ar /app/* /app-data/" ]
    {{ end }}
          containers:
    {{ if eq .Values.global.debug "yes" }}
          - name: ssh
            image: corbinu/ssh-server
            volumeMounts:
            - name: ssh-pub-key
              readOnly: true
              mountPath: /root/.ssh/authorized_keys
              subPath: authorized_keys
            - name: app-data
              mountPath: /app
            ports:
            - name: ssh
              containerPort: 22
              protocol: TCP
    {{ end }}
          - name: backend
            volumeMounts:
    {{ if eq .Values.global.debug "yes" }}
            - name: app-data
              mountPath: /app
    {{ end }}
            command: ["/usr/sbin/php-fpm7.2", "--fpm-config", "/etc/php/7.2/php-fpm.conf", "-F"]
    ...

1.3. secret.yaml

    {{ if eq .Values.global.debug "yes" }}
    apiVersion: v1
    kind: Secret
    metadata:
      name: {{ .Chart.Name }}-ssh-pub-key
    type: Opaque
    data:
      authorized_keys: "{{ first (pluck .Values.global.username .Values.ssh_pub_key) }}"
    {{ end }}

Final touch

After that, all that remains is to pass the required variables in gitlab-ci.yml:

    dev:
      stage: deploy
      script:
       - type multiwerf && source <(multiwerf use 1.0 beta)
       - type werf && source <(werf ci-env gitlab --tagging-strategy tag-or-branch --verbose)
       - werf deploy
         --namespace ${CI_PROJECT_NAME}-stage
         --set "global.env=stage"
         --set "global.git_rev=${CI_COMMIT_SHA}"
         --set "global.debug=yes"
         --set "global.username=${GITLAB_USER_LOGIN}"
      tags:
      - build

Voila: the developer who ran the deployment can connect by the service name (we have already described how to grant access to the cluster securely) from their desktop via SFTP and edit the code without waiting for it to be delivered to the cluster.
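The ssh_pub_key entry in values.yaml expects the public key in base64, and producing it by hand is easy to get wrong because of line wrapping. Below is a minimal sketch of how that entry could be generated. The helper names encode_pubkey and values_entry are hypothetical, and the key path in the usage line is only an assumption about where the developer keeps the key.

```shell
#!/bin/sh
# Sketch only: helper names are illustrative, not part of werf or Helm.

# Print the contents of a public key file as single-line base64.
# `tr -d '\n'` strips line wrapping, which differs between base64 implementations.
encode_pubkey() {
    base64 < "$1" | tr -d '\n'
}

# Print a values.yaml fragment for a GitLab login and a public key file.
values_entry() {
    login=$1
    keyfile=$2
    printf 'ssh_pub_key:\n  %s: %s\n' "$login" "$(encode_pubkey "$keyfile")"
}
```

For example, `values_entry vasya.pupkin ~/.ssh/id_rsa.pub` prints a fragment that can be pasted into values.yaml; the login must match the value of ${GITLAB_USER_LOGIN} for the lookup in secret.yaml to find the key.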

This is a perfectly workable solution, but its implementation has obvious drawbacks:

- the Helm chart has to be modified, which makes it harder to read;

- it can only be used by the person who deployed the service;

- you have to remember to synchronize the changes with the local code directory afterwards and commit them to Git.

2. Telepresence

The Telepresence project has been known for a long time, but we, as they say, "never got around" to seriously trying it in practice. However, demand has done its job, and now we are happy to share our experience, which may be useful to readers of our blog, especially since there have been no other materials about Telepresence on the site yet.

In short, it turned out to be not so scary. We put all the actions the developer has to perform into the Helm chart's text file called NOTES.txt. Thus, after deploying the service to Kubernetes, the developer sees the instructions for launching the local dev environment in the GitLab job log:

    !!! Developing the service locally, as part of Kubernetes !!!

    * Environment setup
    * * Access to the cluster via VPN is required
    * * kubectl must be installed on the local PC ( https://kubernetes.io/docs/tasks/tools/install-kubectl/ )
    * * Get the config file for kubectl (copy it to ~/.kube/config)
    * * telepresence must be installed on the local PC ( https://www.telepresence.io/reference/install )
    * * Docker must be installed
    * * reporter-level access to the repository https://gitlab.site.com/group/app is required
    * * Log in to the registry with your GitLab login / password:

    #########################################################################
    docker login registry.site.com
    #########################################################################

    * Launching the environment

    #########################################################################
    telepresence \
    --namespace {{ .Values.global.env }} \
    --swap-deployment {{ .Chart.Name }}:backend \
    --mount=/tmp/app \
    --docker-run -v `pwd`:/app -v /tmp/app/var/run/secrets:/var/run/secrets -ti registry.site.com/group/app/backend:v8
    #########################################################################

We will not dwell on the steps described in these instructions ... except for the last one. What happens during the launch of Telepresence?

Work with Telepresence

At launch (using the last command from the instructions above), we specify:

- the namespace in which the microservice is running;

- the names of the deployment and the container we want to swap out.
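The two required arguments, together with the tool prerequisites from the instructions, can be wrapped in a small launcher. This is only a sketch: missing_tools and telepresence_cmd are hypothetical helper names, and the values stage, app and backend in the usage note are placeholders. The function prints the command rather than running it, so it can be inspected first.

```shell
#!/bin/sh
# Sketch only: helper names and example values are illustrative.

# Print the name of every tool from the argument list that is not found on PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Print a telepresence command line with the required arguments:
# the namespace, and the deployment and container to swap.
telepresence_cmd() {
    namespace=$1
    deployment=$2
    container=$3
    printf 'telepresence --namespace %s --swap-deployment %s:%s\n' \
        "$namespace" "$deployment" "$container"
}
```

A possible use is to run `missing_tools kubectl telepresence docker` first and, if it prints nothing, take the output of `telepresence_cmd stage app backend` and append the optional arguments discussed next.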

The remaining arguments are optional. If our service interacts with the Kubernetes API and a ServiceAccount has been created for it, we need to mount the certificates / tokens on our desktop. To do this, use the option --mount=true (or --mount=/dst_path), which mounts the root (/) of the container in Kubernetes on our desktop. After that, we can (depending on the OS and on how the application is launched) use the "keys" from the cluster.

First, let's consider the most universal way of launching an application: in a Docker container. To do this, use the --docker-run option and mount the directory with the code into the container: -v `pwd`:/app

Note that this assumes the command is run from the project directory. The application code will be mounted to the /app directory in the container.

Next, -v /tmp/app/var/run/secrets:/var/run/secrets mounts the directory with the certificate / token into the container.

These options are finally followed by the image in which the application will run. NB: When building the image, you must specify CMD or ENTRYPOINT!

What exactly will happen next?

- In Kubernetes, the number of replicas of the specified Deployment will be changed to 0. A new Deployment will be launched in its place, with the backend container replaced.

- Two containers will be launched on the desktop: the first is Telepresence itself (it proxies requests from / to Kubernetes), the second is the application under development.

- If we exec into the container with the application, all the ENV variables passed by Helm during deployment will be available to us, as will all the services. All that remains is to edit the code in your favorite IDE and enjoy the result.

- At the end of the work, it is enough to close the terminal where Telepresence is running (terminate the session with Ctrl + C): the Docker containers on the desktop will stop, and everything in Kubernetes will return to its initial state. It only remains to commit, open an MR and pass it on to review / merge / ... (depending on your workflow).

If we don’t want to run the application in a Docker container (for example, we are developing in Go rather than PHP and build the application locally anyway), launching Telepresence is even easier:

    telepresence --namespace {{ .Values.global.env }} --swap-deployment {{ .Chart.Name }}:backend --mount=true

If the application accesses the Kubernetes API, you will need to mount the directory with the keys (https://www.telepresence.io/howto/volumes). For Linux, there is the proot utility:

    proot -b $TELEPRESENCE_ROOT/var/run/secrets/:/var/run/secrets bash

After launching Telepresence without the --docker-run option, all environment variables will be available in the current terminal, so the application must be launched from it.

NB: When using, for example, PHP, remember to disable op_cache, apc and other accelerators for development, otherwise editing the code will not lead to the desired result.
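Before starting the application in that terminal, it can be worth confirming that the mounted ServiceAccount files are actually visible and, for PHP, that the caches are switched off. The sketch below assumes the standard in-pod token location under /var/run/secrets/kubernetes.io/serviceaccount, and it assumes the accelerators in question are OPcache and APCu. The helper names sa_token_path, has_sa_token and php_dev_flags are hypothetical; check which extensions your image actually loads.

```shell
#!/bin/sh
# Sketch only: helper names are illustrative.

# Print where the ServiceAccount token should appear under a mount root.
# Falls back to $TELEPRESENCE_ROOT when no argument is given.
sa_token_path() {
    root=${1:-$TELEPRESENCE_ROOT}
    printf '%s/var/run/secrets/kubernetes.io/serviceaccount/token\n' "$root"
}

# Succeed only if the token file exists and is readable.
has_sa_token() {
    [ -r "$(sa_token_path "$1")" ]
}

# Print -d overrides that disable OPcache and APCu for a development run
# (assumption: these are the accelerators in use).
php_dev_flags() {
    printf '%s\n' '-d opcache.enable=0 -d apc.enabled=0'
}
```

For instance, `has_sa_token || echo "token not mounted"` gives an early warning inside the Telepresence shell, and the output of `php_dev_flags` can be appended to the PHP command used for local runs.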

Results

Local development with Kubernetes is a problem whose relevance grows in proportion to the spread of this platform. When we received such requests from developers (our clients), we began to solve them with the first means available, which, however, did not prove themselves in the long run. Fortunately, this became obvious not only now and not only to us, so more suitable tools have already appeared, and Telepresence is the best known of them (by the way, there is also skaffold from Google). Our experience with it is not that extensive yet, but it already gives us grounds to recommend it to our “colleagues in the workshop”: try it!


Source: https://habr.com/ru/post/446788/
