We meet new Intel processors

Yesterday, 04/02/2019, Intel announced the long-awaited upgrade of the Intel® Xeon® Scalable Processors family, introduced in mid-2017. The new processors are based on the microarchitecture, codenamed Cascade Lake, and built on an improved 14-nm process.

Features of new processors

First, look at the differences in labeling. In the previous article about Skylake-SP, we already mentioned that all processors are divided into 4 series - Bronze , Silver , Gold and Platinum . About the series to which the processor model belongs the first digit of the number says:

')

- 3 - Bronze,

- 4 - Silver,

- 5, 6 - Gold,

- 8 - Platinum.

The second number indicates the generation of the processor. In the case of the Intel® Xeon® Scalable Processors family of code names:

- 1 - Skylake,

- 2 - Cascade Lake.

The next two digits denote the so-called SKU (Stock Keeping Unit). In essence, this is just a CPU ID with a specific set of available functions.

Also, after the model number can go indices, denoted by one or two letters. The first letter of the index denotes features of the architecture or optimization of the processor itself, and the second - the memory capacity per socket.

For example, take a processor with the designation Intel® Xeon® 6240 . We decipher:

- 6 - Gold series processor

- 2 - Cascade Lake Generation

- 40 - SKU.

Performance

New generation processors are designed for use in the fields of virtualization, artificial intelligence, as well as high-performance computing. The first noticeable change was the increase in clock frequency. This became quite expected, since there are a large number of server applications for which the clock frequency is more important than the number of processor cores. For example, financial product 1C, whose system requirements clearly say that the higher the processor frequency, the faster the end user will get the result.

In a number of cases, the number of nuclei was also increased. For clarity, we have compiled a comparative table of several processors Intel® Xeon® Scalable Processors of the first and second generation:

| Intel® Xeon® Silver 4114 (10 cores) | Intel® Xeon® Silver 4214 (12 cores) | |

| Clock frequency | 2.20 GHz | 2.20 GHz |

| In Turbo mode | 3.00 GHz | 3.20 GHz |

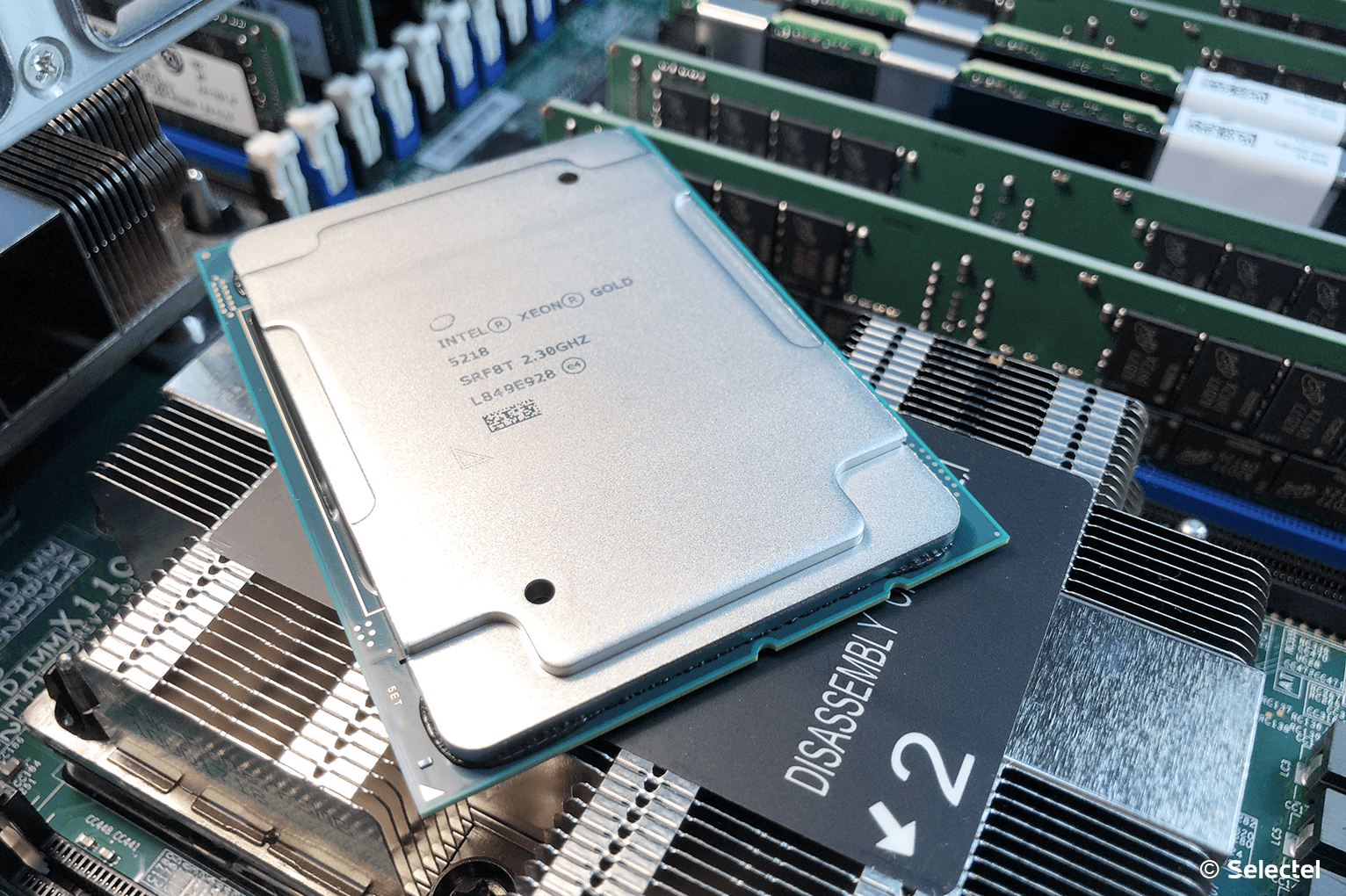

| Intel® Xeon® Gold 5118 (12 cores) | Intel® Xeon® Gold 5218 (16 cores) | |

| Clock frequency | 2.30 GHz | 2.30 GHz |

| In Turbo mode | 3.20 GHz | 3.90 GHz |

| Intel® Xeon® Gold 6140 (18 cores) | Intel® Xeon® Gold 6240 (18 cores) | |

| Clock frequency | 2.30 GHz | 2.60 GHz |

| In Turbo mode | 3.70 GHz | 3.90 GHz |

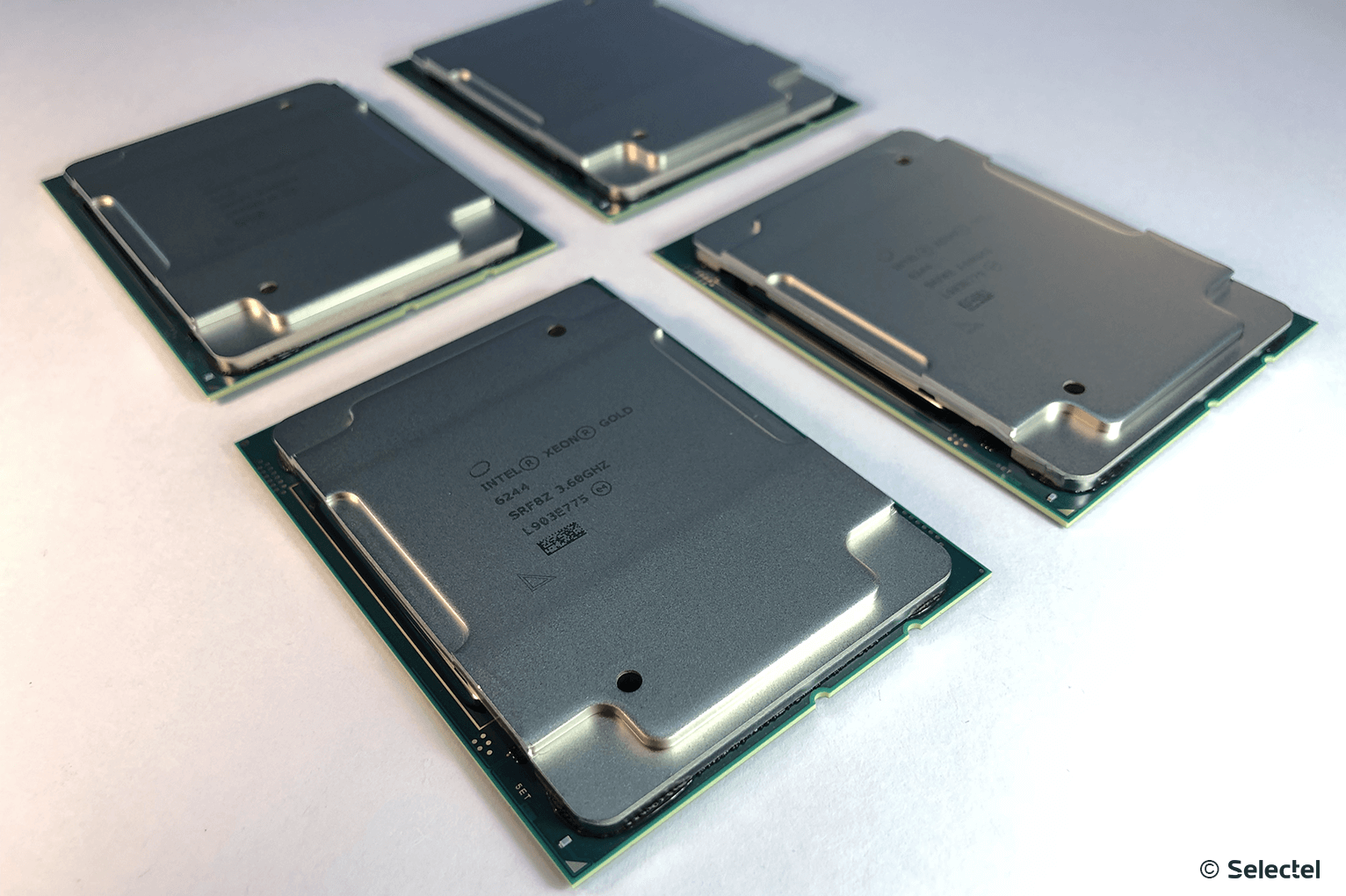

| Intel® Xeon® Gold 6144 (8 cores) | Intel® Xeon® Gold 6244 (8 cores) | |

| Clock frequency | 3.50 GHz | 3.60 GHz |

| In Turbo mode | 4.20 GHz | 4.40 GHz |

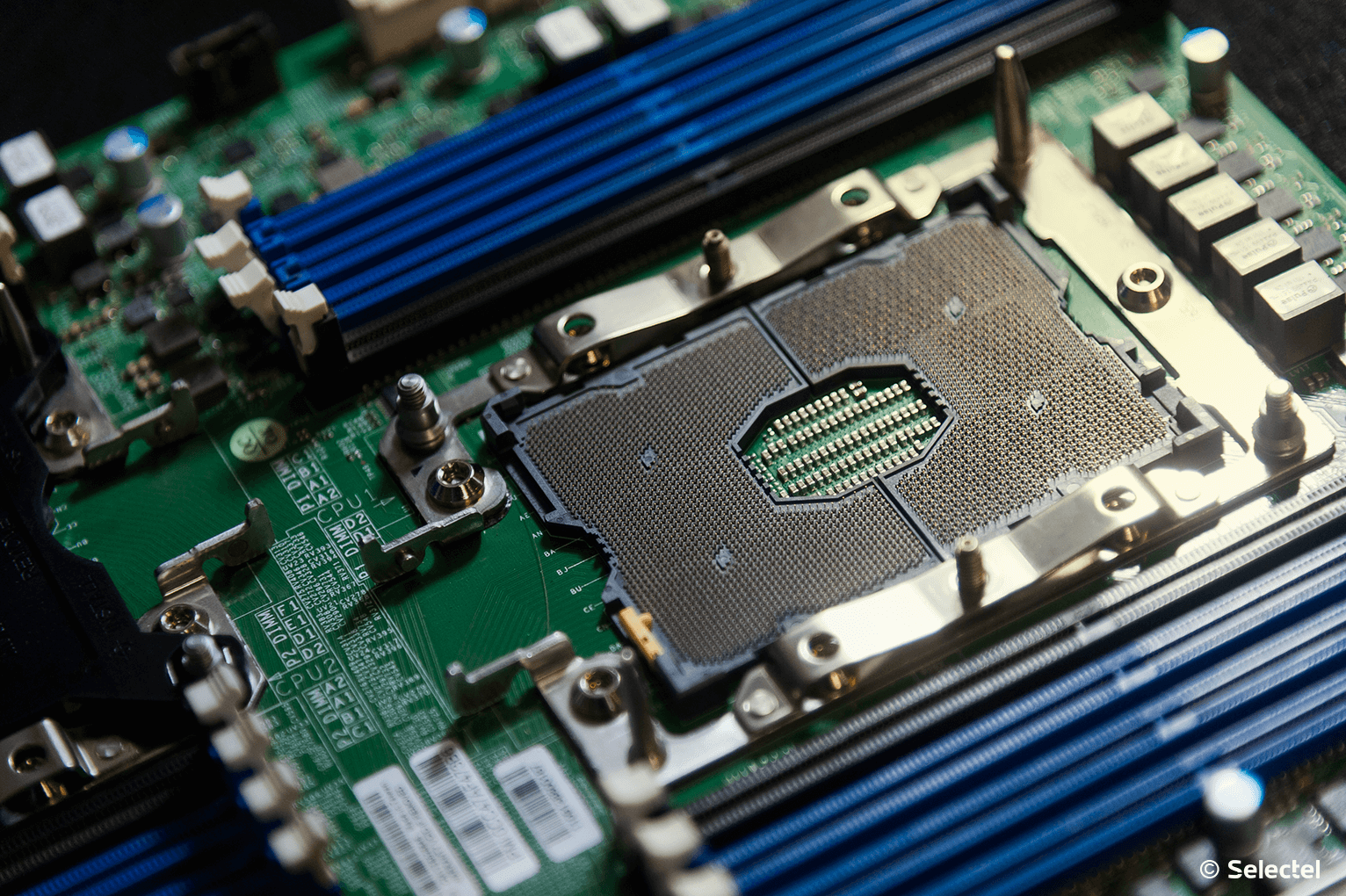

As in the previous generation Skylake SP, processors are installed in the socket LGA3647 (Socket P), which is due to the use of a 6-channel memory controller (up to a maximum of 2 memory modules per channel). The memory frequency is 2666 MT / s , however, when using processors of the 6000 and 8000 series, you can use the memory with a frequency of 2933 MT / s (no more than 1 module per channel).

The Ultra-Path Interconnect bus, successfully used in first-generation Intel Xeon SP processors, remained in the second generation, providing data exchange between processors at speeds of 9.6 GT / s or 10.4 GT / s for each channel. This allows you to effectively scale the hardware platform up to 8 physical processors, optimizing throughput and energy efficiency.

Tests

We started testing new-generation processors using the SPEC test suite, which simulates the load based on solving the most vital life problems. These tests are both the simplest calculations and the calculation of various physical processes, for example, solving problems of molecular physics and hydrodynamics.

Currently, we have some SPEC tests for integer calculations using the example of Intel® Xeon® Gold 6140 and Intel® Xeon® Gold 6240 processors.

Intrate

| Test | Intel® Xeon® Gold 6140 | Intel® Xeon® Gold 6240 |

| 500.perlbench_r | 147 | 157 |

| 531.deepsjeng_r | 127 | 139 |

| 541.leela_r | 125 | 127 |

| 548.exchange2_r | 176 | 203 |

Intspeed

| Test | Intel® Xeon® Gold 6140 | Intel® Xeon® Gold 6240 |

| 600.perlbench_s | 5.67 | 6.33 |

| 602.gcc_s | 6.95 | 8.74 |

| 641.leela_s | 3.24 | 3.62 |

| 648.exchange2_s | 5.94 | 7.90 |

Test description

- perlbench_r is a shortened version of Perl. The test load imitates the work of the popular SpamAssassin anti-spam system;

- deepsjeng_r - a simulation of a game of chess. The server performs a deep study of gaming positions using the alpha-beta-clipping algorithm;

- leela_r - go game simulation In the process of testing, an analysis of movement patterns is carried out, as well as a selective search in a tree based on upper confidence limits;

- exchange2_r - generator of non-trivial sudoku puzzles. Written in Fortran 95, it employs most of the array processing functions;

- The gcc_s compiler of the C language. The test load "collects" the GCC compiler from the source codes for the IA-32 microprocessor architecture.

According to the results of the tests performed, it becomes clear that the new generation processors perform integer calculations faster than the previous generation. We will share the results of other tests in one of the following articles.

Intel® Optane ™ DC Persistent Memory Support

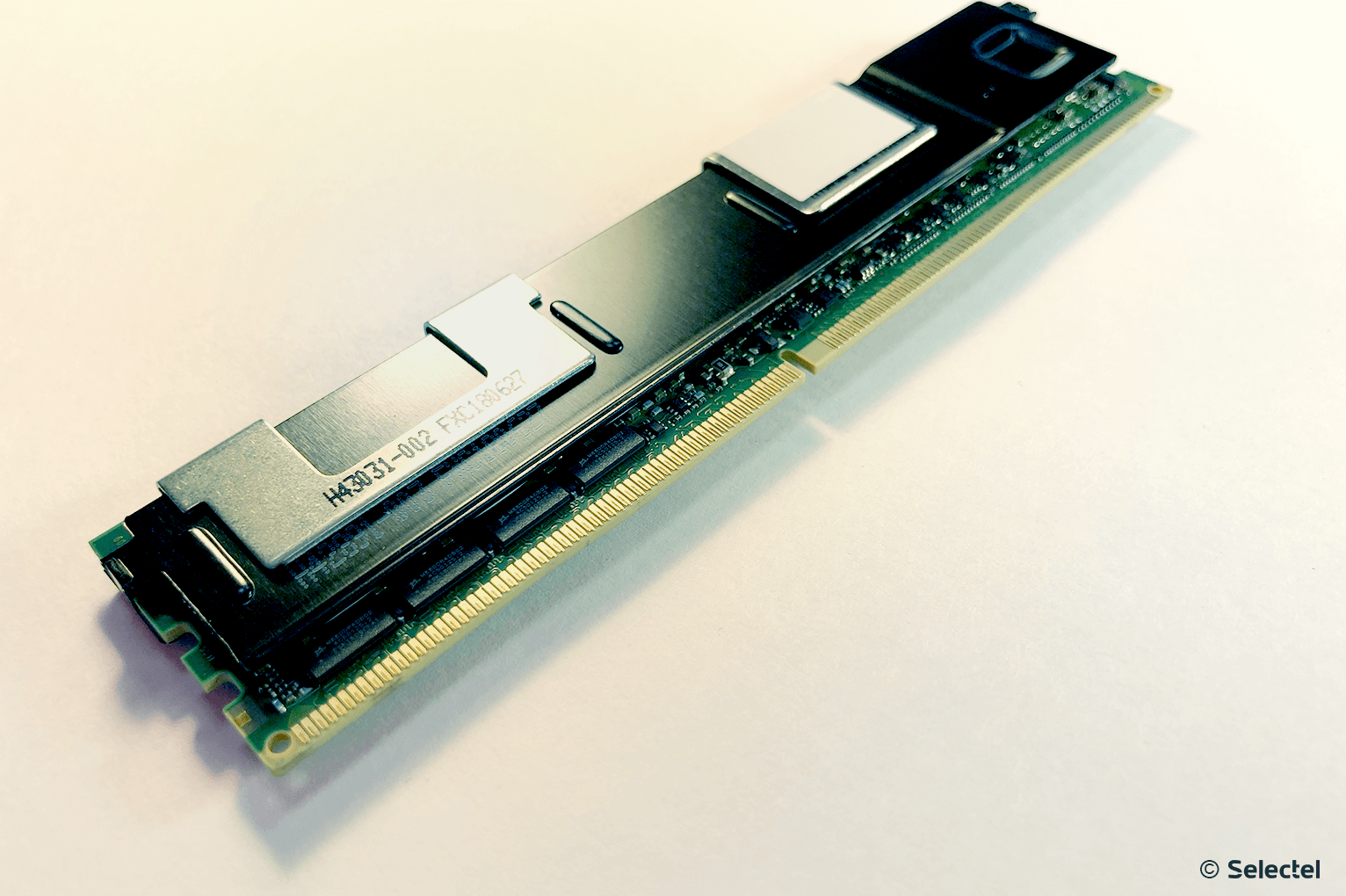

Accelerating high load databases and applications is what all customers expected from the upcoming update. Therefore, a key innovation was the support of Intel® Optane ™ DC Persistent Memory, better known by the code name Apache Pass.

This memory is intended to be a universal solution to the problem, when the use of DRAM of the required volume is economically disadvantageous, and the speed characteristics of even the flagship SSD-drives are not enough.

A prime example is the placement of databases directly in the memory of Intel® Optane ™ DC Persistent Memory, which will avoid the need for constant data exchange between the RAM and the storage device (a feature inherent in traditional systems).

A new type of memory is installed directly in the DIMM slot and is fully compatible with it. Modules with the following capacity are available:

- 128 GB

- 256 GB,

- 512 GB

Such significant volumes of modules will allow you to flexibly configure the hardware platform, getting a very capacious and very fast disk space for high-load systems. Intel® Optane ™ DC Persistent Memory has truly enormous potential for use, including for machine learning purposes.

Acceleration of deep learning

In addition to supporting the new type of memory, Intel engineers have taken care to accelerate the process of deep learning. Since convolutional neural networks often require multiple multiplication of 8-bit and 16-bit values, new processors are supported by AVX-512 VNNI instructions (Vector Neural Network Instructions). This will optimize and speed up the calculations several times.

The best efficiency is achieved by introducing the following set of instructions:

- VPDPBUSB (for INT8 calculations),

- VPDPWSSD (for INT16 calculations).

The bottom line is to reduce the number of items processed per cycle. The VPDPWSSD instruction combines two INT16 instructions, and also uses the INT32 constant to replace the two current PMADDWD and VPADDD instructions . The VPDPUSB instruction likewise reduces the number of elements, replacing the three existing instructions VPMADDUSBW , VPMADDWD and VPADDD .

Thus, with the correct application of the new set of instructions, it is possible to reduce the number of elements processed per cycle by a factor of two or three and increase the speed of data processing. The appropriate framework for new instructions will become part of such popular machine learning software libraries as:

Load sharing optimization

Uniform loading of computational resources has become easier with Intel® Speed Select Technology (on processors with an Y index). The bottom line is that each operation begins to be associated with the number of cores involved and the clock frequency. Depending on the profile chosen for each operation, resources are allocated as follows:

- more cores, but with a lower clock frequency;

- fewer cores, but with increased clock speed.

This approach allows the most complete utilization of resources, which is especially important when using virtualized environments. This will reduce costs by optimizing the load on virtualization hosts.

Accelerating Scientific Computing

Processing scientific data, especially when modeling physical processes at the particle level (for example, calculating electromagnetic interactions), requires an enormous amount of parallel computing. This task can be solved using a CPU, GPU or FPGA.

Multi-core CPUs are universal due to the large number of software and libraries for data processing. The use of a GPU for these purposes is also very effective, because they can run thousands of parallel threads directly on hardware-based graphics cores. There are frameworks that are convenient for development, such as OpenCL or CUDA, which allow you to create applications of any complexity using GPU computing .

Nevertheless, there is another hardware tool, which we have already described in previous articles - FPGA. The ability to program such devices to perform specific calculations allows you to speed up data processing, partially unloading the CPU. A similar scenario can be implemented on new Cascade Lake processors in conjunction with discrete Intel® Stratix® 10 SX FPGA.

Despite the lower clock speeds compared to conventional CPUs, FPGA can show performance ten times higher. For some types of tasks, such as digital signal processing, Intel® Stratix® 10 SX can show results up to 10 TFLOPS (tera floating-point operations per second).

Scaling platforms

Doing business in real time means not only stability, but also the ability to scale on-demand. A good example is the high-performance SAP HANA platform used to store and process data. Physical deployment of this platform requires very powerful hardware resources.

Intel® Xeon® Scalable processors are designed to transform multi-socket systems into basic IT infrastructure components, providing scalability to meet business application requirements.

This is implemented in the form of support for external Node-controllers, which allows you to create configurations of a higher level than one single platform can provide. For example, you can create a configuration of 32 physical processors by combining the resources of several multi-socket platforms into a single whole.

Conclusion

Increasing operating frequencies and processor cores, increasing performance, supporting Intel® Optane ™ DC Persistent Memory — all these improvements significantly increase the computing power of each platform, reducing the cost of the amount of equipment used and increasing the efficiency of data processing. The scalability principle laid down at the architecture level allows building an IT infrastructure of any complexity and achieving high performance and energy efficiency.

Since Selectel is a partner of Intel Platinum level, our customers are already available for ordering new generation Intel® Xeon® Scalable processors in arbitrary configuration servers.

It is very easy to rent a server with new generation processors! Just go to the configurator page and select the necessary components. Any questions regarding the work of the services can be asked to our specialists by creating a ticket in the control panel. Paying the server for several months in advance, you get a discount of up to 15%.

If you are interested in taking part in testing the latest technologies, then join our Selectel Lab.

We will be glad to hear your questions and suggestions in the comments.

Source: https://habr.com/ru/post/446494/

All Articles