SIEM depths: out-of-box correlations. Part 5. Methodology for developing correlation rules

This article completes our series on correlation rules that work out of the box. Our goal was to formulate an approach for creating correlation rules that work out of the box with a minimum of false positives.

Image: Software Marketing

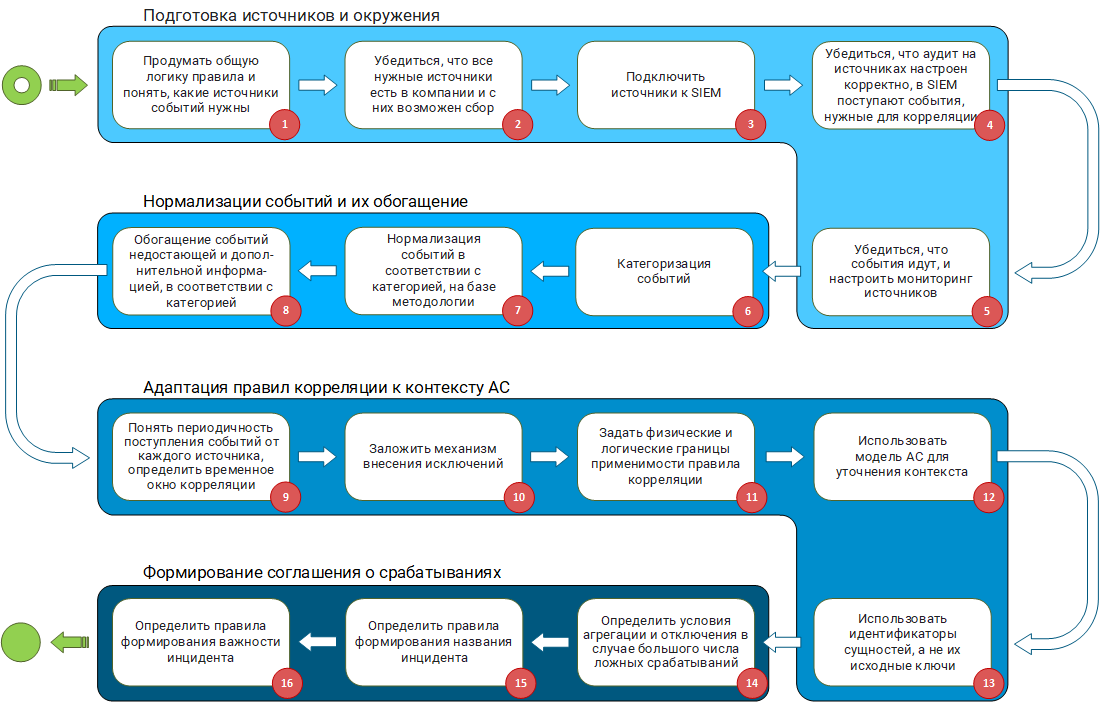

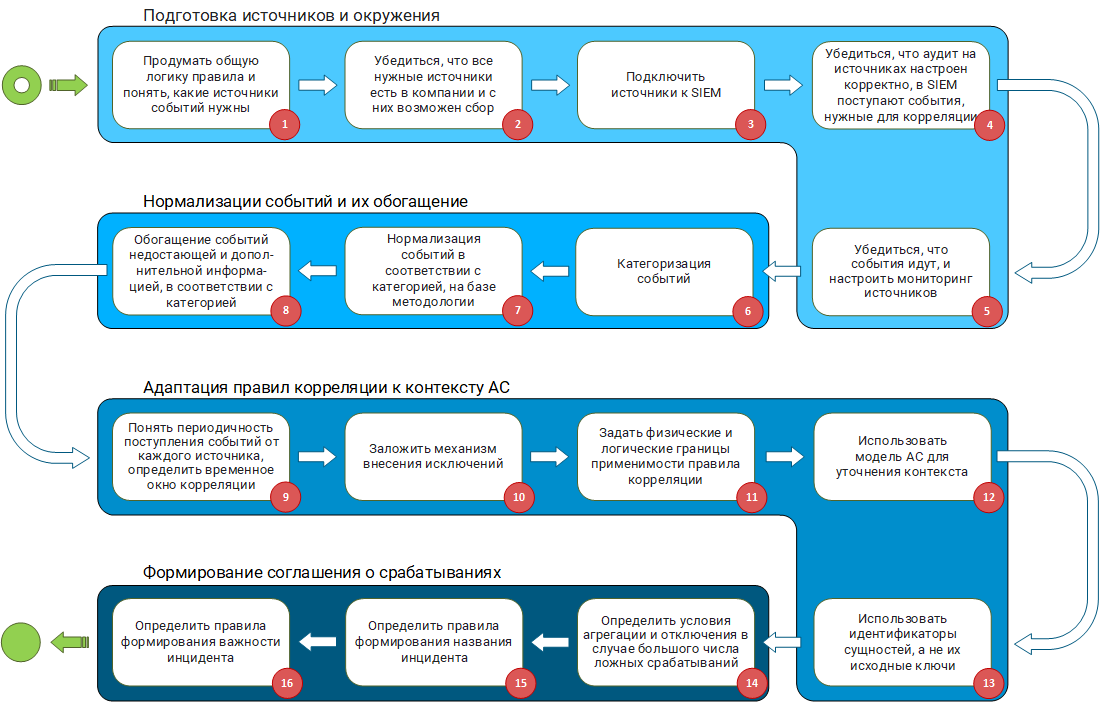

All key points of the article are summarized in the conclusion, where the methodology is presented as a graphic scheme.

A brief recap of the previous articles. We described what the set of fields of a normalized event should look like (the schema) and which event categorization system to use. We also showed how to use the categorization system and the schema to unify event normalization. Finally, we examined the context in which correlation rules execute, and what the SIEM should know about the Automated System (AS) it monitors, and why.


The approaches and reasoning above are the building blocks of the methodology for developing correlation rules. It's time to bring them together and look at the whole picture.

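The methodology for developing correlation rules consists of four blocks:

- preparation of sources and environment;

- normalization and enrichment of events;

- adaptation of correlation rules to the context of the AS;

- forming an agreement on triggers.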

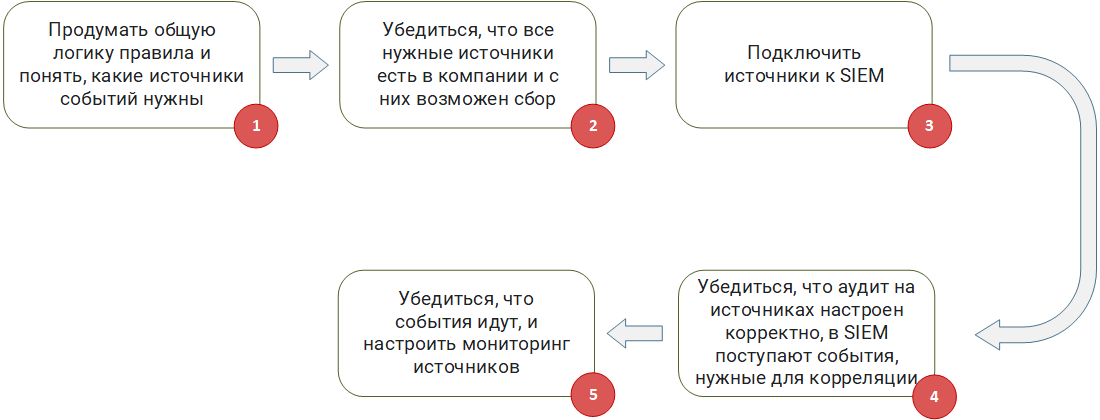

Correlation rules operate on events that generate sources. In this regard, it is extremely important that the sources required for correlation rules are present in the AU and are correctly configured.

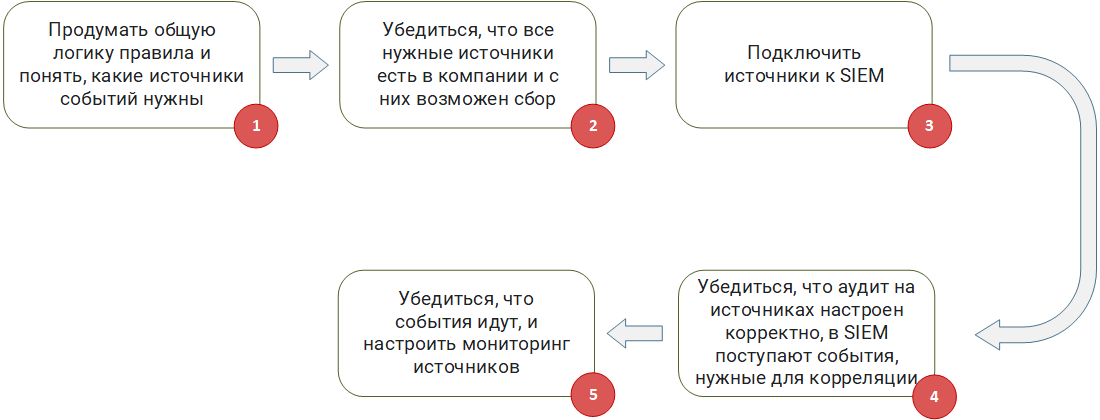

Preparation of sources and environments

Step 1: Think through the general logic of the rule and determine which event sources it needs. You may be developing a rule from scratch or adopting a ready-made Sigma rule. Either way, you need to understand which sources' events it will work on.

Step 2: Make sure that all the necessary sources exist in the company and that events can be collected from them. A rule may operate on a chain of events from several sources, for example (event A from source 1) → (event B from source 2) → (event C from source 3) within 5 minutes. If even one of these sources is missing in your company, the rule is useless because it will never fire. You need to understand whether events can be collected from the necessary sources at all, and whether your SIEM supports it. For example, the source may write events to an encrypted file. Or it may store them in a non-standard database that cannot be accessed through a regular ODBC/JDBC driver.
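The check in Steps 1 and 2 can be made explicit by having each rule declare the sources it depends on and comparing that set with what is actually collected. The sketch below does this. The `CorrelationRule` class and the source labels are hypothetical; a real SIEM would keep this metadata in its own rule format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CorrelationRule:
    name: str
    # Sources whose events the rule's logic depends on (labels are hypothetical).
    required_sources: frozenset


def missing_sources(rule: CorrelationRule, available: set) -> set:
    """Return the required sources that are not collected in this infrastructure."""
    return set(rule.required_sources) - set(available)


def is_deployable(rule: CorrelationRule, available: set) -> bool:
    # A rule that needs even one absent source can never fire.
    return not missing_sources(rule, available)


chain_rule = CorrelationRule(
    name="A (source1) -> B (source2) -> C (source3) within 5 minutes",
    required_sources=frozenset({"source1", "source2", "source3"}),
)
collected = {"source1", "source3"}
print(missing_sources(chain_rule, collected))  # {'source2'}
print(is_deployable(chain_rule, collected))    # False
```

Running such a check before enabling a rule flags rules that can never fire in the current infrastructure.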

Step 3: Connect the sources to the SIEM. Trite as it may sound, this is the step where event collection is actually implemented, and it often runs into problems. Some are organizational: IT management may categorically prohibit connecting to mission-critical systems. Others are technical: a SIEM agent (SmartConnector, Universal Forwarder) without additional tuning can overload the source while collecting events and cause a denial of service. This is often seen when heavily loaded DBMSs are connected to a SIEM.

Step 4: Make sure that auditing on the sources is configured correctly and that the events needed for correlation reach the SIEM. Correlation rules expect specific types of events, and the source must generate them. The source often needs additional configuration to produce these events: extended auditing must be enabled, and log output must be set to a specific format.

Enabling extended auditing often increases the volume of the event flow (EPS) sent from the source to the SIEM. The source and the SIEM are usually the responsibility of different departments. As a result, there is always a risk that someone disables the extended audit and the necessary event types stop reaching the SIEM. This problem can be partially caught by monitoring the event flow from each source, or more precisely, changes in its events per second (EPS).
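One simple way to watch for the EPS changes described above is to compare each source's current EPS with a baseline taken while auditing was known to be correct. The sketch below assumes this approach. The 50% drop ratio and the source names are illustrative assumptions, not recommended values.

```python
from statistics import mean


def eps_drop_alerts(baseline_samples: dict, current_eps: dict,
                    drop_ratio: float = 0.5) -> list:
    """Flag sources whose current EPS fell below drop_ratio * their baseline EPS.

    baseline_samples: source -> EPS samples taken while auditing was known to be correct.
    current_eps: source -> EPS measured now (a missing source is treated as 0 EPS).
    """
    alerts = []
    for source, samples in baseline_samples.items():
        if not samples:
            continue
        baseline = mean(samples)
        now = current_eps.get(source, 0.0)
        if baseline > 0 and now < drop_ratio * baseline:
            alerts.append(source)
    return alerts


baseline = {"dc01": [120, 130, 125], "fw01": [900, 950, 1000]}
print(eps_drop_alerts(baseline, {"dc01": 15, "fw01": 940}))  # ['dc01']
```

A sharp drop like the one on `dc01` is typical of an audit policy being switched off. Such a drop is worth investigating even when the source itself is still reachable.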

Step 5: Make sure events are arriving and set up monitoring of the sources. In any infrastructure, the network or the source itself will fail sooner or later. The SIEM then loses its connection to the source and cannot receive events. A passive source writes its logs to a file or database, so no events are lost during the failure: once the connection is restored, the SIEM can collect them. An active source pushes events to the SIEM itself, for example via syslog. If it does not store them elsewhere, the events sent during the failure are lost, and your correlation rule simply will not fire because the event it waits for never arrives.

Digging deeper, even a passive source gives no guarantee that correlation rules will fire correctly after the connection is restored. This applies especially to rules that use time windows. Take the example rule above: (event A from source 1) → (event B from source 2) → (event C from source 3) within 5 minutes. Suppose the failure happens after event B and the connection is restored an hour later. The correlation will not fire, because event C does not arrive within the expected 5 minutes.

With these features in mind, configure monitoring of the sources from which events are collected. It should track source availability, the timeliness of event arrival, and the volume of the collected event stream (EPS).
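The three monitoring criteria above (availability, timeliness, stream volume) can be combined into a single per-source health check. In the minimal sketch below, the `SourceStatus` fields, the thresholds and the check names are hypothetical. How reachability is actually tested depends on the SIEM and the source type.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class SourceStatus:
    name: str
    reachable: bool          # result of a connectivity check (how it is done is SIEM-specific)
    last_event_at: datetime  # arrival time of the newest event from the source
    eps: float               # current events per second


def health_problems(status: SourceStatus, now: datetime,
                    max_silence: timedelta, min_eps: float) -> list:
    """Return the monitoring checks this source currently fails."""
    problems = []
    if not status.reachable:
        problems.append("unreachable")
    if now - status.last_event_at > max_silence:
        problems.append("stale")       # events stopped arriving on time
    if status.eps < min_eps:
        problems.append("low_eps")     # stream volume below the expected floor
    return problems


now = datetime(2024, 1, 1, 12, 0)
fw = SourceStatus("syslog-fw", True, datetime(2024, 1, 1, 11, 30), 0.0)
print(health_problems(fw, now, timedelta(minutes=10), 1.0))  # ['stale', 'low_eps']
```

In this example the source is still reachable but silent. This is exactly the situation in which an active syslog source may already be losing events.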

An alert from this monitoring is the first warning sign that something is degrading all or some of the correlation rules.

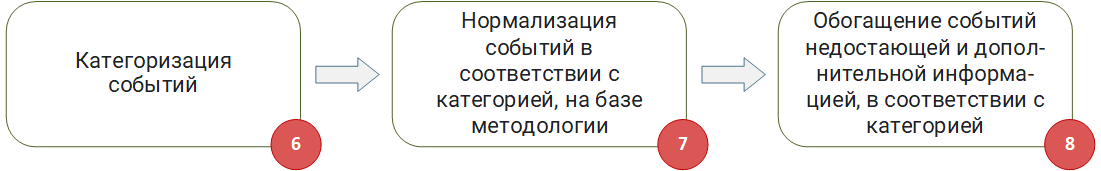

Collecting events necessary for correlation is not enough. Events entering the SIEM must be normalized strictly in accordance with accepted rules. We wrote about the problems of normalization and the formation of the methodology of normalization in a separate article . In general, this block can be described as a fight against garbage in, garbage out ( GIGO ).

Normalization and enrichment of events

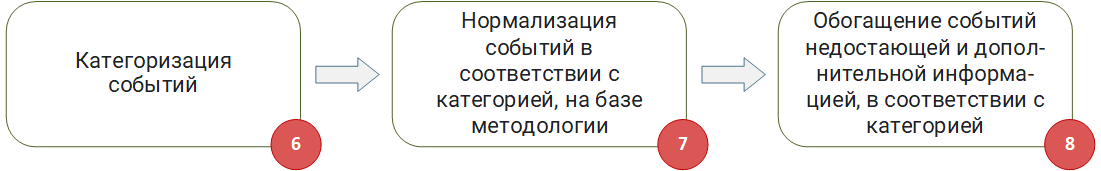

Steps 6 and 7: Categorize events and normalize them according to their category, following the methodology. We will not dwell on these steps here, since we covered them in detail in the article “Methodology for the Normalization of Events”.

Step 8: Enrich events with missing and additional information according to their category. Incoming events often lack information that correlation rules need. For example, an event may contain only the host's IP address, without its FQDN or hostname. Or an event may contain a user ID but no username. In such cases, the missing information should be retrieved from external sources and added to the event. Such sources include databases, domain controllers and other reference directories.

Note that categorization comes first, before normalization. The category defines the rules for normalizing an event. It also specifies which data must be looked up in external sources if the event itself lacks it.
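The idea that the category drives enrichment can be sketched as a per-category list of required fields plus reference tables that can supply a missing field. The category names, field names and lookup tables below are hypothetical stand-ins for the schema, DNS data and directory data a real deployment would use.

```python
# Hypothetical category specs: fields each category needs for correlation.
REQUIRED_FIELDS = {
    "network.connection": ["src_ip", "src_fqdn", "dst_ip", "dst_fqdn"],
    "auth.logon": ["user_id", "user_name", "host_ip", "host_fqdn"],
}

# Hypothetical reference data, e.g. exported from DNS or a domain controller.
IP_TO_FQDN = {"10.0.0.5": "myserver.local"}
USER_ID_TO_NAME = {"1001": "j.smith"}

# Missing field -> (field holding the lookup key, reference table).
ENRICHERS = {
    "src_fqdn": ("src_ip", IP_TO_FQDN),
    "dst_fqdn": ("dst_ip", IP_TO_FQDN),
    "host_fqdn": ("host_ip", IP_TO_FQDN),
    "user_name": ("user_id", USER_ID_TO_NAME),
}


def enrich(event: dict, category: str) -> dict:
    """Fill the fields the category requires but the normalized event lacks."""
    enriched = dict(event)
    for field in REQUIRED_FIELDS.get(category, []):
        if enriched.get(field) is not None or field not in ENRICHERS:
            continue
        key_field, table = ENRICHERS[field]
        value = table.get(enriched.get(key_field))
        if value is not None:
            enriched[field] = value
    return enriched


event = {"user_id": "1001", "host_ip": "10.0.0.5"}
print(enrich(event, "auth.logon"))
# {'user_id': '1001', 'host_ip': '10.0.0.5', 'user_name': 'j.smith', 'host_fqdn': 'myserver.local'}
```

Because the category is known before normalization, the same spec can drive both field mapping and this lookup step.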

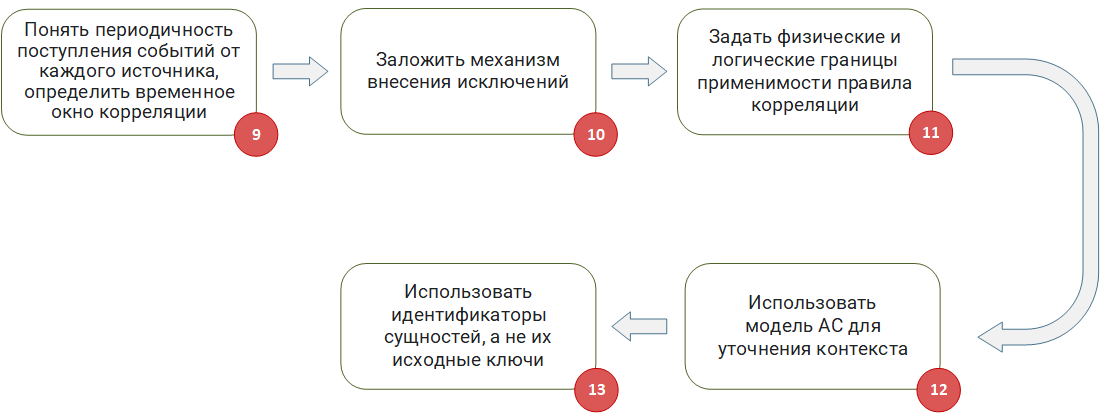

After you have prepared the input data (events) and proceeded to the development of correlation rules, it is necessary to take into account the specifics of the incoming events, the AC itself and its variability. More on this was in the article “System Model as a Context of Correlation Rules” .

Adaptation of correlation rules to the context of the AU

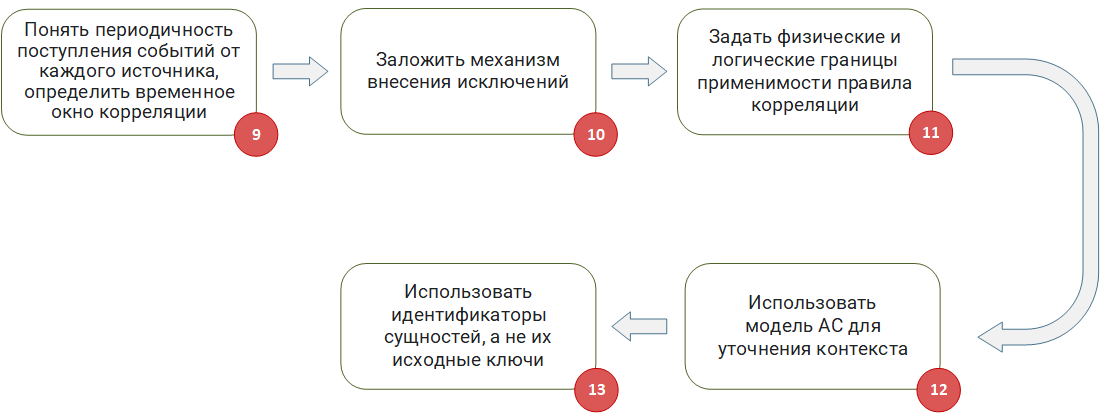

Step 9: Understand how frequently events arrive from each source and determine the correlation time window. Correlation rules often use time windows when they must wait for a certain event to arrive within a given interval. When developing such rules, it is important to account for delays in receiving events. These delays usually have two causes.

First, the source itself may not immediately write events to a database or file, or send them via syslog. This delay should be estimated and taken into account in the rule.

Second, there is a delay in delivering events to the SIEM. For example, events may be collected from a database by a query that runs once every 10 minutes. In that case, a 5-minute correlation window is clearly a poor choice.
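Both delays can be turned into a rough lower bound for a window that is measured in SIEM arrival time. Assume the first event of the pattern arrives with no lag and the last one with the worst lag: the source's own write delay plus, for polled sources, a full polling interval. Under that assumption the window must cover the pattern's duration plus that worst-case lag. The sketch below computes this bound. The source names and delays are illustrative only.

```python
from datetime import timedelta


def min_safe_window(pattern_duration: timedelta,
                    source_delays: dict,
                    poll_intervals: dict) -> timedelta:
    """Lower bound for a correlation window measured in SIEM arrival time.

    Worst case per source: the source writes the event late (source_delays)
    and the event appears just after a collection poll, so it waits a full
    poll interval (poll_intervals; 0 for push sources such as syslog).
    """
    sources = set(source_delays) | set(poll_intervals)
    worst_lag = max(
        (source_delays.get(s, timedelta(0)) + poll_intervals.get(s, timedelta(0))
         for s in sources),
        default=timedelta(0),
    )
    return pattern_duration + worst_lag


window = min_safe_window(
    pattern_duration=timedelta(minutes=5),
    source_delays={"db": timedelta(minutes=1), "fw": timedelta(seconds=5)},
    poll_intervals={"db": timedelta(minutes=10)},
)
print(window)  # 0:16:00
```

With a database polled every 10 minutes, even a 5-minute attack pattern needs a window of at least 16 minutes in arrival time. This is why a 5-minute window is a poor choice here.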

The problem gets worse when a correlation rule must work with events from several sources at once. Delivery times may differ between sources, and in the worst case events arrive out of chronological order. In such a situation, the rule developer must clearly understand which time the SIEM correlates on: the event time or the time the event arrived in the SIEM. Correlating on arrival time is the technically simplest and most common option for near-real-time event processing. However, it only aggravates the problems described above rather than solving them.

If your SIEM correlates on event time, it most likely has event reordering mechanisms that can restore the actual chronology of events.
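Event reordering is commonly done with a buffer and a watermark. Events are held briefly and released in event-time order once they are older than "newest event time seen minus allowed lateness". The minimal sketch below assumes this watermark technique, which is a common approach but not necessarily what any particular SIEM implements. The `ReorderBuffer` class and its parameter are hypothetical, and times are plain numbers such as epoch seconds.

```python
import heapq
from itertools import count


class ReorderBuffer:
    """Release events in event-time order once they fall behind the watermark.

    watermark = newest event time seen so far - allowed_lateness.
    Events older than the watermark on arrival are set aside as late
    instead of being emitted out of order.
    """

    def __init__(self, allowed_lateness: float):
        self.allowed_lateness = allowed_lateness
        self._heap = []                # (event_time, sequence_no, event)
        self._seq = count()            # tie-breaker so dicts are never compared
        self._max_seen = float("-inf")
        self.late = []

    def push(self, event: dict) -> list:
        t = event["time"]
        if t < self._max_seen - self.allowed_lateness:
            self.late.append(event)    # too late for in-order delivery
            return []
        self._max_seen = max(self._max_seen, t)
        heapq.heappush(self._heap, (t, next(self._seq), event))
        return self._release()

    def _release(self) -> list:
        watermark = self._max_seen - self.allowed_lateness
        ready = []
        while self._heap and self._heap[0][0] <= watermark:
            ready.append(heapq.heappop(self._heap)[2])
        return ready


buf = ReorderBuffer(allowed_lateness=5)
print(buf.push({"id": "A", "time": 100}))  # []
print(buf.push({"id": "B", "time": 98}))   # []
print([e["id"] for e in buf.push({"id": "C", "time": 110})])  # ['B', 'A']
```

The allowed lateness trades detection delay against completeness. It should be at least as large as the delivery delays estimated earlier in this step.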

If the time window turns out to be too large for correlation on the stream, use retro correlation. Events already stored in the SIEM database are selected on a schedule and run through the correlation rules.
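Retro correlation can be sketched as a batch job over stored events. It sorts them by event time and looks for the (A) → (B) → (C) chain from Step 2 on the same host within the window. In the sketch below, the event fields (`host`, `type`, `time`) and the greedy one-chain-per-host matching are simplifying assumptions, not a description of any particular SIEM's engine.

```python
from datetime import datetime, timedelta


def find_sequences(events: list, sequence: list, window: timedelta) -> list:
    """Find `sequence` (e.g. ["A", "B", "C"]) on the same host within `window`.

    Events are sorted by event time, as a scheduled query over the SIEM
    database could return them, so arrival order and delivery gaps do not matter.
    Greedy matching: each host tracks one partial chain at a time.
    """
    matches = []
    partial = {}  # host -> events matched so far
    for ev in sorted(events, key=lambda e: e["time"]):
        chain = partial.get(ev["host"], [])
        if chain and ev["time"] - chain[0]["time"] > window:
            chain = []                   # window expired: start over
        if ev["type"] == sequence[len(chain)]:
            chain = chain + [ev]         # next expected step
        elif ev["type"] == sequence[0]:
            chain = [ev]                 # a new first step restarts the chain
        if len(chain) == len(sequence):
            matches.append(chain)
            chain = []
        partial[ev["host"]] = chain
    return matches


t0 = datetime(2024, 1, 1, 12, 0)
stored = [
    {"host": "h1", "type": "C", "time": t0 + timedelta(minutes=4)},  # delivered out of order
    {"host": "h1", "type": "A", "time": t0},
    {"host": "h1", "type": "B", "time": t0 + timedelta(minutes=2)},
    {"host": "h2", "type": "A", "time": t0},
    {"host": "h2", "type": "B", "time": t0 + timedelta(minutes=1)},
    {"host": "h2", "type": "C", "time": t0 + timedelta(minutes=60)},
]
found = find_sequences(stored, ["A", "B", "C"], timedelta(minutes=5))
print([m[0]["host"] for m in found])  # ['h1']
```

Host `h1` matches even though its events were stored out of order, because matching runs on event time. Host `h2` does not match: event C really did occur an hour after B.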

Step 10: Build in a mechanism for exceptions. In the real world, there is always some object with unusual behavior that a particular correlation rule should not process, because doing so produces a false positive. Therefore, mechanisms for adding such objects to exceptions should be built in while the rules are being developed. For example, if your rule works with machine IP addresses, you need a table list of addresses for which the rule should not fire. Likewise, if the rule works with user logins or process names, its logic should check the corresponding table exclusion lists from the start.

This lets you add objects to exceptions, automatically or manually, without rewriting the rule body itself.
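The key property is that the rule reads its exceptions from a list kept outside the rule body. The sketch below illustrates this with a hypothetical port-scan rule. The rule, its threshold and the addresses are illustrative assumptions, and a plain Python set stands in for a SIEM table list.

```python
# Hypothetical exception table list, stored outside the rule body
# (in a real SIEM this would be a table list; here it is a plain set).
excluded_src_ips = {"10.0.0.50"}  # e.g. an approved vulnerability scanner


def port_scan_rule(event: dict, exclusions: set, threshold: int = 100) -> bool:
    """Hypothetical rule: fire when one source touches many distinct ports,
    unless the source address is in the exception list."""
    if event["src_ip"] in exclusions:
        return False
    return event["distinct_dst_ports"] > threshold


scanner = {"src_ip": "10.0.0.50", "distinct_dst_ports": 500}
backup = {"src_ip": "10.0.0.9", "distinct_dst_ports": 300}
print(port_scan_rule(scanner, excluded_src_ips))  # False
print(port_scan_rule(backup, excluded_src_ips))   # True
excluded_src_ips.add("10.0.0.9")  # new exception, rule code unchanged
print(port_scan_rule(backup, excluded_src_ips))   # False
```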

Step 11: Set the physical and logical scope of the correlation rule. When developing a rule, it is important to understand its scope (limits of applicability) from the outset, and whether it has one at all. While working through the logic and debugging the rule, focus on the specifics of that scope. If the rule starts processing data from outside its scope, the probability of false positives increases.

There are two types of scope: physical and logical. The physical scope is the company's network and adjacent networks. The logical scope is a part of the AS, business applications or business processes. Examples of physical scope are the DMZ segment, internal and external subnets, and remote access networks. Examples of logical scope are industrial control systems (ICS), accounting, the PCI DSS segment and the personal data segment. It can also be a specific hardware role, such as domain controllers, access switches or core routers.

Scopes for correlation rules can be specified through table lists, filled manually or automatically. If your company invests time in asset management, all the necessary data may already be in the AS model built in the SIEM. Generating such table lists automatically lets new assets that appear in the company enter the scope dynamically. For example, suppose a rule works exclusively with domain controllers. A new controller added to the domain forest will be recorded in the model and will fall within the rule's scope.

In general, table lists used for exceptions can be regarded as blacklists, and lists that define a rule's scope as whitelists.
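The whitelist/blacklist split can be sketched as follows. The logical scope is regenerated from the asset model by role, the physical scope is a set of network segments, and the rule applies only to in-scope assets that are not excepted. The `Asset` structure, the role names and the subnets are hypothetical; the address checks use Python's standard `ipaddress` module.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    asset_id: str
    ip: str
    roles: frozenset


def logical_scope(assets: list, role: str) -> set:
    """Whitelist regenerated from the asset model: IDs of assets with a role."""
    return {a.asset_id for a in assets if role in a.roles}


def in_physical_scope(ip: str, segments: list) -> bool:
    """True if the address belongs to one of the listed network segments."""
    addr = ipaddress.ip_address(ip)
    return any(addr in ipaddress.ip_network(seg) for seg in segments)


def rule_applies(asset: Asset, scope: set, exceptions: set) -> bool:
    # Whitelist (scope) first, then blacklist (exceptions).
    return asset.asset_id in scope and asset.asset_id not in exceptions


assets = [
    Asset("a1", "10.0.1.10", frozenset({"domain_controller"})),
    Asset("a2", "10.0.1.20", frozenset({"file_server"})),
]
print(logical_scope(assets, "domain_controller"))  # {'a1'}
assets.append(Asset("a3", "10.0.1.11", frozenset({"domain_controller"})))  # new DC
print(sorted(logical_scope(assets, "domain_controller")))  # ['a1', 'a3']
print(in_physical_scope("192.168.100.7", ["192.168.100.0/24"]))  # True
```

Regenerating the scope from the model, rather than editing it by hand, is what lets the new controller `a3` fall under the rule automatically.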

Step 12: Use the AS model to clarify the context. When developing correlation rules that detect malicious actions, it is important to make sure those actions are actually feasible in your infrastructure. Otherwise, a rule that detects a potential attack will fire falsely, because that type of attack may simply not apply to your infrastructure. Here is an example:

- Suppose we have a correlation rule that detects remote RDP connections to servers.

- The firewall reports a connection attempt to TCP port 3389 on the server myserver.local.

- The rule fires, and you start analyzing a potential high-priority incident.

During the investigation, you quickly find out that port 3389 on myserver.local is closed and has never been opened by any service, and that the server runs Linux. This is a false positive that has cost you investigation time.

Another example: an IPS reports a signature match for an attempt to exploit CVE-2017-0144. The investigation then shows that the corresponding patch is installed on the targeted machine, so there is no need to respond to the incident with the highest priority.

Using data from the AS model helps mitigate this problem.
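Both examples come down to checking the target asset's context before raising the incident's priority. In the sketch below, the `AssetContext` fields are a hypothetical slice of an AS model. The patch is identified by the bulletin ID MS17-010, which fixes CVE-2017-0144; a real model might record KB numbers instead.

```python
from dataclasses import dataclass, field


@dataclass
class AssetContext:
    os_family: str                                   # e.g. "windows", "linux"
    open_ports: set = field(default_factory=set)     # from scans or inventory
    installed_patches: set = field(default_factory=set)


def rdp_attempt_relevant(ctx: AssetContext) -> bool:
    """An RDP attempt is worth a high-priority incident only if the target can accept RDP."""
    return ctx.os_family == "windows" and 3389 in ctx.open_ports


def exploit_priority(ctx: AssetContext, fixing_patch: str) -> str:
    """Downgrade an exploit-attempt alert if the fixing patch is already installed."""
    return "low" if fixing_patch in ctx.installed_patches else "high"


myserver = AssetContext(os_family="linux", open_ports={22})
print(rdp_attempt_relevant(myserver))  # False

patched = AssetContext("windows", {445}, {"MS17-010"})
print(exploit_priority(patched, "MS17-010"))  # low
```

These checks only help if the model is kept current. An outdated port or patch list would silently suppress real incidents.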

Step 13: Use entity identifiers, not their source keys. As described in the article “System Model as a Context of Correlation Rules”, an asset's IP address, FQDN and even MAC address can change. So if you use raw asset keys in a correlation rule or table list, you are quite likely to get false positives for a fairly trivial reason. For example, the DHCP server may simply have assigned that IP to another machine.

If your SIEM can identify assets, track their changes and let you work with their identifiers, use the identifiers rather than the asset's original keys.
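The DHCP case can be illustrated with an address history that resolves an (IP, event time) pair to a stable asset ID. Rules and table lists then refer to the ID, not the address. The `IpHistory` class below is a hypothetical stand-in for a SIEM's asset identification mechanism. It uses the standard `bisect` module to find which lease was active at the event time.

```python
from bisect import bisect_right
from datetime import datetime
from typing import Optional


class IpHistory:
    """Hypothetical asset-identification store: which asset held an IP and when."""

    def __init__(self) -> None:
        self._leases = {}  # ip -> sorted list of (start_time, asset_id)

    def record(self, ip: str, since: datetime, asset_id: str) -> None:
        leases = self._leases.setdefault(ip, [])
        leases.append((since, asset_id))
        leases.sort()

    def asset_at(self, ip: str, when: datetime) -> Optional[str]:
        """Return the asset that held `ip` at `when`, or None if unknown."""
        leases = self._leases.get(ip, [])
        starts = [since for since, _ in leases]
        i = bisect_right(starts, when)
        return leases[i - 1][1] if i else None


history = IpHistory()
history.record("10.0.0.20", datetime(2024, 1, 1, 8), "ws-accounting-01")
history.record("10.0.0.20", datetime(2024, 1, 2, 8), "ws-hr-07")  # DHCP reassigned the IP
print(history.asset_at("10.0.0.20", datetime(2024, 1, 1, 12)))  # ws-accounting-01
print(history.asset_at("10.0.0.20", datetime(2024, 1, 2, 9)))   # ws-hr-07
```

An exception written for `ws-accounting-01` keeps applying to that workstation and does not leak to `ws-hr-07` after the address moves.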

Forming an agreement on triggers

As we approach the final block of creating a correlation rule, recall that the result of a rule is an incident registered in the SIEM, to which responsible specialists must respond. Incident response is outside the scope of this series. Still, part of the information about an incident is already defined when the corresponding correlation rule is created.

Next, we consider the key points to take into account when setting the parameters for rule triggering and incident generation.

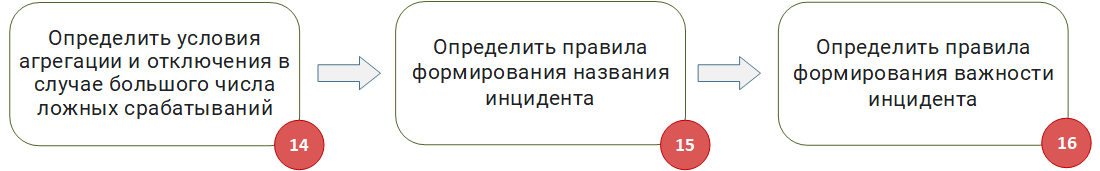

Step 14: Define aggregation conditions and conditions for disabling a rule when it produces a large number of false positives. Rules can produce false positives both during debugging and in operation (especially if you do not follow this methodology :)). One or two such triggers per day are fine, but what if a single rule fires thousands or tens of thousands of times? Of course, this means the rule needs refinement. However, you must make sure that in such situations the mass of false positives:

- does not affect SIEM performance;

- does not bury genuinely important incidents. There is even a separate type of attack aimed at hiding the main malicious activity behind a mass of false activity.

Problems of this kind can be solved by setting two kinds of conditions when creating a correlation rule: conditions for incident aggregation and conditions for emergency shutdown of the rule. They can be set system-wide or for each rule separately.

Incident aggregation avoids creating millions of similar incidents by "sticking" each new incident to an existing identical one. In extreme cases, even aggregated incidents can create a significant load. For such cases, it is recommended to configure automatic shutdown of the correlation rule when it exceeds a specified number of triggers per unit of time (minute, hour, day).
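Aggregation and emergency shutdown can be combined in one small gate in front of incident creation, sketched below. Identical triggers increment a counter on an existing incident. A rule that exceeds `max_fires` triggers within a sliding window of `per_seconds` is disabled. The class name, the choice of (rule, asset) as the identity key and the thresholds are all hypothetical.

```python
from collections import deque


class IncidentGate:
    """Aggregates identical incidents and disables a rule that fires too often.

    Identity key: (rule, asset), a hypothetical choice for "identical".
    Shutdown: more than max_fires triggers within per_seconds.
    """

    def __init__(self, max_fires: int, per_seconds: float):
        self.max_fires = max_fires
        self.per_seconds = per_seconds
        self.incidents = {}   # (rule, asset) -> aggregated incident
        self._fires = {}      # rule -> deque of recent trigger times
        self.disabled = set()

    def on_fire(self, rule: str, asset: str, t: float) -> None:
        if rule in self.disabled:
            return
        recent = self._fires.setdefault(rule, deque())
        recent.append(t)
        while recent and recent[0] <= t - self.per_seconds:
            recent.popleft()  # forget triggers outside the sliding window
        if len(recent) > self.max_fires:
            self.disabled.add(rule)  # emergency shutdown; needs human review
            return
        key = (rule, asset)
        if key in self.incidents:
            self.incidents[key]["count"] += 1  # "stick" to the existing incident
            self.incidents[key]["last_seen"] = t
        else:
            self.incidents[key] = {"count": 1, "first_seen": t, "last_seen": t}


gate = IncidentGate(max_fires=100, per_seconds=60)
for i in range(150):
    gate.on_fire("noisy_rule", "host1", i * 0.2)
print(gate.incidents[("noisy_rule", "host1")]["count"])  # 100
print("noisy_rule" in gate.disabled)                      # True
```

A burst of 150 identical triggers yields one incident with a count of 100 and a disabled rule, instead of 150 separate incidents.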

Step 15: Define rules for naming incidents. This step is often neglected, especially when the rules are not developed in-house, for example when a third-party company deploys the SIEM and develops the rules. The name of a correlation rule, and of the incidents it generates, must be short and clearly convey the essence of that specific rule.

If more than one person in your company works with incidents and correlation rules, it is recommended to develop naming conventions. They must be understood and accepted by the entire team working with the SIEM.

Step 16: Define rules for incident importance. At the final stage of incident creation, most SIEM solutions let you set the incident's importance. Some even calculate importance automatically based on the context of the incident and the objects involved.

If your SIEM only calculates incident importance automatically, find out what the calculation is based on and which formula it uses. For example, if importance is derived from the significance of the assets involved in the incident, the company needs to take asset management seriously in advance.
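To see why asset significance data matters, consider a hypothetical formula: the rule's base severity multiplied by the highest criticality among the involved assets, with thresholds mapping the score to a level. The 1 to 5 scales, the default criticality and the thresholds below are assumptions for illustration only. They are not a recommended formula or any product's built-in calculation.

```python
def incident_importance(rule_severity: int, asset_criticality: list,
                        default_criticality: int = 3) -> int:
    """Hypothetical formula: rule base severity (1-5) times the highest
    criticality (1-5) among the involved assets; unknown assets get a default."""
    return rule_severity * max(asset_criticality, default=default_criticality)


def importance_level(score: int) -> str:
    # Hypothetical thresholds on the 1-25 scale.
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


print(importance_level(incident_importance(4, [2, 5])))  # high
print(importance_level(incident_importance(4, [2])))     # medium
```

The same rule trigger changes level depending only on the recorded asset criticality. Wrong or missing asset data therefore directly distorts incident priorities.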

If you set incident importance manually, it is recommended to develop a formula for calculating it that takes into account at least the following:

As with incident naming, it is important that all stakeholders clearly share the same understanding of the rules that determine incident importance.

To conclude the series: in my opinion, it is possible to create correlation rules that work out of the box. The solution lies in combining organizational and technical approaches. Some things the SIEM itself must be able to do; others must be done, and known, by its experts.

Summing up:

Methodology for developing correlation rules working out of the box

Many thanks to everyone who made it through the entire series, or at least read this far. If you have any questions, send me a private message or ask in the comments. I will be glad to discuss them.

Cycle of articles:

SIEM depths: out-of-box correlations. Part 1: Pure marketing or unsolvable problem?

SIEM depths: out-of-box correlations. Part 2. Data scheme as a reflection of the “world” model

SIEM depths: out-of-box correlations. Part 3.1. Event categorization

SIEM depths: out-of-box correlations. Part 3.2. Event Normalization Methodology

SIEM depths: out-of-box correlations. Part 4. System model as a context of correlation rules

SIEM depths: out-of-box correlations. Part 5. Methodology for developing correlation rules ( This article )

Image: Software Marketing

All key points of the article are available in the output , there this methodology is presented in the form of a graphic scheme .

Briefly about what happened in previous articles: described how a set of fields of a normalized event should look like - a schema ; what event categorization system to use; using the categorization system and scheme to unify the process of event normalization. We also reviewed the context of the execution of the correlation rules and disassembled what SIEM should know about the Automated System (AS) for which it observes, and why.

')

All the above approaches and reasoning are the blocks that form the methodology for developing correlation rules. It's time to bring them together and look at the whole picture.

The whole methodology for developing correlation rules consists of four blocks:

- preparation of sources and environments;

- normalization of events and their enrichment;

- adaptation of correlation rules to the context of the AU;

- the formation of a response agreement.

Preparation of sources and environments

Correlation rules operate on events that generate sources. In this regard, it is extremely important that the sources required for correlation rules are present in the AU and are correctly configured.

Preparation of sources and environments

Step 1 : Consider the general logic of the rules and understand what sources of events are needed. If you are developing from scratch or take a ready-made Sigma correlation rule , then you need to understand on the basis of events from which sources it will work.

Step 2 : Make sure that all the necessary sources are in the company and collection is possible from them. It is possible that the rule operates with a chain of events from several sources of the form (event A from source 1) - (event B from source 2) - (event C from source 3) for 5 minutes. If your company does not have at least one source, this rule becomes useless, because it will never work. You need to understand whether it is possible in principle to collect events from the necessary sources and whether your SIEM can provide it. For example, the source writes events to the file, but the file is encrypted, or a non-standard database is used at the source for storage, access to which cannot be provided through the regular ODBC / JDBC driver.

Step 3 : Connect the sources to the SIEM. However trite it may sound, but at this step it is necessary to implement the collection of events itself. There are often many problems. For example, organizational problems, when IT management categorically prohibits connecting to mission critical systems. Or technical, when without additional settings, the SIEM agent (SmartConnector, Universal Forwarder) simply kills the source when collecting events, leading to a denial of service. This can often be observed when connecting highly loaded DBMS to SIEM.

Step 4 : Ensure that the audit on the sources is configured correctly, the events needed for correlation are received in the SIEM. Correlation rules expect certain types of events. They must generate a source. It often happens that in order to generate the necessary events for the rules, the source must be additionally configured: an extended audit is enabled and the log output is configured in a specific format.

The inclusion of an extended audit often affects the volume of event flow (EPS) that enters the SIEM from the source. Due to the fact that the source itself and the SIEM are in the area of responsibility of different departments, there is always a risk that the expanded audit may be disabled and, as a result, the necessary types of events will stop coming to the SIEM. In part, this problem can be monitored by monitoring the flow of events for each source, and more precisely, by monitoring changes in Events per Second (EPS).

Step 5 : Make sure the events are on and set up monitoring of the sources. In any infrastructure, sooner or later there are failures in the network or the source itself. At this point, the SIEM loses communication with the source and cannot receive events. If the source is passive and writes its logs to a file or database, events will not be lost if it fails and when the connection is restored, SIEM can get them. If the source is active and sends events to SIEM itself, for example, via syslog, without saving them additionally, then if an error occurs, the events will be lost, and your correlation rule simply will not work, since it will not wait for the desired event. After digging deeper, you can see that, even working with a passive source, when you restore communication with it after a failure, there are no guarantees that the correlation rules will work, especially those that operate with time windows. Consider the rule example described above: (event A from source 1) - (event B from source 2) - (event C from source 3) for 5 minutes. If the crash happens after the event B and the connection is restored in an hour, the correlation will not work, because in the expected 5 minutes the event C will not come.

Remembering these features, you should configure monitoring of the sources from which events are collected. This monitoring should monitor the availability of sources, the timeliness of the arrival of events from them, the power of the stream of collected events (EPS).

The activation of the monitoring system is the first call, which indicates the appearance of a negative factor affecting the performance of all or part of the correlation rules.

Normalization of events and their enrichment

Collecting events necessary for correlation is not enough. Events entering the SIEM must be normalized strictly in accordance with accepted rules. We wrote about the problems of normalization and the formation of the methodology of normalization in a separate article . In general, this block can be described as a fight against garbage in, garbage out ( GIGO ).

Normalization and enrichment of events

Step 6 and Step 7 : Categorizing the events and normalizing the events according to the category, based on the methodology. We will not dwell on them in detail, since we examined these steps in detail in the article “Methodology for the Normalization of Events” .

Step 8 : Enrich events with missing and additional information, according to category. Often, incoming events do not always contain information in the amount necessary for the operation of correlation rules. For example, an event contains only the IP address of the host, but it does not contain information about its FQDN or Hostname. Another example: an event contains a user ID, but there is no username in the event. In this case, the necessary information should be extracted from external sources - databases, domain controllers or other reference books and added to the event.

It is important to note that the categorization of events is at the very beginning - before normalization. In addition to the fact that a category defines the rules for normalizing an event, it also specifies the list of data that must be searched for in external sources if they are not in the event itself.

Adaptation of correlation rules to the context of the AU

After you have prepared the input data (events) and proceeded to the development of correlation rules, it is necessary to take into account the specifics of the incoming events, the AC itself and its variability. More on this was in the article “System Model as a Context of Correlation Rules” .

Adaptation of correlation rules to the context of the AU

Step 9 : Understand the frequency of receipt of events from each source, determine the time window of the correlation. Quite often, time windows are used in the correlation rules when it is necessary to wait for a certain event to arrive during a given time interval. When developing such rules it is important to consider the delay in receiving events. They are usually caused by two factors.

First , the source itself may not immediately write events to the database, to a file, or send it via syslog. The time of this delay should be evaluated and taken into account in the rule.

Secondly , there is a delay in the delivery of events in the SIEM. For example, the collection of events from the database is configured so that the event request is performed 1 time per 10 minutes, naturally, the correlation window at 5 minutes in this situation is not the best solution.

The problem is exacerbated when it is necessary to develop a correlation rule that works with events from several sources at once. In this case, it is important to understand that the delivery time may be different. In the worst case, the events will come in random order in violation of the chronology. In such a situation, the correlation rule developer needs to clearly understand at what time the SIEM implements the correlation (in event time or event arrival time in SIEM). I note that the correlation in the time of receipt of events is the most technically simple and common variant for processing events in the pseudo-real time mode. However, this option only exacerbates the problems described above, and does not solve them.

If your SIEM provides a correlation in event time, then, most likely, there are event reordering mechanisms that can restore the actual chronology of events.

In the case when you understand that the time window is too large to do the correlation on the stream, you must use the retro correlation mechanism, in which already saved events are selected from the SIEM database on a schedule and run through the correlation rules.

Step 10 : Lay the mechanism for making exceptions. In the real world, there is always an object with a special behavior that should not be handled by a specific correlation rule, since this leads to a false positive. Consequently, at the stage of developing the rules, mechanisms should be laid down for introducing such objects into exceptions. For example, if your rule works with IP addresses of machines, you need a tabular list where you can add addresses for which the rule will not work. Similarly, if the rule works with user logins or process names, it is necessary to pre-set the rules for working with table exclusion lists in the logic of the rule.

This approach will allow you to automatically or manually make objects into exceptions, without rewriting the rule body itself.

Step 11 : Set the physical and logical limits of applicability of the correlation rule. When developing a correlation rule, it is important to initially understand the limits of applicability (scope) of the rule, and whether they exist at all. Working through the logic and debugging the rule, it is necessary to focus on the specifics of this area. If a rule begins to work with data that is outside the scope of this area, the probability of false positives increases.

There are two types of scope: physical and logical. The physical scope is the company's network and adjacent networks, and the logical scope is the part of the AU, business applications or business processes. Examples of the physical area: DMZ segment, internal and external subnets, remote access networks. Examples of logical scope of rules: ACS TP, accounting, PCI DSS segment, PDN segment or just specific hardware roles — domain controllers, access switches, core routers.

It is possible to specify scopes for correlation rules via table lists. They can be filled both manually and automatically. If in your company you find time for asset management (Asset management), then all the necessary data may already be contained in the AS model created in the SIEM. The automatic generation of such tabular lists allows you to dynamically include in the scope of the new assets that appear in the company. For example, if you had a rule that worked exclusively with domain controllers, adding a new controller to the domain forest will be fixed in the model and fall within the scope of your rule.

In general, the table lists used for exceptions can be regarded as black, and the lists that are responsible for the scope of the rules, as white lists.

Step 12 : Use the AC model to clarify the context. In the process of developing correlation rules that detect malicious actions, it is important to make sure that they can be implemented. If this is not taken into account, the triggering of the rule that revealed a potential attack will be false, since this type of attack may simply not apply to your infrastructure. Let me explain with an example:

- Suppose we have a correlation rule that reveals remote connections via RDP to servers.

- Firewall brings a connection attempt event to TCP port 3389 of the server myserver.local.

- The rule is triggered and you begin to disassemble a potential high priority incident.

During the investigation, you quickly find out that myserver.local 3389 is closed and never opened by any service and there is Linux. This is a false positive of the rule, which has taken away your time to investigate.

Another example: IPS sends a signature triggering event for attempting to exploit the vulnerability CVE-2017-0144, but during the investigation it turns out that a corresponding patch is installed on the attacked machine and there is no need to respond to such an incident with the highest priority.

Using data from the AU model will help level this problem.

Step 13 : Use entity identifiers, not their source keys. As already described in the article “System Model as a Context of Correlation Rules”, the IP address, FQDN, and even the asset MAC can change. Thus, if you use initial asset identifiers in a correlation rule or a tabular list, then there is a high chance to get false positives for a fairly trivial reason, for example, the DHCP server simply issued this IP to another machine.

If your SIEM can identify assets, track changes to them, and operate on their identifiers, use those identifiers rather than the original keys of the asset.
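One way to map a raw IP address to a stable asset identifier is to keep the DHCP lease history and resolve the IP as of the event's timestamp. The sketch below assumes lease records are already available as (IP, lease start time, asset ID) and, for brevity, ignores lease expiry; the `IpResolver` class is hypothetical and stands in for whatever asset identification mechanism your SIEM provides.

```python
import bisect
from typing import Optional


class IpResolver:
    """Hypothetical helper: resolves an IP to a stable asset ID as of a given time."""

    def __init__(self):
        self._leases = {}  # ip -> sorted list of (lease_start, asset_id)

    def add_lease(self, ip: str, start: int, asset_id: str) -> None:
        # Keep each IP's lease history sorted by start time.
        bisect.insort(self._leases.setdefault(ip, []), (start, asset_id))

    def resolve(self, ip: str, ts: int) -> Optional[str]:
        leases = self._leases.get(ip, [])
        starts = [start for start, _ in leases]
        # Find the last lease that started at or before the event time.
        i = bisect.bisect_right(starts, ts) - 1
        return leases[i][1] if i >= 0 else None


resolver = IpResolver()
resolver.add_lease("10.0.0.5", 1000, "asset-1")
resolver.add_lease("10.0.0.5", 5000, "asset-2")  # DHCP reassigns the IP

print(resolver.resolve("10.0.0.5", 3000))  # asset-1
print(resolver.resolve("10.0.0.5", 6000))  # asset-2
```

A rule or table list that stores `asset-1` keeps pointing at the right machine after the reassignment, whereas one that stores `10.0.0.5` silently starts matching a different host.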

Forming an agreement on triggers

As we approach the final block of creating a correlation rule, recall that the result of a rule is an incident registered in the SIEM, and the responsible specialists must respond to it. Although the incident response process is outside the scope of this series, note that part of the information about an incident is already defined when the corresponding correlation rule is created.

Below are the main points to take into account when configuring the trigger parameters of a correlation rule and the generation of incidents.


Step 14: Define aggregation and shutdown conditions in case of a large number of false positives. Rules can produce false positives both during debugging and in operation, especially if you do not follow this methodology :). One or two such triggers per day are tolerable, but what if a single rule fires thousands or tens of thousands of times? Of course, this means the rule must be refined. However, you also need to make sure that in such situations a massive flood of false positives:

- Does not affect SIEM performance.

- Does not bury really important incidents. There is even a separate class of attacks aimed at hiding the main malicious activity behind a mass of decoy activity.

Such problems can be solved by setting conditions for incident aggregation and for emergency shutdown of the rule when creating a correlation rule, either at the level of the entire system or for each rule separately.

The incident aggregation mechanism avoids creating millions of similar incidents by “attaching” new incidents to an existing one, provided they are identical. In extreme cases, when even aggregated incidents create a significant load, it is recommended to configure automatic shutdown of the correlation rule when it exceeds a specified number of triggers per unit of time (minute, hour, day).
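The following Python sketch shows one possible combination of the two mechanisms: triggers with the same aggregation key are attached to a single incident, and the rule disables itself if it fires more than a given number of times within a sliding time window. `RuleGuard`, the key structure, and the thresholds are hypothetical; real SIEMs configure this through their own settings.

```python
from collections import deque


class RuleGuard:
    """Hypothetical per-rule guard: incident aggregation plus emergency shutdown."""

    def __init__(self, max_triggers: int, window_seconds: int):
        self.max_triggers = max_triggers
        self.window = window_seconds
        self.recent = deque()   # timestamps of triggers inside the current window
        self.incidents = {}     # aggregation key -> aggregated incident
        self.disabled = False

    def on_trigger(self, ts: float, key: tuple) -> None:
        if self.disabled:
            return
        # Slide the window: keep only triggers in (ts - window, ts].
        self.recent.append(ts)
        while self.recent and self.recent[0] <= ts - self.window:
            self.recent.popleft()
        if len(self.recent) > self.max_triggers:
            self.disabled = True  # emergency shutdown; operators should be notified
            return
        incident = self.incidents.get(key)
        if incident is None:
            self.incidents[key] = {"first_seen": ts, "last_seen": ts, "count": 1}
        else:
            # Identical trigger: attach it to the existing incident.
            incident["last_seen"] = ts
            incident["count"] += 1


guard = RuleGuard(max_triggers=1000, window_seconds=3600)
for t in range(500):
    guard.on_trigger(t, ("rdp_to_server", "a-7"))

print(len(guard.incidents), guard.incidents[("rdp_to_server", "a-7")]["count"])  # 1 500
```

Aggregation reduces the number of incidents analysts see, while the shutdown threshold protects SIEM performance when even aggregation is not enough.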

Step 15: Define rules for naming incidents. This step is often neglected, especially when the rules are not developed in-house, for example, when a third-party company is responsible for deploying the SIEM and developing the rules. The name of a correlation rule, as well as of the incident it generates, must be short and clearly reflect the essence of that specific rule.

If more than one person works with incidents and correlation rules in your company, it is recommended to develop naming conventions. They must be understood and accepted by the entire team working with the SIEM.
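Naming conventions are easier to enforce when they can be checked automatically. As an illustration only, the sketch below validates names against a hypothetical convention `<Category>: <what happened> (<object>)` with an 80-character limit; your team's actual convention will differ.

```python
import re

# Hypothetical team convention: "<Category>: <what happened> (<object>)".
NAME_PATTERN = re.compile(r"^[A-Z][A-Za-z ]+: [^()]+ \([^()]+\)$")
MAX_LEN = 80  # hypothetical length limit to keep names short


def check_incident_name(name: str) -> list:
    """Return a list of convention violations; an empty list means the name is valid."""
    problems = []
    if len(name) > MAX_LEN:
        problems.append(f"longer than {MAX_LEN} characters")
    if not NAME_PATTERN.match(name):
        problems.append("does not match '<Category>: <what happened> (<object>)'")
    return problems


print(check_incident_name("Remote access: RDP connection to server (myserver.local)"))  # []
```

Such a check can run wherever rules are reviewed before deployment, so that names stay consistent regardless of who wrote the rule.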

Step 16: Define rules for determining incident importance. Most SIEM solutions let you set an incident's importance and significance at the final stage of its creation. Some solutions even calculate importance automatically, based on the context of the incident and the objects involved in it.

If your SIEM supports only automatic calculation of incident importance, find out what inputs and what formula it uses. For example, if importance is calculated from the significance of the assets involved in the incident, the company needs to take asset management seriously in advance.

If you set incident importance manually, it is recommended to develop a calculation formula that takes into account at least the following:

- The importance of the scope in which the rule operates. For example, an incident in the Mission critical systems zone may be more critical than exactly the same incident in the Business important systems zone.

- The importance of assets and user accounts involved in the incident.

- How often the incident recurs in the company.
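A minimal sketch of such a formula, assuming hypothetical zone weights, significance values from 0 to 1 for assets and accounts, and the assumption that repeated incidents should raise importance. The weights are placeholders for the team to agree on, not recommended values.

```python
# Hypothetical zone weights; agree on real values within the team.
ZONE_WEIGHT = {"mission_critical": 1.0, "business_important": 0.6, "other": 0.3}


def incident_importance(zone: str, asset_significance: list,
                        account_significance: list, repeats_last_30d: int) -> float:
    """Return an importance score in [0, 1] using hypothetical weights."""
    zone_w = ZONE_WEIGHT.get(zone, ZONE_WEIGHT["other"])
    # The most significant asset or account involved drives this component.
    objects_w = max(asset_significance + account_significance, default=0.0)
    # Assumption: repetition raises importance, capped so it cannot dominate.
    repeat_w = min(repeats_last_30d, 10) / 10
    return round(0.4 * zone_w + 0.4 * objects_w + 0.2 * repeat_w, 2)


# The same incident scores higher in the Mission critical zone.
print(incident_importance("mission_critical", [0.9], [0.5], 0))
print(incident_importance("business_important", [0.9], [0.5], 0))
```

Whatever form the formula takes, writing it down explicitly is what lets everyone who handles incidents interpret importance the same way.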

As with incident naming, it is important that all stakeholders clearly and uniformly understand the rules that determine incident importance.

Findings

To conclude this series of articles: in my opinion, it is possible to create correlation rules that work out of the box. The way to get there is a fusion of organizational and technical approaches: some things the SIEM itself must be able to do, while others must be done and known by its experts.

Summing up:

- The methodology consists of the following blocks:

  - Preparation of sources and environments.

  - Normalization of events and their enrichment.

  - Adaptation of correlation rules to the context of the AS.

  - Forming an agreement on triggers.

- Each block has organizational and technical components.

- From a technical point of view, the described blocks affect almost all the core functions of a SIEM, from event collection to incident generation.

- Virtually the entire technical component of this methodology can be provided by existing foreign SIEM solutions, as well as by some domestic (Russian) ones.

- The steps of this methodology were discussed and justified in more detail in the previous articles of the series. Links to them are given at the end of this article.

Methodology for developing correlation rules that work out of the box

Many thanks to everyone who made it through the entire series, or at least read this far. If you have any questions, send me a private message or ask them in the comments. I will be glad to discuss them.

Series of articles:

SIEM depths: out-of-box correlations. Part 1: Pure marketing or unsolvable problem?

SIEM depths: out-of-box correlations. Part 2. Data scheme as a reflection of the “world” model

SIEM depths: out-of-box correlations. Part 3.1. Event categorization

SIEM depths: out-of-box correlations. Part 3.2. Event Normalization Methodology

SIEM depths: out-of-box correlations. Part 4. System model as a context of correlation rules

SIEM depths: out-of-box correlations. Part 5. Methodology for developing correlation rules (this article)

Source: https://habr.com/ru/post/446212/
