Machine learning without Python, Anaconda and other reptiles

Well, no, of course I'm not entirely serious. There has to be a limit to how far a subject can be simplified. But for the first steps, for understanding the basic concepts and getting into the topic quickly, it may be permissible. As for what to call this material (options: “Machine learning for dummies”, “Data analysis from the cradle”, “Algorithms for the little ones”), we can discuss that at the end.

To the point. I wrote several MS Excel applications that visualize the processes taking place in various machine learning methods for data analysis. Seeing is believing, after all, as the bearers of the culture that developed most of these methods like to say. (Not all of them, by the way: the very powerful support vector machine (SVM) method was invented by our compatriot Vladimir Vapnik at the Institute of Control Sciences in Moscow. In 1963, no less! He now teaches and works in the United States, though.)

Three files for review

1. K-means clustering

Problems of this type belong to “unsupervised learning” (literally, “learning without a teacher”): we need to divide the source data into a number of categories fixed in advance, but we have no “correct answers” at all and must extract them from the data themselves. The classic problem of distinguishing species of iris flowers (Ronald Fisher, 1936!), which is considered the starting point of this field, is exactly of this nature.

The method is quite simple. We have a set of objects represented as vectors (sets of N numbers). For irises, these are sets of 4 numbers that characterize a flower: the length and width of the outer and inner lobes of the perianth, i.e. of the sepals and petals (Iris Fisher - Wikipedia). The ordinary Euclidean (Cartesian) distance is chosen as the measure of proximity between objects.
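For reference, the Euclidean distance between objects $x = (x_1, \dots, x_N)$ and $y = (y_1, \dots, y_N)$ is given by the standard formula (N = 4 for irises):

$$\rho(x, y) = \sqrt{\sum_{i=1}^{N} (x_i - y_i)^2}$$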

Next, cluster centers are chosen, arbitrarily (or not, see below), and the distance from each object to each cluster center is computed. At this iteration step, each object is assigned to its nearest center. Then the center of each cluster is moved to the arithmetic mean of its members' coordinates (by analogy with physics, this is also called the “center of mass”), and the procedure is repeated.
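The loop just described is known as Lloyd's algorithm. Below is a minimal pure-Python sketch of it, not a transcription of the Excel macros; the function name `kmeans`, the seeded random choice of initial centers among the data points, and the rule of leaving a center in place if its cluster empties are illustrative assumptions.

```python
import math
import random


def kmeans(points, k, max_iter=100, seed=0):
    """Minimal k-means (Lloyd's algorithm) on a list of equal-length tuples.

    Initial centers are k data points picked at random; as long as the
    chosen points are distinct, no cluster starts out empty.
    Returns (centers, labels), where labels[i] is the cluster of points[i].
    """
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]
    labels = [None] * len(points)
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest center (Euclidean distance).
        new_labels = [
            min(range(k), key=lambda j: math.dist(p, centers[j]))
            for p in points
        ]
        if new_labels == labels:
            break  # memberships did not change, so the centers have stabilized
        labels = new_labels
        # Update step: move each center to the mean ("center of mass") of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old center if a cluster happens to become empty
                centers[j] = [sum(c) / len(members) for c in zip(*members)]
    return centers, labels
```

For example, with two well-separated groups of three points each, `kmeans([(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)], 2)` puts the first three points in one cluster and the last three in the other, with centers at (1/3, 1/3) and (31/3, 31/3).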

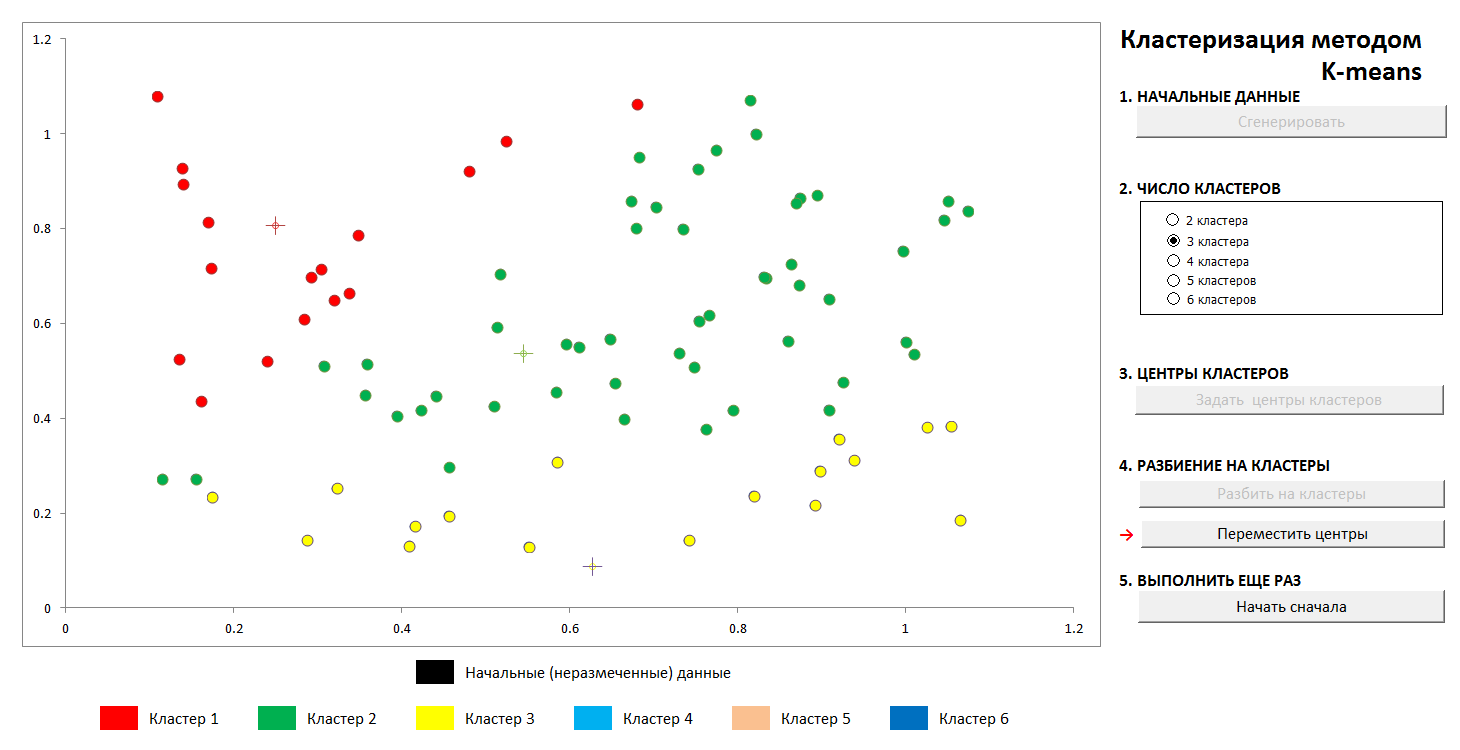

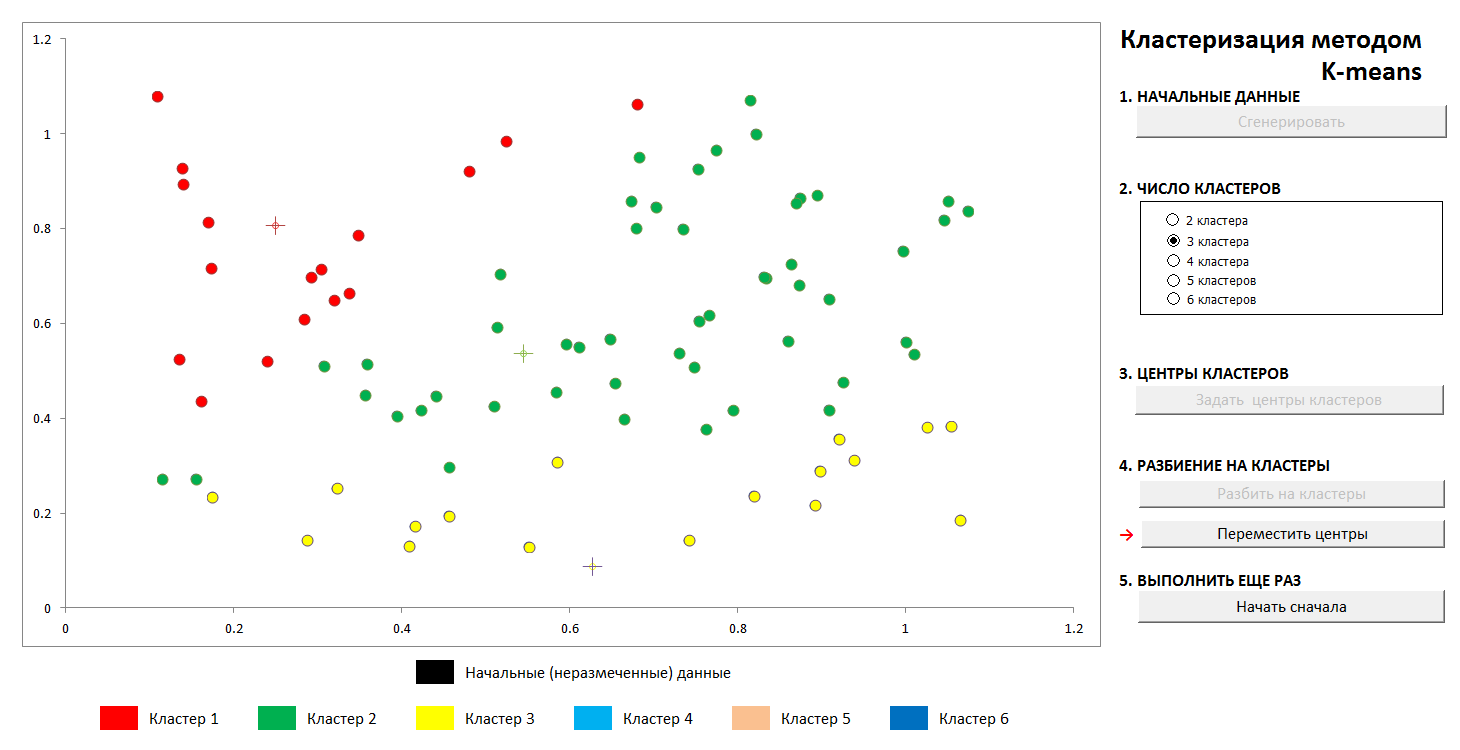

The process converges quickly. In two-dimensional pictures it looks like this:

1. The initial random distribution of points on the plane, and the number of clusters

2. Placing the cluster centers and assigning the points to their clusters

3. Moving the cluster centers and recomputing point membership until the centers stabilize. The trajectory of each center to its final position is visible.

At any moment you can set new cluster centers (without generating a new distribution of points!) and see that the partitioning is not always unique. Mathematically, this means that we find a local rather than a global minimum of the function being optimized (the sum of squared distances from the points to the centers of their clusters). This problem can be addressed either by choosing the initial cluster centers non-randomly, or by trying out many possible initial centers (it is sometimes advantageous to place them exactly at data points; then at least there is a guarantee that no cluster will be empty). In any case, a finite set of points has only finitely many partitions, so the exact minimum always exists and could in principle be found by exhaustive search.
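In formula form, with $k$ clusters, $C_j$ the set of points currently assigned to cluster $j$ and $\mu_j$ its center, the function being minimized is shown below. Neither the assignment step nor the center update can increase $J$, which is why the process converges, though possibly to a local minimum that depends on the starting centers:

$$J = \sum_{j=1}^{k} \sum_{x \in C_j} \lVert x - \mu_j \rVert^2, \qquad \mu_j = \frac{1}{|C_j|} \sum_{x \in C_j} x$$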

You can play with this file via this link (don't forget to enable macros; the files have been checked for viruses).

Wikipedia description of the method: k-means clustering

2. Polynomial approximation and data splitting. Overfitting

K.V. Vorontsov, a remarkable scientist and popularizer of data science, sums up machine learning methods as “the science of drawing curves through points”. In this example, we look for a pattern in the data using the method of least squares.

The file demonstrates splitting the source data into “training” and “control” sets, as well as the phenomenon of overfitting, i.e. “over-tuning” the model to the data. With a proper approximation, we get some error on the training data and a slightly larger one on the control data. With an improper one, we get a near-perfect fit to the training data and a huge error on the control data.

(It is well known that exactly one polynomial of degree at most N-1 passes through N points with distinct x coordinates, and in general this approach does not give the desired result. See the Lagrange interpolation polynomial on Wikipedia.)
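For points $(x_1, y_1), \dots, (x_N, y_N)$ with distinct $x_i$, that curve can be written in Lagrange form. Each product equals 1 at $x = x_i$ and 0 at every other node, so $L(x_i) = y_i$ exactly and the training error is zero:

$$L(x) = \sum_{i=1}^{N} y_i \prod_{\substack{m=1 \\ m \neq i}}^{N} \frac{x - x_m}{x_i - x_m}$$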

1. Set the initial distribution

2. We split the points into “training” and “control” sets in a 70:30 ratio.

3. We fit an approximating curve to the training points and look at the error it gives on the control data.

4. We draw an exact curve through the training points and see a monstrous error on the control data (and zero error on the training data, but what good is that?).
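These four steps can be reproduced outside Excel with a short pure-Python sketch. Everything in it is a hypothetical stand-in for the spreadsheet: the noisy parabola used as data, the random seed, a degree-2 least-squares fit (via the normal equations) as the “approximating curve”, and Lagrange interpolation as the “exact curve”.

```python
import random


def solve(a, b):
    """Solve the linear system a·x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_poly(xs, ys, degree):
    """Least-squares fit via the normal equations; returns a[0] + a[1]*x + ... coefficients."""
    size = degree + 1
    ata = [[sum(x ** (i + j) for x in xs) for j in range(size)] for i in range(size)]
    aty = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(size)]
    return solve(ata, aty)


def eval_poly(coeffs, x):
    return sum(c * x ** i for i, c in enumerate(coeffs))


def lagrange(xs, ys, x):
    """Value at x of the unique polynomial of degree <= N-1 through the N points (xs, ys)."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for m, xm in enumerate(xs):
            if m != i:
                term *= (x - xm) / (xi - xm)
        total += term
    return total


def mse(predict, xs, ys):
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data: a noisy parabola sampled at 20 evenly spaced x values.
rng = random.Random(1)
xs = [0.5 * i for i in range(20)]
ys = [0.3 * x * x - x + 2 + rng.gauss(0, 0.5) for x in xs]

# Random 70/30 split into "training" and "control" indices.
idx = list(range(len(xs)))
rng.shuffle(idx)
cut = len(idx) * 7 // 10
train, control = sorted(idx[:cut]), sorted(idx[cut:])
tx, ty = [xs[i] for i in train], [ys[i] for i in train]
cx, cy = [xs[i] for i in control], [ys[i] for i in control]

coeffs = fit_poly(tx, ty, degree=2)
results = {
    "least squares, degree 2": (mse(lambda x: eval_poly(coeffs, x), tx, ty),
                                mse(lambda x: eval_poly(coeffs, x), cx, cy)),
    f"exact curve, degree {len(tx) - 1}": (mse(lambda x: lagrange(tx, ty, x), tx, ty),
                                           mse(lambda x: lagrange(tx, ty, x), cx, cy)),
}
for name, (train_err, control_err) in results.items():
    print(f"{name}: training MSE = {train_err:.3g}, control MSE = {control_err:.3g}")
```

The training error of the exact curve is zero by construction. On data like this, the control error of the exact curve typically comes out far larger than that of the parabola, though the actual numbers depend on the seed and on which points land in the control set.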

Of course, this shows only the simplest variant, with a single split into “training” and “control” subsets; in general, the split is repeated many times to tune the coefficients as well as possible.
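One common way to repeat the split many times is k-fold cross-validation; the article does not say which scheme it has in mind, so this is just one option. The minimal sketch below (the name `k_fold_splits` is illustrative) only produces the index splits; the fitting and the error computation would then run once per split, and the control errors would be averaged.

```python
import random


def k_fold_splits(n, k, seed=0):
    """Yield (train_indices, control_indices) pairs for k-fold cross-validation.

    The n indices are shuffled once and cut into k nearly equal folds;
    each fold serves as the control set exactly once while the rest train.
    """
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        control = sorted(folds[i])
        train = sorted(j for f, fold in enumerate(folds) if f != i for j in fold)
        yield train, control


# Usage: 20 points, 5 folds -> five splits of 16 training and 4 control points.
for train, control in k_fold_splits(20, 5):
    print(len(train), "training,", len(control), "control:", control)
```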

The file is available here (checked with an antivirus). Enable macros for it to work correctly.

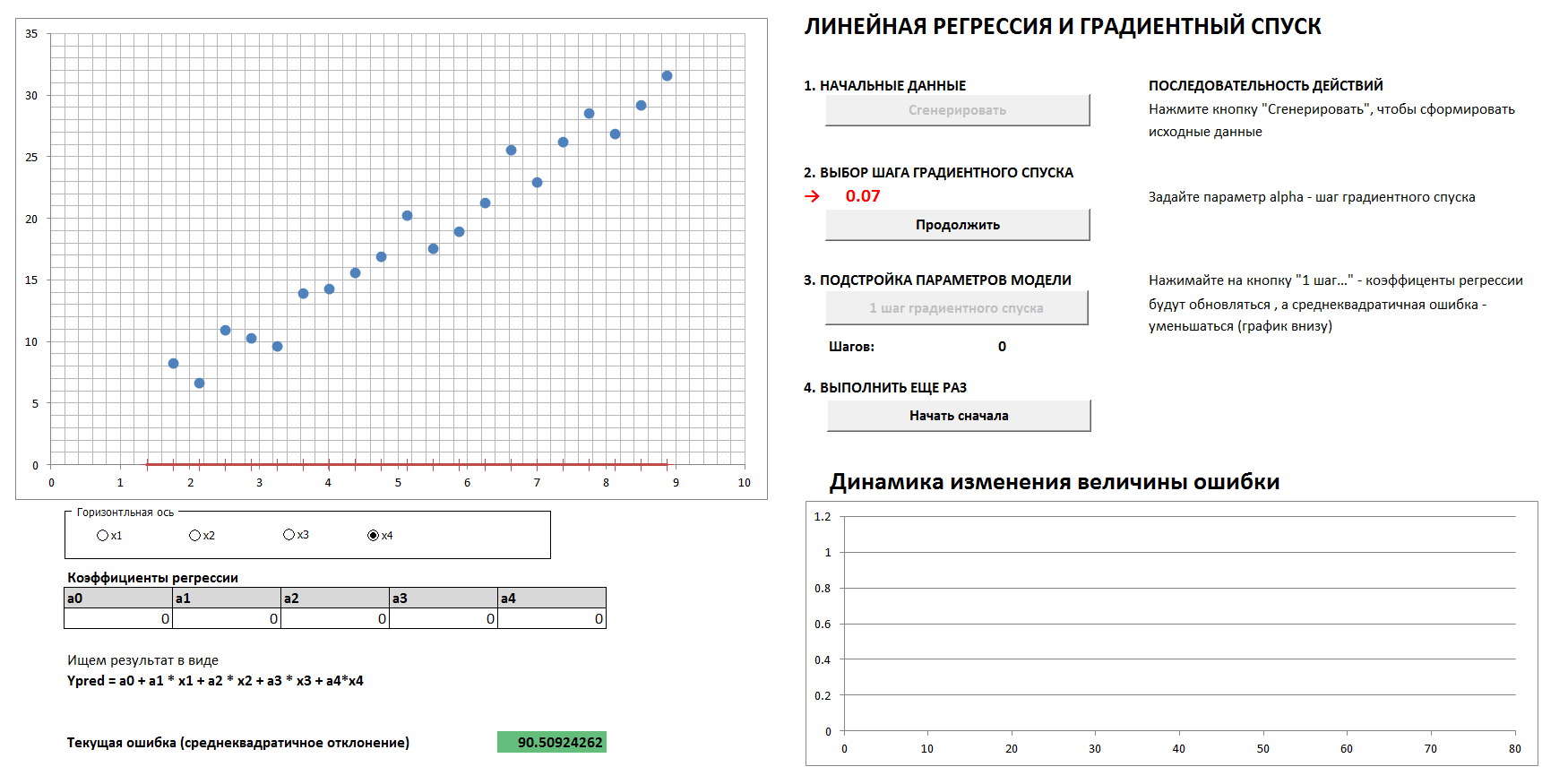

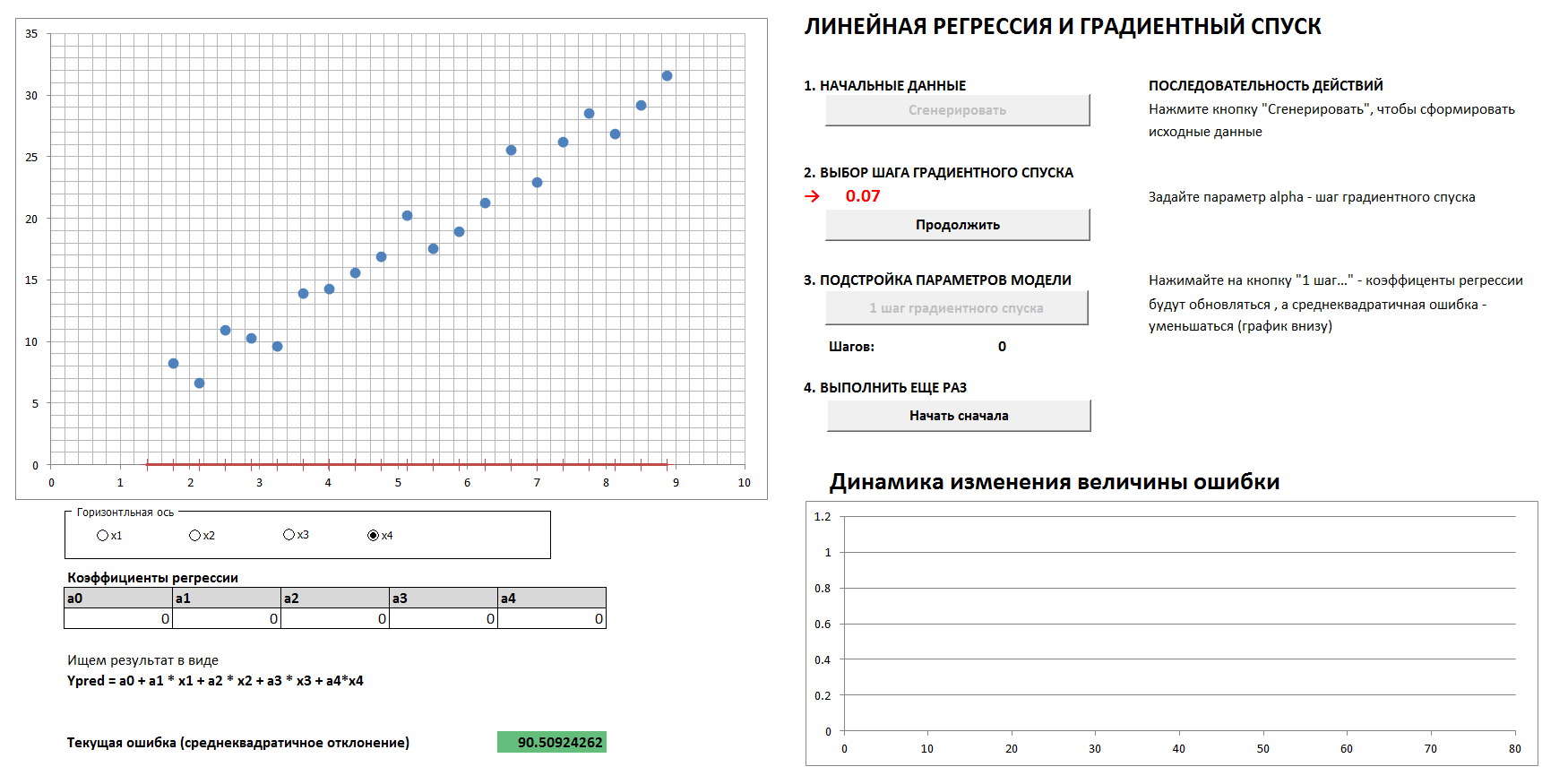

3. Gradient descent and error dynamics

Here we have a four-dimensional case and linear regression. The linear regression coefficients are found step by step using gradient descent, starting with all coefficients equal to zero. A separate graph shows how the error decreases as the coefficients are adjusted more and more precisely. All four two-dimensional projections can be viewed.
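The procedure described here can be sketched in a few lines of pure Python. This is an illustration under assumptions, not the spreadsheet's code: it minimizes the mean squared error, uses four input features with no intercept term, and generates hypothetical data from made-up “true” coefficients `true_w`. As in the file, all coefficients start at zero, and the error after each step is recorded for plotting.

```python
import random


def predict(w, row):
    return sum(wj * xj for wj, xj in zip(w, row))


def mse(w, X, y):
    return sum((predict(w, row) - yi) ** 2 for row, yi in zip(X, y)) / len(X)


def gradient_descent(X, y, step, n_iter):
    """Batch gradient descent on the MSE of a linear model y ≈ X·w.

    Returns (w, history); history[t] is the MSE after t steps.
    """
    d = len(X[0])
    w = [0.0] * d  # all coefficients start at zero
    history = [mse(w, X, y)]
    for _ in range(n_iter):
        residuals = [predict(w, row) - yi for row, yi in zip(X, y)]
        # Gradient of the MSE: (2/n) * X^T (X·w - y)
        grad = [2.0 / len(X) * sum(r * row[j] for r, row in zip(residuals, X))
                for j in range(d)]
        w = [wj - step * gj for wj, gj in zip(w, grad)]
        history.append(mse(w, X, y))
    return w, history


# Hypothetical data: 200 points, 4 features, known coefficients plus small noise.
rng = random.Random(0)
true_w = [1.5, -2.0, 0.5, 3.0]
X = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(200)]
y = [predict(true_w, row) + rng.gauss(0, 0.1) for row in X]

w, history = gradient_descent(X, y, step=0.1, n_iter=500)
print("fitted coefficients:", [round(c, 2) for c in w])
print("MSE after 0, 10, 100, 500 steps:", [round(history[t], 4) for t in (0, 10, 100, 500)])
```

With `step=0.1` the recorded error falls steadily here and the fitted coefficients end up close to `true_w`; the values in `history` are what the file's error graph would plot.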

If the gradient descent step is set too large, we overshoot the minimum every time and need more steps to reach the result, although we still get there in the end (unless the step is made far too large, in which case the algorithm diverges). The graph of error versus iteration number is then “jerky” rather than smooth.
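The role of the step size is easiest to see on a hypothetical one-dimensional toy error $f(w) = a w^2 / 2$ rather than the file's regression. Its gradient is $a w$, so each step multiplies $w$ by $(1 - \eta a)$, where $\eta$ is the step: for $0 < \eta < 1/a$ the approach is smooth, for $1/a < \eta < 2/a$ the value of $w$ jumps across the minimum on every step but still converges, and for $\eta > 2/a$ it runs away. The function name `descend` is illustrative.

```python
def descend(a, step, w0=1.0, n_iter=30):
    """Gradient descent on the toy error f(w) = a * w**2 / 2, whose gradient is a * w.

    Returns the list of w values visited, starting from w0; the minimum is at w = 0.
    """
    ws = [w0]
    for _ in range(n_iter):
        w = ws[-1]
        ws.append(w - step * a * w)  # the same as w * (1 - step * a)
    return ws


a = 2.0  # curvature; the critical step sizes are 1/a = 0.5 and 2/a = 1.0
for step in (0.2, 0.9, 1.1):
    ws = descend(a, step)
    overshoots = sum(1 for u, v in zip(ws, ws[1:]) if u * v < 0)
    print(f"step={step}: |w| after {len(ws) - 1} steps = {abs(ws[-1]):.3g}, "
          f"sign changes (overshoots) = {overshoots}")
```

With a = 2.0, the three step values 0.2, 0.9 and 1.1 fall into the three regimes in turn: smooth convergence, overshooting convergence and divergence.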

1. We generate the data and set the gradient descent step.

2. With a well-chosen step, we reach the minimum smoothly and fairly quickly.

3. With a poorly chosen step, we overshoot the minimum, the error graph is “jerky”, and convergence takes more steps.

4. With a completely wrong step, we move away from the minimum.

(To reproduce the process with the step values shown in the pictures, tick “reference data”.)

File: this link. Macros must be enabled; no viruses.

What does the respected community think: is this kind of simplification and way of presenting the material acceptable? Should I translate the article into English?

Source: https://habr.com/ru/post/446150/
