Writing an Operating System in Rust. Implementing Paging (new version)

This article shows how to implement paging support in our kernel. First, we explore different techniques for making the physical page table frames accessible to the kernel and discuss their advantages and disadvantages. Then we implement an address translation function and a function for creating a new mapping.

This blog series is developed openly on GitHub. If you have any questions or problems, please open an issue there. The complete source code for this article is in this branch.

Another article about paging?

If you follow this series, you may have seen the article “Page memory: advanced level” at the end of January. However, I was criticized for using recursive page tables, so I decided to rewrite the article using a different approach for accessing the page table frames.

Here is the new version. The article still explains how recursive page tables work, but we use a simpler and more powerful implementation. We will not delete the previous article, but we will mark it as outdated and no longer update it.


I hope you enjoy the new version!

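Introduction

In the previous article we learned about the principles of paging and how four-level page tables work on x86_64. We also found that the bootloader has already set up a page table hierarchy for our kernel, so the kernel runs on virtual addresses. This improves safety, because an illegal memory access causes a page fault instead of silently modifying arbitrary physical memory.

The article ended with the problem that we could not access the page tables from our kernel. The tables are stored in physical memory, while the kernel already runs on virtual addresses. In this article we continue from there and explore different approaches for making the page table frames accessible to the kernel. We discuss the advantages and disadvantages of each approach and then choose a suitable one for our kernel.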

We need support from the bootloader, so we configure it first. Then we implement a function that walks the entire page table hierarchy to translate virtual addresses to physical addresses. Finally, we learn how to create new mappings in the page tables and how to find unused memory frames for creating new tables.

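Dependency updates

This article requires version 0.4.0 or later of the bootloader dependency and version 0.5.2 or later of the x86_64 dependency. To update them, set bootloader = "0.4.0" and x86_64 = "0.5.2" in the [dependencies] section of Cargo.toml. The changes in these versions are listed in the bootloader changelog and the x86_64 changelog.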

Access to page tables

Accessing the page tables from the kernel is not as easy as it may seem. To understand the problem, let's take another look at the four-level page table hierarchy from the previous article:

The important point is that each page table entry stores the physical address of the next table. This way the CPU does not have to translate these addresses as well, which would hurt performance and could easily cause endless translation loops.

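The problem is that the kernel cannot access physical addresses directly, because it also runs on virtual addresses. For example, accessing the address 4 KiB means accessing the virtual address 4 KiB. It does not reach the physical address 4 KiB, where the level 4 page table is stored. To access the physical address 4 KiB, we have to use some virtual address that maps to it.

Therefore, to access page table frames, we need to map some virtual pages to them. There are different ways to create these mappings.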

Identity mapping

A simple solution is to identity map all page tables:

In this example, we see several identity-mapped page table frames. The physical addresses of the page tables are also valid virtual addresses, so we can easily access the page tables of all levels, starting from the CR3 register.

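However, this approach clutters the virtual address space and makes it difficult to find large contiguous regions of free memory. Suppose we want to create a 1000 KiB virtual memory region in the figure above, for example, to memory-map a file. We cannot start the region at 28 KiB, because it would collide with the already mapped page at 1004 KiB. So we have to look further until we find a large enough unmapped area, for example at 1008 KiB. This is the same fragmentation problem as with segmented memory.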
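The search described above is a first-fit scan over the already mapped pages. The following Rust sketch makes two assumptions. Its input is a hypothetical sorted list of mapped 4 KiB pages: pages from 4 KiB to 24 KiB plus the page at 1004 KiB, loosely modeled on the figure, which is not reproduced here. It returns the lowest start address of a free region of the requested size:

```rust
const KIB: u64 = 1024;
const PAGE_SIZE: u64 = 4 * KIB;

/// First-fit search for a free virtual region of `size` bytes.
/// `mapped_pages` holds the start addresses of already mapped 4 KiB pages,
/// sorted in ascending order (hypothetical input for illustration).
fn find_free_region(mapped_pages: &[u64], size: u64) -> u64 {
    let mut start: u64 = 0;
    for &page in mapped_pages {
        if start + size <= page {
            // The candidate region [start, start + size) ends before this page.
            return start;
        }
        // The candidate overlaps this page, so continue directly after it.
        // (Pages that lie entirely below `start` leave `start` unchanged.)
        start = start.max(page + PAGE_SIZE);
    }
    // No mapped page lies beyond `start`, so the region fits there.
    start
}
```

With the hypothetical input above, a 1000 KiB request skips the candidates up to 28 KiB, collides with the page at 1004 KiB, and ends up at 1008 KiB, as in the text.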

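In addition, creating new page tables becomes much more complicated, because we need to find physical frames whose corresponding pages are not already in use. For example, suppose we reserved the 1000 KiB of virtual memory starting at 1008 KiB for our file. Now we can no longer use any frame with a physical address between 1000 KiB and 2008 KiB, because we cannot identity map it.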

Fixed offset mapping

To avoid cluttering the virtual address space, we can map the page tables into a separate memory region. So instead of identity mapping the page table frames, we map them at a fixed offset in the virtual address space. For example, the offset could be 10 TiB:

By using this virtual memory range exclusively for page table mappings, we avoid the collision problems of identity mapping. Reserving such a large region of the virtual address space is only possible if the virtual address space is much larger than the physical memory. This is not a problem on x86_64, because the 48-bit address space is 256 TiB large.
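With a fixed offset, finding the virtual address of a page table frame is a single addition. The Rust sketch below assumes the 10 TiB offset from the example. The helper `offset_range_fits` is hypothetical: it checks that the reserved range stays in the lower canonical half of the 48-bit address space, that is, below 2^47 = 128 TiB:

```rust
const TIB: u64 = 1 << 40;

/// Offset at which the page table frames are mapped (10 TiB, as in the example).
const PHYS_OFFSET: u64 = 10 * TIB;

/// Virtual address through which the frame at physical address `phys` is accessed.
fn frame_to_virt(phys: u64) -> u64 {
    PHYS_OFFSET + phys
}

/// Checks that PHYS_OFFSET..PHYS_OFFSET + phys_mem_size lies in the lower
/// canonical half of a 48-bit address space (at most 2^47 bytes = 128 TiB).
fn offset_range_fits(phys_mem_size: u64) -> bool {
    PHYS_OFFSET
        .checked_add(phys_mem_size)
        .map_or(false, |end| end <= 1 << 47)
}
```

For example, 32 GiB of physical memory fits easily behind a 10 TiB offset. A hypothetical 200 TiB machine would not fit.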

However, this approach still has a disadvantage: we need to create a new mapping whenever we create a new page table. It also does not allow access to the page tables of other address spaces, which would be useful when creating a new process.

Full physical memory mapping

We can solve these problems by mapping the complete physical memory instead of only the page table frames:

This approach allows the kernel to access arbitrary physical memory, including the page table frames of other address spaces. The reserved virtual memory range has the same size as before, but it no longer contains any unmapped pages.

The disadvantage of this approach is that additional page tables are needed to map the physical memory. These page tables must be stored somewhere, so they use up part of the physical memory, which can be a problem on devices with a small amount of RAM.

On x86_64, however, we can use huge 2 MiB pages for the mapping instead of the default 4 KiB pages. This way, mapping 32 GiB of physical memory requires only 132 KiB of page tables: one level 3 table and 32 level 2 tables. Huge pages are also more cache efficient, because they use fewer entries in the translation lookaside buffer (TLB).
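The 132 KiB figure follows from simple arithmetic. This sketch makes two assumptions: the mapped virtual range starts at a 512 GiB-aligned address, so that every table is filled from its first entry, and the existing level 4 table is not counted. The function name is illustrative:

```rust
const KIB: u64 = 1 << 10;
const MIB: u64 = 1 << 20;
const GIB: u64 = 1 << 30;
const ENTRIES_PER_TABLE: u64 = 512;
const TABLE_SIZE: u64 = 4 * KIB;

/// Bytes of page tables needed to map `phys_mem` bytes with 2 MiB huge pages.
fn huge_page_mapping_overhead(phys_mem: u64) -> u64 {
    // Each huge page needs one level 2 entry.
    let huge_pages = (phys_mem + 2 * MIB - 1) / (2 * MIB);
    // 512 entries per table, rounded up.
    let level_2_tables = (huge_pages + ENTRIES_PER_TABLE - 1) / ENTRIES_PER_TABLE;
    let level_3_tables = (level_2_tables + ENTRIES_PER_TABLE - 1) / ENTRIES_PER_TABLE;
    (level_2_tables + level_3_tables) * TABLE_SIZE
}
```

For 32 GiB, the function counts 16,384 huge pages, 32 level 2 tables, and one level 3 table. That is 33 tables of 4 KiB, or 132 KiB. With 4 KiB pages instead, the 8,388,608 level 1 entries alone would need 16,384 level 1 tables, or 64 MiB.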

Temporary mapping

For devices with very small amounts of physical memory, we could map the page table frames only temporarily, when we need to access them. To create the temporary mappings, we only need a single identity-mapped level 1 table:

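In this figure, the level 1 table controls the first 2 MiB of the virtual address space. The CPU reaches it by starting at the CR3 register and following the 0th entry in the level 4, level 3, and level 2 tables. The entry with index 8 maps the virtual page at 32 KiB to the physical frame at 32 KiB, thereby identity mapping the level 1 table itself. The figure shows this with a horizontal arrow.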

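By writing to the identity-mapped level 1 table, our kernel can create up to 511 temporary mappings (512 minus the entry needed for the identity mapping). In the given example, the kernel creates two temporary mappings:

- Mapping the 0th entry of the level 1 table to the frame at address 24 KiB. This creates a temporary mapping of the virtual page at 0 KiB to the physical frame of the level 2 page table, indicated by the dotted arrow.
- Mapping the 9th entry of the level 1 table to the frame at address 4 KiB. This creates a temporary mapping of the virtual page at 36 KiB to the physical frame of the level 4 page table, indicated by the dotted arrow.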

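Now the kernel can access the level 2 table by writing to the page that starts at 0 KiB, and the level 4 table by writing to the page that starts at 36 KiB.

Thus, accessing an arbitrary page table frame with temporary mappings consists of the following steps:

- Find a free entry in the identity-mapped level 1 table.
- Map this entry to the physical frame of the page table that we want to access.
- Access the target frame through the virtual page associated with the entry.
- Set the entry back to unused, thereby removing the temporary mapping.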
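The first, second, and fourth steps can be modeled in a few lines. The sketch makes several assumptions. The hypothetical TempMapper type stands in for the identity-mapped level 1 table, with each slot holding the physical frame it maps. Step 3, the actual memory access, is not modeled. A real kernel would also have to flush the stale TLB entry (for example with the invlpg instruction) after changing a mapping. A simple first-free search picks entry 1 for the second mapping, not entry 9 as in the figure.

```rust
const PAGE_SIZE: u64 = 4096;

/// Hypothetical model of the identity-mapped level 1 table that controls the
/// first 2 MiB: each slot holds the physical frame address it maps, or None.
struct TempMapper {
    entries: [Option<u64>; 512],
}

impl TempMapper {
    /// Creates the table with entry `self_index` identity mapping the table itself.
    fn new(self_index: usize, self_frame: u64) -> Self {
        let mut entries = [None; 512];
        entries[self_index] = Some(self_frame);
        TempMapper { entries }
    }

    /// Steps 1 and 2: find a free entry and point it at `frame`.
    /// Returns the virtual address of the page controlled by that entry.
    fn map_temporary(&mut self, frame: u64) -> Option<u64> {
        let index = self.entries.iter().position(|e| e.is_none())?;
        self.entries[index] = Some(frame);
        // Entry i controls the virtual page starting at i * 4 KiB.
        Some(index as u64 * PAGE_SIZE)
    }

    /// Step 4: mark the entry for the page at `virt` (below 2 MiB) as unused.
    fn unmap_temporary(&mut self, virt: u64) {
        self.entries[(virt / PAGE_SIZE) as usize] = None;
    }
}
```

For instance, `TempMapper::new(8, 32 * 1024)` reproduces the figure's identity mapping at index 8. Mapping the level 2 table frame at 24 KiB then yields the virtual page at 0 KiB.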

With this approach, the virtual address space stays clean, because the same 512 virtual pages are reused all the time. The disadvantage is that it is cumbersome. A new mapping may require modifying several table levels, so we would have to repeat the process above several times.

Recursive page tables

Another interesting approach, which requires no additional page tables at all, is recursive mapping.

The idea is to map some entry of the level 4 table to the level 4 table itself. By doing this, we effectively reserve a part of the virtual address space and map all current and future page table frames into that space.

Let's go through an example to see how this works:

The only difference from the example at the beginning of the article is an additional entry with index 511 in the level 4 table. This entry is mapped to the physical frame at 4 KiB, which is the frame of the level 4 table itself.

When the CPU follows this entry, it does not reach a level 3 table but the same level 4 table again. This is similar to a recursive function that calls itself. The important point is that the CPU assumes that every entry in the level 4 table points to a level 3 table, so it now treats the level 4 table as a level 3 table. This works because the tables of all levels have exactly the same layout on x86_64.

By following the recursive entry one or more times before starting the actual translation, we can effectively reduce the number of levels that the CPU traverses. For example, suppose we follow the recursive entry once and then proceed to the level 3 table. The CPU thinks that the level 3 table is a level 2 table. Going further, it treats the level 2 table as a level 1 table, and the level 1 table as the mapped frame. This means that we can now read and write the level 1 page table, because the CPU thinks it is the mapped frame. The figure below shows the five steps of such a translation:

Similarly, we can follow the recursive entry twice before starting the translation to reduce the number of traversed levels to two:

Let's go through this procedure step by step. First, the CPU follows the recursive entry in the level 4 table and thinks it has reached a level 3 table. Then it follows the recursive entry again and thinks it has reached a level 2 table, but in reality it is still on the level 4 table. When the CPU now follows a different entry, it lands on a level 3 table but thinks it is already on a level 1 table. So while the next entry points to a level 2 table, the CPU thinks it points to the mapped frame, which allows us to read and write the level 2 table.

Accessing the tables of levels 3 and 4 works the same way. To access the level 3 table, we follow the recursive entry three times, so the CPU thinks it is already on a level 1 table. In the next step we reach the level 3 table, which the CPU treats as the mapped frame. To access the level 4 table itself, we simply follow the recursive entry four times, until the CPU treats the level 4 table itself as the mapped frame (in blue in the figure below).

The concept is hard to grasp at first, but in practice it works quite well.

Address calculation

So we can access the tables of all levels by following the recursive entry one or more times. Since the indices into the four table levels are derived directly from the virtual address, this technique requires constructing special virtual addresses. Recall that the page table indices are extracted from the address as follows:

Suppose we want to access the level 1 table that maps a specific page. As we learned above, we have to follow the recursive entry once and then the level 4, level 3, and level 2 indices. To do this, we move each block of the address one block to the right and put the index of the recursive entry in place of the original level 4 index:

To access the level 2 table of this page, we move each index block two blocks to the right and put the recursive index in place of both original blocks, the level 4 and the level 3 index:

To access the level 3 table, we do the same, but move the blocks three positions to the right.

Finally, to access the level 4 table, we move everything four blocks to the right.

Now we can calculate virtual addresses for the page tables of all four levels. We can even calculate an address that points exactly to a specific page table entry by multiplying the entry's index by 8, the size of a page table entry.

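The table below shows the structure of the addresses for accessing the different kinds of frames:

| Virtual address for | Address structure (octal) |
|---|---|
| Page | 0o_SSSSSS_AAA_BBB_CCC_DDD_EEEE |
| Level 1 table entry | 0o_SSSSSS_RRR_AAA_BBB_CCC_DDDD |
| Level 2 table entry | 0o_SSSSSS_RRR_RRR_AAA_BBB_CCCC |
| Level 3 table entry | 0o_SSSSSS_RRR_RRR_RRR_AAA_BBBB |
| Level 4 table entry | 0o_SSSSSS_RRR_RRR_RRR_RRR_AAAA |

Here the blocks have the following meaning:

- AAA, BBB, CCC, and DDD are the level 4, level 3, level 2, and level 1 indices of the mapped frame.
- EEEE is the offset into the frame.
- RRR is the index of the recursive entry.
- SSSSSS are the sign extension bits, which are all copies of bit 47. This is a special requirement for valid addresses on the x86_64 architecture, which we discussed in the previous article.

An index (three digits) is turned into an offset (four digits) by multiplying it by 8, the size of a page table entry. With this offset, the resulting address points directly to the corresponding page table entry.

The addresses are written in octal because each octal digit represents three bits, which cleanly separates the 9-bit indices of the different table levels. This is not possible in hexadecimal, where each digit represents four bits.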

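Rust code

To construct such addresses in Rust code, we can use bitwise operations. Such code assumes that the last level 4 entry, with index 0o777 (511), is recursively mapped. This is currently not the case, so the code will not work yet; see below for how to tell the bootloader to set up a recursive mapping.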
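The bitwise calculation can be written as two small functions. The sketch makes several assumptions: it uses u64 instead of usize, the recursive entry is at index 0o777 (511), and the function names are illustrative. The first function returns the addresses of the level 4, 3, 2, and 1 tables for a given address. The second adds index × 8 to reach a single level 1 entry, as in the table above.

```rust
/// Index of the recursive level 4 entry (assumed: the last entry, 511).
const R: u64 = 0o777;
/// Sign extension: bits 48..64 must copy bit 47, which is 1 because R = 0o777.
const SIGN: u64 = 0o177777 << 48;

/// Virtual addresses of the level 4, 3, 2 and 1 tables (in that order)
/// that the CPU uses to translate `addr`, via the recursive entry R.
fn recursive_table_addrs(addr: u64) -> [u64; 4] {
    let l4_idx = (addr >> 39) & 0o777;
    let l3_idx = (addr >> 30) & 0o777;
    let l2_idx = (addr >> 21) & 0o777;
    [
        SIGN | (R << 39) | (R << 30) | (R << 21) | (R << 12),
        SIGN | (R << 39) | (R << 30) | (R << 21) | (l4_idx << 12),
        SIGN | (R << 39) | (R << 30) | (l4_idx << 21) | (l3_idx << 12),
        SIGN | (R << 39) | (l4_idx << 30) | (l3_idx << 21) | (l2_idx << 12),
    ]
}

/// Virtual address of the level 1 entry that maps `addr` (index times 8).
fn level_1_entry_addr(addr: u64) -> u64 {
    let l1_idx = (addr >> 12) & 0o777;
    recursive_table_addrs(addr)[3] + l1_idx * 8
}
```

R = 0o777 sets bit 47, so every recursive address lies in the upper half of the address space. That is why the sign extension bits can be hard-coded to all ones here.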

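As an alternative to performing the bitwise operations by hand, we can use the RecursivePageTable type of the x86_64 crate, which provides safe abstractions for various page table operations. For example, it can translate a virtual address to its physical address:

1. Create a RecursivePageTable from a mutable reference to the level 4 table, obtained through the level 4 table address calculated as above.
2. Call its translate_page method to get the frame that a given Page is mapped to.
3. Add the page offset of the virtual address to the start of that frame to get the physical address.

Again, this requires a properly set up recursive mapping.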

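Recursive mapping is an interesting technique that shows how powerful a single mapping in a page table can be. It is relatively easy to implement and requires only minimal setup (just one recursive entry), so it is a good choice for first experiments.

But it also has some drawbacks:

- It occupies a large amount of virtual memory (512 GiB). This is not a problem in the large 48-bit address space, but it can lead to suboptimal cache behavior.
- It only gives easy access to the currently active address space. Accessing other address spaces is still possible by changing the recursive entry, but a temporary mapping is required to switch. We described how to do this in the previous (outdated) article.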

All of the described approaches require modifying the page tables during setup, for example, to map the physical memory or to recursively map an entry of the level 4 table. The problem is that we cannot make these modifications without having access to the page tables.

This means that we need help from the bootloader. It has access to the page tables, so it can create any mappings that we need. In its current implementation, the

For our kernel, we choose the first option, mapping the complete physical memory. It is simple, platform-independent, and more powerful, because it also gives access to frames other than the page table frames. To enable the required bootloader support, we enable the corresponding cargo feature of the bootloader dependency.

If this feature is enabled, the bootloader maps the complete physical memory to some unused virtual address range. To communicate this virtual address range to our kernel, the bootloader passes a boot information structure.

The crate

The loader passes the structure

It is important to specify the correct argument type, because the compiler does not know the correct type signature of our entry point function.

Since the function

To ensure that the entry point function always has the correct signature, the crate

You no longer need to use for the entry point

Now that we have access to physical memory, we can finally start implementing paging support. First, we look at the currently active page tables that our kernel runs on. In the second step, we create a translation function that returns the physical address that a given virtual address is mapped to. In the last step, we try to modify the page tables to create a new mapping.

First, create a new module in the code

For the module, create an empty file

At the end of the previous article, we tried to look at the page tables our kernel runs on, but failed because we could not access the physical frame that the CR3 register points to.

First, we read the physical frame of the active level 4 table from the CR3 register.

We don't need an unsafe block here, because Rust treats the whole body of an unsafe function as one large unsafe block.

Now we can use this function to print the entries of the level 4 table:

In quality we

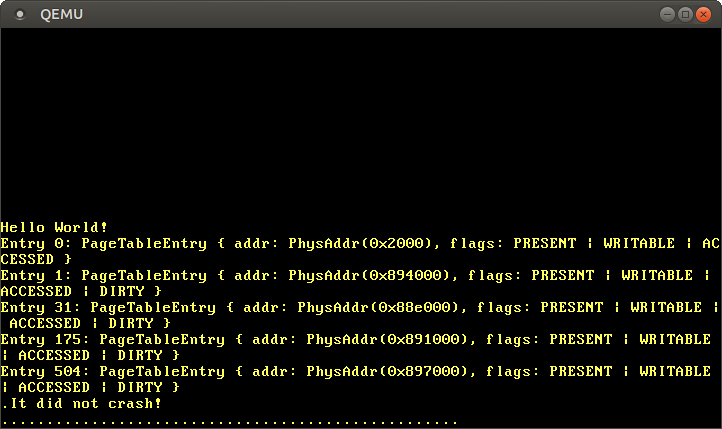

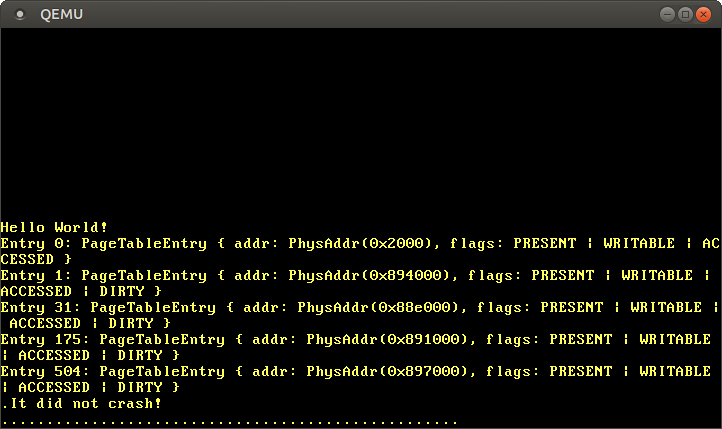

When we run the code, we see the following result:

We see several non-empty entries, which all map to different level 3 tables. There are so many regions because the kernel code, the kernel stack, the physical memory mapping, and the boot information all use separate memory areas.

To traverse the page tables further and look at a level 3 table, we can convert the mapped frame of an entry into a virtual address again:

To look at the level 2 and level 1 tables, we repeat this process for the level 3 and level 2 entries. As you can imagine, the amount of code grows very quickly, so we don't show the full listing here.
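The key step in this traversal is turning a physical frame address into a usable reference by adding the physical memory offset. The sketch below simulates that step in user space: a Vec stands in for physical memory, and its base address plays the role of the physical memory offset. PageTable and table_at are hypothetical stand-ins, not the x86_64 crate's types:

```rust
/// A page table: 512 entries of 8 bytes each, 4 KiB in size and alignment.
#[repr(C, align(4096))]
#[derive(Clone, Copy)]
struct PageTable {
    entries: [u64; 512],
}

/// Hypothetical helper: returns the page table stored in the physical frame
/// at `frame_phys`, assuming all physical memory is mapped starting at the
/// virtual address `physical_memory_offset`. The caller must guarantee that
/// this mapping exists and that the frame really holds a page table.
unsafe fn table_at<'a>(frame_phys: u64, physical_memory_offset: u64) -> &'a PageTable {
    let virt = physical_memory_offset + frame_phys;
    // Up to the 2021 edition, the body of an `unsafe fn` counts as an unsafe
    // block, so this raw pointer dereference needs no extra `unsafe { }`.
    &*(virt as *const PageTable)
}
```

In the simulation, `table_at(4096, mem.as_ptr() as u64)` returns the second PageTable in a `Vec<PageTable>` named `mem`, just as the kernel would reach the frame at 4 KiB through its physical memory offset.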

Traversing the page tables manually is interesting because it helps to understand how the CPU performs address translation. Usually, however, we are only interested in the physical address that a given virtual address maps to, so let's create a function for that.

To translate a virtual address to a physical address, we have to walk the four-level page table until we reach the mapped frame. Let's create a function that performs this translation.
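Here is a minimal sketch of such a walk, independent of the x86_64 crate. It makes these assumptions:

- Physical memory is simulated as a Vec of 4 KiB frames (a hypothetical PhysMem type).
- Only the present bit (bit 0) and the address bits 12..=51 of each entry are interpreted.
- Huge pages are not handled.

```rust
const PRESENT: u64 = 1;
/// Bits 12..=51 of an entry hold the physical address of the next table or frame.
const ADDR_MASK: u64 = 0x000f_ffff_ffff_f000;

/// Simulated physical memory: frame `i` starts at physical address `i * 4096`.
struct PhysMem {
    frames: Vec<[u64; 512]>,
}

impl PhysMem {
    fn entry(&self, table_phys: u64, index: u64) -> u64 {
        self.frames[(table_phys / 4096) as usize][index as usize]
    }
}

/// Translates `virt` by walking four levels, starting at the level 4 table
/// stored at physical address `level_4_phys`. Handles only 4 KiB pages.
fn translate(mem: &PhysMem, level_4_phys: u64, virt: u64) -> Option<u64> {
    // Level 4, 3, 2 and 1 indices: 9 bits each, starting at bit 39.
    let indices = [
        (virt >> 39) & 0o777,
        (virt >> 30) & 0o777,
        (virt >> 21) & 0o777,
        (virt >> 12) & 0o777,
    ];
    let mut frame = level_4_phys;
    for &index in indices.iter() {
        let entry = mem.entry(frame, index);
        if entry & PRESENT == 0 {
            return None; // unmapped: the CPU would raise a page fault here
        }
        frame = entry & ADDR_MASK;
    }
    // After four levels, `frame` is the mapped frame; add the 12-bit page offset.
    Some(frame + (virt & 0o7777))
}
```

If the level 4 table in this simulation also gets a recursive entry at index 511, the same walk resolves the recursive addresses from the address calculation section to the page table frames themselves. Trying this is a useful way to check the recursive trick.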

We refer to a secure function

The separate inner function contains the actual implementation:

Instead of reusing the function,

The structure

Within the loop, we again apply

Let's test our translation function by translating some addresses:

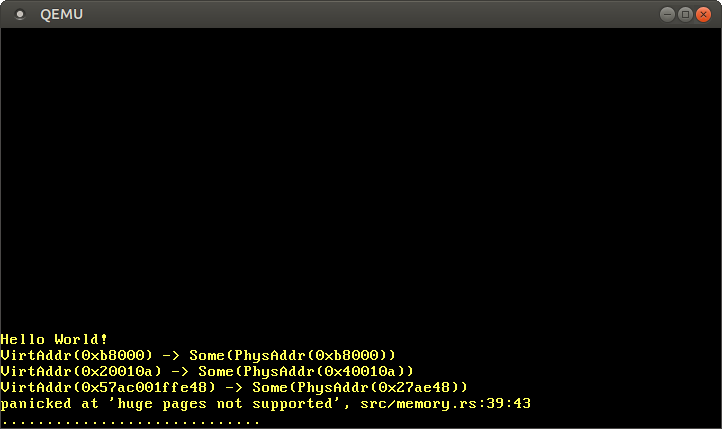

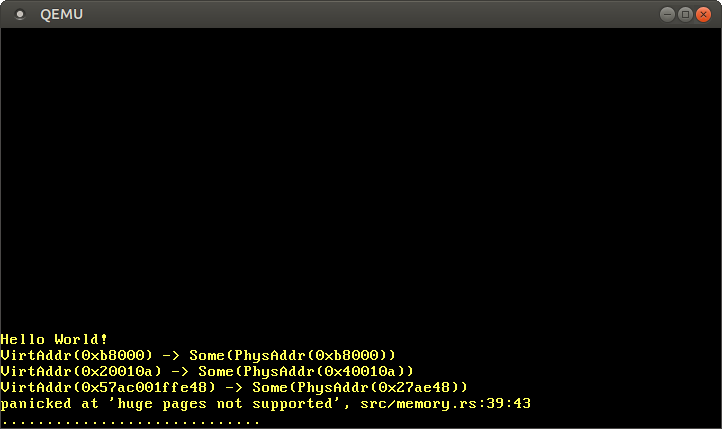

When we run the code, we get the following result:

As expected, with the identical mapping, the address is

Translating virtual addresses to physical addresses is a common task in an OS kernel, therefore the crate

The abstraction is based on two traits that define various page table translation functions:

The traits only define the interface; they don't provide any implementation. The crate now

We have all the physical memory mapped in

We cannot directly return

The function

We also do a

To use the method

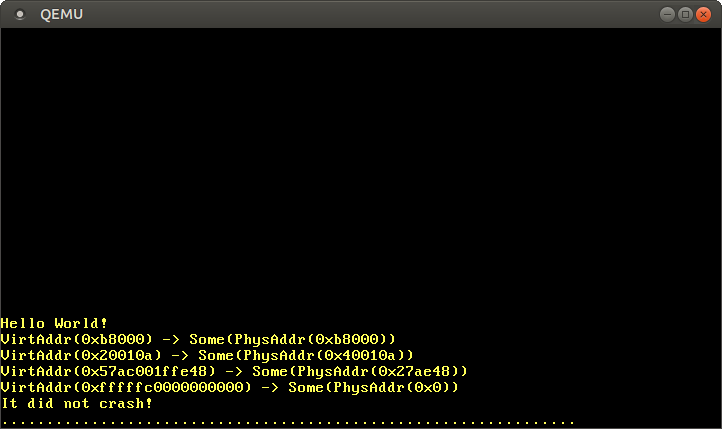

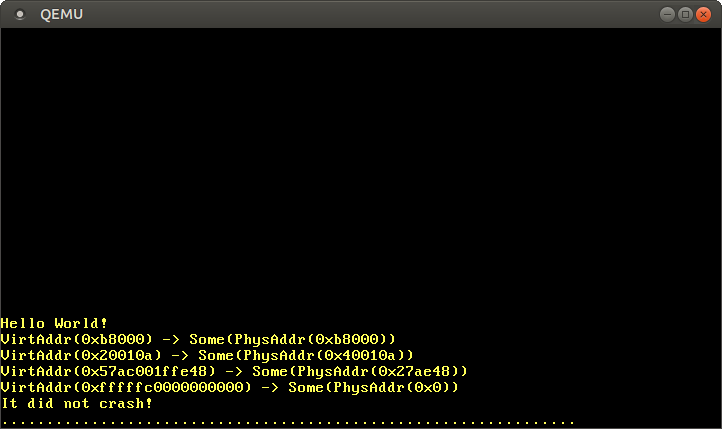

When we run it, we see the same translation results as before, except that translation now also works for huge pages:

As expected, the virtual address is

So far, we have only looked at the page tables without modifying anything. Let's create a new mapping for a previously unmapped page.

We will use the function

Function

The first step of our implementation is the creation of a new function

The function

In addition to the page

For mapping, set the flag

The function

Fictitious

To call

Let's start with a simple case and assume that we don't need to create new page tables. For this case, a frame allocator that always returns

Now we need to find a page that we can map without creating new page tables. The bootloader is loaded into the first megabyte of the virtual address space, so we know that a valid level 1 table exists for this region. For our example, we can choose any unused page in this memory region, for example, the page at address

To test the function, we first map the page

First, we create a mapping for the page in

Then we convert the page to a raw pointer and write a value to offset

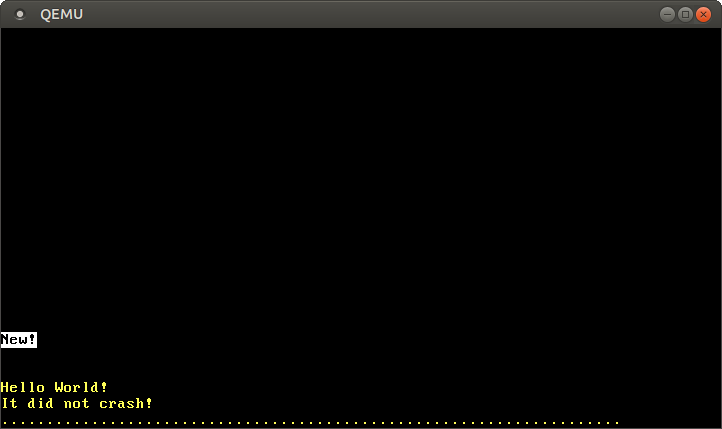

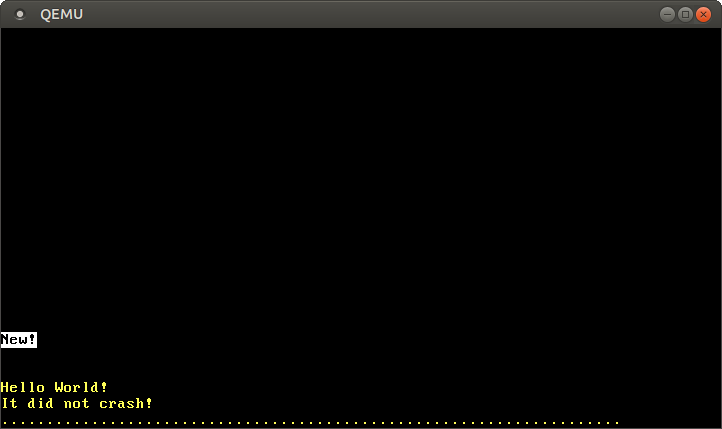

When we run the code in QEMU, we see the following result:

After writing to the page

This mapping worked because there was already a level 1 table for mapping

When we run this, a panic occurs with the following error message:

To map pages that don't have a level 1 page table yet, we need to create a proper

To allocate frames for new page tables, we need a proper frame allocator. Let's start with a general skeleton:
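One common way to build such an allocator is to hand out the 4 KiB frames of all usable memory regions one after another. The sketch below is a simplified stand-in, not the bootloader's memory map API or the x86_64 crate's FrameAllocator trait. It assumes a hypothetical list of usable regions, given as start and end physical addresses, and it never frees frames:

```rust
const FRAME_SIZE: u64 = 4096;

/// Hypothetical usable physical memory region: [start, end).
struct Region {
    start: u64,
    end: u64,
}

/// Hands out the frames of all usable regions in order, never reusing them.
struct BumpFrameAllocator {
    regions: Vec<Region>,
    next: usize, // number of frames handed out so far
}

impl BumpFrameAllocator {
    fn new(regions: Vec<Region>) -> Self {
        BumpFrameAllocator { regions, next: 0 }
    }

    /// Start addresses of all frames that lie completely inside a usable region.
    fn usable_frames<'a>(&'a self) -> impl Iterator<Item = u64> + 'a {
        self.regions.iter().flat_map(|r| {
            // Round the region start up to a frame boundary.
            let first = (r.start + FRAME_SIZE - 1) / FRAME_SIZE * FRAME_SIZE;
            (first..r.end)
                .step_by(FRAME_SIZE as usize)
                // Skip a partial frame at the end of the region.
                .filter(move |&f| f + FRAME_SIZE <= r.end)
        })
    }

    /// Returns the physical start address of the next unused frame, if any.
    fn allocate_frame(&mut self) -> Option<u64> {
        let frame = self.usable_frames().nth(self.next);
        self.next += 1;
        frame
    }
}
```

Recomputing the iterator on every call keeps the struct small. The cost is O(n) work per allocation, which is usually acceptable for the few frames needed for new page tables.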


This series of articles published posted on GitHub . If you have any questions or problems, open the corresponding ticket there. All source codes for the article are in this thread .

Another article about paging memory?

If you follow this cycle, then you saw the article “Page memory: advanced level” at the end of January. But I was criticized for recursive page tables. Therefore, I decided to rewrite the article using a different approach for accessing frames.

Here is a new version. The article still explains how recursive page tables work, but we use a simpler and more powerful implementation. We will not delete the previous article, but mark it as outdated and will not update it.

')

I hope you enjoy the new version!

Content

Introduction

From the last article, we learned about the principles of paging memory and how four-level page tables work on

x86_64 . We also found that the loader has already configured a hierarchy of page tables for our kernel, so the kernel works on virtual addresses. This increases security because unauthorized memory access causes a page fault instead of an arbitrary change in physical memory.The article ended up with the fact that we could not access the page tables from our kernel, because they are stored in physical memory, and the kernel is already running on virtual addresses. Here we will continue the topic and explore different options for accessing the frames of the page table from the kernel. We discuss the advantages and disadvantages of each of them, and then choose the appropriate option for our core.

We need support for the bootloader, so we will configure it first. Then we implement a function that passes through the entire hierarchy of page tables in order to translate virtual addresses into physical ones. Finally, we will learn how to create new mappings in the page tables and how to find unused memory frames to create new tables.

Dependency updates

This article requires you to write in dependencies

bootloader version 0.4.0 or higher and x86_64 version 0.5.2 or higher. You can update dependencies in Cargo.toml : [dependencies] bootloader = "0.4.0" x86_64 = "0.5.2" Changes in these versions can be found in the bootloader log and in the x86_64 log .

Access to page tables

Access to the page tables from the kernel is not as simple as it may seem. To understand the problem, take another look at the four-level hierarchy of the tables from the previous article:

It is important that each page entry stores the physical address of the following table. This avoids the translation of these addresses, which reduces performance and easily leads to endless loops.

The problem is that we cannot directly access the physical addresses from the kernel, since it also works on virtual addresses. For example, when we refer to the address

4 KiB , we get access to the virtual address 4 KiB , and not to the physical address where the table of the 4th level pages is stored. If we want to access the physical address of 4 KiB , then we need to use a virtual address that is translated into it.Therefore, to access the frames of the page tables, you need to map certain virtual pages to these frames. There are different ways to create such comparisons.

Identity mapping

A simple solution is the identical display of all page tables .

In this example, we see the same frame mapping. The physical addresses of the page tables are at the same time valid virtual addresses, so that we can easily access the tables of pages of all levels, starting with register CR3.

However, this approach clutters up the virtual address space and makes it difficult to find large contiguous areas of free memory. Let's say we want to create a 1000 KiB virtual memory area in the figure above, for example, to display a file in memory . We cannot start from the

28 KiB region, because it will rest on the already occupied page at 1004 KiB . Therefore, we will have to look further until we find a suitable large fragment, for example, with 1008 KiB . The same problem of fragmentation arises as in segmented memory.In addition, the creation of new page tables is much more complicated, since we need to find physical frames whose corresponding pages are not yet used. For example, we reserved a 1000 KiB of virtual memory for our file, starting at address

1008 KiB . Now we can no longer use any frame with a physical address between 1000 KiB and 2008 KiB , because it cannot be identically displayed.Fixed Offset Map

To avoid cluttering the virtual address space, you can display page tables in a separate area of memory . Therefore, instead of the identity mapping, we associate frames with a fixed offset in the virtual address space. For example, the offset may be 10 TiB:

By allocating this range of virtual memory purely to display page tables, we avoid the problems of identity mapping. Reserving such a large area of virtual address space is possible only if the virtual address space is much larger than the size of physical memory. This is not a problem with

x86_64 , because the 48-bit address space is 256 TiB.But this approach has the disadvantage that when creating each page table you need to create a new mapping. In addition, it does not allow access to tables in other address spaces, which would be useful when creating a new process.

Full physical memory mapping

We can solve these problems by displaying all the physical memory , not just the frames of the page tables:

This approach allows the kernel to access arbitrary physical memory, including the frames of the page tables of other address spaces. A virtual memory range of the same size as before is reserved, but there are no remaining pages in it.

The disadvantage of this approach is that additional page tables are needed to display physical memory. These page tables should be stored somewhere, so they use part of the physical memory, which can be a problem on devices with a small amount of RAM.

However, on x86_64 we can use huge 2 MiB pages to display instead of the default size of 4 KiB. Thus, a total of 132 KiB per page table is required to display 32 GiB of physical memory: only one third-level table and 32 second-level tables. Huge pages are also cached more efficiently because they use fewer entries in the dynamic translation buffer (TLB).

Temporary display

For devices with a very small amount of physical memory, you can display page tables only temporarily when you need to access them. For temporary comparisons, only the first level table is required:

In this figure, the level 1 table manages the first 2 MiB of the virtual address space. This is possible because access is made from the CR3 register through zero entries in the tables of levels 4, 3 and 2. The entry with index

8 translates the virtual page at 32 KiB into the physical frame at 32 KiB , thereby identically displaying the level 1 table itself. The figure shows this with a horizontal arrow.By writing to the identically mapped level 1 table, our kernel can create up to 511 time comparisons (512 minus the record needed for the identical mapping). In the given example, the kernel creates two temporary comparisons:

- Comparison of the zero entry of the table of level 1 with the frame at

24 KiB. This creates a temporary mapping of the virtual page at0 KiBaddress0 KiBwith the physical frame of the level 2 page table, indicated by the dotted arrow. - Comparison of the 9th entry of the table of level 1 with the frame at address

4 KiB. This creates a temporary mapping of the virtual page at36 KiBwith the physical frame of the level 4 page table, indicated by the dotted arrow.

Now the kernel can access a level 2 table by writing to a page that starts at

0 KiB and a table of level 4 by writing to a page that starts at 33 KiB .Thus, access to an arbitrary frame of the page table with temporary comparisons consists of the following actions:

- Find a free entry in the identically mapped level 1 table.

- Match this entry with the physical frame of the page table to which we want to access.

- Access this frame through the virtual page associated with the entry.

- Set the record back to unused, thereby removing the temporary mapping.

With this approach, the virtual address space remains clean, since the same 512 virtual pages are constantly used. The disadvantage is some cumbersome, especially since the new mapping may require changing several levels of the table, that is, we need to repeat the described process several times.

Recursive page tables

Another interesting approach that does not require additional page tables at all is recursive mapping .

The idea is to translate some records from the table of the fourth level into itself. Thus, we actually reserve part of the virtual address space and match all current and future table frames with this space.

Consider an example to see how this all works:

The only difference from the example at the beginning of the article is an additional entry with the index

511 in the table of level 4, which is matched with the physical frame 4 KiB , which is in the table itself.When the CPU follows this record, it does not refer to the level 3 table, but again refers to the level 4 table. This is similar to a recursive function that calls itself. It is important that the processor assumes that each entry in a table of level 4 points to a table of level 3, so now it treats a table of level 4 as a table of level 3. This works because the tables of all levels in x86_64 have the same structure.

By following the recursive notation one or more times before starting the actual conversion, we can effectively reduce the number of levels that the processor goes through. For example, if we follow the recursive notation once, and then go to the level 3 table, the processor thinks that the level 3 table is a level 2 table. Going further, it views the level 2 table as a level 1 table, and a level 1 table as mapped frame in physical memory. This means that we can now read and write to the Tier 1 page table, because the processor thinks it is a mapped frame. The figure below shows the five steps of such a broadcast:

Similarly, we can follow the recursive notation twice before starting the conversion, in order to reduce the number of levels passed to two:

Let's go through this procedure step by step. First, the CPU follows the recursive notation in the level 4 table and thinks it has reached the level 3 table. Then it follows again the recursive record and thinks it has reached level 2. But in fact it is still at level 4. Then the CPU goes to a new address and enters the table of level 3, but thinks that it is already in the table of level 1. Finally, at the next entry point in the table of level 2, the processor thinks that it turned to the frame of physical memory. This allows us to read and write to level 2 table.

It also access the tables of levels 3 and 4. To access the table of level 3, we follow the recursive write three times: the processor thinks that it is already in the table of level 1, and in the next step we reach level 3, which the CPU considers as a mapped frame. To access the level 4 table itself, we simply follow the recursive notation four times until the processor treats the level 4 table itself as the displayed frame (in blue in the figure below).

The concept is difficult to understand at first, but in practice it works quite well.

Address calculation

So, we can access the tables of all levels by following the recursive notation one or more times. Since the indices in the tables of four levels are derived directly from the virtual address, for this method you need to create special virtual addresses. As we remember, the indexes of the page table are extracted from the address as follows:

Suppose we want to access a level 1 table that displays a specific page. As we learned above, you need to go through the recursive notation once, and then through the indices of the 4th, 3rd and 2nd levels. To do this, we move all blocks of addresses one block to the right and set the index of the recursive record to the place of the initial level 4 index:

To access the table of level 2 of this page, we move all blocks of indices two blocks to the right and set the recursive index to the place of both source blocks: level 4 and level 3:

To access the table of level 3, we do the same, just moving three blocks of addresses to the right.

Finally, to access the level 4 table, move everything four blocks to the right.

Now you can calculate virtual addresses for page tables of all four levels. We can even calculate an address that points precisely to a specific page table entry by multiplying its index by 8, the size of the page table entry.

The table below shows the structure of addresses for accessing different types of frames:

| Virtual address for | Address structure ( octal ) |

|---|---|

| Page | 0o_SSSSSS_AAA_BBB_CCC_DDD_EEEE |

| Entry in table level 1 | 0o_SSSSSS_RRR_AAA_BBB_CCC_DDDD |

| Entry in table level 2 | 0o_SSSSSS_RRR_RRR_AAA_BBB_CCCC |

| Entry in table level 3 | 0o_SSSSSS_RRR_RRR_RRR_AAA_BBBB |

| Entry in table level 4 | 0o_SSSSSS_RRR_RRR_RRR_RRR_AAAA |

Here

DDD is level 1 index for the displayed frame, EEEE is its offset. RRR - recursive write index. The index (three digits) is converted to an offset (four digits) by multiplying by 8 (the size of the page table entry). At this offset, the resulting address directly points to the corresponding page table entry.SSSS are the bits of the sign bit extension, that is, they are all copies of bit 47. This is a special requirement for valid addresses in the x86_64 architecture, which we discussed in the previous article .The addresses are octal , because each octal character represents three bits, which allows you to clearly separate the 9-bit indexes of tables of different levels. This is not possible in hexadecimal, where each character represents four bits.

Rust Code

In Rust code, such addresses can be constructed with bitwise operations:

// the virtual address whose corresponding page tables you want to access
let addr: usize = […];

let r = 0o777; // recursive index
let sign = 0o177777 << 48; // sign extension

// retrieve the page table indices of the address that we want to translate
let l4_idx = (addr >> 39) & 0o777; // level 4 index
let l3_idx = (addr >> 30) & 0o777; // level 3 index
let l2_idx = (addr >> 21) & 0o777; // level 2 index
let l1_idx = (addr >> 12) & 0o777; // level 1 index
let page_offset = addr & 0o7777;

// calculate the table addresses
let level_4_table_addr =
    sign | (r << 39) | (r << 30) | (r << 21) | (r << 12);
let level_3_table_addr =
    sign | (r << 39) | (r << 30) | (r << 21) | (l4_idx << 12);
let level_2_table_addr =
    sign | (r << 39) | (r << 30) | (l4_idx << 21) | (l3_idx << 12);
let level_1_table_addr =
    sign | (r << 39) | (l4_idx << 30) | (l3_idx << 21) | (l2_idx << 12);

This code assumes that the last level 4 entry, with index 0o777 (511), is recursively mapped. This is currently not the case, so the code will not work yet. See below for how to tell the bootloader to set up a recursive mapping.

As an alternative to performing the bitwise operations by hand, you can use the RecursivePageTable type of the x86_64 crate, which provides safe abstractions for various page table operations. For example, the code below shows how to translate a virtual address to its mapped physical address:

// in src/memory.rs

use x86_64::structures::paging::{Mapper, Page, PageTable, RecursivePageTable};
use x86_64::{VirtAddr, PhysAddr};

// Create a RecursivePageTable instance from the level 4 address.
let level_4_table_addr = […];
let level_4_table_ptr = level_4_table_addr as *mut PageTable;
let recursive_page_table = unsafe {
    let level_4_table = &mut *level_4_table_ptr;
    RecursivePageTable::new(level_4_table).unwrap() // no `;`: the block returns the table
};

// Retrieve the physical address for the given virtual address
let addr: u64 = […];
let addr = VirtAddr::new(addr);
let page: Page = Page::containing_address(addr);

// perform the translation
let frame = recursive_page_table.translate_page(page);
frame.map(|frame| frame.start_address() + u64::from(addr.page_offset()))

Again, this code requires a valid recursive mapping. With such a mapping, the missing level_4_table_addr can be calculated as in the first code example.

Recursive paging is an interesting technique that shows how powerful a single mapping in a page table can be. It is relatively easy to implement and requires only minimal setup (just a single recursive entry), so it is a good choice for first experiments.
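Why a single recursive entry is enough can be checked with a tiny simulation of the hardware walk. The host-side sketch below models physical memory as a HashMap from frame addresses to 512-entry tables (a hypothetical stand-in; it ignores huge pages and every flag except PRESENT). Each time the walk follows entry 511 of the level 4 table, it "uses up" one level, so it ends on a page table frame instead of a data frame.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for physical memory: frame start address -> the
/// 512 entries stored in that frame (only page table frames are modeled).
type PhysMem = HashMap<u64, [u64; 512]>;

const PRESENT: u64 = 1;
/// Bits 12..=51 of an entry hold the physical frame address.
const ADDR_MASK: u64 = 0x000f_ffff_ffff_f000;

/// Simulates the CPU's four-level walk for 4 KiB pages, starting at the
/// level 4 table in frame `cr3`. Returns `None` for a non-present entry.
fn walk(mem: &PhysMem, cr3: u64, addr: u64) -> Option<u64> {
    let mut frame = cr3;
    for shift in [39, 30, 21, 12] {
        let index = ((addr >> shift) & 0o777) as usize;
        let entry = mem.get(&frame)?[index];
        if entry & PRESENT == 0 {
            return None;
        }
        // the CPU treats whatever frame this is as the next level's table
        frame = entry & ADDR_MASK;
    }
    Some(frame + (addr & 0o7777))
}
```

With the level 4 table in frame 0x1000 and its entry 511 pointing back to 0x1000, walking 0xffff_ffff_ffff_f000 ends at frame 0x1000 itself, and walking 0xffff_ff80_0000_0000 ends at the level 1 table that maps virtual address 0.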

However, recursive paging also has some disadvantages:

- It occupies a large amount of virtual memory (512 GiB). This isn't a big problem in the large 48-bit address space, but it might lead to suboptimal cache behavior.

- It only provides easy access to the currently active address space. Accessing other address spaces is still possible by changing the recursive entry, but a temporary mapping is required for switching back. We described how to do this in the previous (outdated) article.

- It heavily depends on the x86 page table format and might not work on other architectures.
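The 512 GiB figure in the first point follows from the table geometry: the recursive entry is a level 4 entry, and each level multiplies the 4 KiB page size by 512. A quick sketch of the arithmetic (the function name is illustrative):

```rust
/// Bytes of virtual address space covered by one entry at `level` (1..=4)
/// of the x86_64 four-level hierarchy, assuming 4 KiB pages.
const fn bytes_covered_by_entry(level: u32) -> u64 {
    // a level 1 entry maps one 4 KiB page; every level above multiplies by 512
    4096 * 512u64.pow(level - 1)
}

const GIB: u64 = 1 << 30;
```

A level 2 entry covers 2 MiB and a level 3 entry 1 GiB, which are also the sizes of the huge pages mentioned later in this article.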

Bootloader support

All of the approaches described above require page table modifications for their setup. For example, a mapping of the complete physical memory needs to be created, or an entry of the level 4 table needs to be mapped recursively. The problem is that we can't create these mappings without already having access to the page tables.

So we need help from the bootloader. The bootloader has access to the page tables, so it can create any mappings that we need. In its current implementation, the bootloader crate supports the two approaches mentioned above through cargo features:

- The map_physical_memory feature maps the complete physical memory somewhere into the virtual address space. Thus, the kernel has access to all physical memory and can follow the approach of mapping the complete physical memory.

- With the recursive_page_table feature, the bootloader recursively maps an entry of the level 4 page table. This allows the kernel to access the page tables as described in the Recursive Page Tables section.

For our kernel, we choose the first approach because it is simple, platform-independent, and more powerful (it also gives access to frames other than page table frames). To enable the required bootloader support, we add the map_physical_memory feature to our bootloader dependency:

[dependencies]
bootloader = { version = "0.4.0", features = ["map_physical_memory"]}

With this feature enabled, the bootloader maps the complete physical memory to some unused virtual address range. To communicate this virtual address range to our kernel, the bootloader passes a boot information structure.

Boot information

The bootloader crate defines a BootInfo struct that contains all the information it passes to our kernel. The struct is still being finalized, so expect some breakage when updating to future semver-incompatible bootloader versions. Currently, it has the two fields memory_map and physical_memory_offset:

- The memory_map field contains an overview of the available physical memory. It tells our kernel how much physical memory is available in the system and which memory regions are reserved for devices such as the VGA hardware. The memory map can be queried from the BIOS or UEFI firmware, but only very early in the boot process. For this reason, it must be provided by the bootloader, because the kernel has no way to retrieve it later. We will need the memory map later in this article.

- The physical_memory_offset field tells us the virtual start address of the physical memory mapping. By adding this offset to a physical address, we get the corresponding virtual address. This allows us to access arbitrary physical memory from our kernel.
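The offset rule in the last point is plain addition. As a host-side sketch (the helper name and the example offset value 0x100_0000_0000 are hypothetical; the real offset is whatever the bootloader reports at boot time):

```rust
/// Returns the virtual address under which the bootloader's mapping of the
/// complete physical memory makes `phys` accessible, or `None` if the sum
/// would overflow a 64-bit address.
fn phys_to_virt(phys: u64, physical_memory_offset: u64) -> Option<u64> {
    phys.checked_add(physical_memory_offset)
}
```

With an offset of 0x100_0000_0000, physical address 0 appears at 0x100_0000_0000 and the VGA buffer at physical 0xb8000 appears at 0x100_000b_8000. The kernel code later in this article performs the same addition with VirtAddr::new(phys + physical_memory_offset).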

The bootloader passes the BootInfo struct to our kernel in the form of a &'static BootInfo argument to our _start function. Let's add it:

// in src/main.rs

use bootloader::BootInfo;

#[cfg(not(test))]
#[no_mangle]
pub extern "C" fn _start(boot_info: &'static BootInfo) -> ! { // new argument
    […]
}

It is important to specify the correct argument type, since the compiler doesn't know the real type signature of our entry point function.

Entry Point Macro

Since our _start function is called externally by the bootloader, its signature is not checked. This means that we could let it take arbitrary arguments without any compilation errors, but it would fail or cause undefined behavior at runtime.

To make sure that the entry point function always has the correct signature, the bootloader crate provides an entry_point macro. Let's rewrite our function using this macro:

// in src/main.rs

use bootloader::{BootInfo, entry_point};

entry_point!(kernel_main);

#[cfg(not(test))]
fn kernel_main(boot_info: &'static BootInfo) -> ! {
    […]
}

We no longer need to use extern "C" or no_mangle for our entry point, because the macro defines the real lower-level _start entry point for us. The kernel_main function is now a completely normal Rust function, so we can choose an arbitrary name for it. The important part is that it is type-checked, so a compilation error occurs when we use a wrong signature, for example by adding an argument or changing the argument type.

Implementation

Now that we have access to physical memory, we can finally start implementing our page table code. First, we will take a look at the currently active page tables that our kernel runs on. In the second step, we will create a translation function that returns the physical address that a given virtual address is mapped to. As the last step, we will try to modify the page tables in order to create a new mapping.

First, we create a new memory module in our code:

// in src/lib.rs

pub mod memory;

For the module, we create an empty src/memory.rs file.

Access to page tables

At the end of the previous article, we tried to take a look at the page tables our kernel runs on, but failed because we couldn't access the physical frame that the CR3 register points to. We can now continue from there: the active_level_4_table function returns a reference to the active level 4 page table:

// in src/memory.rs

use x86_64::structures::paging::PageTable;

/// Returns a mutable reference to the active level 4 table.
///
/// This function is unsafe because the caller must guarantee that the
/// complete physical memory is mapped to virtual memory at the passed
/// `physical_memory_offset`. Also, this function must be only called once
/// to avoid aliasing `&mut` references (which is undefined behavior).
pub unsafe fn active_level_4_table(physical_memory_offset: u64) -> &'static mut PageTable {
    use x86_64::{registers::control::Cr3, VirtAddr};

    let (level_4_table_frame, _) = Cr3::read();

    let phys = level_4_table_frame.start_address();
    let virt = VirtAddr::new(phys.as_u64() + physical_memory_offset);
    let page_table_ptr: *mut PageTable = virt.as_mut_ptr();

    &mut *page_table_ptr // unsafe
}

First, we read the physical frame of the active level 4 table from the CR3 register. We then take its physical start address and convert it to a virtual address by adding physical_memory_offset. Finally, we convert the virtual address to a *mut PageTable raw pointer through the as_mut_ptr method and then unsafely create a &mut PageTable reference from it. We create a &mut reference instead of a & reference because we will modify the page tables later in this article.

We don't need an unsafe block here, because Rust treats the complete body of an unsafe fn like one large unsafe block. This makes our code more dangerous, since we could accidentally introduce an unsafe operation in earlier lines without noticing. It also makes it much harder to spot the unsafe operations. There is already an RFC to change this behavior of Rust.

Now we can use this function to print the entries of the level 4 table:

// in src/main.rs

#[cfg(not(test))]
fn kernel_main(boot_info: &'static BootInfo) -> ! {
    […] // initialize GDT, IDT, PICS

    use blog_os::memory::active_level_4_table;

    let l4_table = unsafe {
        active_level_4_table(boot_info.physical_memory_offset)
    };

    for (i, entry) in l4_table.iter().enumerate() {
        if !entry.is_unused() {
            println!("L4 Entry {}: {:?}", i, entry);
        }
    }

    println!("It did not crash!");
    blog_os::hlt_loop();
}

As the physical_memory_offset argument, we pass the corresponding field of the BootInfo struct. We then use the iter function to iterate over the page table entries and the enumerate combinator to add an index i to each element. We only print non-empty entries, because all 512 entries wouldn't fit on the screen.

When we run it, we see the following output:

We see several non-empty entries, which all map to different level 3 tables. There are so many regions because the kernel code, the kernel stack, the physical memory mapping, and the boot information all use separate memory areas.
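Each printed entry is a single 64-bit value that combines a physical frame address with flag bits. A host-side sketch of that split, assuming the x86_64 bit positions for a few common flags (PRESENT = bit 0, WRITABLE = bit 1, ACCESSED = bit 5, HUGE_PAGE = bit 7) and an address field in bits 12 to 51; the struct and function names are illustrative:

```rust
const PRESENT: u64 = 1 << 0;
const WRITABLE: u64 = 1 << 1;
const ACCESSED: u64 = 1 << 5;
const HUGE_PAGE: u64 = 1 << 7;
/// Bits 12..=51 hold the physical address of the next table or mapped frame.
const ADDR_MASK: u64 = 0x000f_ffff_ffff_f000;

#[derive(Debug, PartialEq)]
struct DecodedEntry {
    addr: u64,
    present: bool,
    writable: bool,
    accessed: bool,
    huge: bool,
}

/// Splits a raw page table entry into its frame address and selected flags.
fn decode(entry: u64) -> DecodedEntry {
    DecodedEntry {
        addr: entry & ADDR_MASK,
        present: entry & PRESENT != 0,
        writable: entry & WRITABLE != 0,
        accessed: entry & ACCESSED != 0,
        huge: entry & HUGE_PAGE != 0,
    }
}
```

For example, the raw value 0x2023 decodes to frame address 0x2000 with PRESENT, WRITABLE and ACCESSED set. Bit 63 (used for NO_EXECUTE) lies outside the address mask, so it does not disturb the address.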

To traverse the page tables further and take a look at a level 3 table, we can take the mapped frame of an entry and convert it to a virtual address again:

// in the for loop in src/main.rs

use x86_64::{structures::paging::PageTable, VirtAddr};

if !entry.is_unused() {
    println!("L4 Entry {}: {:?}", i, entry);

    // get the physical address from the entry and convert it
    let phys = entry.frame().unwrap().start_address();
    let virt = phys.as_u64() + boot_info.physical_memory_offset;
    let ptr = VirtAddr::new(virt).as_mut_ptr();
    let l3_table: &PageTable = unsafe { &*ptr };

    // print non-empty entries of the level 3 table
    for (i, entry) in l3_table.iter().enumerate() {
        if !entry.is_unused() {
            println!("  L3 Entry {}: {:?}", i, entry);
        }
    }
}

To view the level 2 and level 1 tables, we repeat this process for the level 3 and level 2 entries. As you can imagine, the code grows very quickly, so we don't show the full listing here.
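The repeated nested loops can also be folded into one recursive helper. The host-side sketch below walks a mocked hierarchy (a HashMap from frame address to 512-entry table, standing in for the physical memory mapping) and records every used entry, descending only into entries that point to another table. The names and the mock are assumptions for illustration; in the kernel, the table lookup would be the physical_memory_offset conversion shown above.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for physical memory holding page tables.
type PhysMem = HashMap<u64, [u64; 512]>;

const PRESENT: u64 = 1 << 0;
const HUGE_PAGE: u64 = 1 << 7;
const ADDR_MASK: u64 = 0x000f_ffff_ffff_f000;

/// Records `(level, index, raw_entry)` for every used entry reachable from the
/// table in frame `table`, in the same depth-first order as nested loops.
fn collect_entries(mem: &PhysMem, table: u64, level: u8, out: &mut Vec<(u8, usize, u64)>) {
    let Some(entries) = mem.get(&table) else { return };
    for (index, &entry) in entries.iter().enumerate() {
        if entry == 0 {
            continue; // unused, like `is_unused()` in the kernel code
        }
        out.push((level, index, entry));
        // level 1 entries and huge-page entries map frames, not tables
        let points_to_table = level > 1 && entry & PRESENT != 0 && entry & HUGE_PAGE == 0;
        if points_to_table {
            collect_entries(mem, entry & ADDR_MASK, level - 1, out);
        }
    }
}
```

Calling it with the level 4 frame and level 4 yields the same information as writing one loop per level, without the code growing with each level.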

Manually traversing the page tables is interesting because it helps to understand how the CPU performs the translation. However, most of the time we are only interested in the physical address that a given virtual address is mapped to, so let's create a function for that.

Address Translation

To translate a virtual address to a physical address, we have to traverse the four-level page table until we reach the mapped frame. Let's create a function that performs this translation:

// in src/memory.rs

use x86_64::{PhysAddr, VirtAddr};

/// Translates the given virtual address to the mapped physical address, or
/// `None` if the address is not mapped.
///
/// This function is unsafe because the caller must guarantee that the
/// complete physical memory is mapped to virtual memory at the passed
/// `physical_memory_offset`.
pub unsafe fn translate_addr(addr: VirtAddr, physical_memory_offset: u64)
    -> Option<PhysAddr>
{
    translate_addr_inner(addr, physical_memory_offset)
}

We forward the call to a safe translate_addr_inner function to limit the scope of unsafe. As we noted above, Rust treats the complete body of an unsafe fn like one large unsafe block. By calling into a private safe function, we make each unsafe operation explicit again.

The private inner function contains the real implementation:

// in src/memory.rs

/// Private function that is called by `translate_addr`.
///
/// This function is safe to limit the scope of `unsafe` because Rust treats
/// the whole body of unsafe functions as an unsafe block. This function must
/// only be reachable through `unsafe fn` from outside of this module.
fn translate_addr_inner(addr: VirtAddr, physical_memory_offset: u64)
    -> Option<PhysAddr>
{
    use x86_64::structures::paging::page_table::FrameError;
    use x86_64::registers::control::Cr3;

    // read the active level 4 frame from the CR3 register
    let (level_4_table_frame, _) = Cr3::read();

    let table_indexes = [
        addr.p4_index(), addr.p3_index(), addr.p2_index(), addr.p1_index()
    ];
    let mut frame = level_4_table_frame;

    // traverse the multi-level page table
    for &index in &table_indexes {
        // convert the frame into a page table reference
        let virt = frame.start_address().as_u64() + physical_memory_offset;
        let table_ptr: *const PageTable = VirtAddr::new(virt).as_ptr();
        let table = unsafe {&*table_ptr};

        // read the page table entry and update `frame`
        let entry = &table[index];
        frame = match entry.frame() {
            Ok(frame) => frame,
            Err(FrameError::FrameNotPresent) => return None,
            Err(FrameError::HugeFrame) => panic!("huge pages not supported"),
        };
    }

    // calculate the physical address by adding the page offset
    Some(frame.start_address() + u64::from(addr.page_offset()))
}

Instead of reusing our active_level_4_table function, we read the level 4 frame from the CR3 register again, because this simplifies this prototype implementation. Don't worry, we will use a better solution in a moment.

The VirtAddr struct already provides methods to compute the indexes into the page tables of the four levels. We store these indexes in a small array because it allows us to traverse the page tables using a for loop. Outside of the loop, we remember the last visited frame so that we can calculate the physical address later. The frame variable points to page table frames while iterating, and to the mapped frame after the last iteration, i.e. after following the level 1 entry.

Inside the loop, we again use physical_memory_offset to convert the frame into a page table reference. We then read the entry of the current page table and use the PageTableEntry::frame function to retrieve the mapped frame. If the entry is not mapped to a frame, we return None. If the entry maps a huge 2 MiB or 1 GiB page, we panic for now.

Let's test our translation function by translating some addresses:

// in src/main.rs

#[cfg(not(test))]
fn kernel_main(boot_info: &'static BootInfo) -> ! {
    […] // initialize GDT, IDT, PICS

    use blog_os::memory::translate_addr;
    use x86_64::VirtAddr;

    let addresses = [
        // the identity-mapped vga buffer page
        0xb8000,
        // some code page
        0x20010a,
        // some stack page
        0x57ac_001f_fe48,
        // virtual address mapped to physical address 0
        boot_info.physical_memory_offset,
    ];

    for &address in &addresses {
        let virt = VirtAddr::new(address);
        let phys = unsafe { translate_addr(virt, boot_info.physical_memory_offset) };
        println!("{:?} -> {:?}", virt, phys);
    }

    println!("It did not crash!");
    blog_os::hlt_loop();
}

When we run it, we see the following output:

As expected, the identity-mapped address 0xb8000 translates to the same physical address. The code page and the stack page translate to some arbitrary physical addresses, which depend on how the bootloader created the initial mapping for our kernel. The physical_memory_offset address should be mapped to physical address 0, but the translation panics because this mapping uses huge pages for efficiency, which our function does not support yet. Future bootloader versions might apply the same optimization to the kernel and stack pages.

Using MappedPageTable

Translating virtual addresses to physical addresses is a common task in an OS kernel, therefore the x86_64 crate provides an abstraction for it. The implementation already supports huge pages and several other page table functions apart from translate_addr, so we will use it instead of adding huge page support to our own implementation.

The basis of the abstraction are two traits that define various page table mapping functions:

- The Mapper trait provides functions that work on pages. Examples are translate_page, which translates a given page to a frame of the same size, and map_to, which creates a new mapping in the page table.

- The MapperAllSizes trait implies that the implementor implements Mapper for all page sizes. In addition, it provides functions that work with multiple page sizes, such as translate_addr or the general translate.
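What "working with multiple page sizes" boils down to: the part of the virtual address that is copied unchanged into the physical address (the page offset) is 12, 21 or 30 bits wide, depending on whether the page is 4 KiB, 2 MiB or 1 GiB. The host-side sketch below expresses this with a trait that carries the size as an associated constant, which is similar in spirit to how the crate makes Mapper generic over a page size; the trait and type names here are hypothetical and are not the x86_64 crate's own definitions.

```rust
/// Hypothetical page-size marker trait: each size is a type with a constant.
trait PageSizeLike {
    const SIZE: u64;
}

struct Size4K;
struct Size2M;
struct Size1G;

impl PageSizeLike for Size4K {
    const SIZE: u64 = 4096;
}
impl PageSizeLike for Size2M {
    const SIZE: u64 = 4096 * 512;
}
impl PageSizeLike for Size1G {
    const SIZE: u64 = 4096 * 512 * 512;
}

/// Physical address of `virt`, given the start of the frame that its page of
/// size `S` is mapped to: the low log2(S::SIZE) bits are the page offset.
fn translate_within<S: PageSizeLike>(frame_start: u64, virt: u64) -> u64 {
    frame_start + (virt & (S::SIZE - 1))
}
```

Because the size is part of the type, the compiler picks the right offset mask at compile time, and a function that only handles one size can say so in its signature.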

The traits only define the interface; they don't provide any implementation. The x86_64 crate currently provides two types that implement the traits: MappedPageTable and RecursivePageTable. The former requires that each page table frame is mapped somewhere (e.g. at an offset). The latter can be used when the level 4 table is mapped recursively.

We have the complete physical memory mapped at physical_memory_offset, so we can use the MappedPageTable type. To initialize it, we create a new init function in our memory module:

use x86_64::structures::paging::{PhysFrame, MapperAllSizes, MappedPageTable};
use x86_64::PhysAddr;

/// Initialize a new MappedPageTable.
///
/// This function is unsafe because the caller must guarantee that the
/// complete physical memory is mapped to virtual memory at the passed
/// `physical_memory_offset`. Also, this function must be only called once
/// to avoid aliasing `&mut` references (which is undefined behavior).
pub unsafe fn init(physical_memory_offset: u64) -> impl MapperAllSizes {
    let level_4_table = active_level_4_table(physical_memory_offset);
    let phys_to_virt = move |frame: PhysFrame| -> *mut PageTable {
        let phys = frame.start_address().as_u64();
        let virt = VirtAddr::new(phys + physical_memory_offset);
        virt.as_mut_ptr()
    };
    MappedPageTable::new(level_4_table, phys_to_virt)
}

// make private
unsafe fn active_level_4_table(physical_memory_offset: u64) -> &'static mut PageTable {…}

We can't return a MappedPageTable directly from the function because it is generic over a closure type. We work around this problem by using the impl Trait syntax. An additional advantage is that we can later switch our kernel to RecursivePageTable without changing the function signature.

The MappedPageTable::new function expects two parameters: a mutable reference to the level 4 page table and a phys_to_virt closure that converts a physical frame to a page table pointer *mut PageTable. For the first parameter, we can reuse our active_level_4_table function. For the second parameter, we create a closure that uses physical_memory_offset to perform the conversion.

We also make active_level_4_table a private function, because from now on it will only be called from init.

To use the MapperAllSizes::translate_addr method instead of our own memory::translate_addr function, we only need to change a few lines in kernel_main:

// in src/main.rs

#[cfg(not(test))]
fn kernel_main(boot_info: &'static BootInfo) -> ! {
    […] // initialize GDT, IDT, PICS

    // new: different imports
    use blog_os::memory;
    use x86_64::{structures::paging::MapperAllSizes, VirtAddr};

    // new: initialize a mapper
    let mapper = unsafe { memory::init(boot_info.physical_memory_offset) };

    let addresses = […]; // same as before

    for &address in &addresses {
        let virt = VirtAddr::new(address);
        // new: use the `mapper.translate_addr` method
        let phys = mapper.translate_addr(virt);
        println!("{:?} -> {:?}", virt, phys);
    }

    println!("It did not crash!");
    blog_os::hlt_loop();
}

When we run it, we see the same translation results as before, with the difference that the huge page translation now also works:

As expected, the virtual address physical_memory_offset is translated to the physical address 0x0. By using the translation function of the MappedPageTable type, we spare ourselves the work of implementing huge page support. We also get access to other page functions such as map_to, which we will use in the next section. At this point we no longer need our memory::translate_addr function, so you can delete it if you want.

Create a new mapping

Until now, we only looked at the page tables, but did not change anything. Let's create a new mapping for a previously unmapped page.
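At the lowest level, a new 4 KiB mapping is a single level 1 entry that combines the frame's physical address with flag bits. A host-side sketch of building such an entry, assuming the x86_64 positions PRESENT = bit 0 and WRITABLE = bit 1 and a 4 KiB-aligned frame; the helper name is illustrative. The map_to function used below does this for us, in addition to creating any missing intermediate tables.

```rust
const PRESENT: u64 = 1 << 0;
const WRITABLE: u64 = 1 << 1;
/// Physical address bits 12..=51 of a page table entry.
const ADDR_MASK: u64 = 0x000f_ffff_ffff_f000;

/// Builds a raw level 1 entry that maps to the 4 KiB frame at `frame_addr`.
/// Returns `None` if the address is not 4 KiB-aligned or does not fit into
/// the entry's address field, or if `flags` overlaps the address bits.
fn make_entry(frame_addr: u64, flags: u64) -> Option<u64> {
    if frame_addr & !ADDR_MASK != 0 || flags & ADDR_MASK != 0 {
        return None;
    }
    Some(frame_addr | flags)
}
```

For the VGA buffer frame used below, make_entry(0xb8000, PRESENT | WRITABLE) yields 0xb8003, while a misaligned address such as 0xb8010 is rejected.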

We will use the map_to function of the Mapper trait for our implementation, so let's take a look at that function first. The documentation tells us that it takes four arguments: the page that we want to map, the frame that the page should be mapped to, a set of flags for the page table entry, and a frame_allocator. The frame allocator is needed because mapping the given page might require creating additional page tables, which need unused frames as backing storage.

The create_example_mapping function

The first step of our implementation is to create a new create_example_mapping function that maps a given virtual page to 0xb8000, the physical frame of the VGA text buffer. We choose that frame because it allows us to easily test whether the mapping was created correctly: we just need to write to the newly mapped page and see whether the write appears on the screen.

The create_example_mapping function looks like this:

// in src/memory.rs

use x86_64::structures::paging::{Page, Size4KiB, Mapper, FrameAllocator};

/// Creates an example mapping for the given page to frame `0xb8000`.
pub fn create_example_mapping(
    page: Page,
    mapper: &mut impl Mapper<Size4KiB>,
    frame_allocator: &mut impl FrameAllocator<Size4KiB>,
) {
    use x86_64::structures::paging::PageTableFlags as Flags;

    let frame = PhysFrame::containing_address(PhysAddr::new(0xb8000));
    let flags = Flags::PRESENT | Flags::WRITABLE;

    let map_to_result = unsafe {
        mapper.map_to(page, frame, flags, frame_allocator)
    };
    map_to_result.expect("map_to failed").flush();
}

In addition to the page that should be mapped, the function expects a mapper instance and a frame_allocator. The mapper type implements the Mapper<Size4KiB> trait, which provides the map_to method. The generic Size4KiB parameter is needed because the Mapper trait is generic over the PageSize trait, so that it works with both standard 4 KiB pages and huge 2 MiB and 1 GiB pages. We only want to create 4 KiB pages, so we can use Mapper<Size4KiB> instead of requiring MapperAllSizes.

For the mapping, we set the PRESENT flag because it is required for all valid entries, and the WRITABLE flag to make the mapped page writable. Calling map_to is unsafe because it's possible to break memory safety with invalid arguments, so we have to use an unsafe block. For a list of all possible flags, see the “Page Table Format” section of the previous article.

The map_to function can fail, so it returns a Result. Since this is just example code that does not need to be robust, we simply use expect to panic when an error occurs. On success, the function returns a MapperFlush type that provides an easy way to flush the newly mapped page from the translation lookaside buffer (TLB) with its flush method. Like Result, the type uses the #[must_use] attribute to emit a warning when we accidentally forget to use it.

A dummy FrameAllocator

To be able to call create_example_mapping, we first need to create a FrameAllocator. As noted above, the difficulty of creating a new mapping depends on the virtual page that we want to map. In the easiest case, the level 1 page table for the page already exists and we just need to write a single entry. In the most difficult case, the page is in a memory region for which no level 3 table exists yet, so we need to create new level 3, level 2, and level 1 page tables first.

Let's start with the simple case and assume that we don't need to create new page tables. For this, a frame allocator that always returns None suffices. We create such an EmptyFrameAllocator for testing our mapping function:

// in src/memory.rs

/// A FrameAllocator that always returns `None`.
pub struct EmptyFrameAllocator;

impl FrameAllocator<Size4KiB> for EmptyFrameAllocator {
    fn allocate_frame(&mut self) -> Option<PhysFrame> {
        None
    }
}

Now we need to find a page that we can map without creating new page tables. The bootloader loads itself into the first megabyte of the virtual address space, so we know that a valid level 1 table exists for this region. For our example, we can choose any unused page in this memory region, for example the page at address 0x1000.

To test our mapping function, we first map page 0x1000 and then try to write to the screen through the new mapping:

// in src/main.rs

#[cfg(not(test))]
fn kernel_main(boot_info: &'static BootInfo) -> ! {
    […] // initialize GDT, IDT, PICS

    use blog_os::memory;
    use x86_64::{structures::paging::Page, VirtAddr};

    let mut mapper = unsafe { memory::init(boot_info.physical_memory_offset) };
    let mut frame_allocator = memory::EmptyFrameAllocator;

    // map a previously unmapped page
    let page = Page::containing_address(VirtAddr::new(0x1000));
    memory::create_example_mapping(page, &mut mapper, &mut frame_allocator);

    // write the string `New!` to the screen through the new mapping
    let page_ptr: *mut u64 = page.start_address().as_mut_ptr();
    unsafe { page_ptr.offset(400).write_volatile(0x_f021_f077_f065_f04e)};

    println!("It did not crash!");
    blog_os::hlt_loop();
}

We first create the mapping for the page at 0x1000 by calling our create_example_mapping function with mutable references to the mapper and frame_allocator instances. This maps the page 0x1000 to the VGA text buffer frame, so we should see anything we write to it on the screen.

Then we convert the page to a raw pointer and write a value at offset 400. We don't write to the start of the page because the top line of the VGA buffer is directly shifted off the screen by the next println. We write the value 0x_f021_f077_f065_f04e, which represents the string “New!” on a white background. As we learned in the “VGA Text Mode” article, writes to the VGA buffer should be volatile, so we use the write_volatile method.

When we run it in QEMU, we see the following output:

After writing to page 0x1000, the text “New!” appears on the screen. Thus, we have successfully created a new mapping in the page tables.

The mapping only worked because a level 1 table for mapping page 0x1000 already existed. When we try to map a page for which no level 1 table exists yet, the map_to function fails, because it tries to allocate frames for the new page tables from the EmptyFrameAllocator. We can see this happen when we try to map page 0xdeadbeaf000 instead of 0x1000:

// in src/main.rs

#[cfg(not(test))]
fn kernel_main(boot_info: &'static BootInfo) -> ! {
    […]
    let page = Page::containing_address(VirtAddr::new(0xdeadbeaf000));
    […]
}

When we run it, a panic with the following error message occurs:

panicked at 'map_to failed: FrameAllocationFailed', /…/result.rs:999:5

To map pages that don't have a level 1 page table yet, we need to create a proper FrameAllocator. But how do we know which frames are unused and how much physical memory is available?

Frame allocation

To create new page tables, we need a proper frame allocator. Let's start with a generic skeleton:

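// in src/memory.rs

pub struct BootInfoFrameAllocator<I> where I: Iterator<Item = PhysFrame> {
    frames: I,
}

impl<I> FrameAllocator<Size4KiB> for BootInfoFrameAllocator<I>
    where I: Iterator<Item = PhysFrame>
{
    fn allocate_frame(&mut self) -> Option<PhysFrame> {
        self.frames.next()
    }
}

The frames field can be initialized with an arbitrary iterator of frames. This allows us to simply delegate alloc calls to the Iterator::next method.

To initialize the BootInfoFrameAllocator, we use the memory_map that the bootloader passes as part of the BootInfo struct. As explained in the “Boot information” section, the memory map is provided by the BIOS/UEFI firmware. It can only be queried very early in the boot process, so the bootloader already calls the respective functions for us. The memory map consists of a list of MemoryRegion structs, which contain the start address, the length, and the type (e.g. unused, reserved, etc.) of each memory region. By creating an iterator that yields frames from unused regions, we can create a valid BootInfoFrameAllocator.

The BootInfoFrameAllocator is initialized in a new init_frame_allocator function:

// in src/memory.rs

use bootloader::bootinfo::{MemoryMap, MemoryRegionType};

/// Create a FrameAllocator from the passed memory map
pub fn init_frame_allocator(
    memory_map: &'static MemoryMap,
) -> BootInfoFrameAllocator<impl Iterator<Item = PhysFrame>> {
    // get usable regions from memory map
    let regions = memory_map
        .iter()
        .filter(|r| r.region_type == MemoryRegionType::Usable);
    // map each region to its address range
    let addr_ranges = regions.map(|r| r.range.start_addr()..r.range.end_addr());
    // transform to an iterator of frame start addresses
    let frame_addresses = addr_ranges.flat_map(|r| r.step_by(4096));
    // create `PhysFrame` types from the start addresses
    let frames = frame_addresses.map(|addr| {
        PhysFrame::containing_address(PhysAddr::new(addr))
    });

    BootInfoFrameAllocator { frames }
}

This function uses iterator combinator methods to transform the initial MemoryMap into an iterator of usable physical frames:

- First, we call the iter method to convert the memory map into an iterator of MemoryRegions. Then we use the filter method to skip any reserved or otherwise unavailable regions. The bootloader updates the memory map for all the mappings it creates, so frames that are used by our kernel (code, data, or stack) or to store the boot information are already marked as InUse or similar. Thus, we can be sure that Usable frames are not used somewhere else.

- In the second step, we use the map combinator and Rust's range syntax to transform our iterator of memory regions into an iterator of address ranges.

- The third step is the most complicated: since each range is itself an iterator, we call step_by on it to choose every 4096th address. Because 4096 bytes (= 4 KiB) is the page size, we get the start address of each frame. The bootloader page-aligns all usable memory areas, so we don't need any alignment or rounding code here. By using flat_map instead of map, we get an Iterator<Item = u64> instead of an Iterator<Item = Iterator<Item = u64>>.

- In the final step, we convert the start addresses to PhysFrame types to construct the desired Iterator<Item = PhysFrame>. We then use this iterator to create and return a new BootInfoFrameAllocator.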
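The combinator chain of init_frame_allocator can be tried out on the host with a made-up memory map. In this sketch, Region and RegionKind are simplified, hypothetical stand-ins for the bootloader's MemoryRegion and MemoryRegionType, frames are plain u64 start addresses instead of PhysFrame, and IterFrameAllocator is an illustrative analogue of BootInfoFrameAllocator:

```rust
use std::ops::Range;

/// Simplified, hypothetical stand-in for the bootloader's region type.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
enum RegionKind {
    Usable,
    Reserved,
}

/// Simplified, hypothetical stand-in for a memory map entry.
struct Region {
    range: Range<u64>,
    kind: RegionKind,
}

/// Same pipeline as `init_frame_allocator`: keep usable regions, turn them into
/// address ranges, and step through each range in 4096-byte (4 KiB) steps.
fn usable_frames(regions: &[Region]) -> impl Iterator<Item = u64> + '_ {
    regions
        .iter()
        .filter(|r| r.kind == RegionKind::Usable)
        .map(|r| r.range.clone())
        .flat_map(|r| r.step_by(4096))
}

/// Allocator in the style of `BootInfoFrameAllocator`: each call hands out
/// the next frame start address from the iterator.
struct IterFrameAllocator<I: Iterator<Item = u64>> {
    frames: I,
}

impl<I: Iterator<Item = u64>> IterFrameAllocator<I> {
    fn allocate_frame(&mut self) -> Option<u64> {
        self.frames.next()
    }
}
```

With the usable regions 0x0..0x3000 and 0x10000..0x12000 and a reserved region in between, the pipeline yields the frames 0x0, 0x1000, 0x2000, 0x10000 and 0x11000, and the allocator hands them out in that order.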

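Now we can modify our kernel_main function to pass a BootInfoFrameAllocator instance instead of an EmptyFrameAllocator:

// in src/main.rs

#[cfg(not(test))]
fn kernel_main(boot_info: &'static BootInfo) -> ! {
    […]
    let mut frame_allocator = memory::init_frame_allocator(&boot_info.memory_map);
    […]
}

This time the mapping succeeds and we see the “New!” text on the screen again.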
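Why the value 0x_f021_f077_f065_f04e shows up as “New!”: x86 is little-endian, so the u64 lands in memory as four 16-bit VGA cells, each holding an ASCII byte (low) and a color attribute byte (high). The attribute 0xf0 selects background 0xf and foreground 0x0. The offset 400 counts u64 values, not bytes. A host-side sketch of both calculations, assuming the 80-column text mode used earlier in this series (the function names are illustrative):

```rust
/// Splits a u64 written to the VGA text buffer into its four 16-bit cells.
/// x86 is little-endian, so the least significant u16 is the first cell;
/// each cell holds the ASCII byte (low) and the color attribute byte (high).
fn decode_vga_cells(value: u64) -> [(char, u8); 4] {
    let mut cells = [(' ', 0u8); 4];
    for (i, cell) in cells.iter_mut().enumerate() {
        let raw = (value >> (16 * i)) as u16;
        *cell = ((raw & 0xff) as u8 as char, (raw >> 8) as u8);
    }
    cells
}

/// Screen position (row, column) of the first cell written by
/// `ptr.offset(n)` on a `*mut u64` that points at the buffer start,
/// assuming 80 columns and 2 bytes per cell.
fn vga_position_of_u64_offset(n: usize) -> (usize, usize) {
    let cell = n * 8 / 2; // 8 bytes per u64, 2 bytes per cell
    (cell / 80, cell % 80)
}
```

Offset 400 is byte 3200, i.e. cell 1600, which is row 20, column 0: well below the top line that the following println scrolls away.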

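When map_to has to map a page whose page tables do not exist yet, it creates the missing tables in the following way:

- It allocates an unused frame from the passed frame_allocator.

- It zeroes the frame to create a new, empty page table.

- It maps the entry of the higher-level table to that frame.

- It continues with the next table level.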
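When map_to needs page tables that do not exist yet, it has to allocate, zero, and link new table frames level by level. The host-side simulation below is not the x86_64 crate's implementation: it models physical memory as a HashMap of 512-entry tables, takes the frame allocator as a closure, reuses the leaf flags for new intermediate entries (a simplification), and ignores huge pages. It reproduces both outcomes described in this article: an allocator that never returns a frame fails with FrameAllocationFailed, while a working one creates the three missing tables.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for physical memory holding page tables.
type PhysMem = HashMap<u64, [u64; 512]>;

const PRESENT: u64 = 1 << 0;
const WRITABLE: u64 = 1 << 1;
const ADDR_MASK: u64 = 0x000f_ffff_ffff_f000;

#[derive(Debug, PartialEq)]
enum MapError {
    FrameAllocationFailed,
    AlreadyMapped,
}

/// Maps the 4 KiB page containing `virt` to `frame` in the hierarchy rooted
/// at `cr3`, creating missing level 3/2/1 tables with frames from `alloc`.
fn map_to(
    mem: &mut PhysMem,
    cr3: u64,
    virt: u64,
    frame: u64,
    flags: u64,
    alloc: &mut impl FnMut() -> Option<u64>,
) -> Result<(), MapError> {
    let mut table = cr3;
    for shift in [39, 30, 21] {
        let index = ((virt >> shift) & 0o777) as usize;
        let entry = mem.entry(table).or_insert([0; 512])[index];
        table = if entry & PRESENT != 0 {
            entry & ADDR_MASK
        } else {
            // allocate an unused frame for the missing table
            let new_table = alloc().ok_or(MapError::FrameAllocationFailed)?;
            // zero it to get an empty page table
            mem.insert(new_table, [0; 512]);
            // point the higher-level entry at it (reusing `flags` is a simplification)
            mem.get_mut(&table).unwrap()[index] = new_table | PRESENT | flags;
            new_table
        };
        // continue with the next level
    }
    let l1 = mem.entry(table).or_insert([0; 512]);
    let index = ((virt >> 12) & 0o777) as usize;
    if l1[index] & PRESENT != 0 {
        return Err(MapError::AlreadyMapped);
    }
    l1[index] = frame | PRESENT | flags;
    Ok(())
}
```

Starting from an empty level 4 table, mapping 0xdead_beaf_000 consumes exactly three frames from a working allocator, one each for the new level 3, level 2 and level 1 tables.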

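While our create_example_mapping function is just example code, we are now able to create new mappings for arbitrary pages. This will be essential for allocating memory or implementing multithreading in future articles.

Summary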

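In this article we learned about different techniques for accessing the physical frames of page tables, including identity mapping, mapping the complete physical memory, temporary mapping, and recursive page tables. We chose to map the complete physical memory because it is simple, portable, and powerful.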

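We can't map the physical memory from our kernel without page table access, so we needed support from the bootloader.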

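The bootloader crate supports creating the required mapping through optional cargo features. It passes the required information to our kernel in the form of a &BootInfo argument to our entry point function.

For our implementation, we first manually traversed the page tables to implement a translation function, and then used the MappedPageTable type of the x86_64 crate. We also learned how to create new mappings in the page table and how to create the necessary FrameAllocator on top of the memory map passed by the bootloader.

What's next?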

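The next article will create a heap memory region for our kernel, which will allow us to allocate memory and use various collection types.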

Source: https://habr.com/ru/post/445618/
