There are 17 billion computers in your brain

A neural network of neural networks

Image: brentsview, licensed under CC BY-NC 2.0

The brain receives information from the outside world: its neurons take in data as input, process it, and produce a result. That result could be a thought (I want a curry for dinner), an action (making a curry), or a change in mood (hurray, curry!). Whatever comes out, that “something” is a transformation of input data (the menu) into an output (“chicken dhansak, please”). And if you picture the brain as a device that converts inputs into outputs, the analogy with a computer is inevitable.


For some, this is just a useful rhetorical device; for others, it is a serious idea. But the brain is not a computer. Every neuron is a computer. There are 17 billion computers in the cerebral cortex.

Look at this:

A pyramidal neuron projected into 2D. The black spot in the middle is the body of the neuron, and the wires around it are its dendrites. Image: Alain Destexhe

This is an image of a pyramidal neuron. These cells make up most of your cortex. The spot in the center is the body of the neuron; stretching and branching out in all directions are the dendrites, winding wires that collect input from other neurons, both near and far. Inputs arrive along the entire length of each dendrite, some right next to the body and others far away at the tips. Where exactly an input arrives matters.

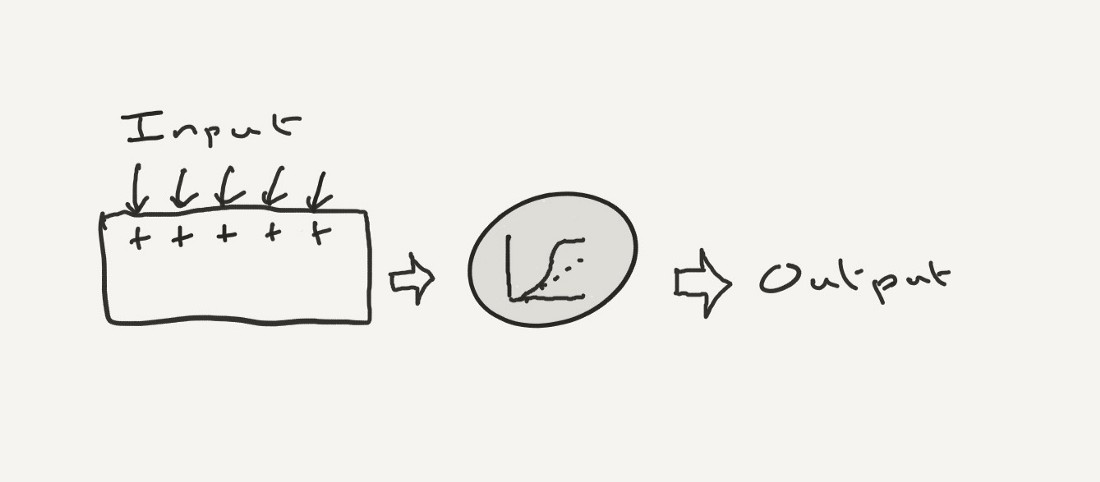

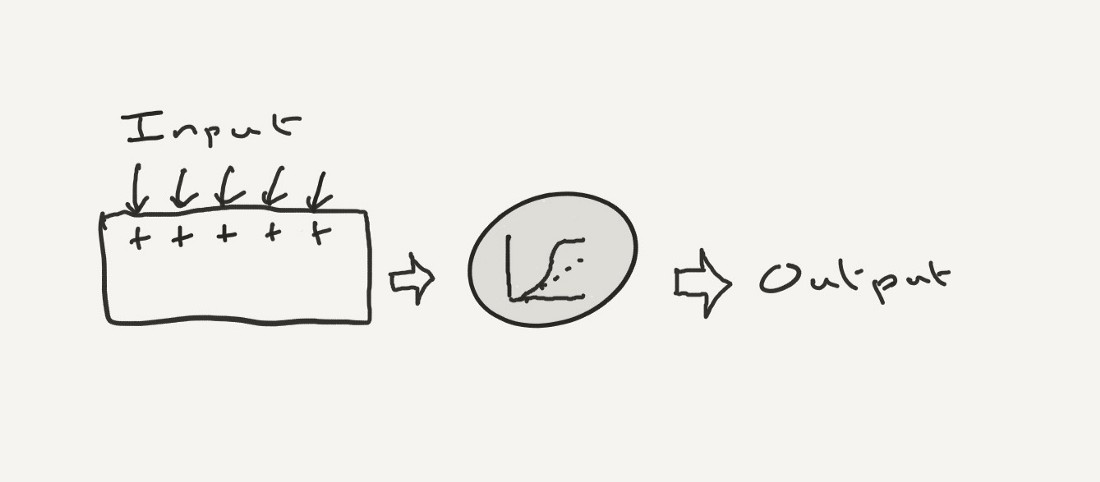

Many people underestimate how much the location of an input matters. The work of a neuron is usually reduced to that of a simple adder. In this view, dendrites are merely devices for collecting inputs. Activating each input on its own slightly changes the voltage of the neuron. If the summed input from all the dendrites is large enough, the neuron generates an action potential (a spike), which travels down the axon and becomes an input to other neurons.

Model of a neuron that sums its input signals and generates an action potential if the sum of the inputs exceeds a threshold (gray circle)
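The simple adder model described above can be sketched in a few lines. This is only an illustration: the function name `point_neuron`, the equal weights, and the threshold value are assumptions chosen for the example, not values from the article.

```python
def point_neuron(inputs, weights, threshold):
    """Simple adder: weighted sum of all inputs, spike (1) if it reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


# Hypothetical values: four equally strong inputs, spike when at least three are active.
weights = [1.0, 1.0, 1.0, 1.0]
threshold = 3.0

print(point_neuron([1, 1, 0, 0], weights, threshold))  # two active inputs: prints 0
print(point_neuron([1, 1, 1, 0], weights, threshold))  # three active inputs: prints 1
```

Note that in this model it makes no difference which inputs are active or where they arrive; only the total counts.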

This is a convenient mental model, and it is the basis of all artificial neural networks. But it is wrong.

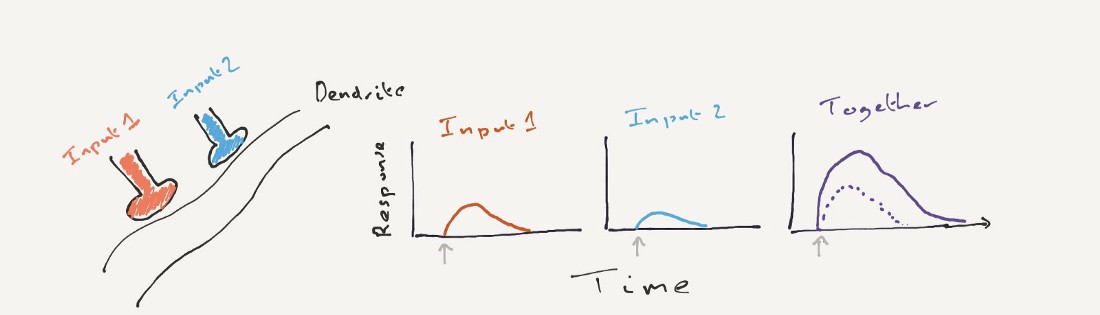

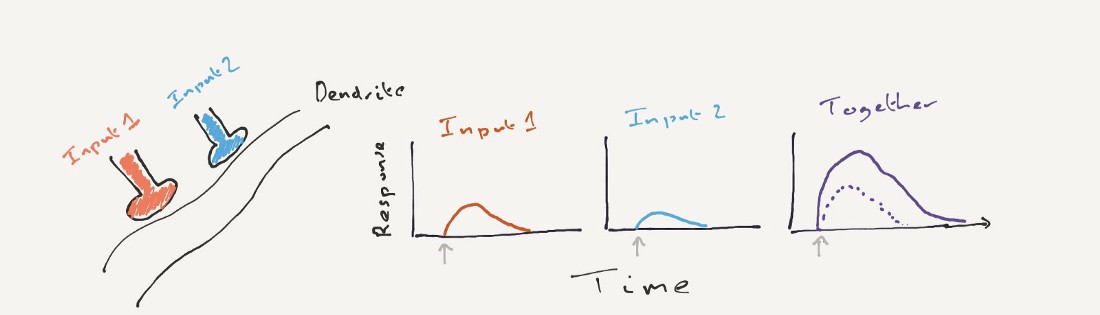

Dendrites are not just pieces of wire. They have their own machinery for generating spikes. If enough inputs are activated on one small part of a dendrite, their combined response is amplified:

The two colored blobs are two inputs to one part of the dendrite. When activated on its own, each produces a response (here, "response" means a change in voltage); the gray arrow marks the moment the input is activated. When both are activated together, the response (solid line) is larger than the sum of the individual responses (dashed line)

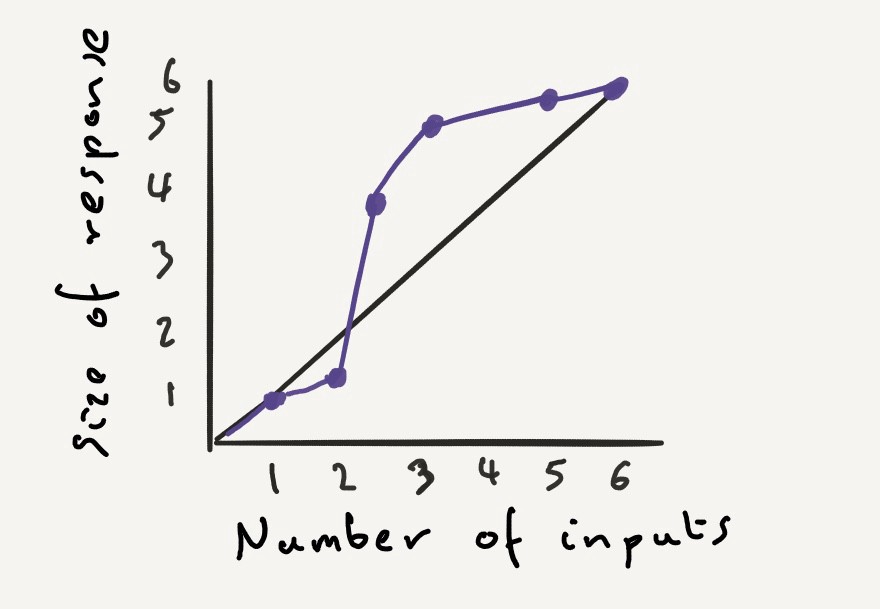

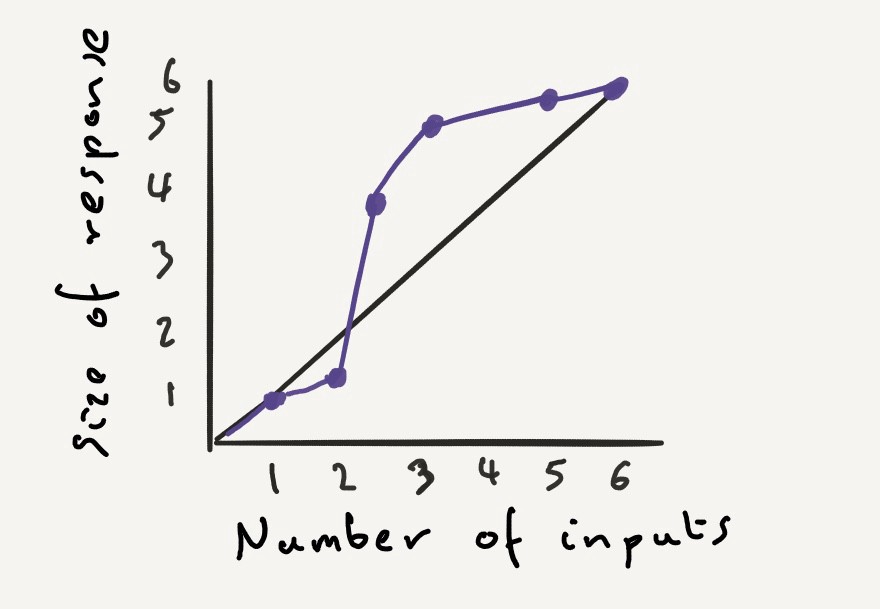

The relationship between the number of active inputs and the size of the response in a small part of the dendrite looks like this:

Size of the response in one branch of the dendrite as the number of active inputs increases. The local spike is the sharp jump from a minimal response to a large one

We see a local spike: a sudden jump from an almost zero response to a few inputs to a very large response when just one more is added. This section of the dendrite works "superlinearly": here, 2 + 2 = 6.
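A toy version of this response curve makes the "2 + 2 = 6" idea concrete. The step shape and all numbers here (`spike_threshold`, `subthreshold_gain`, `spike_size`) are assumptions for illustration, not measurements.

```python
def branch_response(n_active, spike_threshold=4, subthreshold_gain=0.2, spike_size=6.0):
    """Response of one dendritic branch to n_active simultaneously active inputs.

    Below the (hypothetical) threshold the response is small and linear;
    at the threshold the branch produces a large local spike.
    """
    if n_active >= spike_threshold:
        return spike_size
    return subthreshold_gain * n_active


for n in range(7):
    print(n, branch_response(n))

# Superlinearity: two pairs of inputs activated separately give two small
# responses, but the same four inputs activated together give a local spike.
separate = branch_response(2) + branch_response(2)
together = branch_response(4)
print(together > separate)  # prints True
```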

We have known about these local spikes in parts of the dendrite for many years. We have seen them in neurons in brain slices. We have seen them in anesthetized animals whose paws were tickled (yes, the unconscious brain still feels things; it just does not bother to respond). Most recently, we have seen them in the dendrites of neurons in moving animals (yes, Moore and colleagues recorded an EM field a few micrometers from the brain of a running mouse; crazy, right?). The dendrites of pyramidal neurons really do generate spikes.

But why does the local spike change how we think about the brain as a computer? Because the dendrites of a pyramidal neuron have many separate branches, and each one can sum its inputs and produce a local spike. This means that each branch of the dendrite acts as a small nonlinear output device, summing its inputs and emitting a local spike if the branch receives enough inputs at about the same time:

Déjà vu. A single dendritic branch acts as a small device that sums its inputs and emits a spike if enough inputs arrive at the same time. The transformation from input to output (gray circle) is the same graph we saw above, which gives the size of the spike

Wait a minute. Isn't that our neuron model? Yes, it is. Now, if we replace each small branch of the dendrite with one of our small "neuron" devices, the pyramidal neuron looks like this:

Left: the many dendritic branches of the neuron (above and below the body). Right: these turn out to be a set of nonlinear summing units (yellow boxes with nonlinear outputs) whose outputs feed into the body of the neuron (gray box) and are summed there. Look familiar?

Yes, each pyramidal neuron is a two-layer neural network. By itself.

The wonderful work of Poirazi and Mel back in 2003 showed this clearly. They built a complex computer model of a single neuron, simulating every small piece of dendrite, the local spikes within them, and how those spikes travel down to the body. They then directly compared the output of the neuron with the output of a two-layer neural network, and the two turned out to be the same.

The extraordinary significance of these local spikes is that each neuron is a computer. On its own, a neuron can compute a huge range of so-called nonlinear functions, which a neuron that simply sums its inputs and emits a spike cannot compute. For example, with four inputs (Blue, Sea, Yellow, and Sun) and two branches acting as small nonlinear devices, a pyramidal neuron can compute a “feature binding” function: it responds to Blue and Sea together, or to Yellow and Sun together, but not otherwise, for example to Blue and Sun or to Yellow and Sea. Of course, neurons have far more than four inputs and far more than two branches, so the range of logical functions they can compute is astronomical.
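The four-input example above can be written as a tiny two-layer network. This is a minimal sketch under stated assumptions: the wiring (Blue and Sea on one branch, Yellow and Sun on the other), the branch threshold of 2, and the function names are chosen for illustration only.

```python
def branch(inputs, threshold=2):
    """Nonlinear dendritic branch: local spike (1) only if enough inputs are co-active."""
    return 1 if sum(inputs) >= threshold else 0


def pyramidal_neuron(blue, sea, yellow, sun):
    """Two-layer sketch: two branches (layer 1) feed the cell body (layer 2)."""
    branch_a = branch([blue, sea])    # assumed wiring: Blue and Sea share a branch
    branch_b = branch([yellow, sun])  # assumed wiring: Yellow and Sun share a branch
    # The body spikes if at least one branch produced a local spike.
    return 1 if branch_a + branch_b >= 1 else 0


print(pyramidal_neuron(1, 1, 0, 0))  # Blue + Sea: prints 1
print(pyramidal_neuron(0, 0, 1, 1))  # Yellow + Sun: prints 1
print(pyramidal_neuron(1, 0, 0, 1))  # Blue + Sun: prints 0
print(pyramidal_neuron(0, 1, 1, 0))  # Yellow + Sea: prints 0
```

A single adder with one threshold cannot reproduce this table. It would need the weights for Blue + Sea and for Yellow + Sun to each reach the threshold, while Blue + Sun and Yellow + Sea each fall below it. But both pairs of sums add up to the same total of the four weights, so the two requirements contradict each other.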

More recently, Romain Cazé and friends (I am one of those friends) showed that a single neuron can compute an amazing range of functions even if it cannot generate a local dendritic spike. This is because dendrites are naturally nonlinear: in their normal state, they sum their inputs to a result that is less than the sum of the individual values. In this mode they work sublinearly, that is, 2 + 2 = 3.5. Having many dendritic branches with sublinear summation also allows the neuron to act as a two-layer neural network, one that computes a different set of nonlinear functions from those computed by neurons with superlinear dendrites. And almost every neuron has dendrites. So almost all neurons can, in principle, be two-layer neural networks.
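Sublinear summation can be sketched with a branch whose response saturates. This is a toy illustration, not the model from the work mentioned above: the hard ceiling, the threshold, and the names `saturating_branch` and `neuron` are all assumptions.

```python
def saturating_branch(inputs, ceiling=1.0):
    """Sublinear branch: the response grows with input but cannot exceed the ceiling."""
    return min(sum(inputs), ceiling)


def neuron(branch_inputs, threshold=2.0):
    """The body sums the branch responses and spikes (1) if they reach the threshold."""
    total = sum(saturating_branch(b) for b in branch_inputs)
    return 1 if total >= threshold else 0


clustered = [[1, 1], [0, 0]]  # both active inputs land on the same branch
scattered = [[1, 0], [1, 0]]  # one active input on each branch

print(neuron(clustered))  # the shared branch saturates: prints 0
print(neuron(scattered))  # each branch responds fully: prints 1
```

With these assumed numbers, the same two active inputs drive the neuron only when they are spread across branches, which is the opposite preference to a neuron with superlinear branches.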

Another surprising consequence of the local spike is that neurons know a lot more about the world than they tell us, or other neurons, for that matter.

Recently, I asked a simple question: how does the brain compartmentalize information? When we look at the wiring between neurons in the brain, we can trace a path from any neuron to any other. How, then, does information that is clearly available in one part of the brain (say, the smell of curry) not appear in every other part of the brain (for example, the visual cortex)?

There are two opposing answers. The first is that, in some cases, the brain is not compartmentalized: information really does turn up in strange places; for example, sounds reach brain areas responsible for navigation. The other answer is that the brain is compartmentalized, by dendrites.

As we have just seen, a local spike is a nonlinear event: it is larger than the sum of its inputs. And the body of the neuron can detect almost nothing that is not a local spike. This means it ignores most of the individual inputs: the part that generates the output for the rest of the brain is isolated from most of the information the neuron receives. A neuron responds only when many inputs are active together in time and in space (on the same section of dendrite).
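The requirement that inputs coincide in both time and space can be sketched as follows. Everything specific here is an assumption for illustration: the 5 ms window, the need for three inputs, and the names `local_spike` and `soma_fires`.

```python
def local_spike(arrival_times, window=5.0, needed=3):
    """True if at least `needed` inputs on one branch arrive within `window` ms."""
    times = sorted(arrival_times)
    for i in range(len(times) - needed + 1):
        if times[i + needed - 1] - times[i] <= window:
            return True
    return False


def soma_fires(branches):
    """The body responds only if some branch produced a local spike."""
    return any(local_spike(times) for times in branches)


# Each inner list holds the arrival times (ms) of the inputs on one branch.
print(soma_fires([[10, 11, 12], []]))    # same branch, same moment: prints True
print(soma_fires([[10, 30, 50], []]))    # same branch, spread in time: prints False
print(soma_fires([[10], [11], [12]]))    # same moment, spread over branches: prints False
```

In the last two cases the neuron received exactly as many inputs as in the first, yet its body stays silent.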

If that is so, then dendrites should respond to things that the neuron itself does not respond to. This is exactly what we see. Many neurons in the visual cortex respond only to objects moving at a certain angle. Some neurons spike when an object moves at an angle of 60°, others at 90° or 120°. But their dendrites respond to all angles without exception. Dendrites know much more about the world than the body of the neuron does.

They also see much more. Neurons in the visual cortex respond only to things in a particular location: one neuron may respond to objects at the upper left, another to objects at the lower right. More recently, Sonja Hofer and colleagues showed that a neuron's spikes occur only in response to objects appearing in one particular position, while its dendrites respond to many different positions, often far from the one the neuron prefers. Neurons thus respond to only a small part of the information they receive, and the rest stays hidden in their dendrites.

Why does all this matter? It means that each neuron can radically change its function by changing just a few inputs. A few inputs weaken, and suddenly a whole branch of the dendrite falls silent. The neuron that used to be happy to see cats, because that branch loved cats, no longer responds when your cat jumps onto the keyboard of your running computer, and you become a much calmer, more collected person as a result. A few inputs strengthen, and suddenly a whole branch starts responding: a neuron that used to ignore the taste of olives now happily spikes at a mouthful of ripe green olives (in my experience, this neuron only switches on once a person is past 20). If all the inputs were simply added together, new inputs would have to fight the old ones for control of the neuron's function; but each section of dendrite acts independently, so new computations are added easily.
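The cat and olive story can be sketched as a neuron with two independent branches whose input strengths change. The branch names, weights, and threshold below are hypothetical values picked for the illustration.

```python
def branch_output(inputs, weights, threshold=2.0):
    """Local spike (1) if the weighted input to this branch reaches the threshold."""
    drive = sum(w * x for w, x in zip(weights, inputs))
    return 1 if drive >= threshold else 0


def responds(stimulus, branch_weights):
    """The neuron spikes (1) if any of its branches produces a local spike."""
    spikes = [branch_output(stimulus[name], w) for name, w in branch_weights.items()]
    return 1 if any(spikes) else 0


cat_stimulus = {"cat": [1, 1, 1], "olive": [0, 0, 0]}
olive_stimulus = {"cat": [0, 0, 0], "olive": [1, 1, 1]}

# Before: strong inputs on the "cat" branch, weak inputs on the "olive" branch.
before = {"cat": [1.0, 1.0, 1.0], "olive": [0.5, 0.5, 0.5]}
print(responds(cat_stimulus, before), responds(olive_stimulus, before))  # prints 1 0

# After: the cat inputs weaken and the olive inputs strengthen.
after = {"cat": [0.5, 0.5, 0.5], "olive": [1.0, 1.0, 1.0]}
print(responds(cat_stimulus, after), responds(olive_stimulus, after))    # prints 0 1
```

Because each branch is thresholded separately, changing the weights on one branch leaves the other branch's response untouched.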

This means the brain can perform far more computations than it could if each neuron merely summed its inputs and emitted a spike. A neuron cannot be treated as just an adder of inputs and a generator of spikes. Yet that is exactly how the units in artificial neural networks are built. This suggests that deep learning and other AI systems are not even close to the computational power of a real brain.

There are 17 billion neurons in the cerebral cortex. To understand what they do, we often draw analogies with computers. Some arguments rest entirely on this analogy; others dismiss it as a delusion. Artificial neural networks are often cited in support: they compute and are made of neuron-like things, so the brain must compute too. But if we think the brain is a computer because it resembles a neural network, we must now accept that individual neurons are computers as well. All 17 billion of them in the cortex. Perhaps all 86 billion in the brain.

This means that the cerebral cortex is not a neural network. It is a neural network of neural networks.

Source: https://habr.com/ru/post/445420/
