FlexiRemap® vs. RAID

RAID algorithms were introduced to the public back in 1987. To this day they remain the most popular technology for protecting data and accelerating access to it in storage systems. But for an IT technology, passing the 30-year mark signals old age rather than maturity. The reason is progress, which relentlessly brings new opportunities. When there were virtually no drives other than HDDs, RAID algorithms made the most efficient use of the available storage resources. With the advent of SSDs, however, the situation has changed radically. Today RAID acts as a "noose" on the performance of solid-state drives. To unlock the full speed potential of SSDs, a completely different approach to working with them is needed.

Beyond the obvious differences in their operating principles, HDDs and SSDs differ in one more important respect: a hard disk can overwrite any data in place with a granularity of one block (nowadays most often 4KB). For an SSD, rewriting is a much more complicated procedure:

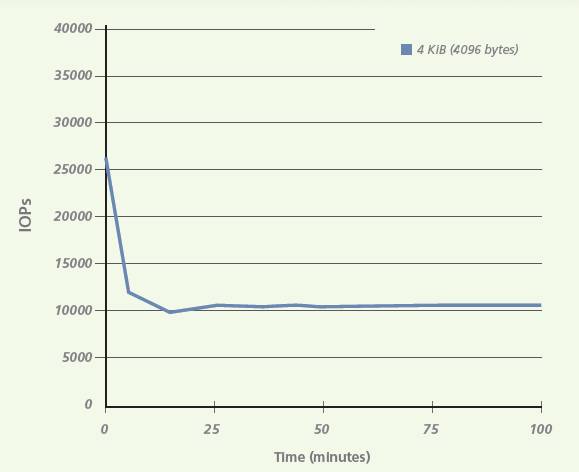

- The modified data is copied to a new location. The granularity is again one block, but an SSD block consists of multiple pages and is 256KB to 4MB in size. That is, changing those same 4KB requires copying all the adjacent pages that make up the block as well.

- The “old” blocks are marked as unused and later erased by the garbage collector.
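The two steps above can be sketched as a toy model. This is only an illustration of the simplified description in this article, not of any real drive firmware: the class `ToyFlash`, its methods, and the choice of 64 pages per block (64 x 4KB = 256KB) are all assumptions, and real flash translation layers are considerably more elaborate.

```python
from dataclasses import dataclass, field

PAGE_KB = 4
PAGES_PER_BLOCK = 64  # assumption: 64 x 4KB = 256KB, the low end of the range above


@dataclass
class ToyFlash:
    """Toy model of out-of-place rewrites (hypothetical, for illustration only)."""
    num_blocks: int
    blocks: dict = field(default_factory=dict)  # block id -> list of page payloads
    stale: set = field(default_factory=set)     # old blocks waiting to be erased
    kb_physically_written: int = 0

    def _free_block(self) -> int:
        for block_id in range(self.num_blocks):
            if block_id not in self.blocks and block_id not in self.stale:
                return block_id
        raise RuntimeError("no free block: garbage collection must run first")

    def write_block(self, pages: list) -> int:
        block_id = self._free_block()
        self.blocks[block_id] = list(pages)
        self.kb_physically_written += len(pages) * PAGE_KB
        return block_id

    def rewrite_page(self, block_id: int, page_idx: int, payload) -> int:
        pages = list(self.blocks[block_id])
        pages[page_idx] = payload
        new_id = self.write_block(pages)  # step 1: copy the whole block elsewhere
        del self.blocks[block_id]
        self.stale.add(block_id)          # step 2: mark the old block as unused
        return new_id

    def garbage_collect(self) -> int:
        """Erase all stale blocks and return how many were reclaimed."""
        reclaimed = len(self.stale)
        self.stale.clear()
        return reclaimed


flash = ToyFlash(num_blocks=4)
first = flash.write_block(["old"] * PAGES_PER_BLOCK)
second = flash.rewrite_page(first, page_idx=3, payload="new")
```

In this model, changing a single 4KB page costs a full 256KB of physical writes, and the old block stays occupied until `garbage_collect()` runs.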

Sequential write / rewrite on SSD

With sequential writes and rewrites, this SSD trait has little effect on performance, because the blocks are adjacent and the garbage collector does its work in the background. But in real life, and especially in the enterprise segment, SSDs are mostly used for random data access, and that data is written to arbitrary locations on the drives.

The more data is written to the SSD, the harder the garbage collector's job becomes, because fragmentation grows. Eventually the cleanup process stops being “background”: SSD performance drops significantly, because the garbage collector consumes a substantial share of it.

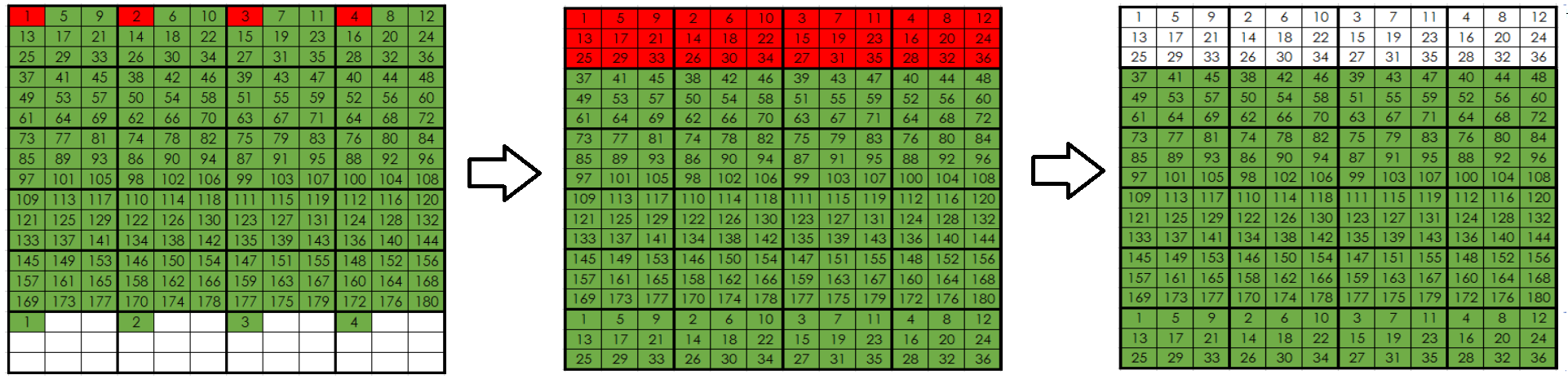

Actual data placement on an SSD in everyday use

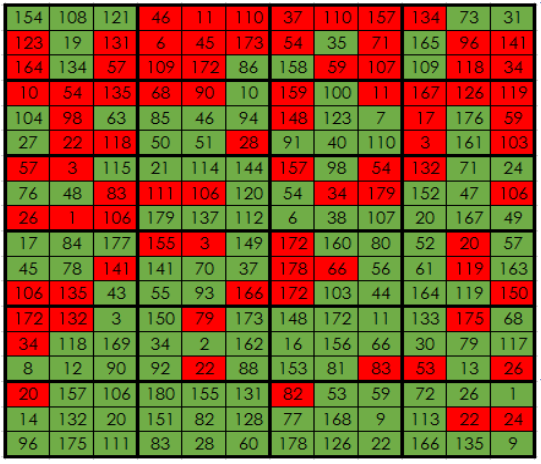

To illustrate how the garbage collector's impact depends on the write pattern, consider the simplest tests: sequential and random writes in 4KB blocks to a 100GB drive. (Source: Micron)

Performance when writing sequentially

Performance with random write

As the tests show, performance can drop by more than a factor of two. And this is just a single drive. When SSDs are part of a RAID group, the number of rewrites increases greatly because of parity updates.

Because of these SSD traits, there is a metric called the write amplification factor: the ratio of the amount of data actually written to the drive to the amount of data the host sent. For the most popular level, RAID5, this ratio is ~3.5.
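The definition above reduces to a single division. Below is a minimal sketch; the function name is hypothetical, and the byte counts are illustrative values chosen to reproduce the ~3.5 figure quoted for RAID5, not measurements.

```python
def write_amplification_factor(bytes_written_to_flash: int, bytes_sent_by_host: int) -> float:
    """Ratio of data physically written to the drive to data the host sent."""
    if bytes_sent_by_host <= 0:
        raise ValueError("the host must have sent a positive amount of data")
    return bytes_written_to_flash / bytes_sent_by_host


GIB = 2**30
host_bytes = 100 * GIB   # illustrative: the host sent 100 GiB
flash_bytes = 350 * GIB  # illustrative: the drives absorbed 350 GiB
waf = write_amplification_factor(flash_bytes, host_bytes)
print(waf)  # 3.5
```

A factor of 1.0 would mean that every byte sent by the host is written to flash exactly once.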

As a result, systems with classic RAID typically utilize only ~10% of the SSDs' real speed and scale poorly in performance once the number of drives grows beyond a dozen.

Note also that redundant write operations not only reduce SSD performance but also consume the drive's finite write endurance, shortening its service life.

FlexiRemap® technology, the core of all AccelStor products, is designed as an alternative to classic RAID algorithms for SSDs. Its innovativeness has been recognized by various patents and awards (including at Flash Memory Summit 2016) and confirmed by the results of independent tests (for example, SPC-1).

The essence of FlexiRemap® is to convert all incoming write requests, primarily random ones, into a set of blocks that, from the drive's point of view, resembles sequential writing as closely as possible. As a result, writes reach the SSDs in the mode most comfortable for them, and the resulting performance exceeds that of any system with classic RAID.

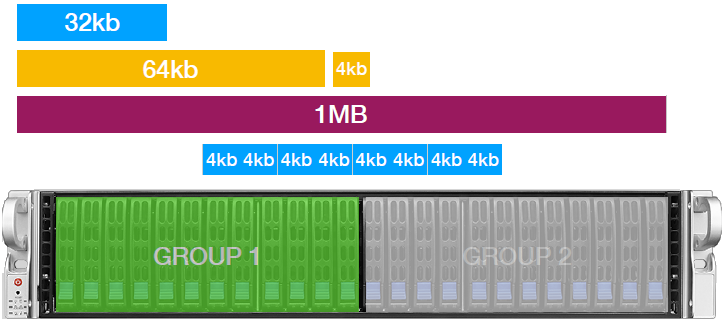

All SSDs in AccelStor systems are divided into two symmetric FlexiRemap® groups. The group size depends on the model and ranges from 5 to 11 drives. For fault tolerance within a group, parity is used, as in RAID5. The two groups are combined into a common storage space. The resulting fault tolerance is therefore similar to that of a RAID50 array made of two groups: the system can withstand the failure of up to two SSDs, but no more than one in each FlexiRemap® group.
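The fault-tolerance rule above (at most one failed SSD per group) can be written as a small check. This is a sketch under stated assumptions: the drive names, the `group_of` mapping, and the function name are hypothetical, and the group size of 5 is simply the low end of the range mentioned above.

```python
from collections import Counter


def array_survives(group_of: dict, failed: set) -> bool:
    """True if no FlexiRemap-style group has lost more than one drive."""
    failures_per_group = Counter(group_of[drive] for drive in failed)
    return all(count <= 1 for count in failures_per_group.values())


# Hypothetical layout: two groups of five drives each.
layout = {f"g{group}d{drive}": group for group in range(2) for drive in range(5)}

print(array_survives(layout, {"g0d0", "g1d3"}))  # one failure per group: True
print(array_survives(layout, {"g0d0", "g0d1"}))  # two failures in one group: False
```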

All incoming write requests are split into 4KB blocks, which are written to the two FlexiRemap® groups in round-robin fashion. The system also continuously tracks how much demand there is for the written blocks and, when they change, tries to place such blocks as close to each other as possible. The result is a virtual analogue of tiering, in storage terms. This greatly eases the garbage collector's work, since unused blocks will always be near each other.
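The splitting step can be illustrated with a short sketch. It covers only the 4KB chunking and the round-robin distribution described above; the function name and parameters are hypothetical, and the demand-aware placement of frequently changed blocks is not modeled because the article does not describe it in detail.

```python
BLOCK_SIZE = 4096  # 4KB blocks, as described above


def split_round_robin(data: bytes, num_groups: int = 2) -> list:
    """Cut a write request into 4KB blocks and deal them out to the groups in turn."""
    groups = [[] for _ in range(num_groups)]
    for offset in range(0, len(data), BLOCK_SIZE):
        block_index = offset // BLOCK_SIZE
        groups[block_index % num_groups].append(data[offset:offset + BLOCK_SIZE])
    return groups


request = bytes(5 * BLOCK_SIZE)  # a 20KB write request
group_a, group_b = split_round_robin(request)
print(len(group_a), len(group_b))  # 3 2
```

In this sketch a trailing block shorter than 4KB is passed through unpadded; how the real system handles such a tail is not stated in the source.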

It is worth noting that AccelStor systems, unlike competitors' products, do not cache incoming requests in the controller's RAM. All incoming data blocks are written to the SSDs immediately, and the host receives an acknowledgment only after the data has been physically placed on the drives. RAM holds only the block allocation tables for the SSDs, which speed up access and determine where to write the next block of data. For reliability, copies of these tables are of course kept on the drives themselves. As a result, AccelStor systems need no battery or capacitor cache protection (although a UPS connection can be set up for a “soft” shutdown in case of power problems).

Thanks to this approach to organizing writes, the garbage collector really can work in the background without significantly affecting drive speed, which ultimately lets the system utilize up to 90% of SSD performance. This is exactly what gives AccelStor systems their high IOPS compared with All Flash systems based on RAID algorithms.

Another important feature of FlexiRemap® technology is a significant reduction in redundant write operations on the SSDs. The write amplification factor for AccelStor systems is only 1.3, which, in plain terms, means a drive service life more than 2.5 times longer than with RAID5!
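The "more than 2.5 times" figure follows from the two write amplification factors quoted in this article. The sketch below assumes that, for the same host workload and a fixed write endurance budget, service life is inversely proportional to write amplification; that proportionality and the function name are assumptions, not something the source states explicitly.

```python
RAID5_WAF = 3.5       # figure quoted earlier in this article
FLEXIREMAP_WAF = 1.3  # figure quoted above


def relative_service_life(baseline_waf: float, improved_waf: float) -> float:
    """How many times longer a drive lasts, assuming life is proportional to 1 / WAF."""
    return baseline_waf / improved_waf


ratio = relative_service_life(RAID5_WAF, FLEXIREMAP_WAF)
print(f"{ratio:.2f}x")  # 2.69x
```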

Because the system continuously controls the data placement policy on the SSDs, all drives wear evenly. This makes it possible to predict their service life and to warn the administrator in advance when write endurance is nearing exhaustion.

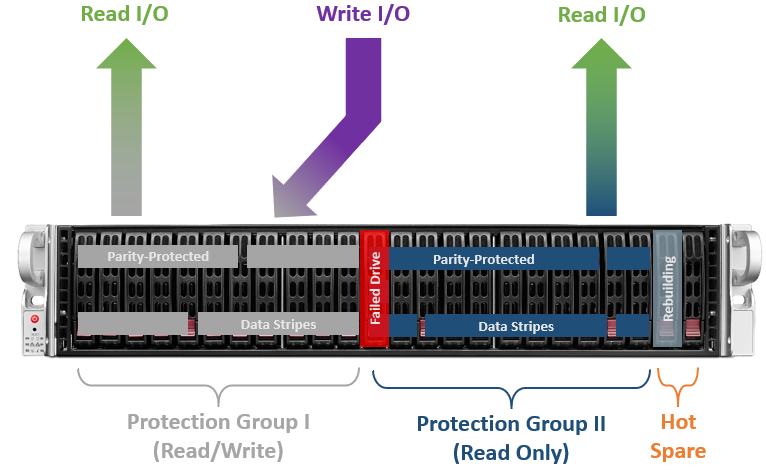

Of course, an SSD can fail. In that case the system immediately starts a rebuild onto one of the hot spare drives. The degraded FlexiRemap® group switches to “read-only” mode, and all write requests go to the second group. This protection mechanism is designed to speed up the rebuild and reduce the likelihood of another drive failing in the same group. After all, it is no secret that during a rebuild all drives in the group are under increased load, because reads, writes, and restoration onto the hot spare interfere with one another, which raises the likelihood of another drive failing. And the more write operations there are, the longer the rebuild takes.
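The write redirection during a rebuild can be sketched as a router that skips read-only groups. The `Group` class, its `read_only` flag, and `route_writes` are hypothetical names for illustration; the sketch shows only the routing decision described above, not the rebuild itself.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Group:
    name: str
    read_only: bool = False  # True while the group is degraded and rebuilding


def route_writes(num_blocks: int, groups: list) -> list:
    """Return the target group name for each block, skipping read-only groups."""
    writable = [g for g in groups if not g.read_only]
    if not writable:
        raise RuntimeError("no writable group available")
    targets = cycle(writable)
    return [next(targets).name for _ in range(num_blocks)]


group_a, group_b = Group("A"), Group("B")
print(route_writes(4, [group_a, group_b]))  # ['A', 'B', 'A', 'B']

group_a.read_only = True                     # a drive in group A has failed
print(route_writes(4, [group_a, group_b]))  # ['B', 'B', 'B', 'B']
```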

After recovery completes and the FlexiRemap® group returns to its normal state, there will be a slight imbalance in remaining write endurance between the two groups. To even it out, subsequent write operations will land more often on the restored group (within limits, of course, so that overall system performance does not suffer much).
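One way to picture such rebalancing is a biased schedule that favors the less-worn group without starving the other. The article does not describe the actual policy, so everything here is an assumption made for illustration: the function name, the per-group write counters, and the `bias` parameter (how many of every `bias + 1` blocks go to the less-worn group).

```python
def biased_schedule(written: dict, num_blocks: int, bias: int = 2) -> list:
    """Pick a target group per block, favoring the group with fewer writes so far."""
    counters = dict(written)  # work on a copy of the per-group write counters
    names = sorted(counters)
    order = []
    for i in range(num_blocks):
        least = min(names, key=lambda n: counters[n])
        most = max(names, key=lambda n: counters[n])
        if counters[least] == counters[most]:
            target = names[i % len(names)]  # balanced: plain round robin
        elif i % (bias + 1) < bias:
            target = least                  # most blocks go to the less-worn group
        else:
            target = most                   # but the other group still gets a share
        counters[target] += 1
        order.append(target)
    return order


# Hypothetical counters: group B was read-only during the rebuild and wrote less.
print(biased_schedule({"A": 10, "B": 4}, num_blocks=6))
# ['B', 'B', 'A', 'B', 'B', 'A']
```

Keeping both groups in the rotation reflects the remark above that the rebalancing should not noticeably hurt overall performance.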

All Flash systems based on RAID algorithms cannot raise performance beyond a certain level (~280K IOPS @ 4K random write), even with complex caching schemes. Thanks to a completely different approach to organizing storage space, FlexiRemap® technology not only easily breaks through this barrier but also extends SSD service life several times over. AccelStor systems therefore have significant advantages among All Flash arrays on many fronts (IOPS/$, GB/$, TCO, ROI), making them ideal candidates for key roles in customer data centers that handle resource-intensive tasks.


Source: https://habr.com/ru/post/445336/
