Vulnerability scanning and secure development. Part 1

In their work, developers, pentesters, and security staff have to deal with processes such as Vulnerability Management (VM) and the (Secure) SDLC.

Behind these terms are different sets of practices and tools that overlap, although their consumers differ.

Technology has not yet reached the point where a single tool can replace a person in analyzing the security of infrastructure and software.

It is worth understanding why that is, and which problems you run into.


Processes

The Vulnerability Management process is designed for continuous monitoring of infrastructure security and for patch management.

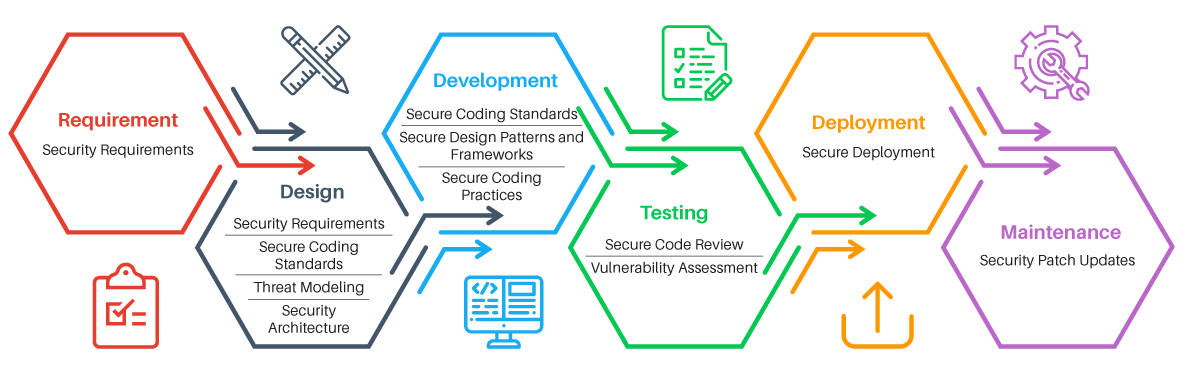

The Secure SDLC process (the secure development life cycle) is designed to maintain the security of an application during development and operation.

The part these processes have in common is Vulnerability Assessment, that is, vulnerability scanning.

The main difference between scanning within VM and within the SDLC is that in the first case the goal is to detect known vulnerabilities in third-party software or in configuration, for example an outdated version of Windows or the default SNMP community string.

In the second case, the goal is to detect vulnerabilities not only in third-party components (dependencies), but primarily in the code of a new product.

This creates differences in tools and approaches. In my opinion, finding new vulnerabilities in an application is a much more interesting task, since it does not boil down to fingerprinting versions, collecting banners, brute-forcing passwords, and so on.

High-quality automated scanning of application vulnerabilities requires algorithms that take into account the semantics of the application, its purpose, and specific threats.

An infrastructure scanner can often be replaced with a timer, as avleonov put it. The point is that, purely statistically, you can consider your infrastructure vulnerable if you have not updated it for, say, a month.
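The "timer" idea can be sketched in a few lines of Python. This is only an illustration: the 30-day threshold follows the "say, a month" figure above, and the host names and dates are made up.

```python
from datetime import date, timedelta

# Assumed threshold, following the "say, a month" figure in the text.
MAX_PATCH_AGE = timedelta(days=30)


def stale_hosts(last_patched, today):
    """Return hosts whose last update is older than MAX_PATCH_AGE."""
    return sorted(
        host for host, patched in last_patched.items()
        if today - patched > MAX_PATCH_AGE
    )


# Hypothetical inventory: host -> date of the last update.
inventory = {
    "web-01": date(2019, 3, 1),
    "db-01": date(2019, 1, 10),
}

print(stale_hosts(inventory, today=date(2019, 3, 20)))  # ['db-01']
```

No vulnerability data is consulted at all; the age of the last update is the only signal.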

Tools

Scanning, like security analysis in general, can be done as a black box or as a white box.

Black box

In black-box scanning, the tool must be able to work with the service through the same interfaces that users work with.

Infrastructure scanners (Tenable Nessus, Qualys, MaxPatrol, Rapid7 Nexpose, etc.) look for open network ports, collect “banners”, determine the versions of installed software, and search for information about vulnerabilities in these versions in their knowledge base. They also try to detect configuration errors, such as default passwords or open data access, weak SSL ciphers, etc.
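The banner-to-knowledge-base step can be sketched as follows. This is a simplified illustration, not how any of the scanners named above is implemented: the product name, advisory ID, banner format, and knowledge base are all hypothetical.

```python
import re

# Hypothetical knowledge base: product -> (first fixed version, advisory ID).
KNOWLEDGE_BASE = {
    "ExampleFTPd": ((2, 3, 5), "EXAMPLE-ADVISORY-1"),
}

# Assumes banners of the form "<product> <version>" or "<product>/<version>".
BANNER_RE = re.compile(r"(?P<product>[A-Za-z]+)[/ ](?P<version>\d+(?:\.\d+)*)")


def parse_banner(banner):
    """Extract (product, version tuple) from a service banner, or None."""
    match = BANNER_RE.search(banner)
    if not match:
        return None
    version = tuple(int(part) for part in match.group("version").split("."))
    return match.group("product"), version


def check_banner(banner):
    """Return advisory IDs that apply to the version guessed from the banner."""
    parsed = parse_banner(banner)
    if parsed is None:
        return []
    product, version = parsed
    entry = KNOWLEDGE_BASE.get(product)
    if entry and version < entry[0]:
        return [entry[1]]
    return []


print(check_banner("220 ExampleFTPd 2.3.4 ready"))  # ['EXAMPLE-ADVISORY-1']
```

The result is only as good as the banner: a service that hides or fakes its version defeats this kind of check.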

Web application scanners (Acunetix WVS, Netsparker, Burp Suite, OWASP ZAP, etc.) can also identify known components and their versions (for example, CMS, frameworks, JS libraries). The main steps of such a scanner are crawling and fuzzing.

During crawling, the scanner collects information about the application's existing interfaces and HTTP parameters. During fuzzing, mutated or generated data is substituted into every detected parameter in order to provoke an error and detect a vulnerability.
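The fuzzing step can be illustrated with a short Python sketch that builds the mutated requests without sending them. The payload list and the URL are illustrative assumptions; real scanners use much larger and smarter payload sets and also inspect the responses.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Illustrative payloads only.
PAYLOADS = ["'", "<script>alert(1)</script>", "../../etc/passwd"]


def fuzz_urls(url, payloads=PAYLOADS):
    """Yield (param, payload, mutated_url), changing one parameter at a time."""
    parts = urlsplit(url)
    params = parse_qsl(parts.query, keep_blank_values=True)
    for index, (name, _) in enumerate(params):
        for payload in payloads:
            mutated = list(params)
            mutated[index] = (name, payload)
            query = urlencode(mutated)
            yield name, payload, urlunsplit(parts._replace(query=query))


# Hypothetical URL found during crawling.
for name, payload, mutated_url in fuzz_urls("http://test.local/item?id=1&sort=asc"):
    print(name, mutated_url)
```

Two parameters and three payloads already give six requests, which hints at why scan duration grows so quickly on large applications.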

Such application scanners belong to the DAST and IAST classes: Dynamic and Interactive Application Security Testing, respectively.

White box

With whitebox scanning, the differences are greater.

As part of the VM process, scanners (Vulners, Incsecurity Couch, Vuls, Tenable Nessus, etc.) are often given access to the systems so that they can run an authenticated scan. The scanner can then read the installed package versions and configuration parameters directly from the system, without guessing them from the banners of network services.

This makes the scan more accurate and complete.

White-box scanning of applications (CheckMarx, HP Fortify, Coverity, RIPS, FindSecBugs, etc.) usually means static code analysis with tools of the SAST class: Static Application Security Testing.
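As a rough sketch of what an authenticated scan does with the data it collects, the snippet below parses a package listing and compares it with advisory data. The "name version" listing format, the package names, and the advisory feed are assumptions made up for the example, and the version comparison is deliberately naive.

```python
# Hypothetical advisory data: package -> (first fixed version, advisory ID).
ADVISORIES = {"examplelib": ("1.4.2", "EXAMPLE-ADVISORY-1")}


def parse_version(text):
    """Naive dotted-number parsing; real package versions need the
    distribution's own comparison rules."""
    return tuple(int(part) for part in text.split("."))


def parse_listing(listing):
    """Parse 'name version' lines, as collected from the host itself."""
    installed = {}
    for line in listing.splitlines():
        if line.strip():
            name, version = line.split()
            installed[name] = version
    return installed


def audit(installed):
    """Return (package, installed, fixed, advisory) for vulnerable packages."""
    findings = []
    for name, version in sorted(installed.items()):
        if name in ADVISORIES:
            fixed, advisory = ADVISORIES[name]
            if parse_version(version) < parse_version(fixed):
                findings.append((name, version, fixed, advisory))
    return findings


LISTING = """
examplelib 1.4.1
otherlib 2.0.0
"""
print(audit(parse_listing(LISTING)))
```

Because the versions come from the system itself, nothing has to be inferred from what the network services choose to reveal.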

Problems

There are many problems with scanning! I run into most of them personally, both while providing services for building scanning and secure development processes and while doing security analysis work.

I will highlight three main groups of problems, which are confirmed by conversations with engineers and heads of information security at various companies.

Web application scanning problems

- The complexity of implementation. Scanners need to be deployed, configured, and customized for each application; they need a dedicated test environment and have to be integrated into the CI / CD process to be effective. Otherwise, scanning becomes a useless formal procedure that produces only false positives.

- Scan duration. Even in 2019, scanners do not cope well with deduplicating interfaces and can spend days scanning a thousand pages with 10 parameters each, treating them as different even though the same code handles them. Meanwhile, the decision to deploy to production within the development cycle has to be made quickly.

- Sparse recommendations. Scanners give rather general recommendations, and the developer cannot always quickly understand how to reduce the risk and, most importantly, whether it needs to be done right now or can wait.

- Destructive impact on the application. Scanners can easily cause a denial of service, and they can also create a large number of entities or modify existing ones (for example, create tens of thousands of comments in a blog), so you should not mindlessly run a scan in production.

- Low quality of vulnerability detection. Scanners usually use a fixed set of payloads and can easily miss a vulnerability that does not fit the application behavior they know about.

- Misunderstanding of application functions by the scanner. Scanners do not know what an "Internet bank", a "payment", or a "comment" is. For them there are only links and parameters, so a huge layer of possible business logic vulnerabilities remains completely uncovered: a scanner will not think to make a double charge, view someone else's data by ID, or inflate a balance through rounding.

- Misunderstanding of page semantics by the scanner. Scanners cannot read an FAQ or solve a captcha, and they will not figure out on their own how to register and then log in, that they must not click "logout", or how to sign requests when parameters change. As a result, most of the application may not be scanned at all.
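The interface deduplication mentioned in the list above can be approximated by collapsing URLs into a key that ignores concrete values. The sketch below is one simple heuristic, not a description of what any particular scanner does: it assumes numeric path segments are identifiers and that the same set of parameter names means the same handler. The URLs are made up.

```python
import re
from urllib.parse import parse_qsl, urlsplit


def interface_key(method, url):
    """Collapse a request into (method, path template, parameter names)."""
    parts = urlsplit(url)
    # Assumption: purely numeric path segments are object identifiers.
    path = re.sub(r"/\d+(?=/|$)", "/{id}", parts.path)
    names = {name for name, _ in parse_qsl(parts.query, keep_blank_values=True)}
    return method.upper(), path, tuple(sorted(names))


def deduplicate(requests):
    """Keep the first request seen for every distinct interface key."""
    seen = {}
    for method, url in requests:
        seen.setdefault(interface_key(method, url), (method, url))
    return list(seen.values())


crawled = [
    ("GET", "http://shop.test/item/1?color=red"),
    ("GET", "http://shop.test/item/2?color=blue"),
    ("GET", "http://shop.test/cart"),
]
print(deduplicate(crawled))
```

A heuristic like this can also merge pages that only look alike, so coverage and scan time trade off against each other.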

Source code scan problems

- False positives. Static analysis is a hard problem, and solving it involves a whole set of trade-offs. Accuracy often has to be sacrificed, and even expensive enterprise scanners produce a huge number of false positives.

- The complexity of implementation. To increase the accuracy and completeness of static analysis, the scanning rules have to be refined, and writing these rules can be too time-consuming. It is sometimes easier to find every place in the code with a given bug and fix it than to write a rule that detects such cases.

- No dependency support. Large projects depend on a large number of libraries and frameworks that extend the capabilities of the programming language. If the scanner's knowledge base has no information about dangerous places ("sinks") in these frameworks, they become a blind spot, and the scanner simply will not understand the code.

- Scan duration. Finding vulnerabilities in code is also algorithmically hard, so the process may well take a long time and require significant computational resources.

- Low coverage. Despite the resource consumption and the scan duration, the developers of SAST tools still have to resort to trade-offs and analyze only some of the states the program can be in.

- Reproducibility of findings. Pointing to a specific line and a call stack that leads to a vulnerability is fine, but in practice the scanner often does not provide enough information to check whether the vulnerability is exploitable from outside. After all, the flaw may sit in dead code that an attacker cannot reach.
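The blind spot caused by a missing sink can be shown with a toy checker built on Python's `ast` module. It only matches call names against a list; it performs no data-flow analysis and is far simpler than the SAST tools named earlier. The sink list, the sample code, and the `db.raw` framework call are hypothetical.

```python
import ast

# Illustrative "knowledge base" of dangerous calls.
KNOWN_SINKS = {"eval", "os.system"}


def call_name(node):
    """Return 'name' or 'object.attr' for a call node, or None."""
    func = node.func
    if isinstance(func, ast.Name):
        return func.id
    if isinstance(func, ast.Attribute) and isinstance(func.value, ast.Name):
        return f"{func.value.id}.{func.attr}"
    return None


def find_sinks(source, sinks=KNOWN_SINKS):
    """Return sorted (line, call name) pairs for calls listed in sinks."""
    tree = ast.parse(source)
    return sorted(
        (node.lineno, call_name(node))
        for node in ast.walk(tree)
        if isinstance(node, ast.Call) and call_name(node) in sinks
    )


SAMPLE = '''
import os
def handler(cmd, query, db):
    os.system(cmd)
    db.raw(query)
'''

print(find_sinks(SAMPLE))                             # [(4, 'os.system')]
print(find_sinks(SAMPLE, KNOWN_SINKS | {"db.raw"}))   # [(4, 'os.system'), (5, 'db.raw')]
```

Until `db.raw` is added to the list, the second call is invisible to the checker, which is the dependency problem from the list above in miniature.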

Infrastructure scan problems

- Insufficient inventory. In large infrastructures, especially geographically distributed ones, the hardest part is often working out which hosts need to be scanned. In other words, the scanning task is tightly coupled with the asset management task.

- Poor prioritization. Network scanners often report many flaws that are not exploitable in practice but formally carry a high risk level. The consumer receives a report that is difficult to interpret, and it is not clear what needs to be fixed first.

- Sparse recommendations. The scanner's knowledge base often contains only very general information about the vulnerability and how to fix it, so admins have to arm themselves with Google. The situation is a little better with white-box scanners, which can give a specific command for the fix.

- Manual work. Infrastructures can have many nodes, which means potentially many flaws, and the reports on them have to be sorted through and analyzed manually at each iteration.

- Poor coverage. The quality of an infrastructure scan depends directly on the size of the knowledge base about vulnerabilities and software versions. It turns out that even the market leaders do not have a comprehensive knowledge base, and the databases of free solutions contain a lot of information that the leaders lack.

- Problems with patching. Most often, patching an infrastructure vulnerability means updating a package or changing a configuration file. The big problem is that the system, especially a legacy one, may behave unpredictably after the update. In effect, you have to run integration tests on live infrastructure in production.
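The link between scanning and asset management from the first item of the list can be made concrete with a comparison of two host lists. The host names and both data sources are hypothetical; in practice they would come from an asset management system and from the scanner's target list.

```python
def coverage_gaps(inventory, scanned):
    """Compare the asset inventory with the hosts that were actually scanned."""
    inventory, scanned = set(inventory), set(scanned)
    return {
        "never_scanned": sorted(inventory - scanned),
        "not_in_inventory": sorted(scanned - inventory),
    }


# Hypothetical data sources.
assets = ["web-01", "web-02", "db-01"]
scan_targets = ["web-01", "db-01", "test-99"]

print(coverage_gaps(assets, scan_targets))
```

Both directions matter: hosts that are never scanned are a coverage gap, and scanned hosts missing from the inventory point to an incomplete inventory.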

Approaches

What can be done?

I will cover examples and ways of dealing with many of the listed problems in more detail in the following parts. For now, here are the main directions in which you can work:

- Aggregation of various scanning tools. With proper use of multiple scanners, you can significantly increase the knowledge base and the detection quality. You can find even more vulnerabilities than all the scanners run separately would find in total, while assessing the risk level more accurately and giving more recommendations.

- Integration of SAST and DAST. You can increase DAST coverage and SAST accuracy by sharing information between them. From the source code you can get information about existing routes, and with the help of DAST you can check whether a vulnerability is visible from outside.

- Machine Learning™. In 2015 I talked (more than once) about using statistics to give scanners a hacker's intuition and to speed them up. This is definitely food for thought for the future development of automated security analysis.

- Integration of IAST with autotests and OpenAPI. Within the CI / CD pipeline, you can build a scanning process on tools that act as HTTP proxies and on functional tests that work over HTTP. The tests and the OpenAPI / Swagger contracts give the scanner the missing information about data flows and make it possible to scan the application in various states.

- Correct configuration. For each application and infrastructure, you need to create a suitable scan profile that takes into account the number and nature of the interfaces and the technologies used.

- Customization of scanners. Often an application cannot be scanned without extending the scanner. An example is a payment gateway in which each request must be signed. Without a connector written for the gateway protocol, scanners will mindlessly fire off requests with the wrong signature. You also need to write specialized scanners for specific kinds of flaws, such as Insecure Direct Object Reference.

- Risk management. Using various scanners and integrating with external systems, such as Asset Management and Threat Management, lets you use many parameters to assess the level of risk, so that management can get an adequate picture of the current state of development or infrastructure security.
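As a small illustration of the aggregation idea from the list above, the sketch below merges findings from several scanners by asset and vulnerability ID, keeps the highest reported severity, and records which scanners agree. The scanner names, IDs, and the 0-10 severity scale are assumptions for the example; real reports need normalization before they can be merged like this.

```python
from collections import defaultdict


def merge_findings(reports):
    """reports: scanner name -> list of (asset, vuln_id, severity 0-10)."""
    merged = defaultdict(lambda: {"severity": 0.0, "sources": set()})
    for scanner, findings in reports.items():
        for asset, vuln_id, severity in findings:
            entry = merged[(asset, vuln_id)]
            entry["severity"] = max(entry["severity"], severity)
            entry["sources"].add(scanner)
    # Highest severity first, then by asset and vulnerability ID.
    return sorted(
        ((asset, vuln_id, entry["severity"], sorted(entry["sources"]))
         for (asset, vuln_id), entry in merged.items()),
        key=lambda row: (-row[2], row[0], row[1]),
    )


# Hypothetical reports from two scanners.
reports = {
    "scanner_a": [("web-01", "VULN-1", 7.5), ("web-01", "VULN-2", 4.0)],
    "scanner_b": [("web-01", "VULN-1", 9.0), ("db-01", "VULN-3", 5.0)],
}

for row in merge_findings(reports):
    print(row)
```

The list of sources for each finding is a cheap confidence signal: a flaw reported by several independent tools is less likely to be a false positive.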

Stay tuned and let's disrupt vulnerability scanning!

Source: https://habr.com/ru/post/444534/
