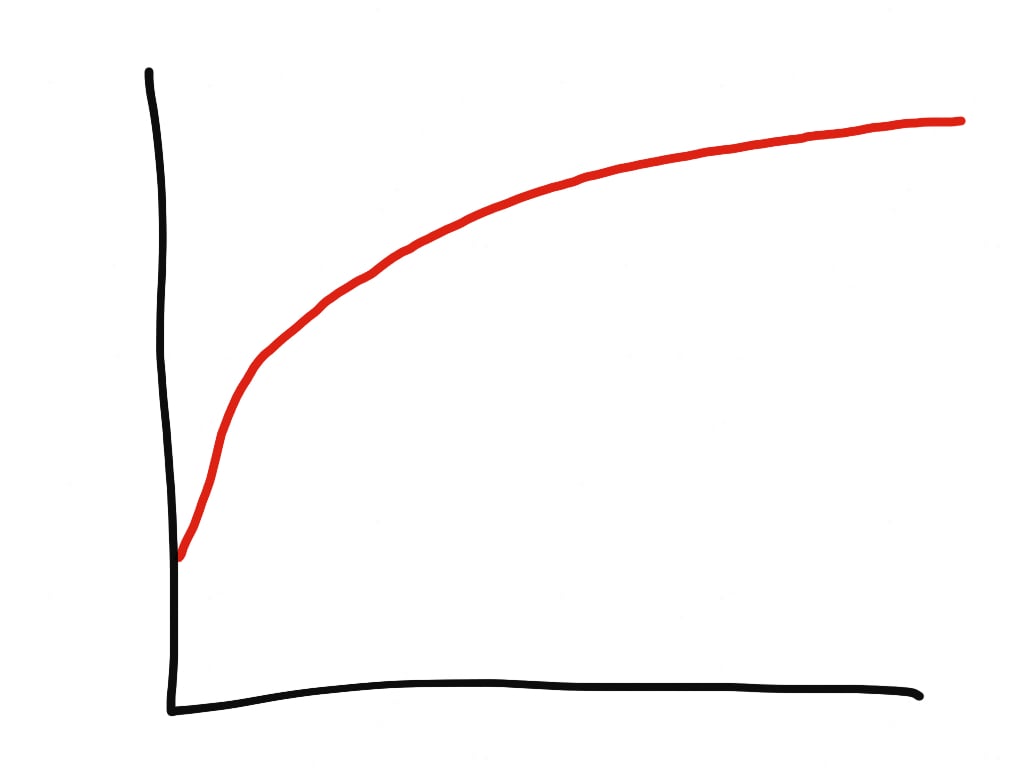

What causes Ruby memory bloat?

At Phusion we run a simple multi-threaded HTTP proxy server written in Ruby (it serves DEB and RPM packages). I have seen it consume 1.3 GB of memory. That is crazy for a stateless process!

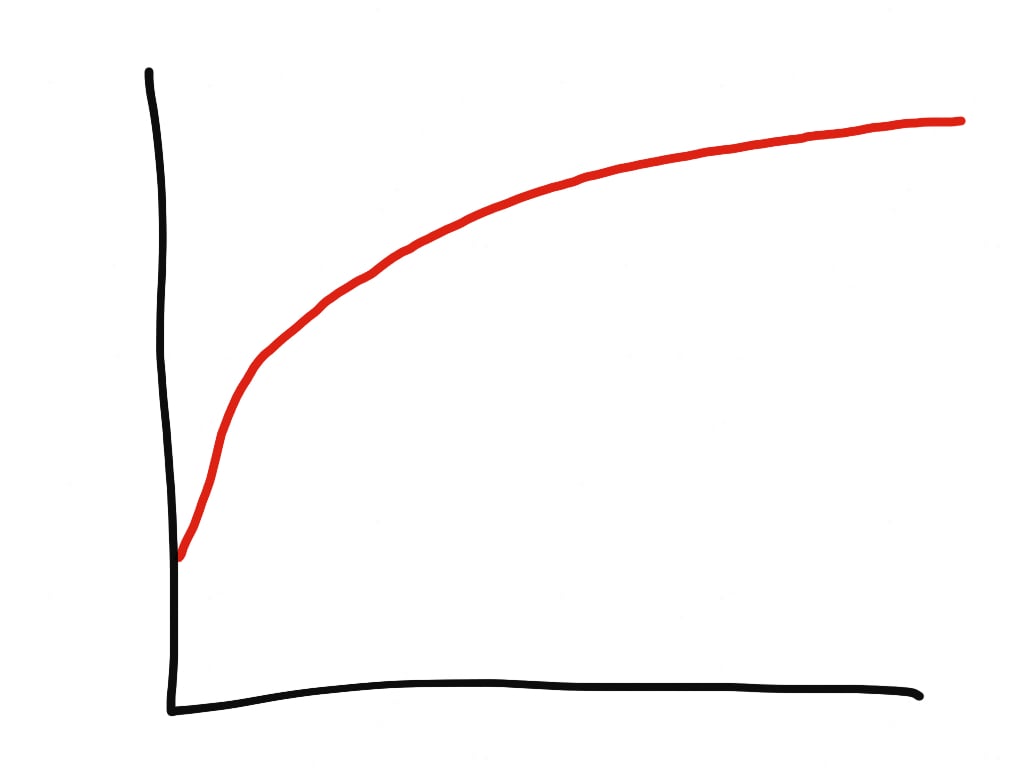

Quiz: what is this? Answer: the memory usage of a Ruby process over time!

It turns out that I am not alone in this problem. Ruby applications can use a lot of memory. But why? According to Heroku and Nate Berkopek , swelling is mainly due to memory fragmentation and excessive heap allocation.

Berkopek came to the conclusion that there are two solutions:

')

I am worried about the description of the problem and the proposed solutions. Something is wrong here ... I am not sure that the problem is fully described or that these are the only available solutions. It also annoys me that many refer to jemalloc as a magic silver bullet.

Magic is just a science that we do not yet understand . So I went on a research trip to find out the whole truth. In this article we will cover the following topics:

Note: This article is relevant only for Linux, and only for multi-threaded Ruby applications.

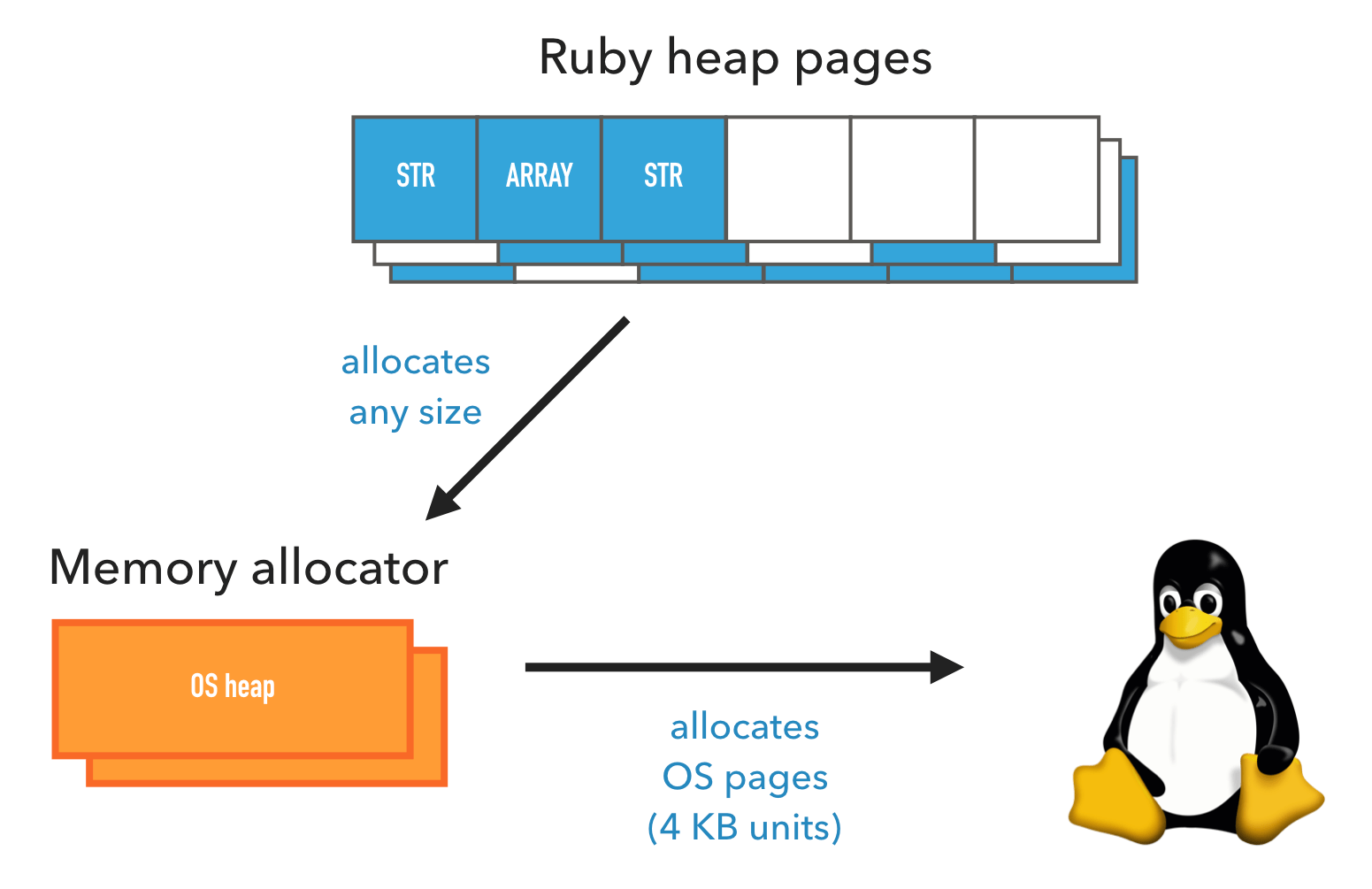

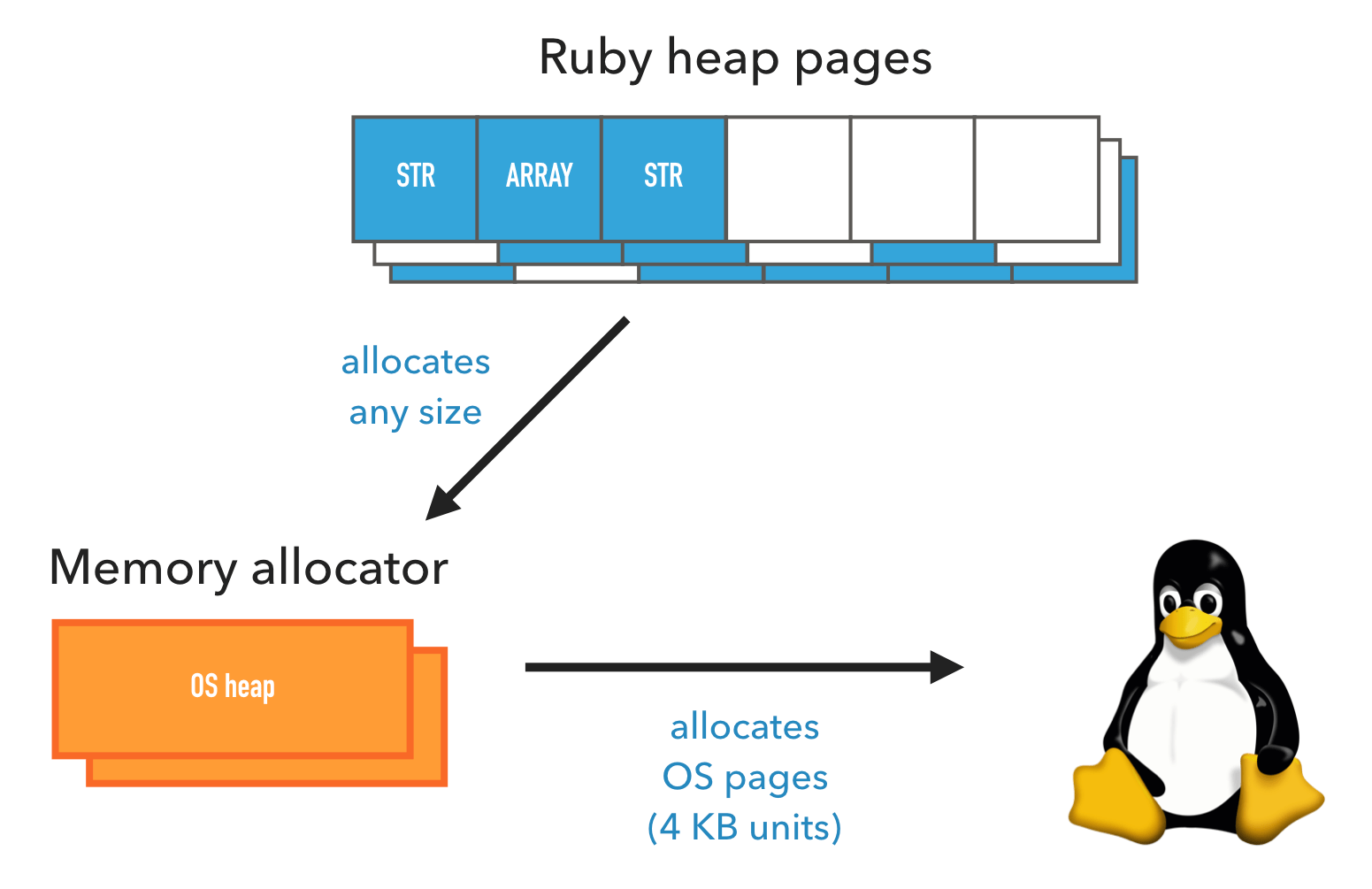

Memory allocation in Ruby occurs on three levels, from top to bottom:

Let's go through each level.

On its side, Ruby organizes objects in areas of memory called Ruby heap pages . Such a heap page is divided into equal-sized slots, where one object occupies one slot. Whether it is a string, a hash table, an array, a class, or something else, it occupies one slot.

Slots on the heap page can be busy or free. When Ruby selects a new object, it immediately tries to occupy an empty slot. If there are no free slots, a new heap page will be highlighted.

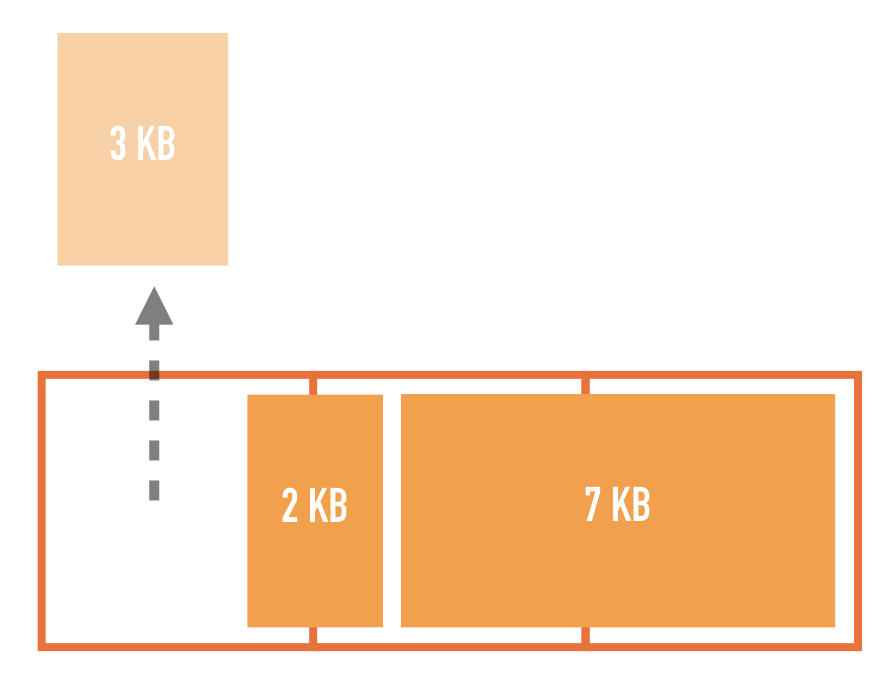

The slot is small, about 40 bytes. Obviously, some objects will not fit into it, for example, 1 MB lines each. Then Ruby stores the information elsewhere outside the heap page, and places a pointer in this external memory area in the slot.

Data that does not fit in the slot is stored outside the heap page. Ruby places a pointer to external data in the slot.

As pages of a heap of Ruby, and any external areas of storage are selected by means of the distributor of memory of system.

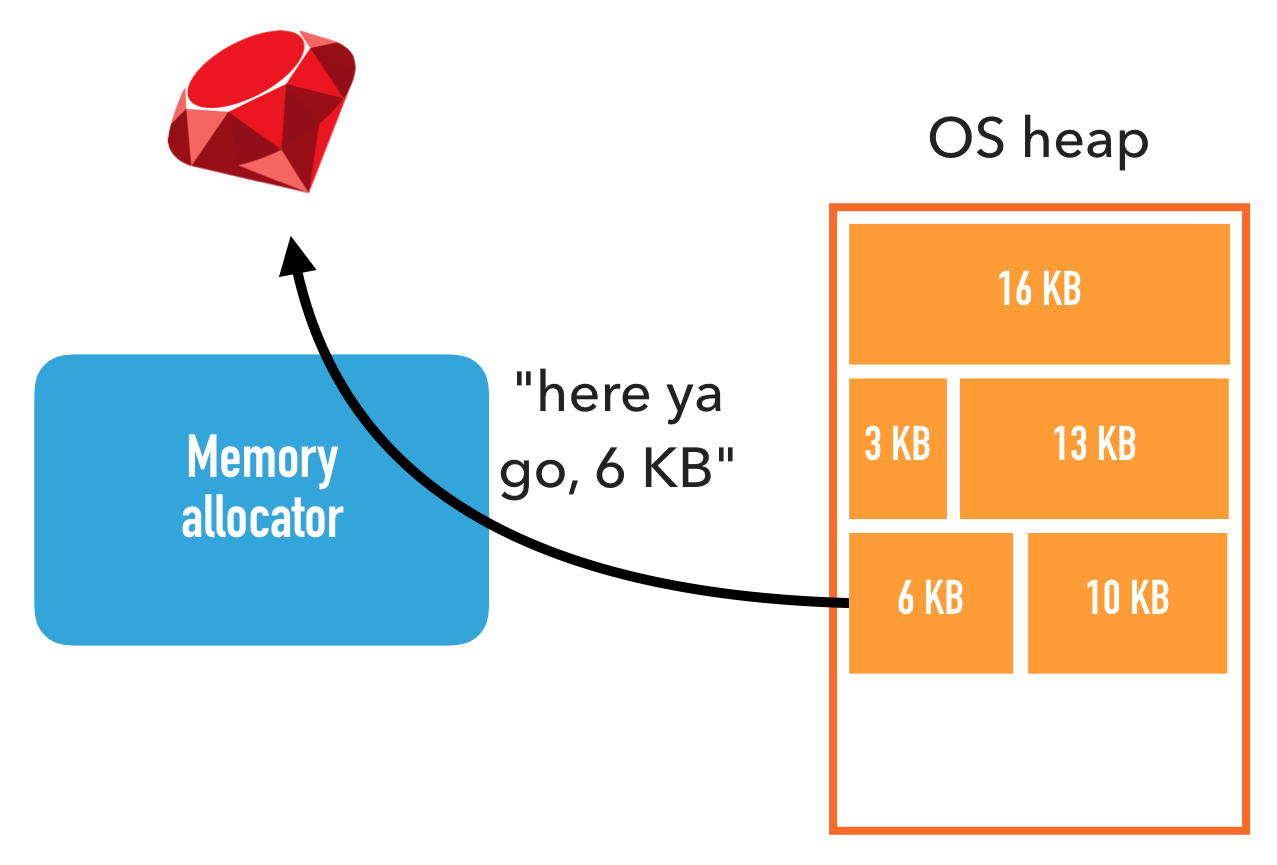

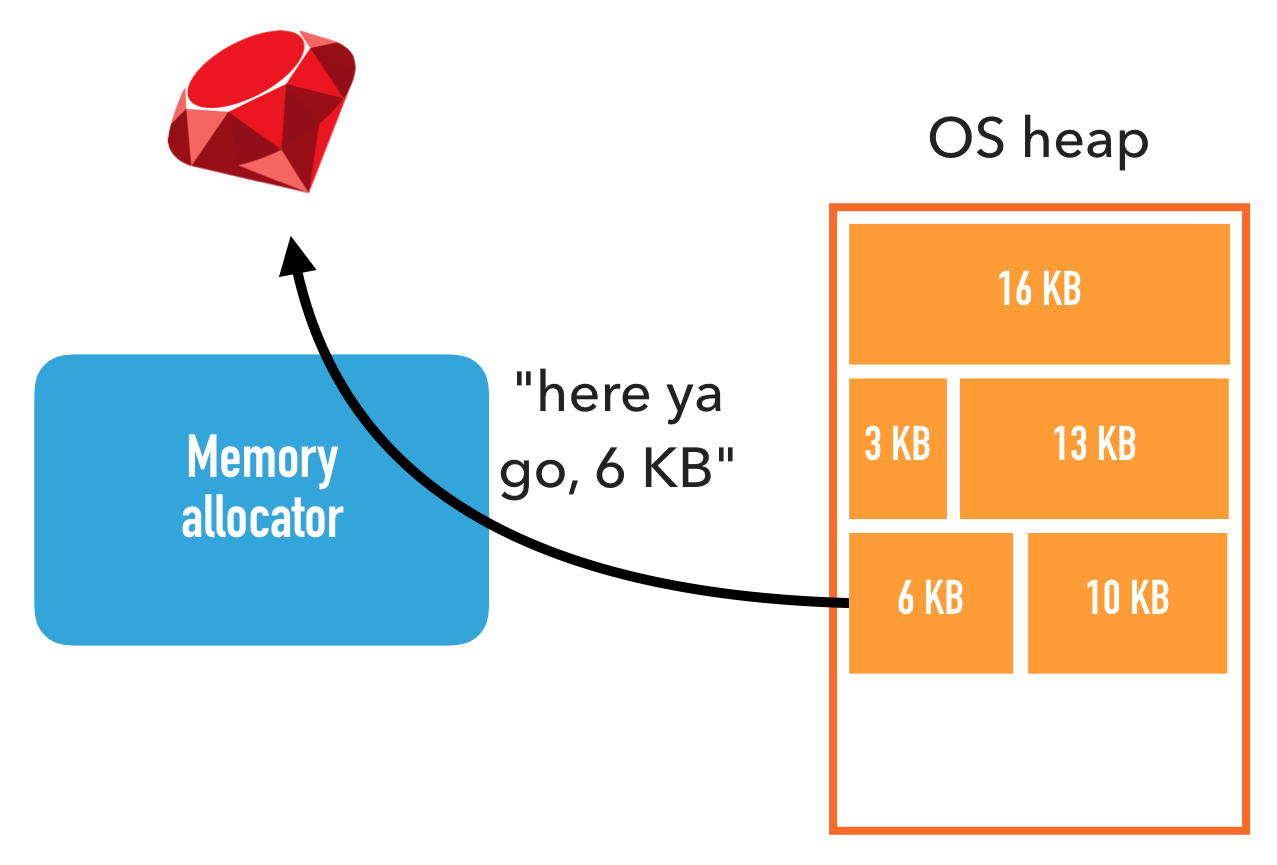

The operating system memory allocator is part of glibc (runtime environment C). It is used by almost all applications, not just Ruby. It has a simple API:

Unlike Ruby, where slots of the same size are allocated, the memory allocator deals with requests for allocating memory of any size. As you will learn later, this fact leads to some complications.

In turn, the memory allocator accesses the kernel API. It takes much larger chunks of memory from the kernel than its own subscribers request, since the kernel call is expensive and the kernel API has a restriction: it can allocate memory only in multiples of 4 KB.

The memory allocator allocates large chunks — they are called system heaps — and divides their contents to satisfy requests from applications.

The area of memory that the memory allocator allocates from the kernel is called the heap. Note that it has nothing to do with the pages of the Ruby heap, so for clarity we will use the term system heap .

Then the memory allocator assigns parts of the system heaps to its callers until there is free space. In this case, the memory allocator allocates a new system heap from the kernel. This is similar to how Ruby selects objects from Ruby heap pages.

Ruby allocates memory from a memory allocator, which, in turn, allocates it from the kernel

The kernel can allocate memory only for 4 KB units. One such 4 KB block is called a page. In order not to be confused with the pages of the Ruby heap, for clarity we will use the term system page (OS page).

The reason is difficult to explain, but this is how all modern kernels work.

Allocating memory through the kernel has a significant impact on performance, so memory allocators try to minimize the number of kernel calls.

Thus, memory is allocated at several levels, and each level allocates more memory than it really needs. On the pages of a heap of Ruby there can be free slots, as in the system heaps. Therefore, the answer to the question “How much memory is used?” Fully depends on what level you ask!

Tools like

Fragmentation of memory means that memory allocations are randomly scattered. This can cause interesting problems.

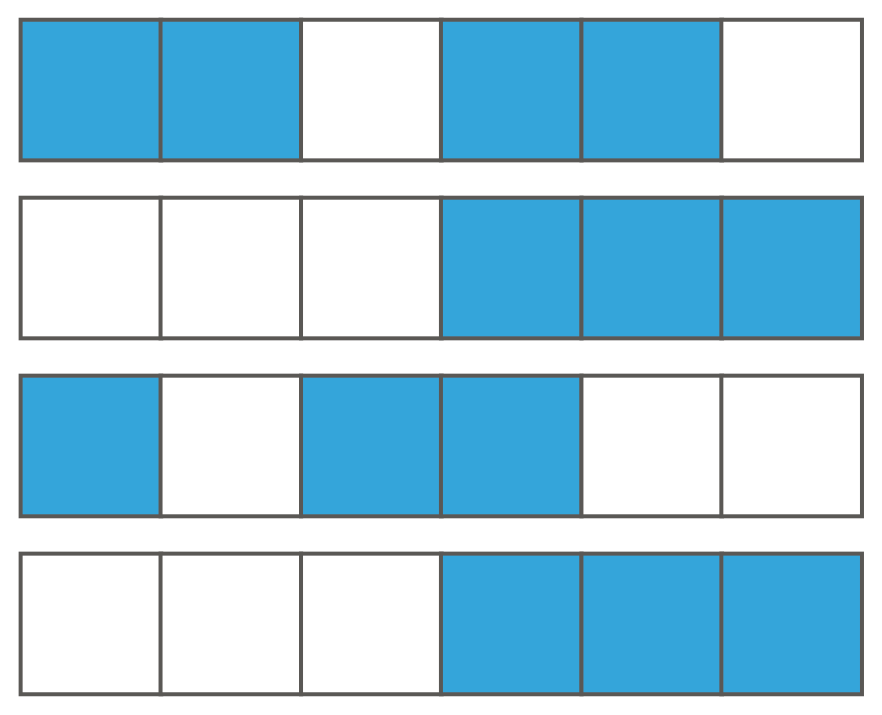

Consider Ruby garbage collection. Garbage collection for an object means marking the Ruby heap page slot as free, allowing it to be reused. If the entire Ruby heap page consists only of free slots, then it can be completely reset back to the memory allocator (and, possibly, back to the core).

But what happens if not all slots are free? What if we have a lot of Ruby heap pages, and the garbage collector frees objects in different places, so that ultimately there are a lot of free slots, but on different pages? In such a situation, Ruby has free slots to accommodate objects, but the memory allocator and the kernel will continue to allocate memory!

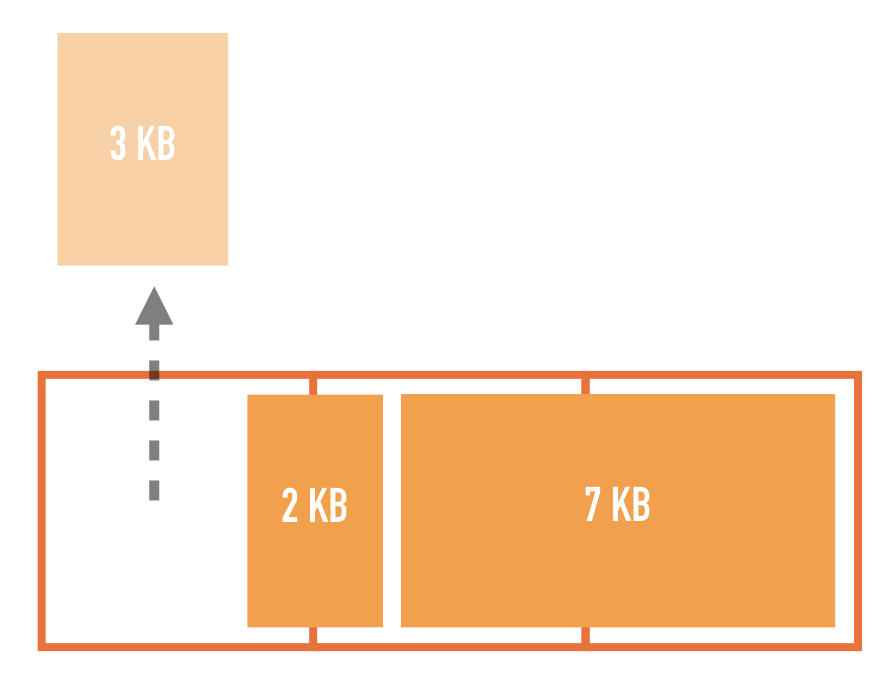

The memory allocator has a similar, but completely different problem. He does not need to immediately release entire system heaps. In theory, it can free up any single system page. But since the memory allocator deals with allocations of memory of arbitrary size, there may be several allocations on the system page. It cannot free up the system page until all selections are released.

Think about what happens if we have a selection of 3 KB, as well as a selection of 2 KB, divided into two system pages. If you release the first 3 KB, both system pages will remain partially occupied and cannot be released.

Therefore, in the event of an unsuccessful set of circumstances, there will be a lot of free space on the system pages, but they are not completely free.

Worse: what to do if there are a lot of empty seats, but none of them is large enough to satisfy a new selection request? The memory allocator will have to allocate a completely new system heap.

It is likely that fragmentation causes excessive memory use in Ruby. If so, which of the two fragmentation does more harm? It…

The first option is just enough to check. Ruby provides two APIs:

To summarize them, this is all the memory Ruby knows about, and it includes the fragmentation of the Ruby heap pages. If, in terms of the kernel, memory usage is higher, then the remaining memory goes somewhere outside the control of Ruby, for example, to third-party libraries or fragmentation.

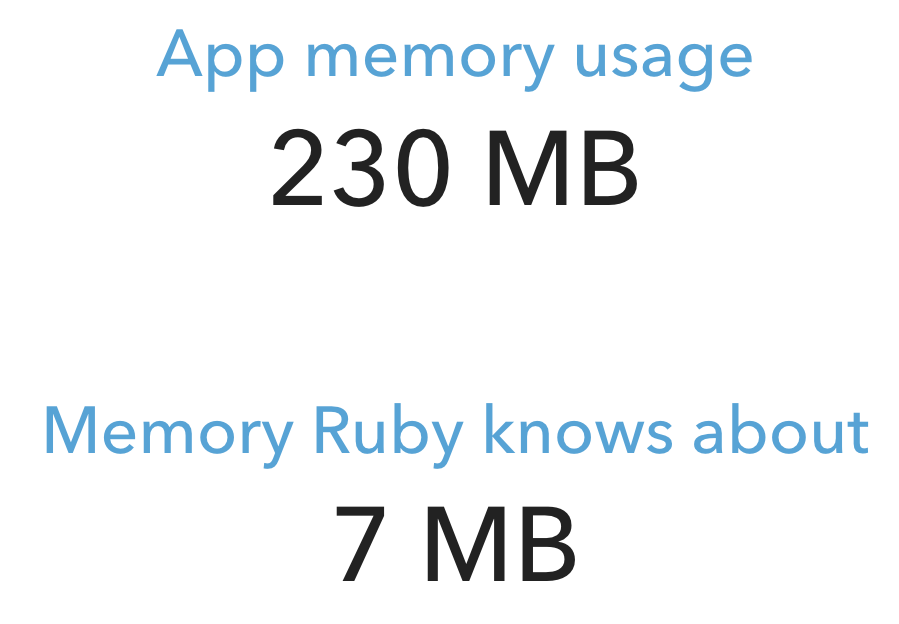

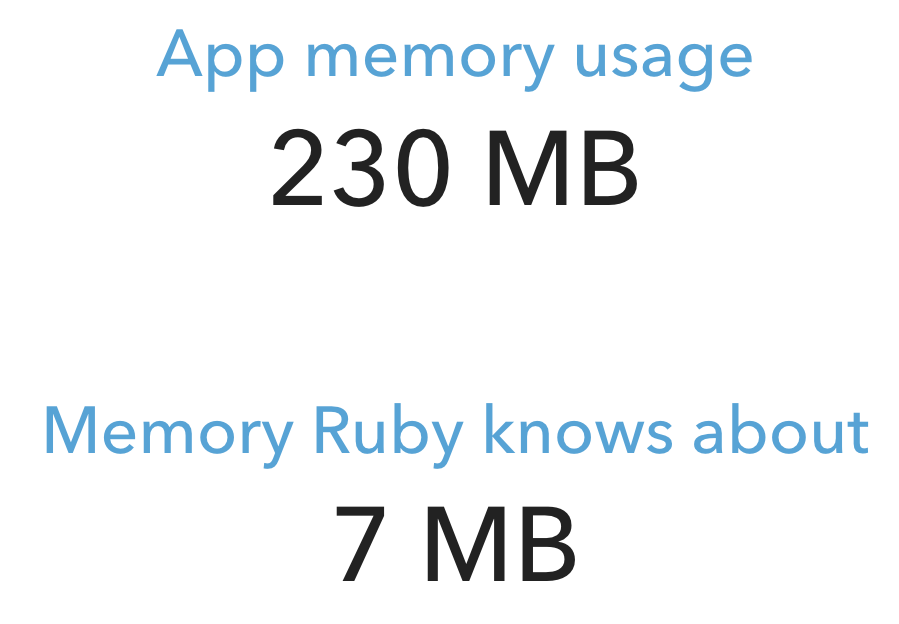

I wrote a simple test program that creates a bunch of threads, each of which selects lines in a loop. Here is the result after a while:

it's ... just ... madness!

The result shows that Ruby has such a weak effect on the total amount of used memory that it does not matter whether Ruby heap pages are fragmented or not.

We'll have to look for the culprit elsewhere. At least we now know that Ruby is not to blame.

Another likely suspect is a memory allocator. In the end, Neith Berkopek and Heroku noticed that fussing with the memory allocator (either a complete replacement with jemalloc, or setting the magic environment variable

Let's first see what

The reason why

At any one time, only one thread can work with the system heap. In multithreaded tasks, a conflict occurs and, consequently, performance decreases.

There is an optimization in the memory allocator for such a case. He tries to create several system heaps and assign them to different threads. Most of the time, the thread only works with its heap, avoiding conflicts with other threads.

In fact, the maximum number of system heaps allocated in this way is, by default, equal to the number of virtual processors multiplied by 8. That is, in a dual-core system with two hyper-threads each,

Why is the default multiplier so big? Because the leading developer of the memory allocator is Red Hat. Their clients are large companies with powerful servers and a ton of RAM. The above optimization allows to increase the average performance of multithreading by 10% due to a significant increase in memory usage. For Red Hat customers, this is a good compromise. For most of the rest - it is unlikely.

Nate in his blog and the Heroku article argue that increasing the number of system heaps increases fragmentation, and refers to official documentation. The variable

Is it true that Nate and Heroku claim that increasing the number of system heaps increases fragmentation? In fact, is there any problem with fragmentation at the memory allocator level? I didn’t want to take either of these assumptions for granted, so I began research.

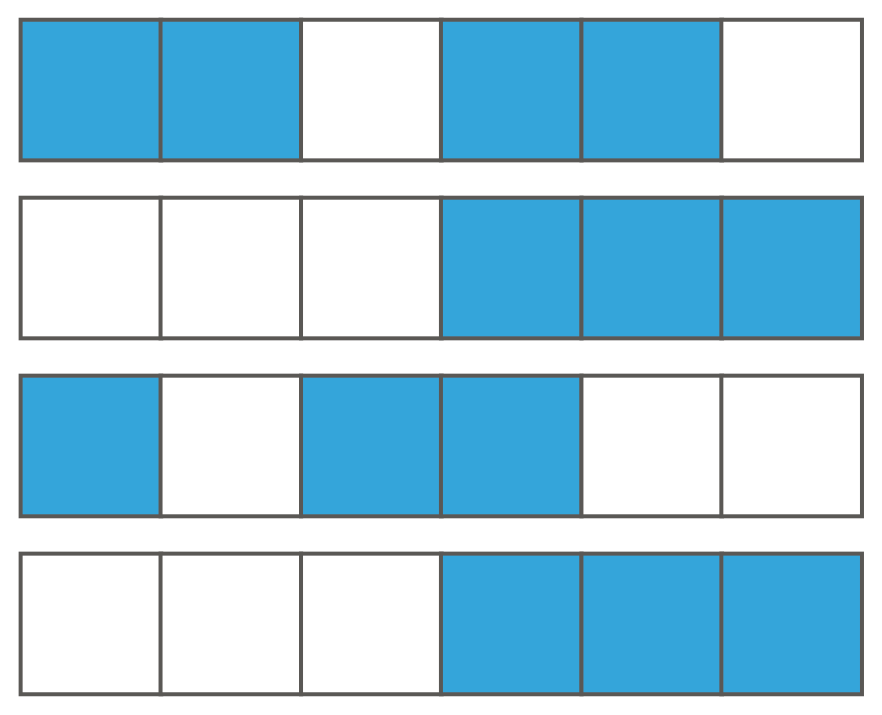

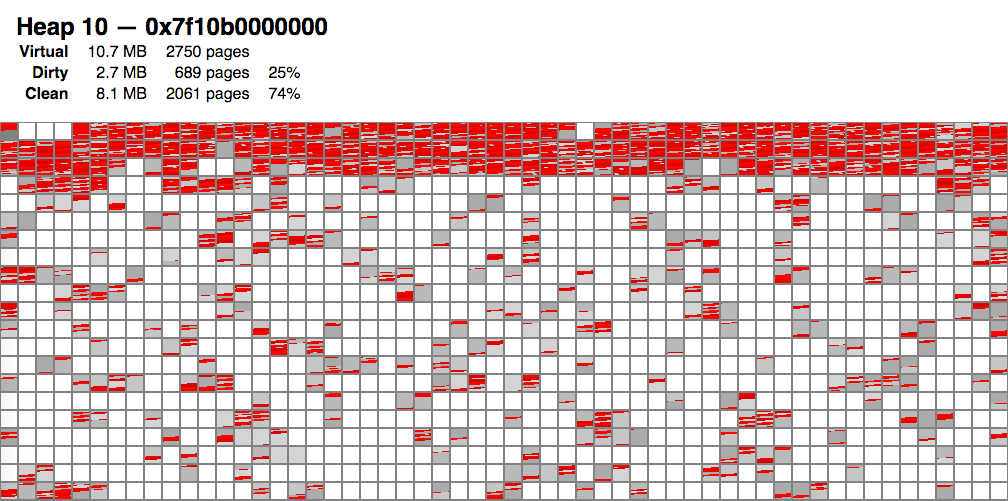

Unfortunately, there are no tools for visualizing system heaps, so I wrote such a visualizer myself .

First, you need to somehow save the system heap distribution scheme. I studied the sources of the memory allocator and looked at how it represents memory internally. Then I wrote a library that iterates through these data structures and writes a schema to a file. Finally, I wrote a tool that takes such a file as input and compiles the visualization as HTML and PNG images ( source code ).

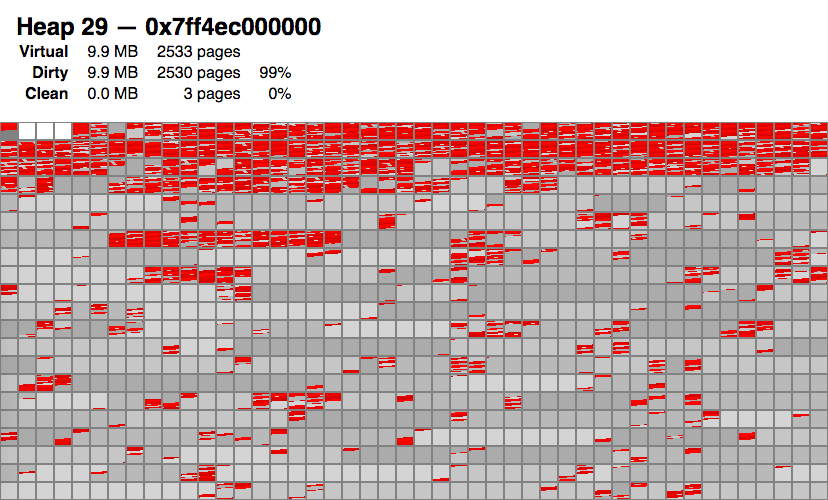

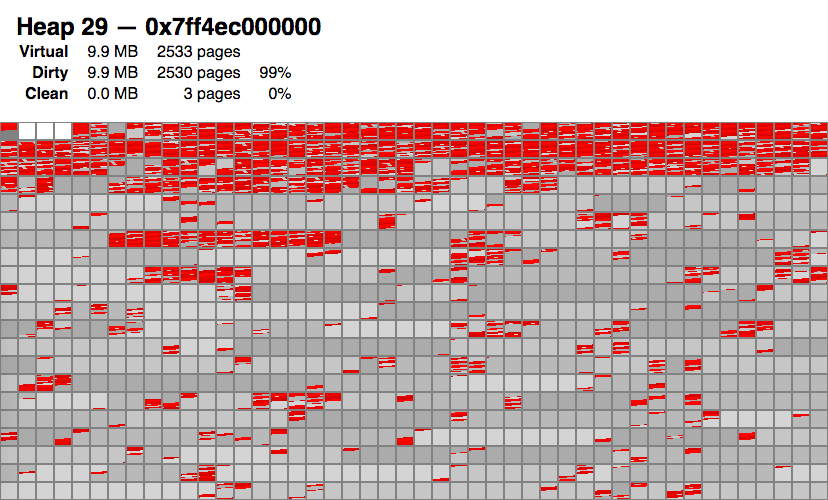

Here is an example of the visualization of one particular system heap (there are many more). The small blocks in this visualization represent the system pages.

The following conclusions can be drawn from the visualization:

And then it hit me:

Although fragmentation remains a problem, it's not about her!

Rather, the problem is in a large amount of gray: this memory allocator does not give the memory back to the core !

After re-examining the source code of the memory allocator, it turned out that by default it sends only system pages to the kernel at the end of the system heap, and even this rarely does. Probably, this algorithm is implemented for performance reasons.

Fortunately, I found one trick. There is one software interface that will cause the memory allocator to free up for the kernel not only the last, but all the relevant system pages. It is called malloc_trim .

I knew about this function, but did not think that it was useful, because the manual says the following:

The manual is wrong! Analysis of the source code says that the program frees all relevant system pages, not just the top ones.

What happens if you call this function during garbage collection? I modified the source code of Ruby 2.6 to call

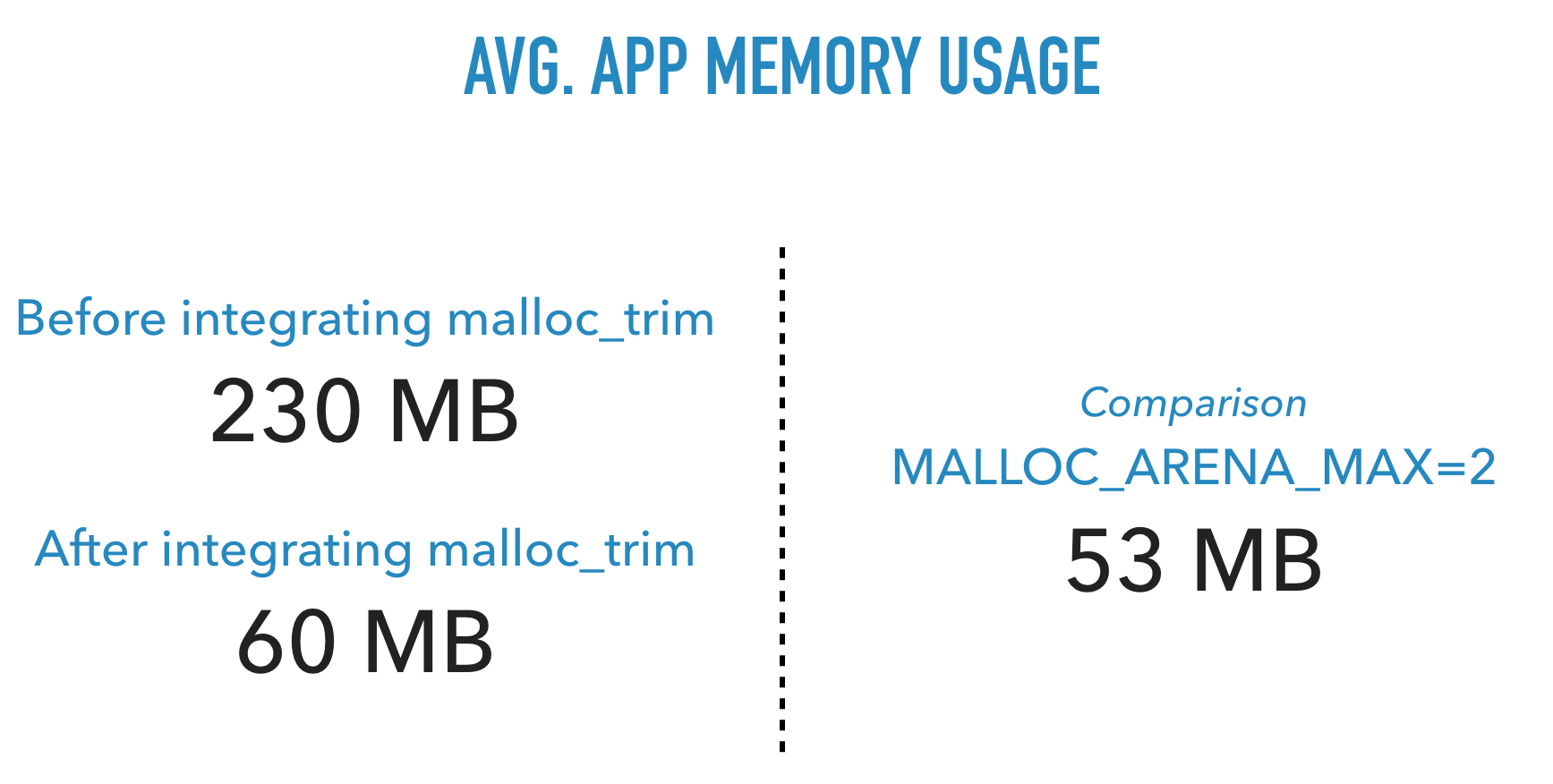

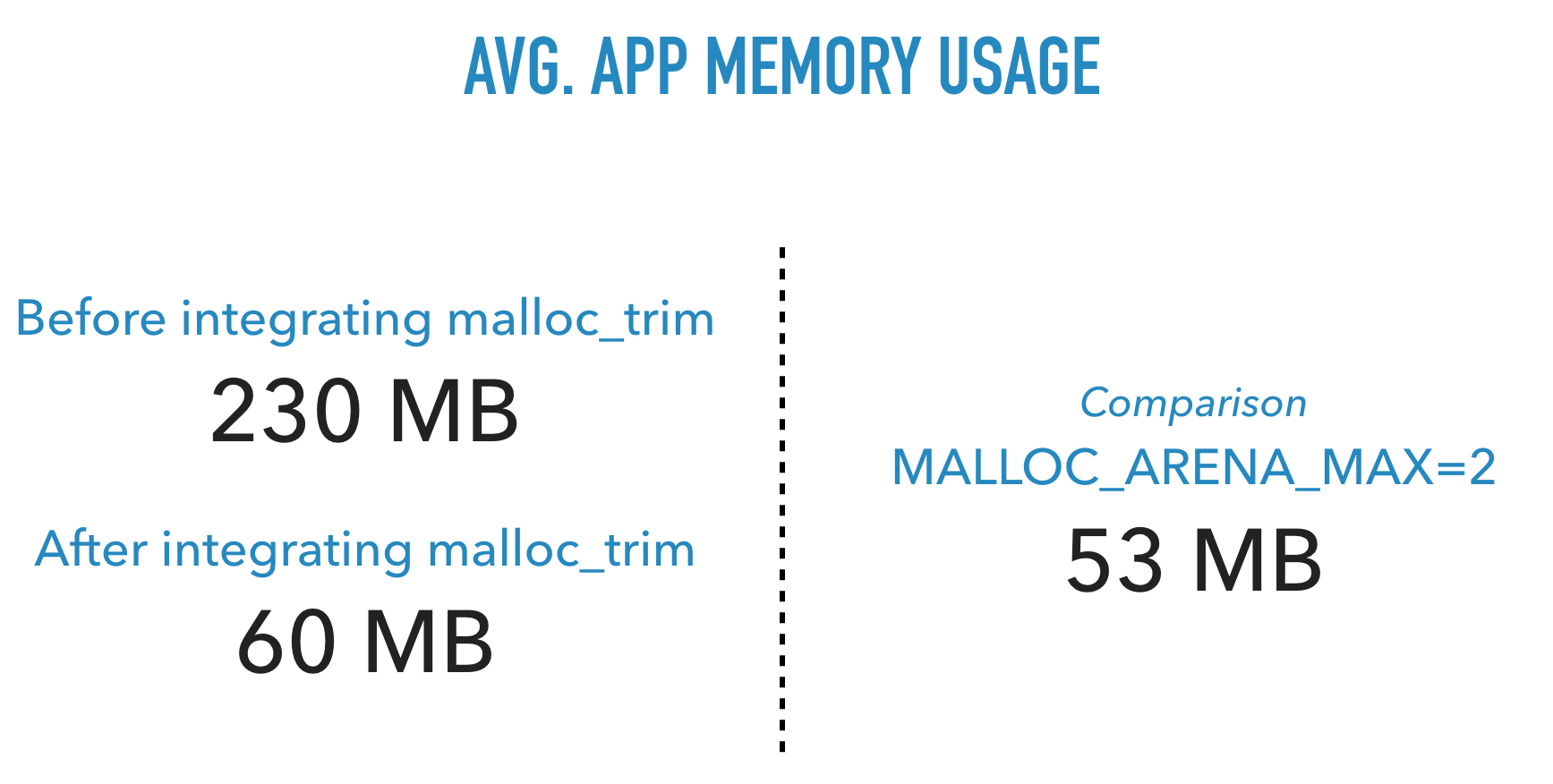

And here are the test results:

What a big difference! A simple patch reduced memory consumption to almost the level of

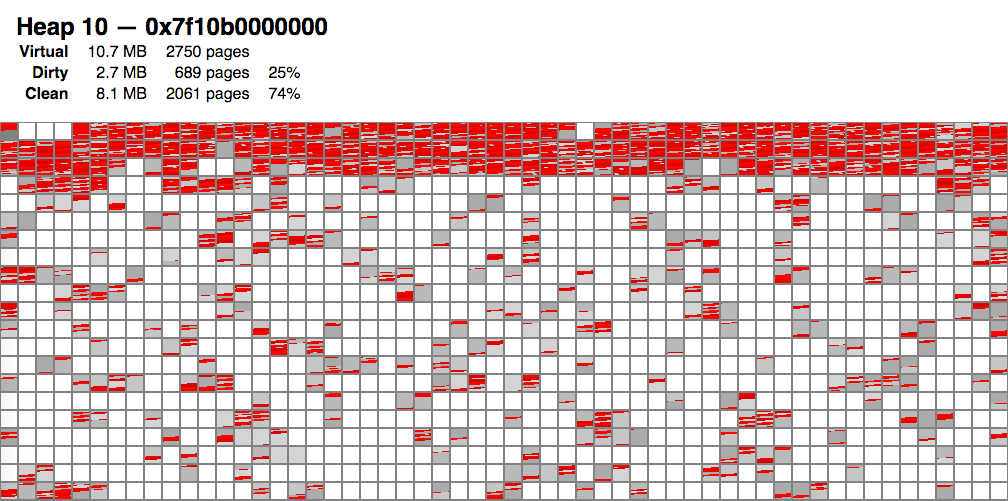

Here is how it looks in the visualization:

We see a lot of white areas that correspond to system pages released back to the core.

It turned out that the fragmentation, in the main, nothing to do with it. Defragmentation is still useful, but the main problem is that the memory allocator does not like to free memory back to the core.

Fortunately, the solution was very simple. The main thing was to find the root cause.

Source

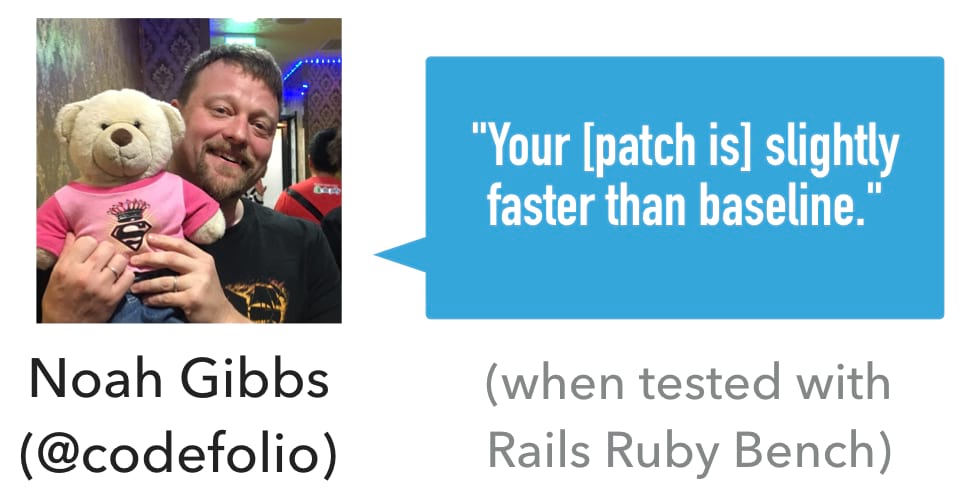

Performance remained one of the main concerns. The call to

It blew my mind. The effect is incomprehensible, but the news is good.

In this study, only a limited number of cases have been verified. It is not known what the effect is on other workloads. If you want to help with testing, please contact me .

Question: What is it? Answer: Memory use by the Ruby process over time!

It turns out that I am not alone. Ruby apps can use a lot of memory. But why? According to Heroku and Nate Berkopec, the bloat is mainly caused by memory fragmentation and excessive heap allocation.

Berkopec concluded that there are two solutions:


- Either use a memory allocator other than the one in glibc, usually jemalloc, or:

- Set the magic environment variable MALLOC_ARENA_MAX=2.

I am bothered by this description of the problem and by the proposed solutions. Something feels off... I am not convinced that the problem is fully described or that these are the only available solutions. It also bothers me that so many people treat jemalloc as a magic silver bullet.

Magic is just science that we don't understand yet. So I went on a research journey to find out the whole truth. This article covers the following topics:

- How memory allocation works.

- What are the memory “fragmentation” and “excessive allocation” that everyone is talking about?

- What causes high memory consumption? Is the situation consistent with what people are saying, or is there something else? (spoiler: yes, there is something else).

- Are there any alternative solutions? (spoiler: I found one).

Note: This article is relevant only for Linux, and only for multi-threaded Ruby applications.

Contents

- Ruby Memory Allocation: An Introduction

- What is fragmentation?

- Does Ruby heap page fragmentation cause memory bloat?

- Investigating fragmentation at the memory allocator level

- Magic trick: trimming

- Conclusion

Ruby Memory Allocation: An Introduction

Memory allocation in Ruby occurs on three levels, from top to bottom:

- The Ruby interpreter, which manages Ruby objects.

- The operating system's memory allocator library.

- The kernel.

Let's go through each level.

Ruby

On its side, Ruby organizes objects into memory areas called Ruby heap pages. A heap page is divided into equal-sized slots, and each object occupies exactly one slot. Whether it is a string, a hash table, an array, a class, or something else, it occupies one slot.

Slots on a heap page can be occupied or free. When Ruby allocates a new object, it first tries to occupy a free slot. If there are no free slots, it allocates a new heap page.

Slots are small, about 40 bytes. Obviously, some objects won't fit into one, for example 1 MB strings. In that case Ruby stores the data elsewhere, outside the heap page, and places a pointer to that external memory area in the slot.

Data that does not fit in the slot is stored outside the heap page. Ruby places a pointer to external data in the slot.

Both Ruby heap pages and any external memory areas are allocated using the system's memory allocator.
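To make the slot model concrete, here is a toy sketch in C, the language of Ruby's own gc.c. Everything in it (the names toy_slot, toy_store and toy_release, 4 slots per page, 24 bytes of inline payload) is a hypothetical simplification and does not reflect Ruby's real object layout. Short strings are stored inside their slot. Longer ones are malloc'd outside the page, and the slot keeps only a pointer to them.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical toy model of a Ruby heap page; sizes and layout are
 * illustrative assumptions, not Ruby's real RVALUE layout in gc.c. */
#define SLOTS_PER_PAGE 4
#define INLINE_CAPACITY 24 /* payload bytes that fit inside the slot itself */

typedef struct {
    bool used;
    bool external;             /* true if the payload lives outside the page */
    size_t len;
    union {
        char inline_data[INLINE_CAPACITY];
        char *external_data;   /* malloc'd buffer outside the heap page */
    } as;
} toy_slot;                    /* 40 bytes on a typical 64-bit Linux build */

typedef struct {
    toy_slot slots[SLOTS_PER_PAGE];
} toy_heap_page;

/* Put a string into the first free slot. Returns the slot index, or -1 if
 * the page is full (Ruby would then allocate a new heap page) or malloc fails. */
int toy_store(toy_heap_page *page, const char *s)
{
    size_t len = strlen(s);
    for (int i = 0; i < SLOTS_PER_PAGE; i++) {
        toy_slot *slot = &page->slots[i];
        if (slot->used)
            continue;
        if (len < INLINE_CAPACITY) {
            memcpy(slot->as.inline_data, s, len + 1); /* fits in the slot */
            slot->external = false;
        } else {
            char *buf = malloc(len + 1);              /* data goes elsewhere */
            if (buf == NULL)
                return -1;
            memcpy(buf, s, len + 1);
            slot->as.external_data = buf;             /* slot keeps a pointer */
            slot->external = true;
        }
        slot->used = true;
        slot->len = len;
        return i;
    }
    return -1;
}

/* "Garbage-collect" one object: free any external data, mark the slot free. */
void toy_release(toy_heap_page *page, int i)
{
    toy_slot *slot = &page->slots[i];
    assert(slot->used);
    if (slot->external)
        free(slot->as.external_data);
    slot->used = false;
    slot->external = false;
}
```

When the page is full, toy_store fails until toy_release frees a slot, and the next object reuses that slot.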

System memory allocator

The operating system's memory allocator is part of glibc (the C runtime). It is used by almost all applications, not just Ruby. It has a simple API:

- Memory is allocated by calling malloc(size). You pass it the number of bytes you want to allocate, and it returns either the address of the allocation or NULL if the allocation failed.

- Allocated memory is freed by calling free(address).
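Here is a minimal sketch of those two calls. It also uses malloc_usable_size(), a glibc extension that the article doesn't mention, to show that the allocator may reserve somewhat more than was requested. The helper name usable_size_for is hypothetical.

```c
#include <assert.h>
#include <malloc.h>  /* malloc_usable_size() is a glibc extension */
#include <stdlib.h>

/* Allocate `size` bytes, report how many bytes glibc actually reserved for
 * the allocation, then free it. Returns 0 if malloc() failed. */
size_t usable_size_for(size_t size)
{
    void *p = malloc(size);                /* address of the allocation, or NULL */
    if (p == NULL)
        return 0;
    size_t usable = malloc_usable_size(p); /* >= size: requests get rounded up */
    free(p);                               /* hand the memory back to the allocator */
    return usable;
}
```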

Unlike Ruby, which allocates same-size slots, the memory allocator handles allocation requests of any size. As you will learn later, this leads to some complications.

In turn, the memory allocator calls the kernel API. It takes much larger chunks of memory from the kernel than its own callers request, because kernel calls are expensive and the kernel API has a restriction: it can only allocate memory in multiples of 4 KB.

The memory allocator obtains large chunks, called system heaps, and divides their contents to satisfy requests from applications.

The area of memory that the memory allocator obtains from the kernel is called the heap. Note that it has nothing to do with Ruby heap pages, so for clarity we will use the term system heap.

The memory allocator then hands out parts of the system heaps to its callers until it runs out of free space. At that point, it allocates a new system heap from the kernel. This is similar to how Ruby allocates objects from Ruby heap pages.

Ruby allocates memory from a memory allocator, which, in turn, allocates it from the kernel
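The carving of system heaps described above can be sketched as a toy bump allocator. It obtains one page-rounded region from the kernel with mmap() and hands out pieces of that region. This is a hypothetical illustration only. The real glibc arenas use brk()/mmap() and keep free lists so that freed chunks can be reused, which this sketch does not do. All names here are made up.

```c
#define _DEFAULT_SOURCE   /* for MAP_ANONYMOUS */
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>
#include <unistd.h>

/* Round a byte count up to a whole number of OS pages (4 KB on most systems). */
size_t round_up_to_pages(size_t bytes)
{
    size_t page = (size_t)sysconf(_SC_PAGESIZE);
    return (bytes + page - 1) / page * page;
}

/* Toy "system heap": one region from the kernel, carved with a bump pointer. */
typedef struct {
    unsigned char *base;
    size_t size;   /* always a multiple of the page size */
    size_t used;
} toy_system_heap;

int toy_heap_init(toy_system_heap *h, size_t bytes)
{
    assert(bytes > 0);
    h->size = round_up_to_pages(bytes);
    void *p = mmap(NULL, h->size, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;
    h->base = p;
    h->used = 0;
    return 0;
}

/* Return n bytes (rounded up to 16 for alignment), or NULL when the heap is
 * exhausted and a real allocator would request another system heap. */
void *toy_heap_alloc(toy_system_heap *h, size_t n)
{
    size_t aligned = (n + 15) & ~(size_t)15;
    if (aligned > h->size - h->used)
        return NULL;
    void *p = h->base + h->used;
    h->used += aligned;
    return p;
}

void toy_heap_destroy(toy_system_heap *h)
{
    munmap(h->base, h->size);
}
```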

Kernel

The kernel can only allocate memory in units of 4 KB. One such 4 KB unit is called a page. To avoid confusion with Ruby heap pages, we will use the term system page (OS page).

The reason is difficult to explain, but this is how all modern kernels work.

Allocating memory through the kernel is expensive, so memory allocators try to minimize the number of kernel calls.

Memory usage

So memory is allocated at several levels, and each level allocates more memory than it actually needs. Ruby heap pages can contain free slots, and so can system heaps. Therefore, the answer to the question “How much memory is used?” depends entirely on which level you ask!

Tools like top or ps show memory usage from the kernel's point of view. This means that the upper levels must cooperate to free memory from the kernel's point of view. As you will see, this is harder than it seems.

What is fragmentation?

Memory fragmentation means that memory allocations are scattered randomly. This can cause interesting problems.

Ruby Fragmentation

Consider Ruby garbage collection. Garbage-collecting an object means marking its Ruby heap page slot as free so that it can be reused. If an entire Ruby heap page consists only of free slots, the whole page can be released back to the memory allocator (and possibly back to the kernel).

But what happens if not all slots are free? What if we have many Ruby heap pages, and the garbage collector frees objects in different places, so that in the end there are many free slots spread over different pages? In that situation, Ruby has free slots to place objects in, but the memory allocator and the kernel still count that memory as allocated!
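This small sketch models the Ruby-level problem. It assumes a hypothetical array of live-object counts per heap page. It totals the free slots and counts how many pages are completely empty, because only those pages could go back to the allocator.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical summary of Ruby heap pages after a GC pass. */
typedef struct {
    size_t free_slots;        /* free slots summed over all pages */
    size_t releasable_pages;  /* pages with no live objects at all */
} page_summary;

/* live[i] is the number of live objects on page i; every page has
 * `slots_per_page` slots. Only completely empty pages can be handed back to
 * the memory allocator; free slots on partially used pages cannot. */
page_summary summarize_pages(const size_t *live, size_t npages,
                             size_t slots_per_page)
{
    page_summary s = {0, 0};
    for (size_t i = 0; i < npages; i++) {
        assert(live[i] <= slots_per_page);
        s.free_slots += slots_per_page - live[i];
        if (live[i] == 0)
            s.releasable_pages++;
    }
    return s;
}
```

Take four pages of 400 slots each (a hypothetical page size). With one live object on each page, there are 1,596 free slots but no releasable page. Packing the same four objects onto a single page leaves the free-slot count unchanged and frees three whole pages.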

Fragmentation at the memory allocator level

The memory allocator has a similar but distinct problem. It does not have to release entire system heaps at once. In theory, it can release any individual system page. But since the memory allocator handles allocations of arbitrary size, a single system page may hold several allocations. It cannot release that system page until all of those allocations are freed.

Think about what happens if we have a 3 KB allocation and a 2 KB allocation spread over two system pages. If you free the 3 KB allocation, both system pages remain partially occupied and cannot be released.

So under unlucky circumstances, there can be a lot of free space on system pages, yet none of those pages is completely free.

Worse: what if there is a lot of free space, but no single free region is large enough to satisfy a new allocation request? Then the memory allocator has to allocate a whole new system heap.
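The 3 KB + 2 KB example can be modeled in a few lines. The model assumes 4 KB pages and a layout where the 2 KB allocation starts right after the 3 KB one, so it straddles the page boundary. The hypothetical helpers count the pages that hold no live data and find the largest contiguous free gap. The second helper covers the last problem: 6 KB can be free in total while a 4 KB request still doesn't fit.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define PAGE_SIZE_MODEL 4096 /* the article's 4 KB system page */

typedef struct {
    size_t offset; /* byte offset inside the system heap */
    size_t size;   /* bytes; must be > 0 */
} live_alloc;

/* Does any live allocation overlap system page number `page`? */
static bool page_in_use(const live_alloc *a, size_t n, size_t page)
{
    size_t start = page * PAGE_SIZE_MODEL, end = start + PAGE_SIZE_MODEL;
    for (size_t i = 0; i < n; i++)
        if (a[i].offset < end && a[i].offset + a[i].size > start)
            return true;
    return false;
}

/* Count pages (out of `npages`) that hold no live data and could
 * therefore be released to the kernel. */
size_t releasable_pages(const live_alloc *a, size_t n, size_t npages)
{
    size_t count = 0;
    for (size_t p = 0; p < npages; p++)
        if (!page_in_use(a, n, p))
            count++;
    return count;
}

/* Largest contiguous free gap in a heap of `heap_size` bytes. Assumes `a`
 * is sorted by offset and that allocations don't overlap. */
size_t largest_gap(const live_alloc *a, size_t n, size_t heap_size)
{
    size_t best = 0, cursor = 0;
    for (size_t i = 0; i < n; i++) {
        if (a[i].offset - cursor > best)
            best = a[i].offset - cursor;
        cursor = a[i].offset + a[i].size;
    }
    if (heap_size - cursor > best)
        best = heap_size - cursor;
    return best;
}
```

After the 3 KB allocation at offset 0 is freed, the remaining 2 KB allocation at offset 3072 still touches both pages. No page is releasable, and the largest free gap is only 3 KB.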

Does Ruby heap page fragmentation cause memory bloat?

Fragmentation likely causes excessive memory usage in Ruby. If so, which of the two kinds of fragmentation does more harm? Is it…

- Ruby heap page fragmentation? Or

- memory allocator fragmentation?

The first option is fairly easy to check. Ruby provides two APIs: ObjectSpace.memsize_of_all and GC.stat. With this information, you can calculate all the memory that Ruby has obtained from the allocator.

- ObjectSpace.memsize_of_all returns the memory occupied by all live Ruby objects, that is, all the space in their slots plus any external data. In the diagram above, this is the size of all blue and orange objects.

- GC.stat tells you the size of all free slots, i.e. the entire gray area in the illustration above. The formula is: GC.stat[:heap_free_slots] * GC::INTERNAL_CONSTANTS[:RVALUE_SIZE]

Added together, these give all the memory Ruby knows about, including the fragmentation of Ruby heap pages. If memory usage from the kernel's point of view is higher, then the rest of the memory is going somewhere outside Ruby's control, for example to third-party libraries or to fragmentation.
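The comparison above can be scripted. This C sketch reads the kernel's view of memory, which is the resident page count from Linux's /proc/self/statm (the same kind of figure top and ps report). It then subtracts the memory Ruby knows about. The three Ruby-side inputs are assumed to be collected separately through ObjectSpace.memsize_of_all, GC.stat[:heap_free_slots] and GC::INTERNAL_CONSTANTS[:RVALUE_SIZE]. The function names are hypothetical.

```c
#include <assert.h>
#include <stdio.h>
#include <unistd.h>

/* Resident set size of the current process as the kernel sees it, read
 * from Linux's /proc/self/statm (second field, in pages). 0 on error. */
unsigned long long rss_bytes(void)
{
    unsigned long long size_pages, resident_pages;
    FILE *f = fopen("/proc/self/statm", "r");
    if (f == NULL)
        return 0;
    int n = fscanf(f, "%llu %llu", &size_pages, &resident_pages);
    fclose(f);
    if (n != 2)
        return 0;
    return resident_pages * (unsigned long long)sysconf(_SC_PAGESIZE);
}

/* Memory the kernel attributes to the process that Ruby doesn't know about.
 * The last three arguments are assumed to come from the Ruby side:
 * ObjectSpace.memsize_of_all, GC.stat[:heap_free_slots] and
 * GC::INTERNAL_CONSTANTS[:RVALUE_SIZE]. */
unsigned long long memory_outside_ruby(unsigned long long rss,
                                       unsigned long long memsize_of_all,
                                       unsigned long long heap_free_slots,
                                       unsigned long long rvalue_size)
{
    unsigned long long ruby_known = memsize_of_all + heap_free_slots * rvalue_size;
    return rss > ruby_known ? rss - ruby_known : 0;
}
```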

I wrote a simple test program that creates a bunch of threads, each of which allocates strings in a loop. Here is the result after a while:

it's ... just ... madness!

The results show that Ruby contributes so little to total memory usage that it doesn't matter whether Ruby heap pages are fragmented or not.

We'll have to look for the culprit elsewhere. At least we now know that Ruby is not to blame.

Investigating fragmentation at the memory allocator level

Another likely suspect is the memory allocator. After all, Nate Berkopec and Heroku noticed that fiddling with the memory allocator (either replacing it entirely with jemalloc, or setting the magic environment variable MALLOC_ARENA_MAX=2) drastically reduces memory usage.

Let's first look at what MALLOC_ARENA_MAX=2 does and why it helps. Then we will investigate fragmentation at the allocator level.

Excessive memory allocation and glibc

MALLOC_ARENA_MAX=2 helps because of multithreading. When several threads try to allocate memory from the same system heap at the same time, they contend for access. Only one thread at a time can obtain memory, which reduces the performance of multi-threaded memory allocation.

Only one thread at a time can work with a system heap. In multi-threaded workloads, contention occurs and performance drops as a result.

The memory allocator has an optimization for this case. It tries to create several system heaps and assign them to different threads. Most of the time, a thread only works with its own heap, which avoids contention with other threads.
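You can observe the per-thread system heaps (glibc calls them arenas) directly. This sketch starts a few threads that malloc and free in a loop. It then counts arenas by parsing the XML that glibc's malloc_info() writes, which contains one heap element per arena. The exact count depends on glibc's heuristics and on the machine, so treat it as an observation rather than a guarantee. The function names are hypothetical.

```c
#define _GNU_SOURCE      /* for open_memstream() on older glibc */
#include <assert.h>
#include <malloc.h>
#include <pthread.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Each worker allocates and frees buffers; glibc may give each thread its
 * own arena (system heap) to avoid lock contention. */
static void *worker(void *arg)
{
    (void)arg;
    for (int i = 0; i < 1000; i++) {
        char *p = malloc(64 + (size_t)i);
        if (p == NULL)
            return NULL;
        memset(p, 'x', 64);
        free(p);
    }
    return NULL;
}

/* Count arenas by counting "<heap nr=" entries in malloc_info()'s XML. */
int count_arenas(void)
{
    char *buf = NULL;
    size_t len = 0;
    FILE *mem = open_memstream(&buf, &len);
    if (mem == NULL)
        return -1;
    malloc_info(0, mem);         /* options must be 0 */
    fclose(mem);                 /* flushes and finalizes buf */
    int count = 0;
    for (const char *s = buf; (s = strstr(s, "<heap nr=")) != NULL; s++)
        count++;
    free(buf);
    return count;
}

/* Run up to 64 workers concurrently, then report the arena count. */
int arenas_after_threads(int nthreads)
{
    pthread_t tids[64];
    int created = 0;
    if (nthreads > 64)
        nthreads = 64;
    for (int i = 0; i < nthreads; i++)
        if (pthread_create(&tids[created], NULL, worker, NULL) == 0)
            created++;
    for (int i = 0; i < created; i++)
        pthread_join(tids[i], NULL);
    return count_arenas();
}
```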

The maximum number of system heaps allocated this way defaults to the number of virtual CPUs multiplied by 8. So on a dual-core system with two hyperthreads per core, you get 2 * 2 * 8 = 32 system heaps! This is what I call over-allocation.

Why is the default multiplier so large? Because the main developer of the memory allocator is Red Hat. Their customers are large companies with powerful servers and tons of RAM. This optimization improves average multi-threaded performance by 10% at the cost of a significant increase in memory usage. For Red Hat's customers, this is a good trade-off. For most other users, it probably isn't.
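The default ceiling can be written down explicitly. The sketch below mirrors glibc's NARENAS_FROM_NCORES macro as I understand it: 8 arenas per CPU on 64-bit builds, 2 on 32-bit builds. It also shows mallopt(M_ARENA_MAX, ...), the documented in-process counterpart of the MALLOC_ARENA_MAX environment variable. The helper names are hypothetical.

```c
#include <assert.h>
#include <malloc.h>   /* mallopt(), M_ARENA_MAX */
#include <unistd.h>

/* Default arena ceiling, mirroring glibc's NARENAS_FROM_NCORES macro:
 * 8 arenas per CPU on 64-bit builds, 2 per CPU on 32-bit builds. */
long default_arena_limit(long ncpus)
{
    return ncpus * (sizeof(long) == 4 ? 2 : 8);
}

/* The same limit for the CPUs currently online on this machine. */
long default_arena_limit_here(void)
{
    long ncpus = sysconf(_SC_NPROCESSORS_ONLN);
    return default_arena_limit(ncpus > 0 ? ncpus : 1);
}

/* In-process equivalent of MALLOC_ARENA_MAX=N. Call it early, before other
 * threads start allocating. Returns 1 on success, 0 on error (mallopt's
 * convention). */
int cap_arenas(int max_arenas)
{
    return mallopt(M_ARENA_MAX, max_arenas);
}
```

On a 64-bit build, default_arena_limit(4) yields the 32 system heaps from the dual-core, two-hyperthread example.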

Nate's blog post and the Heroku article argue that more system heaps mean more fragmentation, and both cite the official documentation. The MALLOC_ARENA_MAX variable reduces the maximum number of system heaps allocated for multi-threading, so by that logic it reduces fragmentation.

Visualization of system heaps

Are Nate and Heroku right that more system heaps mean more fragmentation? And is there a fragmentation problem at the memory allocator level at all? I didn't want to take either assumption for granted, so I started researching.

Unfortunately, there are no tools for visualizing system heaps, so I wrote a visualizer myself.

First, I needed a way to dump the layout of the system heaps. I studied the memory allocator's source code to see how it represents memory internally. Then I wrote a library that walks those data structures and writes the layout to a file. Finally, I wrote a tool that takes such a file as input and renders the visualization as HTML and PNG images (source code).

Here is an example visualization of one particular system heap (there are many more). The small blocks in this visualization represent system pages.

- Red: memory in use.

- Gray: free areas that have not been released back to the kernel.

- White: areas released back to the kernel.

The following conclusions can be drawn from the visualization:

- There is some fragmentation. Red spots are scattered across memory, and some system pages are only half red.

- To my surprise, most system heaps contain a significant amount of completely free system pages (gray)!

And then it hit me:

Fragmentation is there, but it's not the main problem!

Rather, the problem is the large amount of gray: the memory allocator does not release memory back to the kernel!

After re-examining the memory allocator's source code, I found that by default it only releases system pages at the end of a system heap back to the kernel, and even that only rarely. This algorithm was probably chosen for performance reasons.
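The difference between gray and white pages can be reproduced with plain system calls. In this sketch, four pages are mapped and written, which makes them resident. Two of them are then handed back with madvise(MADV_DONTNEED), and mincore() reports which pages are still resident. Before the madvise call, the two middle pages correspond to "gray", and after it they correspond to "white". The claim that glibc's malloc_trim() uses madvise(MADV_DONTNEED) on free interior pages is my reading of glibc's source, not something the article states. The function names are hypothetical.

```c
#define _DEFAULT_SOURCE   /* for MAP_ANONYMOUS, madvise() and mincore() */
#include <assert.h>
#include <stddef.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/* Count how many of the `npages` pages starting at `addr` are resident. */
static int resident_pages(void *addr, size_t npages)
{
    unsigned char vec[64];
    size_t page = (size_t)sysconf(_SC_PAGESIZE);
    if (npages > sizeof vec || mincore(addr, npages * page, vec) != 0)
        return -1;
    int count = 0;
    for (size_t i = 0; i < npages; i++)
        count += vec[i] & 1;      /* low bit set = page is resident */
    return count;
}

/* Map 4 pages and touch them all, then pretend the middle two became free.
 * Stores the resident page count before and after MADV_DONTNEED in *before
 * and *after. Returns 0 on success, -1 if mmap fails. */
int gray_vs_white(int *before, int *after)
{
    size_t page = (size_t)sysconf(_SC_PAGESIZE);
    unsigned char *mem = mmap(NULL, 4 * page, PROT_READ | PROT_WRITE,
                              MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (mem == MAP_FAILED)
        return -1;
    memset(mem, 1, 4 * page);                     /* all 4 pages resident */
    *before = resident_pages(mem, 4);             /* free but resident: gray */
    madvise(mem + page, 2 * page, MADV_DONTNEED); /* give 2 pages back */
    *after = resident_pages(mem, 4);              /* released: white */
    munmap(mem, 4 * page);
    return 0;
}
```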

Magic trick: trimming

Fortunately, I found a trick. There is one API that makes the memory allocator release all eligible system pages back to the kernel, not just the last ones. It is called malloc_trim.

I knew about this function, but I didn't think it was useful, because its manual page says the following:

The malloc_trim() function attempts to release free memory at the top of the heap.

The manual is wrong! The source code shows that the function releases all eligible system pages, not just those at the top.
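This is a minimal sketch of calling malloc_trim() from ordinary C code. It uses a synthetic workload (20,000 blocks of 1 KB, keeping every 100th one alive) that is designed to scatter free space throughout the heap instead of leaving it only at the top. malloc_trim() returns 1 if it released memory and 0 otherwise. The workload and the function name are hypothetical.

```c
#include <assert.h>
#include <malloc.h>   /* malloc_trim() */
#include <stdlib.h>
#include <string.h>

#define NBLOCKS 20000
#define BLOCK_SIZE 1024
#define KEEP_EVERY 100

/* Returns malloc_trim's result (1 = memory released, 0 = nothing released),
 * or -1 if the workload could not be allocated. */
int fragment_then_trim(void)
{
    static char *blocks[NBLOCKS];
    for (int i = 0; i < NBLOCKS; i++) {
        blocks[i] = malloc(BLOCK_SIZE);
        if (blocks[i] == NULL) {
            for (int j = 0; j < i; j++)
                free(blocks[j]);
            return -1;
        }
        memset(blocks[i], 'x', BLOCK_SIZE);  /* touch so the pages are resident */
    }
    /* Free everything except every KEEP_EVERY-th block: the survivors pin
     * pages throughout the heap, so free space is scattered, not at the top. */
    for (int i = 0; i < NBLOCKS; i++)
        if (i % KEEP_EVERY != 0)
            free(blocks[i]);
    int released = malloc_trim(0);  /* pad = 0: keep no extra slack at the top */
    for (int i = 0; i < NBLOCKS; i += KEEP_EVERY)
        free(blocks[i]);
    return released;
}
```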

What happens if you call this function during garbage collection? I modified the source code of Ruby 2.6 to call malloc_trim() in the gc_start function in gc.c, like this:

```c
/* Excerpt from gc_start() in gc.c; malloc_trim() is declared in <malloc.h>. */
gc_prof_timer_start(objspace);
{
    gc_marks(objspace, do_full_mark);
    // BEGIN MODIFICATION
    if (do_full_mark) {
        malloc_trim(0); /* return free pages to the kernel after a full mark */
    }
    // END MODIFICATION
}
gc_prof_timer_stop(objspace);
```

And here are the test results:

What a big difference! A simple patch reduced memory consumption almost to the level of MALLOC_ARENA_MAX=2.

Here is how it looks in the visualization:

We see a lot of white areas, which correspond to system pages released back to the kernel.

Conclusion

It turned out that fragmentation is mostly irrelevant. Defragmentation is still useful, but the main problem is that the memory allocator doesn't like to release memory back to the kernel.

Fortunately, the solution turned out to be very simple. The key was finding the root cause.

Visualizer source code

Source

What about performance?

Performance remained one of the main concerns. Calling malloc_trim() is not free, and judging from the code, the algorithm runs in linear time. So I turned to Noah Gibbs, who ran the Rails Ruby Bench benchmark. To my surprise, the patch caused a slight performance increase.
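To get a rough feel for the cost of a single malloc_trim() call in your own process, you can time it directly. This is a hypothetical micro-measurement, and it is not the Rails Ruby Bench setup that Noah Gibbs used. The result depends on how much heap the call has to walk.

```c
#define _POSIX_C_SOURCE 199309L  /* for clock_gettime() */
#include <assert.h>
#include <malloc.h>
#include <time.h>

/* Wall-clock duration of one malloc_trim(0) call, in nanoseconds. */
long long time_malloc_trim_ns(void)
{
    struct timespec start, end;
    clock_gettime(CLOCK_MONOTONIC, &start);
    malloc_trim(0);
    clock_gettime(CLOCK_MONOTONIC, &end);
    return (long long)(end.tv_sec - start.tv_sec) * 1000000000LL
           + (end.tv_nsec - start.tv_nsec);
}
```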

This blew my mind. I can't explain the effect, but it's good news.

Need more tests

This research covered only a limited number of cases, and the effect on other workloads is unknown. If you would like to help with testing, please contact me.

Source: https://habr.com/ru/post/444482/
