Emulating Amazon Web Services inside the JVM process: working around Roskomnadzor blocks and speeding up development and testing

Why might you need to emulate the Amazon Web Services infrastructure?

First of all, it saves things: time spent on development and debugging and, last but not least, money from the project budget. Clearly, an emulator will never be 100% identical to the environment it emulates, but the similarity is enough to speed up development and automation. The most topical AWS event of 2018 was the blocking of IP addresses of AWS subnets by providers in the Russian Federation, and these blocks affected our infrastructure hosted in the Amazon cloud. If you plan to use AWS technology and host your project in this cloud, emulation for development and testing more than pays off.

In this article I will describe how we pulled off this trick with S3, SQS, RDS PostgreSQL and Redshift while migrating a data warehouse that had lived on AWS for many years.

This is only part of the talk I gave at a meetup last year; it matches the topics of the AWS and Java hubs. The second part covers PostgreSQL, Redshift and columnar databases, and its text version may be published in the corresponding hubs. The video and slides are on the conference website.

While AWS subnets were blocked, the team developing an application for AWS hardly noticed the blocks in its daily work on new functionality. The tests kept working and the application could still be debugged. Only attempts to view application logs in Logentries, metrics in SignalFx, or to analyze data in Redshift / PostgreSQL RDS ended in disappointment: the services were not reachable through the network of a Russian provider. AWS emulation let us ignore this, and it also spared us the much greater latency of working with the Amazon cloud over a VPN.

Every cloud provider has plenty of dragons under the hood, and you should not give in to the advertising. You have to understand why the service provider needs all this. Of course, the existing infrastructure of Amazon, Microsoft and Google has its advantages. But when they tell you that everything was done only to make development convenient for you, they are most likely trying to get you hooked and hand you the first dose for free, so that later you cannot get off their infrastructure and specific technology. Therefore, we will try to avoid vendor lock-in. It is not always possible to abstract away from specific solutions completely, but I think that quite often about 90% of a project can be abstracted. The remaining 10% is tied to the technology provider, and it matters a lot: it is either application performance optimization or unique features that are not available anywhere else. You should always keep the advantages and disadvantages of technologies in mind and protect yourself as much as possible from getting stuck on a specific API of the cloud infrastructure provider.

Amazon writes about message processing on its website. The essence and the abstractions of messaging technologies are the same everywhere, although there are nuances, such as delivering messages through queues or through topics. AWS recommends its managed Apache ActiveMQ for migrating applications from an existing message broker, and Amazon SQS / SNS for new applications. The latter is an example of binding you to their own API instead of the standardized JMS API and the AMQP, MQTT and STOMP protocols. Clearly, the provider's own solution may offer higher performance, built-in scalability and so on. From my point of view, though, if you use their libraries rather than standardized APIs, you will end up with many more problems.

AWS has the Redshift database. It is a distributed database with a massively parallel architecture. You can load a large amount of data into tables spread over multiple Redshift nodes in Amazon and run analytical queries on large data sets. It is not an OLTP system, where you frequently run small queries over a small number of records with ACID guarantees. With Redshift the assumption is that you do not issue many queries per unit of time, but each query can compute aggregates over a very large amount of data. The vendor positions this system as a way to modernize your data warehouse on AWS and promises simple data loading. That is not at all true.

An excerpt from the documentation lists the data types that Amazon Redshift supports. The set is rather meager, and if you need to store and process data of a type that is not listed there, you will have a hard time. GUID is one example.

Question from the audience: "What about JSON?"

- JSON can only be stored as VARCHAR, and there are a few functions for working with JSON.

Comment from the audience: "Postgres has proper JSON support."

- Yes, it supports this data type and the related functions. But Redshift is based on PostgreSQL 8.0.2. There was a project called ParAccel; if I am not mistaken, it is a technology from 2005, a fork of Postgres to which a planner for distributed queries on a massively parallel architecture was added. Five or six years later this project was licensed for the Amazon Web Services platform and named Redshift. Some things were removed from the original Postgres and a lot was added. What was added is related to authentication and authorization in AWS and to roles; security in Amazon works fine. But if you need, for example, to connect from Redshift to another database through a foreign data wrapper, you will not find that. There are no functions for working with XML, and only a handful of functions for working with JSON.

When developing an application, you try not to depend on specific implementations, so that the application code depends only on abstractions. You can create these facades and abstractions yourself, but there are also many ready-made facade libraries that isolate your code from specific implementations and frameworks. Clearly, they cannot support all of the functionality and act as a "common denominator" of the underlying technologies. Developing software against abstractions is also better because it simplifies testing.
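As a minimal sketch of this idea, assume a hypothetical `BlobStorage` facade owned by the application. None of the names below come from the project or from any library; they are invented for illustration. Business code such as the hypothetical `ReportArchiver` sees only the interface, so a test can hand it an in-memory implementation, while production wiring would supply a class that wraps the vendor client.

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical facade: application code depends on this, not on a vendor SDK. */
interface BlobStorage {
    void put(String key, byte[] content);

    Optional<byte[]> get(String key);
}

/** Test double that keeps objects in memory; a production class would wrap the real client. */
final class InMemoryBlobStorage implements BlobStorage {
    private final Map<String, byte[]> objects = new ConcurrentHashMap<>();

    @Override
    public void put(String key, byte[] content) {
        // Defensive copy, so later changes to the caller's array do not leak into the store.
        objects.put(key, content.clone());
    }

    @Override
    public Optional<byte[]> get(String key) {
        return Optional.ofNullable(objects.get(key)).map(bytes -> bytes.clone());
    }
}

/** Example business code (also hypothetical) that only knows the abstraction. */
final class ReportArchiver {
    private final BlobStorage storage;

    ReportArchiver(BlobStorage storage) {
        this.storage = storage;
    }

    /** Stores the report body and returns the key it was stored under. */
    String archive(String reportName, String body) {
        String key = "reports/" + reportName + ".txt";
        storage.put(key, body.getBytes(StandardCharsets.UTF_8));
        return key;
    }
}
```

The price of such a facade is the "common denominator" effect described above: anything vendor-specific, for example multipart upload tuning, has to be either added to the interface or kept outside it.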

To emulate AWS, I will mention two options. The first is more honest and correct, but it runs more slowly. The second is dirty and fast. I will talk about the hack option: we try to create the entire infrastructure in one process. Tests run faster this way, and the setup is cross-platform, so it also works on Windows, where Docker may not always work for you.

The first method is perfect if you develop on Linux or macOS and have Docker; in that case it is better to use Atlassian's localstack. It is convenient to use testcontainers to integrate localstack into JVM tests.

What stopped me from using localstack in Docker on this project was that development was done under Windows, and nobody could vouch for the alpha version of Docker for Windows when the project started. Also, a company that takes information security seriously may not let you install a virtual machine with Linux and Docker, not to mention the locked-down environments of investment banks, where firewalls block almost all traffic.

Let's look at the options for emulating Simple Storage Service (S3). It is not an ordinary file system but a distributed object storage, in which you place your data as BLOBs without the ability to modify or append to them. There are similar full-fledged distributed storage systems from other vendors. For example, the Ceph distributed object storage exposes its functionality through the S3 REST protocol, so the existing client works with minimal modification. But it is quite a heavy solution for developing and testing Java applications.

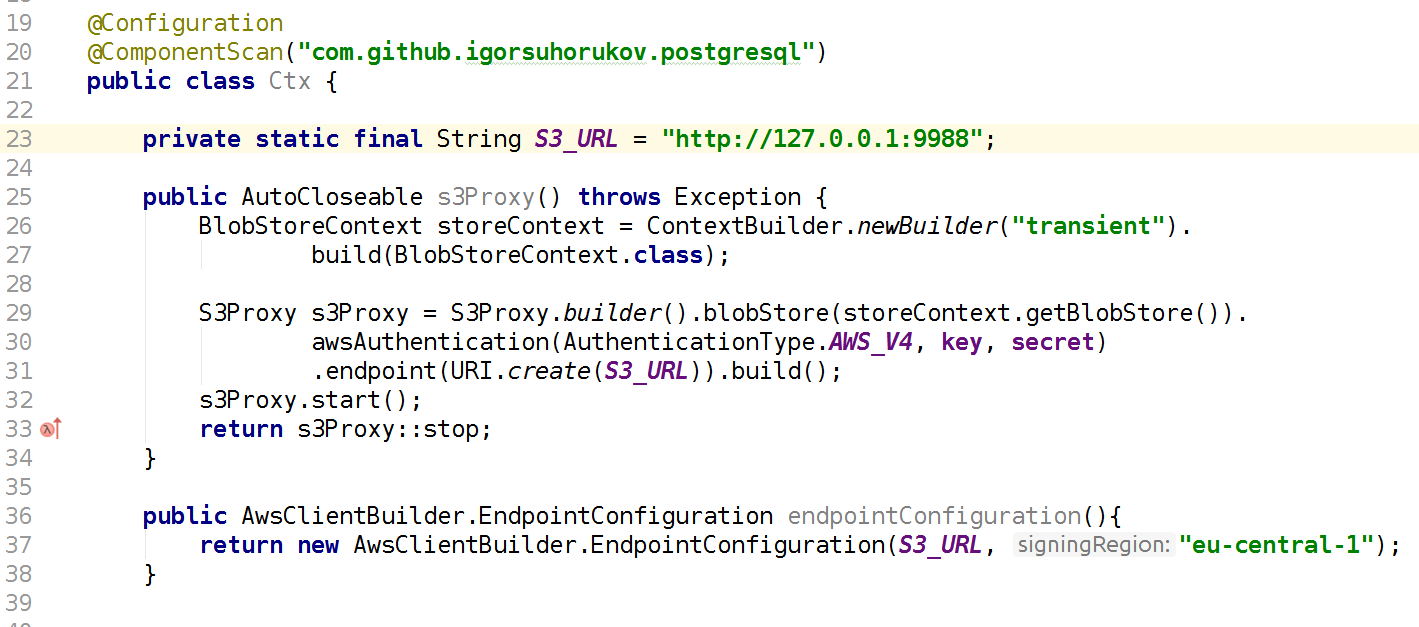

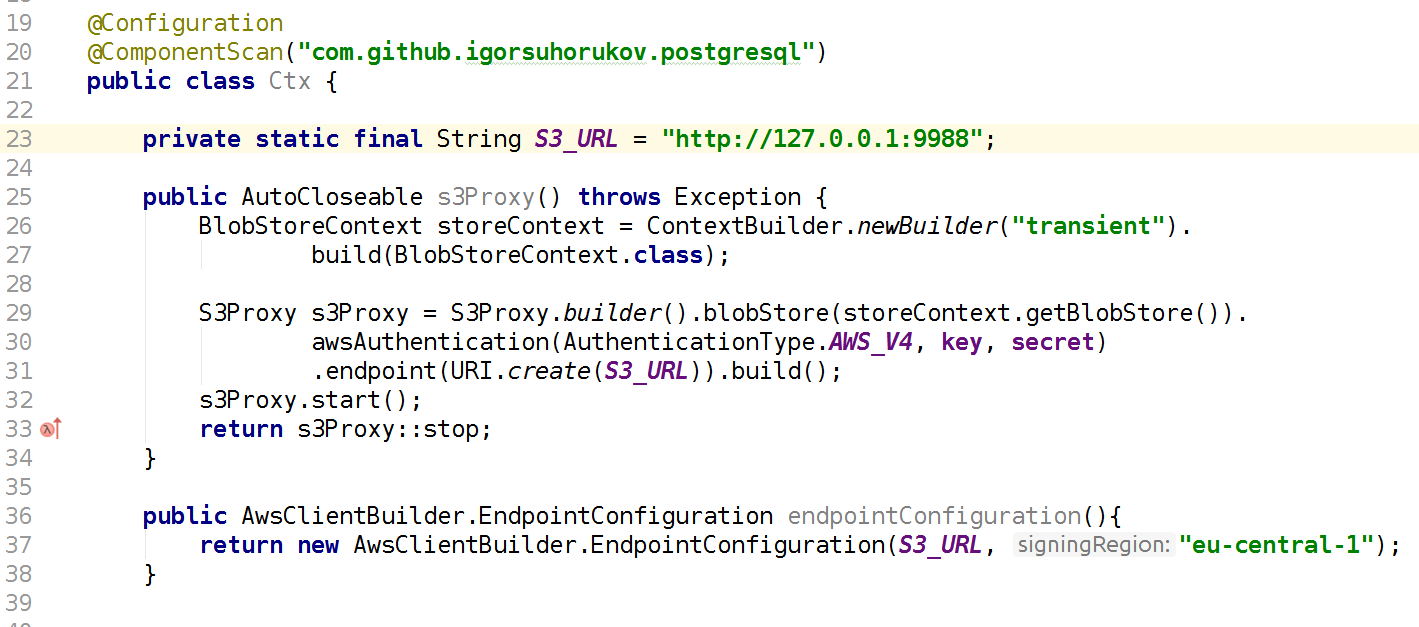

A faster and more suitable project is the Java library s3proxy. It emulates the S3 REST protocol, translates the requests into the corresponding jclouds API calls, and lets you plug in many implementations for the actual reading and storing of data. It can forward calls to Google Cloud Storage or Microsoft Azure, but for tests it is more convenient to use the jclouds transient in-memory storage. You also need to configure the version of the AWS S3 authentication protocol, specify the key and secret values, and configure the endpoint, that is, the port and interface on which S3 Proxy will listen. Accordingly, in tests your code that uses the AWS SDK client should connect to this S3 endpoint rather than to an AWS region. Again, remember that s3proxy does not support every function of the S3 API, but all of our usage scenarios are emulated perfectly. Even multipart upload for large files is supported by s3proxy.
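The pattern in this paragraph is an emulator listening on a local port inside the test JVM, with a client pointed at that endpoint instead of a cloud region. It can be illustrated with a toy built only from JDK classes. To be clear about the assumptions: this is not s3proxy, it does not speak the S3 protocol, and every class name below is hypothetical. It only shows how an in-process endpoint is started, handed to the client, and stopped.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.InetSocketAddress;
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Toy stand-in for an emulator: PUT stores the request body, GET returns it. */
final class ToyObjectStoreServer implements AutoCloseable {
    private final Map<String, byte[]> objects = new ConcurrentHashMap<>();
    private final HttpServer server;

    ToyObjectStoreServer() throws IOException {
        // Port 0 lets the OS pick a free port, so parallel test runs do not collide.
        server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/", exchange -> {
            String key = exchange.getRequestURI().getPath();
            String method = exchange.getRequestMethod();
            byte[] body = null;
            int status = 404;
            if ("PUT".equals(method)) {
                objects.put(key, exchange.getRequestBody().readAllBytes());
                status = 200;
            } else if ("GET".equals(method) && objects.containsKey(key)) {
                body = objects.get(key);
                status = 200;
            }
            // A response length of -1 means "no response body".
            boolean hasBody = body != null && body.length > 0;
            exchange.sendResponseHeaders(status, hasBody ? body.length : -1);
            if (hasBody) {
                try (OutputStream out = exchange.getResponseBody()) {
                    out.write(body);
                }
            }
            exchange.close();
        });
        server.start();
    }

    /** The endpoint a client must be configured with instead of a region. */
    URI endpoint() {
        return URI.create("http://127.0.0.1:" + server.getAddress().getPort());
    }

    @Override
    public void close() {
        server.stop(0);
    }
}

/** Client that knows nothing about where the store runs; it only receives an endpoint. */
final class ToyObjectStoreClient {
    private final URI endpoint;

    ToyObjectStoreClient(URI endpoint) {
        this.endpoint = endpoint;
    }

    void put(String key, String content) throws IOException {
        HttpURLConnection connection = open(key);
        connection.setRequestMethod("PUT");
        connection.setDoOutput(true);
        try (OutputStream out = connection.getOutputStream()) {
            out.write(content.getBytes(StandardCharsets.UTF_8));
        }
        requireOk(connection);
    }

    String get(String key) throws IOException {
        HttpURLConnection connection = open(key);
        requireOk(connection);
        try (InputStream in = connection.getInputStream()) {
            return new String(in.readAllBytes(), StandardCharsets.UTF_8);
        }
    }

    private HttpURLConnection open(String key) throws IOException {
        return (HttpURLConnection) URI.create(endpoint + key).toURL().openConnection();
    }

    private static void requireOk(HttpURLConnection connection) throws IOException {
        if (connection.getResponseCode() != 200) {
            throw new IOException("Unexpected status " + connection.getResponseCode());
        }
    }
}

final class EndpointOverrideDemo {
    /** Starts the toy server, writes and reads one object, then stops the server. */
    static String roundTrip(String key, String content) {
        try (ToyObjectStoreServer server = new ToyObjectStoreServer()) {
            ToyObjectStoreClient client = new ToyObjectStoreClient(server.endpoint());
            client.put(key, content);
            return client.get(key);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

With a real emulator the shape stays the same: the test starts the server on a local address, passes that address to the client configuration in place of the region, and closes the server when the test context shuts down.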

Amazon Simple Queue Service (SQS) is a message queuing service. There is a queue server called elasticmq, written in Scala, which can be exposed to your application over the Amazon SQS protocol. I did not use it in the project, so I give its initialization code based on the information from its developers.

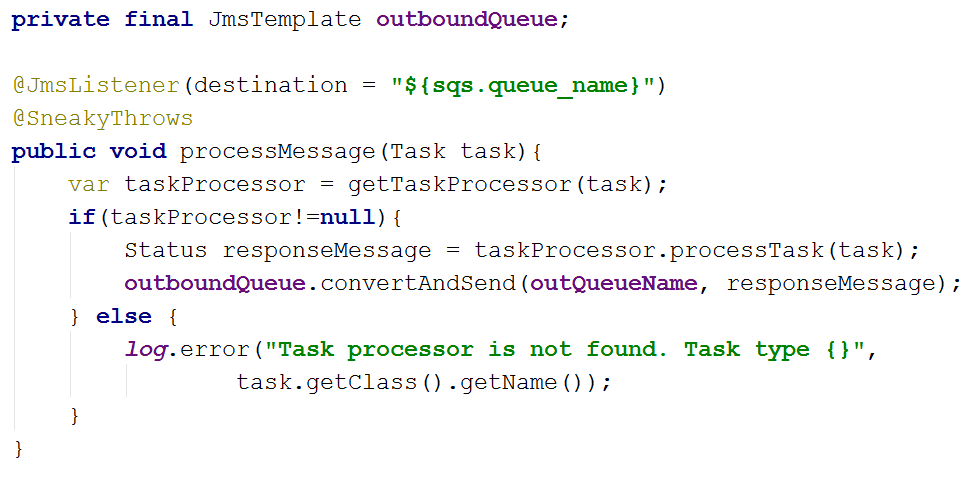

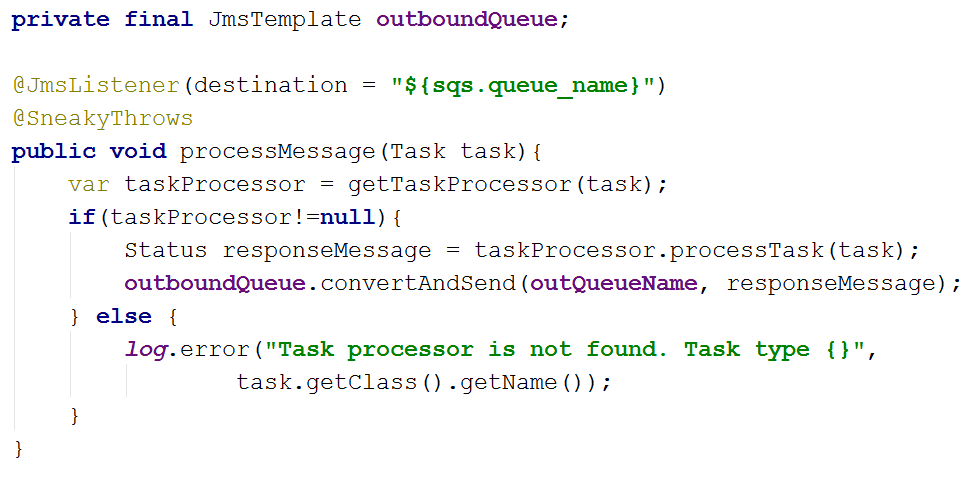

In the project I went a different way: the code depends on the spring-jms abstractions JmsTemplate and JmsListener, and the project dependencies include the JMS driver for SQS, com.amazonaws:amazon-sqs-java-messaging-lib. That covers the main application code.

In the tests we plug in artemis-jms-server as an embedded JMS server, and in the test Spring context we use the Artemis connection factory instead of the SQS connection factory. Artemis is the next generation of Apache ActiveMQ, a full-fledged, modern message-oriented middleware and not just a test solution. Perhaps in the future we will use it outside of automated tests as well. Thus, by using the JMS abstraction together with Spring, we simplified the application code and made it easy to test. You only need to add the dependencies org.springframework.boot:spring-boot-starter-artemis and org.apache.activemq:artemis-jms-server.

In some tests PostgreSQL can be emulated by substituting H2Database. This works if the tests are not acceptance tests and do not use PostgreSQL-specific functions. H2 can emulate a subset of the PostgreSQL wire protocol, but without support for its data types and functions. In our project we use a Foreign Data Wrapper, so this method does not work for us.

You can also run a real PostgreSQL. The postgresql-embedded library downloads the real distribution, more precisely an archive with binaries for the platform you are running on, and unpacks it: on Linux to tmpfs in RAM, on Windows to %TEMP%. It then starts the PostgreSQL server process and applies the server and database settings. Because of the way the binary builds are distributed, PG 11 and newer versions do not work under Linux. For my own needs I wrote a wrapper library that can fetch the PostgreSQL binaries not only from an HTTP server but also from a Maven repository. This can be very useful when working in isolated networks and building on a CI server without Internet access. Another convenience of my wrapper is its CDI component annotations, which make it easy to use the component in a Spring context, for example. The server's implementation of the AutoCloseable interface appeared there earlier than in the original project. There is no need to remember to stop the server: it stops automatically when the Spring context is closed.

At startup you can create a database from scripts by adding the appropriate properties to the Spring context. We now create the database schema with flyway migration scripts, which run every time a database is created in the tests.

To check the data after the tests have run, we use the spring-test-dbunit library. In annotations on the test methods we specify which dataset exports the state of the database should be compared with. This removes the need to write code that works with dbunit; you only have to add the library's listener to the test class. You can also specify the order in which data is deleted from the tables after a test method completes, in case the database context is reused between tests. As of 2019 there is a more modern approach implemented in database-rider, which works with JUnit 5. You can see an example of its use here.

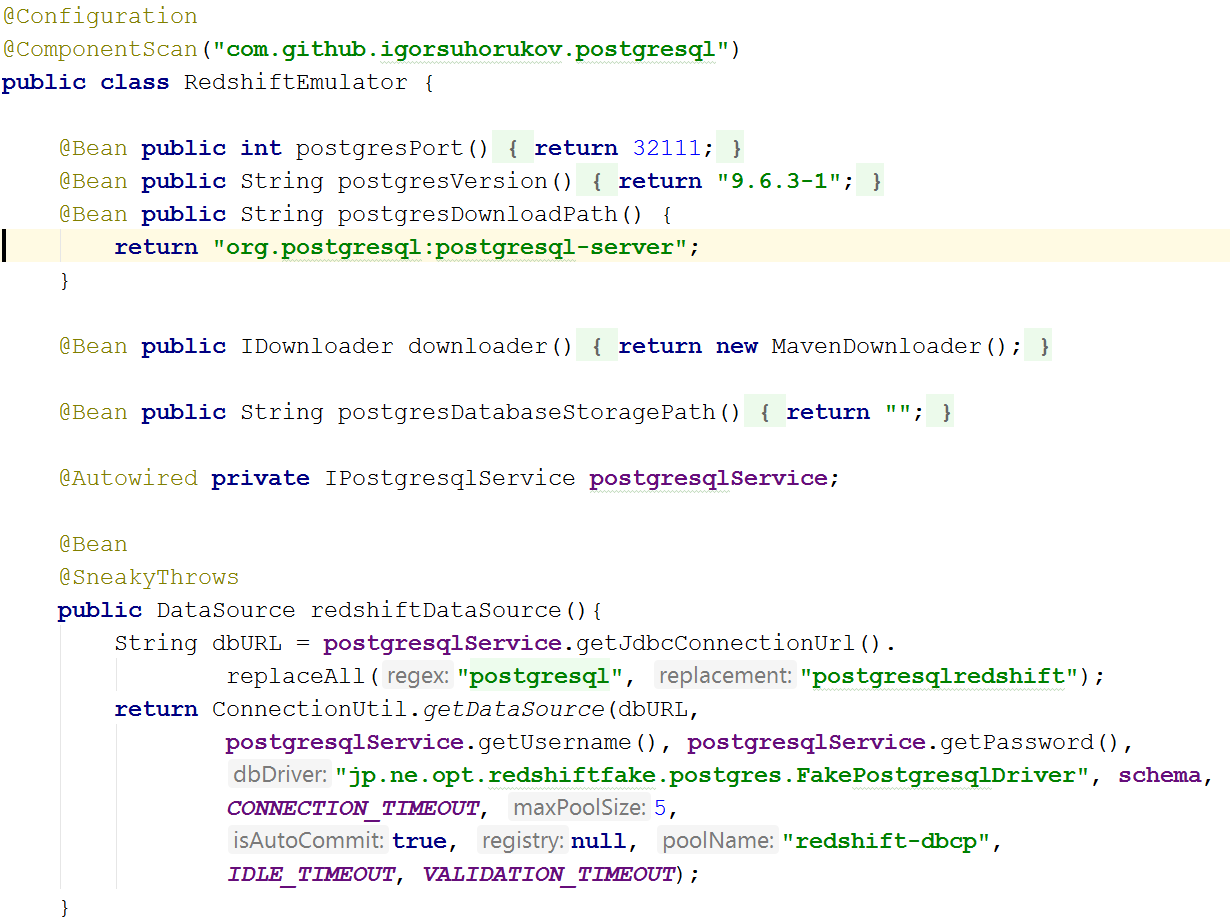

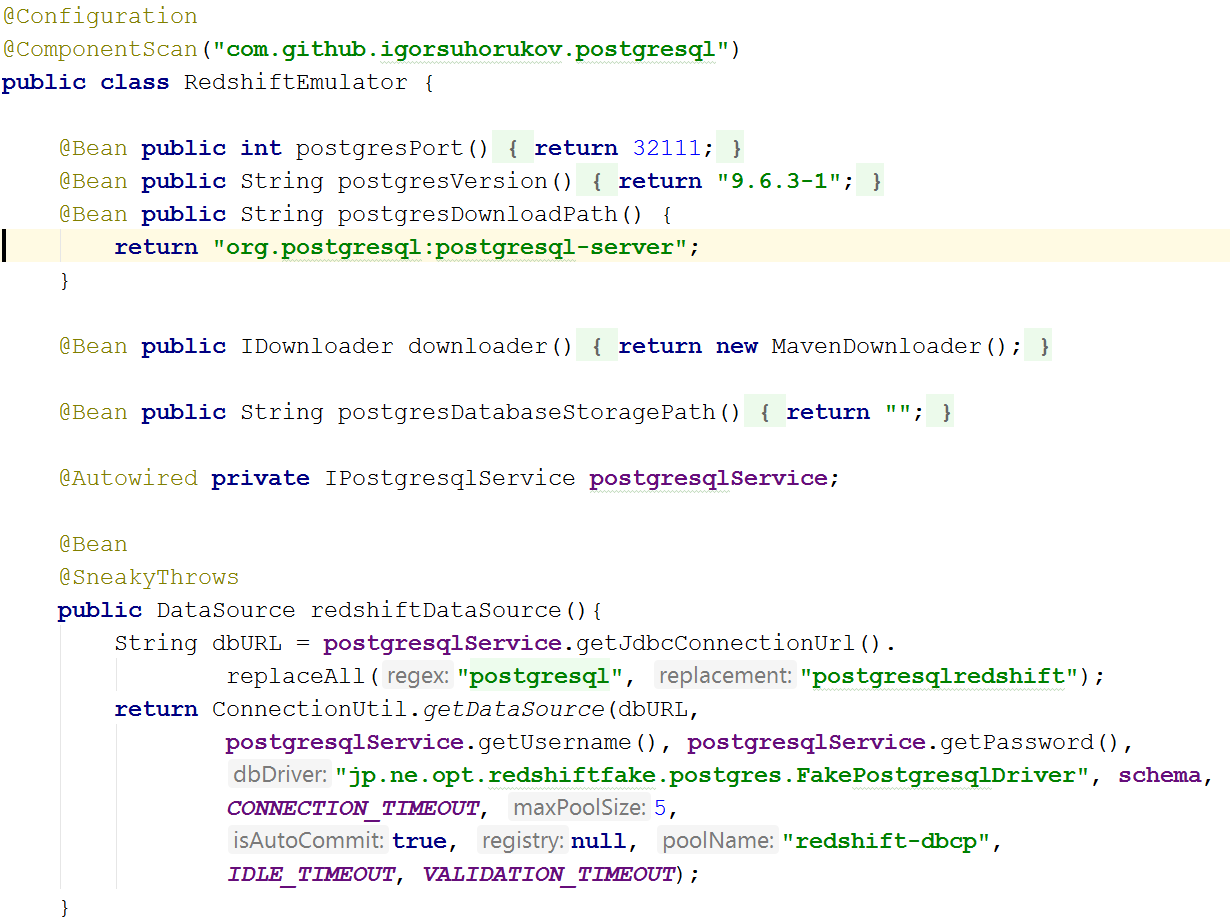

The hardest thing to emulate turned out to be Amazon Redshift. There is a project called redshift-fake-driver that focuses on emulating batch data loading into the analytical database from AWS. The emulator of the jdbc:postgresqlredshift protocol implements the COPY and UNLOAD commands; all other commands are delegated to the regular PostgreSQL JDBC driver.

As a result, an update operation that uses another table as the data source will not behave in tests the same way as in Redshift, because the syntax differs between Redshift and PostgreSQL 9+. We also noticed that the two databases interpret string quoting in SQL commands differently.

Because of the architecture of the real Redshift database, inserting, updating and deleting data is rather slow and expensive in terms of I/O operations. Data can be inserted with acceptable performance only in large batches, and the COPY command is exactly what lets you load data from S3. In the real database this command supports several data formats: AVRO, CSV, JSON, Parquet, ORC and TXT. The emulator project concentrates on CSV, TXT and JSON.
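To make the batch-loading step more concrete, here is a small sketch of a helper that assembles a COPY statement for a test fixture. The class and method names are hypothetical. The clauses follow Redshift's documented COPY syntax (FROM with an s3:// URI, CREDENTIALS, FORMAT AS), but whether the emulator accepts each particular option is an assumption you should check against its documentation.

```java
/** Hypothetical helper that builds a Redshift COPY statement for test fixtures. */
final class RedshiftCopySql {
    enum Format { CSV, JSON }

    private RedshiftCopySql() {
    }

    /**
     * Values are concatenated as-is, so this is only suitable for trusted,
     * hard-coded test values, never for user input.
     */
    static String copyFromS3(String table, String s3Uri,
                             String accessKey, String secretKey, Format format) {
        if (!s3Uri.startsWith("s3://")) {
            throw new IllegalArgumentException("Expected an s3:// URI but got: " + s3Uri);
        }
        // 'auto' asks Redshift to map JSON keys to column names.
        String formatClause = format == Format.JSON ? "FORMAT AS JSON 'auto'" : "FORMAT AS CSV";
        return "COPY " + table
                + " FROM '" + s3Uri + "'"
                + " CREDENTIALS 'aws_access_key_id=" + accessKey
                + ";aws_secret_access_key=" + secretKey + "'"
                + " " + formatClause;
    }
}
```

In a test the resulting string would be executed through a plain JDBC Statement after the input file has been uploaded to the emulated S3 bucket.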

So, to emulate Redshift in tests, you need to start a PostgreSQL database as described above and start the S3 emulation. When creating a connection to Postgres, you just add redshift-fake-driver to the classpath and specify the driver class jp.ne.opt.redshiftfake.postgres.FakePostgresqlDriver. After that you can use the same flyway to migrate the database schema and the already familiar dbunit to compare the data after the tests have run.

I wonder how many readers use AWS and Redshift. Share your experience in the comments.

Using only open source projects, the team was able to speed up development for the AWS environment, save money from the project budget, and keep working while the AWS subnets were blocked by Roskomnadzor.
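The connection step can be sketched with plain JDBC. The driver class name and the jdbc:postgresqlredshift scheme are the ones named in this article; the helper class, its method names and the example URL are hypothetical. Opening a connection requires redshift-fake-driver and the PostgreSQL JDBC driver on the test classpath and a running PostgreSQL instance.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;

/** Hypothetical helper for opening test connections through redshift-fake-driver. */
final class FakeRedshiftConnections {
    static final String DRIVER_CLASS = "jp.ne.opt.redshiftfake.postgres.FakePostgresqlDriver";
    private static final String POSTGRES_PREFIX = "jdbc:postgresql:";
    private static final String FAKE_REDSHIFT_PREFIX = "jdbc:postgresqlredshift:";

    private FakeRedshiftConnections() {
    }

    /** Rewrites a PostgreSQL JDBC URL so that the fake driver picks it up. */
    static String toFakeRedshiftUrl(String postgresUrl) {
        if (!postgresUrl.startsWith(POSTGRES_PREFIX)) {
            throw new IllegalArgumentException("Not a PostgreSQL JDBC URL: " + postgresUrl);
        }
        return FAKE_REDSHIFT_PREFIX + postgresUrl.substring(POSTGRES_PREFIX.length());
    }

    /** Loads the fake driver explicitly and connects to the embedded PostgreSQL through it. */
    static Connection open(String postgresUrl, String user, String password)
            throws SQLException, ClassNotFoundException {
        Class.forName(DRIVER_CLASS);
        return DriverManager.getConnection(toFakeRedshiftUrl(postgresUrl), user, password);
    }
}
```

For example, the URL jdbc:postgresql://localhost:5432/test of the embedded server becomes jdbc:postgresqlredshift://localhost:5432/test, and the rest of the test code keeps using ordinary JDBC.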

Source: https://habr.com/ru/post/444472/
