The book "Applied analysis of textual data in Python"

Technologies for analyzing textual information are changing rapidly under the influence of machine learning. Neural networks have moved from theoretical research into real life, and text analysis is being actively integrated into software products. Neural networks can solve the most complex natural language processing problems: machine translation, “conversations” with a bot in an online store, paraphrasing, question answering, and maintaining a dialogue no longer surprise anyone. Why, then, do Siri, Alexa, and Alice refuse to understand us, why does Google fail to find what we are looking for, and why do machine translators amuse us with examples of “translation difficulties” from Chinese into Albanian? The answer lies in the details: in algorithms that work correctly in theory but are hard to implement in practice. Learn how to apply machine learning techniques to text analysis in real-world problems using Python's features and libraries. Starting with model selection and data preprocessing, you will move on to text classification and clustering, then to visual interpretation and graph analysis, and, after getting acquainted with scaling techniques, learn how to use deep learning for text analysis.

What this book covers

This book discusses the use of machine learning methods for analyzing text with Python libraries. Because the book is applied in nature, we focus not on academic linguistics or statistical models but on effectively deploying models trained on text inside applications.

The text analysis model we propose is directly tied to the machine learning workflow: a search for a model, made up of features, an algorithm, and hyperparameters, that gives the best results on training data so that it can then be used to evaluate unseen data. The process begins with building a training data set, which in text analysis is called a corpus. We then explore feature extraction and preprocessing methods for representing text as numerical data that machine learning methods can work with. Next, once the basics are in place, we turn to methods for classifying and clustering text, which conclude the first chapters of the book.
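To make the step from a corpus to numbers concrete, here is a minimal bag-of-words sketch in plain Python: each document becomes a vector of term counts over a shared vocabulary. The whitespace tokenizer and the `tokenize`/`vectorize` names are illustrative assumptions, not the book's code; in practice a library vectorizer such as Scikit-Learn's `CountVectorizer` would normally do this job.

```python
import string
from collections import Counter


def tokenize(text):
    """Naive tokenizer (assumption): split on whitespace, strip punctuation, lowercase."""
    tokens = []
    for raw in text.split():
        token = raw.strip(string.punctuation).lower()
        if token:
            tokens.append(token)
    return tokens


def vectorize(corpus):
    """Map each document to a vector of term counts over a shared vocabulary."""
    tokenized = [tokenize(doc) for doc in corpus]
    vocabulary = sorted({token for doc in tokenized for token in doc})
    vectors = []
    for doc in tokenized:
        counts = Counter(doc)
        # Counter returns 0 for terms that do not occur in this document
        vectors.append([counts[term] for term in vocabulary])
    return vocabulary, vectors


vocabulary, vectors = vectorize(["The cat sat.", "The dog sat on the cat."])
print(vocabulary)  # ['cat', 'dog', 'on', 'sat', 'the']
print(vectors)     # [[1, 0, 0, 1, 1], [1, 1, 1, 1, 2]]
```

Each column corresponds to one vocabulary term, so documents of different lengths end up as vectors of the same size, which is what most machine learning algorithms expect.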


The following chapters focus on extending models with richer feature sets and building text analysis applications. First we look at how to represent context as features, then move on to visual interpretation as a way to steer model selection. Next we see how to analyze the complex relationships extracted from text using graph analysis techniques. After that, we turn to dialogue agents and deepen our understanding of the syntactic and semantic analysis of text. Finally, the book offers a practical discussion of methods for scaling text analysis on multiprocessor systems using Spark, and closes with the next stage of text analysis: deep learning.

Who is this book for?

This book is written for Python programmers interested in using natural language processing and machine learning techniques in their software products. We do not assume that readers have specialized academic or mathematical knowledge, and we focus on tools and techniques rather than lengthy explanations. The book deals primarily with English text, so readers will benefit from at least a basic knowledge of grammatical categories such as nouns, verbs, adverbs, and adjectives, and how they relate to one another. Readers with no experience in machine learning or linguistics but with Python programming skills will not feel lost in the concepts we present.

Excerpt: Extracting graphs from text

Extracting a graph from text is a difficult task. The solution usually depends on the subject area, and, generally speaking, finding structured elements in unstructured or semi-structured data is driven by context-specific analytical questions.

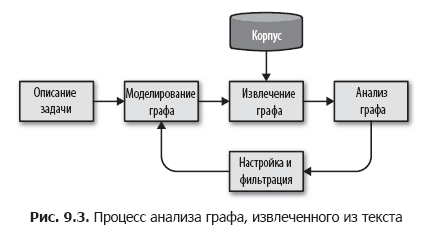

We propose breaking this task into smaller steps by organizing a simple graph analysis workflow, as shown in Fig. 9.3.

In this workflow, we first define the entities and the relationships between them, based on the problem description. Then, based on this schema, we determine how to extract a graph from the corpus, using metadata, the documents in the corpus, and the phrases or tokens in those documents to extract the data and the links between them. Graph extraction is an iterative process that can be applied to the corpus to generate a graph and save it to disk or memory for further analytical processing.
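A minimal sketch of one pass of this extraction loop, assuming each document has already been reduced to a list of entity strings and that co-occurrence in the same document counts as a link. The function names and the JSON edge-list file format are hypothetical choices made for illustration, not the book's implementation.

```python
import json
import os
import tempfile
from itertools import combinations


def extract_graph(corpus):
    """Build an undirected co-occurrence graph from a corpus.

    Each document is assumed to be a list of already-extracted entity strings;
    two entities are linked if they appear in the same document.
    """
    nodes, edges = set(), set()
    for entities in corpus:
        unique = sorted(set(entities))
        nodes.update(unique)
        # combinations() over a sorted list yields each unordered pair once
        edges.update(combinations(unique, 2))
    return {"nodes": sorted(nodes), "edges": sorted(edges)}


def save_graph(graph, path):
    """Persist the graph as JSON so a later analysis stage can reload it."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(graph, f)


def load_graph(path):
    with open(path, encoding="utf-8") as f:
        graph = json.load(f)
    # JSON has no tuple type, so restore edges as tuples
    graph["edges"] = [tuple(edge) for edge in graph["edges"]]
    return graph


corpus = [
    ["Ada Lovelace", "London"],
    ["London", "Charles Babbage", "Ada Lovelace"],
]
graph = extract_graph(corpus)
path = os.path.join(tempfile.mkdtemp(), "graph.json")
save_graph(graph, path)
```

Keeping extraction and storage separate means the schema can be refined and the loop rerun without touching whatever analysis later reads the saved file.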

At the analysis stage, computations are performed on the extracted graph, such as clustering, structural analysis, filtering, or evaluation, and a new graph is produced for use in applications. Based on the results of the analysis stage, we can return to the beginning of the cycle, refine the methodology and the schema, and extract or collapse groups of nodes or edges to try to achieve more accurate results.
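As a hedged illustration of the analysis stage, the sketch below takes a weighted edge map (an assumed representation, with made-up node names and weights), produces a new filtered graph, and then clusters nodes by connected components, one of the simplest structural analyses. The helper names are hypothetical.

```python
from collections import defaultdict


def filter_edges(weighted_edges, min_weight):
    """Return a new graph that keeps only edges with weight >= min_weight."""
    return {edge: weight for edge, weight in weighted_edges.items() if weight >= min_weight}


def connected_components(weighted_edges):
    """Group nodes into connected components, a very simple form of clustering.

    Nodes are only known through their edges here, so a node whose edges were
    all filtered out simply disappears from the result.
    """
    adjacency = defaultdict(set)
    for u, v in weighted_edges:
        adjacency[u].add(v)
        adjacency[v].add(u)

    seen = set()
    components = []
    for start in sorted(adjacency):
        if start in seen:
            continue
        # Iterative depth-first search from an unvisited node
        stack = [start]
        component = set()
        while stack:
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(adjacency[node] - component)
        seen |= component
        components.append(sorted(component))
    return components


# Made-up co-occurrence weights between entities
edges = {("A", "B"): 5, ("B", "C"): 1, ("C", "D"): 4, ("E", "F"): 3}
strong = filter_edges(edges, min_weight=2)
print(connected_components(edges))   # [['A', 'B', 'C', 'D'], ['E', 'F']]
print(connected_components(strong))  # [['A', 'B'], ['C', 'D'], ['E', 'F']]
```

Dropping the weak B-C link splits one cluster into two, which is the kind of result that might send us back to refine the schema or the extraction step.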

Creating a social graph

Consider our corpus of news articles and the task of modeling the relationships between different entities in the text. If we are interested in how coverage differs between news agencies, we could build a graph whose elements represent publication names, author names, and information sources. And if the goal is to aggregate references to a single entity across multiple articles, our networks might capture forms of address (honorifics and the like) in addition to demographic details. The entities we are interested in may reside in the structure of the documents themselves or appear directly in the text.

Suppose our goal is to find people, places, and anything else that are related to one another in our documents. In other words, we need to build a social network by performing a series of transformations, as shown in Fig. 9.4. We begin building the graph by applying the EntityExtractor class created in Chapter 7. Then we add transformers: one finds pairs of related entities, and the other converts these pairs into a graph.
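The sequence of transformations can be sketched without any libraries. All names below are hypothetical: `extract_entities` is a deliberately naive stand-in for the book's `EntityExtractor` (it just groups runs of capitalized words, so it would also pick up capitalized sentence-initial words), `entity_pairs` mirrors the idea of the `EntityPairs` transformer, and `build_graph` plays the role of the second transformer that turns pairs into a graph. In the book these stages are Scikit-Learn transformers in a `Pipeline`; here plain functions are chained with `functools.reduce`.

```python
import itertools
import string
from functools import reduce


def extract_entities(documents):
    """Hypothetical, naive stand-in for EntityExtractor.

    Treats each run of capitalized words as one entity. A real extractor
    would use a named entity recognizer instead.
    """
    result = []
    for doc in documents:
        entities, current = [], []
        for token in doc.split():
            word = token.strip(string.punctuation)
            capitalized = word[:1].isupper()
            if capitalized:
                current.append(word)
            # A lowercase word or trailing punctuation ends the current run
            if current and (not capitalized or token[-1] in string.punctuation):
                entities.append(" ".join(current))
                current = []
        if current:
            entities.append(" ".join(current))
        result.append(entities)
    return result


def entity_pairs(documents):
    """Same idea as EntityPairs.transform: all ordered pairs per document."""
    return [list(itertools.permutations(set(doc), 2)) for doc in documents]


def build_graph(documents):
    """Merge every document's pairs into one adjacency map {node: neighbors}."""
    graph = {}
    for pairs in documents:
        for source, target in pairs:
            graph.setdefault(source, set()).add(target)
    return graph


def run_pipeline(stages, documents):
    # Feed the output of each stage into the next one
    return reduce(lambda data, stage: stage(data), stages, documents)


docs = [
    "Ada Lovelace met Charles Babbage in London.",
    "Charles Babbage lectured in Turin.",
]
graph = run_pipeline([extract_entities, entity_pairs, build_graph], docs)
```

Because each stage only consumes the previous stage's output, any one of them (for example, the naive extractor) can be swapped out without changing the others.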

Search for entity pairs

Our next step is to create the EntityPairs class, which takes documents represented as lists of entities (produced by the EntityExtractor class from Chapter 7). This class should act as a transformer in a Scikit-Learn Pipeline, which means it must inherit from the BaseEstimator and TransformerMixin classes, as described in Chapter 4. We assume that entities in the same document are unconditionally related, so we add a pairs method that uses the itertools.permutations function to create all possible pairs of entities in a single document. Our transform method calls pairs for each document in the corpus:

```python
import itertools

from sklearn.base import BaseEstimator, TransformerMixin


class EntityPairs(BaseEstimator, TransformerMixin):
    """Turn each document's list of entities into a list of entity pairs."""

    def __init__(self):
        super(EntityPairs, self).__init__()

    def pairs(self, document):
        # All ordered pairs of distinct entities in the document;
        # set() drops duplicate mentions before pairing.
        return list(itertools.permutations(set(document), 2))

    def fit(self, documents, labels=None):
        # Nothing to learn: this transformer is stateless.
        return self

    def transform(self, documents):
        # One list of pairs per document in the corpus.
        return [self.pairs(document) for document in documents]
```

Now we can sequentially extract entities from documents and pair them up. However, we cannot yet distinguish pairs of entities that occur frequently from pairs that occur only once. We need some way to encode the weight of the relationship between the entities in each pair, which we will do in the next section.
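One hedged way to preview the weighting problem is to count, with `collections.Counter`, how many documents each pair appears in. This sketch uses `itertools.combinations` on sorted, deduplicated entities so that each unordered pair is counted once per document (unlike `permutations`, which yields both orderings). `pair_weights` is a hypothetical helper, not part of the book's code, and the entity lists are made up.

```python
import itertools
from collections import Counter


def pair_weights(documents):
    """Count how many documents each unordered entity pair co-occurs in.

    `documents` is a list of entity lists. Sorting the deduplicated entities
    means (a, b) and (b, a) share one key, and set() counts each pair at most
    once per document.
    """
    weights = Counter()
    for entities in documents:
        weights.update(itertools.combinations(sorted(set(entities)), 2))
    return weights


documents = [
    ["Ada", "London"],
    ["London", "Ada"],
    ["Ada", "London", "Turin"],
]
weights = pair_weights(documents)
print(weights.most_common(1))  # [(('Ada', 'London'), 3)]
```

The resulting counts are a natural candidate for an edge weight once the graph can store properties on its edges.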

Property Graphs

The mathematical model of a graph defines only sets of nodes and edges and can be represented as an adjacency matrix, which supports a wide variety of calculations. But it provides no way to model the strength or the type of a relationship. Do two entities appear together in just one document or in many? Do they occur together in articles of a particular genre? To support this kind of reasoning, we need some way to store meaningful properties on the nodes and edges of the graph.
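To see the limitation concretely, here is a small plain-Python sketch (node names made up, helper name hypothetical) that turns a set of nodes and edges into an unweighted adjacency matrix and runs one simple calculation on it: node degree as a row sum. In this form each cell holds only 0 or 1, so the matrix cannot tell whether two entities co-occur in one document or in hundreds, nor what kind of relationship links them.

```python
def adjacency_matrix(nodes, edges):
    """Build a 0/1 adjacency matrix for an undirected, unweighted graph.

    Each cell only records whether an edge exists: it says nothing about how
    strong the relationship is or what kind of relationship it is.
    """
    index = {node: i for i, node in enumerate(nodes)}
    matrix = [[0] * len(nodes) for _ in nodes]
    for u, v in edges:
        matrix[index[u]][index[v]] = 1
        matrix[index[v]][index[u]] = 1
    return matrix


nodes = ["Ada", "London", "Turin"]
edges = {("Ada", "London"), ("Ada", "Turin")}
matrix = adjacency_matrix(nodes, edges)
# A typical matrix calculation: node degree is the row sum
degrees = {node: sum(row) for node, row in zip(nodes, matrix)}
print(matrix)   # [[0, 1, 1], [1, 0, 0], [1, 0, 0]]
print(degrees)  # {'Ada': 2, 'London': 1, 'Turin': 1}
```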

The property graph model lets you embed more information in the graph, expanding what we can do with it. In a property graph, nodes are objects with incoming and outgoing edges that typically contain a type field, much like a table in a relational database. Edges are objects that define a start node and an end node; they typically contain a label field that identifies the type of connection and a weight field that defines the strength of the connection. When using graphs for text analysis, we often use nouns as nodes and verbs as edges. Once we move on to the modeling stage, this lets us describe node types, edge labels, and the intended structure of the graph.
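A minimal property-graph sketch in plain Python, assuming a simple in-memory design; the class and method names are hypothetical and do not come from any particular graph library. Nodes carry a `type` field plus arbitrary properties, and edges carry a start node, an end node, a `label`, and a `weight`. Following the noun/verb convention, the example uses nouns as nodes and a verb as the edge label.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    type: str  # node type, e.g. "person" or "place" (like a table in an RDBMS)
    properties: dict = field(default_factory=dict)


@dataclass
class Edge:
    source: str  # start node
    target: str  # end node
    label: str  # type of connection, e.g. a verb
    weight: float = 1.0  # strength of the connection


@dataclass
class PropertyGraph:
    nodes: dict = field(default_factory=dict)
    edges: list = field(default_factory=list)

    def add_node(self, name, node_type, **properties):
        self.nodes[name] = Node(name, node_type, properties)

    def add_edge(self, source, target, label, weight=1.0):
        # Both endpoints must already exist as nodes
        if source not in self.nodes or target not in self.nodes:
            raise KeyError("add both nodes before linking them")
        self.edges.append(Edge(source, target, label, weight))

    def out_edges(self, name):
        return [edge for edge in self.edges if edge.source == name]

    def in_edges(self, name):
        return [edge for edge in self.edges if edge.target == name]


graph = PropertyGraph()
graph.add_node("Ada Lovelace", "person", born=1815)
graph.add_node("Analytical Engine", "machine")
graph.add_edge("Ada Lovelace", "Analytical Engine", label="described", weight=2.0)
```

Graph libraries such as NetworkX let you attach similar attributes directly to nodes and edges; this hand-rolled version only shows the shape of the data.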

About the authors

Benjamin Bengfort is a data scientist who lives in Washington, D.C., inside the Beltway, but ignores politics entirely (a common thing in the District of Columbia) and prefers to focus on technology. He is currently working on his doctoral dissertation at the University of Maryland, where he studies machine learning and distributed computing. There are robots in his lab (although this is not his favorite area), and, to his great chagrin, his assistants keep equipping these robots with knives and tools, presumably in hopes of winning a culinary competition. Having watched a robot try to chop a tomato, Benjamin prefers to take charge of the kitchen himself, where he cooks French and Hawaiian dishes, as well as kebabs and barbecue of all kinds. A programmer by training and a data scientist by vocation, Benjamin often writes articles on a wide range of topics, from natural language processing to data science in Python and using Hadoop and Spark for analytics.

Dr. Rebecca Bilbro is a data scientist, Python programmer, teacher, lecturer, and author who lives in Washington, D.C. She specializes in visual assessment of machine learning results, from feature analysis to model selection and hyperparameter tuning. She conducts research in natural language processing, building semantic networks, entity resolution, and processing high-dimensional information. As an active member of the open source community of users and developers, Rebecca enjoys collaborating with other developers on projects such as Yellowbrick (a Python package that aims to take predictive modeling out of the black box). In her spare time, she often rides bikes with her family or practices the ukulele. She received her doctorate from the University of Illinois at Urbana-Champaign, where she studied practical communication and visualization techniques in engineering.

More information about the book can be found on the publisher's website.


Habr readers get a 20% discount with the coupon code Python.

When you pay for the paper version of the book, an electronic version is sent to your e-mail.

PS: 7% of the book's price goes toward translating new computer books; the list of books submitted to the printer is here.

Source: https://habr.com/ru/post/444384/
