An extended reply to a comment, plus a little about the life of ISPs in the Russian Federation

This post was prompted by this comment.

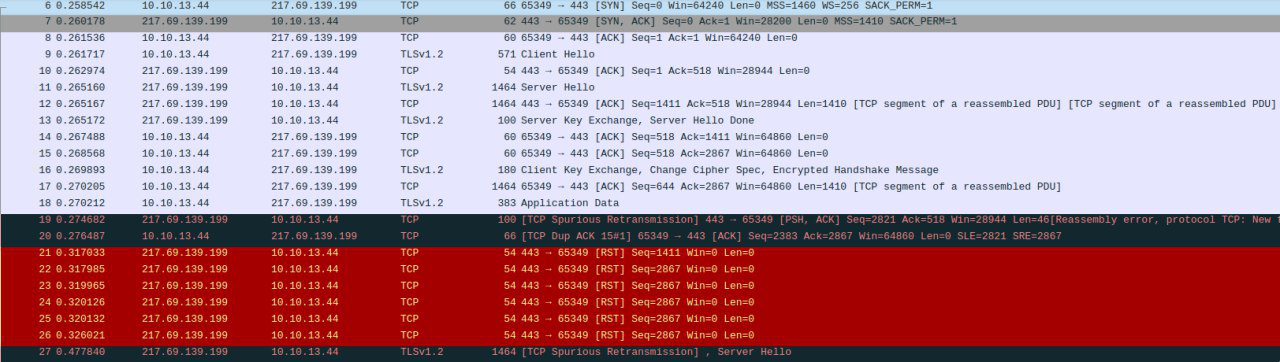

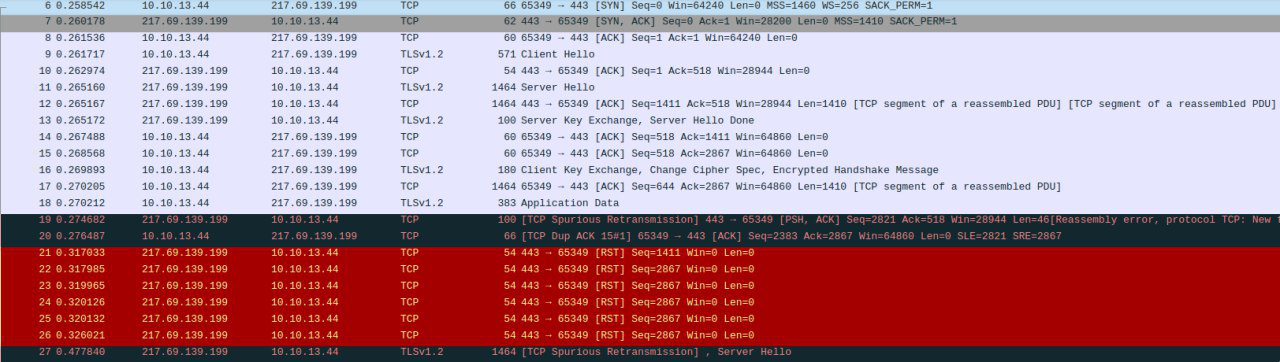

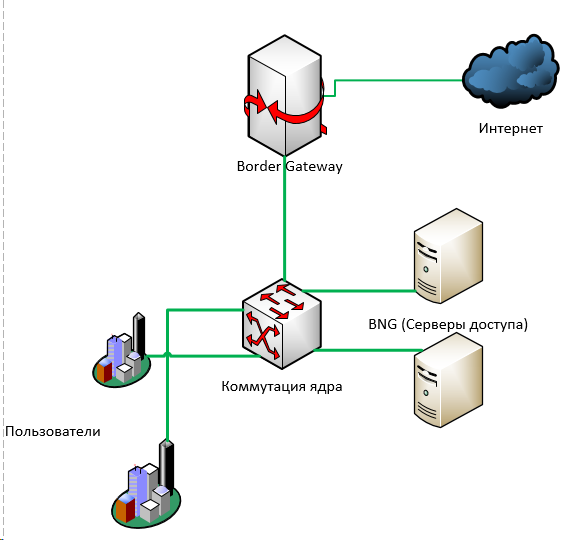

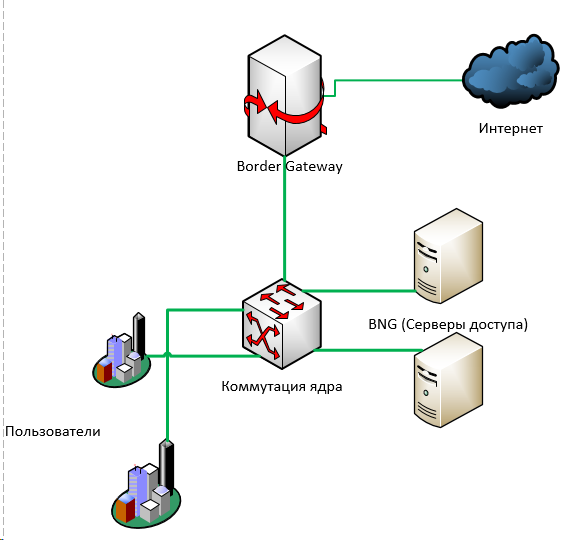

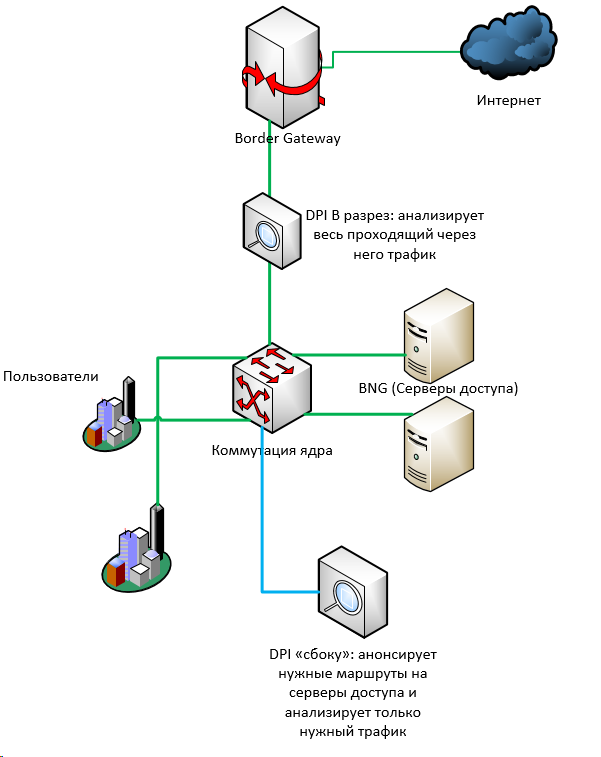

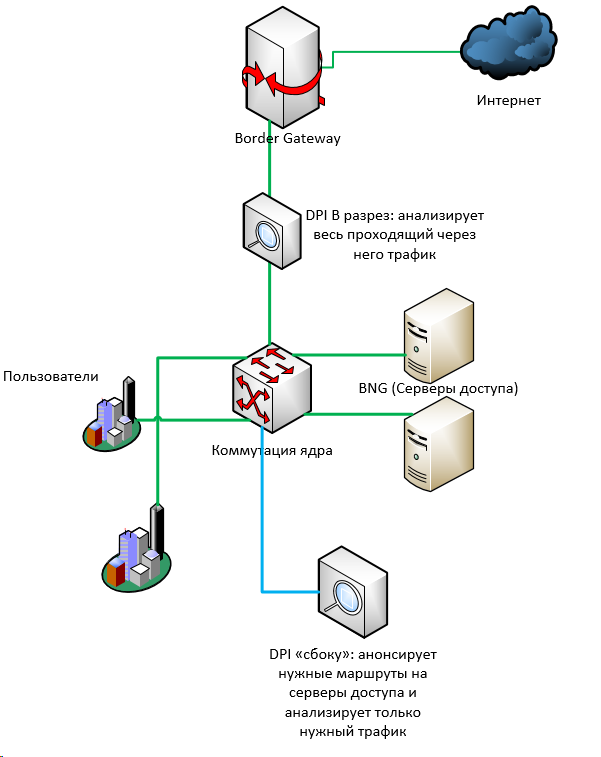

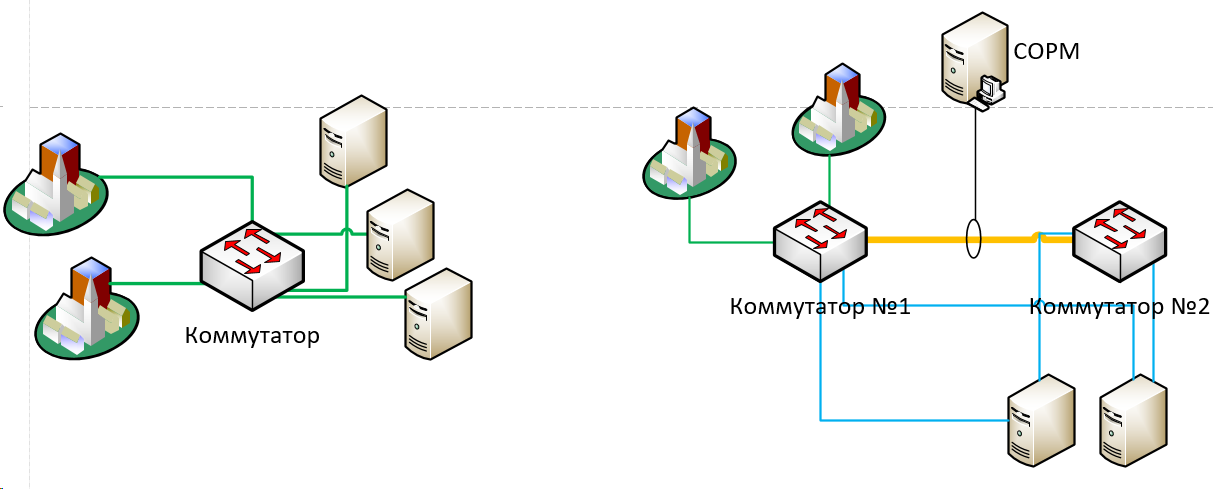

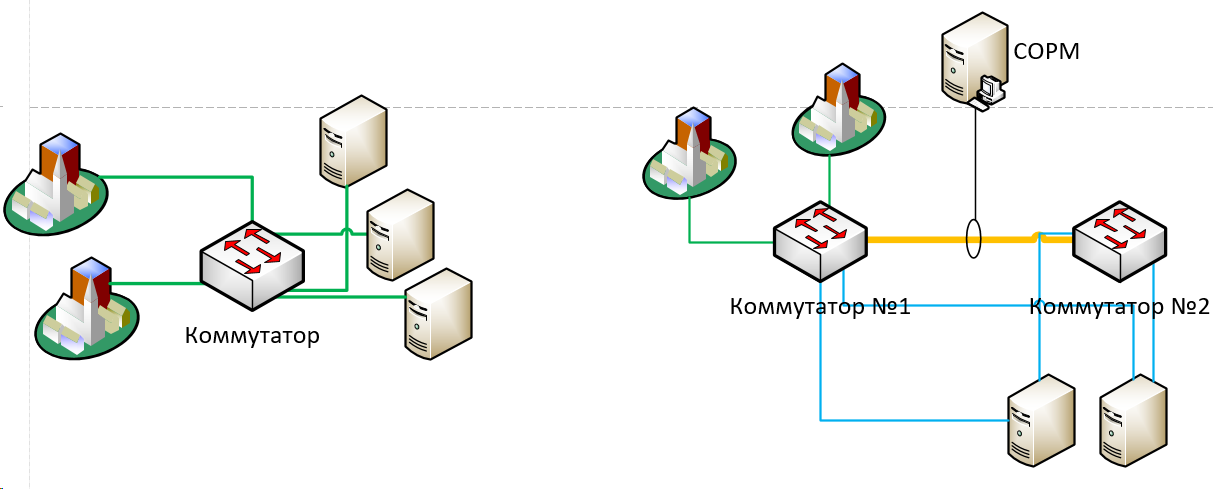

I quote it here:

kaleman, today at 18:53:

"My provider 'delighted' me today. Along with an update to its site-blocking system, mail.ru got blocked too. I have been pestering tech support since morning; they cannot do anything. The provider is small, and apparently the upstream providers are doing the blocking. I also noticed that all sites open more slowly; maybe some crooked DLP was installed? There were no access problems before. The destruction of the Runet is happening right before my eyes ..."

The thing is, it seems we are that very provider :(

Indeed, kaleman almost guessed the reason for the problems with mail.ru (although for a long time we refused to believe in this).

The rest of the post is divided into two parts:

- the causes of our current problems with mail.ru and the exciting quest to find them

- the existence of an ISP in today's realities, and the stability of a sovereign Runet.

Problems with the availability of mail.ru

Oh, that's a pretty long story.

The thing is that, to comply with state requirements (more on that in the second part), we purchased, configured, and installed some equipment, both for filtering prohibited resources and for performing NAT for subscribers.

Some time ago we finally rebuilt the network core so that all subscriber traffic passes through this equipment strictly in the right direction.

A few days ago we turned on filtering of banned resources on it (while leaving the old system running); everything seemed to go well.

Then we gradually began enabling NAT on this equipment for different groups of subscribers. By all appearances, that went well too.

But today, after enabling NAT on the equipment for the next group of subscribers, we were hit from early morning by a fair number of complaints about mail.ru and other Mail Ru Group resources being unavailable or only partially available.

We started checking: something, somewhere, occasionally sends a TCP RST in response to requests going exclusively to mail.ru networks. Moreover, it is an incorrectly formed (no ACK), clearly artificial TCP RST. It looked like this:

Naturally, our first thoughts were about the new equipment: a scary DPI box that we do not trust yet, and who knows what it might do. After all, TCP RST is a fairly common blocking technique.
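The symptom described above (RST set, ACK not set) can be checked mechanically on captured packets. Below is a minimal sketch, not the tooling used in this investigation: it assumes you already have the raw TCP header bytes of each segment, and `make_header` is a hypothetical helper that exists only to produce demonstration input.

```python
import struct

# TCP flag bits as laid out in the TCP header.
FIN, SYN, RST, PSH, ACK, URG = 0x01, 0x02, 0x04, 0x08, 0x10, 0x20


def tcp_flags(tcp_header: bytes) -> int:
    """Return the flag byte from a raw TCP header (needs at least 14 bytes)."""
    if len(tcp_header) < 14:
        raise ValueError("TCP header too short")
    # Byte 13 carries the flag bits.
    return tcp_header[13]


def is_bare_rst(tcp_header: bytes) -> bool:
    """True for a segment that has RST set and ACK clear."""
    flags = tcp_flags(tcp_header)
    return bool(flags & RST) and not (flags & ACK)


def make_header(flags: int) -> bytes:
    """Hypothetical helper: build a minimal 20-byte TCP header for the demo."""
    # Fields: src port, dst port, seq, ack, data offset (5 words), flags,
    # window, checksum, urgent pointer. Values are arbitrary.
    return struct.pack("!HHIIBBHHH", 40000, 443, 1000, 0, 5 << 4, flags, 0, 0, 0)


if __name__ == "__main__":
    print(is_bare_rst(make_header(RST)))        # RST without ACK
    print(is_bare_rst(make_header(RST | ACK)))  # RST with ACK
```

The check only classifies segments; deciding which device on the path generated them still needs captures taken at several points.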

We also considered kaleman's suggestion that someone upstream was doing the filtering, but immediately rejected it.

First, our uplinks are sane enough that we should not have to suffer like this :)

Second, we are connected to several IXes in Moscow, and traffic to mail.ru goes precisely through them, and they have neither an obligation nor any other motive to filter traffic.

The next half of the day went into what is commonly called shamanism, together with the equipment vendor, to whom we are grateful for not giving up :)

- filtering was completely disabled

- NAT under the new scheme was disabled

- the test PC was moved to a separate isolated pool

- the IP addressing was changed

In the second half of the day we set up a virtual machine that went online just like a regular subscriber, and gave the vendor's representatives access to it and to the equipment. The shamanism continued :)

In the end, the vendor's representative confidently stated that the hardware had absolutely nothing to do with it: the RSTs were coming from somewhere upstream.

Note

At this point someone may say: wouldn't it have been much easier to take the dump not from the test PC, but from the trunk above the DPI?

No, unfortunately: capturing (or even just mirroring) 40+ Gbps is not at all trivial.
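To put the 40 Gbps figure in perspective, here is a rough back-of-the-envelope calculation. It is a simplification: it assumes the link is fully loaded and ignores per-packet capture overhead, so treat the numbers as an order of magnitude rather than a measurement.

```python
def capture_volume(link_gbps: float, seconds: float) -> float:
    """Bytes needed to store everything a fully loaded link carries."""
    bits_per_second = link_gbps * 1e9
    return bits_per_second / 8 * seconds


if __name__ == "__main__":
    per_second = capture_volume(40, 1)    # bytes written every second
    per_hour = capture_volume(40, 3600)   # bytes written every hour
    print(f"{per_second / 1e9:.0f} GB/s, {per_hour / 1e12:.0f} TB/hour")
```

That is about 5 GB of writes every second, or 18 TB for a single hour, before any filtering of the capture.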

After that, in the evening, there was nothing left but to return to the assumption of strange filtering somewhere upstream.

I looked up which IX the traffic to the MRG (Mail Ru Group) networks was currently going through and simply shut down the BGP sessions to it. And, lo and behold, everything returned to normal :(

On the one hand, it is a great pity that the whole day went into hunting for a problem that was then solved in five minutes.

On the other hand:

- in my memory this is unprecedented. As I wrote above, IXes really have no reason to filter transit traffic. They usually carry hundreds of gigabits or terabits per second. Until the very last moment I simply could not seriously consider it.

- an incredibly "fortunate" coincidence: new, complex hardware that we do not yet trust much and from which we do not know what to expect, designed precisely for blocking resources, including by TCP RST.

The NOC of this Internet exchange is currently investigating the problem. According to them (and I believe them), they have no specially deployed filtering system. But, thank heavens, the rest of the quest is no longer our problem :)

This was a small attempt to justify ourselves; please understand and forgive :)

P.S. I am deliberately not naming either the DPI/NAT manufacturer or the IX (in fact, I have no particular complaints against them; the main thing is to understand what it was).

Today's (as well as yesterday's and the day before yesterday's) reality from an Internet provider's point of view

I have spent the last few weeks substantially rebuilding the network core, performing a bunch of manipulations "on the live network", at the risk of significantly affecting live user traffic. Given the goals, results, and consequences of all this, it is morally quite hard. Especially when listening once again to high-minded speeches about protecting the stability of the Runet, sovereignty, and so on.

In this section I will try to describe the "evolution" of the core network of a typical Internet provider over the past ten years.

A dozen years ago.

In those blessed times, the core of a provider's network could be as simple and reliable as a cork:

This very, very simplified picture shows no trunks, rings, or IP/MPLS routing.

Its essence is that user traffic ultimately arrived at the core-level switching, from where it went to the BNG, from which, as a rule, it went back to the core switching and then "out" to the Internet through one or more border gateways.

Such a scheme is very, very easy to make redundant at both L3 (dynamic routing) and L2 (MPLS).

You can install N+1 of anything (access servers, switches, border routers) and back them up one way or another for automatic failover.

A few years later it became clear to everyone in Russia that things could not go on like this: children urgently needed protecting from the corrupting influence of the network.

There was an urgent need to find ways to filter user traffic.

There are different approaches.

In the not-so-good case, something is placed inline ("in the cut") between user traffic and the Internet. Traffic passing through this "something" is analyzed and, for example, a fake packet with a redirect is sent toward the subscriber.

In the better case, if traffic volumes allow, you can pull a small trick: send to the filter only the traffic going from users to the addresses that need filtering (for that you can either take the IP addresses listed in the registry, or additionally resolve the domains listed there).
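The selective approach above boils down to building a set of destination addresses and steering only those toward the filter. The following is a minimal sketch under stated assumptions: the registry is represented as two plain lists (its real format is not shown here), all function names are hypothetical, and the output is just a list of /32 prefixes that some routing mechanism would then have to announce.

```python
import ipaddress
import socket
from typing import Callable, Iterable, List, Set


def resolve_ipv4(domain: str) -> List[str]:
    """Resolve a domain to IPv4 addresses with the system resolver."""
    try:
        infos = socket.getaddrinfo(
            domain, None, family=socket.AF_INET, type=socket.SOCK_STREAM
        )
    except socket.gaierror:
        return []  # unresolvable domains contribute no addresses
    return sorted({info[4][0] for info in infos})


def build_divert_set(
    registry_ips: Iterable[str],
    registry_domains: Iterable[str],
    resolve: Callable[[str], List[str]] = resolve_ipv4,
) -> Set[ipaddress.IPv4Address]:
    """Union of addresses listed directly and addresses the domains resolve to."""
    targets = {ipaddress.IPv4Address(ip) for ip in registry_ips}
    for domain in registry_domains:
        for ip in resolve(domain):
            targets.add(ipaddress.IPv4Address(ip))
    return targets


def host_routes(targets: Iterable[ipaddress.IPv4Address]) -> List[str]:
    """Render each target as a /32 prefix to be routed toward the filter."""
    return [f"{ip}/32" for ip in sorted(targets)]


if __name__ == "__main__":
    # Documentation addresses and a fake resolver keep the demo offline.
    fake_dns = {"blocked.example": ["192.0.2.10", "192.0.2.11"]}
    targets = build_divert_set(
        ["198.51.100.7"], ["blocked.example"],
        resolve=lambda d: fake_dns.get(d, []),
    )
    print(host_routes(targets))
```

Note that every resolved address becomes its own host route, which is exactly why the route count matters for this scheme.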

At one time I wrote a simple mini-DPI for this purpose, although I can hardly bring myself to call it that. It is very simple and not very fast, but it allowed us and dozens (if not hundreds) of other providers to avoid spending millions on industrial DPI systems right away, and bought several extra years.

By the way, about DPI then and now

By the way, many of those who bought the DPI systems available on the market at that time have already thrown them out. They simply were not designed for anything like this: hundreds of thousands of addresses, tens of thousands of URLs.

At the same time, domestic vendors have grown very strongly in this market. I am not talking about the hardware component (everything is clear to everyone there), but the software, which is the main thing in a DPI, is today perhaps, if not the most advanced in the world, then certainly a) developing by leaps and bounds and b) priced, as a boxed product, simply beyond comparison with foreign competitors.

I would like to be proud, but it is a little sad =)

Now everything looked like this:

After another couple of years everyone already had the auditors installed, and the registry kept growing. For some old equipment (for example, the Cisco 7600) the "side filtering" scheme simply became inapplicable: the number of routes on the 76xx platforms is limited to roughly nine hundred thousand, while IPv4 routes alone are now approaching 800 thousand. And if there is IPv6 as well... And how many are there now? 900,000 individual addresses in the RKN ban list? =)
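The arithmetic behind that remark can be spelled out. This sketch uses the approximate figures from the paragraph above and assumes, as a simplification, that full-table routes and filter host routes compete for the same table space; the function names are hypothetical.

```python
def fib_headroom(platform_limit: int, full_table_routes: int) -> int:
    """Routes still available after the full table is installed."""
    return platform_limit - full_table_routes


def fits(platform_limit: int, full_table_routes: int, filter_host_routes: int) -> bool:
    """Can the per-address filter routes be installed next to the full table?"""
    return filter_host_routes <= fib_headroom(platform_limit, full_table_routes)


if __name__ == "__main__":
    PLATFORM_LIMIT = 900_000    # approximate limit quoted above
    IPV4_FULL_TABLE = 800_000   # approximate IPv4 table size quoted above
    print(fib_headroom(PLATFORM_LIMIT, IPV4_FULL_TABLE))
    # The 900,000 figure is posed as a rhetorical question in the text.
    print(fits(PLATFORM_LIMIT, IPV4_FULL_TABLE, 900_000))
```

With roughly 100 thousand routes of headroom, a ban list of several hundred thousand individual addresses cannot be expressed as host routes on such a platform.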

Some switched to a scheme that mirrors all trunk traffic to a filtering server, which has to analyze the whole stream and, when it finds something bad, send a RST in both directions (to the sender and to the receiver).
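To illustrate what "a RST in both directions" means at the TCP level, here is a sketch that only computes the header fields of the two resets from one observed segment; it sends nothing. It assumes the observed segment is an ordinary data segment with ACK set and without SYN or FIN, and the `Segment` and `Reset` types are hypothetical, introduced just for this example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Segment:
    """The fields of an observed data segment that matter here."""
    src: str
    dst: str
    sport: int
    dport: int
    seq: int
    ack: int
    payload_len: int


@dataclass(frozen=True)
class Reset:
    """Addressing and sequence number of a reset segment."""
    src: str
    dst: str
    sport: int
    dport: int
    seq: int


def resets_for(seg: Segment) -> Tuple[Reset, Reset]:
    """Compute resets toward the receiver and toward the sender."""
    # Toward the receiver: looks like it comes from the sender, and uses the
    # sequence number the receiver expects next (32-bit wraparound applies).
    to_receiver = Reset(
        seg.src, seg.dst, seg.sport, seg.dport,
        (seg.seq + seg.payload_len) % 2**32,
    )
    # Toward the sender: looks like it comes from the receiver, and uses the
    # sequence number the sender has acknowledged so far.
    to_sender = Reset(seg.dst, seg.src, seg.dport, seg.sport, seg.ack)
    return to_receiver, to_sender


if __name__ == "__main__":
    seg = Segment("10.0.0.5", "203.0.113.9", 40000, 443,
                  seq=1000, ack=5000, payload_len=200)
    for rst in resets_for(seg):
        print(rst)
```

Both resets have to arrive while their sequence numbers are still acceptable to the endpoints, which is why processing delay matters so much for this scheme.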

However, the more traffic there is, the less applicable this scheme becomes. At the slightest processing delay the mirrored traffic simply flies past unnoticed, and the provider gets a violation report and a fine.

More and more providers are forced to install DPI systems of varying reliability inline on their trunks.

A year or two ago, according to rumors, nearly all FSB offices began demanding the actual installation of SORM equipment (previously most providers got by with agreeing a SORM plan with the authorities: a plan of operational measures in case something needed to be found somewhere).

Besides money (not exactly astronomical, but still millions), SORM required many providers to perform a lot more manipulations on the network:

- SORM must see users' "gray" addresses, before NAT translation

- SORM has a limited number of network interfaces.

Therefore we, in particular, had to seriously rebuild part of the core, just to gather the user traffic going to the access servers in one place, so that it could be mirrored to SORM over several links.

That is, very simplified, what it was (left) vs. what it became (right):

Now most providers are also required to deploy SORM-3, which includes, among other things, logging of NAT translations.

For these purposes we also had to add separate NAT equipment (the very same one mentioned in the first part) to the scheme above. And add it in a specific order: since SORM must "see" the traffic before the addresses are translated, the traffic must go strictly as follows: users -> switching, core -> access servers -> SORM -> NAT -> switching, core -> Internet. To do this we literally had to turn the traffic flows around in the other direction on the live network, which was also quite difficult.
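The ordering constraint above is easy to state as a check on a traffic path. This is a small sketch with hypothetical names; the node labels are taken from the sequence in the text, and a path is simply a list of those labels.

```python
from typing import List

# The path from the text, in the order traffic must traverse it.
REQUIRED_PATH: List[str] = [
    "users", "switching, core", "access servers",
    "SORM", "NAT", "switching, core", "Internet",
]


def sorm_sees_private_addresses(path: List[str]) -> bool:
    """True if the path passes SORM before NAT, i.e. before translation."""
    if "SORM" not in path or "NAT" not in path:
        return False
    return path.index("SORM") < path.index("NAT")


if __name__ == "__main__":
    print(sorm_sees_private_addresses(REQUIRED_PATH))
    # A path that translates addresses first violates the requirement.
    wrong = ["users", "switching, core", "access servers",
             "NAT", "SORM", "switching, core", "Internet"]
    print(sorm_sees_private_addresses(wrong))
```

The check makes the cost visible: all subscriber traffic has to be funneled through one strictly ordered chain of devices.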

In total: over a dozen years the core design of an average provider has become many times more complex, and the number of additional points of failure (both equipment and single links) has grown significantly. In fact, the very requirement to "see everything" implies reducing that "everything" to a single point.

It seems to me that this can be extrapolated quite transparently to the current initiatives on the sovereignization of the Runet, its protection, stabilization, and improvement :)

And the Yarovaya law is still ahead.

Source: https://habr.com/ru/post/444274/
