Migrating from Nagios to Icinga2 in Australia

Hello.

I am a Linux sysadmin. I moved from Russia to Australia on an independent skilled visa in 2015, but this article is not about how to "start the tractor" (emigrate). There are enough such articles already (although if there is interest, I will write about it too). Instead, I would like to tell you how, working in Australia as a Linux ops engineer, I initiated a migration from one monitoring system to another: specifically, Nagios => Icinga2.

The article is partly technical and partly about communicating with people, and about the problems that come from differences in culture and ways of working.

Unfortunately, the "code" tag does not highlight Puppet and YAML code, so I had to use "plaintext".

Nothing foreshadowed trouble on the morning of December 21, 2016. As usual, I was spending the first half hour of the working day reading Habr as an unregistered anonymous user and absorbing coffee, when I came across this article.

Since my company used Nagios, I created a ticket in Redmine without a second thought and posted the link in the general chat, because I thought it was important. Initiative is punishable even in Australia, so the lead engineer assigned the task to me, since I was the one who found it.

In our department, before presenting an opinion, it is customary to propose at least one alternative, even if the choice is obvious. So I started by googling which monitoring systems are relevant these days, because at my last job in Russia I had my own home-grown system: very primitive, but working and doing everything required of it. Python, St. Petersburg Polytechnic and the metro. No, the metro sucks. That is personal (11 years of work) and worth a separate article, but not now.

A little about the rules for changing the infrastructure configuration at my current job. We use Puppet, GitLab and the Infrastructure as Code principle, so:

- No manual changes over SSH, i.e. no hand-editing of files on virtual machines. In three years I have been told off for this many times, most recently a week ago, and I don't think it was the last time. Admittedly, fixing one line in a config, restarting the service and checking whether the problem is solved takes 10 seconds. Creating a new branch in GitLab, pushing the changes, waiting for r10k to deploy them on the Puppet master, running Puppet with --environment=mybranch and waiting a couple of minutes for all of it to finish takes at least 5 minutes.

- Every change goes through a Merge Request in GitLab and needs approval from at least one team member. Major changes, at the team's discretion, need two or three approvals.

- All changes are textual in one way or another (Puppet manifests, scripts and Hiera data are all text); binary files are strongly discouraged and need good reasons to be approved.

So, the options that I considered:

- Munin: if there are more than 10 servers in the infrastructure, administration turns into hell (according to this article; I had no particular desire to verify it, so I took it on trust).

- Zabbix: I had been eyeing it for a long time, even back in Russia, but there it was overkill for my tasks. Here I had to drop it because we use Puppet as the configuration manager and GitLab for version control. At the time, as far as I understood, Zabbix stored its entire configuration in the database, so it was unclear how to manage that configuration under our rules and how to track changes.

- Prometheus: judging by the mood in the department, this is where we will end up, but at the time I had not mastered it and could not demonstrate a real working Proof of Concept, so I had to pass on it.

- There were also several other options that either required a complete overhaul of the system or were in their infancy or abandoned, and were rejected for those reasons.

In the end, I settled on Icinga2 for three reasons:

1 - compatibility with NRPE (the client service that runs checks on command from Nagios). This was very important, because at that time we had 135 virtual machines (165 now, in 2019) with a bunch of home-grown services/checks, and redoing all of that would have been a massive pain.

2 - all configuration files are text, which makes them easy to edit and to review in merge requests, where you can see what was added or removed.

3 - it is a live, actively developed open-source project. We are very fond of open source and contribute to it where we can by filing Pull Requests and Issues.

So, let's go, Icinga2.

The first thing I had to face was my colleagues' inertia. Everyone was used to Nagios/"Nadjios" (they could not even agree on how to pronounce it) and the CheckMK interface. The Icinga interface looks completely different (a minus), but it lets you flexibly configure what you see, using filters on literally any parameter (a plus, but I had to fight hard for it).

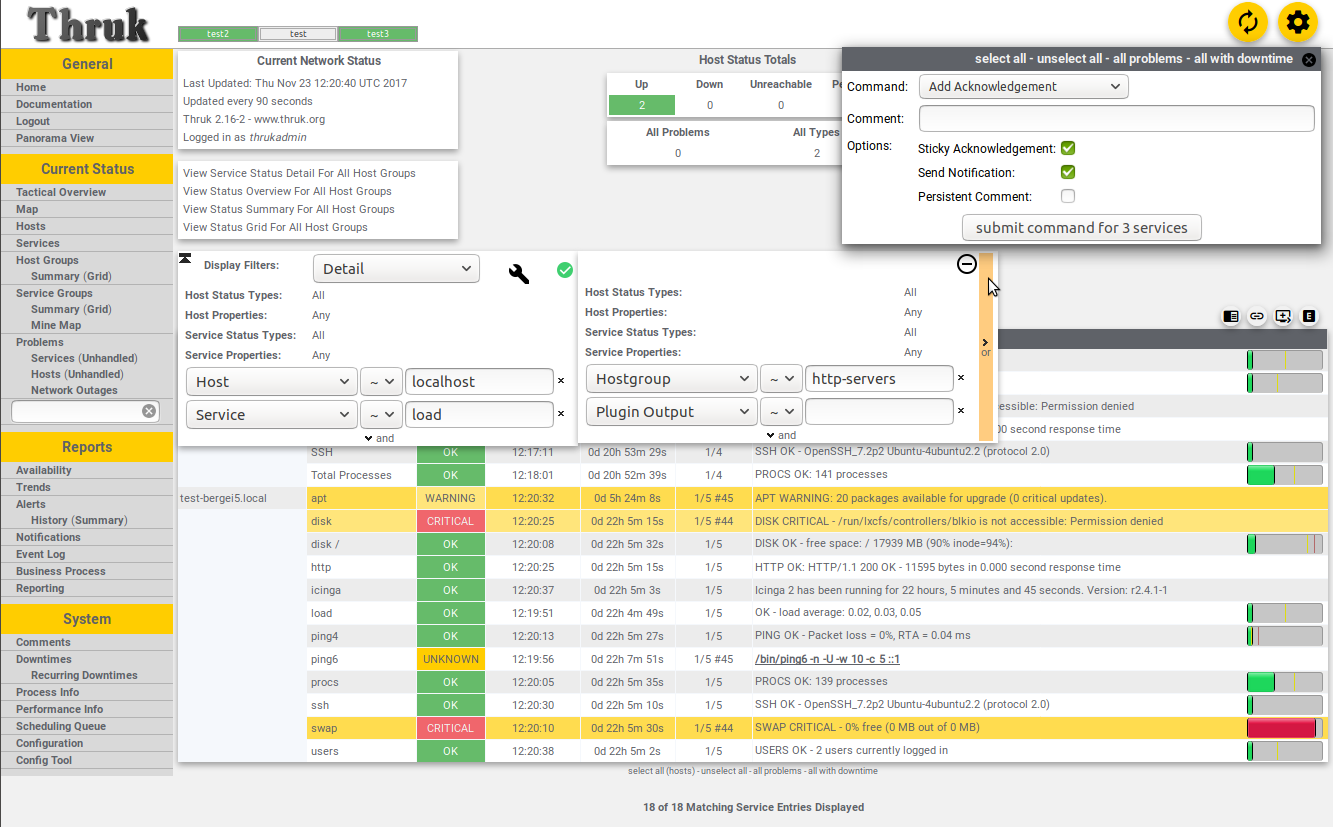

Note the size of the scroll bar relative to the area it scrolls.

Second, everyone was used to seeing the entire infrastructure on one screen, because CheckMK can work with several Nagios hosts, while Icinga couldn't (actually it could, but more on that below). The alternative was a thing called Thruk, but its design made every team member gag, except one: the one who suggested it (not me).

After a couple of days of brainstorming, I proposed cluster monitoring: one master host in the production zone and two satellites, one in dev/test and one external host at another provider, so that we could monitor our services from the point of view of a client or outside observer. This configuration let us see all problems in one web interface and worked quite well, but Puppet... The problem with Puppet was that the master host now had to know about every host and service/check in the system and distribute them between zones (dev-test, staging-prod, ext). Pushing changes through the Icinga API takes a couple of seconds, but compiling the Puppet catalog of all services for all hosts takes a couple of minutes. I still get blamed for this, even though I have explained several times how everything works and why it takes so long.

Third, a bunch of snowflakes: things that fall outside the general system because there is something special about them, so the general rules do not apply. This was solved head-on: if Icinga raises an alarm but everything is actually fine, dig deeper and find out why it alarms when it shouldn't. Or the other way round: why Nagios panics but Icinga doesn't.

Fourth, Nagios had been running here for three years before I arrived, and people initially trusted it more than my newfangled hipster system. So every time Icinga panicked, nobody did anything until Nagios got excited about the same issue. Yet Icinga only very rarely raised real alarms before Nagios did, and I consider this a serious flaw, which I discuss in the "Conclusions" section.

As a result, commissioning was delayed by more than 5 months (planned for June 28, 2018, actually done on December 3, 2018), mainly because of the "parity check". That is the nonsense where Nagios had several services nobody had heard a thing about for the last couple of years, but NOW they suddenly went critical for no reason, and I had to explain why they were not on my dashboard and add them to Icinga so that the "parity check is complete" (every service/check in Nagios has a corresponding service/check in Icinga).

Implementation:

The first was the Code vs Data war, Puppet style. All data, without exception, must be in Hiera and nowhere else. All code is in .pp files: variables, abstractions, functions, everything goes into .pp.
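In Puppet terms, the split looks roughly like this. The class holds only logic and typed parameters. Automatic parameter lookup fills each parameter from the Hiera key `<class name>::<parameter>`, which is how `profiles::services::monitoring::config::services` reaches `$services` later in this article. The sketch below uses a hypothetical `monitoring_demo` class and passes the data explicitly, so it runs on its own:

```puppet
# Code: a hypothetical profile class that holds no data of its own.
class monitoring_demo (
  Hash   $services,
  String $default_interval = '2m',
) {
  # Derive the effective check interval for every check from the data.
  $intervals = $services.reduce({}) |Hash $memo, Array $entry| {
    $check_name = $entry[0]
    $settings   = $entry[1]
    $interval   = $settings['check_interval'] ? {
      undef   => $default_interval,
      default => $settings['check_interval'],
    }
    $memo + { $check_name => $interval }
  }
  notice($intervals)
}

# Data: in production this hash would come from the Hiera key
# 'monitoring_demo::services' through automatic parameter lookup.
# Here it is passed explicitly so the snippet is self-contained.
class { 'monitoring_demo':
  services => {
    'procs'    => {},
    'disk'     => { 'check_interval' => '5m' },
    'dns_fqdn' => { 'check_interval' => '15m' },
  },
}
```

With this split, changing an interval is a data-only merge request against Hiera, and the .pp file stays untouched.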

In total, we have a bunch of virtual machines (165 at the time of writing) and 68 web applications that must be monitored for availability and SSL certificate validity. Because of historical baggage, however, the monitoring data for applications comes from a separate GitLab repository, and its format has not changed since Puppet 3, which makes configuration harder.
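The docker_apps define that follows creates one nginx VIP host per IP address and tags it with the last two octets of that address. According to its own code comment, using only the last octet caused a collision. Here is a minimal core-Puppet sketch that uses the two addresses from that comment. The variable names are illustrative and do not come from the real profile:

```puppet
# The two addresses quoted in the comment inside docker_apps.
$addresses = ['203.15.70.94', '49.255.194.94']

# Naive tag: last octet only.
$last_octet_tags = $addresses.map |String $ip| {
  $octets = split($ip, '\.')
  $octets[3]
}

# Tag used by docker_apps: third and fourth octets joined with a dot.
$two_octet_tags = $addresses.map |String $ip| {
  $octets = split($ip, '\.')
  "${octets[2]}.${octets[3]}"
}

# Host names are built as "<zone>_nginx-vip-<tag>", so equal tags in the
# same zone would collapse two different VIPs into one host object.
notice($last_octet_tags)  # both addresses yield '94'
notice($two_octet_tags)   # '70.94' and '194.94' stay distinct
```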

```plaintext
define profiles::services::monitoring::docker_apps(
  Hash $app_list,
  Hash $apps_accessible_from,
  Hash $apps_access_list,
  Hash $webhost_defaults,
  Hash $webcheck_defaults,
  Hash $service_overrides,
  Hash $targets,
  Hash $app_checks,
) {
  #### APPS ####
  $zone = $name
  $app_list.each | String $app_name, Hash $app_data | {
    $notify_group = { 'notify_group' => ($webcheck_defaults[$zone]['notify_group'] + pick($app_data['notify_group'], {} )) } # adds notifications for default group (systems) + any group defined in int/pm_docker_apps.eyaml
    $data = merge($webhost_defaults, $apps_accessible_from, $app_data)
    $site_domain = $app_data['site_domain']
    $regexp = pick($app_data['check_regex'], 'html') # Pick a regex to check
    $check_url = $app_data['check_url'] ? {
      undef   => { 'http_uri' => '/' },
      default => { 'http_uri' => $app_data['check_url'] }
    }
    $check_regex = $regexp ? {
      'absent' => {},
      default  => {'http_expect_body_regex' => $regexp}
    }
    $site_domain.each | String $vhost, Hash $vdata | { # Split an app by domains if there are two or more
      $vhost_name = {'http_vhost' => $vhost}
      $vars = $data['vars'] + $vhost_name + $check_regex + $check_url
      $web_ipaddress = is_array($vdata['web_ipaddress']) ? { # Make IP-address an array if it's not, because askizzy has 2 ips and it's an array
        true  => $vdata['web_ipaddress'],
        false => [$vdata['web_ipaddress']],
      }
      $access_from_zones = [$zone] + $apps_access_list[$data['accessible_from']] # Merge default zone (where the app is defined) and extra zones if they exist
      $web_ipaddress.each | String $ip_address | { # For each IP (if we have multiple)
        $suffix = length($web_ipaddress) ? { # If we have more than one - add IP as a suffix to this hostname to avoid duplicating resources
          1       => '',
          default => "_${ip_address}"
        }
        $octets = split($ip_address, '\.')
        $ip_tag = "${octets[2]}.${octets[3]}" # Using last octet only causes a collision between nginx-vip 203.15.70.94 and ext. ip 49.255.194.94
        $access_from_zones.each | $zone_prefix | {
          $zone_target = $targets[$zone_prefix]
          $nginx_vip_name = "${zone_prefix}_nginx-vip-${ip_tag}" # If it's a host for ext - prefix becomes 'ext_' (ext_nginx-vip...)
          $nginx_host_vip = {
            $nginx_vip_name => {
              ensure        => present,
              target        => $zone_target,
              address       => $ip_address,
              check_command => 'hostalive',
              groups        => ['nginx_vip',],
            }
          }
          $ssl_vars = $app_checks['ssl']
          $regex_vars = $app_checks['http'] + $vars + $webcheck_defaults[$zone] + $notify_group
          if !defined( Profiles::Services::Monitoring::Host[$nginx_vip_name] ) {
            ensure_resources('profiles::services::monitoring::host', $nginx_host_vip)
          }
          if !defined( Icinga2::Object::Service["${nginx_vip_name}_ssl"] ) {
            icinga2::object::service {"${nginx_vip_name}_ssl":
              ensure         => $data['ensure'],
              assign         => ["host.name == $nginx_vip_name",],
              groups         => ['webchecks',],
              check_command  => 'ssl',
              check_interval => $service_overrides['ssl']['check_interval'],
              target         => $targets['services'],
              apply          => true,
              vars           => $ssl_vars
            }
          }
          if $regexp != 'absent' {
            if !defined(Icinga2::Object::Service["${vhost}${suffix} regex"]) {
              icinga2::object::service {"${vhost}${suffix} regex":
                ensure          => $data['ensure'],
                assign          => ["match(*_nginx-vip-${ip_tag}, host.name)",],
                groups          => ['webchecks',],
                check_command   => 'http',
                check_interval  => $service_overrides['regex']['check_interval'],
                target          => $targets['services'],
                enable_flapping => true,
                apply           => true,
                vars            => $regex_vars
              }
            }
          }
        }
      }
    }
  }
}
```

The configuration code for hosts and services also looks awful:

```plaintext
class profiles::services::monitoring::config(
  Array $default_config,
  Array $hostgroups,
  Hash $hosts = {},
  Hash $host_defaults,
  Hash $services,
  Hash $service_defaults,
  Hash $service_overrides,
  Hash $webcheck_defaults,
  Hash $servicegroups,
  String $servicegroup_target,
  Hash $user_defaults,
  Hash $users,
  Hash $oncall,
  Hash $usergroup_defaults,
  Hash $usergroups,
  Hash $notifications,
  Hash $notification_defaults,
  Hash $notification_commands,
  Hash $timeperiods,
  Hash $webhost_defaults,
  Hash $apps_access_list,
  Hash $check_commands,
  Hash $hosts_api = {},
  Hash $targets = {},
  Hash $host_api_defaults = {},
) {
  # Profiles::Services::Monitoring::Hostgroup <<| |>> # will be enabled when we move to icinga completely

  #### APPS ####
  case $location {
    'int', 'ext': {
      $apps_by_zone = {}
    }
    'pm': {
      $int_apps = hiera('int_docker_apps')
      $int_app_defaults = hiera('int_docker_app_common')
      $st_apps = hiera('staging_docker_apps')
      $srs_apps = hiera('pm_docker_apps_srs')
      $pm_apps = hiera('pm_docker_apps') + $st_apps + $srs_apps
      $pm_app_defaults = hiera('pm_docker_app_common')
      $apps_by_zone = {
        'int' => $int_apps,
        'pm'  => $pm_apps,
      }
      $app_access_by_zone = {
        'int' => {'accessible_from' => $int_app_defaults['accessible_from']},
        'pm'  => {'accessible_from' => $pm_app_defaults['accessible_from']},
      }
    }
    default: {
      fail('Please ensure the node has $location fact set (int, pm, ext)')
    }
  }

  file { '/etc/icinga2/conf.d/':
    ensure  => directory,
    recurse => true,
    purge   => true,
    owner   => 'icinga',
    group   => 'icinga',
    mode    => '0750',
    notify  => Service['icinga2'],
  }

  $default_config.each | String $file_name | {
    file {"/etc/icinga2/conf.d/${file_name}":
      ensure => present,
      source => "puppet:///modules/profiles/services/monitoring/default_config/${file_name}",
      owner  => 'icinga',
      group  => 'icinga',
      mode   => '0640',
    }
  }

  $app_checks = {
    'ssl'  => $services['webchecks']['checks']['ssl']['vars'],
    'http' => $services['webchecks']['checks']['http_regexp']['vars']
  }

  $apps_by_zone.each | String $zone, Hash $app_list | {
    profiles::services::monitoring::docker_apps{$zone:
      app_list             => $app_list,
      apps_accessible_from => $app_access_by_zone[$zone],
      apps_access_list     => $apps_access_list,
      webhost_defaults     => $webhost_defaults,
      webcheck_defaults    => $webcheck_defaults,
      service_overrides    => $service_overrides,
      targets              => $targets,
      app_checks           => $app_checks,
    }
  }

  #### HOSTS ####
  # Profiles::Services::Monitoring::Host <<| |>> # This is for spaceship invasion when it's ready.
  $hosts_has_large_disks = query_nodes('mountpoints.*.size_bytes >= 1099511627776')

  $hosts.each | String $hostgroup, Hash $list_of_hosts_with_settings | { # Splitting site lists by hostgroups - docker_host/gluster_host/etc
    $list_of_hosts_in_group = $list_of_hosts_with_settings['hosts']
    $hostgroup_settings = $list_of_hosts_with_settings['settings']
    $merged_hostgroup_settings = deep_merge($host_defaults, $list_of_hosts_with_settings['settings'])

    $list_of_hosts_in_group.each | String $host_name, Hash $host_settings | { # Splitting grouplists by hosts
      # Is this host in the array $hosts_has_large_disks ? If so set host.vars.has_large_disks
      if ( $hosts_has_large_disks.reduce(false) | $found, $value | { ( $value =~ "^${host_name}" ) or $found } ) {
        $vars_has_large_disks = { 'has_large_disks' => true }
      } else {
        $vars_has_large_disks = {}
      }

      $host_data = deep_merge($merged_hostgroup_settings, $host_settings)
      $hostgroup_settings_vars = pick($hostgroup_settings['vars'], {})
      $host_settings_vars = pick($host_settings['vars'], {})
      $host_notify_group = delete_undef_values($host_defaults['vars']['notify_group'] + $hostgroup_settings_vars['notify_group'] + $host_settings_vars['notify_group'])
      $host_data_vars = delete_undef_values(deep_merge($host_data['vars'], {'notify_group' => $host_notify_group}, $vars_has_large_disks)) # Merging vars separately
      $hostgroups = delete_undef_values([$hostgroup] + $host_data['groups'])

      profiles::services::monitoring::host{$host_name:
        ensure             => $host_data['ensure'],
        display_name       => $host_data['display_name'],
        address            => $host_data['address'],
        groups             => $hostgroups,
        target             => $host_data['target'],
        check_command      => $host_data['check_command'],
        check_interval     => $host_data['check_interval'],
        max_check_attempts => $host_data['max_check_attempts'],
        vars               => $host_data_vars,
        template           => $host_data['template'],
      }
    }
  }

  if !empty($hosts_api) { # All hosts managed by API
    $hosts_api.each | String $zone, Hash $hosts_api_zone | { # Split api hosts by zones
      $hosts_api_zone.each | String $hostgroup, Hash $list_of_hosts_with_settings | { # Splitting site lists by hostgroups - docker_host/gluster_host/etc
        $list_of_hosts_in_group = $list_of_hosts_with_settings['hosts']
        $hostgroup_settings = $list_of_hosts_with_settings['settings']
        $merged_hostgroup_settings = deep_merge($host_api_defaults, $list_of_hosts_with_settings['settings'])

        $list_of_hosts_in_group.each | String $host_name, Hash $host_settings | { # Splitting grouplists by hosts
          # Is this host in the array $hosts_has_large_disks ? If so set host.vars.has_large_disks
          if ( $hosts_has_large_disks.reduce(false) | $found, $value | { ( $value =~ "^${host_name}" ) or $found } ) {
            $vars_has_large_disks = { 'has_large_disks' => true }
          } else {
            $vars_has_large_disks = {}
          }

          $host_data = deep_merge($merged_hostgroup_settings, $host_settings)
          $hostgroup_settings_vars = pick($hostgroup_settings['vars'], {})
          $host_settings_vars = pick($host_settings['vars'], {})
          $host_api_notify_group = delete_undef_values($host_defaults['vars']['notify_group'] + $hostgroup_settings_vars['notify_group'] + $host_settings_vars['notify_group'])
          $host_data_vars = delete_undef_values(deep_merge($host_data['vars'], {'notify_group' => $host_api_notify_group}, $vars_has_large_disks))
          $hostgroups = delete_undef_values([$hostgroup] + $host_data['groups'])

          if defined(Profiles::Services::Monitoring::Host[$host_name]) {
            $hostname = "${host_name}_from_${zone}"
          } else {
            $hostname = $host_name
          }

          profiles::services::monitoring::host{$hostname:
            ensure             => $host_data['ensure'],
            display_name       => $host_data['display_name'],
            address            => $host_data['address'],
            groups             => $hostgroups,
            target             => "${host_data['target_base']}/${zone}/hosts.conf",
            check_command      => $host_data['check_command'],
            check_interval     => $host_data['check_interval'],
            max_check_attempts => $host_data['max_check_attempts'],
            vars               => $host_data_vars,
            template           => $host_data['template'],
          }
        }
      }
    }
  }
  #### END OF HOSTS ####

  #### SERVICES ####
  $services.each | String $service_group, Hash $s_list | { # Service_group and list of services in that group
    $service_list = $s_list['checks'] # List of actual checks, separately from SG settings
    $service_list.each | String $service_name, Hash $data | {
      $merged_defaults = merge($service_defaults, $s_list['settings']) # global service defaults + service group defaults
      $merged_data = merge($merged_defaults, $data)
      $settings_vars = pick($s_list['settings']['vars'], {})
      $this_service_vars = pick($data['vars'], {})
      $all_service_vars = delete_undef_values($service_defaults['vars'] + $settings_vars + $this_service_vars)

      # If we override default check_timeout, but not nrpe_timeout, make nrpe_timeout the same as check_timeout
      if ( $merged_data['check_timeout'] and ! $this_service_vars['nrpe_timeout'] ) {
        # NB: Icinga will convert 1m to 60 automatically!
        $nrpe = { 'nrpe_timeout' => $merged_data['check_timeout'] }
      } else {
        $nrpe = {}
      }

      # By default we use nrpe and all commands are run via nrpe. So vars.nrpe_command = $service_name is a default value
      # If it's server-side Icinga command - we don't need 'nrpe_command'
      # but there is no harm to have that var and the code is shorter
      if $merged_data['check_command'] == 'nrpe' {
        $check_command = $merged_data['vars']['nrpe_command'] ? {
          undef   => { 'nrpe_command' => $service_name },
          default => { 'nrpe_command' => $merged_data['vars']['nrpe_command'] }
        }
      } else {
        $check_command = {}
      }

      # Assembling $vars from Global Default service settings, servicegroup settings, this particular check settings and let's not forget nrpe settings.
      if $all_service_vars['graphite_template'] {
        $graphite_template = {'check_command' => $all_service_vars['graphite_template']}
      } else {
        $graphite_template = {'check_command' => $service_name}
      }

      $service_notify = [] + pick($settings_vars['notify_group'], []) + pick($this_service_vars['notify_group'], []) # pick is required everywhere, otherwise becomes "The value '' cannot be converted to Numeric"
      $service_notify_group = $service_notify ? {
        []      => $service_defaults['vars']['notify_group'],
        default => $service_notify
      } # Assign default group (systems) if no other groups are defined

      $vars = $all_service_vars + $nrpe + $check_command + $graphite_template + {'notify_group' => $service_notify_group}

      # This needs to be merged separately, because merging it as part of MERGED_DATA overwrites arrays instead of merging them, so we lose some "assign" and "ignore" values
      $assign = delete_undef_values($service_defaults['assign'] + $s_list['settings']['assign'] + $data['assign'])
      $ignore = delete_undef_values($service_defaults['ignore'] + $s_list['settings']['ignore'] + $data['ignore'])

      icinga2::object::service {$service_name:
        ensure             => $merged_data['ensure'],
        apply              => $merged_data['apply'],
        enable_flapping    => $merged_data['enable_flapping'],
        assign             => $assign,
        ignore             => $ignore,
        groups             => [$service_group],
        check_command      => $merged_data['check_command'],
        check_interval     => $merged_data['check_interval'],
        check_timeout      => $merged_data['check_timeout'],
        check_period       => $merged_data['check_period'],
        display_name       => $merged_data['display_name'],
        event_command      => $merged_data['event_command'],
        retry_interval     => $merged_data['retry_interval'],
        max_check_attempts => $merged_data['max_check_attempts'],
        target             => $merged_data['target'],
        vars               => $vars,
        template           => $merged_data['template'],
      }
    }
  }
  #### END OF SERVICES ####

  #### OTHER BORING STUFF ####
  $servicegroups.each | $servicegroup, $description | {
    icinga2::object::servicegroup{ $servicegroup:
      target       => $servicegroup_target,
      display_name => $description
    }
  }

  $hostgroups.each | String $hostgroup | {
    profiles::services::monitoring::hostgroup { $hostgroup: }
  }

  $notifications.each | String $name, Hash $settings | {
    $assign = pick($notification_defaults['assign'], []) + $settings['assign']
    $ignore = pick($notification_defaults['ignore'], []) + $settings['ignore']
    $merged_settings = $settings + $notification_defaults
    icinga2::object::notification{$name:
      target       => $merged_settings['target'],
      apply        => $merged_settings['apply'],
      apply_target => $merged_settings['apply_target'],
      command      => $merged_settings['command'],
      interval     => $merged_settings['interval'],
      states       => $merged_settings['states'],
      types        => $merged_settings['types'],
      assign       => delete_undef_values($assign),
      ignore       => delete_undef_values($ignore),
      user_groups  => $merged_settings['user_groups'],
      period       => $merged_settings['period'],
      vars         => $merged_settings['vars'],
    }
  }

  # Merging notification settings for users with other settings
  $users_oncall = deep_merge($users, $oncall)
  # Magic. Do not touch.
  create_resources('icinga2::object::user', $users_oncall, $user_defaults)
  create_resources('icinga2::object::usergroup', $usergroups, $usergroup_defaults)
  create_resources('icinga2::object::timeperiod', $timeperiods)
  create_resources('icinga2::object::checkcommand', $check_commands)
  create_resources('icinga2::object::notificationcommand', $notification_commands)
  profiles::services::sudoers { 'icinga_runs_ping_l2':
    ensure            => present,
    sudoersd_template => 'profiles/os/redhat/centos7/sudoers/icinga.erb',
  }
}
```

I am still working on these noodles and improving them as much as I can. However, it was this code that made it possible to use a simple and clear syntax in Hiera:

```plaintext
profiles::services::monitoring::config::services:
  perf_checks:
    settings:
      check_interval: '2m'
      assign:
        - 'host.vars.type == linux'
    checks:
      procs: {}
      load: {}
      memory: {}
      disk:
        check_interval: '5m'
        vars:
          notification_period: '24x7'
      disk_iops:
        vars:
          notifications:
            - 'silent'
      cpu:
        vars:
          notifications:
            - 'silent'
      dns_fqdn:
        check_interval: '15m'
        ignore:
          - 'xenserver in host.groups'
        vars:
          notifications:
            - 'silent'
      iftraffic_nrpe:
        vars:
          notifications:
            - 'silent'
  logging:
    settings:
      assign:
        - 'logserver in host.groups'
    checks:
      rsyslog: {}
      nginx_limit_req_other: {}
      nginx_limit_req_s2s: {}
      nginx_limit_req_s2x: {}
      nginx_limit_req_srs: {}
      logstash: {}
      logstash_api:
        vars:
          notifications:
            - 'silent'
```

All checks are divided into groups. Each group has default settings that control where and how often its checks run, and which notifications go to whom.
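Config.pp resolves one of those group-level defaults, the notification group, with a simple rule. It concatenates the group's and the check's `notify_group` lists, and it falls back to the global default (systems) only when both lists are empty. The sketch below is a simplified version of that rule. The data and the `devs` group are hypothetical. Core selectors replace stdlib's `pick()`, so the snippet runs under plain `puppet apply`:

```puppet
# Hypothetical data: the global default, a group without notify_group, two checks.
$service_defaults = { 'vars' => { 'notify_group' => ['systems'] } }
$group_vars       = {}
$checks = {
  'disk'     => { 'vars' => {} },
  'logstash' => { 'vars' => { 'notify_group' => ['devs'] } },
}

# Treat a missing list as empty (config.pp uses stdlib pick() for this).
$group_notify = $group_vars['notify_group'] ? {
  undef   => [],
  default => $group_vars['notify_group'],
}

$notify_by_check = $checks.reduce({}) |Hash $memo, Array $entry| {
  $check_name   = $entry[0]
  $check_vars   = $entry[1]['vars']
  $check_notify = $check_vars['notify_group'] ? {
    undef   => [],
    default => $check_vars['notify_group'],
  }
  # Group and check lists are concatenated, not overwritten.
  $combined = $group_notify + $check_notify
  # Fall back to the global default only when nothing more specific is set.
  $effective = ($combined == []) ? {
    true    => $service_defaults['vars']['notify_group'],
    default => $combined,
  }
  $memo + { $check_name => $effective }
}

notice($notify_by_check)
```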

Any option can be overridden in an individual check, and the result is then combined with the global defaults for all checks. That is why config.pp contains such noodles: it merges all default settings with the group settings, and then with each individual check.
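The merge order can be sketched in core Puppet. Hash `+` is a shallow merge in which the right-hand operand wins. Stdlib's `merge()`, used in config.pp, follows the same rule, so the most specific level takes precedence. The catch is that this rule replaces whole arrays, which is why config.pp concatenates `assign` and `ignore` separately. The values below are hypothetical:

```puppet
# Hypothetical settings at three levels, shaped like the Hiera example.
$service_defaults = { 'check_interval' => '5m', 'assign' => ['host.vars.type == linux'] }
$group_settings   = { 'check_interval' => '2m', 'assign' => ['logserver in host.groups'] }
$check_settings   = { 'check_interval' => '15m', 'ignore' => ['xenserver in host.groups'] }

# Shallow merge, right-hand side wins: the individual check overrides the rest.
$merged = $service_defaults + $group_settings + $check_settings

# The same rule swaps whole arrays, so only the group's 'assign' survives in $merged.
# Concatenating level by level keeps every rule (a missing list counts as empty).
$check_assign = $check_settings['assign'] ? {
  undef   => [],
  default => $check_settings['assign'],
}
$assign = $service_defaults['assign'] + $group_settings['assign'] + $check_assign

notice($merged['check_interval'])  # 15m
notice($merged['assign'])          # only the group's rule
notice($assign)                    # both the default and the group rule
```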

Another very important change was the ability to use functions in the settings. For example, functions can substitute the port, address and URL for the http_regex check.

```plaintext
http_regexp:
  assign:
    - 'host.vars.http_regex'
    - 'static_sites in host.groups'
  check_command: 'http'
  check_interval: '1m'
  retry_interval: '20s'
  max_check_attempts: 6
  http_port: '{{ if(host.vars.http_port) { return host.vars.http_port } else { return 443 } }}'
  vars:
    notification_period: 'host.vars.notification_period'
    http_vhost: '{{ if(host.vars.http_vhost) { return host.vars.http_vhost } else { return host.name } }}'
    http_ssl: '{{ if(host.vars.http_ssl) { return false } else { return true } }}'
    http_expect_body_regex: 'host.vars.http_regex'
    http_uri: '{{ if(host.vars.http_uri) { return host.vars.http_uri } else { return "/" } }}'
    http_onredirect: 'follow'
    http_warn_time: 8
    http_critical_time: 15
    http_timeout: 30
    http_sni: true
```

This means: if the host definition has the http_port variable, use it, otherwise use 443. For example, the Jabber web interface listens on 9090, and UniFi on 7443.

http_vhost means: ignore DNS and use this value. If the host does not set one, fall back to the host name.

If a URI is specified in the host, request it; otherwise request "/".
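To see what each host ends up with, the per-host fallbacks above can be mirrored at compile time in Puppet. This is only an illustration. In the real setup, Icinga evaluates the `{{ ... }}` lambdas at check time. Icinga's `if()` also tests truthiness, so a value of `false` falls through to the `else` branch, whereas the selectors below only catch a missing key. The host names and the `/status` URI are hypothetical. The 9090 and 7443 ports are the ones from the text:

```puppet
# Hypothetical host definitions.
$hosts = {
  'jabber.example.com' => { 'vars' => { 'http_port' => 9090 } },
  'unifi.example.com'  => { 'vars' => { 'http_port' => 7443 } },
  'www.example.com'    => { 'vars' => { 'http_uri' => '/status' } },
}

$effective = $hosts.reduce({}) |Hash $memo, Array $entry| {
  $host_name = $entry[0]
  $v         = $entry[1]['vars']
  # Same fallbacks as the lambdas: the host's value if present, else a default.
  $port  = $v['http_port'] ? { undef => 443, default => $v['http_port'] }
  $vhost = $v['http_vhost'] ? { undef => $host_name, default => $v['http_vhost'] }
  $uri   = $v['http_uri'] ? { undef => '/', default => $v['http_uri'] }
  $memo + { $host_name => { 'port' => $port, 'vhost' => $vhost, 'uri' => $uri } }
}

notice($effective)
```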

There was a funny story with http_ssl: this thing refused to switch off on demand. I was stuck on this line for a long time, until it dawned on me that the variable in the host definition:

```plaintext
http_ssl: false
```

is substituted into the expression

```plaintext
if(host.vars.http_ssl) { return false } else { return true }
```

as false, and it turns out:

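```plaintext
if(false) { return false } else { return true }
```

That is, the SSL check is always active. The expression only returns false (SSL off) when host.vars.http_ssl is truthy, so setting it to false can never switch SSL off. It was decided to replace the syntax with: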

```plaintext
http_ssl: no
```

Conclusions:

Pros:

- We now have one monitoring system. For the last 7-8 months there were two running side by side, and before that there was a single outdated and vulnerable one.

- The host/service (check) data structure is now, in my opinion, much more readable and understandable. Others did not find it so obvious, so I had to write a couple of pages in the local wiki explaining how it all works and what to edit.

- Checks can be configured flexibly with variables and functions. For example, for http_regexp the expected pattern, return code, URL and port can all be set in the host settings.

- There are several dashboards, each with its own list of displayed alarms. All of them are managed via Puppet and merge requests.

Cons:

- The team's inertia: "Nagios worked and worked and worked, and this Icinga of yours is constantly buggy and slow. And how do I see the history? And damn, it doesn't refresh..." (The real problem: the alarm history does not refresh automatically, only on F5.)

- System sluggishness: when I click "Check now" in the web interface, how long the result takes depends on the weather on Mars, especially for complex services that need tens of seconds to complete. Apparently this is considered normal.

- Overall, over six months of running both systems side by side, Nagios always reacted faster than Icinga, and this irritated me a lot. I suspect something is off with the timers, so a check scheduled every five minutes actually runs every 5:30 or so.

- Whenever you restart the service (systemctl restart icinga2), every check in progress at that moment reports a critical <terminated by signal 15> alarm. From the outside, it looks as if everything has gone down (confirmed bug).

But overall, it works.


Source: https://habr.com/ru/post/444060/
