6 Gotchas from Julia

A Russian-language guide to Julia has finally appeared. It contains a full introduction to the language for readers with little programming experience (everyone else will find it useful for general development), as well as an introduction to machine learning and plenty of exercises to reinforce the material.

While searching, I stumbled upon a programming course for economists (besides Julia, it also covers Python). Experienced readers can go through the express course or read the book How to Think Like a Computer Scientist.

What follows is a translation of a post from Christopher Rackauckas's blog, 7 Julia Gotchas and How to Handle Them.

Let me start by saying that Julia is a wonderful language. I love it; it is the most powerful and intuitive language I have ever used, and it is undoubtedly my favorite. However, there are some "gotchas", tricky little things you need to know about. Every language has them, and one of the first things you need to do to master a language is to find out what they are and how to avoid them. The point of this post is to help you speed up this process by exposing some of the most common ones and offering alternative programming practices.

Julia is a good language for understanding what is happening, because there is no magic in it. Julia's developers wanted clear rules of behavior, which means that all behavior can be explained. However, it may also mean that you have to think hard to understand why exactly one thing happens and not another. This is why I am not just going to list some common problems; I am also going to explain why they arise. You will see that there are some very similar patterns, and once you become aware of them, none of them will intimidate you any more. Because of this, Julia has a slightly steeper learning curve than simpler languages such as MATLAB / R / Python. However, once you have mastered it, you can fully use Julia's conciseness while getting C / Fortran performance. Now let's dig deeper.

Gotcha one: the REPL (terminal) has a global scope

This is by far the most common problem reported by new Julia users. Someone says "I heard Julia is fast!", opens the REPL, quickly writes some familiar algorithm, and runs the script. Then they look at the timing and say: "Wait a second, why is it as slow as Python?" Since this is such an important and common problem, let's spend some time on why it happens in order to understand how to avoid it.

A small digression: why Julia is fast

You have to understand that Julia is not only about compiling code but also about type specialization (that is, compiling code that is specific to the given types). Let me repeat: Julia is not fast because the code is compiled by a JIT compiler; rather, the secret of its speed is that type-specific code is compiled.
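As a rough illustration of type specialization (a sketch only; the name `twice_plus` is made up for this example and is not from the original post), a single generic definition is compiled separately for each combination of argument types it is called with, and the result type follows from the argument types:

```julia
# Hypothetical helper used only for illustration.
twice_plus(a, b) = 2a + b

int_result   = twice_plus(2, 3)       # runs code specialized for two integers
float_result = twice_plus(2.0, 3.0)   # runs code specialized for two Float64 values

println(typeof(int_result))
println(typeof(float_result))

# One generic function with one method definition; the specializations
# are created by the compiler behind the scenes.
println(length(methods(twice_plus)))
```

Running `@code_native twice_plus(2, 3)` and `@code_native twice_plus(2.0, 3.0)` in the REPL should show two different machine-code bodies for the same source definition.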

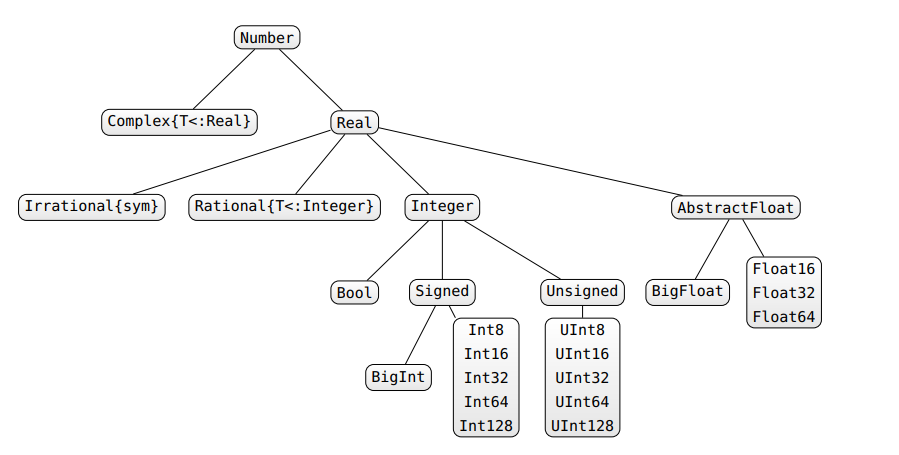

If you need the full story, check out some of the notes that I wrote for an upcoming seminar. Type specialization follows from Julia's core design principle: multiple dispatch. When you write code:

    function f(a,b)
      return 2a+b
    end

it seems that this is only one function, but in fact a large number of methods are created here. In Julia's terminology, a function is an abstraction, and what is actually called is a method. If you call f(2.0,3.0), Julia runs compiled code that takes two floating-point numbers and returns the value 2a + b. If you call f(2,3), Julia runs different compiled code that takes two integers and returns the value 2a + b. The function f is an abstraction, or shorthand, for many different methods that have the same form, and this scheme of using the symbol f to call all these different methods is called multiple dispatch. This applies everywhere: the + operator is actually a function that calls methods depending on the types it sees. Julia gets its speed because the code it compiles knows its types, so the compiled code called by f(2.0,3.0) is the same compiled code you would get by defining the same function in C / Fortran. You can check this with the @code_native macro to see the compiled assembly:

    @code_native f(2.0,3.0)

    pushq %rbp
    movq %rsp, %rbp
    Source line: 2
    vaddsd %xmm0, %xmm0, %xmm0
    vaddsd %xmm1, %xmm0, %xmm0
    popq %rbp
    retq
    nop

This is the same compiled assembly that you would expect from a function in C / Fortran, and it differs from the assembly code for integers:

    @code_native f(2,3)

    pushq %rbp
    movq %rsp, %rbp
    Source line: 2
    leaq (%rdx,%rcx,2), %rax
    popq %rbp
    retq
    nopw (%rax,%rax)

The gist: the REPL / global scope does not allow type specialization

This brings us to the basic idea: the REPL / global scope is slow because it does not allow type specialization. First of all, note that the REPL is a global scope, because Julia allows nested scopes for functions. For example, if we define

    function outer()
      a = 5
      function inner()
        return 2a
      end
      b = inner()
      return 3a+b
    end

then we will see that this code works. This is because Julia lets an inner function capture variables from an outer function. If you apply this idea recursively, you will realize that the outermost scope is the REPL itself (which is the global scope of the Main module). But now let's think about how a function will be compiled in this situation. We implement the same thing, but using global variables:

    a=2.0; b=3.0
    function linearcombo()
      return 2a+b
    end
    ans = linearcombo()

and

    a = 2; b = 3
    ans2= linearcombo()

Question: what types should the compiler assume for a and b? Notice that in this example we changed the types and still called the same function. It has to deal with any types we throw at it: floats, integers, arrays, strange user-defined types, and so on. In Julia, this means that the variables must be boxed and their types checked every time they are used. What do you think the compiled code looks like?

    pushq %rbp
    movq %rsp, %rbp
    pushq %r15
    pushq %r14
    pushq %r12
    pushq %rsi
    pushq %rdi
    pushq %rbx
    subq $96, %rsp
    movl $2147565792, %edi # imm = 0x800140E0
    movabsq $jl_get_ptls_states, %rax
    callq *%rax
    movq %rax, %rsi
    leaq -72(%rbp), %r14
    movq $0, -88(%rbp)
    vxorps %xmm0, %xmm0, %xmm0
    vmovups %xmm0, -72(%rbp)
    movq $0, -56(%rbp)
    movq $10, -104(%rbp)
    movq (%rsi), %rax
    movq %rax, -96(%rbp)
    leaq -104(%rbp), %rax
    movq %rax, (%rsi)
    Source line: 3
    movq pcre2_default_compile_context_8(%rdi), %rax
    movq %rax, -56(%rbp)
    movl $2154391480, %eax # imm = 0x806967B8
    vmovq %rax, %xmm0
    vpslldq $8, %xmm0, %xmm0 # xmm0 = zero,zero,zero,zero,zero,zero,zero,zero,xmm0[0,1,2,3,4,5,6,7]
    vmovdqu %xmm0, -80(%rbp)
    movq %rdi, -64(%rbp)
    movabsq $jl_apply_generic, %r15
    movl $3, %edx
    movq %r14, %rcx
    callq *%r15
    movq %rax, %rbx
    movq %rbx, -88(%rbp)
    movabsq $586874896, %r12 # imm = 0x22FB0010
    movq (%r12), %rax
    testq %rax, %rax
    jne L198
    leaq 98096(%rdi), %rcx
    movabsq $jl_get_binding_or_error, %rax
    movl $122868360, %edx # imm = 0x752D288
    callq *%rax
    movq %rax, (%r12)
    L198:
    movq 8(%rax), %rax
    testq %rax, %rax
    je L263
    movq %rax, -80(%rbp)
    addq $5498232, %rdi # imm = 0x53E578
    movq %rdi, -72(%rbp)
    movq %rbx, -64(%rbp)
    movq %rax, -56(%rbp)
    movl $3, %edx
    movq %r14, %rcx
    callq *%r15
    movq -96(%rbp), %rcx
    movq %rcx, (%rsi)
    addq $96, %rsp
    popq %rbx
    popq %rdi
    popq %rsi
    popq %r12
    popq %r14
    popq %r15
    popq %rbp
    retq
    L263:
    movabsq $jl_undefined_var_error, %rax
    movl $122868360, %ecx # imm = 0x752D288
    callq *%rax
    ud2
    nopw (%rax,%rax)

For dynamic languages without type specialization, this bloated code with all its additional instructions is as good as it gets, so Julia slows down to their speed. To understand why this matters so much, note that every piece of code you write in Julia is compiled. Suppose you write a loop in your script:

    a = 1
    for i = 1:100
      a += a + f(a)
    end

The compiler has to compile this loop, but since it cannot guarantee that the types do not change, it conservatively generates the bloated, boxed code for all the types involved, which leads to slow execution.

How to avoid problems

There are several ways to avoid this problem. The easiest way is to always wrap your scripts in a function. For example, the previous code will look like this:

    function geta(a)
      # can also just define a=1 here
      for i = 1:100
        a += a + f(a)
      end
      return a
    end
    a = geta(1)

This gives you the same result, but since the compiler can specialize on the type of a, it produces the efficient compiled code you want. Another thing you can do is define your variables as constants.

    const b = 5

By doing this, you tell the compiler that the variable will not change, so it can specialize all the code that uses it on the type it currently has. There is a small quirk: Julia actually allows you to change the value of a constant, but not its type. Thus, you can use const to tell the compiler that you will not change the type. However, note that there are some small quirks:

    const a = 5
    f() = a
    println(f()) # Prints 5
    a = 6
    println(f()) # Prints 5
    # WARNING: redefining constant a

This does not work as expected: the compiler, realizing that it knows the answer to f() = a (since a is constant), simply replaced the function call with the answer, which gives different behavior than if a were not a constant.

Moral: do not write your scripts directly in the REPL, always enclose them in a function.
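A minimal sketch of the three options discussed above (non-constant globals, function arguments, and a constant global). All names here (`ga`, `gb`, `combo_global`, `combo_args`, `OFFSET`, `combo_const`) are invented for the illustration:

```julia
# Non-constant globals: their types may change at any time,
# so code reading them cannot be specialized.
ga = 2.0
gb = 3.0
combo_global() = 2ga + gb

# Same computation, but the inputs are arguments, so the compiler
# can specialize on their concrete types.
combo_args(a, b) = 2a + b

# A constant global has a fixed type, so code using it can be specialized too.
const OFFSET = 5
combo_const(a) = 2a + OFFSET

println(combo_global())
println(combo_args(2.0, 3.0))
println(combo_const(1))

# Compare what type inference reports for the global and the argument version.
println(Base.return_types(combo_global, ()))
println(Base.return_types(combo_args, (Float64, Float64)))
```

All three produce the same kind of result; the difference lies in how much the compiler knows about the types while compiling each function.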

Gotcha two: type instability

So, I have just made the case for how important it is to specialize code on data types. Let me ask a question: what happens when your types can change? If you guessed "Well, in that case you cannot specialize the compiled code", you are right. This problem is known as type instability. It can show up in different ways, but one common example is initializing a value with a type that is simple but not necessarily the type it should be. For example, let's look at:

    function g()
      x=1
      for i = 1:10
        x = x/2
      end
      return x
    end

Note that 1/2 is a floating-point number in Julia. Therefore, if we start with x = 1, the integer changes to a floating-point number, and so the function must compile the inner loop as if x could be of either type. If instead we had:

    function h()
      x=1.0
      for i = 1:10
        x = x/2
      end
      return x
    end

then the entire function can be compiled optimally, knowing that x will remain a floating-point number (this ability of the compiler to determine types is called type inference). We can check the compiled code to see the difference:

    pushq %rbp
    movq %rsp, %rbp
    pushq %r15
    pushq %r14
    pushq %r13
    pushq %r12
    pushq %rsi
    pushq %rdi
    pushq %rbx
    subq $136, %rsp
    movl $2147565728, %ebx # imm = 0x800140A0
    movabsq $jl_get_ptls_states, %rax
    callq *%rax
    movq %rax, -152(%rbp)
    vxorps %xmm0, %xmm0, %xmm0
    vmovups %xmm0, -80(%rbp)
    movq $0, -64(%rbp)
    vxorps %ymm0, %ymm0, %ymm0
    vmovups %ymm0, -128(%rbp)
    movq $0, -96(%rbp)
    movq $18, -144(%rbp)
    movq (%rax), %rcx
    movq %rcx, -136(%rbp)
    leaq -144(%rbp), %rcx
    movq %rcx, (%rax)
    movq $0, -88(%rbp)
    Source line: 4
    movq %rbx, -104(%rbp)
    movl $10, %edi
    leaq 477872(%rbx), %r13
    leaq 10039728(%rbx), %r15
    leaq 8958904(%rbx), %r14
    leaq 64(%rbx), %r12
    leaq 10126032(%rbx), %rax
    movq %rax, -160(%rbp)
    nopw (%rax,%rax)
    L176:
    movq %rbx, -128(%rbp)
    movq -8(%rbx), %rax
    andq $-16, %rax
    movq %r15, %rcx
    cmpq %r13, %rax
    je L272
    movq %rbx, -96(%rbp)
    movq -160(%rbp), %rcx
    cmpq $2147419568, %rax # imm = 0x7FFF05B0
    je L272
    movq %rbx, -72(%rbp)
    movq %r14, -80(%rbp)
    movq %r12, -64(%rbp)
    movl $3, %edx
    leaq -80(%rbp), %rcx
    movabsq $jl_apply_generic, %rax
    vzeroupper
    callq *%rax
    movq %rax, -88(%rbp)
    jmp L317
    nopw %cs:(%rax,%rax)
    L272:
    movq %rcx, -120(%rbp)
    movq %rbx, -72(%rbp)
    movq %r14, -80(%rbp)
    movq %r12, -64(%rbp)
    movl $3, %r8d
    leaq -80(%rbp), %rdx
    movabsq $jl_invoke, %rax
    vzeroupper
    callq *%rax
    movq %rax, -112(%rbp)
    L317:
    movq (%rax), %rsi
    movl $1488, %edx # imm = 0x5D0
    movl $16, %r8d
    movq -152(%rbp), %rcx
    movabsq $jl_gc_pool_alloc, %rax
    callq *%rax
    movq %rax, %rbx
    movq %r13, -8(%rbx)
    movq %rsi, (%rbx)
    movq %rbx, -104(%rbp)
    Source line: 3
    addq $-1, %rdi
    jne L176
    Source line: 6
    movq -136(%rbp), %rax
    movq -152(%rbp), %rcx
    movq %rax, (%rcx)
    movq %rbx, %rax
    addq $136, %rsp
    popq %rbx
    popq %rdi
    popq %rsi
    popq %r12
    popq %r13
    popq %r14
    popq %r15
    popq %rbp
    retq
    nop

vs

    pushq %rbp
    movq %rsp, %rbp
    movabsq $567811336, %rax # imm = 0x21D81D08
    Source line: 6
    vmovsd (%rax), %xmm0 # xmm0 = mem[0],zero
    popq %rbp
    retq
    nopw %cs:(%rax,%rax)

Such a difference in the amount of computation to get the same value!
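To make the instability visible at run time rather than in assembly, here is a small sketch. It is a variant of g and h in which the loop count is an argument; that parameter and the names `halve_unstable` and `halve_stable` are assumptions added for the example:

```julia
# Starts as an Int and becomes a Float64 after the first division,
# so the returned type depends on how many iterations ran.
function halve_unstable(n)
    x = 1
    for i in 1:n
        x = x / 2
    end
    return x
end

# Starts as a Float64 and stays a Float64 on every path.
function halve_stable(n)
    x = 1.0
    for i in 1:n
        x = x / 2
    end
    return x
end

println(typeof(halve_unstable(0)), " ", typeof(halve_unstable(3)))
println(typeof(halve_stable(0)), " ", typeof(halve_stable(3)))
```

The unstable version returns an integer for zero iterations and a floating-point number otherwise, which is exactly the kind of ambiguity the compiler has to guard against.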

How to find and cope with type instability

At this point, you may ask: "Well, why not just use C so that you do not have to look for these instabilities?" The answer is:

- They are easy to find.

- They can be useful.

- You can handle instability with function barriers.

Julia gives you the macro @code_warntype to show where the type instabilities are. For example, if we use it on the g function we created:

    @code_warntype g()

    Variables:
      #self#::#g
      x::ANY
      #temp#@_3::Int64
      i::Int64
      #temp#@_5::Core.MethodInstance
      #temp#@_6::Float64

    Body:
      begin
          x::ANY = 1 # line 3:
          SSAValue(2) = (Base.select_value)((Base.sle_int)(1,10)::Bool,10,(Base.box)(Int64,(Base.sub_int)(1,1)))::Int64
          #temp#@_3::Int64 = 1
          5:
          unless (Base.box)(Base.Bool,(Base.not_int)((#temp#@_3::Int64 === (Base.box)(Int64,(Base.add_int)(SSAValue(2),1)))::Bool)) goto 30
          SSAValue(3) = #temp#@_3::Int64
          SSAValue(4) = (Base.box)(Int64,(Base.add_int)(#temp#@_3::Int64,1))
          i::Int64 = SSAValue(3)
          #temp#@_3::Int64 = SSAValue(4) # line 4:
          unless (Core.isa)(x::UNION{FLOAT64,INT64},Float64)::ANY goto 15
          #temp#@_5::Core.MethodInstance = MethodInstance for /(::Float64, ::Int64)
          goto 24
          15:
          unless (Core.isa)(x::UNION{FLOAT64,INT64},Int64)::ANY goto 19
          #temp#@_5::Core.MethodInstance = MethodInstance for /(::Int64, ::Int64)
          goto 24
          19:
          goto 21
          21:
          #temp#@_6::Float64 = (x::UNION{FLOAT64,INT64} / 2)::Float64
          goto 26
          24:
          #temp#@_6::Float64 = $(Expr(:invoke, :(#temp#@_5), :(Main./), :(x::Union{Float64,Int64}), 2))
          26:
          x::ANY = #temp#@_6::Float64
          28:
          goto 5
          30: # line 6:
          return x::UNION{FLOAT64,INT64}
      end::UNION{FLOAT64,INT64}

Notice that at the top the type of x is reported as Any. The macro capitalizes any type that is not inferred as a strict (concrete) type, that is, an abstract type that must be boxed and checked at each step. At the end we return x as UNION{FLOAT64,INT64}, which is another non-strict type. This tells us that the type changed, which causes the trouble. If we instead look at the @code_warntype output for h, we get all strict types:

    @code_warntype h()

    Variables:
      #self#::#h
      x::Float64
      #temp#::Int64
      i::Int64

    Body:
      begin
          x::Float64 = 1.0 # line 3:
          SSAValue(2) = (Base.select_value)((Base.sle_int)(1,10)::Bool,10,(Base.box)(Int64,(Base.sub_int)(1,1)))::Int64
          #temp#::Int64 = 1
          5:
          unless (Base.box)(Base.Bool,(Base.not_int)((#temp#::Int64 === (Base.box)(Int64,(Base.add_int)(SSAValue(2),1)))::Bool)) goto 15
          SSAValue(3) = #temp#::Int64
          SSAValue(4) = (Base.box)(Int64,(Base.add_int)(#temp#::Int64,1))
          i::Int64 = SSAValue(3)
          #temp#::Int64 = SSAValue(4) # line 4:
          x::Float64 = (Base.box)(Base.Float64,(Base.div_float)(x::Float64,(Base.box)(Float64,(Base.sitofp)(Float64,2))))
          13:
          goto 5
          15: # line 6:
          return x::Float64
      end::Float64

This indicates that the function is type-stable and will be compiled to essentially optimal C-like code. Thus, type instability is not difficult to find. What is harder is finding the right design. Why allow type instability at all? This is a long-standing question, and it is the reason dynamically typed languages dominate the field of scripting. The idea is that in many cases you want to trade off between performance and robustness.

For example, you can read a table from a web page in which integers are mixed with floating-point numbers. In Julia, you can write your function so that if they were all integers, it would compile well, and if they were all floating-point numbers, it would also compile well. And if they are mixed? It will still work. This is the flexibility and convenience that we know and love from languages like Python / R. But Julia will tell you directly (via @code_warntype) when you are sacrificing performance.

How to cope with type instability

There are several ways to cope with type instability. First of all, if you like something like C / Fortran , where your types are declared and cannot change (which ensures the stability of types), you can do it in Julia:

    local a::Int64 = 5

This makes a a 64-bit integer, and if later code tries to change its type, an error is thrown (or a proper conversion is performed; but since the conversion does not round automatically, it will most likely throw an error). Sprinkle these declarations around your code and you get type stability à la C / Fortran. A less heavy-handed way to handle this is with type assertions. Here you put the same syntax on the other side of the equals sign. For example:

    a = (b/c)::Float64

This says: "calculate b / c and make sure the result is a Float64; if it is not, throw an error". Unlike a declaration on the left-hand side, an assertion on a value does not attempt a conversion. Placing such assertions helps you make sure that you know which types are involved. However, in some cases type instability is necessary. For example, say you want robust code, but the user gives you something crazy like:

    arr = Vector{Union{Int64,Float64}}(undef, 4)
    arr[1]=4
    arr[2]=2.0
    arr[3]=3.2
    arr[4]=1

which is a 4x1 array of integers and floating-point numbers. The actual element type of the array is Union{Int64,Float64}, which, as we saw earlier, is a non-strict type and can lead to problems. The compiler only knows that each value is either an integer or a floating-point number, but not which element has which type. This means that naively performing arithmetic on this array, for example:

    function foo(array::Array{T,N}) where {T,N}
      for i in eachindex(array)
        val = array[i]
        # do algorithm X on val
      end
    end

will be slow, since the operations will be boxed. However, we can use multiple dispatch to run the code in a type-specialized way. This is known as using function barriers. For example:

    function inner_foo(val::T) where {T<:Number}
      # Do algorithm X on val
    end

    function foo2(array::Array{T,N}) where {T,N}
      for i in eachindex(array)
        inner_foo(array[i])
      end
    end

Note that due to multiple dispatch, the call to inner_foo calls either a method compiled specifically for floating-point numbers or a method compiled specifically for integers. Thus, you can put a long calculation in inner_foo and still have it perform well, thanks to the strict typing that the function barrier gives you.
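A runnable sketch of the function-barrier idea, written with the `where` syntax of Julia 1.x. The function names and the arithmetic standing in for "algorithm X" (squaring and adding one) are placeholders chosen for this example, not part of the original post:

```julia
# Stand-in for "algorithm X"; compiled separately for each concrete T.
algorithm_x(val::T) where {T<:Number} = val * val + one(T)

# The loop only knows that elements are Union{Int64,Float64}; each call to
# algorithm_x dispatches to the specialization for the element's actual type.
function sum_algorithm_x(array::AbstractArray)
    total = 0.0
    for i in eachindex(array)
        total += algorithm_x(array[i])
    end
    return total
end

arr = Union{Int64,Float64}[4, 2.0, 3.2, 1]
println(typeof(algorithm_x(arr[1])), " ", typeof(algorithm_x(arr[2])))
println(sum_algorithm_x(arr))
```

The heavy work lives behind the barrier, where the argument type is concrete, while the outer loop stays short and generic.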

So I hope you can see that Julia offers a good combination of the performance of strong typing and the convenience of dynamic typing. A good Julia programmer has both at their disposal, in order to maximize productivity and / or performance as needed.
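The declaration and assertion forms from this section can be tried out with the following sketch (function names are hypothetical). It relies on two behaviors of current Julia: assigning to a variable declared with a type converts the value, failing with an InexactError when the conversion is not exact, while a type assertion on a value only checks the type and raises a TypeError on mismatch:

```julia
function declared_example()
    local a::Int64 = 5
    a = 6.0          # converted to the Int64 value 6
    return a
end

function inexact_example()
    local a::Int64 = 5
    a = 6.5          # cannot be represented as an Int64: throws InexactError
    return a
end

# A type assertion on a value checks the type; it does not convert.
asserted_ratio(b, c) = (b / c)::Float64
asserted_rational(b, c) = (b // c)::Float64   # b // c is a Rational, so this throws

println(declared_example())
println(asserted_ratio(1, 2))
```

Calling `inexact_example()` or `asserted_rational(1, 2)` raises the errors noted in the comments.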

Gotcha 3: eval works globally

One of Julia's greatest strengths is its metaprogramming capabilities. They let you easily write a program that generates code, effectively reducing the amount of code you need to write and maintain. A macro is a function that runs at compile time and (usually) spits out code. For example:

    macro defa()
      :(a=5)
    end

will replace any instance of @defa with the code a = 5 (:(a = 5) is a quoted expression. Julia code is made of expressions, and therefore metaprogramming is the assembling of expressions).

You can use this to build any complex Julia program you want and put it into a function as a kind of really clever shorthand. However, sometimes you may need to evaluate the generated code directly. Julia gives you the eval function or the @eval macro for this. In general, you should try to avoid eval, but there are some cases where it is necessary, for example my new library for transferring data between different processes for parallel programming. However, note that if you use it:
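A small sketch of a macro like the one above in current Julia. One detail is added here as an assumption about what the reader wants: because of macro hygiene, a variable assigned inside the returned expression is renamed unless the expression is wrapped in `esc`, so the sketch escapes it to make the assignment reach the caller's `a`. The function name `use_defa` is hypothetical:

```julia
macro defa()
    return esc(:(a = 5))   # esc: assign to the caller's `a`, not a renamed variable
end

function use_defa()
    @defa                  # expands to `a = 5` when the function is defined
    return 2a + 5
end

println(use_defa())
println(@macroexpand @defa)
```

The expansion happens once, when `use_defa` is defined, so at run time the function body is ordinary local code.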

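    @eval a = 5

the code is evaluated in the global scope (the REPL). Thus, all of the associated problems and workarounds apply. For example:

    function testeval()
      @eval a = 5
      return 2a+5
    end

will not give good compiled code, because a was essentially declared at the REPL. To work around this, we can use the same tools as for fixing type instability:

    function testeval()
      @eval a = 5
      b = a::Int64
      return 2b+5
    end

Here b is a local variable, and the compiler can infer that its type does not change, so we have type stability and good performance. So dealing with eval is not hard; you just have to remember that it works at the REPL.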
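The global effect of eval can be observed with a short sketch (the names `define_with_eval` and `evaluated_value` are invented for the example). The assignment is made from inside a function, yet the variable ends up in the enclosing module:

```julia
function define_with_eval()
    @eval evaluated_value = 5    # evaluated in the module's global scope
    return nothing
end

define_with_eval()

# The variable now exists as a global of the current module.
println(isdefined(@__MODULE__, :evaluated_value))
println(evaluated_value)
```

Nothing was returned from the function, but the module gained a new global binding.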

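Gotcha 4: how expressions break up

In Julia there are many cases where an expression continues if it is not finished. For this reason line-continuation operators are not needed: Julia simply reads until the expression is complete.

A simple rule, right? Just make sure you remember where an expression ends. For example:

    a = 2 + 3 + 4 + 5 + 6 + 7
       +8 + 9 + 10+ 11+ 12+ 13

It looks like a should be 90, but it is 27. Why? Because a = 2 + 3 + 4 + 5 + 6 + 7 is a complete expression, so a = 27, and then +8 + 9 + 10+ 11+ 12+ 13 is evaluated as a separate expression whose result is thrown away. To continue the line, the first line must end in a way that leaves the expression unfinished:

    a = 2 + 3 + 4 + 5 + 6 + 7 +
        8 + 9 + 10+ 11+ 12+ 13

This gives 90, as intended, because the trailing + leaves the first line incomplete.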
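The rule can be checked directly with the sketch below (variable names are made up). Besides the trailing operator, wrapping the whole expression in parentheses also keeps the parser reading across the line break:

```julia
# The first line is already a complete expression, so the second line
# is parsed as a separate expression whose value is discarded.
a_short = 2 + 3 + 4 + 5 + 6 + 7
          +8 + 9 + 10 + 11 + 12 + 13

# A trailing operator leaves the expression unfinished, so parsing continues.
a_trailing = 2 + 3 + 4 + 5 + 6 + 7 +
             8 + 9 + 10 + 11 + 12 + 13

# Inside parentheses the expression cannot end at the line break either.
a_parens = (2 + 3 + 4 + 5 + 6 + 7
            + 8 + 9 + 10 + 11 + 12 + 13)

println(a_short, " ", a_trailing, " ", a_parens)
```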

. — , . rssdev10

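A trickier case involves array definitions. For example:

    x = rand(2,2)
    a = [cos.(2*pi.*x[:,1]).*cos.(2*pi.*x[:,2])./(4*pi) -sin.(2 .*x[:,1]).*sin.(2 .*x[:,2])./(4)]
    b = [cos.(2*pi.*x[:,1]).*cos.(2*pi.*x[:,2])./(4*pi) - sin.(2 .*x[:,1]).*sin.(2 .*x[:,2])./(4)]

At first glance a and b look the same, but they are not! The first is a (2,2) matrix, while the second is a one-dimensional vector. To see what is going on, let's look at a simpler example:

    a = [1 -2]
    b = [1 - 2]

In the first case there are two numbers: 1 and -2. In the second there is a single expression: 1-2. This is because of the special, whitespace-sensitive syntax for array definitions. It is usually very convenient to write:

    a = [1 2 3 -4
         2 -3 1 4]

and get the 2x4 matrix you expect. However, this is the tradeoff that comes with it. The problem is easy to avoid: instead of concatenating with whitespace, use the hcat function:

    a = hcat(cos.(2*pi.*x[:,1]).*cos.(2*pi.*x[:,2])./(4*pi),-sin.(2 .*x[:,1]).*sin.(2 .*x[:,2])./(4))

Problem solved!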
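The whitespace rule inside array literals can be verified with a few one-liners (variable names are arbitrary):

```julia
two_columns = [1 -2]      # space before the minus, none after: two elements
one_value   = [1 - 2]     # spaces on both sides: one subtraction
also_one    = [1-2]       # no spaces: one subtraction
explicit    = hcat(1, -2) # unambiguous horizontal concatenation

println(size(two_columns))
println(one_value)
println(explicit == two_columns)
```

Using `hcat` (or consistent spacing around binary operators) removes the ambiguity for the reader as well as for the parser.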

Gotcha 5: views, copy, and deepcopy

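One of the ways Julia gets good performance is by working with "views". An array is actually a "view" onto a contiguous block of memory that stores the values. The "value" of an array is its pointer to that memory location (and its type information). This gives interesting (and useful) behavior. For example, if we run the following code:

    a = [3;4;5]
    b = a
    b[1] = 1

then at the end a is the array [1; 4; 5], that is, changing b changes a. The reason is that the value of a is a pointer to the memory location, so b = a sets b to the same memory location as a. Thus, when b changes a value, a changes too (since b and a point to the same memory). This is very useful, because it lets you keep the same array in many different forms. For example, we can have both the matrix form and the vector form of a matrix:

    a = rand(2,2) # Makes a random 2x2 matrix
    b = vec(a) # Makes a view to the 2x2 matrix which is a 1-dimensional array

Now b is a vector, but changing b still changes a, where b is indexed by reading down the columns. Note that in all of this no arrays were copied, so these operations are extremely cheap (meaning there is no reason to avoid them in performance-sensitive code). Now some details. Note that the slicing syntax creates a copy when it appears on the right-hand side. For example:

    c = a[1:2,1]

creates a new array and points c to it (thus, changing c does not change a). This behavior may be necessary, but note that copying arrays is an expensive operation that should be avoided whenever possible. Therefore, we would instead create more complicated views using:

    d = @view a[1:2,1]
    e = view(a,1:2,1)

d and e are the same thing, and changing either d or e changes a, because neither copies the array; each just creates a new variable, a vector that points to the first column of a. (Another function that creates views is reshape, which lets you change the shape of an array.) If the slicing syntax appears on the left-hand side, it acts as a view. For example:

    a[1:2,1] = [1;2]

changes a, because on the left-hand side a[1:2,1] is the same as view(a, 1:2,1), which points to the same memory as a. What if we need a copy? Then we can use the copy function:

    b = copy(a)

Now, since b is a copy of a and not a view, changing b does not change a. If b is already defined, there is a handy in-place copy!(b, a), which writes the values of a into b (this requires that b is already defined and has the right size). But now let's make a slightly more complicated array. For example, let's make a Vector{Vector}:

    a = [ [1, 2, 3], [4, 5], [6, 7, 8, 9] ]

Each element is itself a vector. What happens if we copy a?

    b = copy(a)
    b[1][1] = 10
    a

    3-element Array{Array{Int64,1},1}:
     [10, 2, 3]
     [4, 5]
     [6, 7, 8, 9]

As you can see, a[1][1] changed to 10! Why did this happen? copy copies the values of a. But the values of a are arrays, so b received copies of the pointers to the same inner arrays, and changing them through b changes them in a. To copy everything, use deepcopy:

    b = deepcopy(a)

deepcopy recursively copies the inner arrays as well, so changing b no longer changes a.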
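The aliasing, view, copy, and deepcopy behavior described in this section can be checked with one compact sketch (all variable names are chosen for the example):

```julia
a = [3, 4, 5]
b = a                         # same array under a second name
b[1] = 1                      # visible through a as well

m = [1.0 2.0; 3.0 4.0]
col_copy = m[1:2, 1]          # slicing on the right-hand side copies
col_view = @view m[1:2, 1]    # a view shares memory with m
col_copy[1] = -1.0            # m is unchanged
col_view[2] = 30.0            # m[2, 1] changes

nested  = [[1, 2, 3], [4, 5], [6, 7, 8, 9]]
shallow = copy(nested)        # new outer array, same inner arrays
deep    = deepcopy(nested)    # inner arrays are copied too
shallow[1][1] = 10            # also visible through nested
deep[2][1] = -4               # not visible through nested

println(a)
println(m)
println(nested)
```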

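Gotcha 6: temporary allocations, vectorization, and in-place functions

In MATLAB / Python / R you are told to use vectorization. In Julia you may have heard that "devectorized code is better". Vectorized code creates temporary allocations (that is, it makes intermediate arrays that are not needed, and, as noted above, array allocations are expensive and slow your code down). For this reason you want to fuse your vectorized operations and write them in place, in order to avoid allocations. What do I mean by in place? An in-place function (a mutating function) is a function that updates the value of a variable instead of returning a new value. If you are going to operate on an array repeatedly, this lets you keep using the same array instead of creating a new one at each iteration. For example, if you wrote:

    function f()
      x = [1;5;6]
      for i = 1:10
        x = x + inner(x)
      end
      return x
    end
    function inner(x)
      return 2x
    end

then every time inner is called, it creates a new array to return 2x in. Clearly, we do not need to keep creating new arrays. So instead we can have a cache array y that holds the output, like this:

    function f()
      x = [1;5;6]
      y = Vector{Int64}(undef, 3)
      for i = 1:10
        inner!(y,x)
        for i in 1:3
          x[i] = x[i] + y[i]
        end
        copy!(y,x)
      end
      return x
    end
    function inner!(y,x)
      for i=1:3
        y[i] = 2*x[i]
      end
      nothing
    end

Let's dig into what is happening here. inner!(y, x) does not return anything, but it changes y. Since y is an array, the value of y is a pointer to the actual array, and since the function changes the values of that array, inner!(y, x) changes y "silently". Functions like this are called in-place functions (or mutating functions). They are usually marked with a ! (by convention).

No array is allocated inside inner!(y, x). copy!(y, x) is an in-place function that writes the values of x into y, updating it. As you can see from the example, every operation only changes the values of existing arrays. Only two arrays are ever created: the initial x and the initial y. The first function created a new array every time x + inner(x) was evaluated, so at least 11 arrays were created in the first function. Since array allocations are expensive, the second function runs faster than the first.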
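A runnable comparison of the allocating and in-place styles described above, under the assumption of current Julia syntax. The names `double`, `double!`, `grow_allocating`, and `grow_inplace` are invented for this sketch:

```julia
# Allocating version: every call returns a freshly allocated array.
double(x) = 2x

# In-place version: writes into the preallocated buffer y and returns nothing.
function double!(y, x)
    for i in eachindex(x, y)
        y[i] = 2 * x[i]
    end
    return nothing
end

function grow_allocating()
    x = [1, 5, 6]
    for _ in 1:10
        x = x + double(x)      # new arrays are allocated on every iteration
    end
    return x
end

function grow_inplace()
    x = [1, 5, 6]
    y = similar(x)             # the only extra array, reused every iteration
    for _ in 1:10
        double!(y, x)
        for i in eachindex(x)
            x[i] += y[i]
        end
    end
    return x
end

println(grow_allocating() == grow_inplace())
```

Both versions compute the same values; measuring them with `@time` or `@allocated` should show the difference in allocations.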

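It is nice that we can get this speed, but the syntax became a little bloated once we had to write out the loops. This is where loop fusion comes in. Since Julia v0.5 you can use the . symbol to vectorize any function (this is also known as broadcasting, because it actually calls the broadcast function). While it is nice that f.(x) is the same as applying f to each value of x, what is even better is that the loops fuse. If you just applied f to x and created a new array, then x = x + f.(x) would make a copy. Instead, we can mark every operation as element-wise:

    x .= x .+ f.(x)

The .= performs element-wise assignment, so this essentially turns into the code

    for i = 1:length(x)
      x[i] = x[i] + f(x[i])
    end

which is exactly the allocation-free loop we wrote by hand. So our function can also be written as:

    function f()
      x = [1;5;6]
      for i = 1:10
        x .= x .+ inner.(x)
      end
      return x
    end
    function inner(x)
      return 2x
    end

We keep the concise vectorized syntax of MATLAB / R / Python, but this version does not create temporary arrays, so it is faster. This is how you can use the syntax of a scripting language and still get the speed of C / Fortran.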
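As a quick check that the fused broadcast and the hand-written loop do the same work, here is a sketch with hypothetical names (`twice`, `fused!`, `looped!`):

```julia
twice(v) = 2v

function fused!(x)
    x .= x .+ twice.(x)    # one fused loop, result written back into x
    return x
end

function looped!(x)
    for i in eachindex(x)
        x[i] = x[i] + twice(x[i])
    end
    return x
end

x1 = [1, 5, 6]
x2 = copy(x1)
println(fused!(x1) == looped!(x2))
```

Both functions update their argument in place and return the same array they were given.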

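Conclusion: learn the rules, then profit

To repeat: Julia has no compiler magic, just simple rules. Learn the rules well, and all of this will become second nature. I hope this helps you as you learn Julia. The first time you run into a gotcha like these, it can be a little hard to reason out. But once you understand it, it will not hurt again.

Once you really understand these rules, your code will compile down to essentially C / Fortran while being written in a concise high-level scripting language. Combine this with broadcast fusion and metaprogramming, and you get a huge amount of performance for the amount of code you write!

A question for you: which Julia gotchas did I miss? Leave a comment explaining the gotcha and how to handle it. Also, just for fun, what are your favorite gotchas from other languages? [Mine has to be the fact that in Javascript, inside a function, var x = 3 makes x local, while x = 3 makes x global. Automatic globals inside functions? That produced some insane bugs that make me not want to use Javascript ever again!]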

Source: https://habr.com/ru/post/443484/