Writing a high-performance HTTP client, using fasthttp as an example. Alexander Valyalkin (VertaMedia)

The fasthttp library is a faster alternative to net/http from the Go standard library.

How is it built? Why is it so fast?

Here is a transcript of Alexander Valyalkin's talk "Fasthttp client internals".

The patterns used in fasthttp can also speed up your own applications and code.

If you are interested, read on.

I am Alexander Valyalkin. I work at VertaMedia. I developed fasthttp for our needs. It includes both an HTTP client and an HTTP server implementation. Fasthttp is much faster than net/http from the Go standard library.

Fasthttp is a fast implementation of an HTTP server and client. The code lives on github.com, in the valyala/fasthttp repository.

I think many of you have heard that the fasthttp server is very fast, but few have heard of the fasthttp client. The fasthttp server takes part in the TechEmpower benchmark, an HTTP server benchmark that is well known in narrow circles. It takes part in rounds 12 and 13. Round 13 has not been published yet (as of 2016 - Ed.).

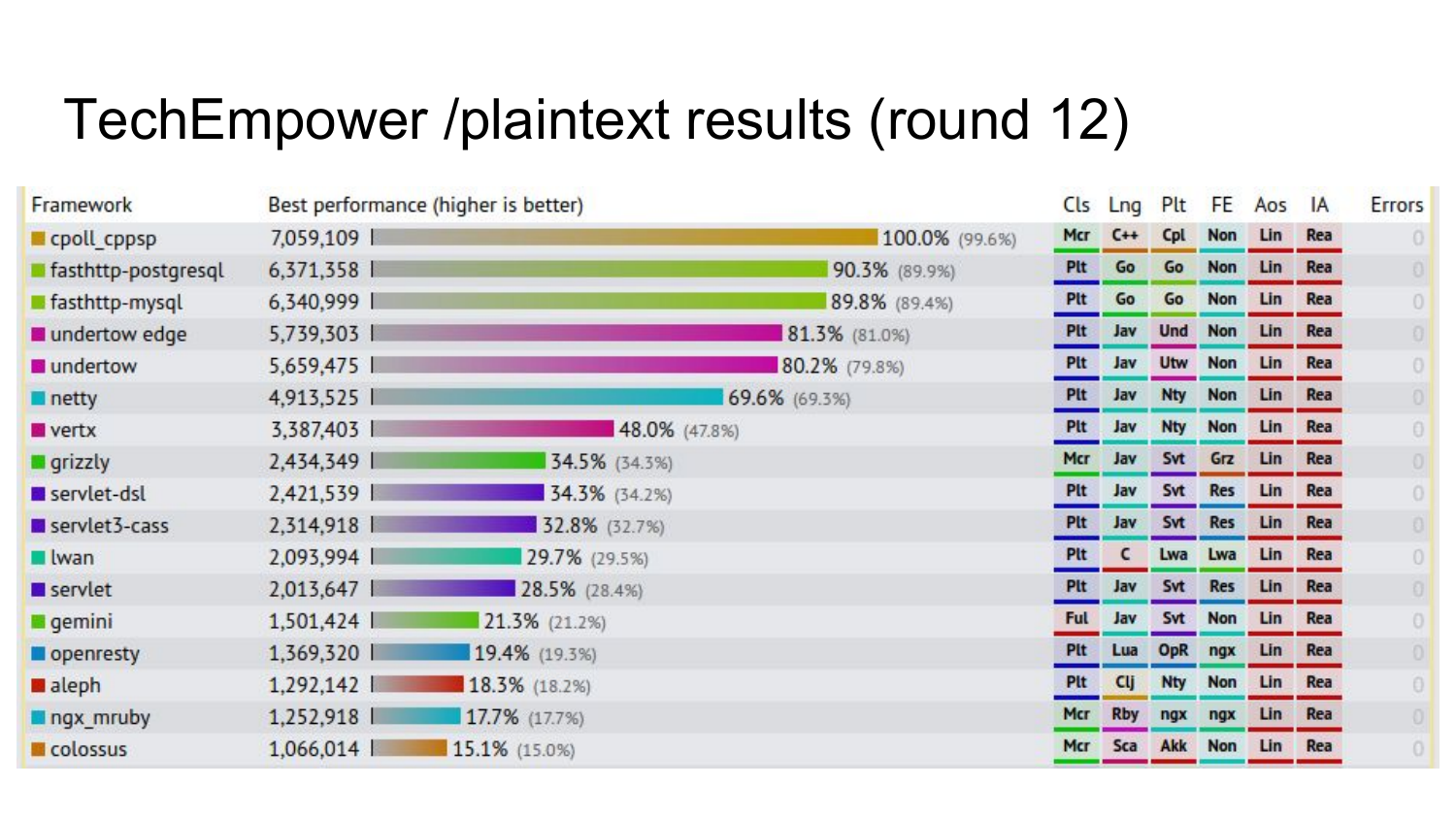

These are the results of one of the round 12 tests, where fasthttp is almost at the top. The numbers show how many requests per second each server handles in this test. The test requests a page that returns "hello world". Fasthttp is very fast at hello world.

These are preliminary results of the next round, which has not been published yet (as of 2016 - Ed.). Four fasthttp implementations take the top places in a benchmark that does more than return hello world: it also queries a database and renders an HTML page from a template.

Few people know about the fasthttp client, but it is good too. In this talk I will describe the internals of the fasthttp client and explain why it was developed.

There are actually several clients in fasthttp: Client, HostClient and PipelineClient. I will cover each of them in more detail.

fasthttp.Client is a typical general-purpose HTTP client. You can use it to send requests to any site on the Internet and receive responses. Its features: it is fast and, unlike the net/http package, it lets you limit the number of open connections per host. The documentation is at https://godoc.org/github.com/valyala/fasthttp#Client .

fasthttp.HostClient is a specialized client for talking to a single server. It is usually used to access HTTP APIs: REST API, JSON API. It can also be used to proxy traffic from the Internet to several servers in an internal data center. The documentation is here: https://godoc.org/github.com/valyala/fasthttp#HostClient .

Like fasthttp.Client, fasthttp.HostClient lets you limit the number of open connections to each backend server. net/http lacks this feature, and so does free nginx. As far as I know, only paid nginx has it.

fasthttp.PipelineClient is a specialized client that sends pipelined requests to one server or to a limited number of servers. It can be used to access an API over HTTP when you need to perform many requests as quickly as possible. Its limitation is that it may suffer from head-of-line blocking. We send many requests to the server without waiting for the response to each one. If the server stalls on one of them, all requests sent after it wait until the server finishes processing the slow one. Use fasthttp.PipelineClient only if you are sure that the server responds to your requests almost instantly. Documentation

Now I will talk about the internal implementation of each of these clients. I will start with fasthttp.HostClient, because almost all the other clients are built on it.

This is the simplest HTTP client implementation, in Go pseudocode. The Get function takes a URL and returns the HTTP response. We connect to the host from the URL and get a connection. All error checks are omitted to keep the code short. You must not do that in real code: always check for errors. We create the connection, schedule closing it with defer, send the request for the URL over the connection, receive the response and return it. What is wrong with this HTTP client implementation?

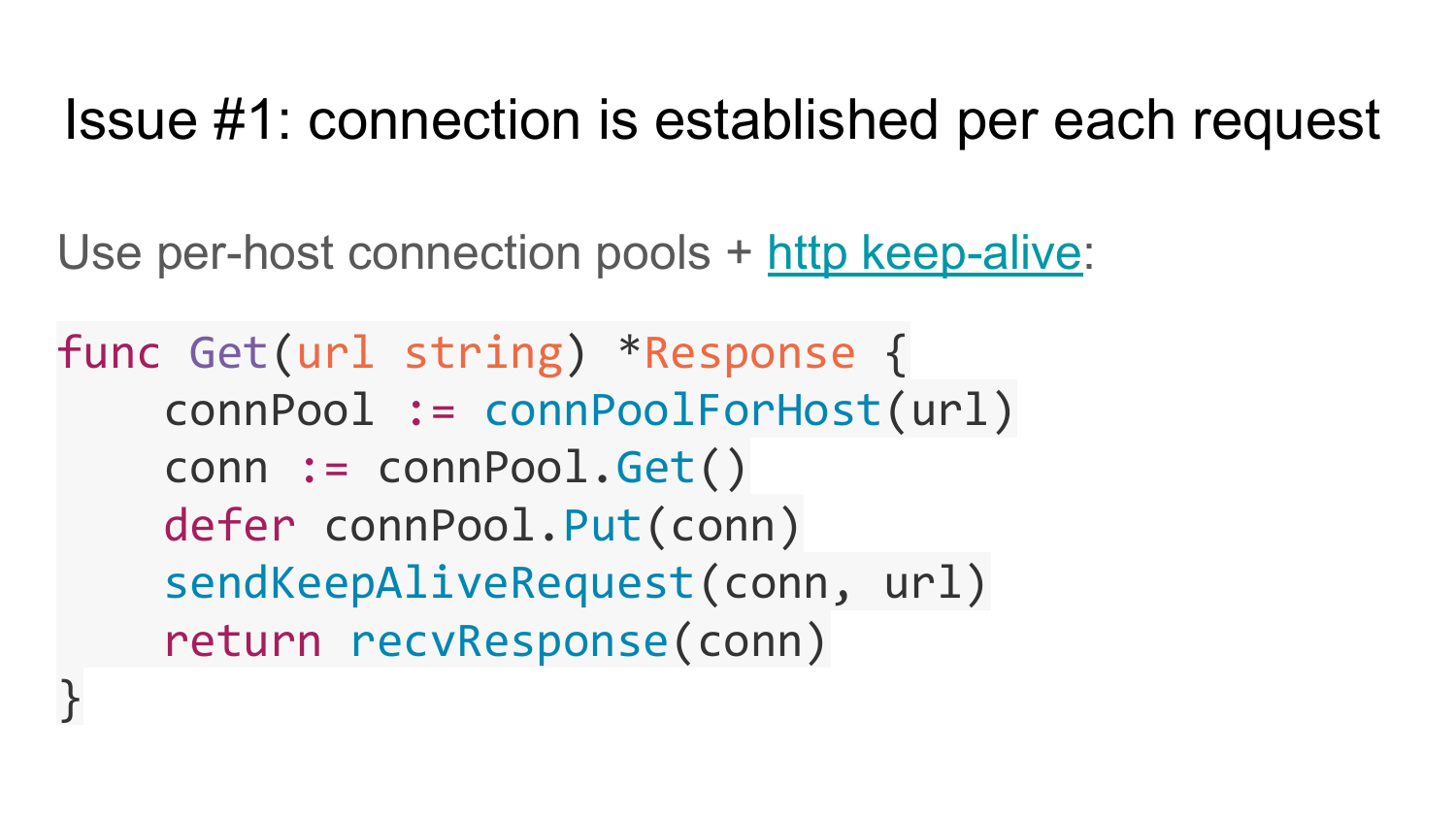

The first problem is that this implementation establishes a new connection for every request. It does not support HTTP keep-alive. How do we solve this? Use a connection pool per server. A single pool for all servers does not work, because a pooled connection may lead to a different server than the one the next request is for. Each server needs its own connection pool. We also need to use HTTP keep-alive, which means not sending Connection: close in the request headers. In HTTP/1.1 keep-alive is the default, so it is enough to drop Connection: close from the headers.

Here is the client pseudocode with connection pool support. There is a set of connection pools, one per host. The first function, connPoolForHost, returns the connection pool for the host in the given URL. Then we take a connection from this pool, use defer to schedule its return to the pool, send a keep-alive request over the connection and return the response. After the response is returned, the deferred call runs and the connection goes back to the pool. This enables HTTP keep-alive and everything gets faster, because we no longer spend time establishing a connection for every request.
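
The per-host lookup can be sketched roughly as follows. This is a minimal illustration, not the fasthttp code: the connPool type here is an empty placeholder, and the package-level map and mutex are assumptions made for the example.

```go
package main

import "sync"

// connPool is a hypothetical per-host pool; the connections themselves
// are left out of this sketch.
type connPool struct {
	host string
}

var (
	poolsMu sync.Mutex
	pools   = make(map[string]*connPool)
)

// connPoolForHost returns the pool dedicated to host, creating it on first use.
// Every host gets its own pool, so a pooled connection always leads to the
// server the request is meant for.
func connPoolForHost(host string) *connPool {
	poolsMu.Lock()
	defer poolsMu.Unlock()
	p, ok := pools[host]
	if !ok {
		p = &connPool{host: host}
		pools[host] = p
	}
	return p
}
```

Calling connPoolForHost twice with the same host returns the same pool, while a different host gets a separate one.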

But this solution has problems too. The function signature shows that it returns a response object for every request. This means that every call has to allocate memory for this object, initialize it and return it. That hurts performance when you make a lot of Get calls.

Fasthttp solves this by passing a pointer to the response object as a parameter of the function. The calling code can then reuse the same response object many times. The slide shows an implementation of this idea: we pass a pointer to the response object into Get, and the function fills it in. The last line fills this object.

Here is how it might look in your code. A function receives a channel that delivers the URLs to poll. We loop over this channel. We create the response object once and reuse it in the loop: call Get, pass the pointer to the object, process the response. After processing, we reset the object to its initial state. This avoids memory allocations and speeds up the code.
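
A rough sketch of this reuse loop is shown below. The Response type, its Reset method and the get function are hypothetical stand-ins for illustration (get fabricates a body instead of doing network I/O); only the calling pattern matters.

```go
package main

// Response is a hypothetical reusable response object.
type Response struct {
	StatusCode int
	Body       []byte
}

// Reset returns the response to its initial state while keeping the
// already allocated Body buffer for the next request.
func (r *Response) Reset() {
	r.StatusCode = 0
	r.Body = r.Body[:0]
}

// get is a stand-in for a real HTTP call: it fills resp in place
// instead of allocating and returning a new object.
func get(url string, resp *Response) error {
	resp.StatusCode = 200
	resp.Body = append(resp.Body, "body of "...)
	resp.Body = append(resp.Body, url...)
	return nil
}

// pollURLs reads URLs from the channel and reuses one Response for all
// of them. It returns the total number of body bytes received.
func pollURLs(urls <-chan string) int {
	var resp Response // created once, outside the loop
	total := 0
	for u := range urls {
		if err := get(u, &resp); err != nil {
			continue
		}
		total += len(resp.Body) // process the response
		resp.Reset()            // make it ready for the next request
	}
	return total
}
```

After the first iterations the Body buffer has grown to its working size, so later requests are served without new allocations for the response.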

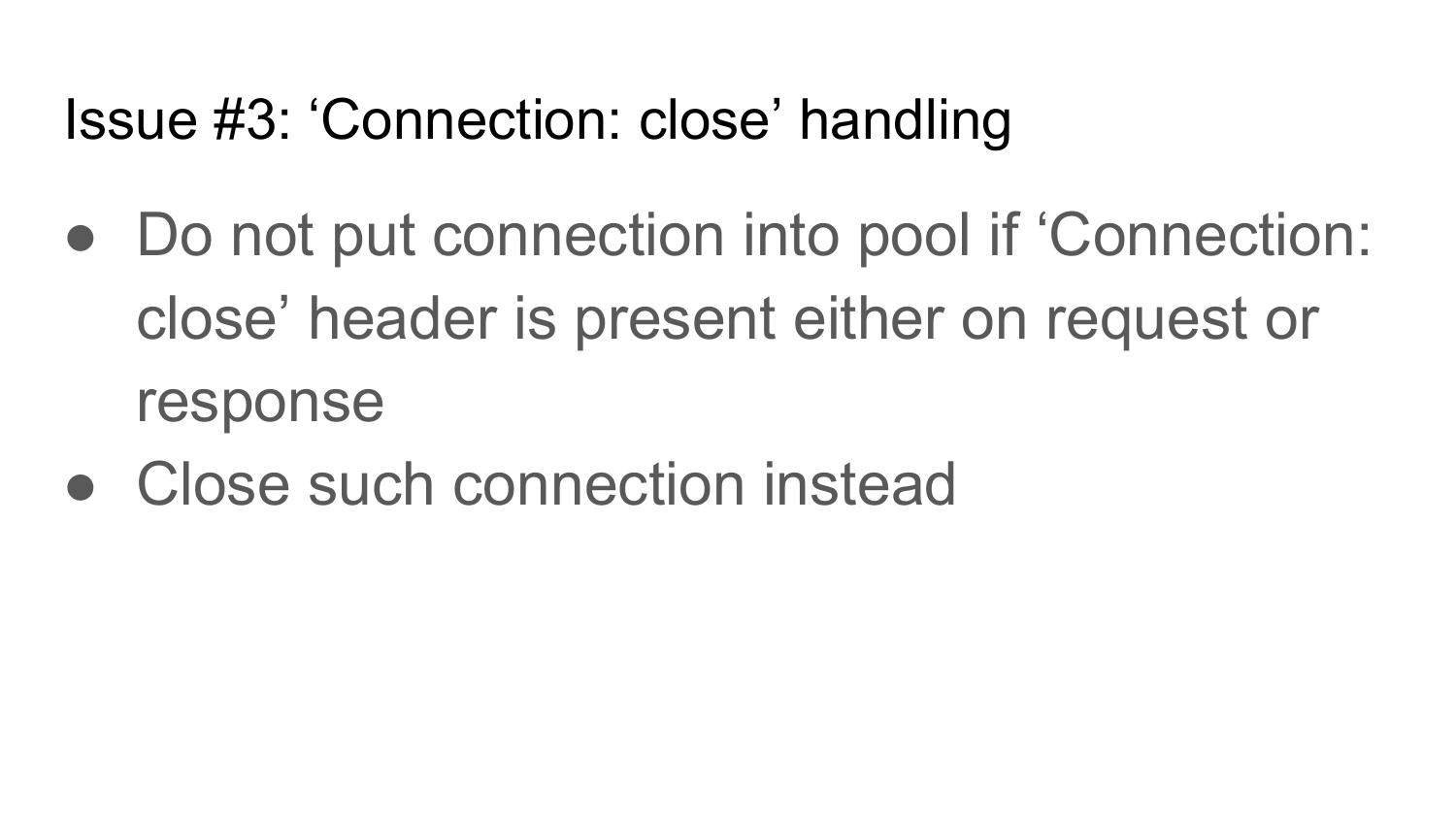

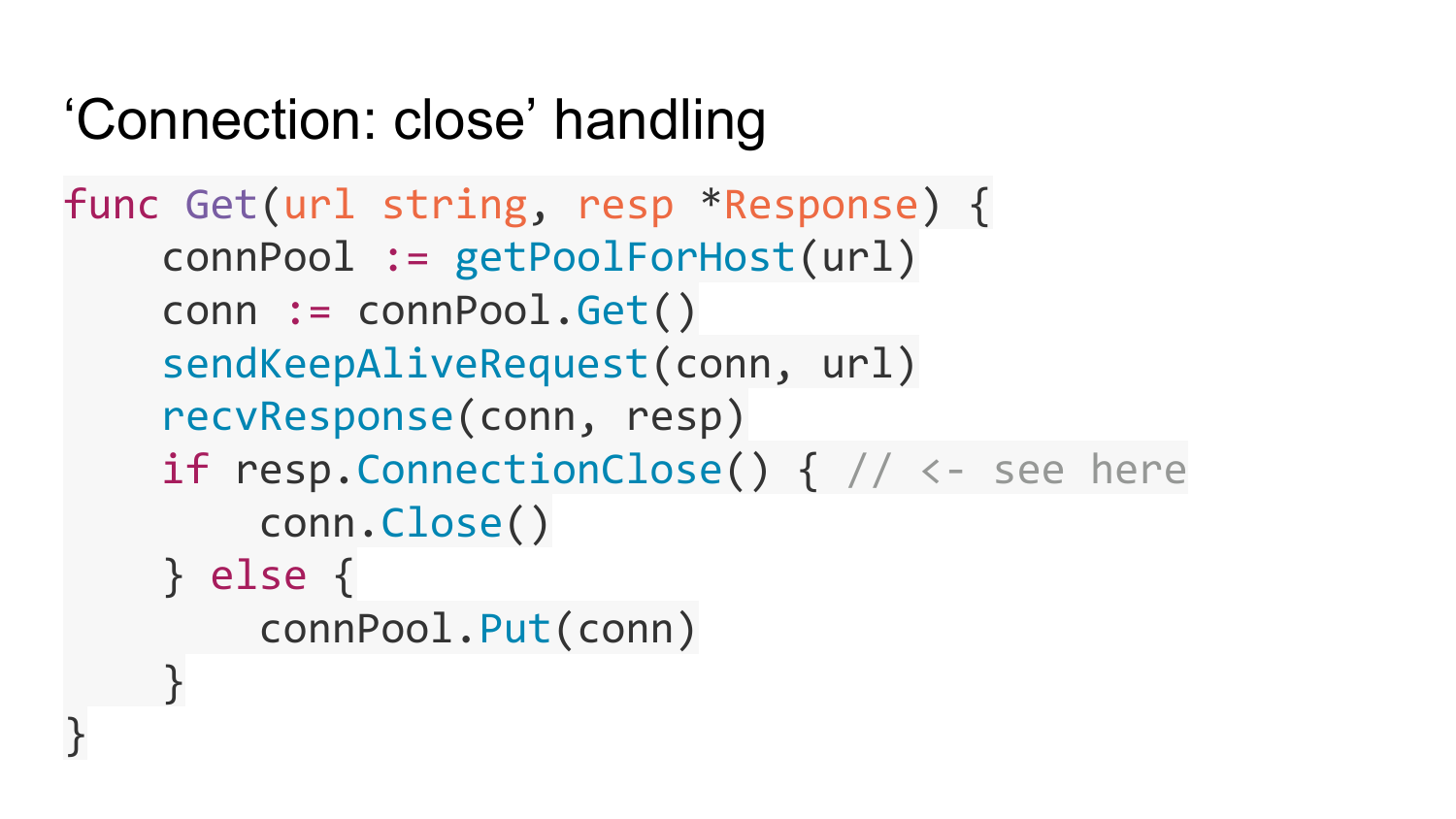

The third problem is Connection: close. Connection: close is an HTTP header that can appear in both the request and the response. When it is present, the connection must be closed after the response. So the client implementation has to handle it. If you sent a request with Connection: close, close the connection after receiving the response. If you sent a request without it but the response carries Connection: close, you also have to close the connection after receiving the response.

Here is the pseudocode. After receiving the response, we check whether the Connection: close header is set. If it is, we close the connection. If not, we return the connection to the pool. If you skip this check and the server closes the connection after sending the response, your pool will hold broken connections that the server has already closed, and writing to them will produce errors.

The fourth problem for HTTP clients is a slow server or a slow or broken network. Servers may stop responding for various reasons: the server broke, or the network between your client and the server went down. Then all your goroutines that call the Get function described above block, waiting for the server's response indefinitely. For example, if you implement an HTTP proxy that accepts incoming connections and calls Get for each of them, a large number of goroutines will be created and they will all hang until the proxy runs out of memory and crashes.
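
The check itself can be sketched like this. The function names are hypothetical, and the sketch works on raw Connection header values rather than on fasthttp's header types.

```go
package main

import "strings"

// wantsClose reports whether a Connection header value contains the
// "close" token. The header may carry several comma-separated tokens,
// and tokens are case-insensitive.
func wantsClose(connectionHeader string) bool {
	for _, token := range strings.Split(connectionHeader, ",") {
		if strings.EqualFold(strings.TrimSpace(token), "close") {
			return true
		}
	}
	return false
}

// mustCloseConn reports whether the connection has to be closed instead
// of being returned to the pool: either side may have asked for it.
func mustCloseConn(reqConnection, respConnection string) bool {
	return wantsClose(reqConnection) || wantsClose(respConnection)
}
```

The client would call mustCloseConn after reading the response and then either close the connection or put it back into the pool.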

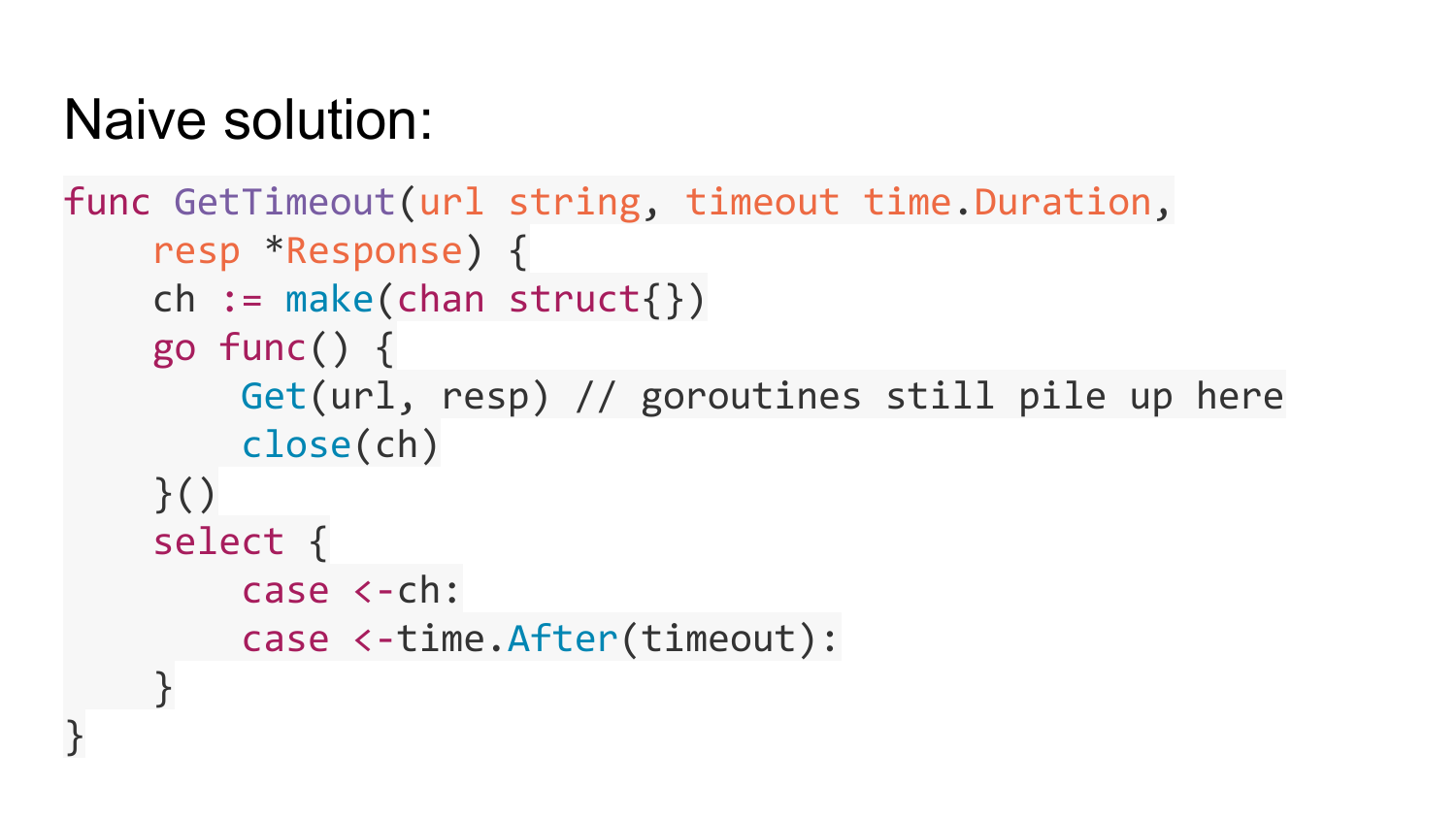

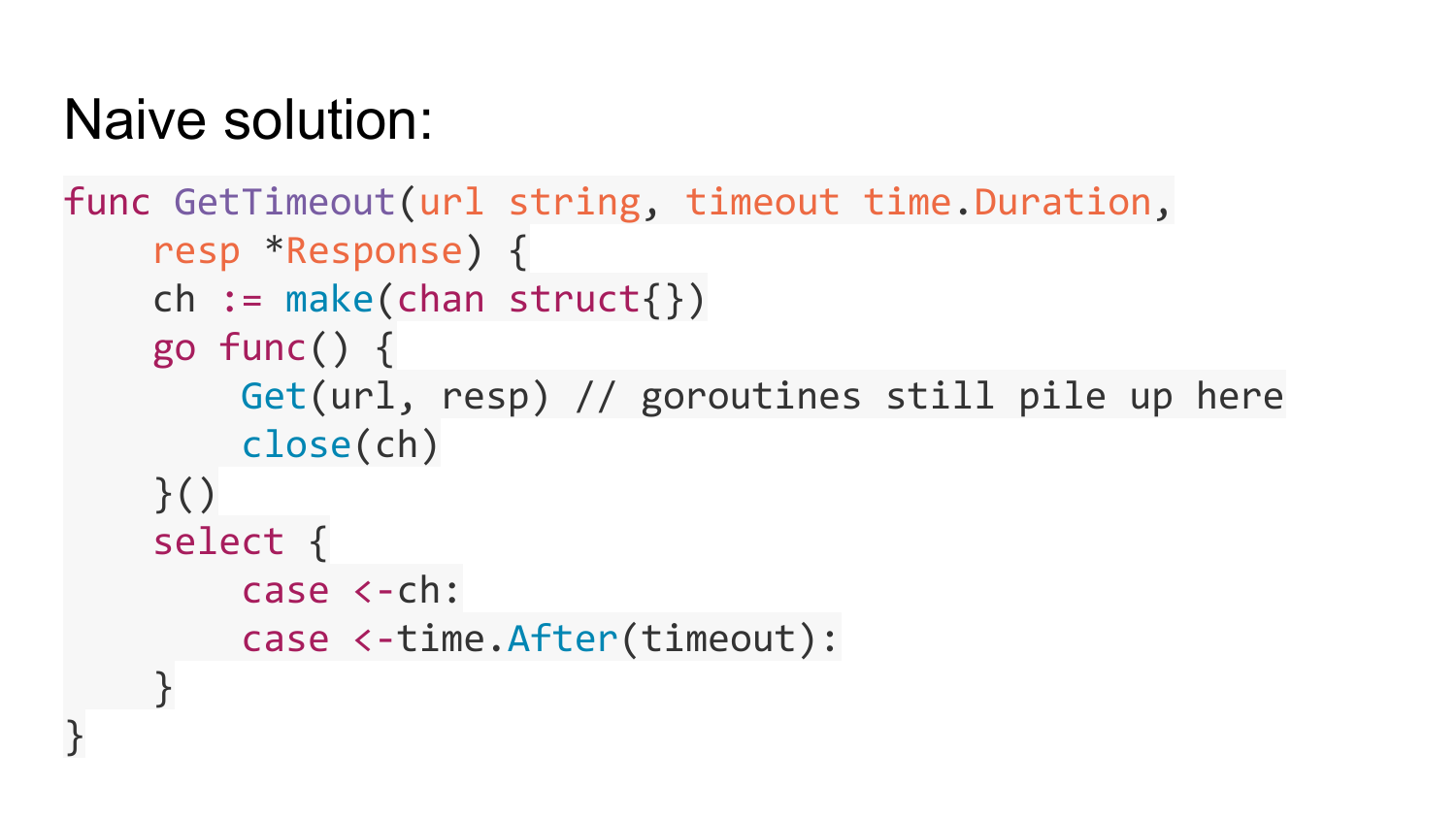

How do we solve this? The naive solution that first comes to mind is to run Get in a separate goroutine. We pass the goroutine an empty channel that it closes when Get returns. After starting the goroutine, we wait on this channel for some time (the timeout). If the time runs out before Get completes, the function returns on timeout. If Get completes, the channel is closed and the function returns. But this solution is wrong, because it just moves the problem from one place to another. Goroutines will still be created and will still hang regardless of the timeout. The number of goroutines that call Get with a timeout becomes bounded, but the number of goroutines created inside Get with a timeout remains unbounded.

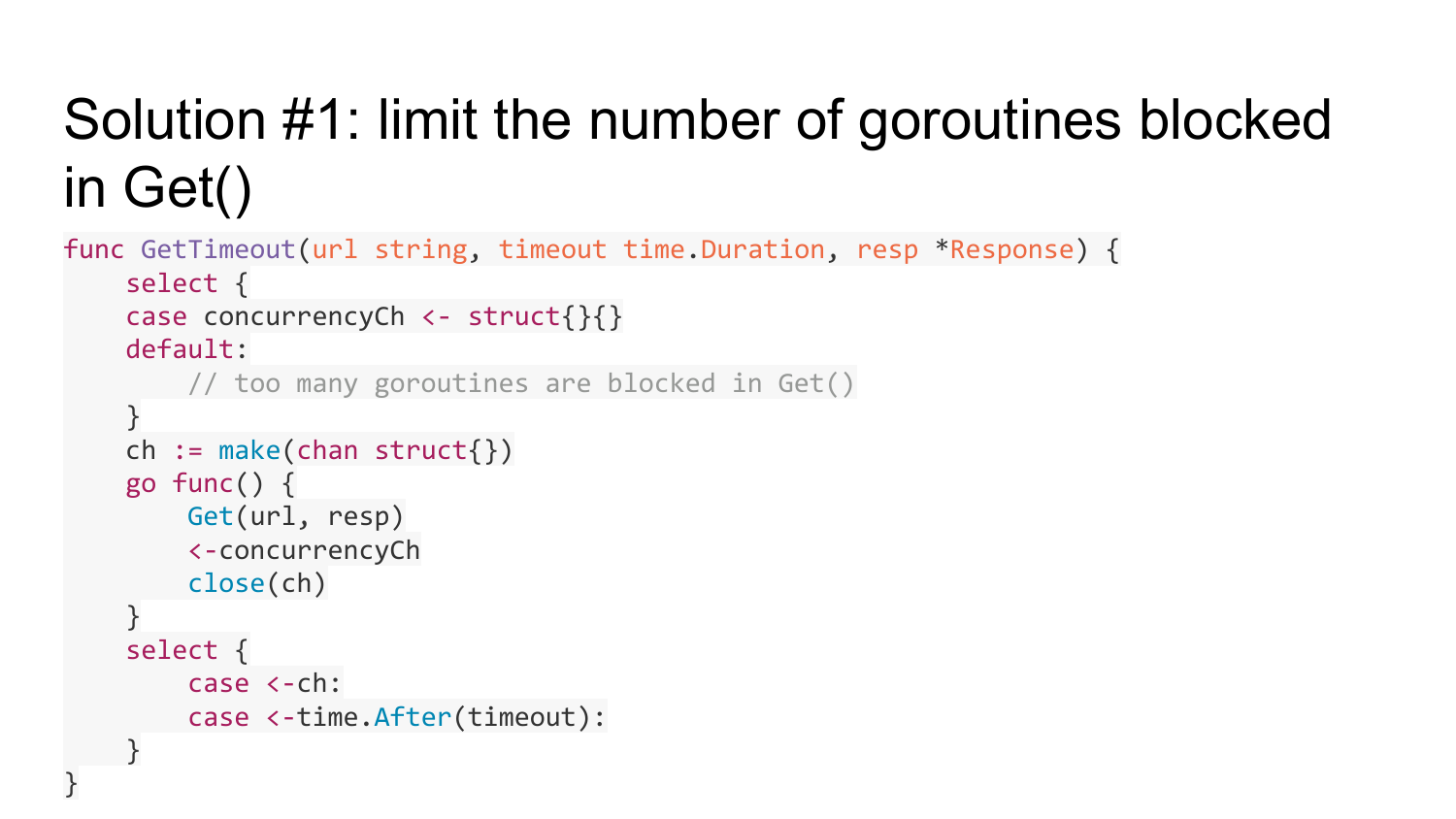

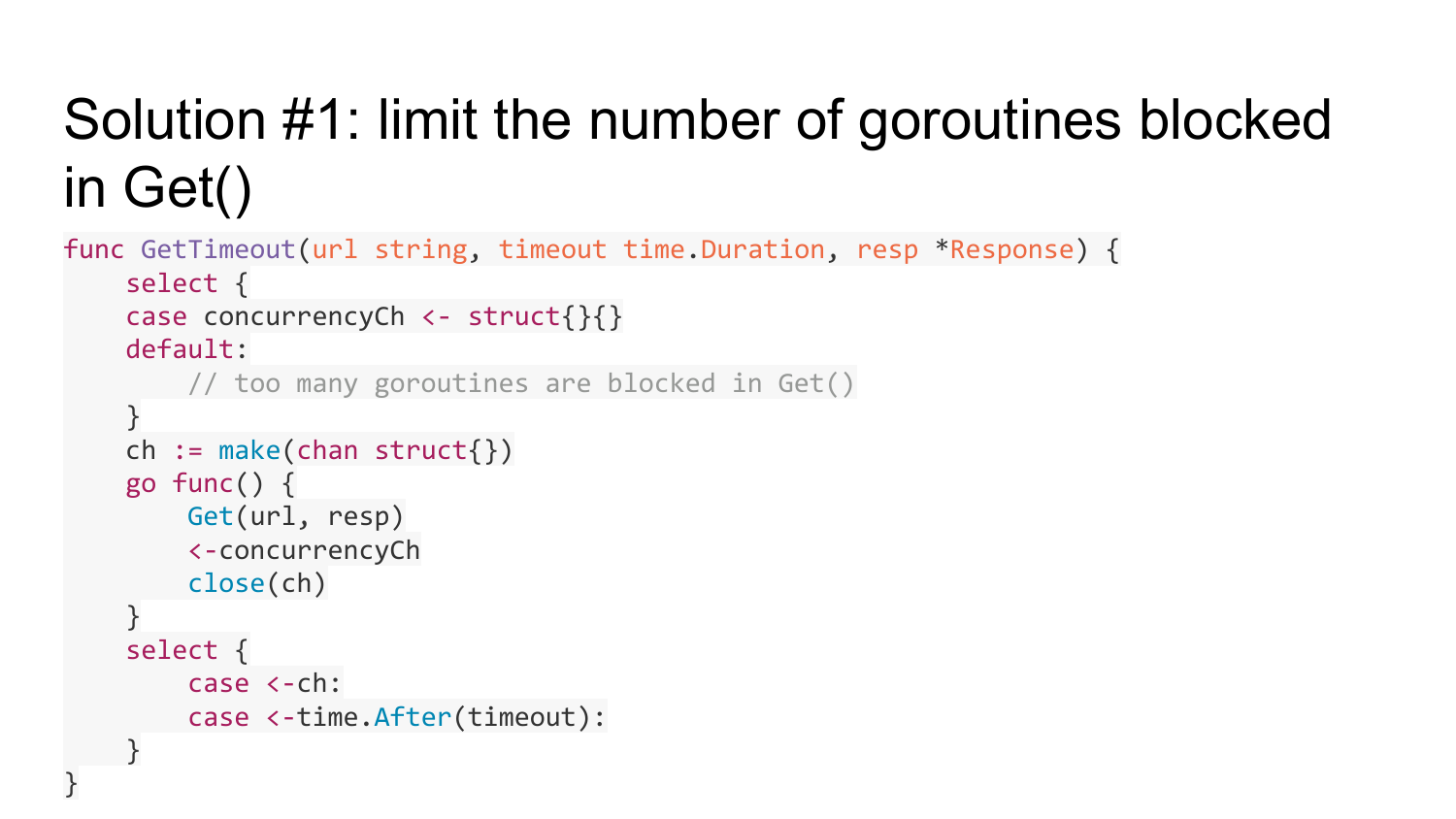

How do we solve this? The first solution is to limit the number of goroutines blocked in Get. This is done with a well-known pattern: a buffered channel of limited capacity that counts the goroutines currently executing Get. Before creating a goroutine, we try to write an empty struct to this channel. If the write fails, the limit (the channel capacity) has been reached and we fall into the default branch: all goroutines that run Get are busy, so we return an error saying there are no free resources. If the write succeeds, we create the goroutine, and after Get completes we read one value from the channel. This limits the number of goroutines that can be blocked in Get.
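
The buffered-channel pattern might look roughly like this. The limitedGetter type, its fields and the error values are names invented for this sketch, and get stands for any blocking request function.

```go
package main

import (
	"errors"
	"time"
)

var (
	errNoFreeSlots = errors.New("no free goroutine slots")
	errTimeout     = errors.New("timeout")
)

// limitedGetter bounds how many goroutines may be blocked inside get.
type limitedGetter struct {
	get   func(url string) error // hypothetical blocking Get
	slots chan struct{}          // buffered; its capacity is the limit
}

func newLimitedGetter(limit int, get func(string) error) *limitedGetter {
	return &limitedGetter{get: get, slots: make(chan struct{}, limit)}
}

func (g *limitedGetter) getTimeout(url string, timeout time.Duration) error {
	select {
	case g.slots <- struct{}{}: // reserve a slot before starting a goroutine
	default:
		return errNoFreeSlots // every slot is held by a goroutine still in get
	}
	done := make(chan error, 1) // buffered, so the goroutine never blocks on send
	go func() {
		done <- g.get(url)
		<-g.slots // release the slot only after get has really returned
	}()
	timer := time.NewTimer(timeout)
	defer timer.Stop()
	select {
	case err := <-done:
		return err
	case <-timer.C:
		return errTimeout
	}
}
```

Note that a timed-out call keeps its slot until the underlying get returns, which is exactly what keeps the number of stuck goroutines bounded.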

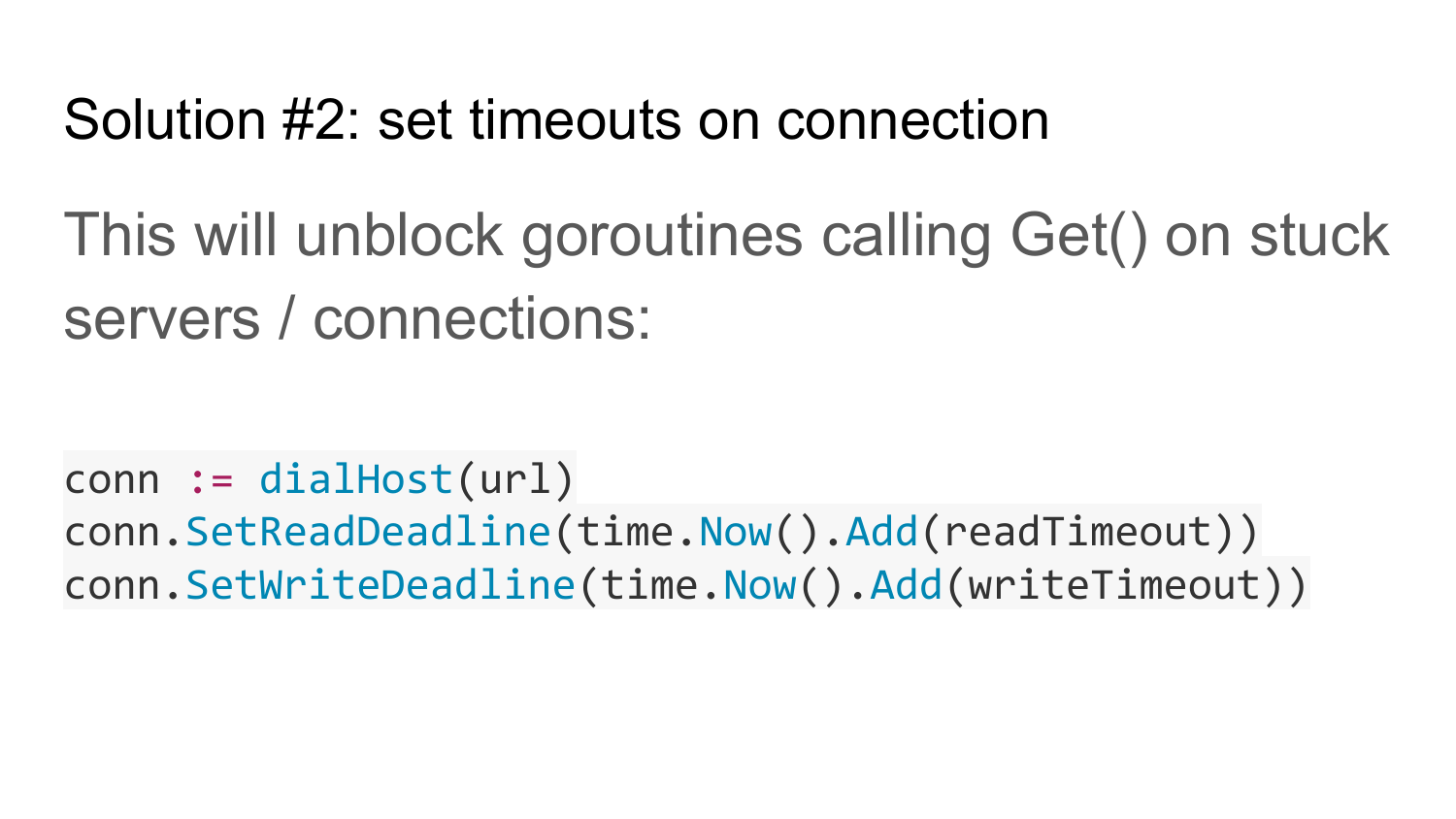

The second solution, which complements the first, is to set timeouts on the connection to the server. This unblocks Get if the server does not respond for a long time or the network is down.

If the network goes down with only Solution #1 in place, everything hangs. Once the concurrency limit is filled with stuck goroutines, the Get-with-timeout function always returns an error. To make it work again you need Solution #2, which sets timeouts on reads from and writes to the connection. This unblocks the stuck goroutines when the network or the server stops working.

Solution #1 has a data race. The response object whose pointer we passed stays in use while Get is blocked. But the Get-with-timeout function can return on timeout. In that case we leave the function while the goroutine still holds the response and will overwrite it at some later point. The caller believes it owns the response again, but it is still used inside the goroutine. That is a data race.

The problem is solved by creating a copy of the response and passing the copy to the goroutine. After Get completes, we copy the data from the copy into the original response passed by the caller. This removes the data race. The copy lives for a short time and is returned to a pool, so response objects are reused. The only case when the copy does not go back to the pool is a timeout: then that response object is lost to the pool.

Should we close the connection when the server has not returned a response within the timeout? The answer is no. Or rather yes, if you want to DoS the server. When you send a request, wait for a while and the server does not respond in time, it is not keeping up with requests. If you close the connection, the server does not stop processing the request immediately. It keeps working and discovers that the request was not needed only when it tries to send you the response. Meanwhile you have closed the connection, sent a new request, hit the timeout again, closed again, sent another request. The server load keeps growing. As a result, you DoS your own service with your own requests. This is a DoS at the HTTP request level. If you have slow servers and do not want to knock them down, do not close the connection after a timeout. Leave the connection to the server for a while and let it try to return the response. In the meantime, use other free connections.

Everything described so far covers the stages of implementing fasthttp.Client and the problems that came up along the way. These problems are solved in fasthttp.HostClient.
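
A small sketch of a read deadline on a connection, using only the standard net package. The helper names are made up for this example, and silentPeerTimesOut uses an in-memory net.Pipe as a stand-in for a server that never answers.

```go
package main

import (
	"errors"
	"net"
	"time"
)

// readWithDeadline reads from conn but gives up after timeout instead of
// blocking forever on a dead server or network.
func readWithDeadline(conn net.Conn, buf []byte, timeout time.Duration) (int, error) {
	if err := conn.SetReadDeadline(time.Now().Add(timeout)); err != nil {
		return 0, err
	}
	return conn.Read(buf)
}

// isTimeout reports whether err was caused by an expired deadline.
func isTimeout(err error) bool {
	var ne net.Error
	return errors.As(err, &ne) && ne.Timeout()
}

// silentPeerTimesOut tries the helper against an in-memory peer that
// never writes anything and reports whether the read ended by timeout.
func silentPeerTimesOut(timeout time.Duration) bool {
	client, server := net.Pipe()
	defer client.Close()
	defer server.Close()
	_, err := readWithDeadline(client, make([]byte, 1), timeout)
	return isTimeout(err)
}
```

Writes can be bounded the same way with SetWriteDeadline, so a goroutine stuck on either direction is released once the deadline passes.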
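
The copy-through-a-pool idea might be sketched as follows. Response, CopyTo, respPool and getTimeout are hypothetical names for this illustration, and the concurrency limit from Solution #1 is left out to keep the example short.

```go
package main

import (
	"errors"
	"sync"
	"time"
)

// Response is a hypothetical reusable response object.
type Response struct{ Body []byte }

func (r *Response) Reset() { r.Body = r.Body[:0] }

// CopyTo copies the response data into dst, reusing dst's buffer.
func (r *Response) CopyTo(dst *Response) { dst.Body = append(dst.Body[:0], r.Body...) }

var respPool = sync.Pool{New: func() interface{} { return new(Response) }}

var errTimeout = errors.New("timeout")

// getTimeout runs get on a private copy of the response, so the caller's
// resp is never touched by the goroutine after a timeout.
func getTimeout(get func(*Response) error, resp *Response, timeout time.Duration) error {
	tmp := respPool.Get().(*Response)
	done := make(chan error, 1)
	go func() { done <- get(tmp) }() // the goroutine sees only tmp
	timer := time.NewTimer(timeout)
	defer timer.Stop()
	select {
	case err := <-done:
		if err == nil {
			tmp.CopyTo(resp)
		}
		tmp.Reset()
		respPool.Put(tmp) // the copy goes back to the pool for reuse
		return err
	case <-timer.C:
		// tmp is still owned by the goroutine, so it is not returned
		// to the pool: this object is lost on timeout.
		return errTimeout
	}
}
```

On timeout the caller gets its response back untouched and may reuse it immediately, at the cost of one pooled object.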

Do we have a fast client now? Not quite. We need to look at how the connection pool is implemented.

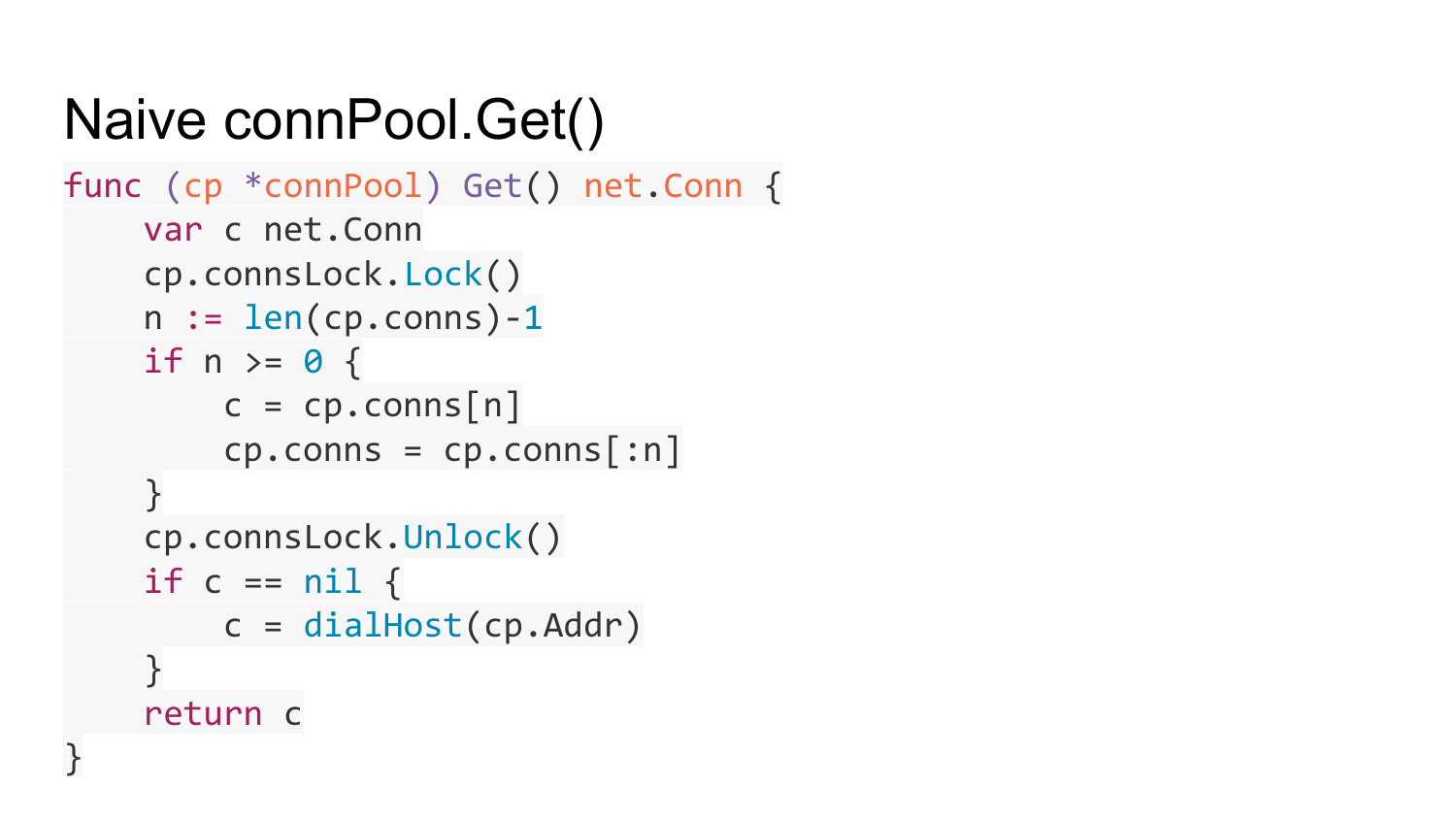

A naive connection pool looks like this. It holds the server address to connect to, a list of free connections, and a lock that synchronizes access to the list.

Here is the function that gets a connection from the pool. We look at the list of free connections. If it is not empty, we take a free connection and return it. If it is empty, we create a new connection to the server and return it. What is wrong here?

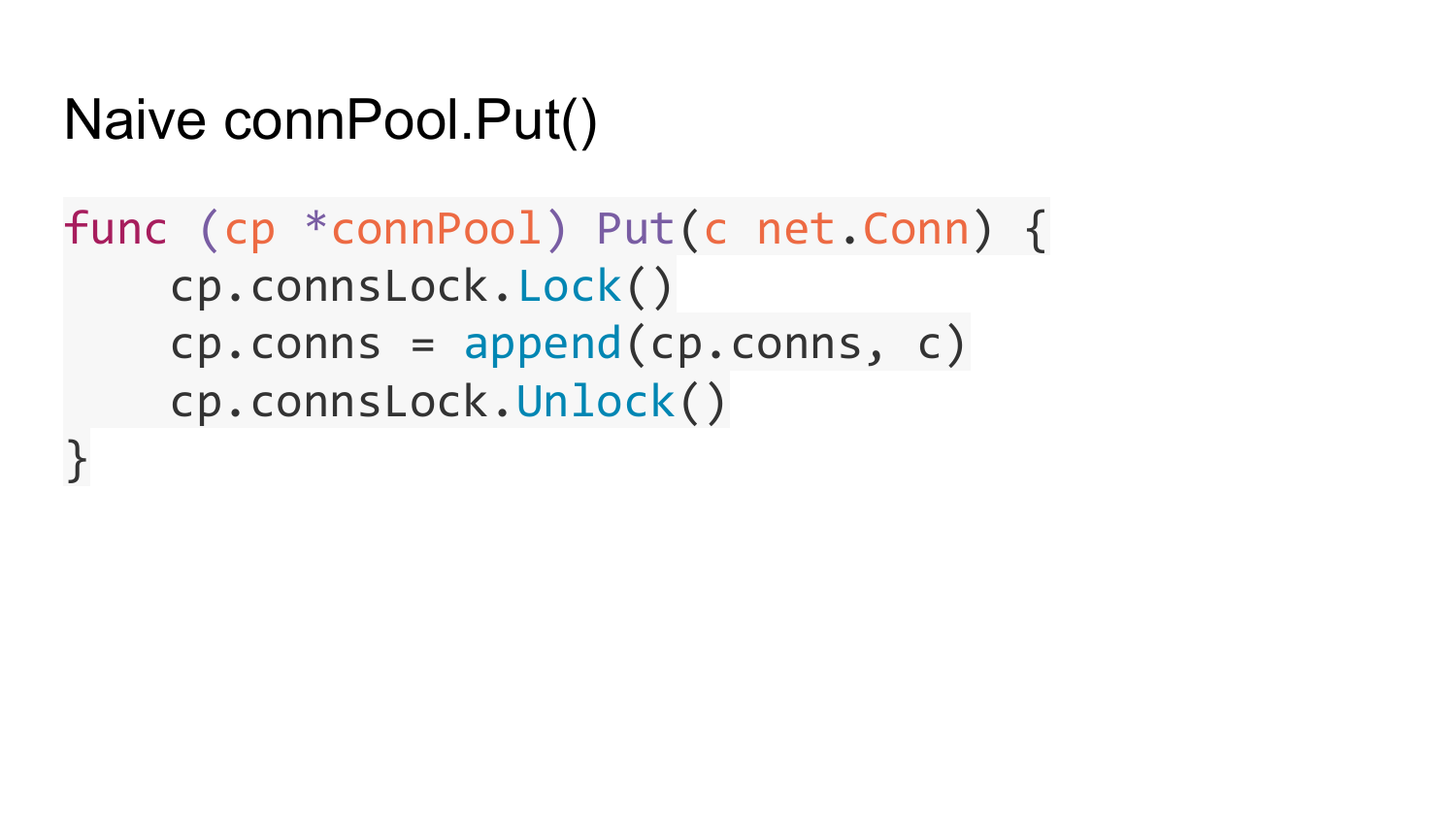

The connPool.Put function returns a free connection to the pool.
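
A naive pool along these lines could be sketched as below. The dial field and the pipeDial helper are assumptions for the example: a real pool would dial the server over TCP, while pipeDial returns an in-memory connection so the sketch can be tried without a network.

```go
package main

import (
	"net"
	"sync"
)

// connPool is the naive variant: an unbounded list of free connections
// to one server, protected by a lock.
type connPool struct {
	addr string
	dial func(addr string) (net.Conn, error) // creates a new connection

	mu    sync.Mutex
	conns []net.Conn // free connections
}

// Get returns a free connection if there is one, otherwise dials a new one.
func (p *connPool) Get() (net.Conn, error) {
	p.mu.Lock()
	if n := len(p.conns); n > 0 {
		c := p.conns[n-1]
		p.conns[n-1] = nil
		p.conns = p.conns[:n-1]
		p.mu.Unlock()
		return c, nil
	}
	p.mu.Unlock()
	return p.dial(p.addr) // dialing happens outside the lock
}

// Put returns a free connection to the pool.
func (p *connPool) Put(c net.Conn) {
	p.mu.Lock()
	p.conns = append(p.conns, c)
	p.mu.Unlock()
}

// pipeDial is an in-memory stand-in for a real dial function.
func pipeDial(addr string) (net.Conn, error) {
	c, _ := net.Pipe()
	return c, nil
}
```

Nothing here limits how many connections Get may create or how long free connections stay in the list.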

About timeouts. In fasthttp.Client you can set the maximum lifetime of an open idle connection. When it expires, idle connections are closed automatically and removed from the pool.

Over time, older connections fall out of use and are closed and removed from the pool automatically.

When a connection taken from the pool turns out to have been closed by the server and a write to it fails, a second attempt is made: the client gets a new connection and tries to send the request again. This happens only if the request is idempotent, that is, it can be executed many times without side effects on the server, such as a GET or HEAD request. The standard net/http added a check for closed connections only recently, and they made it smarter. When sending a new request over a connection from the pool, they check whether at least one byte has been written to that connection. If it has, an error is returned. If not, a new connection is taken from the pool.

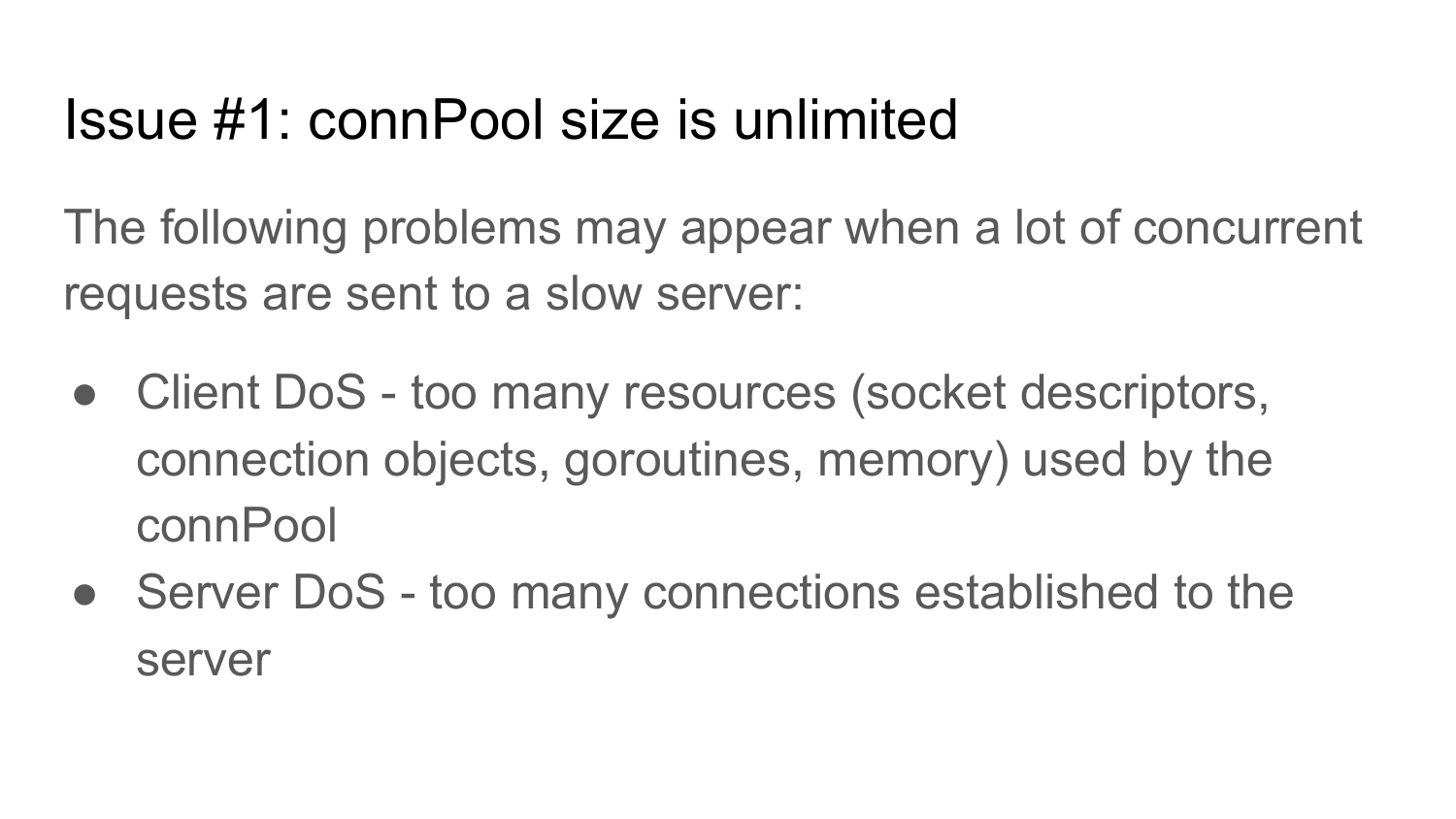

What is wrong with this pool? Its size is not limited. net/http has the same implementation. If you write a client that hits a slow server from a million goroutines, the client will try to open a million connections to that server. The standard net/http package has no limit on the maximum number of connections. For a client that accesses APIs over HTTP, it is advisable to limit the connection pool size. Otherwise your clients may go down because all resources are exhausted: threads, objects, connections, goroutines and memory. It can also DoS your servers, because they receive a huge number of connections that are either unused or used inefficiently, since a server cannot sustain that many connections.

So we limit the connection pool. There is no code here because it is too big to fit on one slide. Those interested can read the implementation on github.com.
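
A heavily reduced sketch of the idea, not the fasthttp implementation: the pool counts open connections and refuses to create more than maxConns. The conn type is a placeholder for a real network connection, and all names are hypothetical.

```go
package main

import (
	"errors"
	"sync"
)

var errNoFreeConns = errors.New("no free connections available to host")

// conn is a placeholder for a real network connection.
type conn struct{ id int }

// limitedPool never holds more than maxConns open connections.
type limitedPool struct {
	maxConns int

	mu    sync.Mutex
	idle  []*conn
	count int // open connections: idle plus in use
}

// Get reuses an idle connection, opens a new one while under the limit,
// and fails fast once the limit is reached.
func (p *limitedPool) Get() (*conn, error) {
	p.mu.Lock()
	defer p.mu.Unlock()
	if n := len(p.idle); n > 0 {
		c := p.idle[n-1]
		p.idle = p.idle[:n-1]
		return c, nil
	}
	if p.count >= p.maxConns {
		return nil, errNoFreeConns
	}
	p.count++
	return &conn{id: p.count}, nil // a real pool would dial here
}

// Put returns a healthy connection to the pool.
func (p *limitedPool) Put(c *conn) {
	p.mu.Lock()
	p.idle = append(p.idle, c)
	p.mu.Unlock()
}

// Discard is called instead of Put for a closed or broken connection,
// so that its slot becomes available again.
func (p *limitedPool) Discard(c *conn) {
	p.mu.Lock()
	p.count--
	p.mu.Unlock()
}
```

Returning an error at the limit, rather than blocking, lets the caller decide whether to retry, queue or drop the request.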

The second problem. At some moment the client gets a burst of requests, and then the load drops back to the previous level. For example, 10,000 requests arrive simultaneously, and then the rate returns to 1,000 per unit of time. The connection pool grows to 10,000 connections, and they stay there forever. The standard net/http client had this problem up to version 1.7. So we need to solve it.

It is solved by limiting the lifetime of an idle connection. If no request has been sent over a connection for some time, it is closed and removed from the pool. The implementation is not shown because it is too big.
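
The core of such a cleanup can be illustrated with a small function that filters the idle list by last-use time. This is only a sketch under assumed names (idleConn, dropExpired); a real pool would run it periodically under the pool lock and close the dropped connections.

```go
package main

import "time"

// idleConn is a hypothetical pool entry: a connection plus the time
// when the last request was sent over it.
type idleConn struct {
	id       int
	lastUsed time.Time
}

// dropExpired keeps the connections used within maxIdle before now and
// reports how many were dropped.
func dropExpired(idle []idleConn, now time.Time, maxIdle time.Duration) (kept []idleConn, closed int) {
	for _, c := range idle {
		if now.Sub(c.lastUsed) > maxIdle {
			closed++ // a real pool would close the connection here
			continue
		}
		kept = append(kept, c)
	}
	return kept, closed
}
```

With this in place, a pool that grew during a burst shrinks back once the extra connections stay unused for longer than maxIdle.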

Do we now have a client that is fast and works well? Not exactly. We still have the function that creates connections, dialHost.

Let's look at its implementation. A naive version looks like this: it takes the address to connect to, calls the standard net.Dial function and returns the connection. What is wrong with this implementation?

By default, net.Dial performs a DNS lookup on every call. This can put extra load on your DNS subsystem. Suppose API clients connect to servers that do not support keep-alive connections: you support keep-alive, but the servers do not, so the server closes the connection after every response. As a result, net.Dial is called for every request. With about 10 thousand such requests per second, you resolve DNS 10 thousand times per second. This loads the DNS subsystem.

How do we solve this? Keep a cache in your Go code that maps hosts to IP addresses for a short time, and do not resolve DNS on every net.Dial. Connect to the already resolved IP addresses.

The second problem is uneven load on the servers when several servers are hidden behind one domain name, as with round-robin DNS. If you cache a single IP address for a while, all requests during that time go to one server, even though there may be several. This is solved by iterating over all available IPs behind the domain name. fasthttp.Client does this too.

The third problem is that net.Dial can also hang indefinitely because of problems with the network or with the server you are connecting to. In that case your goroutines hang in Get. This can also lead to increased resource usage.

The solution is to add a timeout. You could also use DialTimeout from the standard net package. But as far as I know, it is implemented incorrectly. Maybe it has been fixed by now, but earlier it was implemented the way I described.
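
Such a cache could be sketched as follows. The dnsCache type and its fields are assumptions for this example; the lookup field defaults to the standard net.LookupHost and can be replaced, for instance in tests.

```go
package main

import (
	"net"
	"sync"
	"time"
)

type dnsEntry struct {
	addrs   []string
	expires time.Time
}

// dnsCache remembers resolved addresses for a short time, so that
// dialing does not trigger a DNS lookup on every call.
type dnsCache struct {
	ttl    time.Duration
	lookup func(host string) ([]string, error)

	mu      sync.Mutex
	entries map[string]dnsEntry
}

func newDNSCache(ttl time.Duration) *dnsCache {
	return &dnsCache{ttl: ttl, lookup: net.LookupHost, entries: make(map[string]dnsEntry)}
}

// resolve returns cached addresses while they are fresh and performs a
// real lookup only when the entry is missing or expired.
func (c *dnsCache) resolve(host string) ([]string, error) {
	now := time.Now()
	c.mu.Lock()
	e, ok := c.entries[host]
	c.mu.Unlock()
	if ok && now.Before(e.expires) {
		return e.addrs, nil
	}
	addrs, err := c.lookup(host) // done outside the lock
	if err != nil {
		return nil, err
	}
	c.mu.Lock()
	c.entries[host] = dnsEntry{addrs: addrs, expires: now.Add(c.ttl)}
	c.mu.Unlock()
	return addrs, nil
}
```

The dial function would then connect to one of the returned IP addresses instead of passing the host name to net.Dial. Concurrent misses for the same host may each perform a lookup in this sketch, which is acceptable for an illustration.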
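
Iterating over the resolved addresses can be as simple as an atomic counter. The addrRotator type is a hypothetical illustration and assumes a non-empty address list.

```go
package main

import "sync/atomic"

// addrRotator hands out the resolved IP addresses of one host in turn,
// so that cached DNS results do not pin all traffic to a single server.
// addrs must not be empty.
type addrRotator struct {
	addrs []string
	next  uint32
}

// pick returns the next address in round-robin order. It is safe for
// concurrent use.
func (r *addrRotator) pick() string {
	n := atomic.AddUint32(&r.next, 1)
	return r.addrs[(n-1)%uint32(len(r.addrs))]
}
```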

This is how it was implemented: the same pattern as before, with Dial in place of Get. Dial ran in a separate goroutine. If Dial hung, the goroutines accumulated, and the number of hung goroutines could grow without bound. This is the standard DialTimeout implementation. Maybe it has already been fixed.

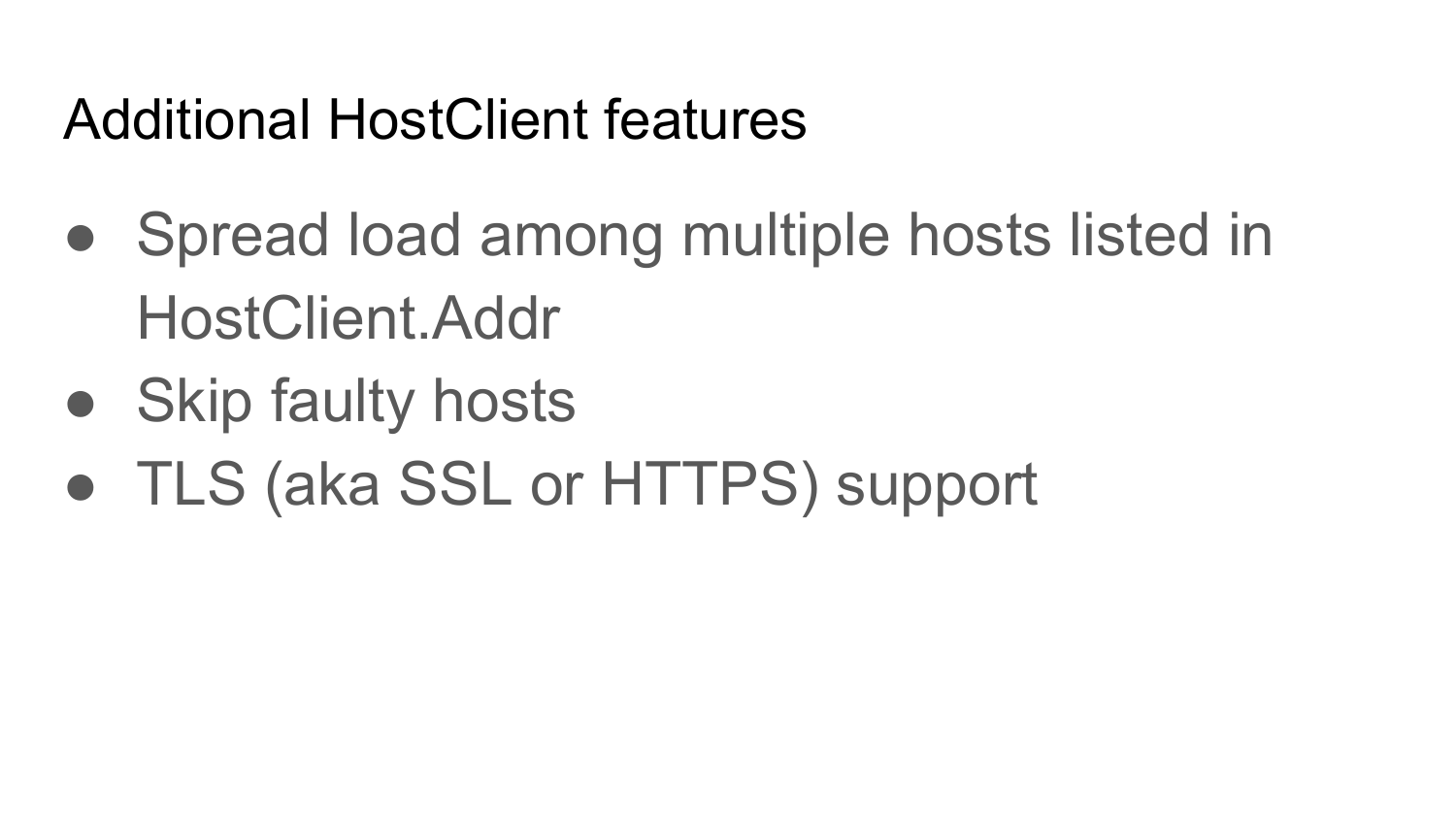

In addition, HostClient has the following features.

HostClient can distribute the load across the list of servers you specify. This gives a primitive load balancer.

HostClient can also skip servers that are down. If some servers stop working, HostClient detects it when it tries to access them, and on the next connection it does not use that server. This is how load balancing is implemented, and you lose a minimal number of requests.

A host can be faulty for two reasons.

The first reason is that we cannot establish a connection to the server: we hang in Dial. The Get call that is stuck there waits for some time, and while it waits, all other requests go to other servers. So more requests pass through the other hosts than through this one.

The second reason is that the server starts responding slowly. Get spends more time on it than on other servers, so fewer requests are sent to it than to the others.

If an error is returned right away, the client tries to connect to the next server in round-robin order.

SSL support was very easy to add, because Go has a very good implementation that is convenient to use and to plug into your own solutions.
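
The fallback to the next server might be sketched like this. The roundRobin type, its dial field and the error value are hypothetical; dial stands for an attempt to connect to one address, and the address list is assumed to be non-empty.

```go
package main

import (
	"errors"
	"sync"
)

var errAllServersFailed = errors.New("all servers failed")

// roundRobin walks a fixed, non-empty server list.
type roundRobin struct {
	addrs []string
	dial  func(addr string) error // stand-in for connecting to addr

	mu   sync.Mutex
	next int
}

// nextAddr returns the next address in round-robin order.
func (r *roundRobin) nextAddr() string {
	r.mu.Lock()
	defer r.mu.Unlock()
	addr := r.addrs[r.next]
	r.next = (r.next + 1) % len(r.addrs)
	return addr
}

// connect tries each server at most once and moves on to the next one
// as soon as dial returns an error.
func (r *roundRobin) connect() (string, error) {
	for i := 0; i < len(r.addrs); i++ {
		addr := r.nextAddr()
		if err := r.dial(addr); err == nil {
			return addr, nil
		}
	}
	return "", errAllServersFailed
}
```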

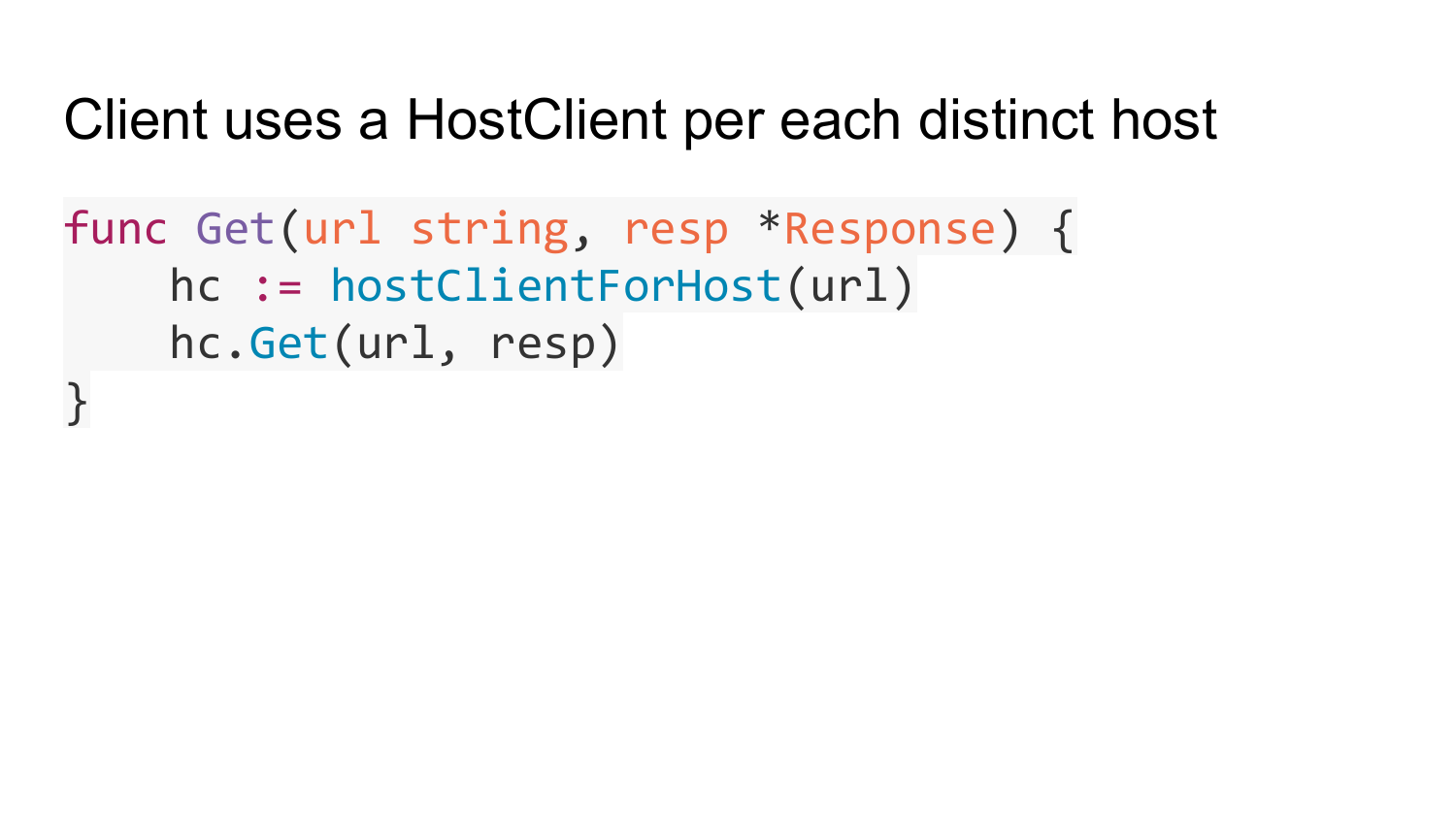

Now on to fasthttp.Client. It is much simpler than HostClient, because fasthttp.Client is built on top of HostClient.

Here is the primitive pseudocode of the client's Get function. We keep a HostClient for each known host. A helper function returns the HostClient for the host in the given URL. Then we call Get on that HostClient. That is the entire client implementation on top of HostClient.

This function creates a new HostClient for every new host that appears in a URL. If you use the client for web crawling, it may access millions of sites. You then end up with a million HostClient objects, one per site, and run out of memory. This is how it used to be in the standard net/http; maybe the problem has already been solved. To prevent this, you should periodically remove HostClient objects that have not been used for a long time. This is what fasthttp does.

PipelineClient is implemented somewhat differently from Client and HostClient. It has no connection pool. Instead, it has an option for the number of connections to establish to a host, and it tries to push all requests through that many connections. PipelineClient establishes the connections right away and spreads incoming requests across them.

PipelineClient starts two goroutines per connection. PipelineConnClient.writer writes requests to the connection without waiting for responses. PipelineConnClient.reader reads responses from the connection, matches them to the requests sent by PipelineConnClient.writer, and returns each response to the code that called Get.

The slide shows an approximate implementation of PipelineClient.Get. The pipelineWork structure holds the URL to request, a pointer to the response, and the done channel, which signals that the response is ready.

Below is the Get implementation. We create and fill the structure and send it to the channel read by PipelineConnClient.writer, which writes all requests to the connection. Then we wait on the w.done channel, which PipelineConnClient.reader closes when the response to this request arrives.
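
The writer and reader pair can be sketched with plain channels. This is an illustration under assumptions, not the fasthttp code: pipelineConn, newPipelineConn and the send and recv callbacks are hypothetical, with send standing for writing a request to the connection and recv for reading the next response from it. Responses are assumed to arrive in the order the requests were sent.

```go
package main

// Response is a hypothetical response object.
type Response struct{ Body string }

// pipelineWork describes one request in flight.
type pipelineWork struct {
	url  string
	resp *Response
	done chan struct{} // closed when resp is ready
}

type pipelineConn struct {
	work chan *pipelineWork
}

// newPipelineConn starts the writer and reader goroutines for one
// connection. maxPending bounds the requests sent but not yet answered.
func newPipelineConn(send func(url string), recv func() string, maxPending int) *pipelineConn {
	c := &pipelineConn{work: make(chan *pipelineWork)}
	pending := make(chan *pipelineWork, maxPending)
	go func() { // writer: sends requests without waiting for responses
		for w := range c.work {
			send(w.url)
			pending <- w
		}
		close(pending)
	}()
	go func() { // reader: matches responses to requests in send order
		for w := range pending {
			w.resp.Body = recv()
			close(w.done)
		}
	}()
	return c
}

// Get queues the request and waits until the reader has filled resp.
func (c *pipelineConn) Get(url string, resp *Response) {
	w := &pipelineWork{url: url, resp: resp, done: make(chan struct{})}
	c.work <- w
	<-w.done
}
```

Because responses are matched strictly in order, one slow response delays everything queued behind it, which is the head-of-line blocking mentioned earlier.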

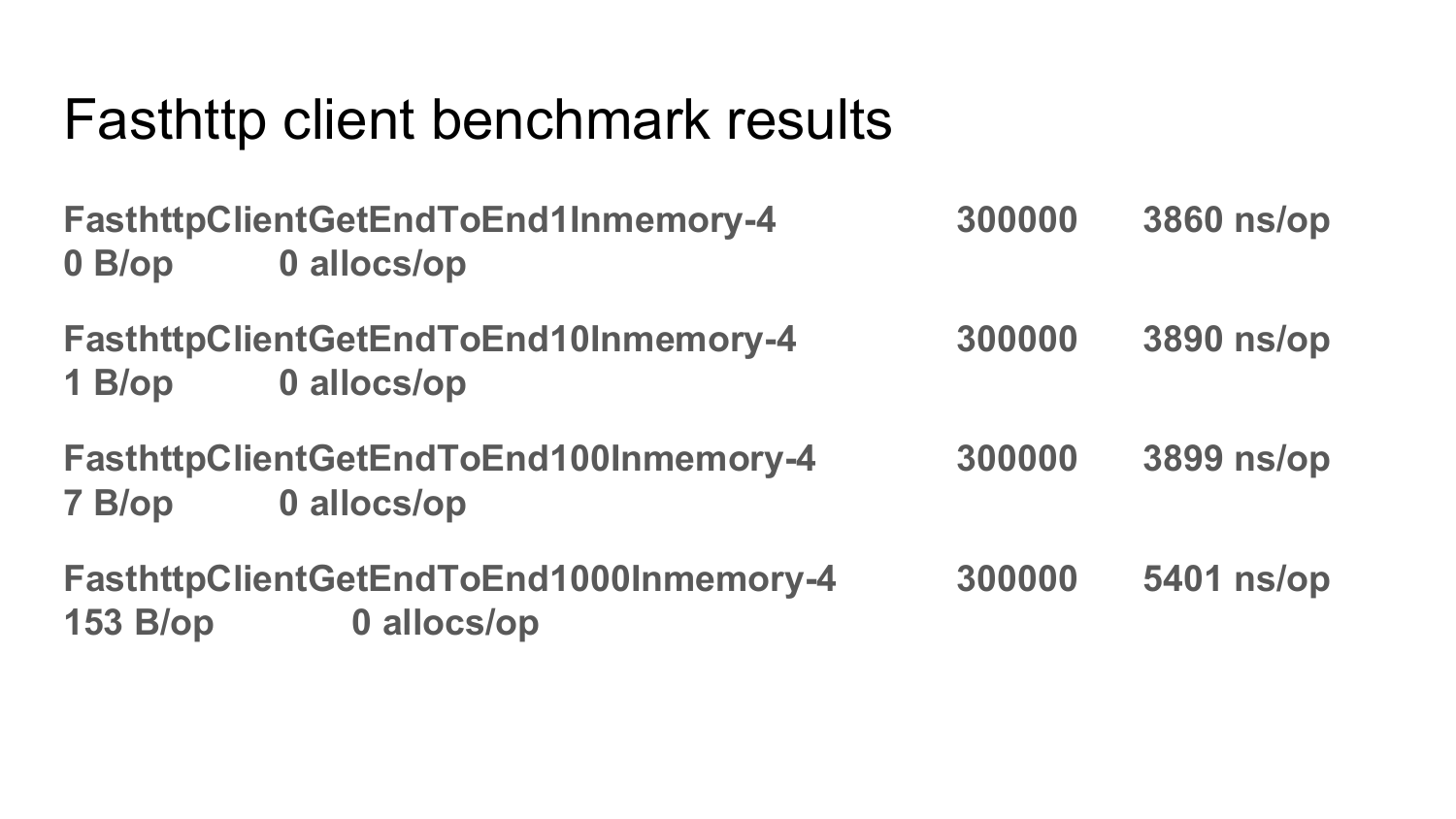

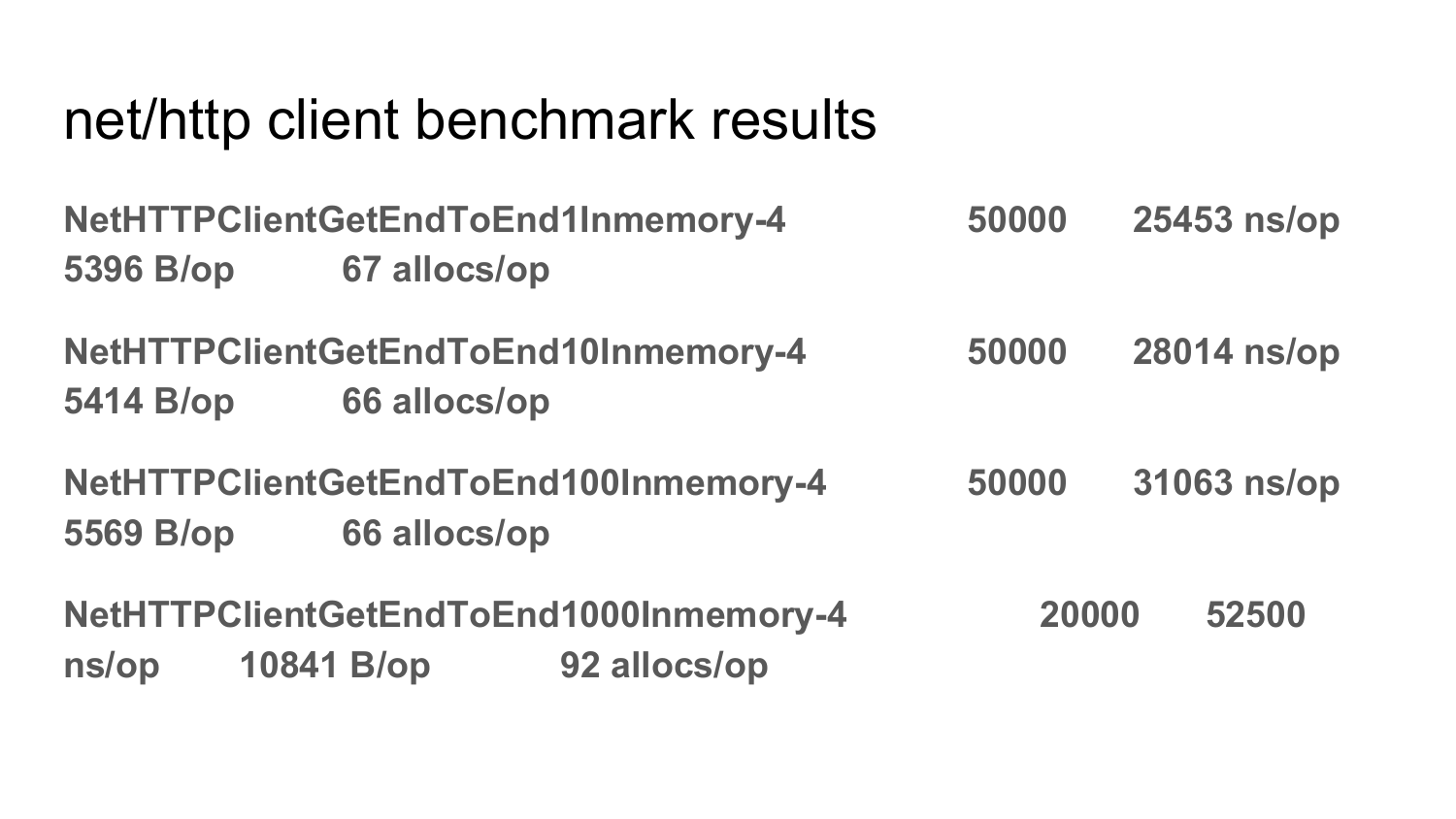

The next two slides compare the performance of the net/http client with fasthttp.Client.

The benchmarks shown on these slides are included in fasthttp. You can run and check them yourself.

net/http. , allocation net/nttp. .

: PipelineClient connection?

: — pending , . . request, pending , Error.

: API , fasthttp, net/http?

: . net/http . . string -, string . , net/http, . - , . fasthttp , . . net/http fasthttp , net/http POST-, response, () . fasthttp , request response . 10 request 10 response . , . fasthttp 10 request 10 response? . — . , net/http. . , net/http — .

Source: https://habr.com/ru/post/443378/
