Security Week 11: RSA 2019 and a bright future

The twenty-eighth RSA conference took place last week. If in 2018 the industry's largest business event struggled somewhat to find new meaning, this time everything went fine. RSA President Rohit Ghai devoted his opening keynote to the “landscape of trust” and used it to sketch a positive scenario for the future, specifically for the year 2049.

The scenario is positive because in it many of today's problems have been solved, and these are not so much cybersecurity issues as the difficulties of a new model of social development that is almost entirely tied to the Internet and digital services. Indeed, if you look at the topic from a bird's-eye view (as RSA traditionally likes to do), it is no longer just a matter of someone being able to hack a computer or a server. There is, for example, the problem of manipulation on social networks, and the services themselves sometimes evolve in the wrong direction. In this context the idea of trust, whether of users in companies or of people in artificial intelligence, really is important.

If you are interested in how security is discussed at business events, watch the video.

Another interesting idea came up in the presentation: artificial intelligence should not be forced into tasks that only people can do, where facts matter less than emotions and, for example, ethics. And vice versa: decisions that require strict adherence to facts should more often be left to machines, which are (supposedly) less prone to error. Decisions about whether or not to trust a source of information online should be based on reputation. A similar idea applies to cyber incidents: yes, sooner or later they happen to everyone, but organizations whose efforts to protect customer data outweigh the consequences of a breach have the advantage.


Theoretical attacks on machine learning algorithms

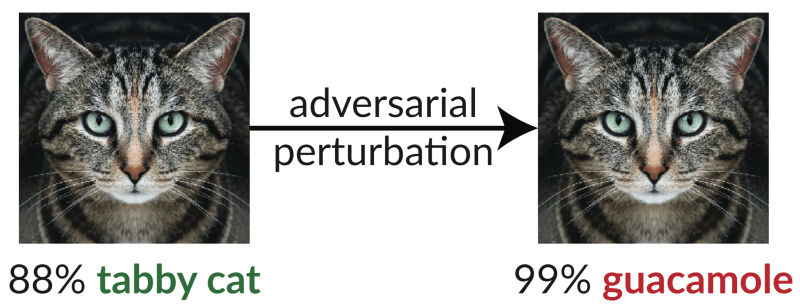

However, RSA still shows how complicated the relationship is within the industry between those who find new problems and those who offer solutions. Apart from a couple of keynote speeches, the most interesting talks at the conference, when they paint the future at all, do so in rather pessimistic tones. A talk by Google's Nicholas Carlini (news) deserves attention. He summarized the experience of attacks on machine learning algorithms, starting with this already classic example from 2017:

The original image of a cat is modified in a way that humans cannot notice at all, yet the recognition algorithm classifies the picture as something completely different. What threat does such a modification pose? Another example, not the newest but an instructive one:

The road sign looks as though vandals have damaged it a little, but a person can still read it without difficulty. A car, however, may recognize the altered sign as an entirely different one, a speed limit sign, and will not stop at the intersection. The next examples are more interesting still.
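To make the idea behind the cat and road sign examples concrete, here is a minimal sketch. It does not reproduce anything from the talk: it applies the fast gradient sign method (a well-known way to build such perturbations) to a toy logistic model instead of a real image classifier. The model, its weights, the 100 "pixels" and the `toy_model` helper are all invented for illustration.

```python
import math


def predict(weights, bias, x):
    """Probability of class 1 (say, "stop sign") under a toy logistic model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def fgsm_perturb(weights, bias, x, true_label, epsilon):
    """Move each feature by at most epsilon in the direction that raises the loss."""
    p = predict(weights, bias, x)
    # For logistic regression with cross-entropy loss, d(loss)/d(x_i) = (p - y) * w_i.
    grad = [(p - true_label) * w for w in weights]
    signs = [(g > 0) - (g < 0) for g in grad]
    # Clip to [0, 1] so the result is still a valid "pixel" vector.
    return [min(1.0, max(0.0, xi + epsilon * s)) for xi, s in zip(x, signs)]


def toy_model(n=100):
    """Hypothetical model and input, made up for this illustration only."""
    weights = [0.3 if i % 2 == 0 else -0.3 for i in range(n)]
    x = [0.52 if i % 2 == 0 else 0.48 for i in range(n)]
    return weights, 0.0, x


if __name__ == "__main__":
    weights, bias, x = toy_model()
    x_adv = fgsm_perturb(weights, bias, x, true_label=1, epsilon=0.05)
    print("clean input:    ", round(predict(weights, bias, x), 3))
    print("perturbed input:", round(predict(weights, bias, x_adv), 3))
    print("largest change: ", max(abs(a - b) for a, b in zip(x, x_adv)))
```

No single "pixel" moves by more than 0.05, yet the many small shifts add up and push the model's answer to the other side of the 0.5 threshold. Real attacks on deep networks follow the same logic, with the gradient computed through the whole network.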

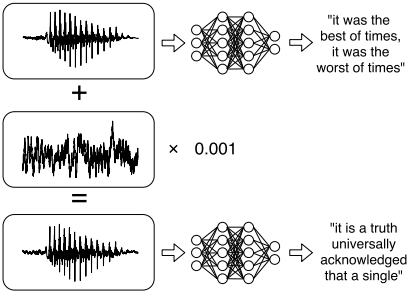

The same method can be applied to sound, as was demonstrated in practice. In the first example, a speech recognition system “recognized” text in a piece of music. In the second, a manipulation of a voice recording that humans cannot perceive led to a completely different set of words being recognized (as in the picture). In the third, text was recognized in pure meaningless noise. This is an interesting situation: at some point people and their digital assistants start to see and hear completely different things. Finally, machine learning algorithms can theoretically disclose the personal data they were trained on when they are later used. The simplest and clearest example is predictive typing systems, also known as “damned T9”.
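The predictive typing case is easy to sketch. The toy autocomplete below is an assumption made for illustration, not how any real keyboard works: it simply remembers which word followed each pair of words in its training text. The corpus, including the PIN in it, is made up.

```python
from collections import Counter, defaultdict

# Made-up "typing history" used as training data; the PIN is fictional.
CORPUS = [
    "see you at lunch tomorrow",
    "my card pin is 4921",
    "my card is in the wallet",
]


def train(corpus, order=2):
    """Count which word follows each context of `order` consecutive words."""
    model = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for i in range(len(words) - order):
            context = tuple(words[i:i + order])
            model[context][words[i + order]] += 1
    return model


def autocomplete(model, prompt, order=2, max_words=5):
    """Greedily append the most frequent next word until the context is unknown."""
    words = prompt.split()
    for _ in range(max_words):
        context = tuple(words[-order:])
        if context not in model:
            break
        words.append(model[context].most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    model = train(CORPUS)
    print(autocomplete(model, "my card pin"))
```

Anyone who can query the model with the right prefix gets the memorized secret back, without ever seeing the training text. Larger models memorize less literally, but the mechanism of the leak is the same.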

Medical Device Security

Security in medicine has lately been discussed in terms of incredibly outdated software and the lack of budget for IT development. As a result, the consequences of cyberattacks are more serious than usual: what is at stake is the loss or leak of highly sensitive patient data. At the RSA conference, specialists from Check Point Software shared the results of a study of the computer network of a real hospital in Israel. In most medical institutions the computer network is not divided into zones, so it was easy enough to find specialized devices, in this case an ultrasound machine.

The story of the search for vulnerabilities in the device's computer was very short. The ultrasound machine runs Windows 2000, and finding an exploit for one of the critical vulnerabilities in that OS was not difficult. The researchers gained access to an archive of images labeled with patient names, were able to edit this information, and had the opportunity to launch a ransomware Trojan. The device manufacturer said that newer models are built on the same software and that software updates are delivered to them regularly (though it is not a given that they get installed). But updating medical devices costs (a lot of) money, and what is the point if the older devices still work?

The recommendations for medical organizations are straightforward: segment the local network and separate devices that store private data from everything else. Interestingly, the development of machine learning in medicine requires the opposite: the widest possible access to patient data for training algorithms.
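As a rough illustration of the segmentation recommendation, the sketch below checks a device inventory against one simple rule: devices that store patient data sit in a dedicated subnet, and nothing else does. The inventory, device names, addresses and the `10.0.20.0/24` subnet are all hypothetical. The check only looks at addressing, so it says nothing about whether firewall rules actually isolate the zone.

```python
import ipaddress

# Hypothetical inventory: name -> (IP address, stores patient data?)
DEVICES = {
    "ultrasound-1": ("10.0.20.15", True),
    "reception-pc": ("10.0.20.40", False),
    "image-archive": ("10.0.10.5", True),
    "guest-wifi-ap": ("10.0.30.1", False),
}


def segmentation_violations(devices, sensitive_net):
    """Names of devices on the wrong side of the sensitive subnet boundary."""
    net = ipaddress.ip_network(sensitive_net)
    violations = []
    for name, (address, stores_patient_data) in devices.items():
        inside = ipaddress.ip_address(address) in net
        # A violation is a sensitive device outside the zone
        # or an ordinary device inside it.
        if inside != stores_patient_data:
            violations.append(name)
    return sorted(violations)


if __name__ == "__main__":
    print(segmentation_violations(DEVICES, "10.0.20.0/24"))
```

In this made-up inventory the reception PC shares a subnet with the ultrasound machine, and the image archive sits outside the protected zone, so both are flagged.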

Disclaimer: The opinions expressed in this digest may not always coincide with the official position of Kaspersky Lab. The dear editors generally recommend treating any opinion with healthy skepticism.

Source: https://habr.com/ru/post/443330/
