The art of parsing or DOM do it yourself

Hi, Habr! I recently wondered about creating a simple markdown language like markdown, which would be perfect for my tasks, namely, quickly writing lectures with formatting and the ability to insert mathematical formulas on the fly, using only one keyboard. To translate text written in this format into a more understandable form, for example, a LibreOffice Writer document, you need a parser , in other words, a parser . Since I was used to making bicycles, I went to the search engines with the queries “parser example”, “html to DOM”, “how to parse html” and others. As a descent, either ready-made solutions such as flex, bison, llvm and yacc were used. There were even more libraries for parsing strictly defined languages (gumbo, jsoup, rapidjson, Qt tools, etc.) Neither did not enter into my plans for writing a parser of my C ++ markup using only the standard library, therefore my the source of knowledge about the art of parsing, instead of electronic resources, was the textbooks of technical institutes. How to take the text and build from it AST (abstract syntactic tree), about some pitfalls that I stumbled upon in the process, I will tell you about possible errors today.

Immediately make a reservation - if your goal is your own scripting language or something even more difficult, this article will not be enough to implement it. Ideally, you need to perfectly know automata theory and discrete structures. But as a starting point, for now, I can limit myself to my experience, which I will generously share under the cut. This is not exactly what I originally intended, but it is ideal for an example. Parse we will be HTML, as a simple and familiar language.

First of all, parsing or parsing is not a synonym for the complete process of turning a text into an object model. The process itself consists of two stages:

But let's order. Before you open your favorite IDE and write code, you need to develop a grammar for the future language. Of the formal context-free grammars, the most famous are the Backus-Naur form (BNF) and the extended Backus-Naur form . I used their symbiosis, taking the best from both forms. Any expression can be defined through other expressions like this:

')

Here one expression is defined through three others, one after another. They, in turn, must also be represented through “third” expressions, etc.

When to stop?

The syntax description of any language in formal grammars consists of two types of tokens: terminals and non-terminals . Non - terminals - expressions that require definition:

Terminals are self-sufficient, they do not need to be defined. The above examples can be written as:

where "+", "*", "/" are terminals.

You need to select the terminals from the grammar right away, you can even write them out in a separate list at the bottom of the basic definitions - they will come in handy later.

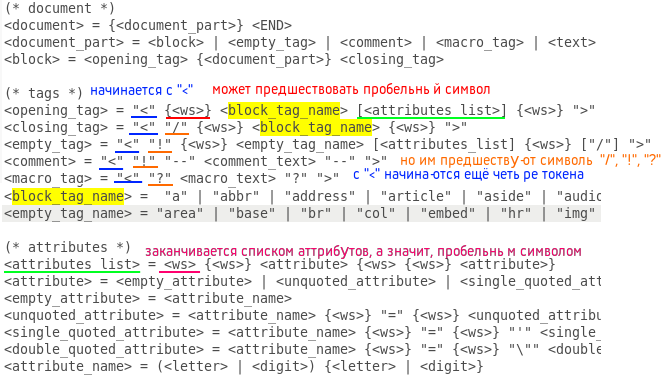

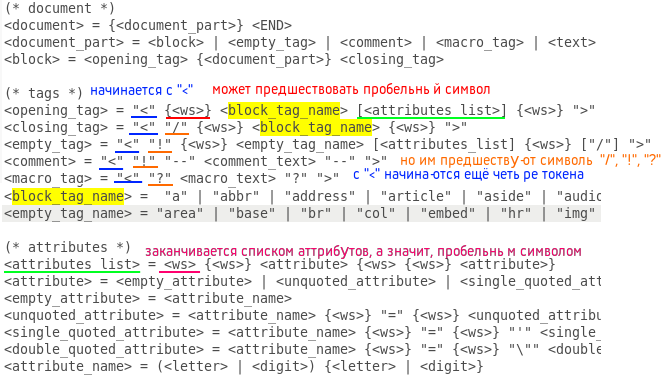

A full description of the BNF is available on Wikipedia here and here . The compilation of the grammar of a language is an important stage in the creation of a language that does not tolerate frivolity. One mistake in it can lead to completely non-working code, which will have to be rewritten from scratch. Therefore, before taking the next step, make sure that there are no controversial points in the compiled grammar. If you have two monitors, it will be convenient for the rest of the work time to occupy one monitor with a grammar document so that you can quickly move your eyes to it when you code. Believe me, you have to do it all the time. Here is the HTML5 grammar compiled by me in the BNF form:

When the grammar is ready, you can proceed to the lexical analyzer (another name for the lexical parser, because in addition to parsing, it reveals lexical errors in the document). At first glance, everything is simple: to absorb characters, write to the buffer, and when a key terminal is detected, define the resulting lexeme as a token with a certain type, right? Yes, only the type of token here is more important than the symbol. Now I will explain. Of course, the disassemble procedure (ifsteam & file) must contain a loop that reads one character from the input stream and sends it to the process (const char & c) procedure, where this character is processed. It seems that the process procedure needs to have a switch ©, where each key symbol has its own functions depending on the current type of token. In fact, the opposite is true: it is better to use a switch to check the type of the token, and define the functions for characters. Moreover, the current token often has an undefined type, one of many. For example, after opening a corner bracket, you can go: opening, closing, empty tags, as well as HTML-style comments or macro tags (PHP script enclosed in "<? ...?>". And for all such associations you need your own case. How is to implement? With the help of bit flags. Let a finite number of token types be given (the more the better, because the task of the lexical analyzer is to keep as little syntactic work as possible) A unique number of power of two is set for each type (1, 2, 4, 8 etc.) Then in binary format they will look like this: 0001, 0010, 0 100, etc., and with the bitwise addition of any number of any types, you get a unique number. If the text description is difficult to understand, here is the code. Here is the definition of types:

Abbreviated process:

We check with the switch the type of the expected token (or tokens), and inside each case we define the procedures for each of the key terminals. There are not so many functions, all perform simple actions: either adding a character to the buffer, or draining the buffer into another token, or changing the expected type of token (tokens), or throwing an exception. It is easy to determine the desired procedure from the grammar written above using a searchable text editor. We simply look for all inclusions of the expected token (tokens) in the definitions of other expressions, then including these expressions in “third” and so on. Here is an example for the opening tag in the gedit text editor:

Initially, it is difficult to navigate the grammar, but with time and experience it becomes as simple as dividing the bar. And here is the disassemble procedure:

The first expected token should obviously be set to TEXT type, and at the end add an END type token with any text (or empty, like this).

For example, I took one of my HTML document templates with a comment, added a PHP pseudo-script to it, processed the lexer and displayed a list of tokens in the format "[" <token_text> ": <token_type>]". Here's what happened:

Now we are ready to proceed to the second part - the construction of the syntactic tree. Since our tags have attributes, the nodes of the tree, in addition to communication with other nodes, will contain arrays of key-value pairs. The resulting construction can rightfully be called the object model of the DOM document mentioned in the title of the article.

How many classes are needed to implement all the properties of HTML elements?

Ideally, one class for each element so that cascading style sheets can be defined for them, but we will limit ourselves to three — the empty “Node” tag, the Block block inherited from it (the content between two pair tags) and inherited from him root of the tree "Root". We will also define in the parser an array of tags that can contain text, such as <p>, <li>, <strong>, etc., in order to deselect tokens with unmarked text. Now it's up to you. If you have worked well for a lexical analyzer, then the syntactic task is to simply absorb tokens and perform one of three operations in an open node: add an empty node to it, open a new one or close it yourself, returning the pointer to the parent. For the latter, it will be required that all classes, starting with the base Node, contain such a pointer, obtained by creating the element. This process is called downward parsing .

Parsing procedure:

That's all! If you did everything correctly, the resulting tree can be displayed on the screen:

However, although the resulting tree can really be called DOM, it’s far from jQuery, Jsoup, beautifulsoup or Gumbo to a full-fledged parser, particularly because it cannot correctly process text between the <style> and <script> pair tags, and therefore the source until I quote. But be sure to add, if habrovchane express such a desire. Successes.

PS Filled in the public access source . IMHO, damp, so I will be cutting to a full-fledged library.

Immediately make a reservation - if your goal is your own scripting language or something even more difficult, this article will not be enough to implement it. Ideally, you need to perfectly know automata theory and discrete structures. But as a starting point, for now, I can limit myself to my experience, which I will generously share under the cut. This is not exactly what I originally intended, but it is ideal for an example. Parse we will be HTML, as a simple and familiar language.

First of all, parsing or parsing is not a synonym for the complete process of turning a text into an object model. The process itself consists of two stages:

- Lexical analysis of text on tokens - small pieces of this text that have a certain syntactic meaning.

- Syntactic parsing is the construction of an abstract syntax tree (AST - abstract syntax tree) or a document object model (DOM) based on their values.

But let's order. Before you open your favorite IDE and write code, you need to develop a grammar for the future language. Of the formal context-free grammars, the most famous are the Backus-Naur form (BNF) and the extended Backus-Naur form . I used their symbiosis, taking the best from both forms. Any expression can be defined through other expressions like this:

')

<> = <_1> <_> <_2> Here one expression is defined through three others, one after another. They, in turn, must also be represented through “third” expressions, etc.

When to stop?

The syntax description of any language in formal grammars consists of two types of tokens: terminals and non-terminals . Non - terminals - expressions that require definition:

<_1> = <> (<_> | <_>) <> Terminals are self-sufficient, they do not need to be defined. The above examples can be written as:

<> = <_1> "+" <_2> <_1> = <> ("*" | "/") <> where "+", "*", "/" are terminals.

You need to select the terminals from the grammar right away, you can even write them out in a separate list at the bottom of the basic definitions - they will come in handy later.

A full description of the BNF is available on Wikipedia here and here . The compilation of the grammar of a language is an important stage in the creation of a language that does not tolerate frivolity. One mistake in it can lead to completely non-working code, which will have to be rewritten from scratch. Therefore, before taking the next step, make sure that there are no controversial points in the compiled grammar. If you have two monitors, it will be convenient for the rest of the work time to occupy one monitor with a grammar document so that you can quickly move your eyes to it when you code. Believe me, you have to do it all the time. Here is the HTML5 grammar compiled by me in the BNF form:

(* document *) <document> = {<document_part>} <END> <document_part> = <block> | <empty_tag> | <comment> | <macro_tag> | <text> <block> = <opening_tag> {<document_part>} <closing_tag> (* tags *) <opening_tag> = "<" {<ws>} <block_tag_name> [<attributes_list>] {<ws>} ">" <closing_tag> = "<" "/" {<ws>} <block_tag_name> {<ws>} ">" <empty_tag> = "<" "!" {<ws>} <empty_tag_name> [<attributes_list] {<ws>} ["/"] ">" <comment> = "<" "!" "--" <comment_text> "--" ">" <macro_tag> = "<" "?" <macro_text> "?" ">" <block_tag_name> = "a" | "abbr" | "address" | "article" | "aside" | "audio" | "b" | "bdo" | "blockquote" | "body" | "button" | "canvas" | "caption" | "cite" | "code" | "colgroup" | "data" | "datalist" | "dd" | "del" | "details" | "dfn" | "dialog" | "div" | "dl" | "dt" | "em" | "fieldset" | "figcaption" | "figure" | "footer" | "form" | "h1" | "h2" | "h3" | "h4" | "h5" | "h6" | "head" | "header" | "html" | "i" | "iframe" | "ins" | "kbd" | "label" | "legend" | "li" | "main" | "map" | "mark" | "meter" | "nav" | "noscript" | "object" | "ol" | "optgroup" | "option" | "output" | "p" | "picture" | "pre" | "progress" | "q" | "ruby" | "rb" | "rt" | "rtc" | "rp" | "s" | "samp" | "script" | "section" | "select" | "small" | "span" | "strong" | "style" | "sub" | "summary" | "sup" | "table" | "tbody" | "td" | "template" | "textarea" | "tfoot" | "th" | "thead" | "time" | "title" | "tr" | "track" | "u" | "ul" | "var" | "video" <empty_tag_name> = "area" | "base" | "br" | "col" | "embed" | "hr" | "img" | "input" | "link" | "menuitem" | "meta" | "param" | "source" | "track" | "wbr" (* attributes *) <attributes_list> = <ws> {<ws>} <attribute> {<ws> {<ws>} <attribute>} <attribute> = <empty_attribute> | <unquoted_attribute> | <single_quoted_attribute> | <double_quoted_attribute> <empty_attribute> = <attribute_name> <unquoted_attribute> = <attribute_name> {<ws>} "=" {<ws>} <unquoted_attribute_value> <single_quoted_attribute> = <attribute_name> {<ws>} "=" {<ws>} "'" <single_quoted_attribute_value> "'" <double_quoted_attribute> = <attribute_name> {<ws>} "=" {<ws>} "\"" <double_quoted_attribute_value> "\"" <attribute_name> = (<letter> | <digit>) {<letter> | <digit>} {* attribute values *) <unquoted_attribute_value> = /^[\s"'=<>/]/ {/^[\s"'=<>/]/} <single_quoted_attribute_value> = /^[']/ {/^[']/} <double_quoted_attribute_value> = /^["]/ {/^["]/} (* nonterminals *) <text> = {/^[<>]/} <comment_text> = ... <macro_text> = ... <letter> = /[a-zA-Z]/ <digit> = /[0-9]/ <ws> = " " | "\t" | "\n" (* terminals *) "<", ">", "/", "!", "?", " ", "\t", "\n" When the grammar is ready, you can proceed to the lexical analyzer (another name for the lexical parser, because in addition to parsing, it reveals lexical errors in the document). At first glance, everything is simple: to absorb characters, write to the buffer, and when a key terminal is detected, define the resulting lexeme as a token with a certain type, right? Yes, only the type of token here is more important than the symbol. Now I will explain. Of course, the disassemble procedure (ifsteam & file) must contain a loop that reads one character from the input stream and sends it to the process (const char & c) procedure, where this character is processed. It seems that the process procedure needs to have a switch ©, where each key symbol has its own functions depending on the current type of token. In fact, the opposite is true: it is better to use a switch to check the type of the token, and define the functions for characters. Moreover, the current token often has an undefined type, one of many. For example, after opening a corner bracket, you can go: opening, closing, empty tags, as well as HTML-style comments or macro tags (PHP script enclosed in "<? ...?>". And for all such associations you need your own case. How is to implement? With the help of bit flags. Let a finite number of token types be given (the more the better, because the task of the lexical analyzer is to keep as little syntactic work as possible) A unique number of power of two is set for each type (1, 2, 4, 8 etc.) Then in binary format they will look like this: 0001, 0010, 0 100, etc., and with the bitwise addition of any number of any types, you get a unique number. If the text description is difficult to understand, here is the code. Here is the definition of types:

enum Token_type { END = 1, TEXT = 2, OPENING_BLOCK_TAG_NAME = 4, CLOSING_BLOCK_TAG_NAME = 8, EMPTY_TAG_NAME = 16, COMMENT = 32, MACRO_TAG = 64, ATTRIBUTE_NAME = 128, UNQUOTED_ATTRIBUTE_VALUE = 256, SINGLE_QUOTED_ATTRIBUTE_VALUE = 512, DOUBLE_QUOTED_ATTRIBUTE_VALUE = 1024 }; Abbreviated process:

void Lexer::process (const char &c) { switch (curr_token_type) { case END: { throw string("unexpected ending!"); break; } case TEXT: { if (c == '>') throw string("unexpected symbol: \">\"!"); else if (c == '<') { if (!buffer.empty()) { add(buffer, TEXT); buffer.clear(); } curr_token_type = OPENING_BLOCK_TAG_NAME | CLOSING_BLOCK_TAG_NAME | EMPTY_TAG_NAME | COMMENT | MACRO_TAG; } else buffer.push_back(c); break; } case OPENING_BLOCK_TAG_NAME: { throw string("error!"); break; } case CLOSING_BLOCK_TAG_NAME: { if (c == '<') throw string("unexpected symbol: \"<\"!"); else if (c == '/') throw string("unexpected symbol: \"<\"!"); else if (c == '!') throw string("unexpected symbol: \"!\"!"); else if (c == '?') throw string("unexpected symbol: \"?\"!"); else if (c == ' ') throw string("unexpected symbol: \" \"!"); else if (c == '\t') throw string("unexpected symbol: \"\\t\"!"); else if (c == '\n') throw string("unexpected symbol: \"\\n\"!"); else if (c == '>') { for (unsigned int i(0); i < BLOCK_TAGS_COUNT; i++) if (buffer == block_tags[i]) { add(buffer, CLOSING_BLOCK_TAG_NAME); buffer.clear(); curr_token_type = TEXT; break; } } else buffer.push_back(c); break; } case EMPTY_TAG_NAME: { throw string("error!"); break; } case COMMENT: { ... break; } case MACRO_TAG: { ... break; } case OPENING_BLOCK_TAG_NAME | CLOSING_BLOCK_TAG_NAME | EMPTY_TAG_NAME | COMMENT | MACRO_TAG: { ... break; } case EMPTY_TAG_NAME | COMMENT: { ... break; } case ATTRIBUTE_NAME: { ... break; } case ATTRIBUTE_NAME | UNQUOTED_ATTRIBUTE_VALUE | SINGLE_QUOTED_ATTRIBUTE_VALUE | DOUBLE_QUOTED_ATTRIBUTE_VALUE: { ... break; } case UNQUOTED_ATTRIBUTE_VALUE | SINGLE_QUOTED_ATTRIBUTE_VALUE | DOUBLE_QUOTED_ATTRIBUTE_VALUE: { ... break; } case UNQUOTED_ATTRIBUTE_VALUE: { ... break; } case SINGLE_QUOTED_ATTRIBUTE_VALUE: { ... break; } case DOUBLE_QUOTED_ATTRIBUTE_VALUE: { ... break; } } } We check with the switch the type of the expected token (or tokens), and inside each case we define the procedures for each of the key terminals. There are not so many functions, all perform simple actions: either adding a character to the buffer, or draining the buffer into another token, or changing the expected type of token (tokens), or throwing an exception. It is easy to determine the desired procedure from the grammar written above using a searchable text editor. We simply look for all inclusions of the expected token (tokens) in the definitions of other expressions, then including these expressions in “third” and so on. Here is an example for the opening tag in the gedit text editor:

Initially, it is difficult to navigate the grammar, but with time and experience it becomes as simple as dividing the bar. And here is the disassemble procedure:

void Lexer::disassemble (ifstream &file) { tokens_count = 0; curr_token_type = 0; unsigned long line(1), pos(1); try { char c; curr_token_type = TEXT; while ((c = file.get()) != EOF) { if (c == '\n') { pos = 1; line++; } else pos++; process(c); } if (buffer.size() != 0) { if (!(curr_token_type | TEXT)) throw string("text was expected!"); add(buffer, TEXT); buffer.clear(); } add("", END); } catch (const string &error) { throw string("lexer: " + to_string(line) + "," + to_string(pos) + ": " + error); } } The first expected token should obviously be set to TEXT type, and at the end add an END type token with any text (or empty, like this).

For example, I took one of my HTML document templates with a comment, added a PHP pseudo-script to it, processed the lexer and displayed a list of tokens in the format "[" <token_text> ": <token_type>]". Here's what happened:

Document itself

<!DOCTYPE html> <html lang="ru"> <head> <meta http-equiv="content-type" content="text/html" charset="utf-8" /> <meta name="author" content="Interquadro" /> <meta name="description" content="" /> <meta name="keywords" content=""> <meta name="viewport" content="width=device-width, initial-scale=1" /> <meta name="format-detection" content="telephone=no" /> <meta http-equiv="x-rim-auto-match" content="telephone=none" /> <meta name="referrer" content="no-referrer" /> <meta name="_suburl" content="" /> <title></title> <link rel="shortcut icon" href=".ico" /> <link rel="stylesheet" type="text/css" href=".css" title="" /> <!--[if lt IE 9]> <script src="http://html5shiv.googlecode.com/svn/trunk/html5-els.js"></script> <![endif]--> </head> <body> <header> <div id="intro"> </div> </header> <nav> <ul id="nav"> <li class="nav"><a href="#"> </a></li> <li class="nav"><a href="#"> </a></li> <li class="nav"><a href=""> </a></li> </ul> </nav> <main id="content"> <?php ?> </main> <footer> <hr /> <small id="copyright">Copyright © 2019. .</small> </footer> </body> </html> Token List

["! DOCTYPE": EMPTY_TAG_NAME] ["html": ATTRIBUTE_NAME] [" ": TEXT] ["html": OPENING_BLOCK_TAG_NAME] ["lang": ATTRIBUTE_NAME] ["ru": DOUBLE_QUOTED_ATTRIBUTE_VALUE] [" ": TEXT] ["head": OPENING_BLOCK_TAG_NAME] [" ": TEXT] ["meta": EMPTY_TAG_NAME] ["http-equiv": ATTRIBUTE_NAME] ["content-type": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["content": ATTRIBUTE_NAME] ["text / html": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["charset": ATTRIBUTE_NAME] ["utf-8": DOUBLE_QUOTED_ATTRIBUTE_VALUE] [" ": TEXT] ["meta": EMPTY_TAG_NAME] ["name": ATTRIBUTE_NAME] ["author": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["content": ATTRIBUTE_NAME] ["Interquadro": DOUBLE_QUOTED_ATTRIBUTE_VALUE] [" ": TEXT] ["meta": EMPTY_TAG_NAME] ["name": ATTRIBUTE_NAME] ["description": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["content": ATTRIBUTE_NAME] ["": DOUBLE_QUOTED_ATTRIBUTE_VALUE] [" ": TEXT] ["meta": EMPTY_TAG_NAME] ["name": ATTRIBUTE_NAME] ["keywords": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["content": ATTRIBUTE_NAME] ["": DOUBLE_QUOTED_ATTRIBUTE_VALUE] [" ": TEXT] ["meta": EMPTY_TAG_NAME] ["name": ATTRIBUTE_NAME] ["viewport": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["content": ATTRIBUTE_NAME] ["width = device-width, initial-scale = 1": DOUBLE_QUOTED_ATTRIBUTE_VALUE] [" ": TEXT] ["meta": EMPTY_TAG_NAME] ["name": ATTRIBUTE_NAME] ["format-detection": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["content": ATTRIBUTE_NAME] ["telephone = no": DOUBLE_QUOTED_ATTRIBUTE_VALUE] [" ": TEXT] ["meta": EMPTY_TAG_NAME] ["http-equiv": ATTRIBUTE_NAME] ["x-rim-auto-match": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["content": ATTRIBUTE_NAME] ["telephone = none": DOUBLE_QUOTED_ATTRIBUTE_VALUE] [" ": TEXT] ["meta": EMPTY_TAG_NAME] ["name": ATTRIBUTE_NAME] ["referrer": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["content": ATTRIBUTE_NAME] ["no-referrer": DOUBLE_QUOTED_ATTRIBUTE_VALUE] [" ": TEXT] ["meta": EMPTY_TAG_NAME] ["name": ATTRIBUTE_NAME] ["_suburl": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["content": ATTRIBUTE_NAME] ["": DOUBLE_QUOTED_ATTRIBUTE_VALUE] [" ": TEXT] ["title": OPENING_BLOCK_TAG_NAME] ["title": CLOSING_BLOCK_TAG_NAME] [" ": TEXT] ["link": EMPTY_TAG_NAME] ["rel": ATTRIBUTE_NAME] ["shortcut icon": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["href": ATTRIBUTE_NAME] [".ico": DOUBLE_QUOTED_ATTRIBUTE_VALUE] [" ": TEXT] ["link": EMPTY_TAG_NAME] ["rel": ATTRIBUTE_NAME] ["stylesheet": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["type": ATTRIBUTE_NAME] ["text / css": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["href": ATTRIBUTE_NAME] [".css": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["title": ATTRIBUTE_NAME] ["": DOUBLE_QUOTED_ATTRIBUTE_VALUE] [" ": TEXT] ["[if lt IE 9]> <script src = "http://html5shiv.googlecode.com/svn/trunk/html5-els.js"> </ script> <! [endif] ": COMMENT] [" ": TEXT] ["head": CLOSING_BLOCK_TAG_NAME] [" ": TEXT] ["body": OPENING_BLOCK_TAG_NAME] [" ": TEXT] ["header": OPENING_BLOCK_TAG_NAME] [" ": TEXT] ["div": OPENING_BLOCK_TAG_NAME] ["id": ATTRIBUTE_NAME] ["intro": DOUBLE_QUOTED_ATTRIBUTE_VALUE] [" ": TEXT] ["div": CLOSING_BLOCK_TAG_NAME] [" ": TEXT] ["header": CLOSING_BLOCK_TAG_NAME] [" ": TEXT] ["nav": OPENING_BLOCK_TAG_NAME] [" ": TEXT] ["ul": OPENING_BLOCK_TAG_NAME] ["id": ATTRIBUTE_NAME] ["nav": DOUBLE_QUOTED_ATTRIBUTE_VALUE] [" ": TEXT] ["li": OPENING_BLOCK_TAG_NAME] ["class": ATTRIBUTE_NAME] ["nav": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["a": OPENING_BLOCK_TAG_NAME] ["href": ATTRIBUTE_NAME] ["#": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["Home": TEXT] ["a": CLOSING_BLOCK_TAG_NAME] ["li": CLOSING_BLOCK_TAG_NAME] [" ": TEXT] ["li": OPENING_BLOCK_TAG_NAME] ["class": ATTRIBUTE_NAME] ["nav": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["a": OPENING_BLOCK_TAG_NAME] ["href": ATTRIBUTE_NAME] ["#": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["Review": TEXT] ["a": CLOSING_BLOCK_TAG_NAME] ["li": CLOSING_BLOCK_TAG_NAME] [" ": TEXT] ["li": OPENING_BLOCK_TAG_NAME] ["class": ATTRIBUTE_NAME] ["nav": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["a": OPENING_BLOCK_TAG_NAME] ["href": ATTRIBUTE_NAME] ["": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["Help": TEXT] ["a": CLOSING_BLOCK_TAG_NAME] ["li": CLOSING_BLOCK_TAG_NAME] [" ": TEXT] ["ul": CLOSING_BLOCK_TAG_NAME] [" ": TEXT] ["nav": CLOSING_BLOCK_TAG_NAME] [" ": TEXT] ["main": OPENING_BLOCK_TAG_NAME] ["id": ATTRIBUTE_NAME] ["content": DOUBLE_QUOTED_ATTRIBUTE_VALUE] [" ": TEXT] ["php": MACRO_TAG] [" ": TEXT] ["main": CLOSING_BLOCK_TAG_NAME] [" ": TEXT] ["footer": OPENING_BLOCK_TAG_NAME] [" ": TEXT] ["hr": EMPTY_TAG_NAME] [" ": TEXT] ["small": OPENING_BLOCK_TAG_NAME] ["id": ATTRIBUTE_NAME] ["copyright": DOUBLE_QUOTED_ATTRIBUTE_VALUE] ["Copyright © 2019. All Rights Reserved." : TEXT] ["small": CLOSING_BLOCK_TAG_NAME] [" ": TEXT] ["footer": CLOSING_BLOCK_TAG_NAME] [" ": TEXT] ["body": CLOSING_BLOCK_TAG_NAME] [" ": TEXT] ["html": CLOSING_BLOCK_TAG_NAME] [" ": TEXT] ["": END]

Now we are ready to proceed to the second part - the construction of the syntactic tree. Since our tags have attributes, the nodes of the tree, in addition to communication with other nodes, will contain arrays of key-value pairs. The resulting construction can rightfully be called the object model of the DOM document mentioned in the title of the article.

How many classes are needed to implement all the properties of HTML elements?

Ideally, one class for each element so that cascading style sheets can be defined for them, but we will limit ourselves to three — the empty “Node” tag, the Block block inherited from it (the content between two pair tags) and inherited from him root of the tree "Root". We will also define in the parser an array of tags that can contain text, such as <p>, <li>, <strong>, etc., in order to deselect tokens with unmarked text. Now it's up to you. If you have worked well for a lexical analyzer, then the syntactic task is to simply absorb tokens and perform one of three operations in an open node: add an empty node to it, open a new one or close it yourself, returning the pointer to the parent. For the latter, it will be required that all classes, starting with the base Node, contain such a pointer, obtained by creating the element. This process is called downward parsing .

Parsing procedure:

void Parser::parse (const Lexer &lexer) { Block * open_block = (Block*) tree; Node * last_node = (Node*) tree; try { unsigned long long size = lexer.count(); for (unsigned long long i(0); i < size-2; i++) { switch (lexer[i].type) { case Lexer::TEXT: { for (unsigned int j(0); j < TEXT_TAGS_COUNT; j++) if (open_block->get_name() == text_tags[j]) last_node = open_block->add("TEXT", lexer[i].lexeme); break; } case Lexer::OPENING_BLOCK_TAG_NAME: { last_node = open_block = open_block->open(lexer[i].lexeme); break; } case Lexer::CLOSING_BLOCK_TAG_NAME: { if (lexer[i].lexeme != open_block->get_name()) throw string("unexpected closing tag: </" + lexer[i].lexeme + ">"); open_block = open_block->close(); break; } case Lexer::EMPTY_TAG_NAME: { last_node = open_block->add(lexer[i].lexeme); break; } case Lexer::COMMENT: { last_node = open_block->add("COMMENT", lexer[i].lexeme); break; } case Lexer::MACRO_TAG: { last_node = open_block->add("MACRO", lexer[i].lexeme); break; } case Lexer::ATTRIBUTE_NAME: { last_node->add_attr(lexer[i].lexeme, lexer[i].lexeme); break; } case Lexer::UNQUOTED_ATTRIBUTE_VALUE: { last_node->set_last_attr(lexer[i].lexeme); break; } case Lexer::SINGLE_QUOTED_ATTRIBUTE_VALUE: { last_node->set_last_attr(lexer[i].lexeme); break; } case Lexer::DOUBLE_QUOTED_ATTRIBUTE_VALUE: { last_node->set_last_attr(lexer[i].lexeme); break; } case Lexer::END: { if (open_block->get_type() != Node::ROOT) throw string("unexpected ending!"); open_block->close(); } } } } catch (const string &error) { throw string("parser: " + error); } } That's all! If you did everything correctly, the resulting tree can be displayed on the screen:

|

+ - <ROOT>

|

+ - <! DOCTYPE>

|

+ - <html>

|

+ - <head>

| |

| + - <meta>

| |

| + - <meta>

| |

| + - <meta>

| |

| + - <meta>

| |

| + - <meta>

| |

| + - <meta>

| |

| + - <meta>

| |

| + - <meta>

| |

| + - <meta>

| |

| + - <title>

| |

| + - <link>

| |

| + - <link>

| |

| + - <COMMENT>

|

+ - <body>

|

+ - <header>

| |

| + - <div>

|

+ - <nav>

| |

| + - <ul>

| |

| + - <li>

| | |

| | + - <a>

| |

| + - <li>

| | |

| | + - <a>

| |

| + - <li>

| |

| + - <a>

|

+ - <main>

| |

| + - <MACRO>

|

+ - <footer>

|

+ - <hr>

|

+ - <small>

However, although the resulting tree can really be called DOM, it’s far from jQuery, Jsoup, beautifulsoup or Gumbo to a full-fledged parser, particularly because it cannot correctly process text between the <style> and <script> pair tags, and therefore the source until I quote. But be sure to add, if habrovchane express such a desire. Successes.

PS Filled in the public access source . IMHO, damp, so I will be cutting to a full-fledged library.

Source: https://habr.com/ru/post/442964/

All Articles