DataPower Training Course

The material was prepared in collaboration with the user wedmeed

In 2017, when our project began in Vietnam, we ran into a new beast: IBM DataPower. IBM DataPower is a gateway that sits between clients and backends and is intended for filtering, routing, enriching or otherwise transforming the messages that pass through it (hereinafter referred to as requests). We had to learn it quickly, with no time to ramp up: we were asked to familiarize ourselves with the product on our own, and then spent many hours in Skype conferences with a colleague from Moscow, who shared their knowledge and experience with it.

Self-study consisted of reading the documentation and watching educational videos from the Internet, and that is where a catch was waiting for me: I could find almost no information in Russian. My English at the time was not at the highest level, and this was also my first project; these factors probably made my life harder. This inspired me to write a tutorial article in Russian, in the simplest possible terms, for novice developers who have run into this product and are trying to grasp its basics quickly. The article will not spare you from reading the documentation, but it will make life easier in the early stages of understanding how the product works.

It is also worth noting that the structure built in the Practice sections is close to that of the real project, so you can use it as a base, expanding and adapting it to your requirements. In addition, the Theory sections contain a few words about the project we implemented, as well as some features that are worth paying attention to.

Part 1. Installing DataPower with Docker Toolbox

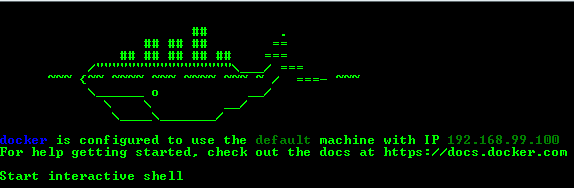

Install and run the Docker Toolbox application. Immediately after launch, you will see the IP address of the machine at which DataPower will be available in the future:

To start the image, you need to change some settings of the virtual machine (relevant for the IDG.2018.4.1.0 version) and restart it:

- Stop the docker-machine with the command:

docker-machine stop

- System -> Motherboard -> Main memory: minimum 4096 MB;

- System -> Processor: at least 2;

- Display -> Screen -> Video memory: at least 128 MB;

- Start the docker-machine with the command:

docker-machine start

Note. If you are prompted to re-run "docker-machine env", run:

docker-machine env

- Next, download the IBM DataPower image:

docker pull ibmcom/datapower

- Then start the Docker container with the following command:

docker run -it \
  -v $PWD/config:/drouter/config \
  -v $PWD/local:/drouter/local \
  -e DATAPOWER_ACCEPT_LICENSE=true \
  -e DATAPOWER_INTERACTIVE=true \
  -p 9090:9090 \
  -p 3630:3630 \
  -p 9022:22 \
  -p 7170:7170 \
  --name idg \
  ibmcom/datapower

- After the command completes, press Enter and enter admin / admin as the login and password

- To launch the Web Management Interface, use the following commands:

co

web-mgmt 0 9090

- After all these steps, open https://192.168.99.100:9090/ in the browser
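Before opening the browser, it can be handy to confirm that the Web Management Interface port is reachable at all. The following is a minimal sketch, not part of the original setup: the helper name `is_port_open` is hypothetical, and the address 192.168.99.100 with port 9090 is simply the one used in this article.

```python
import socket


def is_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection performs the TCP handshake and raises OSError on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `is_port_open("192.168.99.100", 9090)` should return True once the `web-mgmt 0 9090` command has taken effect and the 9090 port mapping of the container is in place.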

Part 2. Domains

2.1. Theory

Domains in DataPower allow you to separate administration and development tools, as well as provide security.

After installation, there is only the default domain, from which application domains can be created. Each domain has its own configuration settings.

Some shared resources and settings can be defined only in the default domain; these include network interfaces, users and access control, application domains, and others.

The application domain is the development section for request processing services. The services defined in it cannot be shared with another application domain. Application domains can be restarted separately and independently from each other, without the need to completely restart DataPower.

You can create, restart, reset, suspend, resume or delete domains. For more information on all administration features, please refer to the official documentation.

A little about the implemented project. We used 3 domains:

- default - the default domain containing shared resources and parameters;

- trunk is the primary domain containing everything needed to process requests;

- settings - the domain of settings and security; local files contain information about service routing rules and security parameters.

The need to move all settings into a separate domain arose from the search for a simpler deployment path. As in many projects, the dev, test and prod environments were separated, and moving the settings into their own domain made it possible to deploy the main domains from the dev environment to the other environments through export / import without the risk of losing environment-specific settings.

2.2. Practice

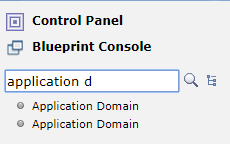

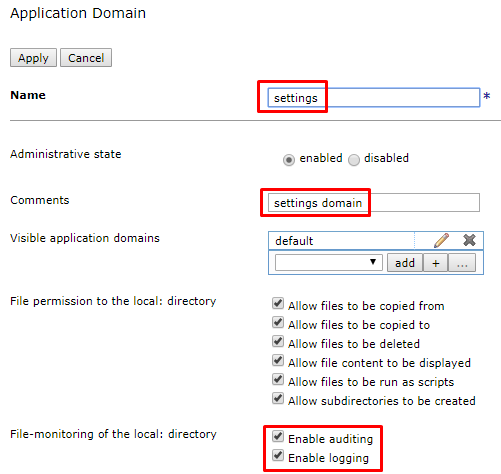

- In the search field, enter "domain", select "Application Domain" and click "Add"

- Here you need to specify the domain name (the first domain in this walkthrough is settings), add a comment if desired, and activate auditing and logging. Fill in the fields and apply the changes.

- Similarly, add another domain "trunk"

- Go to Review changes

- and save the domain configuration

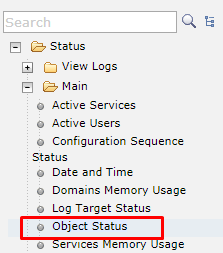

- You can check the status of created objects by going to the default domain in the navigation tree in Status -> Main -> Object Status

- Select View by: types

- Find the Application Domain in the list and check the status of the objects: each of them should be saved, turned on and in the up state. If so, proceed to the next chapter.

Part 3. Queue managers

A queue manager is not a required component of IBM DataPower, but MQ makes a good example for showing the power of this product. We will use MQ from IBM. During the testing described in Part 5, we will need to send a message to the local queue manager. In this article I do this with the rfhutil utility, but you can use any method available to you. For testing, you will need to create a connection from DataPower to your local queue manager using an MQ Queue Manager object.

3.1. Theory

The queue manager provides data exchange between the gateway and remote queue managers.

You can also configure an MQ Queue Manager Group, which improves system resiliency. This can be useful, for example, if you want to connect a client to any one of a set of running queue managers, and in some other cases described in the official documentation.

From project experience, only one feature is worth noting: at one point we wanted to implement load balancing by means of DataPower, in particular with queue manager groups, but in practice we found no way to do so. An alternative solution is to create a cluster of queue managers.
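The difference between resiliency and load balancing can be illustrated with a small sketch. It is not DataPower code: the function `connect_with_failover` and the `connect` callback are hypothetical, and the sketch only assumes that a group tries its members in order and uses the first one that answers, which is failover rather than distribution of load.

```python
def connect_with_failover(queue_managers, connect):
    """Try each queue manager in order and return (name, connection) for the first success.

    `connect` is a caller-supplied function (hypothetical here) that either
    returns a connection object or raises ConnectionError.
    """
    errors = {}
    for name in queue_managers:
        try:
            return name, connect(name)
        except ConnectionError as exc:
            # Remember why this member failed and move on to the next one
            errors[name] = str(exc)
    raise ConnectionError(f"no queue manager available: {errors}")
```

All traffic goes to the first healthy member, so adding members improves availability but does not spread requests between them.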

3.2. Practice

3.2.1. Preparation

- Install WebSphere MQ;

- Create a local queue manager LOCAL_DP_QM, available on port 3630;

- Configure the DP.SVRCONN channel;

When creating a channel, you may find the following commands helpful. The strmqm, runmqsc and endmqm lines are run from the operating system shell; the lines between runmqsc and END are entered in the runmqsc session. The queue manager is restarted at the end:

strmqm LOCAL_DP_QM

runmqsc LOCAL_DP_QM

DEFINE CHANNEL(DP.SVRCONN) CHLTYPE(SVRCONN) MCAUSER('evlasenko')

SET CHLAUTH('DP.SVRCONN') TYPE(USERMAP) CLNTUSER('evlasenko') USERSRC(CHANNEL) ADDRESS('*') ACTION(ADD)

SET CHLAUTH('DP.SVRCONN') TYPE(BLOCKUSER) USERLIST('nobody')

ALTER LISTENER(SYSTEM.DEFAULT.LISTENER.TCP) TRPTYPE(TCP) PORT(3630) CONTROL(QMGR)

ALTER AUTHINFO(SYSTEM.DEFAULT.AUTHINFO.IDPWOS) AUTHTYPE(IDPWOS) CHCKCLNT(OPTIONAL)

REFRESH SECURITY TYPE(CONNAUTH)

END

endmqm LOCAL_DP_QM

strmqm LOCAL_DP_QM

- Create the queues DP.IIB.REQIN and DP.IIB.RESIN

- Run rfhutil under the username for which you created the channel. In the Queue Manager Name (to connect to) field, specify:

DP.SVRCONN/TCP/127.0.0.1(3630)

- Try to load the list of queue names; there should be no errors in the message window. If so, the channel connection test has passed.

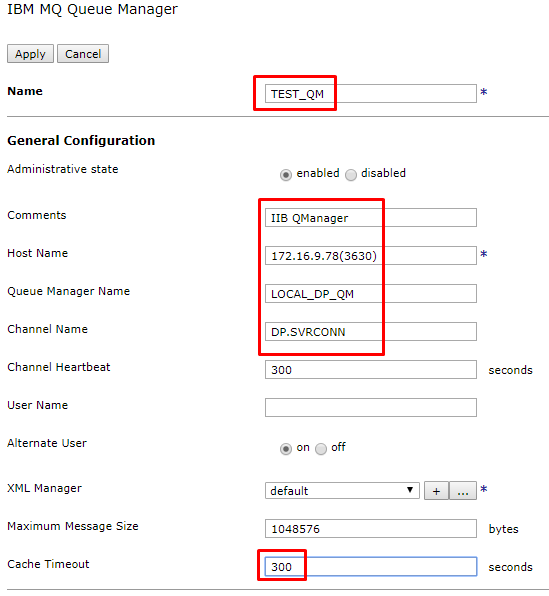
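The value entered in rfhutil follows the pattern channel/transport/host(port). As a rough illustration of how the parts fit together, here is a small parser; the names `ClientConnection` and `parse_client_connection` are hypothetical and are not part of rfhutil or IBM MQ.

```python
import re
from typing import NamedTuple


class ClientConnection(NamedTuple):
    channel: str
    transport: str
    host: str
    port: int


# channel/transport/host(port), for example DP.SVRCONN/TCP/127.0.0.1(3630)
_PATTERN = re.compile(
    r"^(?P<channel>[^/]+)/(?P<transport>[^/]+)/(?P<host>[^()]+)\((?P<port>\d+)\)$"
)


def parse_client_connection(value):
    """Split a client connection string into its four components."""
    match = _PATTERN.match(value.strip())
    if match is None:
        raise ValueError(f"unexpected connection string: {value!r}")
    return ClientConnection(
        channel=match["channel"],
        transport=match["transport"].upper(),
        host=match["host"],
        port=int(match["port"]),
    )
```

The channel and port here correspond to the DP.SVRCONN channel and the listener port 3630 configured for LOCAL_DP_QM above.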

3.2.2. Create IBM MQ Queue Manager

- Switch to the trunk domain.

- In the search type MQ, select IBM MQ Queue Manager and click add.

- You need to specify the name (TEST_QM), the hostname of the queue manager, the name of the queue manager and the name of the channel, as well as a timeout. Configure and save your changes.

Check the status of the queue manager object in the same way as checking the status of domains. To do this, from the trunk domain, select Object Status and filter the View by: types. In the IBM MQ Queue Manager section, find the corresponding object and check its status.

Part 4. Multiprotocol gateways

4.1. Theory

A Multi-Protocol Gateway (MPG) receives requests from clients over various protocols and then passes them to a remote server, also over various protocols. The protocol used by the client does not have to match the protocol used by the remote server.

In the main MPG settings you can define the following components:

- XML Manager - manages the compilation and caching of style sheets (xsl, xslt), document caching.

- Policy - consists of rules, each of which defines a set of actions applied to a message passing through a gateway.

- Front-side and back-side settings (URLs, types of incoming and outgoing messages, timeouts and others).

A few words about the project:

The project implementation uses 4 multi-protocol gateways: one routing gateway, 2 transformation gateways for different end systems, and an additional one designed to serve files from the settings domain. The diagram below shows the general interaction scheme:

The number of MPGs may vary depending on the overall solution architecture. In our case, DataPower sits in front of an integration bus (IIB) and microservices whose interfaces differ significantly (json / http versus xml / mq), so we decided to build a separate transformation MPG for each backend and name it accordingly. We work with json / http for all clients, so there is a single routing MPG. The basic MPGs consist of 3 message processing rules: request, response, and error. Each rule consists of the necessary actions, such as transformation, logging, routing, and others.

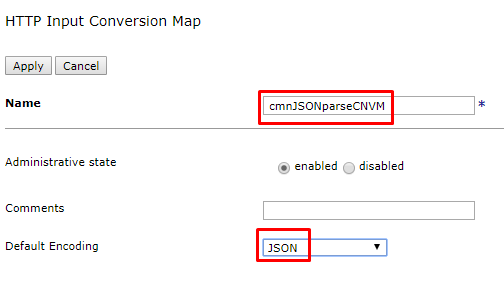
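The request / response / error structure of a policy can be pictured with a few lines of code. This is only a conceptual sketch under the assumption that the error rule runs whenever request processing, the backend call or response processing fails; the class `Policy` and its method names are hypothetical and do not mirror any DataPower API.

```python
class Policy:
    """Three processing rules, mirroring the request, response and error rules of an MPG policy."""

    def __init__(self, request_rule, response_rule, error_rule):
        self.request_rule = request_rule
        self.response_rule = response_rule
        self.error_rule = error_rule

    def process(self, message, call_backend):
        """Run the request rule, call the backend, then run the response rule.

        Any exception along the way is handed to the error rule instead.
        """
        try:
            outbound = self.request_rule(message)
            reply = call_backend(outbound)
            return self.response_rule(reply)
        except Exception as exc:
            return self.error_rule(exc)
```

In this picture each rule is a single function, whereas a real rule is a chain of actions such as transformation, logging and routing.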

One feature to watch out for: if your policy uses the ConvertQueryParamToXML action, pay attention to InputConversion. If you set the Default Encoding value to JSON and send a GET request, you will be surprised to find that the message has undergone none of the transformations you specified, and you will find no trace of it. Creating a separate rule for GET requests works around this.

4.2. Practice

4.2.1. Preparation

All the necessary files for the work can be found at https://github.com/EvgenyaVlasenko/IBM_DataPower.git

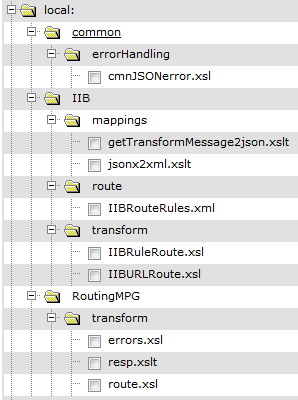

4.2.1.1. Trunk domain

- Switch to the trunk domain.

- In the control panel, select File Management.

- In the local directory, create the following directory structure and place the corresponding files in it (each file contains a brief description of the functions it performs, and the files are covered in more detail later in the article).

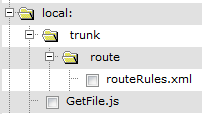

4.2.1.2. Settings domain

- Switch to the settings domain.

- In the control panel, select File Management.

- In the local directory, create the following directory structure and place the corresponding files in it (a short description is also contained inside the files).

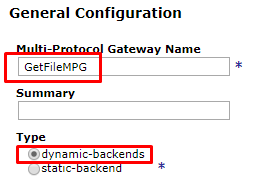

4.2.2. Create GetFileMPG

First we will create a simple auxiliary MPG that will return files from the settings domain.
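The idea behind this gateway, returning a local file whose name comes from the request URI, can be sketched as follows. This is not the GatewayScript file from the repository: the function names are hypothetical, and the check that the resolved path stays inside the base directory is an extra precaution assumed here, not something the source describes.

```python
from pathlib import Path
from urllib.parse import unquote, urlsplit


def resolve_local_file(base_dir, request_uri):
    """Map the path part of a request URI onto a file below base_dir."""
    base = Path(base_dir).resolve()
    relative = unquote(urlsplit(request_uri).path).lstrip("/")
    candidate = (base / relative).resolve()
    # Assumption: refuse paths such as /../secret that escape the base directory
    if candidate != base and base not in candidate.parents:
        raise PermissionError(f"path escapes base directory: {request_uri}")
    if not candidate.is_file():
        raise FileNotFoundError(relative)
    return candidate


def read_local_file(base_dir, request_uri):
    """Return the file content that would become the response body."""
    return resolve_local_file(base_dir, request_uri).read_bytes()
```

With this sketch, a request for /trunk/route/routeRules.xml would return the content of trunk/route/routeRules.xml under the base directory, which plays the role of local:/// in the settings domain.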

- Switch to the settings domain.

- In the control panel, select Multi-Protocol Gateway and click create.

- Specify a name (GetFileMPG), a description (optional) and the backend type (dynamic). Since no backend is actually called and the gateway only returns a file from the local file system, any backend type will do in this example.

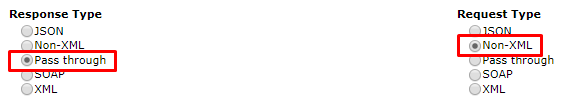

- Set the request and response types. Specifying the types explicitly reduces the number of built-in checks. The Pass through type lets you skip creating a rule (in this case, for transforming the response). If we also set the Request Type to Pass through, we would not be able to process the message at all. That does not suit us, so we restrict the request type to Non-XML.

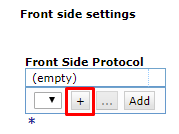

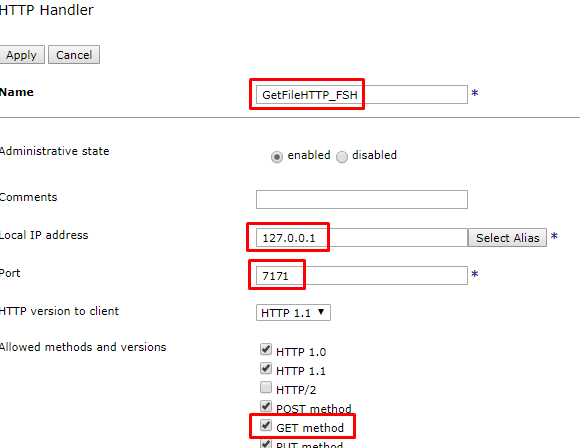

- Click on + and select HTTP Handler to create a new Front Side Protocol.

- Here you should specify the name, IP address, port and list of allowed methods. Pay attention to the IP address: if you specify 0.0.0.0, anyone can access this gateway; if you specify 127.0.0.1, only other gateways within the same DataPower can. Since the settings include security parameters, we use the second option. Fill in the fields and click "Apply"; the protocol will be added to the gateway automatically.

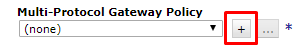

- Click on + to add a new policy.

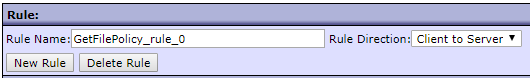

- Fill in the policy name "GetFilePolicy".

- Create a new rule and fill in its name and direction. Since there is no real backend and only the requested file is returned, there will be only one rule (client to server).

- Double-click the Match action to configure it, select an existing matching rule and apply the changes. Strictly speaking, it would be correct to allow only GET requests here, but for the purposes of this tutorial you can choose an existing one.

- Add another action, GatewayScript, by dragging it onto the rule, and configure it. As the transformation, use the prepared file, which looks up a file in the local:/// file system by the name taken from the URI of the incoming request and places it in the message body. The result is passed directly to the output buffer of the rule. Save the changes.

- The final result of the policy should look like this:

- The creation of the MPG is complete, save the changes.

- You can check the success of its creation using Object Status, similar to checking the status of domains and the queue manager. To do this, from the settings domain, select Object Status and filter View by: services. In the “Multi-Protocol Gateway” section, find the corresponding object and check its status.

- You cannot call this MPG from the outside, because you restricted it by IP address. Temporarily change the IP address from 127.0.0.1 to 0.0.0.0 and the port from 7171 to 7170, and run the following request:

curl -vv -k "http://192.168.99.100:7170/trunk/route/routeRules.xml"

- You should get the following response:

- Change the IP address and port back to the original 127.0.0.1:7171.

4.2.3. Creating RoutingMPG

Now create a RoutingMPG. Based on the input request and the routing rules, it will determine where and with what parameters the request should be sent.
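To make the routing idea concrete, here is a minimal sketch of a lookup by URI. The XML layout, the header name X-Example-Transform-Name and the function `select_route` are all hypothetical; the real format of routeRules.xml and the logic of route.xsl are in the repository and may differ.

```python
import xml.etree.ElementTree as ET

# Hypothetical rules file: one rule per URI, with a backend URL and extra headers
SAMPLE_RULES = """
<routeRules>
  <rule uri="/dp/test/transformMessage">
    <backend>http://127.0.0.1:7172</backend>
    <header name="X-Example-Transform-Name">transformMessage</header>
    <header name="X-Example-Timeout">30</header>
  </rule>
</routeRules>
"""


def select_route(rules_xml, request_uri):
    """Return (backend_url, headers) for the rule whose uri attribute matches."""
    root = ET.fromstring(rules_xml.strip())
    for rule in root.findall("rule"):
        if rule.get("uri") == request_uri:
            headers = {h.get("name"): (h.text or "") for h in rule.findall("header")}
            return rule.findtext("backend"), headers
    raise LookupError(f"no routing rule for {request_uri}")
```

Part of the result (the backend URL) is used for routing itself, while the headers carry parameters on to the next MPG.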

- Switch to the trunk domain.

- Create a new Multi-Protocol Gateway following steps 2-10 of section 4.2.2, using the following values:

- 3 - name: RoutingMPG, type of backend: Dynamic (to be able to route requests to different MPGs, if necessary).

- 4 - Rq: Non-xml, Rs: Non-xml.

- 6 - name: RoutingHTTP_FSH, ip: 0.0.0.0, port: 7170, + Get method.

- 8 - Name: RoutingPolicy.

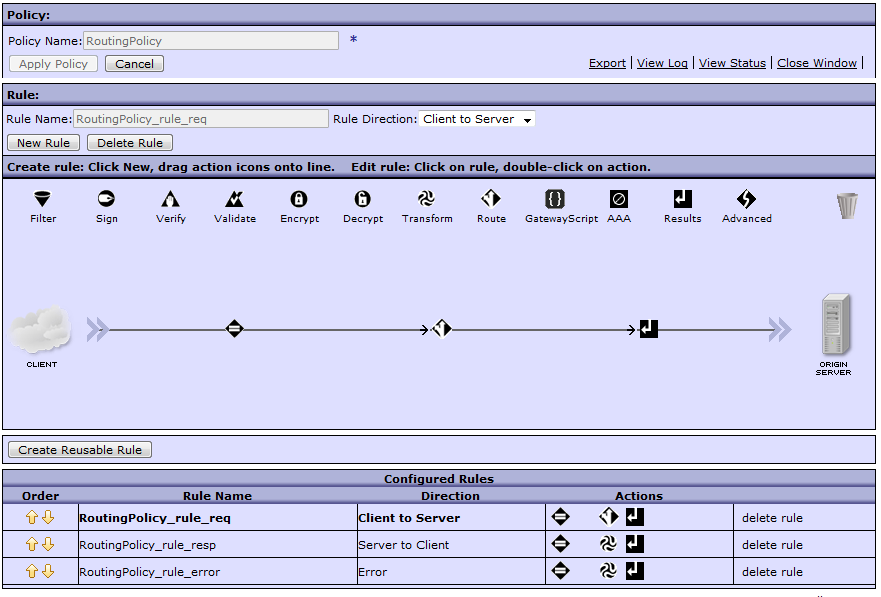

- 9 - name: RoutingPolicy_rule_req, direction: Client to Server.

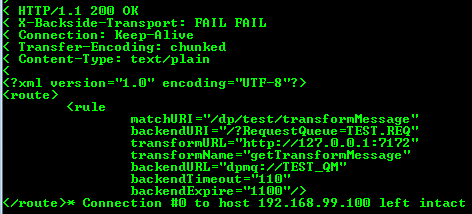

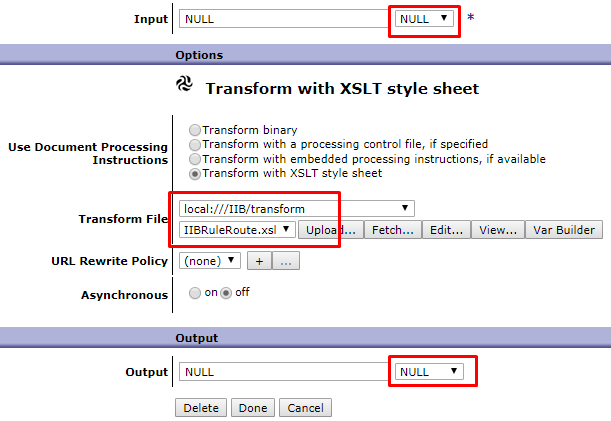

- Add another action to route the request. To do this, drag the Route action onto the rule, double-click it to configure it, fill in the fields and apply the changes. The route.xsl file fetches the routing settings file from the settings domain through the previously created GetFileMPG. Then, based on the URI, it selects from that file the settings needed for this operation. Some of them are used for routing, and some are added to headers for use in other MPGs. The Input and Output parameters only define how the message body is handled and do not affect headers or variables. Therefore the input is NULL, since no information from the message body is used for routing, and the output is NULL, since the transformation only changes service information.

- Similarly, create 2 more rules and save all changes:

- Direction: Server to Client, name: RoutingPolicy_rule_resp;

Transformation: Input INPUT, Output NULL, transformation file local:///RoutingMPG/transform/resp.xslt. The resp.xslt file reads the HTTP response status of the transformation MPG and explicitly sets it on the RoutingMPG response. If this is not done, the default code will be 200, even if an error occurred in the transformation MPG.

- Direction: Error, name: RoutingPolicy_rule_error;

Transformation: Input INPUT, Output PIPE (according to the documentation, using PIPE between two adjacent action nodes as INPUT and OUTPUT can eliminate additional processing and reduce the amount of memory used), transformation file local:///RoutingMPG/transform/errors.xsl. The errors.xsl file takes the error code and text from the response of the transformation MPG and generates a JSON error message in the format expected by the client.

- The final result of the policy should look like this:

- The creation of the MPG is complete, save the changes.

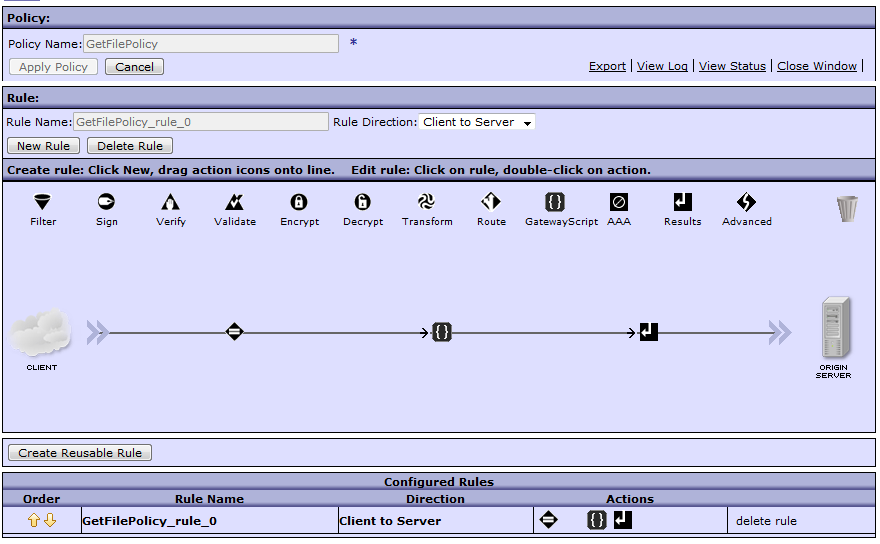

- Using, for example, the curl utility, run the following query:

curl -vv -k "http://192.168.99.100:7170/dp/test/transformMessage"

- If you get the following error, then everything is correct. This error means that the message was successfully received and processed, but the attempt to call the backend (in this case, IIBMPG) failed. Go to the next step.
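The response and error rules of RoutingMPG have two jobs: pass the status of the transformation MPG through to the client, and wrap failures in a JSON body. A rough sketch of that behaviour is shown here. The function name and the JSON shape are hypothetical; the real format expected by the client is defined by errors.xsl in the repository.

```python
import json


def build_routing_response(backend_status, backend_body):
    """Return (status, body) as the routing gateway would send it to the client."""
    if 200 <= backend_status < 300:
        # Success: pass status and body through unchanged
        return backend_status, backend_body
    # Failure: keep the backend status instead of a default 200 and wrap the text in JSON
    error = {"error": {"code": backend_status, "message": backend_body}}
    return backend_status, json.dumps(error)
```

The key point is that the status is always copied from the backend reply, so an error in the transformation MPG never reaches the client as a 200.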

4.2.4. Create IIBMPG

The next step is to create a transformation MPG. Suppose the external system sends requests in JSON, while the internal system works with XML. We need to convert the incoming message so that the internal system can understand it. Note that this is not always a straightforward conversion of the entire message: often a truncated or supplemented message has to be passed on, sometimes with a completely reworked structure.
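As an illustration of a truncating transformation, the sketch below converts a JSON object to XML while keeping only selected fields. In the project this work is done by XSLT files inside DataPower; the function `json_to_xml`, the element names and the field mapping are hypothetical.

```python
import json
import xml.etree.ElementTree as ET


def json_to_xml(json_text, root_name, field_map):
    """Build an XML document from selected top-level JSON fields.

    field_map maps JSON keys to XML element names; keys that are not
    listed are dropped, which gives a truncated message.
    """
    data = json.loads(json_text)
    root = ET.Element(root_name)
    for json_key, xml_tag in field_map.items():
        if json_key in data:
            ET.SubElement(root, xml_tag).text = str(data[json_key])
    return ET.tostring(root, encoding="unicode")
```

For instance, calling it with `'{"id": 7, "name": "Ann", "debug": true}'`, the root name `"Request"` and the mapping `{"id": "Id", "name": "Name"}` produces an XML message with Id and Name elements and silently omits the debug field.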

- Switch to the trunk domain.

- Create a new Multi-Protocol Gateway following steps 2-10 of section 4.2.2, using the following values:

- 3 - Name: IIBMPG, Backend Type: Dynamic

- 4 - Rq: JSON, Rs: XML

- 6 - name: IIBHTTP_FSH, ip: 127.0.0.1 (only requests from the same DataPower), port: 7172, + Get method

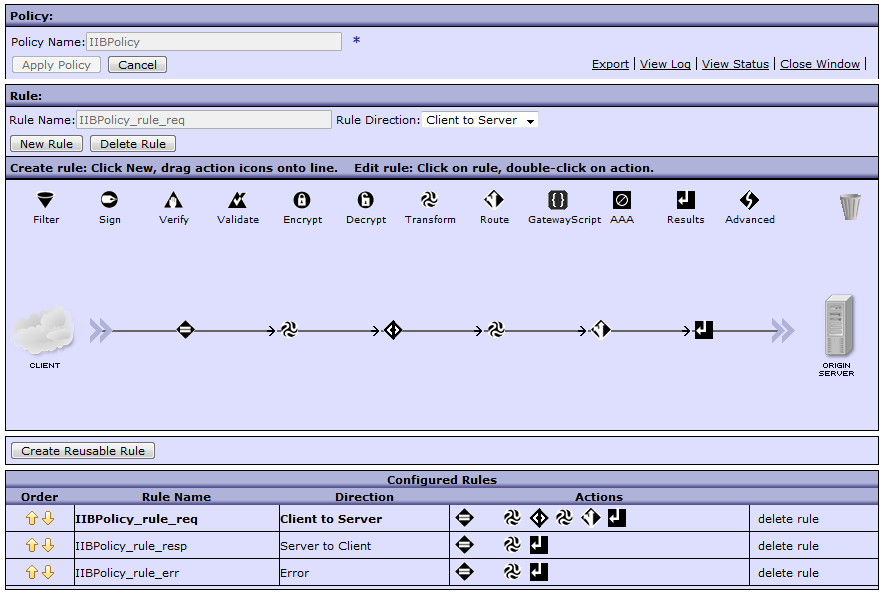

- 8 - Name: IIBPolicy

- 9 - name: IIBPolicy_rule_req, direction: Client to Server

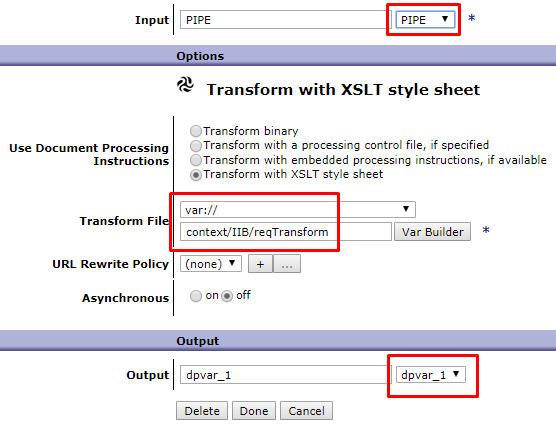

- Add a description. The IIBRuleRoute.xsl file uses the X-DP Transform-Name value from the request headers to look up, in the local:///IIB/route/IIBRouteRules.xml file, the request, response and error transformation files for this service, and stores them in the corresponding context variables var://context/IIB/reqTransform, var://context/IIB/ansTransform and var://context/IIB/errTransform. Other values from the headers (url, uri, expire, timeout) are also placed in context variables.

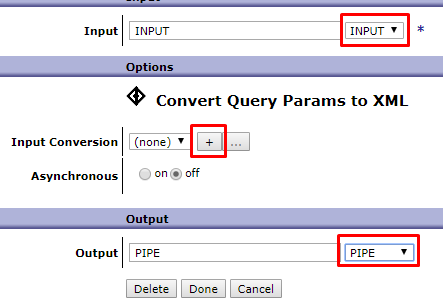

- Add another action by dragging Advanced onto your rule and selecting Convert Query Params to XML from the list, then configure it. You need to add a new input conversion map, giving it a name (cmnJSONParseCNVM) and the required type (JSON).

- Add a transformation after the standard conversion and configure it. The transformation file is specified through the context variable set earlier. This keeps the transformation universal: the file itself is substituted on the fly, depending on the incoming message. The message body is now ready. The next action only routes the message and does not change its body, so create a variable dpvar_1 and save the result in it. This is the variable we specify as the input of the Results action. Save the changes.

- Add a routing action and set the following parameters. The IIBURLRoute.xsl file reads the context variables, sets some of them as service variables of the request, and builds from the others the URI for the request to the target system, which it also saves in a service variable.

- Similarly, create 2 more rules and save all changes:

- Direction: Server to Client, name: IIBPolicy_rule_resp;

Transformation: Input INPUT, Output PIPE, transformation file var://context/IIB/ansTransform (a context variable, so that the response transformation is substituted on the fly).

- Direction: Error, name: IIBPolicy_rule_error;

Transformation: Input NULL, Output PIPE, transformation file var://context/IIB/errTransform (a context variable, so that the error transformation is substituted on the fly).

- The final result of the policy should look like this:

- The creation of the MPG is complete, save the changes.
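The on-the-fly substitution of transformations can be thought of as a lookup table that is filled once per request and then consulted by each rule. The sketch below models this with plain functions. The header name X-Example-Transform-Name and the helper names are hypothetical; only the three context keys reqTransform, ansTransform and errTransform come from the description above.

```python
# Which context variable each rule reads its transformation from
RULE_TO_CONTEXT_KEY = {
    "request": "reqTransform",
    "response": "ansTransform",
    "error": "errTransform",
}


def build_context(headers, route_rules, header_name="X-Example-Transform-Name"):
    """Pick the transformations for the service named in the request header."""
    service = headers[header_name]
    entry = route_rules[service]
    return {RULE_TO_CONTEXT_KEY[rule]: entry[rule] for rule in RULE_TO_CONTEXT_KEY}


def run_rule(context, rule, payload):
    """Apply whichever transformation the context holds for this rule."""
    transform = context[RULE_TO_CONTEXT_KEY[rule]]
    return transform(payload)
```

Adding a new service then means adding one entry to the rules table, without touching the policy itself, which is the point of keeping the transformation generic.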

Part 5. Testing

5.1. Preparation

- Download, for example, the rfhutil utility to read and write messages to a queue;

- Test files are located in the tests folder of the same directory as the project files.

5.2. Health check

- Send the request using the curl utility (for the request below, the current directory should be the same as example.json).

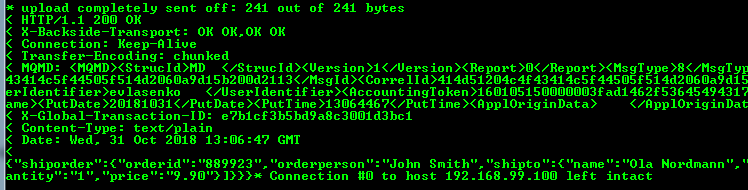

curl -vv -k "http://192.168.99.100:7170/dp/test/transformMessage" -H "Content-Type: application/json" --data-binary @example.json

- Open 2 instances of the rfhutil utility and read the message from the DP.IIB.REQIN queue with the first instance;

- Go to the MQMD tab and copy the MessageID;

- In the second instance, open the rs.xml file, in the MQMD tab, paste the message identifier into the CorrelID and put the message in the DP.IIB.RESIN queue;

- You should get a similar answer:

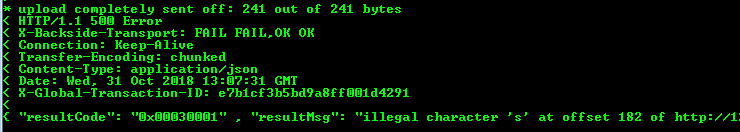

- Repeat steps 1-3;

- In the second instance, open the rs_error.xml file and put the message on the queue with the CorrelID filled in;
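Copying the MessageID of the request into the CorrelID of the reply is what lets the gateway pair a reply with the request that is still waiting for it. A minimal sketch of that pairing is shown here, with a hypothetical `ReplyMatcher` class and made-up identifiers; it does not use any MQ client library.

```python
class ReplyMatcher:
    """Pair replies with pending requests by correlation identifier."""

    def __init__(self):
        self._pending = {}

    def request_sent(self, message_id, request):
        """Remember a request that is waiting for its reply."""
        self._pending[message_id] = request

    def reply_received(self, correl_id, reply):
        """Return (request, reply) for a matching reply, or raise KeyError."""
        if correl_id not in self._pending:
            raise KeyError(f"no pending request for CorrelID {correl_id}")
        request = self._pending.pop(correl_id)
        return request, reply
```

A reply whose CorrelID matches no pending MessageID is not delivered to any caller, which is why the identifier has to be pasted exactly in the manual test above.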

Part 6. Logging

6.1. Theory

Log Targets , .

Log Targets . , 4 1000Kb, . 2-4 . , . , DataPower, , , , , , - MPG, .

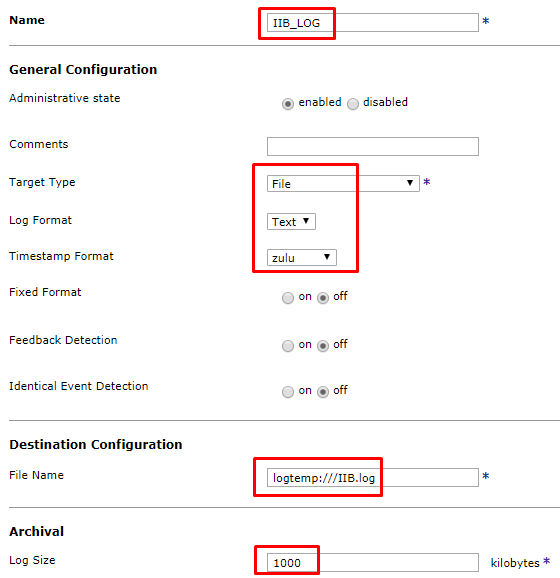

6.2. Practice

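- Switch to the trunk domain;

- Create a new Log Target;

- Fill in the following fields:

- name (IIB_LOG);

- target type (File);

- log format (Text);

- timestamp format (zulu);

- file name (logtemp:///IIB.log, the log for IIBMPG);

- log size (1000).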

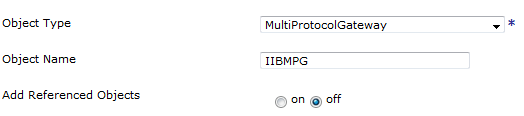

- . MPG IIBMPG.

- , , ( , ).

- ;

- ;

- .

- Log Targets MPG.

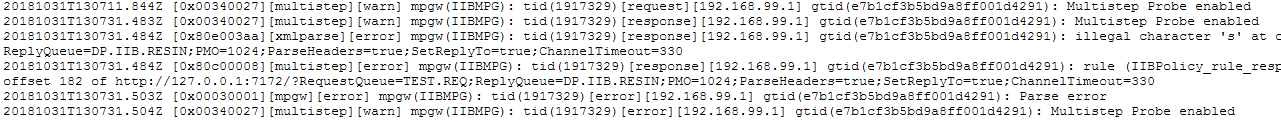

- :

- , , .

- .

- , , .

7. -

- – , . – , , - .

- . View Logs. , « », « » .

- . . MPG Show Probe -> Enable Probe. . , .

- , .

– .

Source: https://habr.com/ru/post/442686/
