Memory allocation mechanisms in Go

When I first tried to understand how Go memory allocators work, what I wanted to figure out seemed to me a mysterious black box. As is the case with any other technology, the most important thing here lies behind the many layers of abstractions, through which you need to sneak in order to understand something.

The author of the material, the translation of which we publish, decided to get to the essence of the memory allocation tools in Go and tell about it.

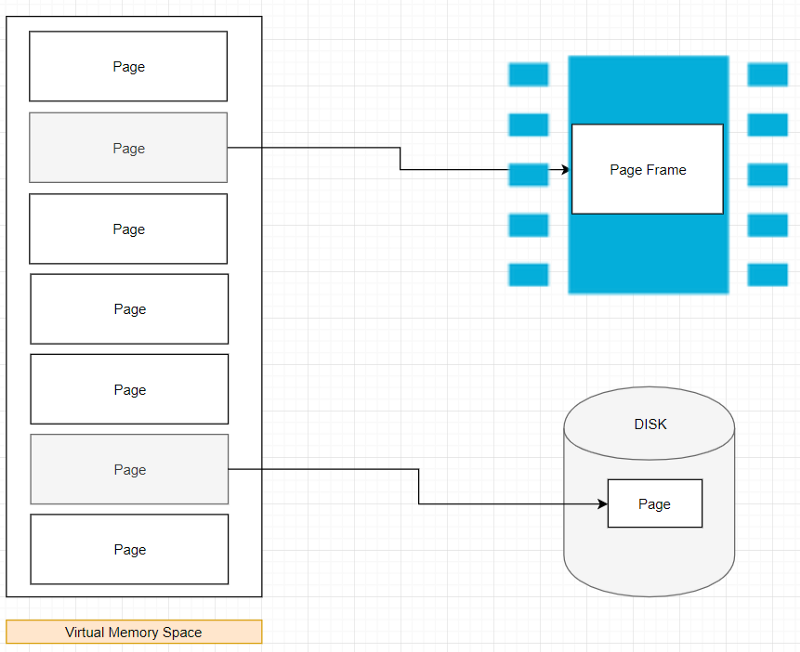

All means for memory allocation have to work with the address space of virtual memory, which is controlled by the operating system. Let's take a look at how memory works, starting from the lowest level - from memory cells.

Here's how you can imagine a cell of RAM.

')

Memory cell diagram

If, very simply, to imagine a cell of RAM and what surrounds it, then we will have the following:

The scheme of interaction of physical memory and processor

The data bus is responsible for transporting data between the processor and the physical memory.

Now let's talk about the address line and addressable bytes.

Address bus lines between the processor and physical memory

As a result, it turns out that the number of addressable bytes depends on the total number of address lines. For example, if you have 64 address lines (x86–64 processors), you can address 2 64 bytes (16 exabytes) of memory, but most architectures that use 64-bit pointers actually use 48-bit address lines (AMD64) and 42-bit address lines (Intel), which, theoretically, allows computers to be equipped with 256 terabytes of physical memory (Linux allows, on x86–64 architecture, when using address pages of 4 levels, to allocate processes up to 128 TB of address space, Windows allows 192 TB).

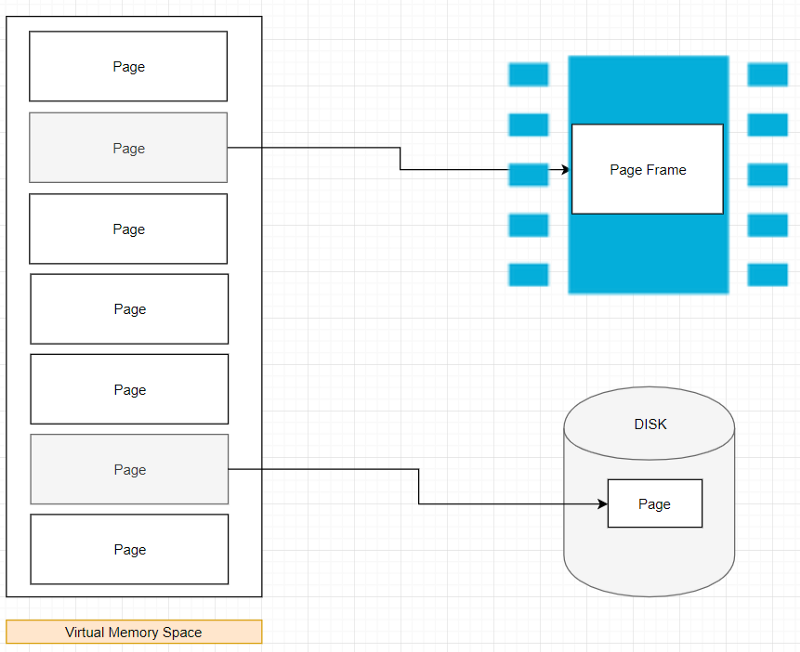

Since the size of the physical RAM is limited, each process runs in its own sandbox - in the so-called “virtual address space”, called virtual memory.

The byte addresses in the virtual address space do not correspond to the addresses that the processor uses to access physical memory. As a result, you need a system that allows you to convert virtual addresses into physical ones. Take a look at how virtual memory addresses look like.

Representation of the virtual address space

As a result, when the processor executes an instruction that refers to a memory address, the first step is to convert the logical address to a linear address. This conversion is performed by the memory management unit.

Simplified representation of the relationship of virtual and physical memory

Since logical addresses are too large to make it convenient to work with them separately (depending on various factors), the memory is organized into structures called pages. In this case, the virtual address space is divided into small areas, pages that are 4 KB in size in most OS, although this size can usually be changed. This is the smallest unit of memory management in virtual memory. Virtual memory does not store anything; it simply sets the correspondence between the address space of the program and the physical memory.

Processes see only virtual memory addresses. What happens if the program needs more dynamic memory (such memory is also called heap memory, or “heap”)? Here is an example of a simple assembler code in which additional dynamically allocated memory is requested from the system:

Here's how it can be represented as a diagram.

Increase in dynamically allocated memory

The program requests additional memory using the brk system call (sbrk / mmap and so on). The kernel updates virtual memory information, but new pages are not yet represented in physical memory, and here there is a difference between virtual and physical memory.

After we, in general terms, discussed working with virtual address space, talked about how to perform the request for additional dynamic memory (memory in the heap), it will be easier for us to talk about the means for memory allocation.

If there is enough memory in the heap to satisfy our code requests, then the memory allocator can execute these requests without referring to the kernel. Otherwise, it has to increase the heap size using a system call (using brk, for example), while requesting a large block of memory. In the case of malloc, “large” means the size described by the

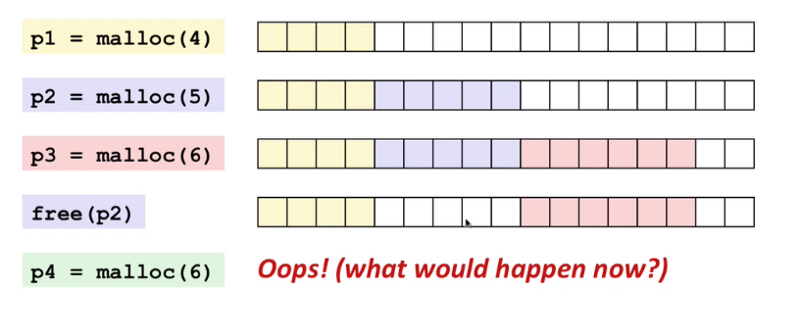

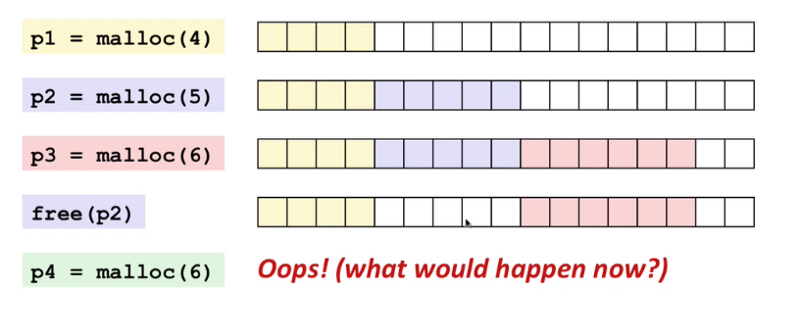

However, a memory allocator has more responsibilities than a simple memory allocation. One of his most important responsibilities is to reduce internal and external memory fragmentation, and to allocate memory blocks as quickly as possible. Suppose that our program sequentially executes requests for allocating continuous memory blocks using a function of the

Demonstration of External Fragmentation

In the previous scheme, in step p4, we do not have enough consecutive blocks of memory to fulfill the request for the allocation of six such blocks, although the total amount of free memory allows this. This situation leads to memory fragmentation.

How to reduce memory fragmentation? The answer to this question depends on the specific memory allocation algorithm, on which basic library is used for working with memory.

We will now look at the TCMalloc memory allocator, on which the Go memory allocation mechanisms are based.

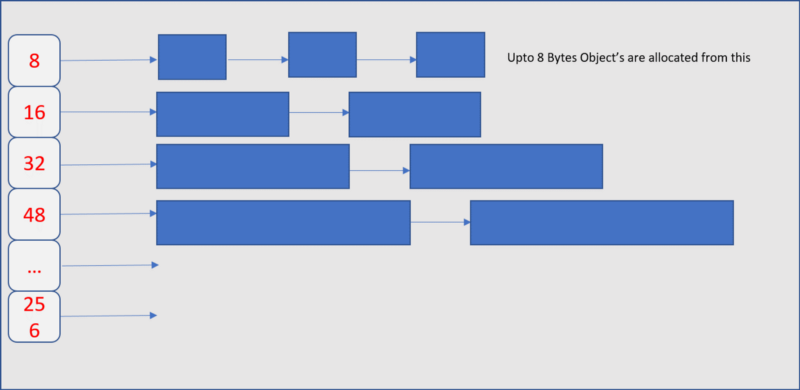

At the heart of TCMalloc is the idea of sharing memory into several levels for the sake of reducing memory fragmentation. Inside TCMalloc, memory management is divided into two parts: working with the memory of threads and working with a heap.

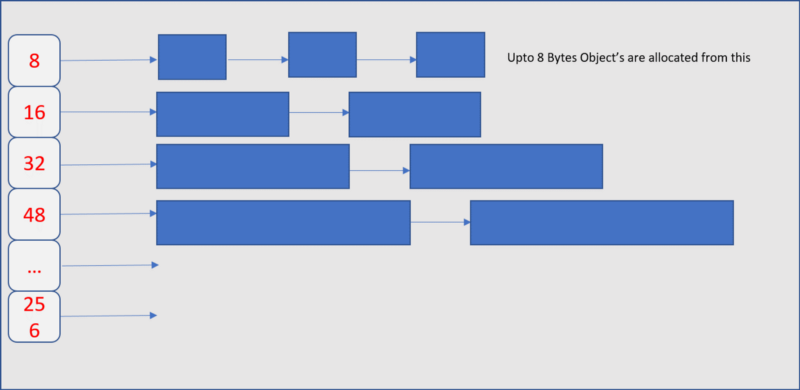

Each page of memory is divided into a sequence of fragments of certain sizes, selected in accordance with the size classes. This reduces fragmentation. As a result, each stream has a cache for small objects, which makes it very efficient to allocate memory for objects whose size is less than or equal to 32 KB.

Thread cache

A TCMalloc managed heap is a collection of pages in which a set of consecutive pages can be represented as a range of pages (span). When you need to allocate memory for an object whose size exceeds 32 KB, a heap is used to allocate memory.

Heap and work with pages

When there is not enough space to accommodate small objects in memory, they turn to the heap for memory. If there is not enough free memory on the heap, additional memory is requested from the operating system.

As a result, the presented model of working with memory supports a user-space memory pool, its use significantly improves the efficiency of memory allocation and freeing.

It should be noted that the Go memory allocator was originally based on TCMalloc, but it differs slightly from it.

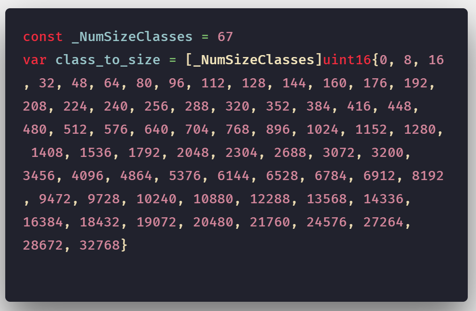

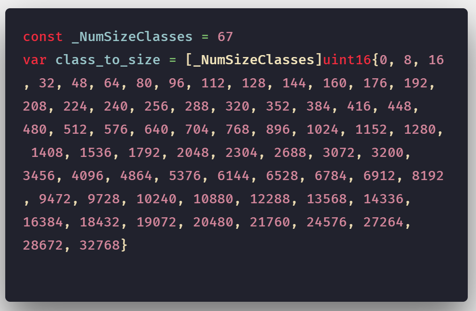

We know that the Go runtime schedules gorutin execution on logical processors. Similarly, the version of TCMalloc used in Go divides memory pages into blocks whose dimensions correspond to certain size classes, which exist 67.

If you are not familiar with the Go planner , you can read about it here .

Go Classes

Since the minimum page size in Go is 8192 bytes (8 KB), if such a page is divided into blocks of 1 KB in size, we will get 8 such blocks.

An 8 KB page is divided into blocks corresponding to a 1 KB size class.

Similar page sequences in Go are managed using a structure called mspan.

The mspan structure is a doubly linked list, an object that contains the starting address of the page, information about the page size and the number of pages included in it.

Mspan structure

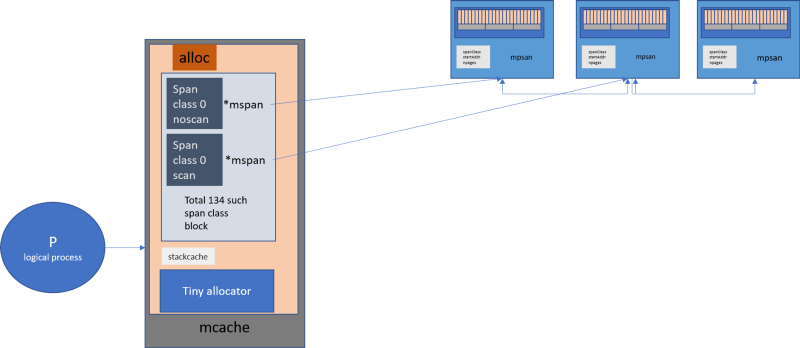

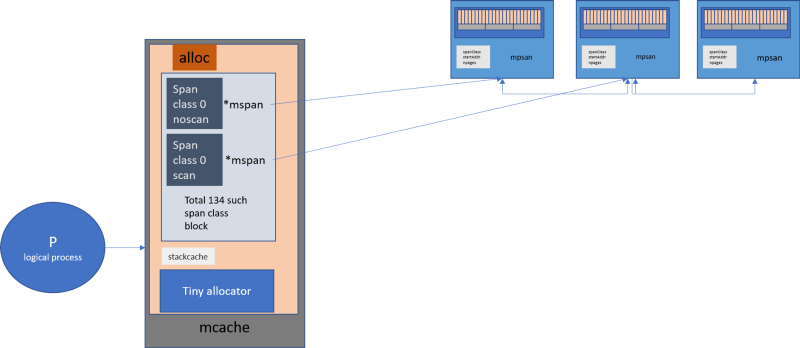

Like TCMalloc, Go provides each logical processor with a local cache for threads, known as mcache. As a result, if a gorutin needs memory, she can get it directly from mcache. To do this, you do not need to perform locks, since at any time only one gorutin is executed on one logical processor.

The mcache structure contains, in the form of a cache, mspan structures of various size classes.

The interaction between the logical processor, mcache and mspan in Go

Since each logical processor has its own mcache, there is no need for locks when allocating memory from mcache.

Each size class can be represented by one of the following objects:

One of the strengths of this approach is that when garbage collection is performed, noscan objects need not be bypassed, since they do not contain objects for which memory is allocated.

What gets into mcache? Objects that do not exceed 32 KB in size fall directly into mcache using the appropriate size class mspan.

What happens if there is no free cell in mcache? Then get a new mspan of the desired size class from the mspan object list, which is called mcentral.

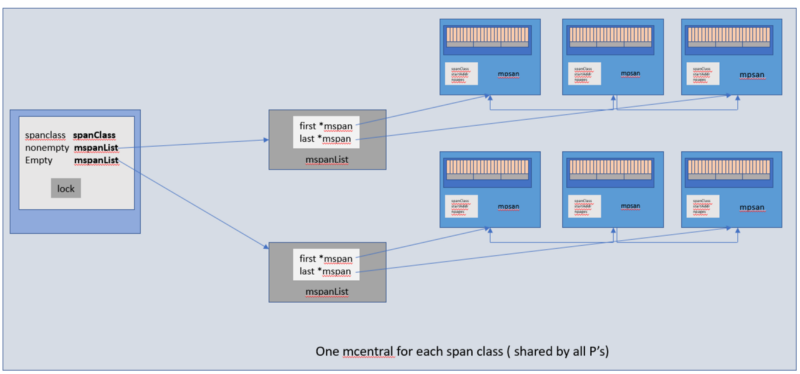

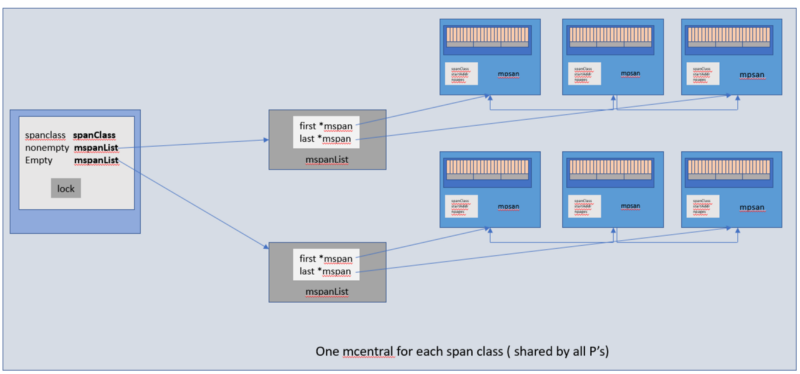

The mcentral structure collects all page ranges of a particular size class. Each mcentral object contains two lists of mspan objects.

Mcentral structure

Each mcentral structure exists within the mheap structure.

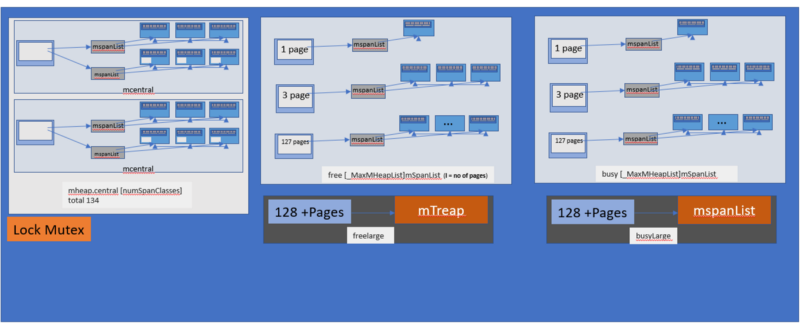

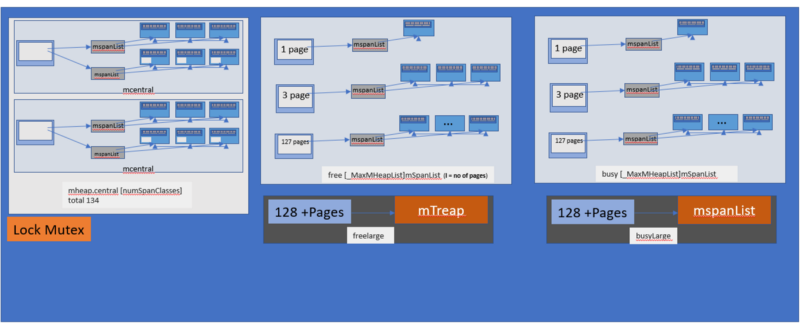

The mheap structure is represented by an object that handles the heap in Go. There is only one such global object that owns the virtual address space.

Mheap structure

As can be seen from the above diagram, the mheap structure contains an array of mcentral structures. This array contains mcentral structures for all size classes.

Since we have a mcentral structure for each size class, when mcache requests the mspan structure from mcentral, blocking is applied at the mcentral individual level, as a result, requests from other mcache that request mspan structures of other sizes can be served simultaneously.

The alignment (pad) allows you to ensure that the mcentral structures are separated from each other by the number of bytes corresponding to the

What happens if the mcentral list is empty? Then mcentral receives a sequence of pages from mheap to allocate fragments of memory of the required size class.

Objects that are larger than 32 KB are considered large objects, and memory for them is allocated directly from mheap. Requests for the allocation of memory for such objects are performed using a block, as a result, at a given point in time, a similar request from only one logical processor can be processed.

In fact, at the operating system level, Go requests the allocation of even larger chunks of memory, called arenas. The one-time allocation of large pieces of memory allows you to find a compromise between the amount of memory allocated to the application and costly in terms of performance appeals to the operating system.

The memory requested in the heap is allocated from the arena. Consider this mechanism.

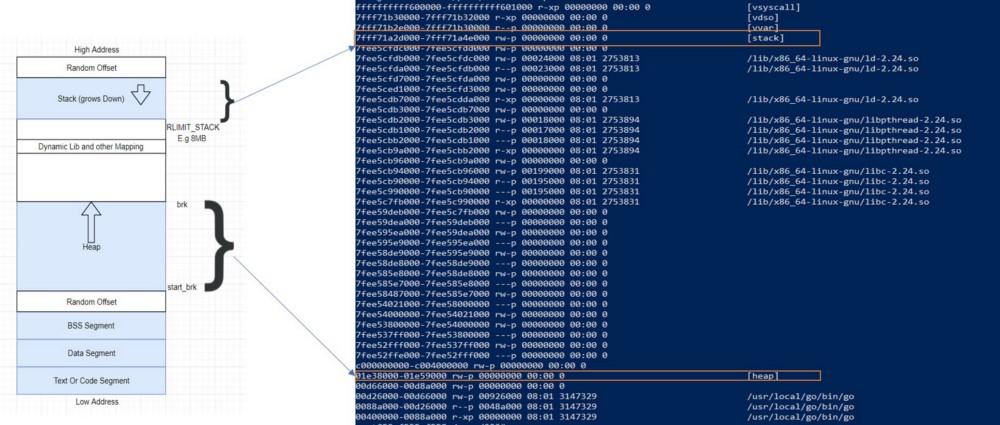

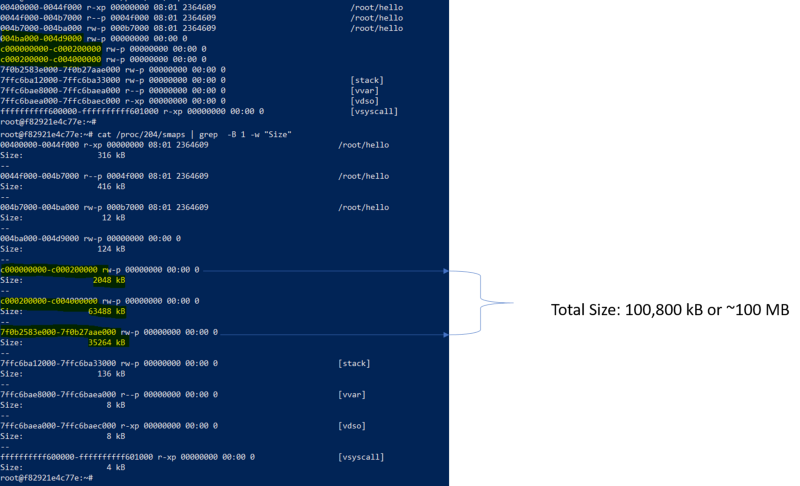

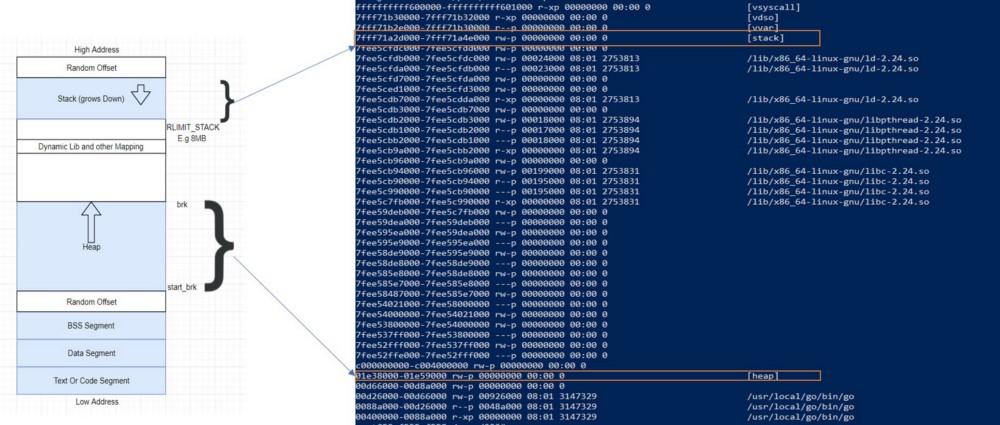

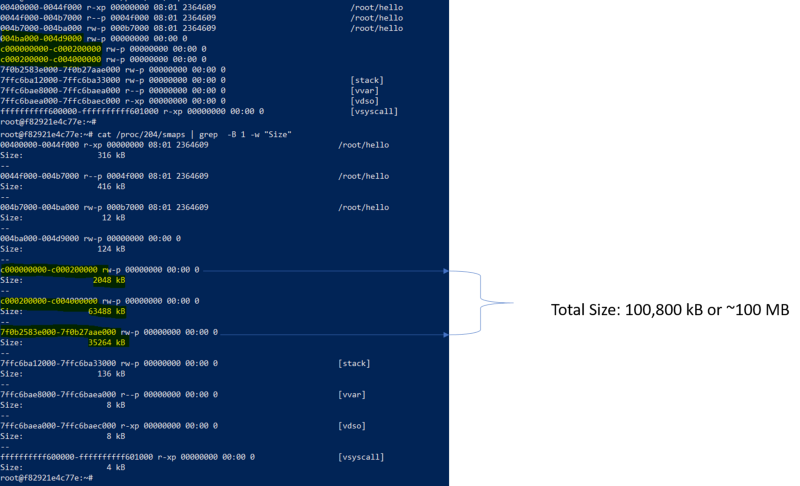

Take a look at the memory usage of a simple program written in Go:

Information about the process of the program

The virtual address space of even such a simple program is approximately 100 MB, while the RSS rate is only 696 KB. To begin, let's try to find out the reason for this discrepancy.

Map and smap data

Here you can see the memory area, the size of which is approximately equal to 2 MB, 64 MB, 32 MB. What is this memory?

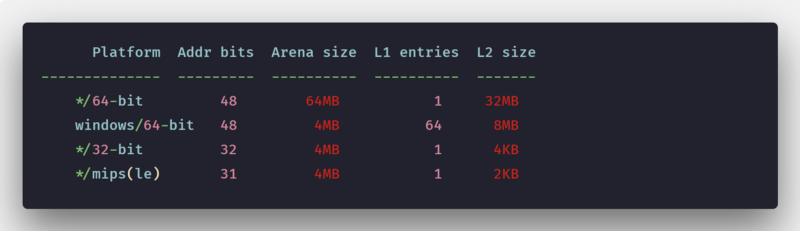

It turns out that virtual memory in Go consists of a set of arenas. The original memory size intended for the heap corresponds to one arena, that is, 64 MB (this is relevant for Go 1.11.5).

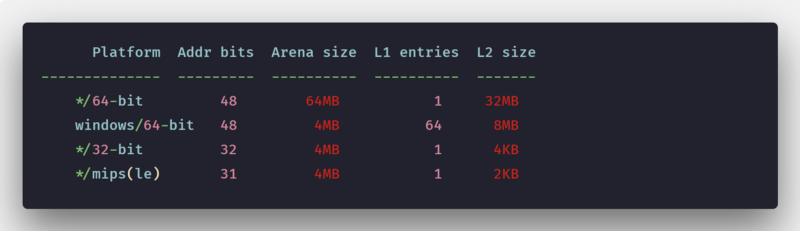

The current size of the arena in various systems

As a result, the memory required for the current needs of the program is allocated in small portions. This process begins with a single 64 MB arena.

Those numerical indicators, which we are talking about here, should not be taken for some absolute and constant values. They can change. Previously, for example, Go reserved a continuous virtual space in advance, on 64-bit systems the size of the arena was 512 GB (is it interesting to think about what will happen if the real need for memory is so large that the corresponding request is rejected by mmap?).

In fact, a bunch of we call a set of arenas. In Go, arenas are perceived as fragments of memory, divided into blocks of 8192 bytes (8 Kb).

One 64 MB arena

In Go, there are a couple more varieties of blocks - span and bitmap. Memory for them is allocated outside the heap, they store arena metadata. They are mainly used in garbage collection.

Here is a general scheme of the memory allocation mechanisms in Go.

General scheme of memory allocation mechanisms in Go

In general, it can be noted that in this material we described the Go memory subsystem in very general terms. The main idea of the memory subsystem in Go is memory allocation using various structures and caches of different levels. This takes into account the size of the objects for which the memory is allocated.

Representation of a single block of continuous memory addresses received from the operating system in the form of a multi-level structure, increases the efficiency of the memory allocation mechanism due to the fact that this approach avoids locks. The allocation of resources, taking into account the size of objects that need to be stored in memory, allows to reduce fragmentation, and, after the release of memory, allows you to speed up the collection of garbage.

Dear readers! Have you encountered problems caused by memory problems in programs written in Go?

The author of the material, the translation of which we publish, decided to get to the essence of the memory allocation tools in Go and tell about it.

Physical and virtual memory

All means for memory allocation have to work with the address space of virtual memory, which is controlled by the operating system. Let's take a look at how memory works, starting from the lowest level - from memory cells.

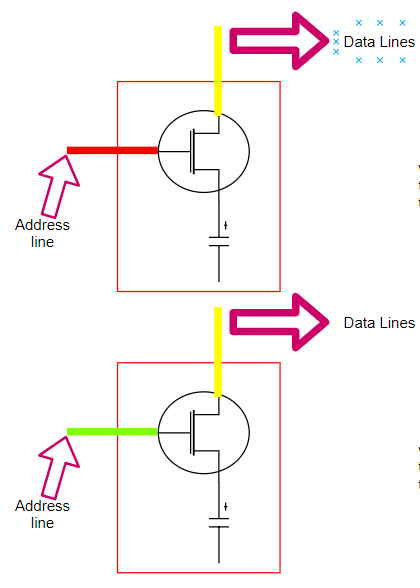

Here's how you can imagine a cell of RAM.

')

Memory cell diagram

If, very simply, to imagine a cell of RAM and what surrounds it, then we will have the following:

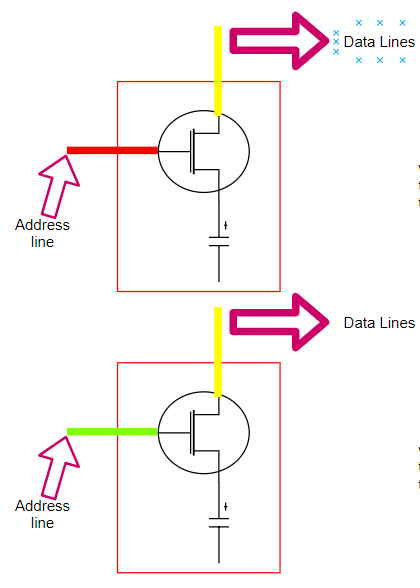

- The address line (the transistor plays the role of a switch) is what gives access to the capacitor (data lines).

- When a signal appears in the address line (red line), the data line allows data to be written to the memory cell, that is, charging the capacitor, which makes it possible to store in it a logical value corresponding to 1.

- When there is no signal in the address line (green line), the capacitor is isolated and its charge does not change. To write to cell 0, you must select its address and feed the logical 0 data line, that is, connect the data line to the minus, thereby discharging the capacitor.

- When the processor needs to read a value from memory, the signal is sent along the address line (the switch closes). If the capacitor is charged, the signal goes through the data line (read 1), otherwise the signal does not go through the data line (read 0).

The scheme of interaction of physical memory and processor

The data bus is responsible for transporting data between the processor and the physical memory.

Now let's talk about the address line and addressable bytes.

Address bus lines between the processor and physical memory

- Each byte in memory is assigned a unique numeric identifier (address). It should be noted that the number of physical bytes in memory is not equal to the number of address lines.

- Each address line can specify a 1-bit value, so it points to one bit in the address of a certain byte.

- Our circuit has 32 address lines. As a result, each addressable byte uses a 32-bit number as an address. [00000000000000000000000000000000] - the lowest memory address. [11111111111111111111111111111111] - the highest memory address.

- Since each byte has a 32-bit address, our address space consists of 2 32 addressable bytes (4 GB).

As a result, it turns out that the number of addressable bytes depends on the total number of address lines. For example, if you have 64 address lines (x86–64 processors), you can address 2 64 bytes (16 exabytes) of memory, but most architectures that use 64-bit pointers actually use 48-bit address lines (AMD64) and 42-bit address lines (Intel), which, theoretically, allows computers to be equipped with 256 terabytes of physical memory (Linux allows, on x86–64 architecture, when using address pages of 4 levels, to allocate processes up to 128 TB of address space, Windows allows 192 TB).

Since the size of the physical RAM is limited, each process runs in its own sandbox - in the so-called “virtual address space”, called virtual memory.

The byte addresses in the virtual address space do not correspond to the addresses that the processor uses to access physical memory. As a result, you need a system that allows you to convert virtual addresses into physical ones. Take a look at how virtual memory addresses look like.

Representation of the virtual address space

As a result, when the processor executes an instruction that refers to a memory address, the first step is to convert the logical address to a linear address. This conversion is performed by the memory management unit.

Simplified representation of the relationship of virtual and physical memory

Since logical addresses are too large to make it convenient to work with them separately (depending on various factors), the memory is organized into structures called pages. In this case, the virtual address space is divided into small areas, pages that are 4 KB in size in most OS, although this size can usually be changed. This is the smallest unit of memory management in virtual memory. Virtual memory does not store anything; it simply sets the correspondence between the address space of the program and the physical memory.

Processes see only virtual memory addresses. What happens if the program needs more dynamic memory (such memory is also called heap memory, or “heap”)? Here is an example of a simple assembler code in which additional dynamically allocated memory is requested from the system:

_start: mov $12, %rax # brk mov $0, %rdi # 0 - , syscall b0: mov %rax, %rsi # rsi , mov %rax, %rdi # ... add $4, %rdi # .. 4 , mov $12, %rax # brk syscall Here's how it can be represented as a diagram.

Increase in dynamically allocated memory

The program requests additional memory using the brk system call (sbrk / mmap and so on). The kernel updates virtual memory information, but new pages are not yet represented in physical memory, and here there is a difference between virtual and physical memory.

Memory allocator

After we, in general terms, discussed working with virtual address space, talked about how to perform the request for additional dynamic memory (memory in the heap), it will be easier for us to talk about the means for memory allocation.

If there is enough memory in the heap to satisfy our code requests, then the memory allocator can execute these requests without referring to the kernel. Otherwise, it has to increase the heap size using a system call (using brk, for example), while requesting a large block of memory. In the case of malloc, “large” means the size described by the

MMAP_THRESHOLD parameter, which, by default, is 128 KB.However, a memory allocator has more responsibilities than a simple memory allocation. One of his most important responsibilities is to reduce internal and external memory fragmentation, and to allocate memory blocks as quickly as possible. Suppose that our program sequentially executes requests for allocating continuous memory blocks using a function of the

malloc(size) , after which this memory is freed using the function of the free(pointer) .

Demonstration of External Fragmentation

In the previous scheme, in step p4, we do not have enough consecutive blocks of memory to fulfill the request for the allocation of six such blocks, although the total amount of free memory allows this. This situation leads to memory fragmentation.

How to reduce memory fragmentation? The answer to this question depends on the specific memory allocation algorithm, on which basic library is used for working with memory.

We will now look at the TCMalloc memory allocator, on which the Go memory allocation mechanisms are based.

TCMalloc

At the heart of TCMalloc is the idea of sharing memory into several levels for the sake of reducing memory fragmentation. Inside TCMalloc, memory management is divided into two parts: working with the memory of threads and working with a heap.

Memory threads

Each page of memory is divided into a sequence of fragments of certain sizes, selected in accordance with the size classes. This reduces fragmentation. As a result, each stream has a cache for small objects, which makes it very efficient to allocate memory for objects whose size is less than or equal to 32 KB.

Thread cache

▍ Heap

A TCMalloc managed heap is a collection of pages in which a set of consecutive pages can be represented as a range of pages (span). When you need to allocate memory for an object whose size exceeds 32 KB, a heap is used to allocate memory.

Heap and work with pages

When there is not enough space to accommodate small objects in memory, they turn to the heap for memory. If there is not enough free memory on the heap, additional memory is requested from the operating system.

As a result, the presented model of working with memory supports a user-space memory pool, its use significantly improves the efficiency of memory allocation and freeing.

It should be noted that the Go memory allocator was originally based on TCMalloc, but it differs slightly from it.

Go Memory Allocator

We know that the Go runtime schedules gorutin execution on logical processors. Similarly, the version of TCMalloc used in Go divides memory pages into blocks whose dimensions correspond to certain size classes, which exist 67.

If you are not familiar with the Go planner , you can read about it here .

Go Classes

Since the minimum page size in Go is 8192 bytes (8 KB), if such a page is divided into blocks of 1 KB in size, we will get 8 such blocks.

An 8 KB page is divided into blocks corresponding to a 1 KB size class.

Similar page sequences in Go are managed using a structure called mspan.

M mspan structure

The mspan structure is a doubly linked list, an object that contains the starting address of the page, information about the page size and the number of pages included in it.

Mspan structure

M mcache structure

Like TCMalloc, Go provides each logical processor with a local cache for threads, known as mcache. As a result, if a gorutin needs memory, she can get it directly from mcache. To do this, you do not need to perform locks, since at any time only one gorutin is executed on one logical processor.

The mcache structure contains, in the form of a cache, mspan structures of various size classes.

The interaction between the logical processor, mcache and mspan in Go

Since each logical processor has its own mcache, there is no need for locks when allocating memory from mcache.

Each size class can be represented by one of the following objects:

- The scan object is the object that contains the pointer.

- The noscan object is an object with no pointer.

One of the strengths of this approach is that when garbage collection is performed, noscan objects need not be bypassed, since they do not contain objects for which memory is allocated.

What gets into mcache? Objects that do not exceed 32 KB in size fall directly into mcache using the appropriate size class mspan.

What happens if there is no free cell in mcache? Then get a new mspan of the desired size class from the mspan object list, which is called mcentral.

▍ mcentral structure

The mcentral structure collects all page ranges of a particular size class. Each mcentral object contains two lists of mspan objects.

- A list of mspan objects that do not have free objects, or those mspan that are in mcache.

- List of mspan objects that have free objects.

Mcentral structure

Each mcentral structure exists within the mheap structure.

M mheap structure

The mheap structure is represented by an object that handles the heap in Go. There is only one such global object that owns the virtual address space.

Mheap structure

As can be seen from the above diagram, the mheap structure contains an array of mcentral structures. This array contains mcentral structures for all size classes.

central [numSpanClasses]struct { mcentral mcentral pad [sys.CacheLineSize unsafe.Sizeof(mcentral{})%sys.CacheLineSize]byte } Since we have a mcentral structure for each size class, when mcache requests the mspan structure from mcentral, blocking is applied at the mcentral individual level, as a result, requests from other mcache that request mspan structures of other sizes can be served simultaneously.

The alignment (pad) allows you to ensure that the mcentral structures are separated from each other by the number of bytes corresponding to the

CacheLineSize value. As a result, each mcentral.lock has its own cache line, which avoids the problems associated with false memory sharing.What happens if the mcentral list is empty? Then mcentral receives a sequence of pages from mheap to allocate fragments of memory of the required size class.

free[_MaxMHeapList]mSpanListis a spanList array. The mspan structure in each spanList consists of 1 ~ 127 (_MaxMHeapList - 1) pages. For example, free [3] is a linked list of mspan structures containing 3 pages. The word "free" in this case indicates that we are talking about an empty list, in which the memory is not allocated. A list may be, as opposed to an empty list, in which memory is allocated (busy).freelarge mSpanListis a list of free mspan structures. The number of pages per element (i.e., mspan) is more than 127. To support such a list, the mtreap data structure is used. The list of busy mspan structures is called busylarge.

Objects that are larger than 32 KB are considered large objects, and memory for them is allocated directly from mheap. Requests for the allocation of memory for such objects are performed using a block, as a result, at a given point in time, a similar request from only one logical processor can be processed.

The process of allocating memory for objects

- If the size of an object exceeds 32 KB, it is recognized as large, the memory for it is allocated directly from mheap.

- If the size of the object is less than 16 KB, the mcache mechanism is used, which is called tiny allocator.

- If the size of the object is in the range of 16-32 Kb, then it turns out which size class (sizeClass) should be used, then the appropriate block is allocated in mcache.

- If there are no blocks available in sizeClass corresponding to mcache, the call to mcentral is made.

- If mcentral has no free blocks, then mheap is accessed and a search is made for the most appropriate mspan. If the memory size required by the application is larger than can be allocated, the requested memory size will be processed so that the program can return as many pages as necessary by creating a new mspan structure.

- If the virtual memory of the application is still not enough, the operating system is called for a new set of pages (at least 1 MB of memory is requested).

In fact, at the operating system level, Go requests the allocation of even larger chunks of memory, called arenas. The one-time allocation of large pieces of memory allows you to find a compromise between the amount of memory allocated to the application and costly in terms of performance appeals to the operating system.

The memory requested in the heap is allocated from the arena. Consider this mechanism.

Virtual Memory Go

Take a look at the memory usage of a simple program written in Go:

func main() { for {} }

Information about the process of the program

The virtual address space of even such a simple program is approximately 100 MB, while the RSS rate is only 696 KB. To begin, let's try to find out the reason for this discrepancy.

Map and smap data

Here you can see the memory area, the size of which is approximately equal to 2 MB, 64 MB, 32 MB. What is this memory?

▍ Arenas

It turns out that virtual memory in Go consists of a set of arenas. The original memory size intended for the heap corresponds to one arena, that is, 64 MB (this is relevant for Go 1.11.5).

The current size of the arena in various systems

As a result, the memory required for the current needs of the program is allocated in small portions. This process begins with a single 64 MB arena.

Those numerical indicators, which we are talking about here, should not be taken for some absolute and constant values. They can change. Previously, for example, Go reserved a continuous virtual space in advance, on 64-bit systems the size of the arena was 512 GB (is it interesting to think about what will happen if the real need for memory is so large that the corresponding request is rejected by mmap?).

In fact, a bunch of we call a set of arenas. In Go, arenas are perceived as fragments of memory, divided into blocks of 8192 bytes (8 Kb).

One 64 MB arena

In Go, there are a couple more varieties of blocks - span and bitmap. Memory for them is allocated outside the heap, they store arena metadata. They are mainly used in garbage collection.

Here is a general scheme of the memory allocation mechanisms in Go.

General scheme of memory allocation mechanisms in Go

Results

In general, it can be noted that in this material we described the Go memory subsystem in very general terms. The main idea of the memory subsystem in Go is memory allocation using various structures and caches of different levels. This takes into account the size of the objects for which the memory is allocated.

Representation of a single block of continuous memory addresses received from the operating system in the form of a multi-level structure, increases the efficiency of the memory allocation mechanism due to the fact that this approach avoids locks. The allocation of resources, taking into account the size of objects that need to be stored in memory, allows to reduce fragmentation, and, after the release of memory, allows you to speed up the collection of garbage.

Dear readers! Have you encountered problems caused by memory problems in programs written in Go?

Source: https://habr.com/ru/post/442648/

All Articles