CI/CD using Jenkins on Kubernetes

Good day.

Habr already has several articles about Jenkins, CI/CD and Kubernetes. In this one, I want to focus on the simplest possible configuration for building a CI/CD pipeline rather than on the capabilities of these technologies.

I assume the reader has a basic understanding of Docker, and I will not cover installing and configuring Kubernetes. All examples use minikube, but they can also be applied to EKS, GKE or similar services without significant changes.

Environments

I suggest using the following environments:

- test - for manual deployment and testing of branches

- staging - the environment to which all changes merged into master are applied automatically

- production - the environment used by real users; changes reach it only after they have been verified on staging

The environments are organized as Kubernetes namespaces within a single cluster. This approach is as simple and quick to start with as possible, but it has a drawback: namespaces are not fully isolated from each other in Kubernetes.
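All three environments share the same layout, so the namespace and ConfigMap manifests can be generated rather than copied by hand. What follows is a minimal sketch that assumes bash and kubectl. The `env_manifest` function name is hypothetical. The manifest layout is the same as the production example in this section.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the Namespace and environment.properties ConfigMap
# manifests for one environment (test, staging or production).
env_manifest() {
  local env="$1"
  cat <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: ${env}
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: environment.properties
  namespace: ${env}
data:
  environment.properties: |
    env=${env}
EOF
}
```

With this helper, creating all three environments is a loop such as `for env in test staging production; do env_manifest "$env" | kubectl apply -f -; done`.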

In this example, each namespace gets the same set of ConfigMaps holding that environment's configuration. For production:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: production
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: environment.properties
  namespace: production
data:
  environment.properties: |
    env=production
```

Helm

Helm is a tool that helps manage resources installed on Kubernetes.

Installation instructions can be found in the Helm documentation.

To get started, initialize Tiller, the in-cluster component of Helm 2, so that helm can work with the cluster:

```bash
helm init
```

Jenkins

I use Jenkins because it is a fairly simple, flexible and popular platform for building projects. It will be installed in a separate namespace to isolate it from the other environments. Since I plan to use Helm anyway, the Jenkins installation can be simplified with the existing open-source chart:

```bash
helm install --name jenkins --namespace jenkins -f jenkins/demo-values.yaml stable/jenkins
```

demo-values.yaml contains the Jenkins version, the set of preinstalled plugins, the domain name and other settings:

```yaml
Master:
  Name: jenkins-master
  Image: "jenkins/jenkins"
  ImageTag: "2.163-slim"
  OverwriteConfig: true
  AdminUser: admin
  AdminPassword: admin
  InstallPlugins:
    - kubernetes:1.14.3
    - workflow-aggregator:2.6
    - workflow-job:2.31
    - credentials-binding:1.17
    - git:3.9.3
    - greenballs:1.15
    - google-login:1.4
    - role-strategy:2.9.0
    - locale:1.4
  ServicePort: 8080
  ServiceType: NodePort
  HostName: jenkins.192.168.99.100.nip.io
  Ingress:
    Path: /

Agent:
  Enabled: true
  Image: "jenkins/jnlp-slave"
  ImageTag: "3.27-1"
  # auto-adjust agent resource limits
  resources:
    requests:
      cpu: null
      memory: null
    limits:
      cpu: null
      memory: null

# allow Jenkins to create agent pods
rbac:
  install: true
```

This configuration uses admin/admin as the username and password, which can be changed later. One option is single sign-on with Google. It requires the google-login plugin, and its settings are under Jenkins > Manage Jenkins > Configure Global Security > Access Control > Security Realm > Login with Google.

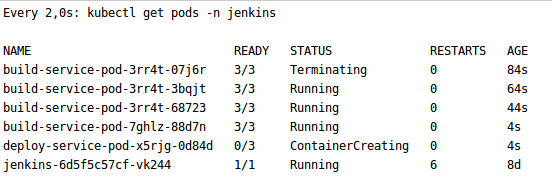

With this configuration, Jenkins immediately creates a one-off agent (slave) for each build. As a result, the team no longer has to wait for a free agent, and the business can save on the number of servers it needs.

A PersistentVolume is also configured out of the box, so pipelines are preserved when Jenkins is restarted or upgraded.

For the automatic deployment scripts to work, Jenkins needs the cluster-admin role so that it can list and modify resources in Kubernetes:

```bash
kubectl create clusterrolebinding jenkins --clusterrole cluster-admin --serviceaccount=jenkins:default
```

Later, when new plugin versions come out or the configuration changes, Jenkins can be updated with helm:

```bash
helm upgrade jenkins stable/jenkins -f jenkins/demo-values.yaml
```

This can also be done through the Jenkins UI itself, but with helm you can roll back to a previous revision:

```bash
helm history jenkins
helm rollback jenkins ${revision}
```

Building the application

As an example, I will build and deploy a simple Spring Boot application. As with Jenkins, I will use Helm to deploy it.

The build runs in the following sequence:

- checkout

- compilation

- unit test

- integration test

- artifact build

- publishing the artifact to the Docker registry

- deploying the artifact to staging (master branch only)

For this, I use a Jenkinsfile. In my opinion, this is a very flexible (though, unfortunately, not the simplest) way to configure a project build. One of its advantages is that the build configuration is kept in the same repository as the project itself.

Checkout

With a Bitbucket team or a GitHub organization, you can configure Jenkins to periodically scan the entire account for repositories that contain a Jenkinsfile and automatically create builds for them. Jenkins will build both master and the other branches, and pull requests are shown on a separate tab. A simpler option is to add a single git repository, no matter where it is hosted, and that is what I do in this example. Go to Jenkins > New Item > Multibranch Pipeline, enter a name for the build and link the git repository.

Compilation

Since Jenkins creates a new pod for each build, Maven and similar build tools download all dependencies again every time. To avoid this, you can allocate a PersistentVolume for .m2 or a similar cache and mount it into the pod that builds the project:

```yaml
apiVersion: "v1"
kind: "PersistentVolumeClaim"
metadata:
  name: "repository"
  namespace: "jenkins"
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
```

In my case, this sped up the pipeline from about 4 minutes to about 1.

Versioning

For CI/CD to work correctly, each build needs a unique version.

Semantic versioning would be a very good option. It lets you track backward-compatible and incompatible changes, but it is harder to automate.

In this example, I generate the version from the commit hash and the commit date, plus the branch name if it is not master. Examples are 56e0fbdc-201802231623 and b3d3c143-201802231548-PR-18.
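The scheme above can be written as a small bash function. This is a sketch, not the author's script, and `build_version` and `current_version` are hypothetical names. It follows the `<hash>-<timestamp>[-<branch>]` format of the examples above; the Jenkinsfile later in this article uses `<hash>.<yyyyMMdd-HHmm>` instead. Like that Jenkinsfile, it keeps at most 20 characters of the branch name and replaces `/` with `_`, because `/` is not allowed in a Docker tag.

```bash
# Hypothetical helper: build_version HASH TIMESTAMP BRANCH
# prints HASH-TIMESTAMP for master and HASH-TIMESTAMP-BRANCH otherwise.
build_version() {
  local hash="$1" timestamp="$2" branch="$3"
  # As in the Jenkinsfile: keep at most 20 characters of the branch name
  # and replace "/" (not allowed in a Docker tag) with "_".
  branch="${branch:0:20}"
  branch="${branch//\//_}"
  local version="${hash}-${timestamp}"
  if [ "$branch" != "master" ]; then
    version="${version}-${branch}"
  fi
  printf '%s\n' "$version"
}

# Hypothetical helper: compute the version of the current git checkout.
# In Jenkins, HEAD is usually detached, so pass the branch (e.g. GIT_BRANCH)
# as the first argument instead of relying on git rev-parse.
current_version() {
  local branch="${1:-$(git rev-parse --abbrev-ref HEAD)}"
  build_version "$(git log -1 --format=%h)" \
                "$(git log -1 --format=%ad --date=format:%Y%m%d%H%M)" \
                "$branch"
}
```

For example, `build_version b3d3c143 201802231548 PR-18` prints `b3d3c143-201802231548-PR-18`.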

The advantages of this approach:

- ease of automation

- the version makes it easy to find the source code and the time it was committed

- it is easy to tell a release candidate (built from master) from an experimental version (built from a branch)

The disadvantages:

- such a version is harder to use in conversation

- it does not show whether there were incompatible changes

Since a Docker image can have several tags at once, the approaches can be combined. All builds use the generated versions, and those that go to production are additionally (manually) tagged with a semantic version. However, this makes the implementation even more complex and makes it ambiguous which version the application should report.
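Adding a semantic-version tag to an image that already has a generated version might look like the following sketch. It assumes bash and the Docker CLI. `is_semver` and `tag_release` are hypothetical names, and the check accepts only plain `MAJOR.MINOR.PATCH` versions.

```bash
# Hypothetical check: accept only plain MAJOR.MINOR.PATCH versions.
is_semver() {
  local re='^[0-9]+\.[0-9]+\.[0-9]+$'
  [[ "$1" =~ $re ]]
}

# Hypothetical helper: tag_release IMAGE GENERATED_VERSION SEMVER
# e.g. tag_release "$REGISTRY/demo/app" 56e0fbdc-201802231623 1.4.0
tag_release() {
  local image="$1" generated="$2" semver="$3"
  if ! is_semver "$semver"; then
    echo "not a MAJOR.MINOR.PATCH version: $semver" >&2
    return 1
  fi
  # The same image now carries both the generated tag and the semantic one.
  docker tag "${image}:${generated}" "${image}:${semver}"
  docker push "${image}:${semver}"
}
```

Tagging only adds a second name to the existing image, so nothing is rebuilt and the generated version remains traceable to its commit.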

Artifacts

The build produces:

- a Docker image with the application, which is pushed to and pulled from a Docker registry. The example uses minikube's built-in registry, which can be replaced with Docker Hub or with a private registry from Amazon (ECR) or Google (remember to provide credentials for them with the withCredentials step).

- Helm charts describing the application deployment (Deployment, Service, etc.) in the helm directory. Ideally they should be stored in a separate artifact repository, but for simplicity they can be used by checking out the right commit from git.
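Because the version starts with the commit hash, the charts that belong to a given version can be found by checking out that commit. Below is a minimal sketch in bash with hypothetical function names. The hash is cut at the first `-` or `.`, so the helper handles both versions like 56e0fbdc-201802231623 and the `<hash>.<date>` form that the Jenkinsfile produces.

```bash
# Hypothetical helper: extract the commit hash from a generated version.
commit_of_version() {
  local version="$1"
  # Drop everything from the first "-" or "." onwards.
  printf '%s\n' "${version%%[-.]*}"
}

# Hypothetical helper: checkout_charts REPO_DIR VERSION
# Checks out (detached HEAD) the commit VERSION was built from, including its
# helm directory. The commit must already be present in the clone.
checkout_charts() {
  local repo_dir="$1" version="$2"
  git -C "$repo_dir" checkout --quiet "$(commit_of_version "$version")"
}
```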

Jenkinsfile

As a result, the application is built with the following Jenkinsfile:

```groovy
def branch
def revision
def registryIp

pipeline {
    agent {
        kubernetes {
            label 'build-service-pod'
            defaultContainer 'jnlp'
            // Build pod: a Maven container with the shared .m2 cache and a
            // Docker container that talks to the node's Docker daemon via its socket
            yaml """
apiVersion: v1
kind: Pod
metadata:
  labels:
    job: build-service
spec:
  containers:
  - name: maven
    image: maven:3.6.0-jdk-11-slim
    command: ["cat"]
    tty: true
    volumeMounts:
    - name: repository
      mountPath: /root/.m2/repository
  - name: docker
    image: docker:18.09.2
    command: ["cat"]
    tty: true
    volumeMounts:
    - name: docker-sock
      mountPath: /var/run/docker.sock
  volumes:
  - name: repository
    persistentVolumeClaim:
      claimName: repository
  - name: docker-sock
    hostPath:
      path: /var/run/docker.sock
"""
        }
    }
    options {
        // the checkout stage does it explicitly to get the repository info
        skipDefaultCheckout true
    }
    stages {
        stage ('checkout') {
            steps {
                script {
                    def repo = checkout scm
                    // <short commit hash>.<commit date as yyyyMMdd-HHmm>
                    revision = sh(script: 'git log -1 --format=\'%h.%ad\' --date=format:%Y%m%d-%H%M | cat', returnStdout: true).trim()
                    // at most 20 characters; "/" is not allowed in a Docker tag
                    branch = repo.GIT_BRANCH.take(20).replaceAll('/', '_')
                    if (branch != 'master') {
                        revision += "-${branch}"
                    }
                    sh "echo 'Building revision: ${revision}'"
                }
            }
        }
        stage ('compile') {
            steps {
                container('maven') {
                    sh 'mvn clean compile test-compile'
                }
            }
        }
        stage ('unit test') {
            steps {
                container('maven') {
                    sh 'mvn test'
                }
            }
        }
        stage ('integration test') {
            steps {
                container ('maven') {
                    sh 'mvn verify'
                }
            }
        }
        stage ('build artifact') {
            steps {
                container('maven') {
                    // tests already ran in the previous stages
                    sh "mvn package -Dmaven.test.skip -Drevision=${revision}"
                }
                container('docker') {
                    script {
                        // IP of the minikube registry service in kube-system
                        registryIp = sh(script: 'getent hosts registry.kube-system | awk \'{ print $1 ; exit }\'', returnStdout: true).trim()
                        sh "docker build . -t ${registryIp}/demo/app:${revision} --build-arg REVISION=${revision}"
                    }
                }
            }
        }
        stage ('publish artifact') {
            when {
                expression { branch == 'master' }
            }
            steps {
                container('docker') {
                    sh "docker push ${registryIp}/demo/app:${revision}"
                }
            }
        }
    }
}
```

Additional Jenkins pipelines for application lifecycle management

Suppose the repositories are organized so that they:

- each contain a separate application packaged as a Docker image

- can be deployed using Helm charts located in the helm directory

- are versioned using the same approach and have a helm/setVersion.sh script that sets the revision in the Helm charts
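The article does not show helm/setVersion.sh itself. What follows is a hypothetical sketch of what such a script could do, not the author's script. It assumes the chart has an `appVersion:` line in Chart.yaml and an indented `tag:` key (for example under `image:`) in values.yaml; that layout and the `set_version` name are assumptions. Only `appVersion` is changed, because Helm requires the chart's `version` field to be a SemVer 2 version, which the generated versions are not.

```bash
# Hypothetical setVersion sketch: set_version CHART_DIR VERSION
# VERSION must not contain "/" or "&" (the generated versions do not).
set_version() {
  local chart_dir="$1" version="$2"
  # appVersion is free-form, unlike the SemVer "version" field of Chart.yaml.
  sed -i.bak "s/^appVersion:.*/appVersion: \"${version}\"/" "${chart_dir}/Chart.yaml"
  # Rewrite every indented "tag:" line (e.g. image.tag) to the new version.
  sed -i.bak "s/^\([[:space:]][[:space:]]*tag:\).*/\1 \"${version}\"/" "${chart_dir}/values.yaml"
  # -i.bak works with both GNU and BSD sed; remove the backup copies afterwards.
  rm -f "${chart_dir}/Chart.yaml.bak" "${chart_dir}/values.yaml.bak"
}
```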

Under these conditions, a few Jenkinsfile pipelines can manage the whole application lifecycle:

- deployment to any environment

- removal from any environment

- promotion from staging to production

- rollback to a previous version
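The four operations map onto Helm commands. Below is a minimal bash sketch. It assumes Helm 2 (as used throughout this article), a chart in ./helm with an `image.tag` value, and one release per application and environment named `<app>-<env>`. All function names and the naming scheme are hypothetical. Setting HELM=echo prints the commands instead of running them.

```bash
# Helm binary; set HELM=echo for a dry run that only prints the commands.
HELM="${HELM:-helm}"

# Helm 2 release names are unique per cluster, so include the environment.
release_name() { printf '%s-%s\n' "$1" "$2"; }

# deploy_app APP VERSION ENV: install or upgrade the release in namespace ENV.
deploy_app() {
  local app="$1" version="$2" env="$3"
  "$HELM" upgrade --install "$(release_name "$app" "$env")" ./helm \
    --namespace "$env" --set image.tag="$version"
}

# remove_app APP ENV: delete the release and free its name (Helm 2 syntax).
remove_app() {
  "$HELM" delete --purge "$(release_name "$1" "$2")"
}

# promote_app APP VERSION: deploy a version already verified on staging.
promote_app() {
  deploy_app "$1" "$2" production
}

# rollback_app APP ENV REVISION: return to an earlier revision (see helm history).
rollback_app() {
  "$HELM" rollback "$(release_name "$1" "$2")" "$3"
}
```

A parameterized Deploy job, like the one triggered from the Jenkinsfile, could pass its VERSION and ENV parameters straight to deploy_app. Because `upgrade --install` is used, the same call works for the first deployment and for every later one.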

In each project's Jenkinsfile, you can add a call to the Deploy pipeline. It then runs after every successful build of the master branch, or when a branch is explicitly deployed to the test environment:

```groovy
...
stage ('deploy to env') {
    when {
        expression { branch == 'master' || params.DEPLOY_BRANCH_TO_TST }
    }
    steps {
        build job: './../Deploy', parameters: [
            [$class: 'StringParameterValue', name: 'GIT_REPO', value: 'habr-demo-app'],
            [$class: 'StringParameterValue', name: 'VERSION', value: revision],
            [$class: 'StringParameterValue', name: 'ENV', value: branch == 'master' ? 'staging' : 'test']
        ], wait: false
    }
}
...
```

The complete Jenkinsfile with all the steps can be found here.
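Together, the `when` condition and the `ENV` parameter of this stage decide where a build goes. Master always goes to staging, and other branches go to test only when DEPLOY_BRANCH_TO_TST is set. Here is the same decision table as a small bash sketch. The `target_env` function is hypothetical, and it prints nothing when the build should not be deployed.

```bash
# Hypothetical helper: target_env BRANCH DEPLOY_BRANCH_TO_TST
# master always goes to staging; other branches go to test only on request.
target_env() {
  local branch="$1" deploy_branch_to_tst="${2:-false}"
  if [ "$branch" = "master" ]; then
    echo staging
  elif [ "$deploy_branch_to_tst" = "true" ]; then
    echo test
  fi
}
```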

This way, you can set up continuous deployment to a test or production environment. Through Jenkins itself or its email/Slack/etc. notifications, you also get an audit trail of which application and version was deployed, by whom, when and where.
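As an example of such an audit trail, a deploy job could post one line per deployment to a Slack incoming webhook. This is a minimal sketch that assumes bash and curl. SLACK_WEBHOOK_URL and the function names are hypothetical. The message is not JSON-escaped, so it should contain only plain characters. The user who started the job is simply passed in as a parameter here.

```bash
# Hypothetical helper: audit_message APP VERSION ENV USER
# prints one human-readable audit line with a UTC timestamp.
audit_message() {
  local app="$1" version="$2" env="$3" user="$4"
  printf '%s %s deployed to %s by %s at %s\n' \
    "$app" "$version" "$env" "$user" "$(date -u +%Y-%m-%dT%H:%M:%SZ)"
}

# Hypothetical helper: notify_slack TEXT posts TEXT to a Slack incoming webhook.
notify_slack() {
  local text="$1"
  curl --silent --show-error --fail -X POST \
    -H 'Content-Type: application/json' \
    --data "{\"text\": \"${text}\"}" \
    "$SLACK_WEBHOOK_URL"
}
```

A deploy job could then call, for example, `notify_slack "$(audit_message habr-demo-app "$VERSION" "$ENV" "$USER")"` after a successful helm run.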

Conclusion

With a Jenkinsfile and Helm, you can easily build CI/CD for your application. This approach is probably most relevant for small teams that have recently started using Kubernetes and cannot (for whatever reason) use services that provide this functionality out of the box.

Example configurations for the environments, Jenkins and the application lifecycle pipelines can be found here, and an example application with its Jenkinsfile here.


Source: https://habr.com/ru/post/442614/
