Long live overclockers: how liquid cooling has become dominant in data centers

“With high-performance computers, there is no other way”

In the movie “Iron Man 2” there is a moment when Tony Stark watches an old film of his late father, who says: “I am limited by the technology of my time, but one day you will figure this out. And when you do, you will change the world.” It is fiction, but the idea it expresses is very real. Engineers' ideas are often far ahead of their time. Star Trek always had its gadgets, but the rest of the world had to work for decades to create tablets and e-readers.

The concept of liquid cooling fits this category perfectly. The idea has existed since the 1960s, but it remained radical compared with the much cheaper and safer option of air cooling. It took more than 40 years before liquid cooling began to gain ground in the 2000s, and even then it was mainly the preserve of PC enthusiasts trying to overclock their CPUs far beyond the limits recommended by Intel and AMD.

Today, liquid cooling systems are gaining popularity. A system for a PC can be bought for less than $100, while a cottage industry of vendors aimed at industrial applications and data centers (such as CoolIT, Asetek, Green Revolution Cooling, and Ebullient) offers liquid cooling (LC) for server rooms. LC is mainly used in supercomputers, high-performance computing, and other situations that demand enormous computing power with processors running at almost 100% load, but it is becoming more common elsewhere too.

There are two popular types of LC: direct-to-chip cooling and immersion. With direct cooling, a heat sink is attached to the CPU like a standard cooler, but instead of a fan it is connected to two tubes. One brings in cold water, which cools the heat sink and absorbs the CPU's heat, and the other carries the hot water away. The water is then cooled and returned to the CPU in a closed loop that resembles blood circulation.

With immersion cooling, the equipment is submerged in a liquid that, obviously, must not conduct electricity. This approach most resembles the cooling pools of nuclear reactors. Immersion remains the more advanced option and requires more expensive heat-transfer fluids than direct-to-chip cooling, which can use ordinary water. In addition, there is always a risk of leaks. For now, therefore, direct-to-chip cooling is the more popular option.

Take Alphabet as one of the most prominent examples. When Google's parent company introduced its TPU 3.0 AI processors in May 2018, CEO Sundar Pichai said the chips were so powerful that "for the first time we had to install liquid cooling in data centers." That was the price Alphabet had to pay for an eightfold increase in performance.

Meanwhile, Skybox Datacenters recently announced plans to house a huge 40,000-server supercomputer from DownUnder GeoSolutions (DUG), designed for oil and gas exploration. The project will deliver 250 petaflops of computing power, more than any existing machine, and the servers are expected to be liquid-cooled, immersed in tanks filled with dielectric fluid.

In any case, “liquid cooling is the cooling of the future, and it always will be,” said Craig Pennington, vice president of design engineering at the data center operator Equinix. "It seems obvious that this is the right approach, but no one has adopted it."

How did LC go from an esoteric art on the fringes of computing to a nearly mainstream method in modern data centers? As with all technologies, it happened partly through evolution, trial and error, and a great deal of engineering. But today's data centers also owe thanks to the early overclockers, the unsung heroes of this method.

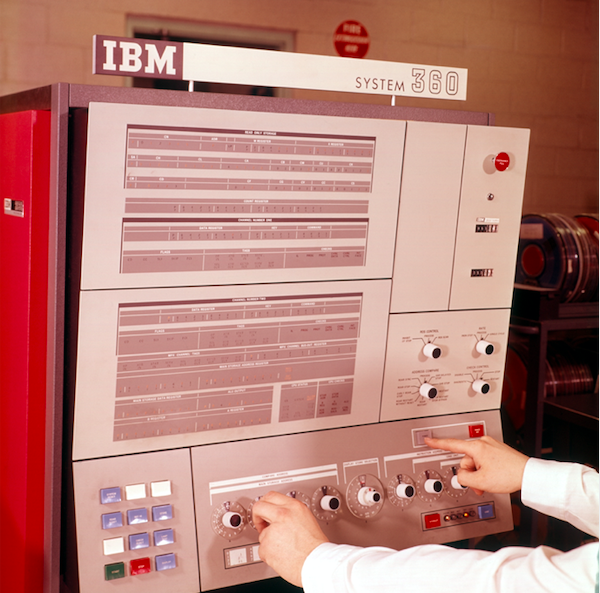

Control panel of the IBM System/360 data processing system

What do we mean by liquid cooling

Liquid cooling entered the popular consciousness in 1964, when IBM explored immersion cooling for the System/360 mainframe. It was one of the company's first mainframes; the 700 and 7000 series had existed for more than ten years, and System/360 “began the era of computer compatibility, for the first time allowing different machines from the product line to work together,” as IBM puts it. The concept was simple: chilled water would flow through a device that cooled it below room temperature, and the water would then be fed directly into the system. The scheme IBM used is now known as rear-door cooling, in which a radiator is mounted at the back of the mainframe. Fans drew hot air out of the mainframe, and that air was then cooled by water, much as a radiator cools a car engine.

Since then, engineers have refined this basic concept, and two dominant forms of LC have emerged: immersion and direct contact. Immersion is exactly what it sounds like: the electronics sit in a liquid bath, which, for obvious reasons, cannot be water. The fluid must not conduct electricity; that is, it must be a dielectric (companies such as 3M even develop fluids specifically for this purpose).

But immersion has many problems and shortcomings. A server sitting in liquid can be reached only from above, so its external ports have to be located there. Mounting 1U chassis in a rack would be impractical, so servers cannot be stacked one above another. The dielectric, usually mineral oil, is very expensive and difficult to clean up if it leaks. Special hard drives are needed, and retrofitting a data center requires a significant investment. Therefore, as with the supercomputer mentioned above, immersion is best done in a new data center rather than by converting an old one.

Direct contact, by contrast, means that a heat sink (or heat exchanger) sits on the chip like a normal heat sink. Instead of a fan, it uses two water pipes: one brings in cold water for cooling, and the other carries away the water heated by contact with the heat sink. This form of LC has become the most popular and has been adopted by manufacturers such as HP Enterprise, Dell EMC, and IBM, as well as by the enclosure manufacturers Chatsworth Systems and Schneider Electric.

Direct cooling uses water, but it is very sensitive to water quality. Unfiltered tap water cannot be used. Just look at your faucet or shower head: no one wants calcium buildup in their servers. At a minimum, direct cooling requires pure distilled water, and sometimes a mixture of it with antifreeze. Making such a coolant is a science in itself.

The Intel connection

How did we get from IBM's radiators to modern cooling systems? Again, thanks to overclockers. At the turn of the century, liquid cooling began to gain popularity among PC overclockers and enthusiasts who built their own computers and wanted to push them beyond their official speed limits. However, it was an esoteric art with no standard designs. Everyone did something different. Whoever put one together needed such MacGyver-level skills that assembling IKEA furniture looked trivial by comparison. Most of the cooling systems did not even fit inside the case.

In early 2004, the situation began to change because of an internal policy shift at Intel. An engineer from the design center in Hillsboro, Oregon (where most of the company's chips are designed, even though Intel is headquartered in Santa Clara, California) had been working on a special cooling project for several years. The project had cost the company $1 million and was aimed at creating a liquid cooler for Intel processors. Unfortunately, Intel was about to shut it down.

The engineer hoped for a different outcome. To save the project, he took the idea to Falcon Northwest, a Portland company that builds gaming PCs. “The reason for the shutdown was that the company thought liquid cooling encouraged people to overclock, and that was forbidden at the time,” said Kelt Reeves, president of Falcon Northwest. Intel's position had its own logic. At the time, unscrupulous resellers in Asia were passing off overclocked, poorly cooled PCs as more powerful models, and in the public eye this somehow became Intel's problem. So the company opposed overclocking.

However, the engineer from Oregon believed that if he could find customers and a market for such a cooler, Intel would eventually give in. (In addition, the resulting Intel product was much better in quality than anything on the market, Reeves told us.) After some internal persuasion and negotiations between the companies, Intel allowed Falcon to sell the cooling systems, in part because Intel had already produced thousands of them. The only catch was that Falcon could not mention Intel's involvement. Falcon agreed and soon became the first manufacturer to ship all-in-one LC systems for PCs.

Reeves noted that this pioneering LC solution was not particularly user-friendly. Falcon had to modify the case to fit the radiator and design a cold plate for the water. Over time, however, cooler manufacturers such as ThermalTake and Corsair studied what Intel had done and made steady improvements. Since then, several products and manufacturers have emerged, such as CoolIT and Asetek, that build LC specifically for data centers. Some of their technology, for example tubing that does not break, crack, or leak and carries a warranty of up to seven years, was eventually licensed to makers of consumer cooling systems, and this exchange of technology in both directions became the norm.

As this market grew in different directions, even Intel eventually changed its mind. It now advertises the overclocking capabilities of its K- and X-series processors and no longer even bothers to bundle stock coolers with its top gaming CPUs.

“LC is a proven technology; everyone on the consumer side is doing it,” Reeves said. “Intel has stopped shipping stock coolers with its most powerful CPUs because they need LC; it is already proven, and it has Intel's blessing. I don't think anyone will say that all-in-one solutions are not reliable enough for that.”

Immersion cooling in the data center: tanks filled with dielectric fluid, which flows through the pipes

Immersion liquid cooling at Skybox Datacenters. The heat exchangers are immersed along with the computing equipment, and the dielectric fluid never leaves the tank. A water circuit runs through the rooms to each heat exchanger.

Facts in favor of the practicality of liquid cooling

For a long time, traditional data centers were built with a raised floor with small openings through which the servers drew in cold air. This approach was known as CRAC, or computer room air conditioning. The problem is that blowing cold air through holes in the floor is no longer enough.

The main reason for the recent boom in liquid cooling is necessity. Today's processors run too hot, and servers are packed too closely together for air to cool them effectively, as even Google has noted. The heat capacity of water is 3,300 times greater than that of air, and a water cooling system can pump 300 liters of water per minute, compared with 20 cubic meters of air per minute.
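The comparison between water and air can be checked with a rough calculation. The sketch below is illustrative only: the density and specific-heat values are approximate textbook figures for room temperature (assumptions, not numbers from the article), and the function names are hypothetical. It computes the volumetric heat capacity of each coolant and the heat each flow rate quoted above can carry away per kelvin of coolant temperature rise.

```python
# Approximate textbook properties near room temperature (assumed values).
WATER_DENSITY = 1000.0   # kg/m^3
WATER_CP = 4186.0        # J/(kg*K)
AIR_DENSITY = 1.2        # kg/m^3
AIR_CP = 1005.0          # J/(kg*K)


def volumetric_heat_capacity(density, specific_heat):
    """Heat absorbed per cubic meter per kelvin, in J/(m^3*K)."""
    return density * specific_heat


def heat_removed_per_kelvin(density, specific_heat, flow_m3_per_min):
    """Watts carried away per kelvin of coolant temperature rise."""
    flow_m3_per_s = flow_m3_per_min / 60.0
    return volumetric_heat_capacity(density, specific_heat) * flow_m3_per_s


water_vhc = volumetric_heat_capacity(WATER_DENSITY, WATER_CP)
air_vhc = volumetric_heat_capacity(AIR_DENSITY, AIR_CP)
capacity_ratio = water_vhc / air_vhc

# Flow rates quoted in the text: 300 L/min of water, 20 m^3/min of air.
water_w_per_k = heat_removed_per_kelvin(WATER_DENSITY, WATER_CP, 0.300)
air_w_per_k = heat_removed_per_kelvin(AIR_DENSITY, AIR_CP, 20.0)

print(f"volumetric heat capacity ratio: {capacity_ratio:.0f}")
print(f"water: {water_w_per_k:.0f} W/K, air: {air_w_per_k:.0f} W/K")
```

With these assumed properties the ratio comes out near 3,500, the same order as the figure quoted above. The water loop carries roughly 21 kW per kelvin of temperature rise against about 0.4 kW for the air stream, despite moving far less volume.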

Simply put, water cools far more efficiently and in a much smaller space. So, after many years of trying to reduce power consumption, processor manufacturers can now afford to spend power freely and crank up the voltage for maximum performance, knowing that liquid cooling can handle it.

“We are being asked to cool chips whose power consumption will soon exceed 500 W,” said Jeff Lyon, director of CoolIT. “Some processors that have not yet reached the market will consume 300 watts each. All of this is being driven by AI and machine learning. Conventional cooling simply cannot keep up.”

Lyon said CoolIT is considering extending its cooling systems to chipsets, power-regulation circuitry, network chips, and memory. “There would be nothing radical about cooling memory,” he added. “There are RAM options with advanced packaging that consume 18 watts per DIMM. A typical DIMM consumes 4-6 watts. Among memory-heavy systems we see servers with 16 or even 24 DIMMs installed, and that is a lot of heat.”

One after another, manufacturers are facing such requests. Equinix has watched the average density per rack grow from 5 kW to 7-8 kW, and now to 15-16 kW, with some equipment already reaching 40 kW. “So the total amount of air that needs to be moved becomes too large. It won't happen overnight, but in the next couple of years there will be a fundamental acceptance of liquid cooling,” said Pennington of Equinix.
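To see why rising density strains air cooling, consider how much air has to move through a rack to carry its heat away. This is a minimal sketch, not a sizing method: the air properties are approximate room-temperature values, the 12 K temperature rise between inlet and outlet is a hypothetical figure chosen for illustration, and `airflow_m3_per_min` is an illustrative name.

```python
AIR_DENSITY = 1.2   # kg/m^3, approximate value near room temperature
AIR_CP = 1005.0     # J/(kg*K), approximate value


def airflow_m3_per_min(rack_power_w, air_temp_rise_k):
    """Airflow needed to carry rack_power_w away at a given air temperature rise."""
    if air_temp_rise_k <= 0:
        raise ValueError("air_temp_rise_k must be positive")
    flow_m3_per_s = rack_power_w / (AIR_DENSITY * AIR_CP * air_temp_rise_k)
    return flow_m3_per_s * 60.0


ASSUMED_AIR_TEMP_RISE_K = 12.0  # hypothetical inlet-to-outlet temperature rise

# Densities mentioned in the text, in kW.
for rack_kw in (5, 8, 16, 40):
    flow = airflow_m3_per_min(rack_kw * 1000, ASSUMED_AIR_TEMP_RISE_K)
    print(f"{rack_kw:>2} kW: about {flow:.0f} m^3 of air per minute")
```

Because the required airflow scales linearly with power, a 40 kW load needs eight times the air of a 5 kW load under the same assumed temperature rise.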

A little about immersion cooling

Green Revolution Cooling focuses on immersion cooling, and its director, Peter Poulin, says that in terms of energy efficiency, immersion cooling beats direct cooling for two reasons. First, the fans are removed from every server. That alone reduces power consumption by 15% on average, and one of the company's clients reduced it by 30%.

Eliminating fans has another, indirect advantage: silence. Even though servers often use very small fans, they are terribly noisy, and being in a data center is unpleasant because of both the heat and the noise. Liquid cooling makes these places much more pleasant to work in.

The second advantage is that very little energy is needed to run an immersion cooling system. There are only three moving parts: a pump that circulates the coolant, a pump that moves it to the cooling tower, and the cooling tower fan. After air cooling is replaced with liquid cooling, the electricity spent on cooling can fall to 5% of what was spent on air conditioning. “You get a huge reduction in energy consumption, which allows you to do a lot of other things,” said Poulin. “Depending on the customer, the data center can be more energy efficient or reduce the carbon emissions associated with building data centers.”

Facts in favor of the energy efficiency of liquid cooling

Energy consumption has been a concern for the data center industry for quite some time (the US Environmental Protection Agency has been tracking it for at least ten years). Today's data centers are huge facilities that consume an estimated 2% of the world's electricity and emit as much CO2 into the atmosphere as the airline industry. Interest in the issue is therefore not fading. Fortunately, liquid cooling can reduce electricity bills.

The first saving comes from switching off the data center's air conditioning. The second comes from eliminating fans. Every server rack contains many fans pushing air through it, but their number can be cut to a handful or to zero, depending on the density.

With “dry cooling” technology, which involves no refrigeration, even greater savings are possible. Initially, direct-contact cooling sent the water through a chiller, cooling it to 15-25 degrees Celsius. But it eventually turned out that dry coolers, which pass the water through a long run of pipes while fans blow across the pipes heated by the hot water, let natural heat dissipation cool the water to a sufficient temperature.

“Since this process is so efficient, you no longer have to worry about cooling the water to some low temperature,” says Pennington. “Warm water still effectively takes all the heat away from the servers. No compression cycle is needed; you can just use dry coolers.”

Dry coolers also save water. A large data center that uses chillers can consume millions of liters of water per year, but a data center with dry coolers consumes none. That saves both energy and water, which can be very useful if the data center is located in a city.

“We do not consume much water,” said Pennington. “If everything is carefully designed, you get a closed system. Water does not flow in or out; you just need to top it up about once a year to keep the tanks full. You do not add water to your car all the time, and it is the same with us.”

Acceptance follows efficiency

One real-life example: by switching to liquid cooling, Dell improved its power usage effectiveness (PUE) by 56%, according to Brian Payne, vice president of product management and marketing for PowerEdge at Dell EMC. PUE is the ratio of the energy that must be spent on cooling the system to the energy needed to operate the system [strictly speaking, it is the ratio of the total energy used by the data center to the energy delivered directly to the IT infrastructure. Translator's note]. A PUE of 3 means that twice as much energy is spent on cooling the system as on powering it, and a PUE of 2 means that equal amounts of energy are spent on power and cooling. PUE cannot equal 1, because cooling is necessary, but data center operators are obsessed with bringing the figure as close to 1.0 as possible.
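The arithmetic behind these figures is simple enough to write down. The sketch below uses the definition given in the translator's note (total facility energy divided by IT energy); the function names and the sample energy values are hypothetical and serve only to illustrate the ratio.

```python
def pue(total_facility_kwh, it_equipment_kwh):
    """Power usage effectiveness: total facility energy divided by IT energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("it_equipment_kwh must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_per_it_unit(pue_value):
    """Energy spent on everything except IT (mostly cooling) per unit of IT energy."""
    return pue_value - 1.0


# Hypothetical facility: 300 kWh drawn in total, 100 kWh of it by IT equipment.
example = pue(300.0, 100.0)
print(f"PUE = {example:.1f}, overhead = {overhead_per_it_unit(example):.1f}x the IT energy")

for value in (3.0, 2.0, 1.5):
    print(f"PUE {value}: {overhead_per_it_unit(value):.1f} units of overhead per unit of IT energy")
```

Treating all overhead as cooling, as the text does, a PUE of 3 corresponds to two units of cooling energy per unit of IT energy, and a PUE of 2 to one unit.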

In addition to the PUE improvement, Dell customers can gain up to 23% more computing power without overloading the system. “Based on the necessary infrastructure investment, we project that the system pays for itself within a year,” says Payne. “I would compare this with buying a more energy-efficient air conditioner for your house. You invest a little, but over time you see the benefit in your electricity bills.”

For a completely different kind of liquid cooling adopter, take the Ohio Supercomputing Center (OSC). Its cluster has 1,800 nodes. After switching to LC, according to Doug Johnson, the chief systems architect, the center reached a PUE of 1.5. OSC uses an external loop, so the water is piped out of the building and cooled to ambient temperature, which averages 30 °C in summer and is much lower in winter. The chips reach 70 °C, so even if the water heats up to 40 °C, it is still much cooler than the chips and does its job.

As with many early adopters of a new technology, all of this is new to OSC. Five years ago the center did not use LC at all; today it covers 25%. The center hopes to raise that figure to 75% in three years and to switch to LC completely a few years after that. But even in its current state, according to Johnson, cooling the center takes four times less energy than it did before the switch to LC, and overall the move has cut total energy consumption by two thirds. "I think the percentage will increase when we begin to bring GPUs into the cooling system," he said.

From the customer's point of view, evaluating a new technology takes time and effort, which is why it matters that a company as large as Dell agreed to partner with CoolIT to promote LC at all. Not surprisingly, the possibility of leaks remains at the top of customers' concerns. Yet for all their hesitation, it turns out that they currently have little choice if they want the best performance.

“The fear of leaks has always been there,” says Lyon of CoolIT. “The situation has changed, and now there are simply no other options. With high-performance computers, there is no other way.”

Source: https://habr.com/ru/post/442576/