On estimating and managing software development

At university, students are taught algorithms, data structures, and OOP. At best, they also hear about design patterns or multithreaded programming. But I have never heard of anyone being taught how to estimate effort correctly.

Meanwhile, every programmer needs this skill every day. There is always more work than can be done. Estimates help set the right priorities and drop some tasks altogether, not to mention such mundane matters as budgeting and planning. Incorrect estimates, on the other hand, create a whole set of problems: underestimates lead to conflicts, overtime, and holes in the budget; overestimates lead to canceled projects or a search for other contractors.

To be fair, underestimates are much more common in development. Why? Some think programmers are too optimistic by nature. I will add another reason: being a good programmer and being a good estimator are not the same thing. Wanting to is not enough to become a good programmer; you need knowledge and practice. Why would estimation be any different?

In this article I will describe how my attitude to estimating tasks has changed and how projects are estimated in our company now. I will start with how not to estimate. If you already know how not to do it, skip straight to the second part of the article.

Estimation anti-patterns

Most estimates are made at the beginning of a project's life cycle. This does not bother us until we realize that the estimates are produced before the requirements are defined and therefore before the task itself has been studied. So the estimate is usually made at the wrong time. It would be more accurate to call this process guessing or predicting rather than estimating, because every blank spot in the requirements is a guessing game. How much does this uncertainty affect the final estimate and its quality?

A small thing, but an unpleasant one

Suppose you are developing an order entry system but have not yet worked out the requirements for entering phone numbers. Among the uncertainties that can affect the estimate, the following stand out:

- Does the client need the phone number to be checked for validity?

- If the customer needs a phone number verification system, which version will he prefer - cheap or expensive?

- If you implement a cheap version of checking phone numbers, will the client want to switch to an expensive one later?

- Is it possible to use a ready-made system for checking phone numbers or, due to some design restrictions, do you need to develop your own?

- How will the verification system be designed?

- How long does it take to program a phone number verification system?

And these are just a few of the questions that come to an experienced project manager's mind. As even this example shows, potential differences in how the same feature is defined, designed, and implemented can accumulate and change the implementation time by a factor of a hundred or more. Multiply that across the hundreds or thousands of features of a large project and you get tremendous uncertainty in the estimate of the project itself.

Another excellent example of how a seemingly elementary requirement "swells" can be found in the article " How two weeks ?! "

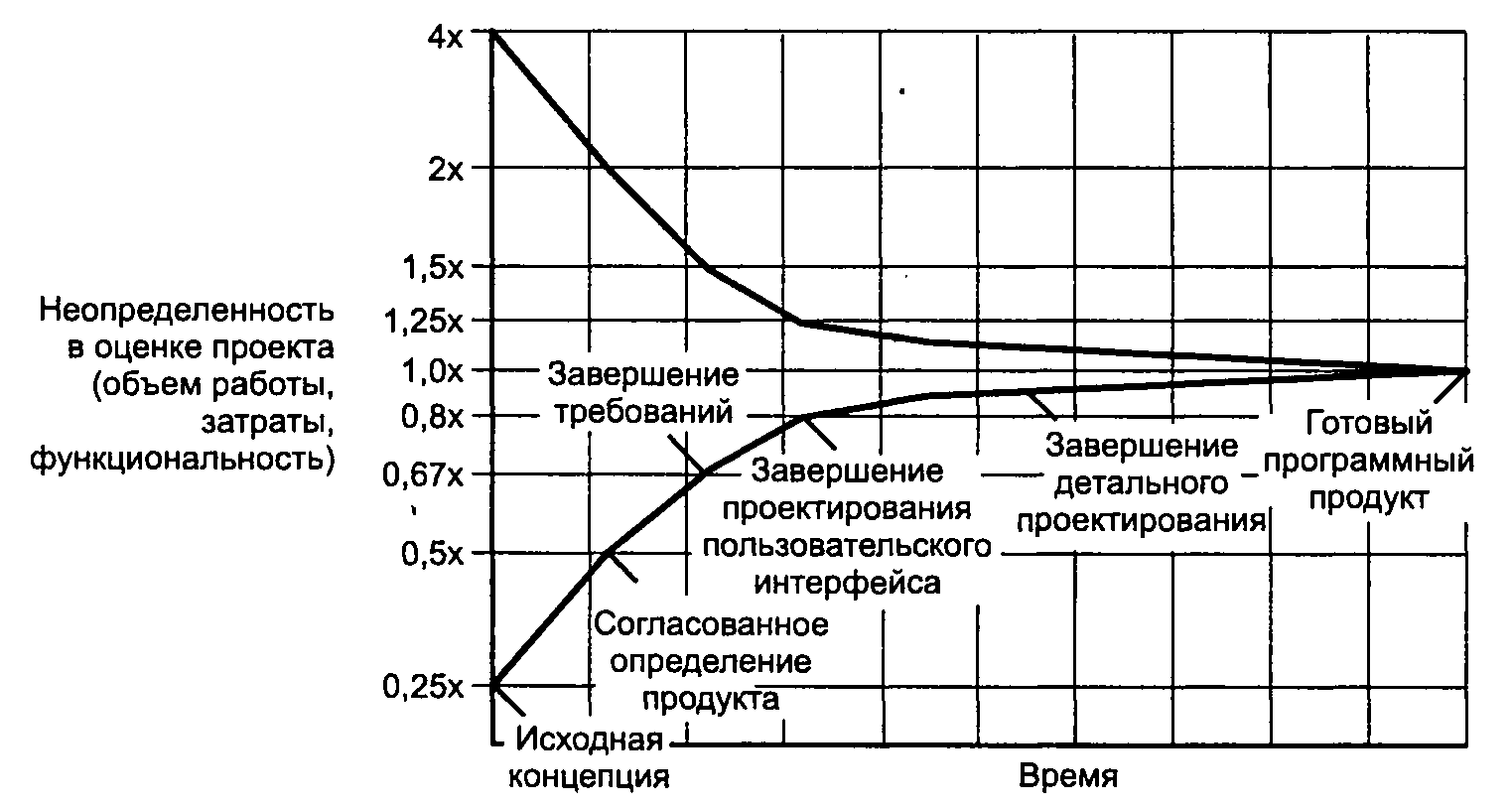

Cone of uncertainty

Software development, like many other kinds of projects, consists of thousands of decisions. Researchers have found that project estimates at different stages have predictable levels of uncertainty. The cone of uncertainty shows that estimates become more accurate as the project progresses. Note that at the initial concept stage (where estimates are often made and commitments taken on), the error may reach 400% (four hundred percent, Carl!). The best time to make commitments is after detailed design is complete.
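To make the cone more tangible, here is a minimal sketch that turns a single-point estimate into a range. The multipliers are the figures commonly quoted from Steve McConnell's book on software estimation; they are an assumption here, not numbers taken from this article, and the names `CONE_MULTIPLIERS` and `estimate_range` are made up for illustration.

```python
# Multipliers commonly quoted for the cone of uncertainty (assumed values).
CONE_MULTIPLIERS = {
    "initial concept": (0.25, 4.0),
    "approved product definition": (0.5, 2.0),
    "requirements complete": (0.67, 1.5),
    "user interface design complete": (0.8, 1.25),
    "detailed design complete": (0.9, 1.1),
}


def estimate_range(point_estimate, stage):
    """Return (low, high) bounds for a point estimate at a given stage."""
    low, high = CONE_MULTIPLIERS[stage]
    return point_estimate * low, point_estimate * high


if __name__ == "__main__":
    # A "100 hours" guess means very different things at different stages.
    for stage in CONE_MULTIPLIERS:
        low, high = estimate_range(100, stage)
        print(f"{stage}: {low:.0f} to {high:.0f} hours")
```

At the initial concept stage the same "100 hours" can honestly mean anything from 25 to 400 hours, which is why commitments made that early are so risky.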

Mythical man-month

There are still managers who believe that, if the functionality is rigidly fixed, the schedule can be shortened at any time by adding people who will do more work in less time. The flaw in this reasoning lies in the very unit used for estimation and planning: the man-month. Cost really is measured as the product of the number of people and the number of months spent. The achieved result is not. That is why using man-months as a unit of the amount of work is a dangerous delusion. Researchers agree that shortening the nominal schedule increases the total amount of work. If the nominal schedule for a team of 7 people is 12 months, simply increasing the team to 12 people will not shorten the schedule to 7 months.

In large teams the costs of coordination and management rise, and the number of communication paths grows. If every part of the task has to be coordinated with every other part, communication costs grow quadratically while the team's "capacity" grows only linearly. Three workers need three times as much pairwise communication as two; four workers need six times as much.
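The quadratic growth is easy to check with a few lines of code. This is just an illustration of the pair-counting formula n(n - 1)/2; the function name `communication_paths` is made up for the example.

```python
def communication_paths(team_size):
    """Number of distinct pairs of people in a team: n * (n - 1) / 2."""
    return team_size * (team_size - 1) // 2


if __name__ == "__main__":
    # Capacity grows linearly with head count, the number of pairs does not.
    for n in (2, 3, 4, 7, 12):
        print(f"{n} people -> {communication_paths(n)} communication paths")
```

Growing the team from 7 to 12 people, as in the example above, raises the number of pairwise paths from 21 to 66.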

The project team is trying to cope with crunch time // Ivan Aivazovsky, 1850

If 8 people can write a program in 10 months, can 80 people write the same program in one month? The inefficiency of extremely compressed schedules is especially obvious in extreme cases, such as 1600 people who have to write the program in one day. Read more about this in the book of the same name by Frederick Brooks.

Estimation patterns

So, everything is clear with the problems. What can be done?

Decomposition

Instead of estimating one large task, it is better to split it into many small ones, estimate them, and obtain the final estimate as the sum of the individual estimates. This way we kill as many as four birds with one stone:

- We understand the scope of work better. To decompose a task, you have to read the requirements. Odd spots surface immediately, which reduces the risk of misinterpreting the requirements.

- A more detailed analysis of the requirements automatically triggers the mental process of organizing what you know. This reduces the risk of forgetting some part of the work, such as refactoring, test automation, or the extra effort of release and deployment.

- The result of the decomposition can be used for project management, provided that one tool was used for both processes (this issue is discussed in more detail later in the text).

- If you measure the average error of the estimates of the individual tasks obtained by decomposition and compare it with the error of the total estimate, it turns out that the total error is smaller than the average. In other words, such an estimate is more accurate (closer to the real effort).

At first glance this statement is counterintuitive. How can the final estimate be more accurate if we are wrong about every decomposed task? Consider an example. To create a new form, you need to a) write the backend code, b) do the layout and write the frontend code, c) test and deploy. Task A was estimated at 5 hours, tasks B and C at 3 hours each. The total estimate was 11 hours. In reality the backend took 2 hours, the form took 4, and another 5 went into testing and bug fixing. The total effort was 11 hours: a perfect hit. Yet the estimation error for task A is 3 hours, for task B 1 hour, and for task C 2 hours. The average error is 2 hours.

The point is that errors of underestimation and overestimation cancel each other out. The 3 hours saved on the backend made up for the overruns of 1 and 2 hours on the frontend and testing. Real effort is a random variable that depends on many factors. If you get sick, it will be hard to concentrate, and three hours may turn into six. Or some unexpected bug will pop up and take all day to find and fix. Or, on the contrary, it may turn out that you can use an existing component instead of writing your own, and so on. Positive and negative deviations compensate each other, so the total error shrinks.
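The arithmetic of the form example can be checked with a short sketch. The numbers are the ones from the example; the task labels and function names are made up for illustration.

```python
# Hours from the "new form" example: estimate vs. actual effort per task.
estimates = {"A: backend": 5, "B: frontend": 3, "C: testing": 3}
actuals = {"A: backend": 2, "B: frontend": 4, "C: testing": 5}


def per_task_errors(estimated, actual):
    """Absolute estimation error of each task, in hours."""
    return {task: abs(actual[task] - estimated[task]) for task in estimated}


def total_error(estimated, actual):
    """Absolute error of the summed estimate, in hours."""
    return abs(sum(actual.values()) - sum(estimated.values()))


if __name__ == "__main__":
    errors = per_task_errors(estimates, actuals)
    mean_error = sum(errors.values()) / len(errors)
    print("per-task errors:", errors)
    print("mean per-task error:", mean_error)
    print("error of the total:", total_error(estimates, actuals))
```

Every individual estimate is off by one to three hours, yet the summed estimate is off by zero, because the deviations have opposite signs.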

Features and Tasks

At the heart of our decomposition is the feature. A feature is a unit of delivered functionality that can be released to production independently of the others. This level is sometimes called a User Story, but we came to the conclusion that a User Story is not always a good fit for defining tasks, so we decided to use a more general term.

One team member is responsible for each feature. Others can help with the implementation, but one person hands it over to testing, and the task also comes back to that person for rework. Depending on how the team is organized, this can be the team lead or the developer directly.

Unfortunately, some features are big. Working alone on that much scope would take a very long time, and testing and acceptance would take a long time too. In that case we change the task type to epic (Epic). An epic is just a very fat feature. We do not introduce anything above an epic, i.e. epics can simply be big, huge, or gigantic. In any case, an epic is submitted for acceptance in parts (features).

To estimate more precisely, features are decomposed into separate subtasks (Task). For example, a feature could be the development of a new CRUD interface. The task breakdown might look like this: "display a table with data", "add filtering and search", "develop a new component", "add new tables to the database". The task breakdown is usually of no interest to the business, but it is extremely important to the developer.

Group Evaluation, Planning Poker

Programmers are too optimistic about the amount of work. According to various sources, the underestimate most often falls in the range of 20-30%. In groups, however, the error decreases. The analysis is better thanks to the different viewpoints and temperaments of the estimators.

With the growing popularity of Agile, the practice of " planning poker " has become the most widespread. However, group estimation comes with two problems:

- Social pressure

- Time spent

Social pressure

In almost any group, the experience and personal productivity of the participants differ. If the team has a strong team lead, tech lead, or lead programmer, other members may feel uncomfortable and deliberately lower their estimates: "Well, Vasya can do it, so am I any worse? I can do it too!" The reasons vary: a desire to look better than you really are, competitiveness, or plain conformism. The result is the same: group estimation loses all its advantages and becomes individual. The team lead estimates, and the rest simply go along with him.

For a long time I pressed the team to get estimates closer to my expectations. This invariably led to lower quality and missed deadlines. Eventually I changed my attitude, and now my estimate is often the highest. During the discussion I point out potential problems that come to mind: "refactoring would not hurt here; the database structure changes here, so a regression test will be needed."

There are several basic recommendations:

- Most estimates are too low. Can't choose between two values? Take the larger one.

- Not sure about an estimate? Play the "?" card or pick a higher value. Hoping it will work out somehow almost never pays off.

- Always compare plan and actuals. If you know that you usually overrun by a factor of two, give estimates twice as high as you think. Started to overestimate? Multiply by one and a half in your head instead. After several iterations, the quality of your estimates should improve noticeably.
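The last recommendation amounts to keeping a personal correction factor. Below is a minimal sketch of how such a factor could be derived from past plan-versus-actual data; the history values and the names `calibration_factor` and `calibrated_estimate` are hypothetical, and a ratio of totals is only one of several reasonable ways to compute it.

```python
def calibration_factor(history):
    """Ratio of total actual hours to total estimated hours.

    `history` is a list of (estimated_hours, actual_hours) pairs.
    """
    total_estimated = sum(estimated for estimated, _ in history)
    total_actual = sum(actual for _, actual in history)
    if total_estimated <= 0:
        raise ValueError("history must contain positive estimates")
    return total_actual / total_estimated


def calibrated_estimate(raw_estimate, history):
    """Scale a gut-feeling estimate by the historical overrun factor."""
    return raw_estimate * calibration_factor(history)


if __name__ == "__main__":
    # Hypothetical history: every task took twice as long as planned.
    past_tasks = [(4, 8), (10, 20), (6, 12)]
    print("factor:", calibration_factor(past_tasks))
    print("5h gut feeling ->", calibrated_estimate(5, past_tasks), "hours")
```

Recomputing the factor after every iteration is what makes the estimates converge: once you start overestimating, the factor drops on its own.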

Time spent

You know the saying, "Don't feel like working? Call a meeting!" It is bad enough that one programmer tries to predict the future instead of writing code; now the whole group does it. On top of that, group decision making takes much longer than individual decision making. So group estimation is an extremely costly process. But it is worth looking at these costs from another angle. First, while estimating, the group is forced to discuss the requirements, which means they have to be read. Not bad already. Second, compare these costs with the ones the company incurs when a project is underestimated.

Many years ago, one November day, I joined a large company. It was immediately clear to me that work was in full swing. Half the company was working to release the product before the end of the year. But after about a week it seemed to me that they would not make it by the end of the year. With every passing day the chances of success looked more and more elusive. The project was indeed delivered in December, only of the following year. I learned about this much later, because in the summer the company began having trouble paying salaries and I left together with about half of the staff. You might say: "Well, of course, the managers were fools; they should have built in a safety margin." They did. For half a year there were no problems with paying salaries. Keeping enough working capital to finance half a year is not an easy task. I think that if the estimate had been more accurate, top management would have made different decisions.

If we treat the investment in estimation as an investment in making the right management decisions, it no longer seems so expensive. Group size is another matter. Of course, there is no need to make the whole team estimate the entire scope of work. It is much more reasonable to divide the task by

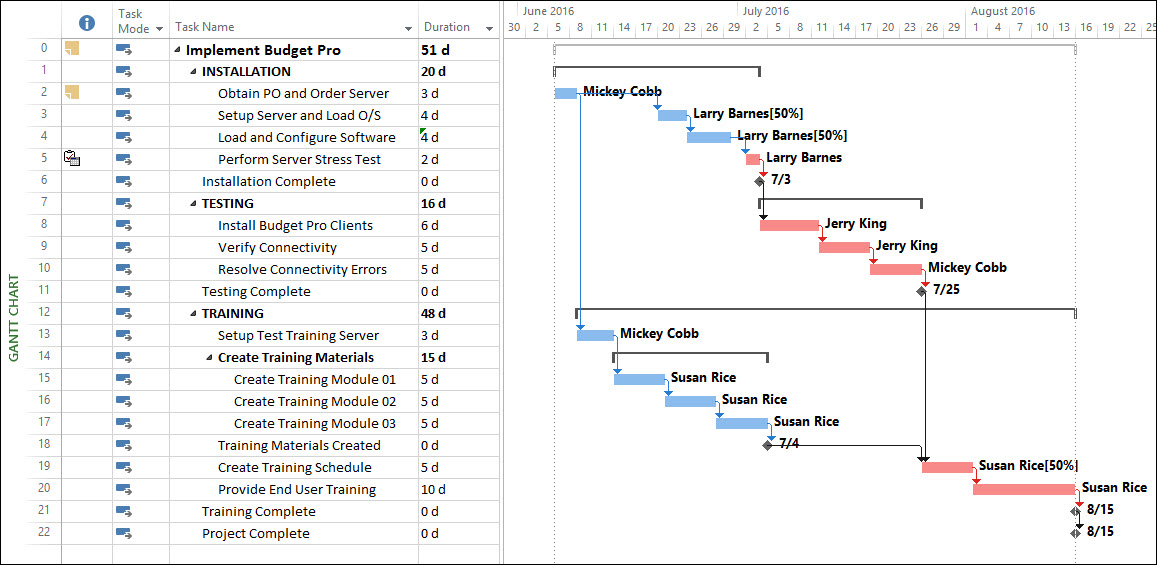

Dependencies, Gantt charts

While estimates are usually given by programmers, drawing up the project plan falls to middle managers. Remember, I wrote that you can help these people if you use one tool for both decomposition and project management. An estimate and a calendar date are not the same thing. For example, to display a simple table with data you need:

- Table in DB

- Backend code

- Code on the frontend

Doing the tasks in this order is the easiest from a technical point of view. In reality, however, people have different specializations. The frontend specialist may become available earlier. Instead of sitting idle, it makes more sense for him to start on the UI, replacing the server request with a mock or hard-coded data. Then, by the time the API is ready, all that remains is to replace that code with a call to the real method... in theory. In practice, maximum parallelism can be achieved as follows:

- First, we quickly write a Swagger spec to agree on the API.

- Then we hard-code the data on the backend or the frontend, depending on who is available.

- In parallel, we do the database, backend, and frontend. The database and backend partially block each other, but most often these skills are combined in one person and the work actually goes sequentially: first the database, then the backend.

- We collect everything and test

- Fix bugs and test again

It is important that items 1, 4 and 5 are completed as quickly as possible to reduce the number of blockers. Besides technological constraints and the limited availability of specialists with the right skills, there are also business priorities! That means a demonstration for an important client is already scheduled three weeks from now, and the client does not care at all about the first half of your project plan. He wants to see the end result, which will be available in two months at the earliest. Well, then you have to prepare a separate plan for the demonstration: add tasks to populate the database with the necessary data, insert new navigation links in the UI, and so on. Ideally, only about 20% of the code should end up thrown away afterwards, not the entire demonstration.

Carving up such a plan artfully is not an easy task. Specifying dependencies greatly simplifies the process. Before starting the reporting module, you need to build the data entry module. Logical? Add a dependency. Repeat for all related tasks. Believe me, many of the dependencies will come as a surprise to you.

Business process automation tasks usually turn into several long "snakes" of related tasks with a few large blocking nodes. Most often the original plan uses resources inefficiently and/or takes too long in calendar terms. Lowering the effort estimates in the hope that "maybe it will go faster" is not an option: the estimate is most likely optimistic as it is. We have to go back to the decomposition, find chains that are too long, and add extra "forks" to increase the degree of parallelism. In this way the project's calendar time is reduced at the price of higher total effort (more people work on one project in parallel). Remember the "mythical man-month"? Compressing the plan by more than 30% is unlikely to work. So the plan may be revised several times before the budget and deadlines are agreed. Several techniques make this process faster and easier.
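Once dependencies are written down explicitly, a tool can work out what may run in parallel. The sketch below uses Python's standard `graphlib` module (Python 3.9+) to group tasks into "waves"; the task names and the helper `parallel_waves` are invented for illustration and do not describe how any particular tracker does it.

```python
from graphlib import TopologicalSorter

# Hypothetical plan: each task maps to the set of tasks it depends on.
dependencies = {
    "api spec": set(),
    "db table": {"api spec"},
    "backend code": {"api spec", "db table"},
    "frontend code": {"api spec"},
    "integration test": {"backend code", "frontend code"},
}


def parallel_waves(deps):
    """Group tasks into waves; tasks inside one wave do not block each other."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    for number, wave in enumerate(parallel_waves(dependencies), start=1):
        print(f"wave {number}: {', '.join(wave)}")
```

With these assumed dependencies the frontend lands in the same wave as the database table, which is exactly the parallelism gained by agreeing on the API first.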

Blocking tasks

We have already looked at the first reason for blocking: dependencies. Besides that, the requirements may simply be unclear or imprecise. You need a tool that lets you block tasks and ask questions. Once the requirements are clarified, you can unblock the tasks and adjust the estimate. This process, by the way, almost always happens during the project, not before it.

Critical path, risks ahead

The critical path method is based on finding the longest sequence of tasks from the start of the project to its end, taking their dependencies into account. Tasks on the critical path (critical tasks) have zero slack, so any change in their duration changes the schedule of the whole project. For this reason critical tasks need closer monitoring during the project, in particular the timely detection of problems and risks that affect their deadlines and therefore the deadline of the project as a whole. The critical path can change as the project goes on: when task durations change, other tasks may end up on it.
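As a rough illustration of the method, the sketch below finds the longest chain in a small dependency graph. The tasks, durations, and the function name `critical_path` are hypothetical; real planning tools also compute slack for non-critical tasks, which is omitted here.

```python
from graphlib import TopologicalSorter

# Hypothetical durations in hours and dependencies (task -> prerequisites).
durations = {
    "api spec": 2,
    "db table": 4,
    "backend code": 16,
    "frontend code": 12,
    "integration test": 6,
}
dependencies = {
    "api spec": set(),
    "db table": {"api spec"},
    "backend code": {"api spec", "db table"},
    "frontend code": {"api spec"},
    "integration test": {"backend code", "frontend code"},
}


def critical_path(durations, deps):
    """Return (longest task chain, its total duration)."""
    finish = {}    # earliest possible finish time of each task
    previous = {}  # prerequisite that determines each task's start
    for task in TopologicalSorter(deps).static_order():
        blocker = max(deps.get(task, ()), key=lambda p: finish[p], default=None)
        start = finish[blocker] if blocker is not None else 0
        finish[task] = start + durations[task]
        previous[task] = blocker
    # Walk back from the task that finishes last.
    node = max(finish, key=finish.get)
    total = finish[node]
    path = []
    while node is not None:
        path.append(node)
        node = previous[node]
    return list(reversed(path)), total


if __name__ == "__main__":
    path, total = critical_path(durations, dependencies)
    print(" -> ".join(path), f"({total} hours)")
```

In this made-up plan the frontend has 8 hours of slack, while any delay in the database or backend tasks moves the end date directly.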

In short: get the database structure wrong and you will have to rewrite the backend; miscalculate the load and you may have to change the technology altogether. I wrote in detail about the risks of project work in the article " Cost-effective code ." The sooner the risks on the critical path materialize, the better. After all, there is still time and something can be done. It is even better if they do not materialize at all, but let's be realistic.

Therefore you need to start with the murkiest, hardest, and most unpleasant tasks: put them into the "blocked" status, clarify them, re-estimate them, and remove dependencies wherever possible.

Acceptance criteria, test cases

Natural language, whether Russian, English, or Chinese, can be both redundant and imprecise. Test cases help overcome these limitations. They are also a good communication tool between developers, business users, and the QA department.

Project management

Do you want to make God laugh? Tell him about your plans. Even if a miracle has happened and you have collected and clarified all the requirements before starting work, you have enough competent people, and the plan lets you do most of the work in parallel, you are still not immune to employees falling ill, estimation errors, and other risks materializing. Therefore the plan must be updated regularly and compared with the actuals. And for that, time tracking is essential.

Time tracking

Time tracking has long been the de facto standard in the industry. It is highly desirable to do it in the same tool as the estimation. This lets you track how far the actual time spent deviates from the estimate. It is good if the project manager uses this tool as well: then all delays on the critical path are noticed immediately. Using different tools is also possible, but keeping the process going will take much more effort, which means there will be a temptation to cheat. We already know how that ends. We use YouTrack. Everything I have described in this article is currently available out of the box, although it requires a little tweaking.

Conclusion

- Estimation is difficult

- Decomposition lets you find gaps in the requirements and improve the quality of estimates

- Group estimates are more accurate than individual ones; use planning poker

- Blockers, test cases and formal acceptance criteria improve communication, which in turn increases the project's chances of success.

- It is necessary to begin with the most risky tasks on the critical path of the project.

- Estimation is not a one-time action but a process inseparable from project management.

- Without time tracking it is impossible to keep the project status up to date and to correct your estimates.

Want to know more about project estimation?

Read the book "How Much Does a Software Project Cost" by Steve McConnell and other articles on this topic on Habr.

Source: https://habr.com/ru/post/442474/