How to save resources in the browser without breaking the web: a Yandex talk

Despite ever-increasing device performance, the web keeps getting more demanding on memory and CPU. Proper rendering and smart tab management are an important part of solving this problem. At the “I Frontend” conference, Konstantin Kramlich (PurplePowder) devoted his talk to algorithms that improve performance and save resources in both the Chromium project and Yandex Browser.

We have already covered some of them, for example the Hibernate technology, in a separate post. Kostya's talk looks at the problem more broadly: not only in terms of tab switching, but also in terms of how content is rendered, tiles, and page layers.

Toward the end, frontend developers will learn how to identify and fix site performance problems.

- My name is Kostya, and I head the internal components development team at Yandex.Browser. I have worked on the Browser for a little over five years on all sorts of things: from decoding and all the HTML5 video in the browser to rendering, painting, and similar processes.

For the last year and a half to two years, I have been working on projects aimed at saving browser resources: CPU, memory, and battery.

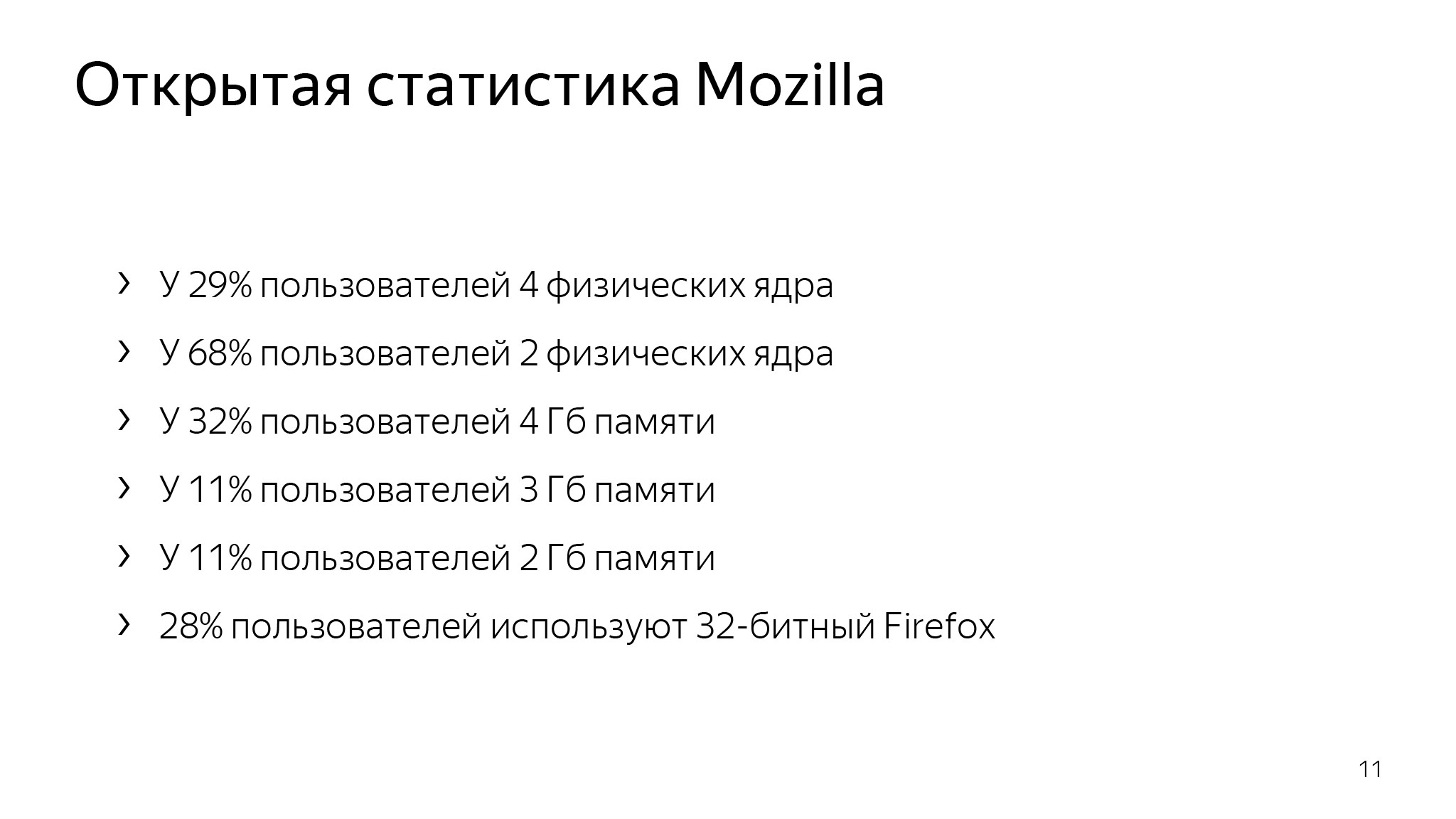

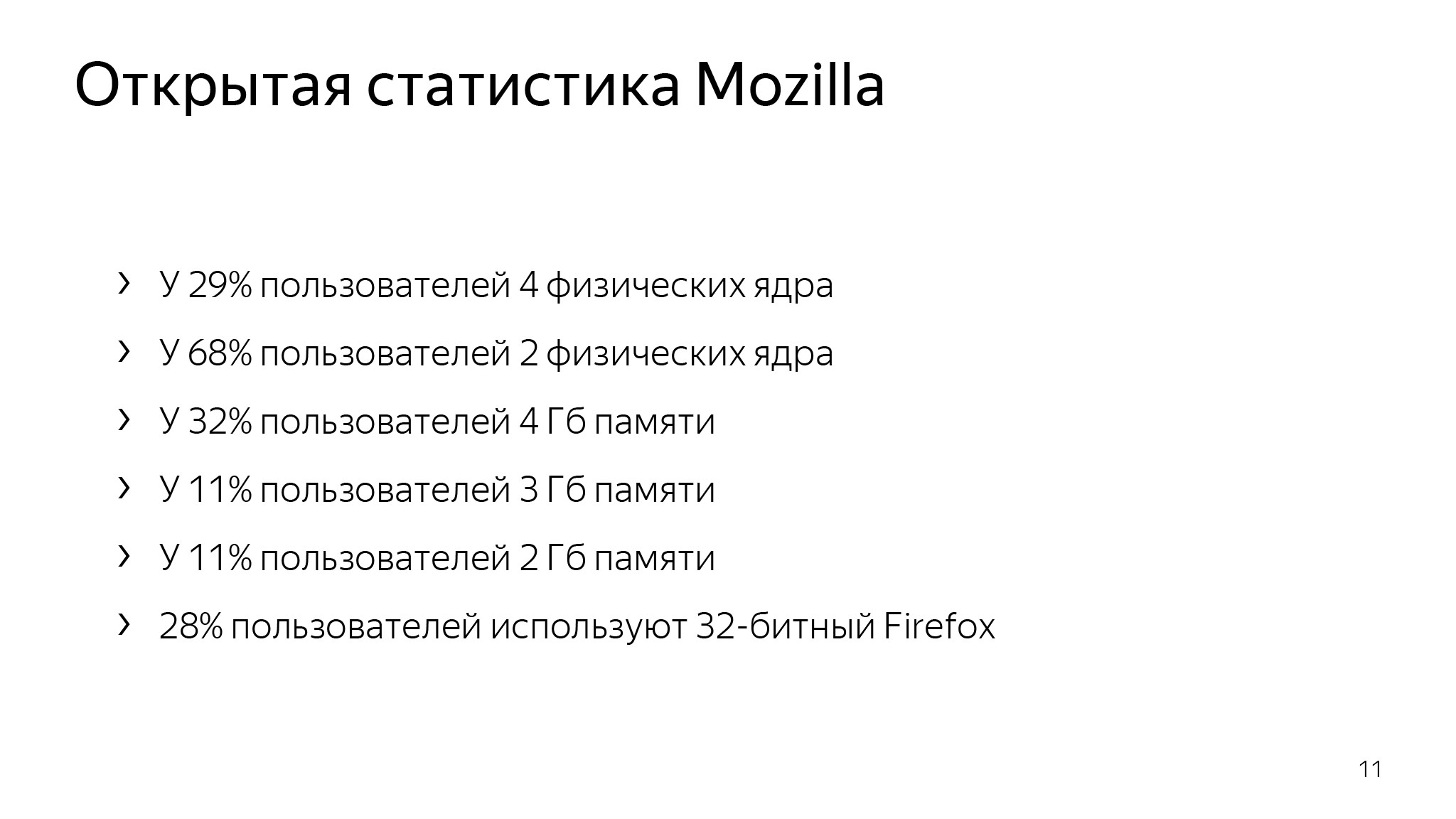

You might say: Kostya, it's 2019, why would anyone have resource problems? You can buy any device you want with any specs. But if we look at Mozilla's public hardware statistics, half of all users have 4 GB of RAM or less. And users with one or two physical cores make up a considerable share of your audience. That is the world we live in.

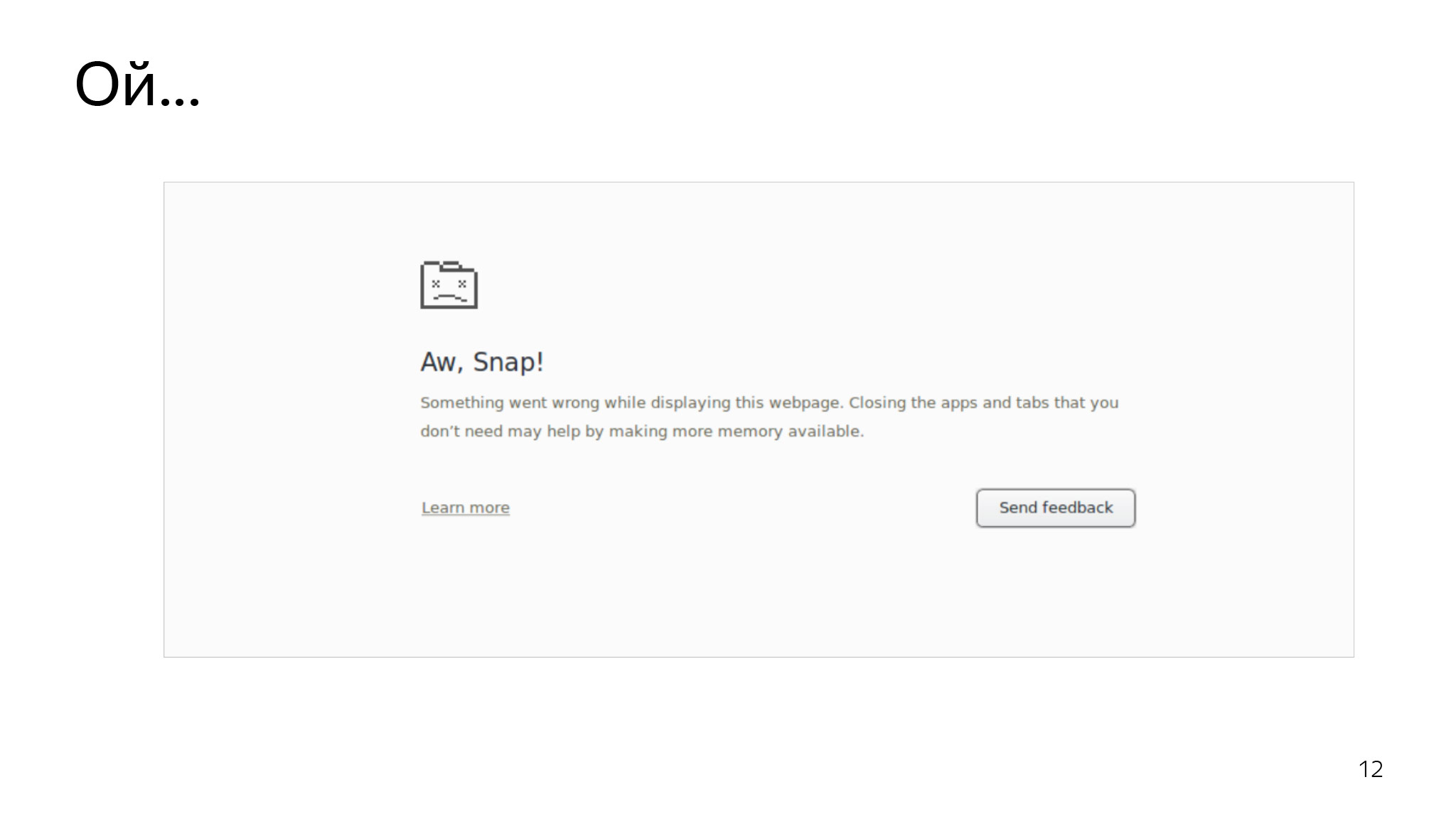

How many of you see this tab often? This is exactly what ordinary users with little RAM and old computers run into.

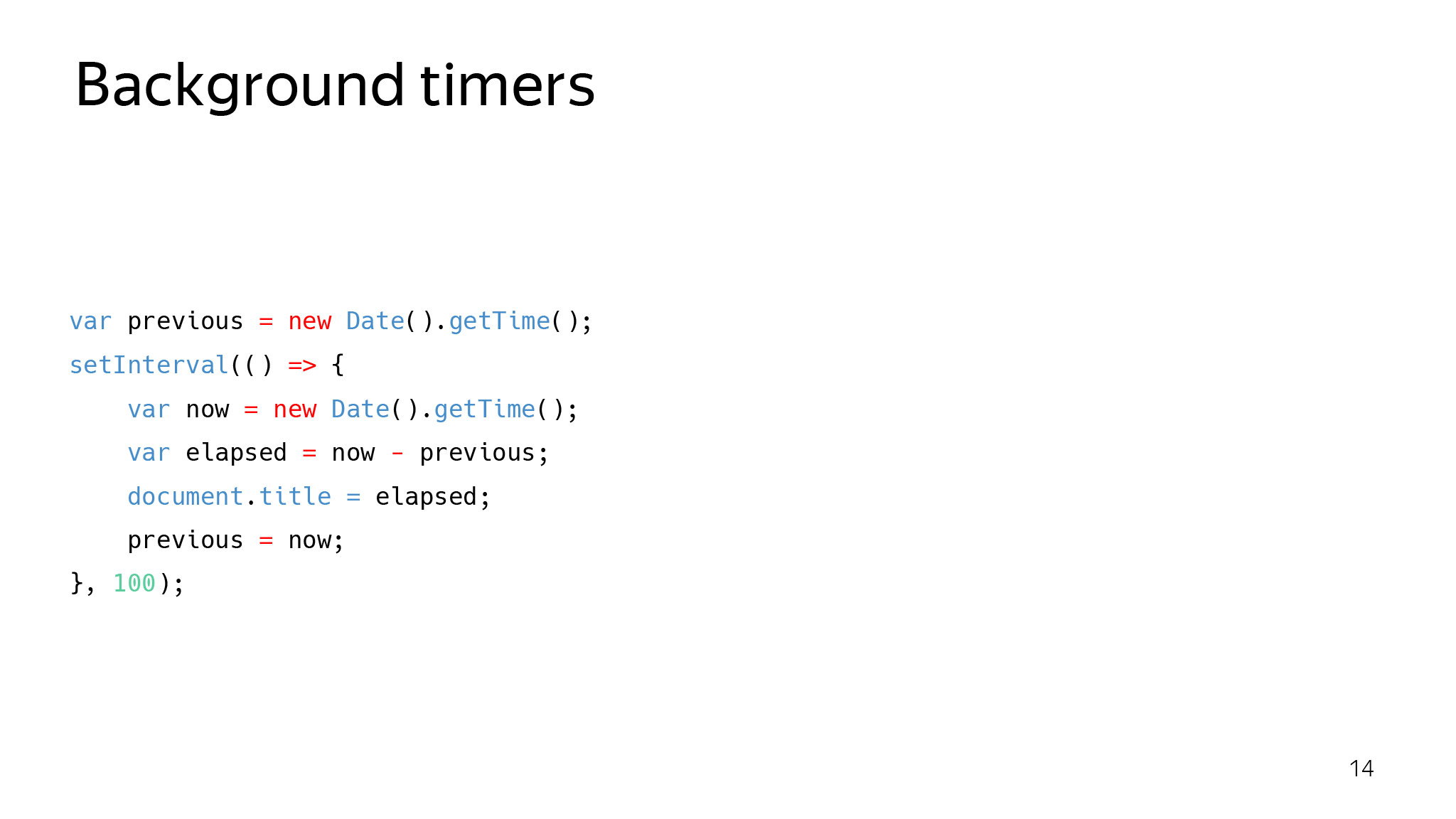

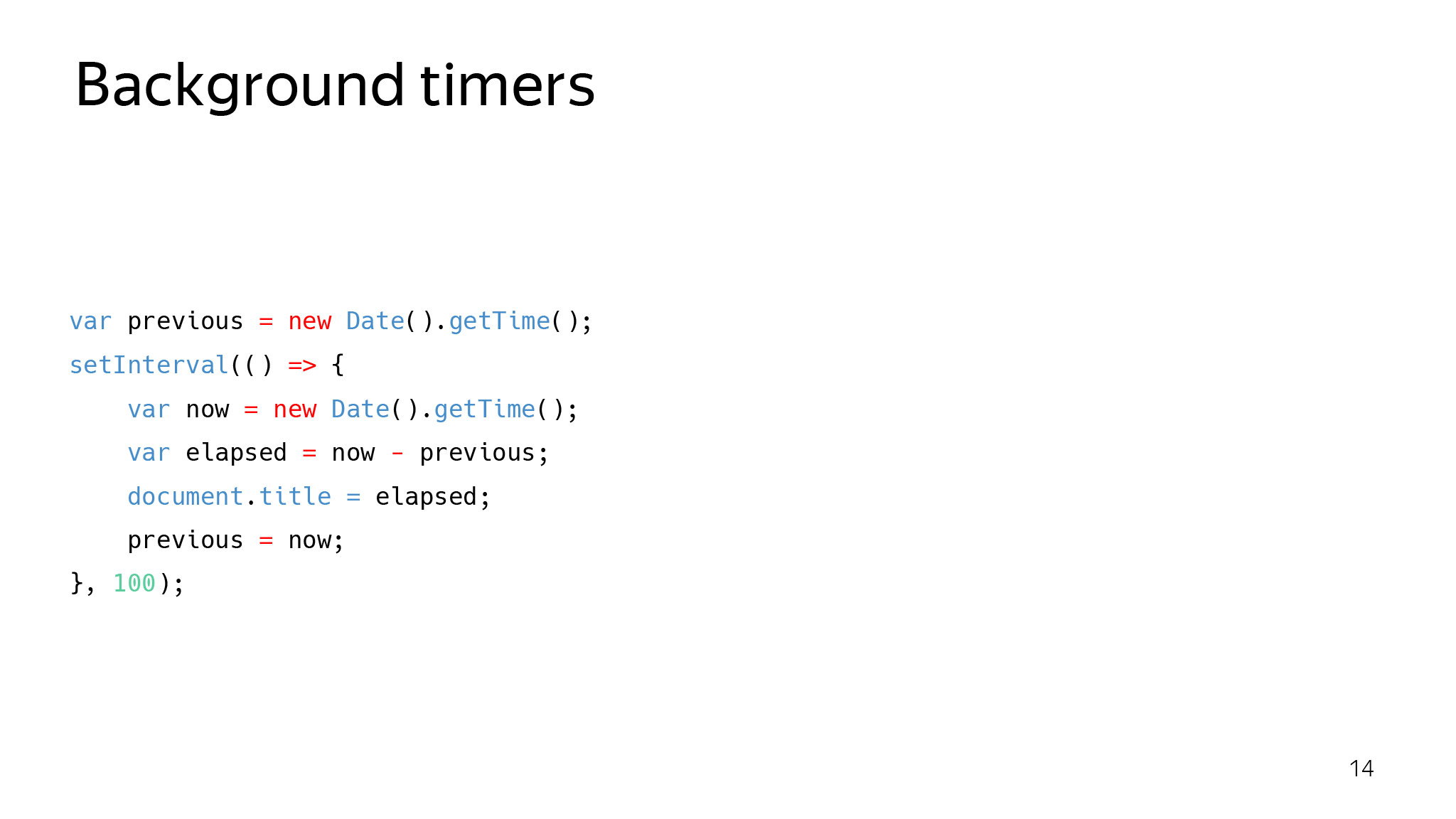

So what can be done? The problem is no secret: browsers started fighting it actively about three years ago, and since then the screws have been gradually tightened in various ways. Here is an example that made the rounds on Stack Overflow around 2016. It is a fairly simple snippet that updates the page title with the time elapsed since the function last ran. What should ideally happen? Every 100 ms the title should show roughly 100, give or take, if we are lucky.
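The snippet itself is only shown on a slide. Below is a minimal TypeScript sketch of equivalent code; the function names are hypothetical, and the title setter is passed in so the sketch also runs outside a browser (in a page you would pass `(s) => { document.title = s; }`).

```typescript
// Formats the time that actually passed between two ticks.
function formatElapsed(prevMs: number, nowMs: number): string {
  return `${Math.round(nowMs - prevMs)} ms`;
}

// Every `intervalMs`, writes how long it has really been since the previous tick.
// Returns a function that stops the ticker.
function startElapsedTicker(
  write: (text: string) => void, // in a page: (s) => { document.title = s; }
  intervalMs = 100,
): () => void {
  let last = Date.now();
  const id = setInterval(() => {
    const now = Date.now();
    write(formatElapsed(last, now)); // ideally "100 ms", give or take
    last = now;
  }, intervalMs);
  return () => clearInterval(id);
}
```

In a foreground tab this should keep printing values close to 100 ms.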

But what if we open it and then switch to another tab? Has anyone run into this? The question that hit Stack Overflow was: why on earth does Cookie Clicker stop working in my background tabs? This was one of Chromium's first initiatives to reduce CPU consumption in the browser. The idea was that if the user is not using a tab right now, they don't need it right now, so let's throttle the JS on it.

The browser tries to keep the CPU load of such a tab at about 1%: it starts throttling all timers, JS execution, and so on. This was one of the first steps toward a brighter future.

Eventually we arrive at a situation where background tabs stop working altogether. This is the bright future I am talking about. According to the Chromium plans announced at the last BlinkOn, in 2020 they intend to let a tab finish loading and then, if it is in the background, have it do nothing at all. You should always be ready for this.
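One way to be ready is to stop periodic work yourself while the page is hidden, using the standard Page Visibility API (`document.visibilityState` and the `visibilitychange` event). The sketch below assumes that approach; `VisibilityAwarePoller` and `VisibilitySource` are hypothetical names, and the interface is a minimal stand-in for `document` so the code runs outside a browser.

```typescript
// Minimal stand-in for `document`; in a page, pass `document` itself.
interface VisibilitySource {
  readonly visibilityState: string; // "visible" or "hidden" in browsers
  addEventListener(type: "visibilitychange", listener: () => void): void;
}

class VisibilityAwarePoller {
  private timer: ReturnType<typeof setInterval> | undefined = undefined;
  private readonly source: VisibilitySource;
  private readonly work: () => void;
  private readonly intervalMs: number;

  constructor(source: VisibilitySource, work: () => void, intervalMs: number) {
    this.source = source;
    this.work = work;
    this.intervalMs = intervalMs;
    source.addEventListener("visibilitychange", () => this.sync());
    this.sync(); // start right away if the page is already visible
  }

  get running(): boolean {
    return this.timer !== undefined;
  }

  stop(): void {
    if (this.timer !== undefined) {
      clearInterval(this.timer);
      this.timer = undefined;
    }
  }

  // Poll while visible; do nothing at all while hidden.
  private sync(): void {
    if (this.source.visibilityState !== "visible") {
      this.stop();
    } else if (this.timer === undefined) {
      this.work(); // catch up immediately after becoming visible again
      this.timer = setInterval(this.work, this.intervalMs);
    }
  }
}
```

The design choice is to treat "hidden" as "paused" explicitly, so the page behaves the same whether or not the browser throttles or freezes background timers.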

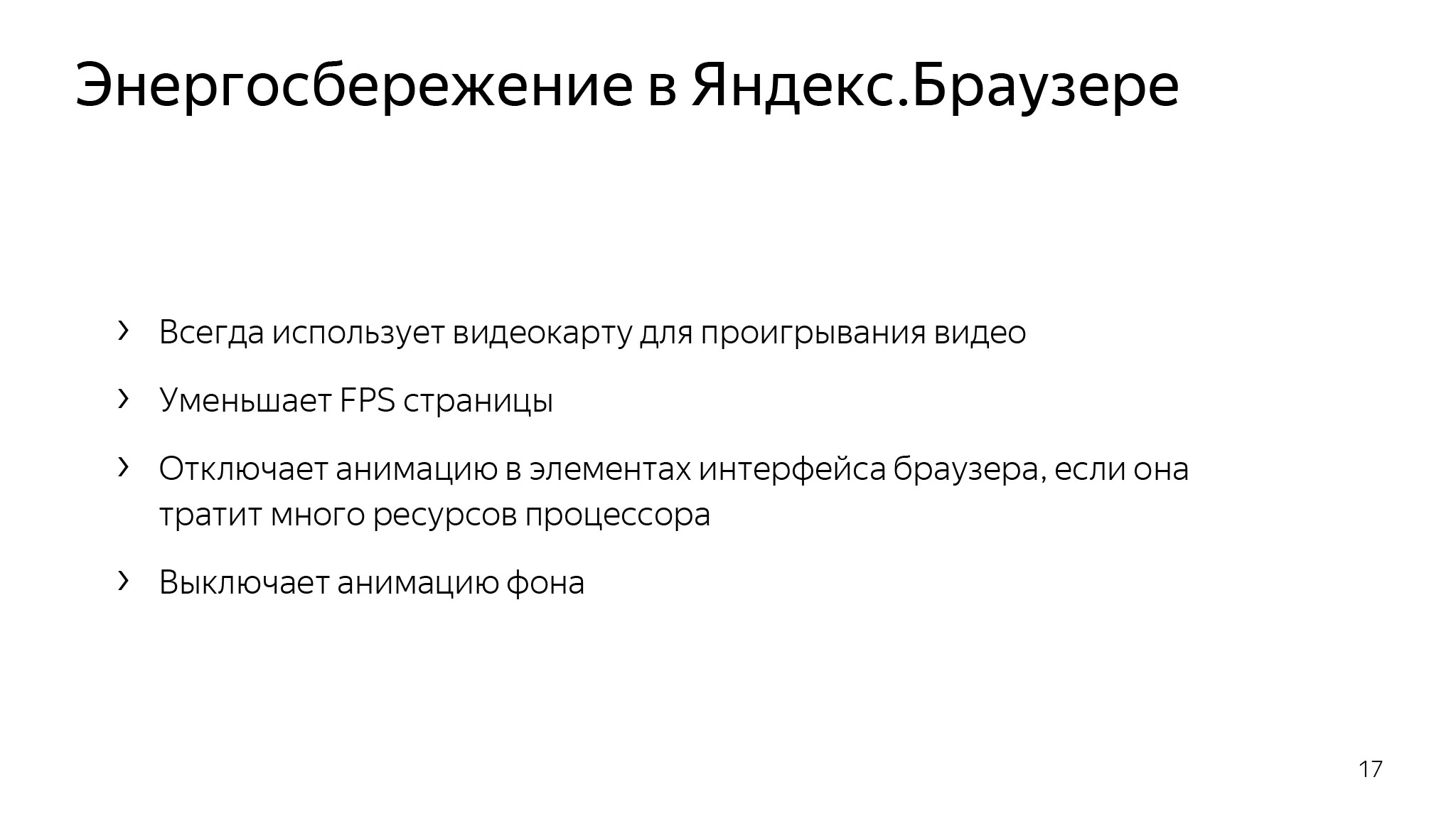

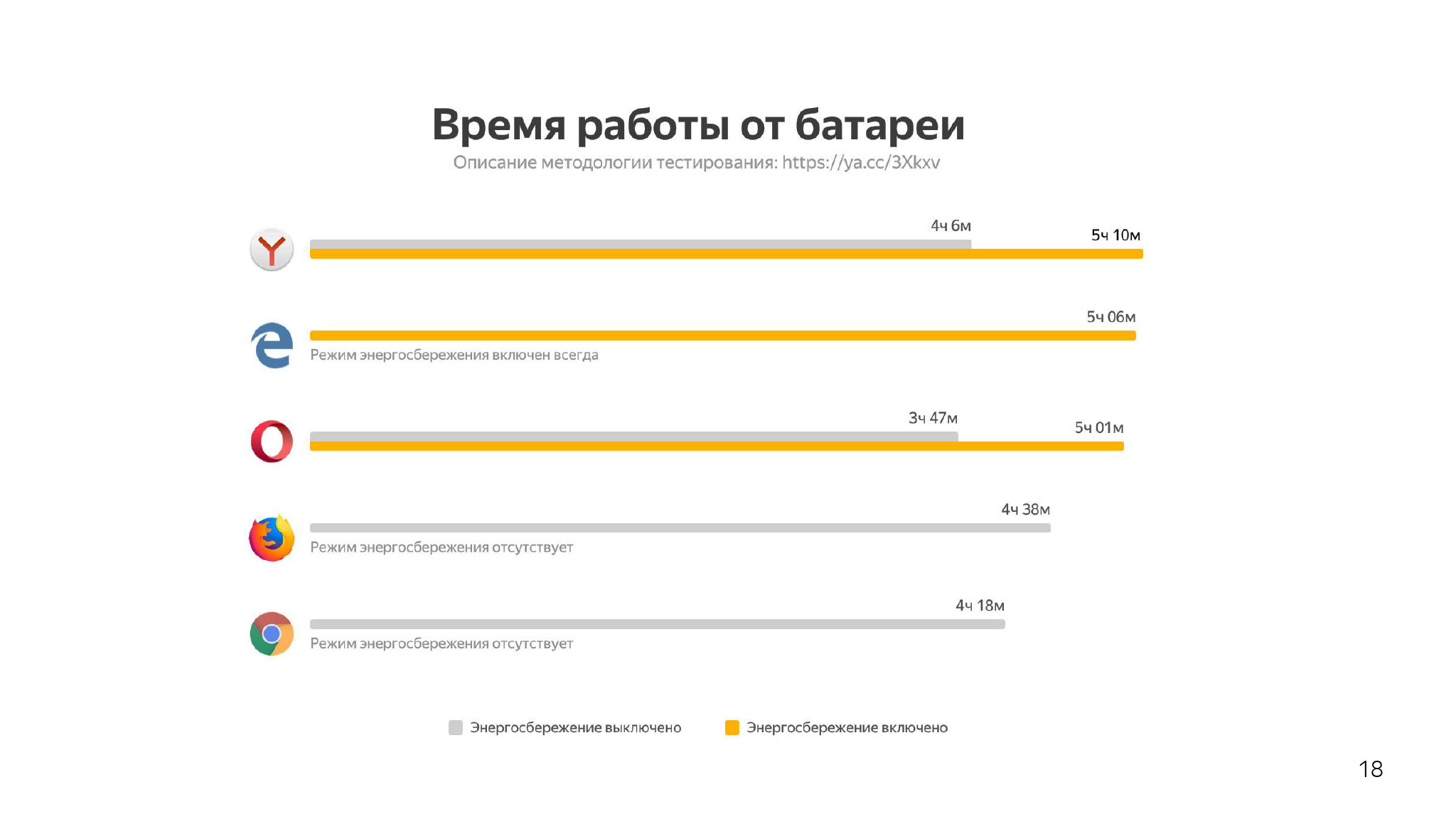

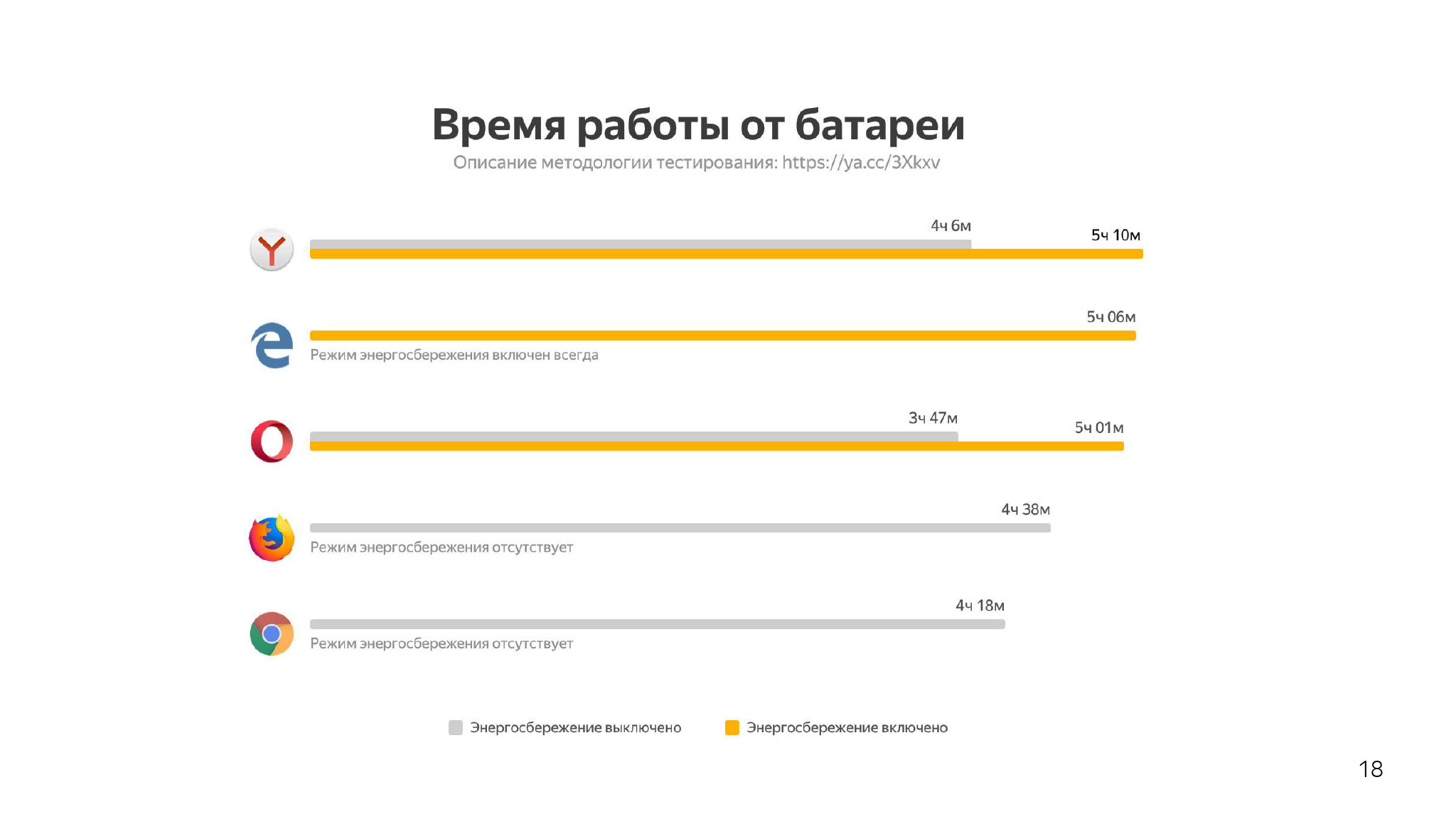

In Yandex.Browser we also tackled this problem, but in a less radical way that does not break the entire web. We built a power-saving mode that turns off video decoding on the CPU and keeps only hardware decoding on the GPU, lowers the frame rate, and disables animations that are not needed right now, when saving the battery matters more. This gave about an extra hour of battery life. Anyone can verify it; iXBT, for example, did.

Some of you will probably say: Kostya, you “broke” the web and helped some users, but you did not come up with anything cleverer. Let's get more hardcore! How do browsers paint pages?

The concept of layers, in a nutshell: the browser tries to split the page into layers and draw them separately, so that animations can run without forcing static content to be redrawn. The browser decides this using various heuristics. For example, it tries to put a video element, which is obviously redrawn quickly and often, into a separate layer. Then, wherever the video is shown, the content underneath it does not need to be redrawn.

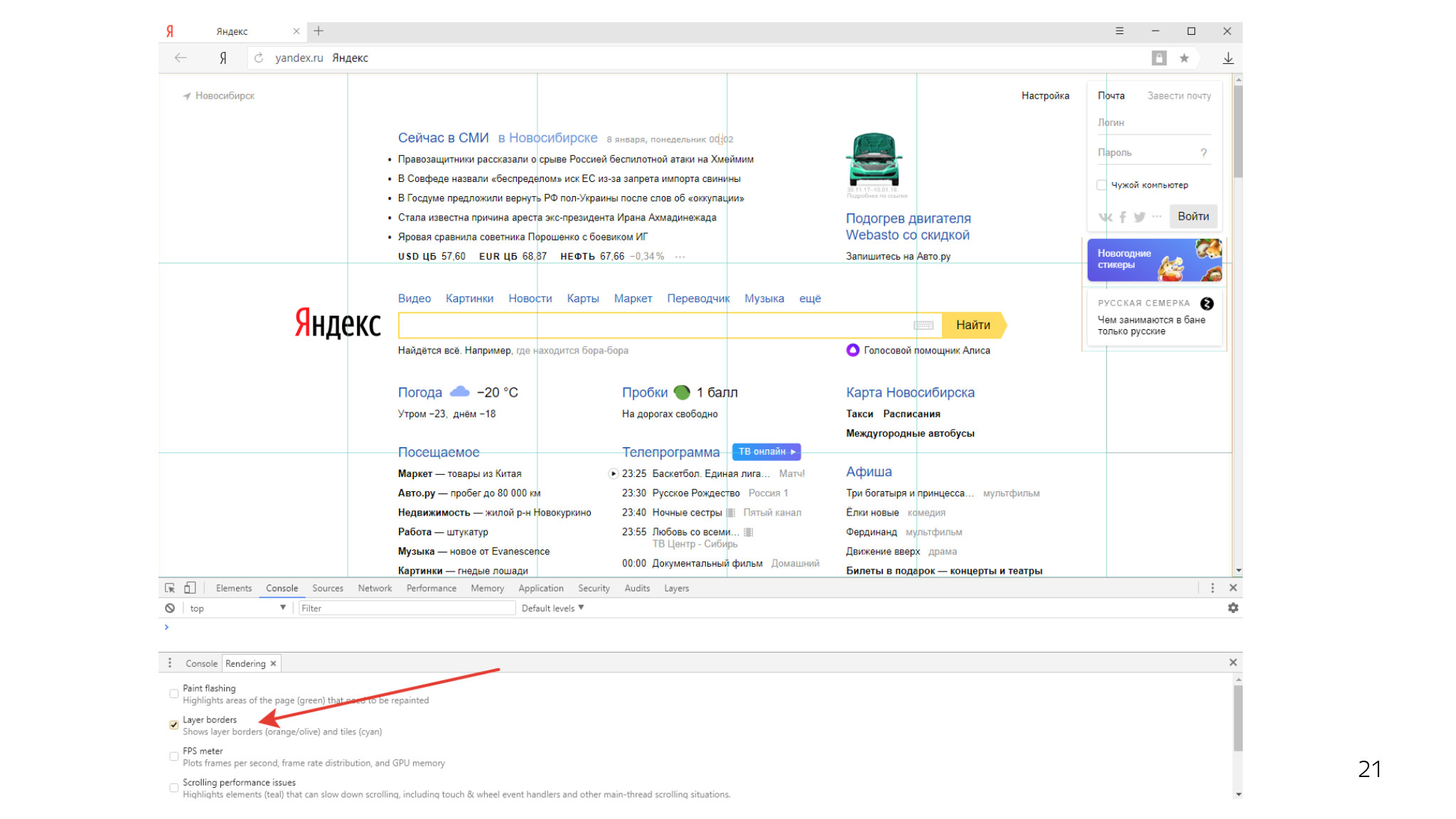

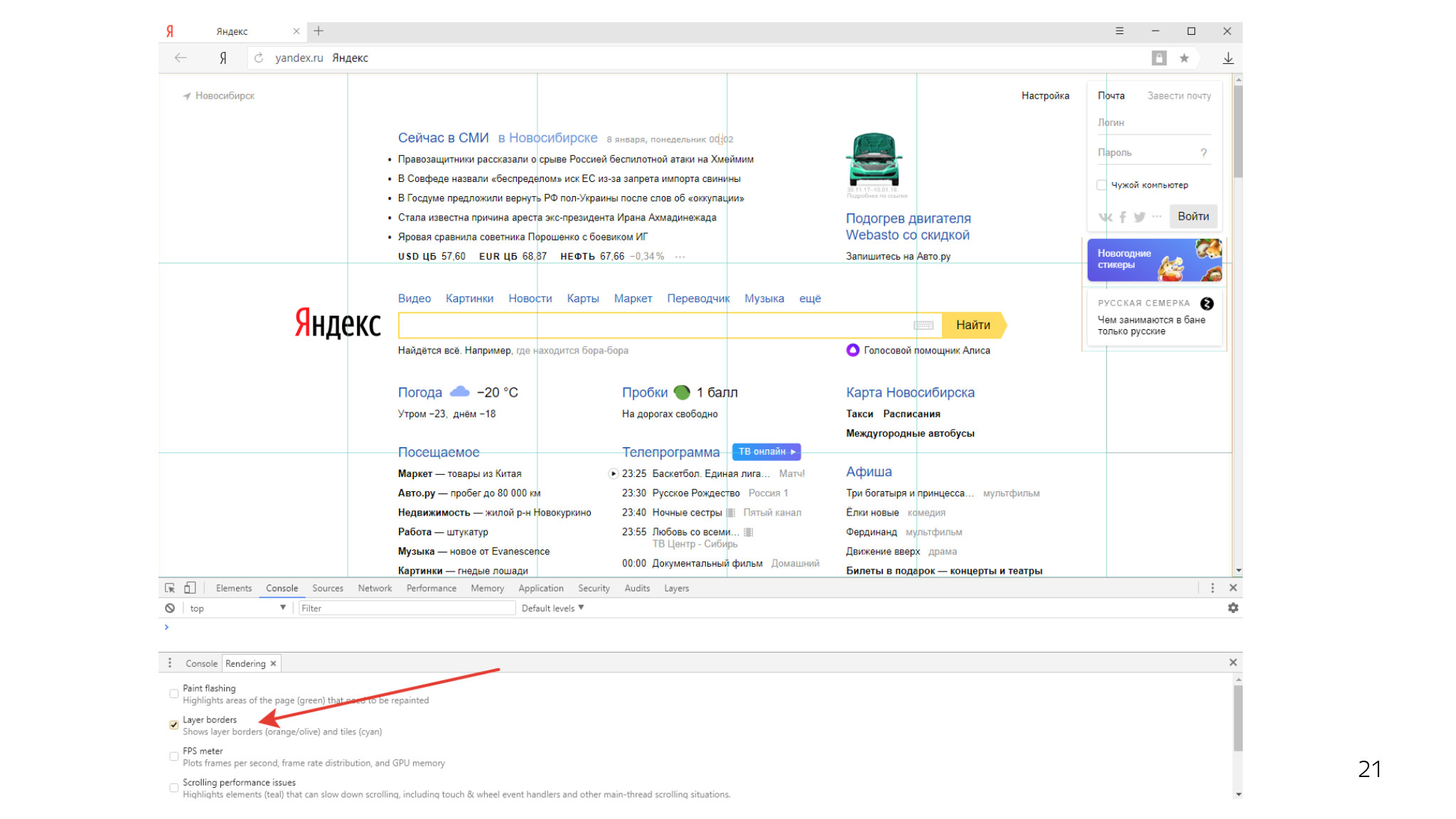

In addition, each layer is split into tiles, rectangles of 256 by 256 pixels. In the inspector you can see something like this: a frame split into a bunch of tiles.

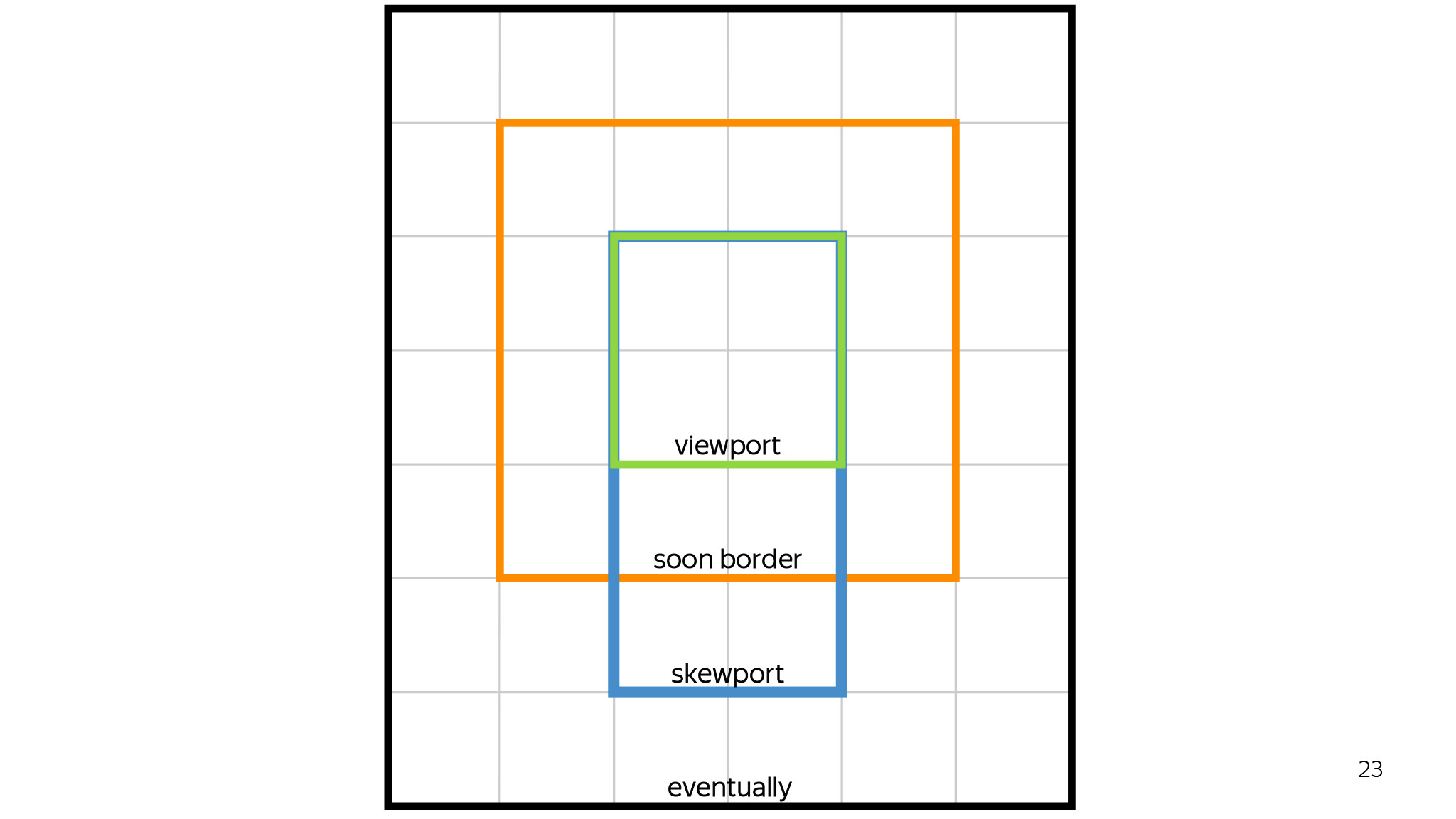

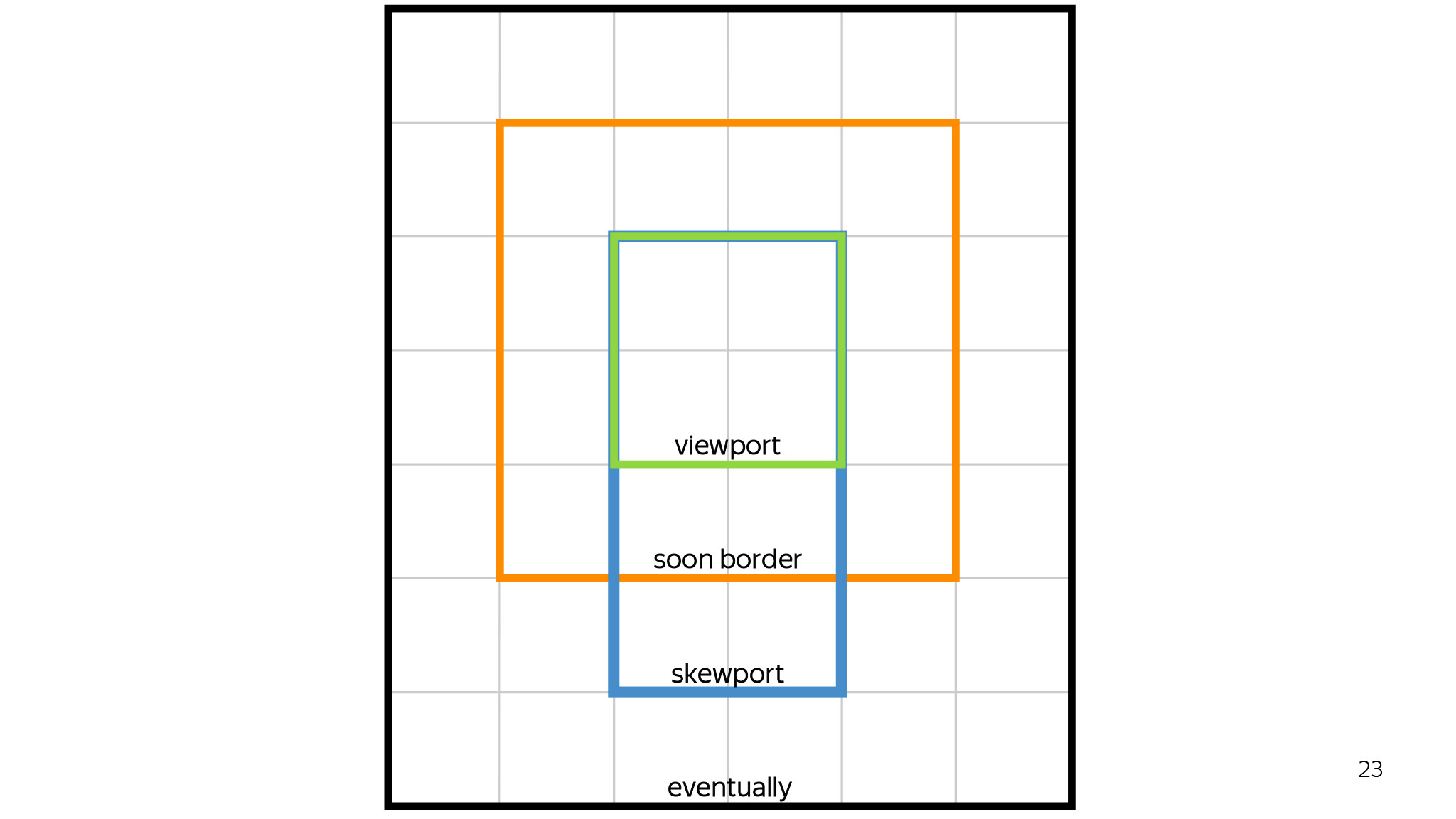

What are tiles for? First of all, to prioritize rendering. If we have a huge, long page to draw, why draw all of it when the user only sees what is in the viewport right now?

With this approach, we first draw only what the user sees in the viewport right now, then one ring of tiles around it, then tiles in the scroll direction: if the user scrolls down, we draw downward; if up, upward. Everything else is drawn only if there is quota left for tiles, in case the user ever sees them. Or maybe never.
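The ordering can be sketched as a priority function over a tile grid. This is a simplified model under stated assumptions (256 px tiles, vertical scrolling only, hypothetical bucket numbers and names), not Chromium's actual tile manager.

```typescript
const TILE = 256; // assumed tile size in px

interface Box { x: number; y: number; w: number; h: number }

// 0 = visible, 1 = one-tile ring around the viewport,
// 2 = further along the scroll direction, 3 = everything else
type Bucket = 0 | 1 | 2 | 3;

// Inclusive range of tile indices covered by [start, start + length) on one axis.
function tileSpan(start: number, length: number): [number, number] {
  return [Math.floor(start / TILE), Math.floor((start + length - 1) / TILE)];
}

function tileBucket(col: number, row: number, viewport: Box, scrollDy: number): Bucket {
  const [c0, c1] = tileSpan(viewport.x, viewport.w);
  const [r0, r1] = tileSpan(viewport.y, viewport.h);
  if (col >= c0 && col <= c1 && row >= r0 && row <= r1) return 0;
  const inRingCols = col >= c0 - 1 && col <= c1 + 1;
  if (inRingCols && row >= r0 - 1 && row <= r1 + 1) return 1;
  // Scrolling down (dy > 0) favors rows below the viewport, scrolling up favors rows above.
  if (inRingCols && ((scrollDy > 0 && row > r1) || (scrollDy < 0 && row < r0))) return 2;
  return 3;
}

// All tiles of a cols x rows layer, highest priority first;
// ties are broken by vertical distance from the viewport.
function prioritizeTiles(cols: number, rows: number, viewport: Box, scrollDy: number): Array<[number, number]> {
  const [r0, r1] = tileSpan(viewport.y, viewport.h);
  const tiles: Array<{ col: number; row: number; bucket: Bucket; dist: number }> = [];
  for (let row = 0; row < rows; row++) {
    for (let col = 0; col < cols; col++) {
      const dist = row < r0 ? r0 - row : row > r1 ? row - r1 : 0;
      tiles.push({ col, row, bucket: tileBucket(col, row, viewport, scrollDy), dist });
    }
  }
  tiles.sort((a, b) => a.bucket - b.bucket || a.dist - b.dist);
  return tiles.map((t): [number, number] => [t.col, t.row]);
}
```

A rasterizer with a limited tile quota would then walk this list from the front and stop when the quota runs out.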

Tiles also help a lot with invalidation. Suppose the user opens the page and selects a piece of text: we do not need to redraw everything and can keep most of the previous rendering. Here only six tiles will be redrawn, and everything is fine.
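Finding the tiles to redraw comes down to mapping the changed rectangle onto the tile grid. A sketch, assuming 256 px tiles and a hypothetical `tilesToRedraw` helper; with these numbers, a selection starting at (100, 200) that is 600 px wide and 200 px tall touches exactly six tiles.

```typescript
const TILE_SIZE = 256; // assumed tile size in px

interface DirtyRect { x: number; y: number; w: number; h: number }

// Returns "col,row" keys of every tile that intersects the dirty rectangle;
// all other tiles keep their previously rasterized contents.
function tilesToRedraw(dirty: DirtyRect): string[] {
  if (dirty.w <= 0 || dirty.h <= 0) return [];
  const firstCol = Math.floor(dirty.x / TILE_SIZE);
  const lastCol = Math.floor((dirty.x + dirty.w - 1) / TILE_SIZE);
  const firstRow = Math.floor(dirty.y / TILE_SIZE);
  const lastRow = Math.floor((dirty.y + dirty.h - 1) / TILE_SIZE);
  const keys: string[] = [];
  for (let row = firstRow; row <= lastRow; row++) {
    for (let col = firstCol; col <= lastCol; col++) keys.push(`${col},${row}`);
  }
  return keys;
}
```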

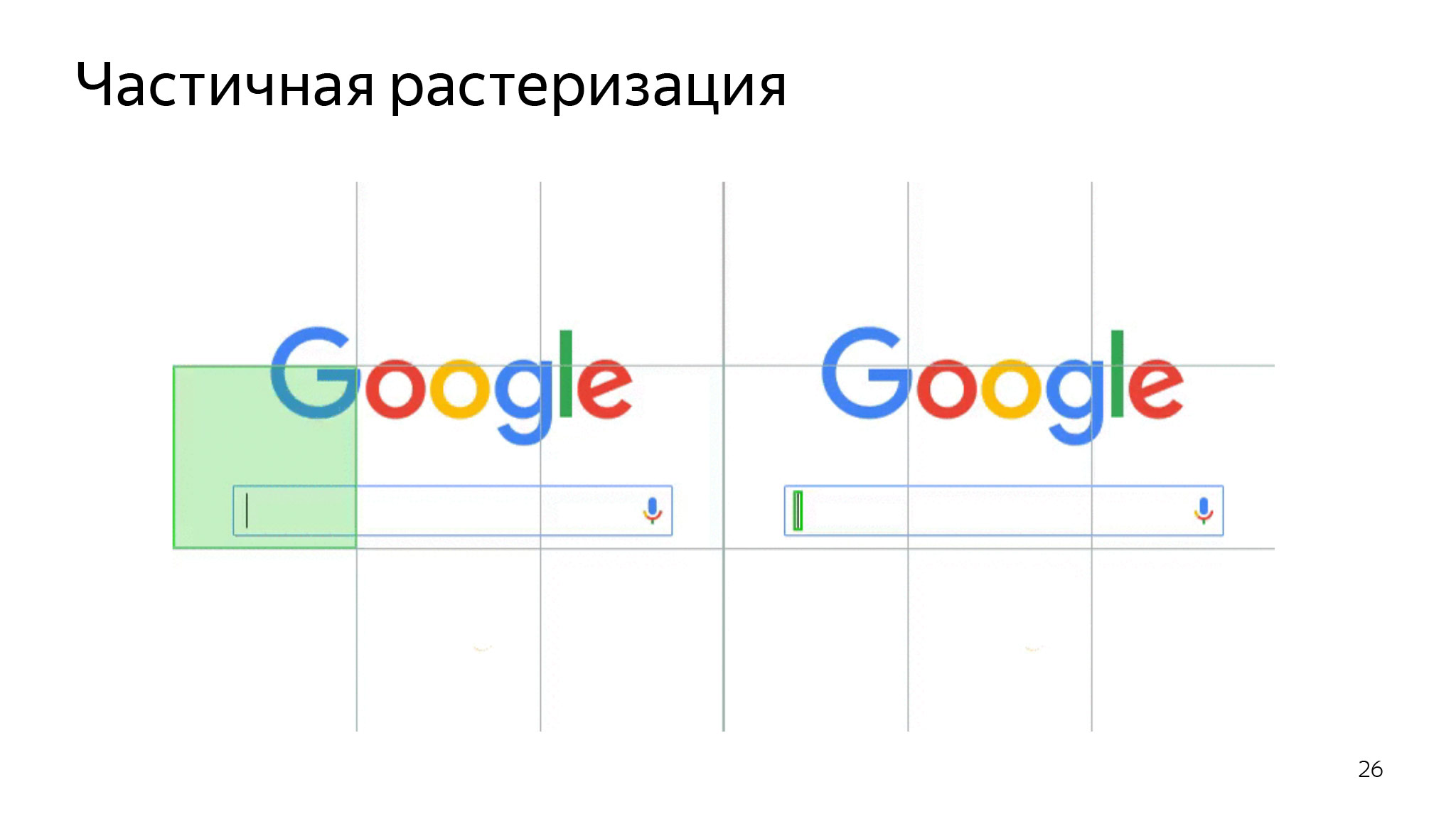

Several very successful optimizations were made at exactly this level. For example, Chromium shipped this one around 2017.

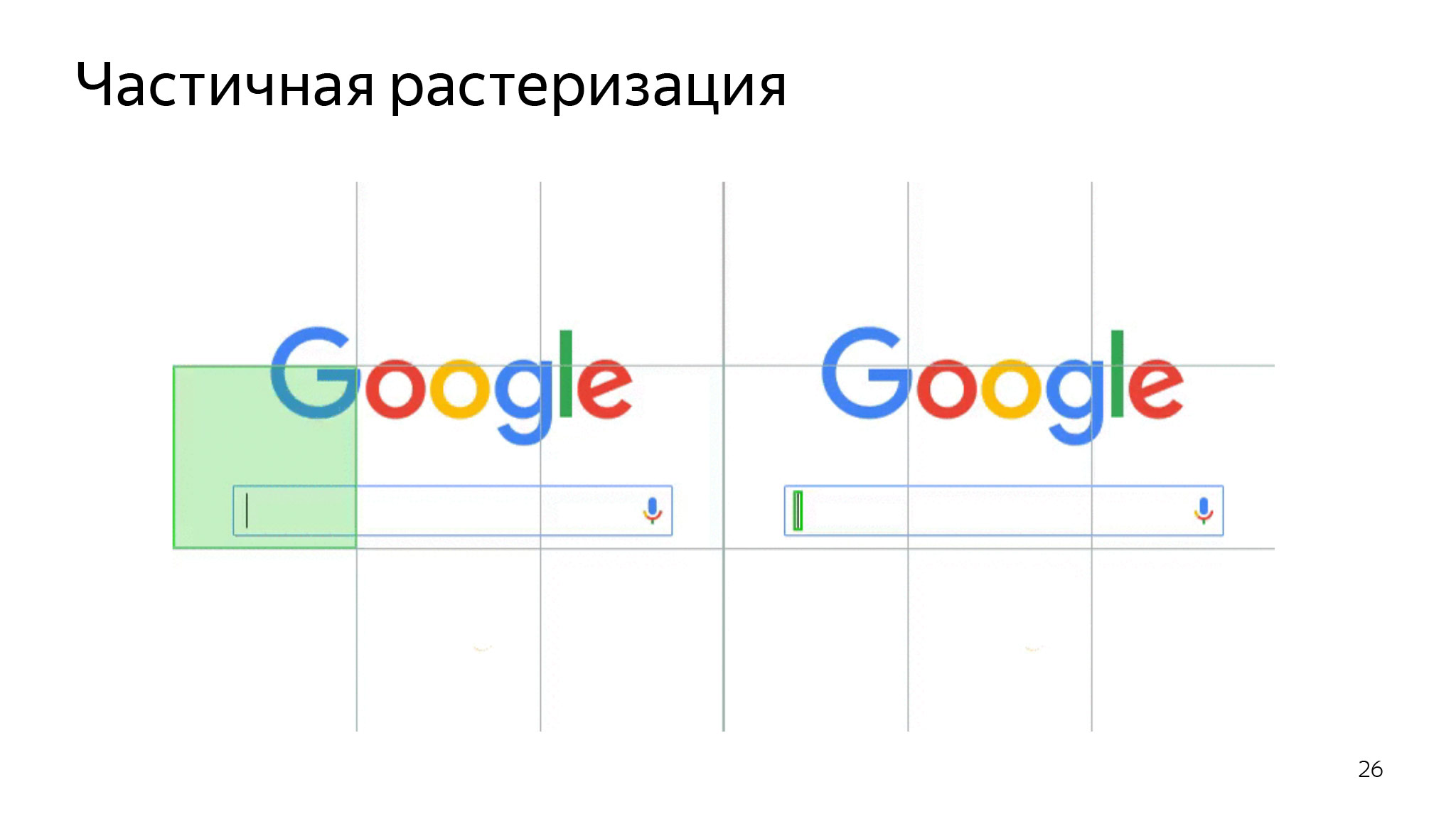

If only a small area needs redrawing, we redraw only that area. Here the cursor blinks, and we redraw just the cursor area rather than the whole tile. This saves a lot of CPU because we don't redraw everything.
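The saving is easy to quantify: only the intersection of the changed area with the tile is re-rasterized. A sketch with hypothetical names; the 2×20 px caret is an illustrative size, not a measured one.

```typescript
interface PxRect { x: number; y: number; w: number; h: number }

// Intersection of two rectangles, or null if they do not overlap.
function intersect(a: PxRect, b: PxRect): PxRect | null {
  const x = Math.max(a.x, b.x);
  const y = Math.max(a.y, b.y);
  const right = Math.min(a.x + a.w, b.x + b.w);
  const bottom = Math.min(a.y + a.h, b.y + b.h);
  return right > x && bottom > y ? { x, y, w: right - x, h: bottom - y } : null;
}

// Fraction of the tile's pixels that must be re-rasterized for a given damage rect.
function rasterFraction(tile: PxRect, damage: PxRect): number {
  const clip = intersect(tile, damage);
  return clip ? (clip.w * clip.h) / (tile.w * tile.h) : 0;
}
```

For a 2×20 px caret inside a 256×256 tile, `rasterFraction` gives 40 / 65 536, about 0.06% of the tile.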

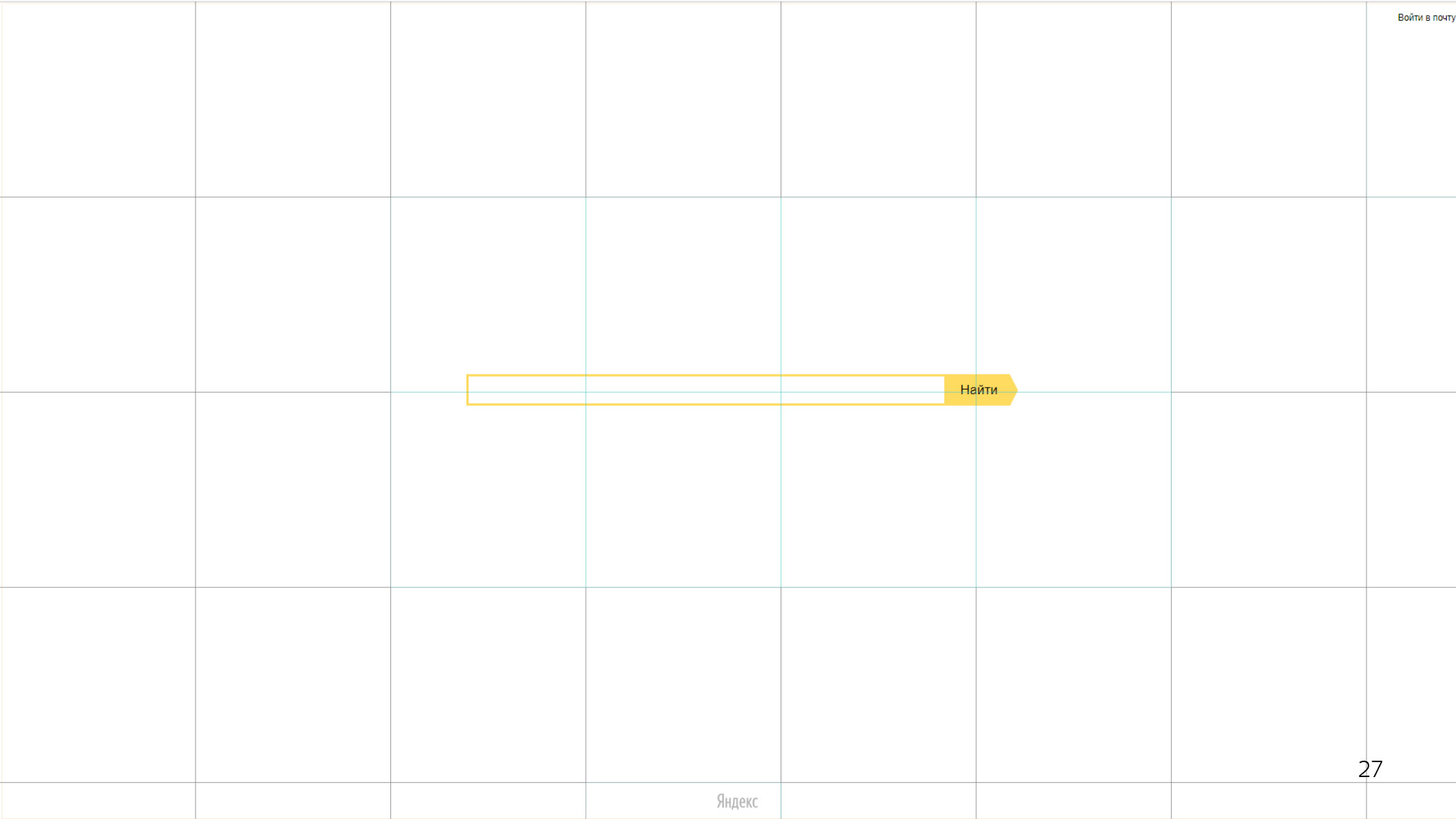

Tiles also help save memory. What is the problem here? Entire white rectangles. Imagine each of them as a 256 by 256 texture at four bytes per pixel, even though such an area can seemingly be described by just five numbers: coordinates, width, height, and color.

Chromium implemented a solid-color tile optimization. If the browser determines that a rectangle contains no drawing, that it is a single opaque color, and that it meets a few other conditions, it simply tells the GPU to draw a white rectangle instead of allocating a whole texture.
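A sketch of the idea, assuming an RGBA8 pixel buffer (4 bytes per pixel). As far as we know, Chromium makes this decision by analyzing the recorded paint operations rather than by scanning pixels; the scan here, and the `classifyTile` name, are only for illustration. The constant also spells out the memory arithmetic from the previous paragraph.

```typescript
const TILE_PX = 256;
const BYTES_PER_PIXEL = 4;
// 256 * 256 * 4 = 262,144 bytes (256 KiB) of texture per tile...
const TILE_TEXTURE_BYTES = TILE_PX * TILE_PX * BYTES_PER_PIXEL;

type TileDraw =
  | { kind: "solid"; rgba: number } // ...versus one quad: x, y, width, height, color
  | { kind: "texture"; bytes: number };

function classifyTile(pixels: Uint8Array): TileDraw {
  const r = pixels[0], g = pixels[1], b = pixels[2], a = pixels[3];
  let solid = a === 255; // only fully opaque tiles qualify
  for (let i = 0; solid && i < pixels.length; i += BYTES_PER_PIXEL) {
    solid = pixels[i] === r && pixels[i + 1] === g && pixels[i + 2] === b && pixels[i + 3] === a;
  }
  return solid
    ? { kind: "solid", rgba: ((r << 24) | (g << 16) | (b << 8) | a) >>> 0 }
    : { kind: "texture", bytes: pixels.length };
}
```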

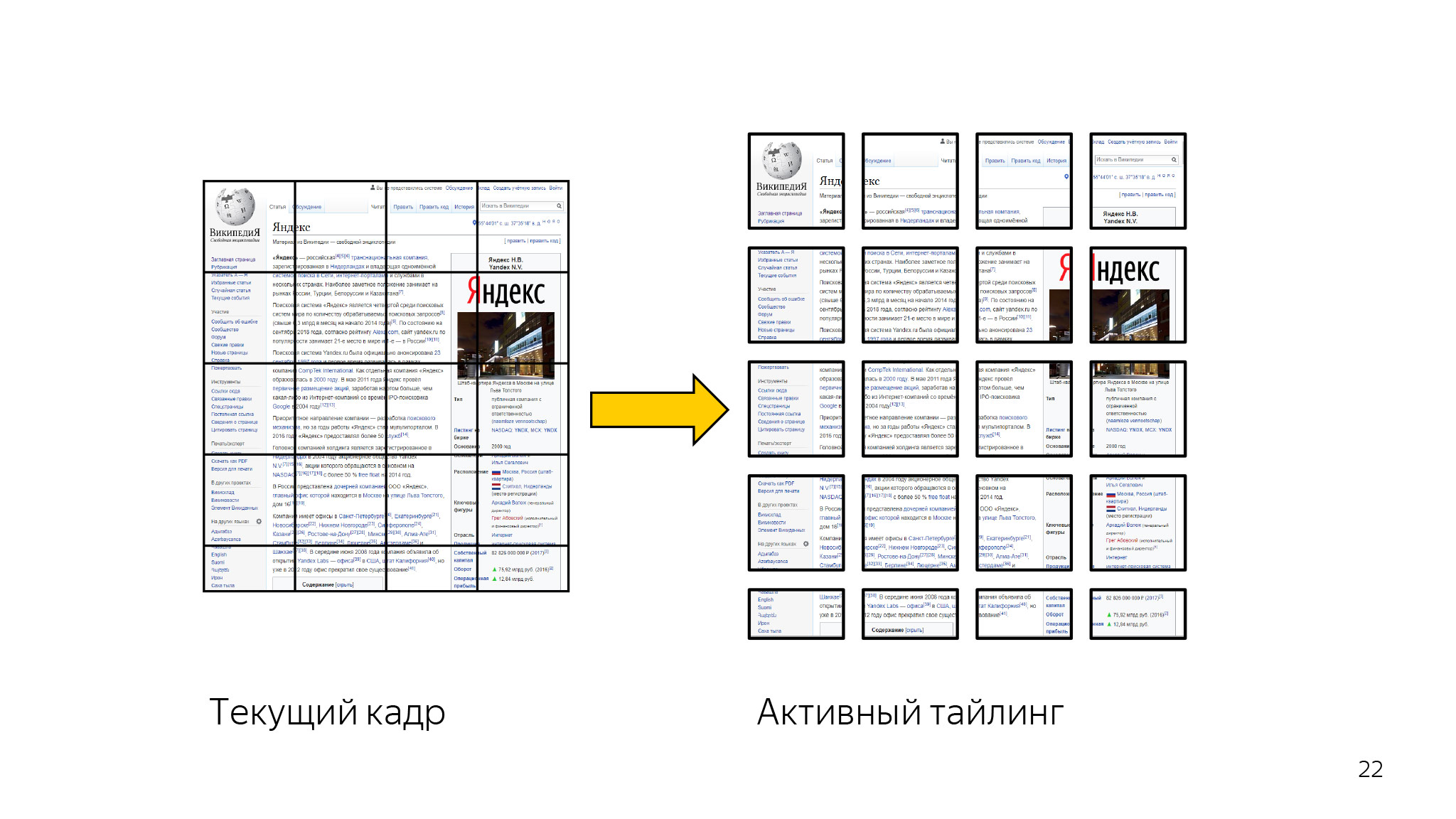

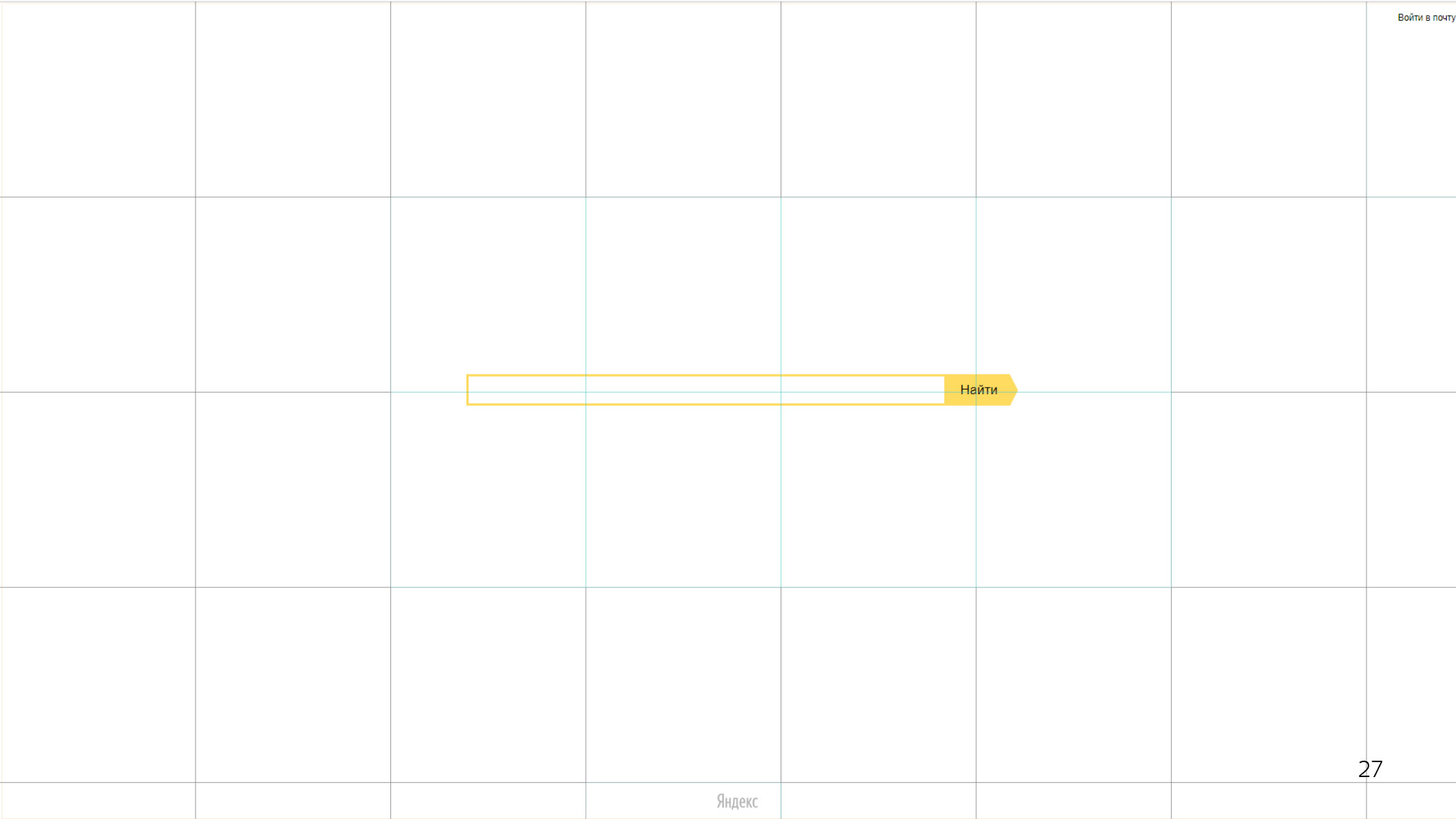

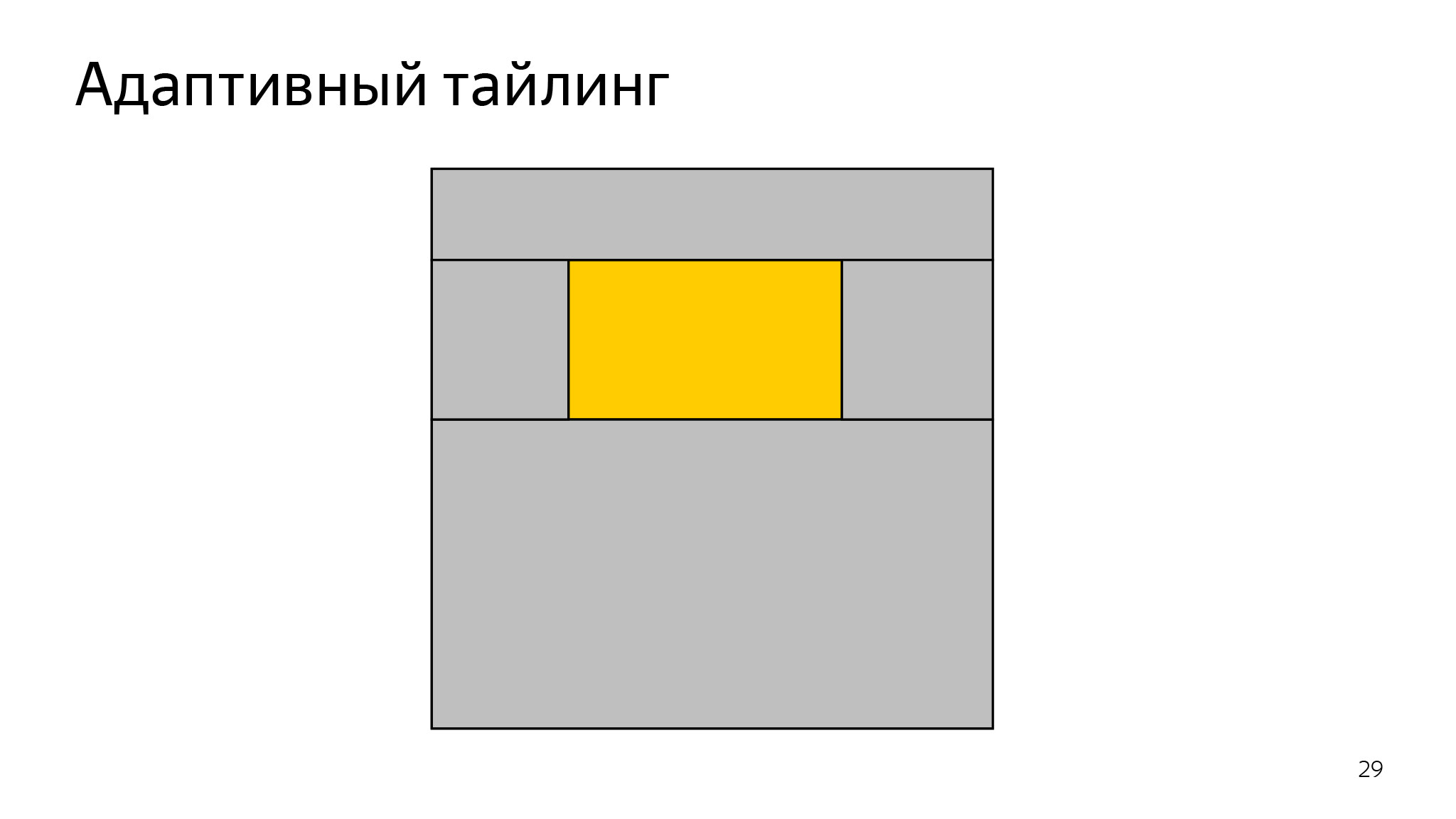

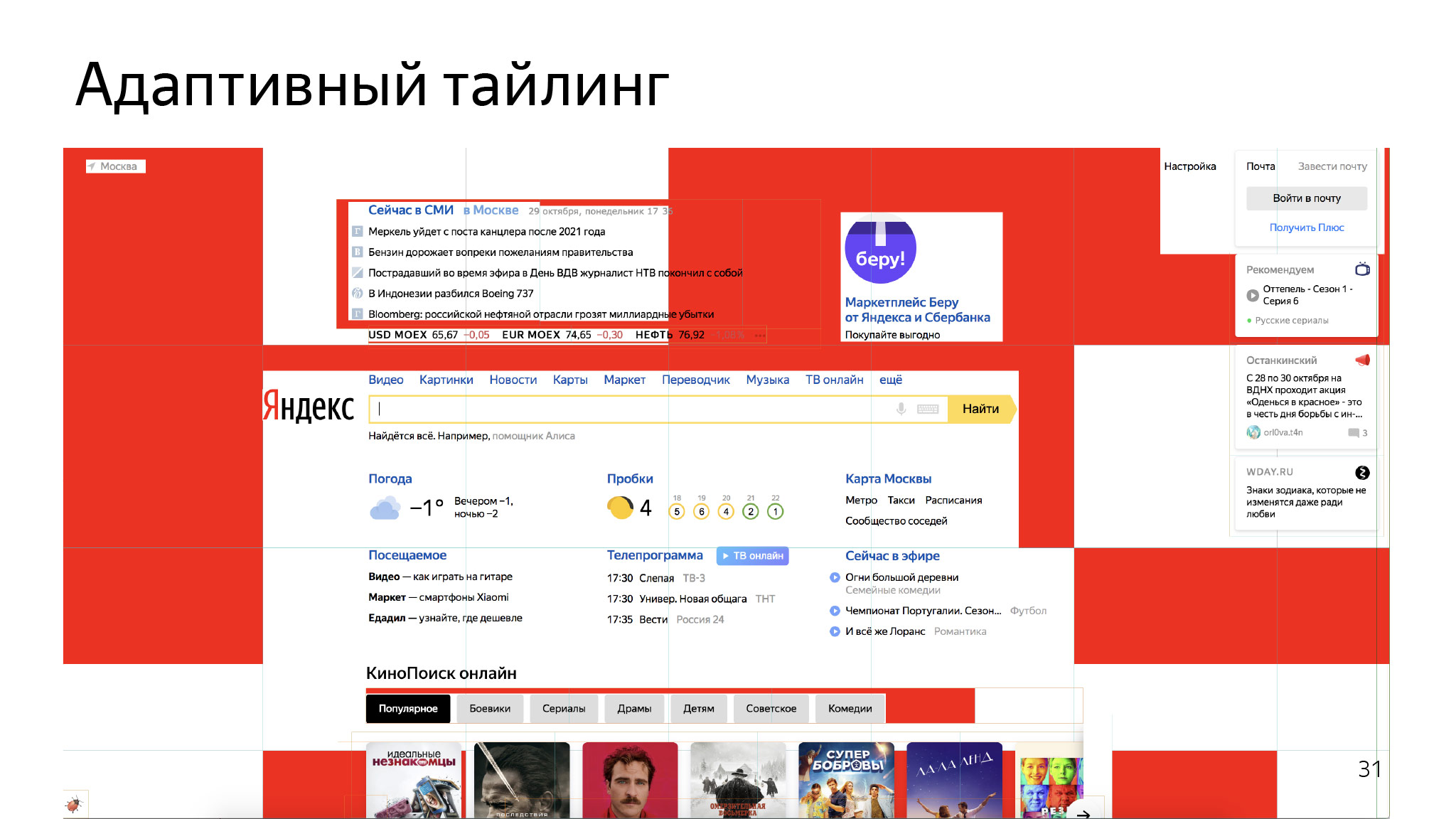

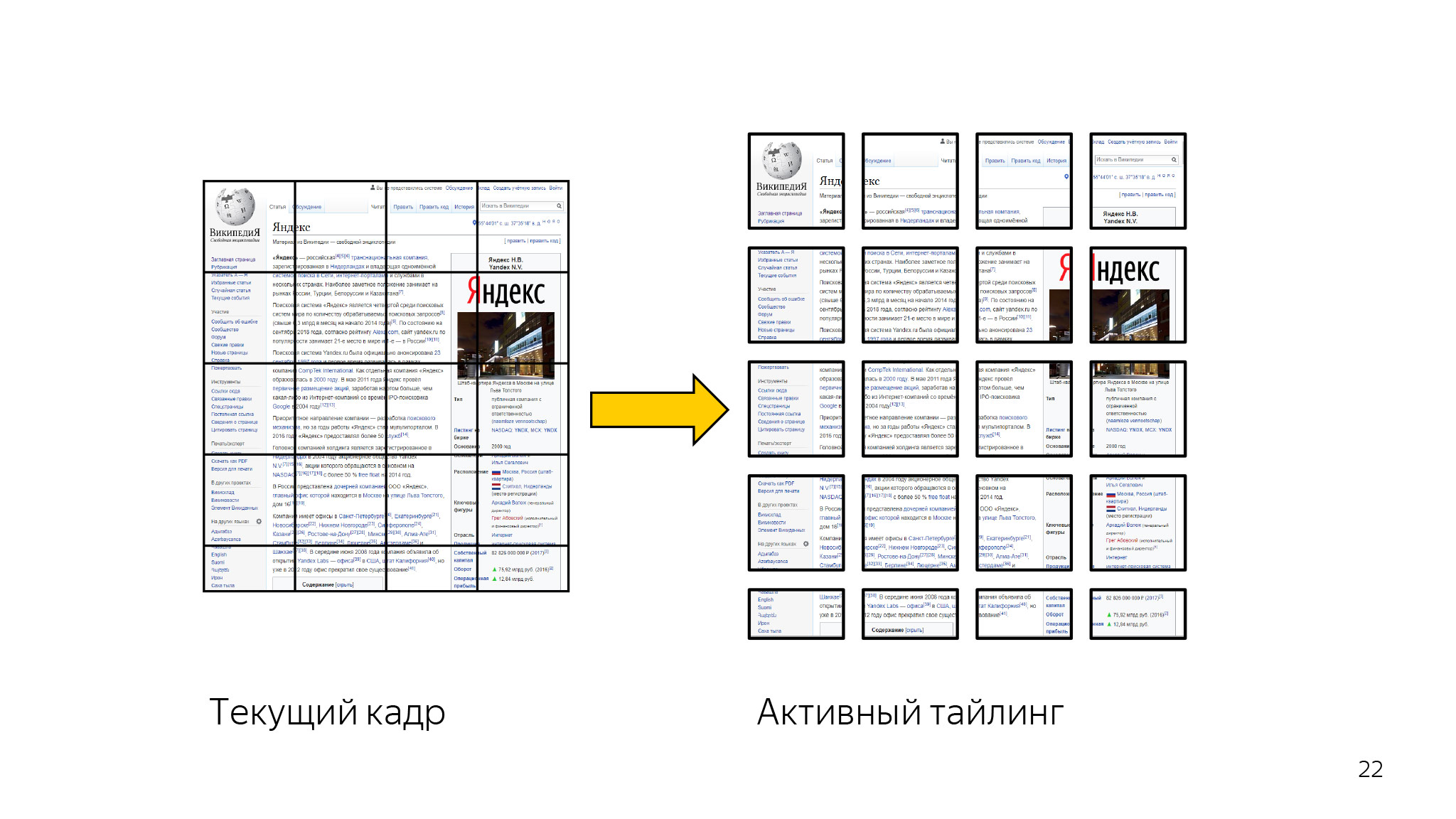

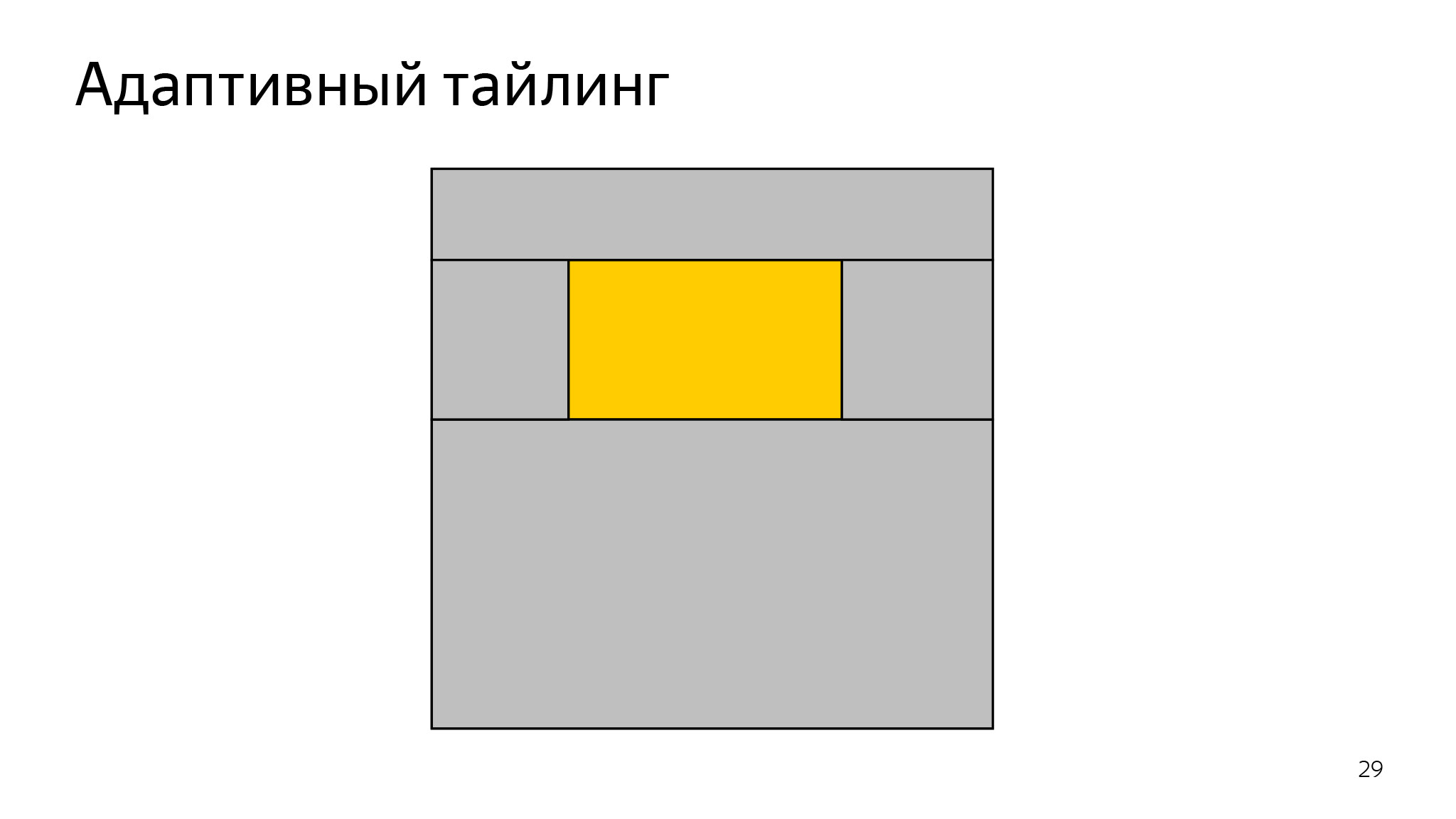

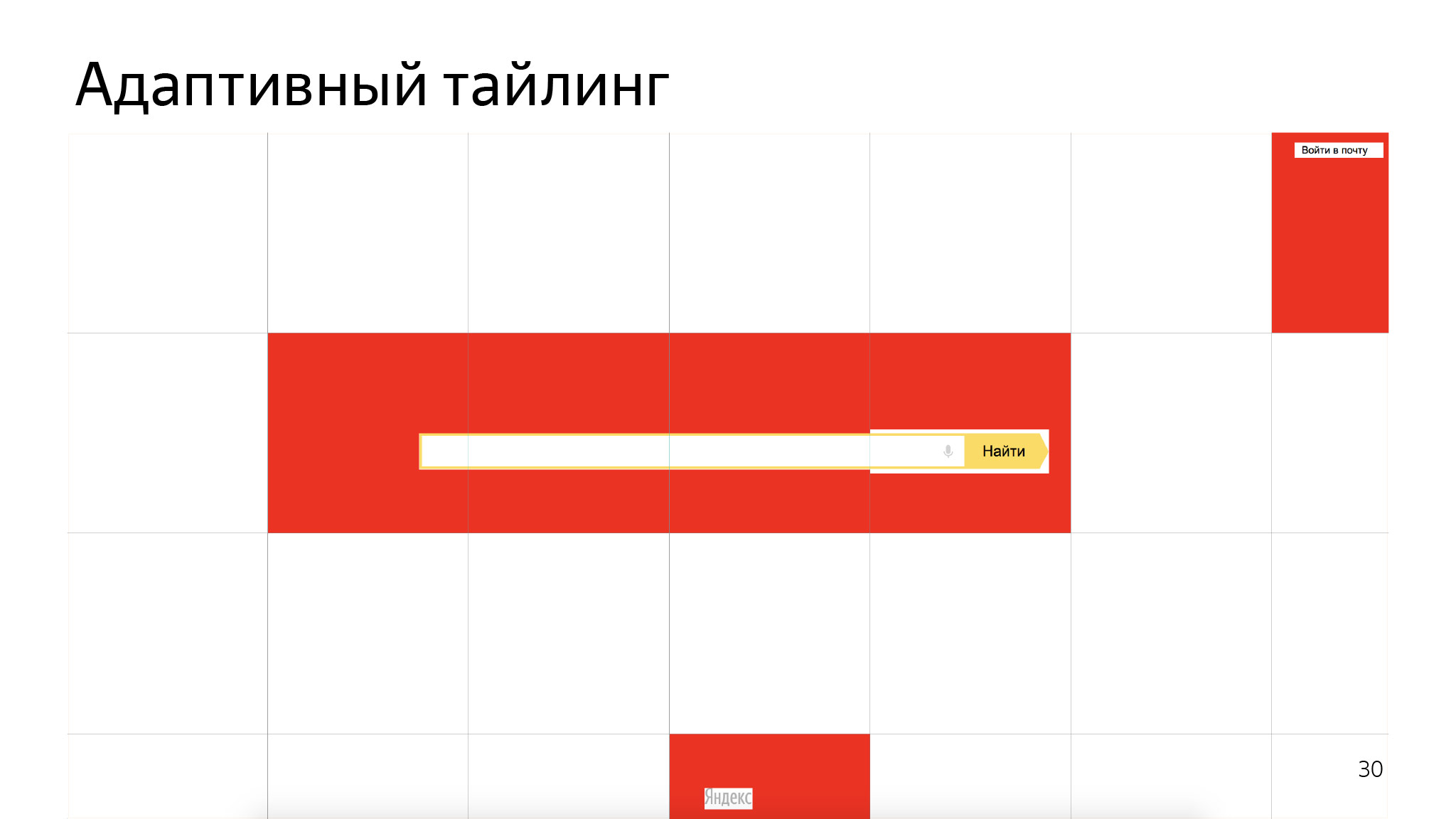

What else can be optimized? The remaining tiles contain a little content and a huge white area. In Yandex.Browser we thought about this and built a mechanism we called adaptive tiling.

Take one small rectangle, a tile, with some content in the middle. We find that content and allocate a texture only for it. The rest of the tile is split into several areas, for which we tell the GPU: just fill an area of this size with white.
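A sketch of the adaptive-tiling idea, assuming we can test each pixel for "is background". It finds the bounding box of the content, allocates a texture only for that box, and covers the rest of the tile with up to four solid rectangles. This illustrates the concept only; it is not Yandex.Browser's implementation, and all names are hypothetical.

```typescript
interface Area { x: number; y: number; w: number; h: number }

interface AdaptiveTile {
  texture: Area | null; // region that needs a real texture
  solids: Area[]; // regions drawn as plain background-colored quads
}

function splitTile(size: number, isBackground: (x: number, y: number) => boolean): AdaptiveTile {
  let minX = size, minY = size, maxX = -1, maxY = -1;
  for (let y = 0; y < size; y++) {
    for (let x = 0; x < size; x++) {
      if (!isBackground(x, y)) {
        minX = Math.min(minX, x); maxX = Math.max(maxX, x);
        minY = Math.min(minY, y); maxY = Math.max(maxY, y);
      }
    }
  }
  // No content at all: the whole tile is one solid rectangle.
  if (maxX < 0) return { texture: null, solids: [{ x: 0, y: 0, w: size, h: size }] };
  const box: Area = { x: minX, y: minY, w: maxX - minX + 1, h: maxY - minY + 1 };
  const candidates: Area[] = [
    { x: 0, y: 0, w: size, h: box.y }, // band above the content
    { x: 0, y: box.y + box.h, w: size, h: size - (box.y + box.h) }, // band below
    { x: 0, y: box.y, w: box.x, h: box.h }, // left of the content
    { x: box.x + box.w, y: box.y, w: size - (box.x + box.w), h: box.h }, // right of it
  ];
  return { texture: box, solids: candidates.filter((a) => a.w > 0 && a.h > 0) };
}
```

For a 40×10 px piece of text in a 256×256 tile, the texture shrinks from 262,144 bytes to 40 × 10 × 4 = 1,600 bytes, while four quads cover the rest.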

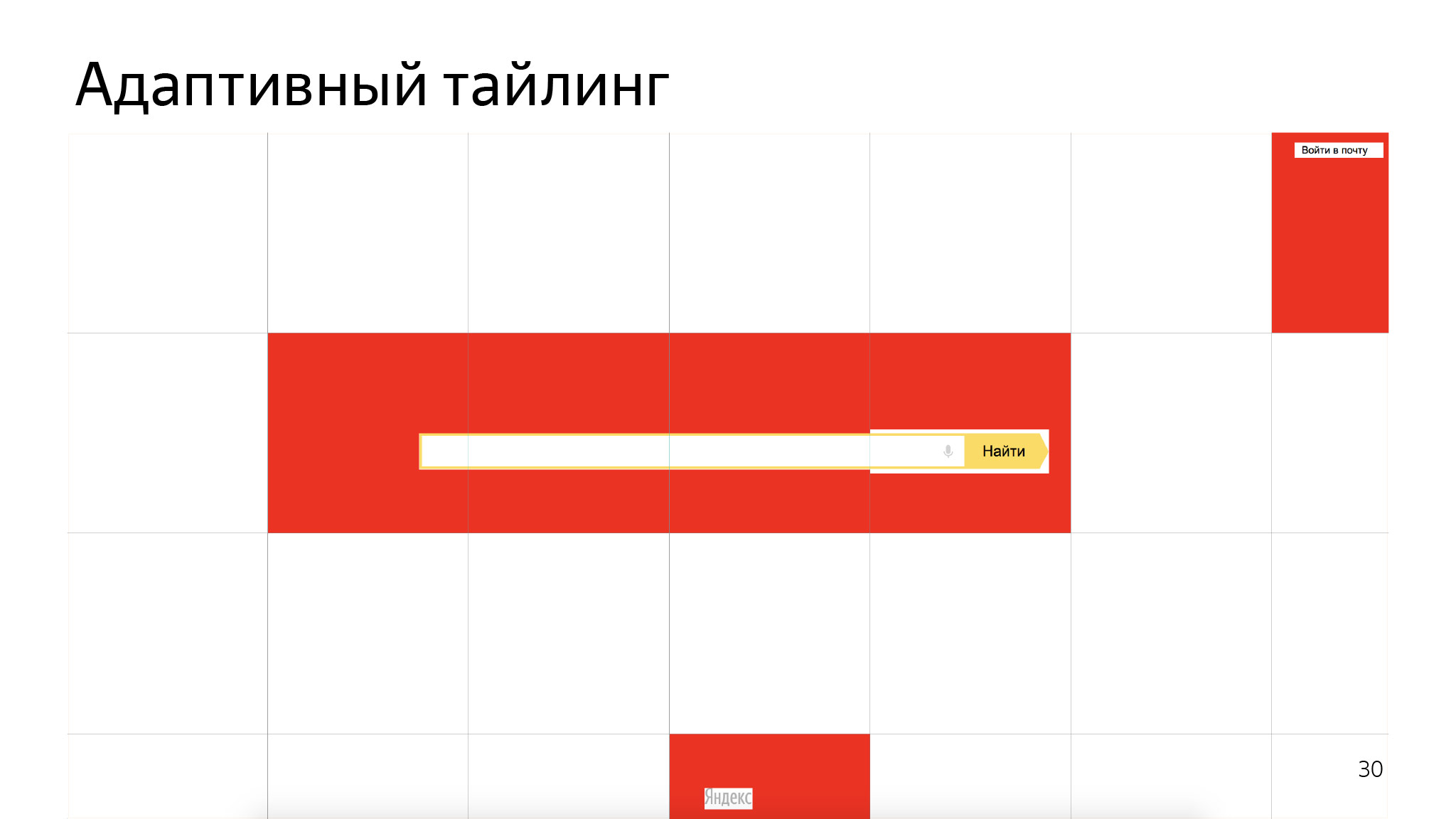

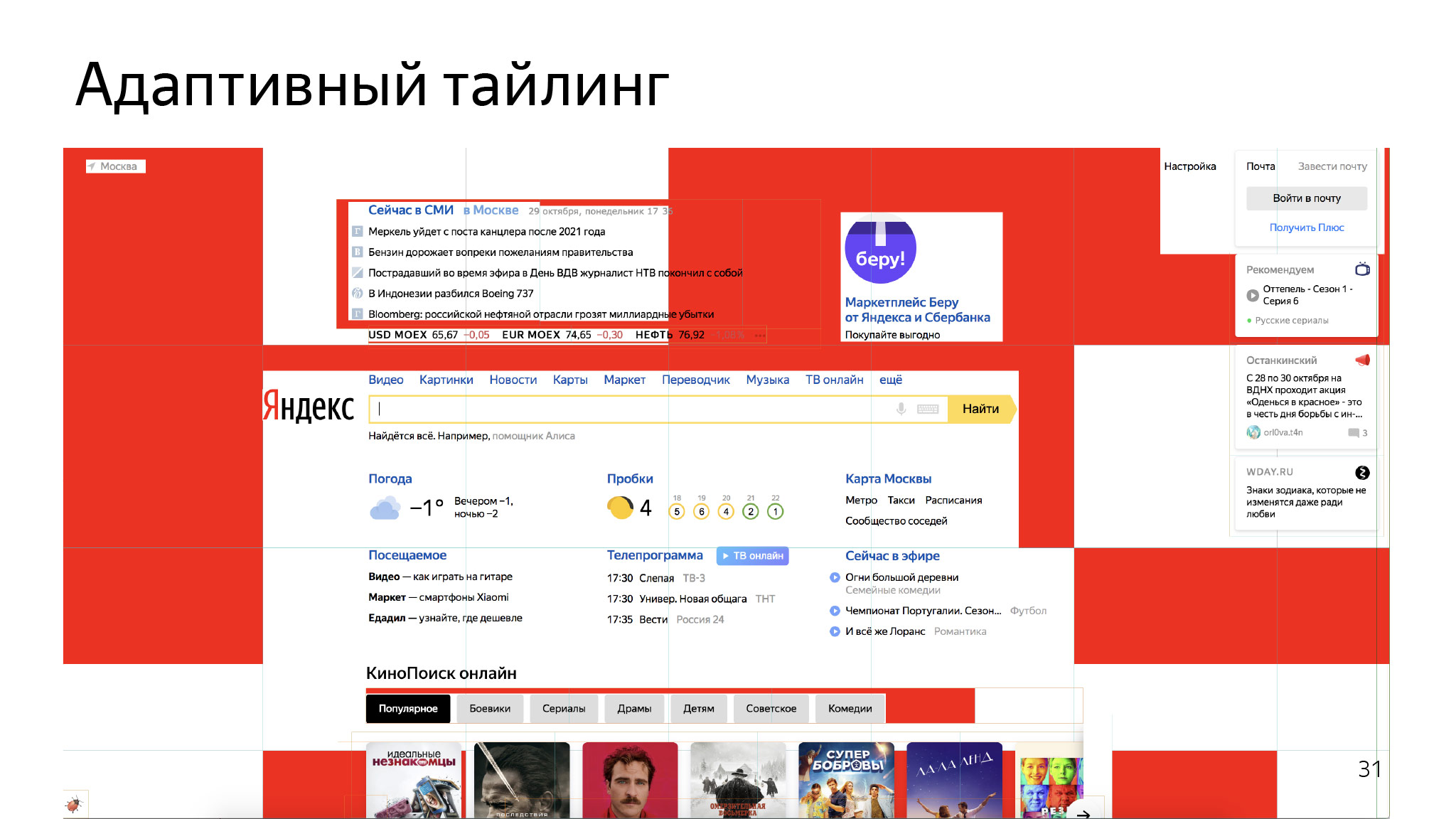

On this page, everything highlighted in red is now saved. On more complex pages it looks something like this.

It is important to understand that a page still has a bunch of layers, and each of them is drawn this way, so some memory can be saved on every layer. This approach saved about 40% of video memory on average across all users.

More hardcore! We saved a little memory and “broke” the web a bit; why not “break” it further?

Chromium has a policy along these lines: if the user does not use background tabs and has left them, they don't need them. If we are out of memory and the browser is about to crash, let's take the oldest tab the user hasn't touched for a long time and kill it. It stays in the tab strip, but its process is gone and all of its JS dies. Is that OK or not? It is strange to ask this at a frontend gathering and expect any answer other than “What are you talking about?”.

It did not go down well. Here are real comments from the Chromium blog: you broke all my apps, I had some game going and, boom, its state is gone. It is important to understand that not even the unload handler ran; it was as if we had simply pulled the plug on the tab. When the user returns to it, we reload it from the network as if nothing had happened.
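The core of such a policy is easy to state in code. A sketch, assuming a hypothetical `TabInfo` record; real browsers weigh more signals than recency (for example, whether a tab is playing audio), so this shows only the least-recently-used part of the idea.

```typescript
interface TabInfo {
  id: number;
  lastActiveMs: number; // when the user last looked at the tab
  visible: boolean;
  discarded: boolean;
}

// Under memory pressure, pick the background tab the user touched longest ago.
function pickTabToDiscard(tabs: readonly TabInfo[]): TabInfo | undefined {
  let victim: TabInfo | undefined;
  for (const tab of tabs) {
    if (tab.visible || tab.discarded) continue; // never the tab in front of the user
    if (!victim || tab.lastActiveMs < victim.lastActiveMs) victim = tab;
  }
  return victim;
}
```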

This approach was then temporarily abandoned in favor of a more carefully thought-out idea. They called it discarding.

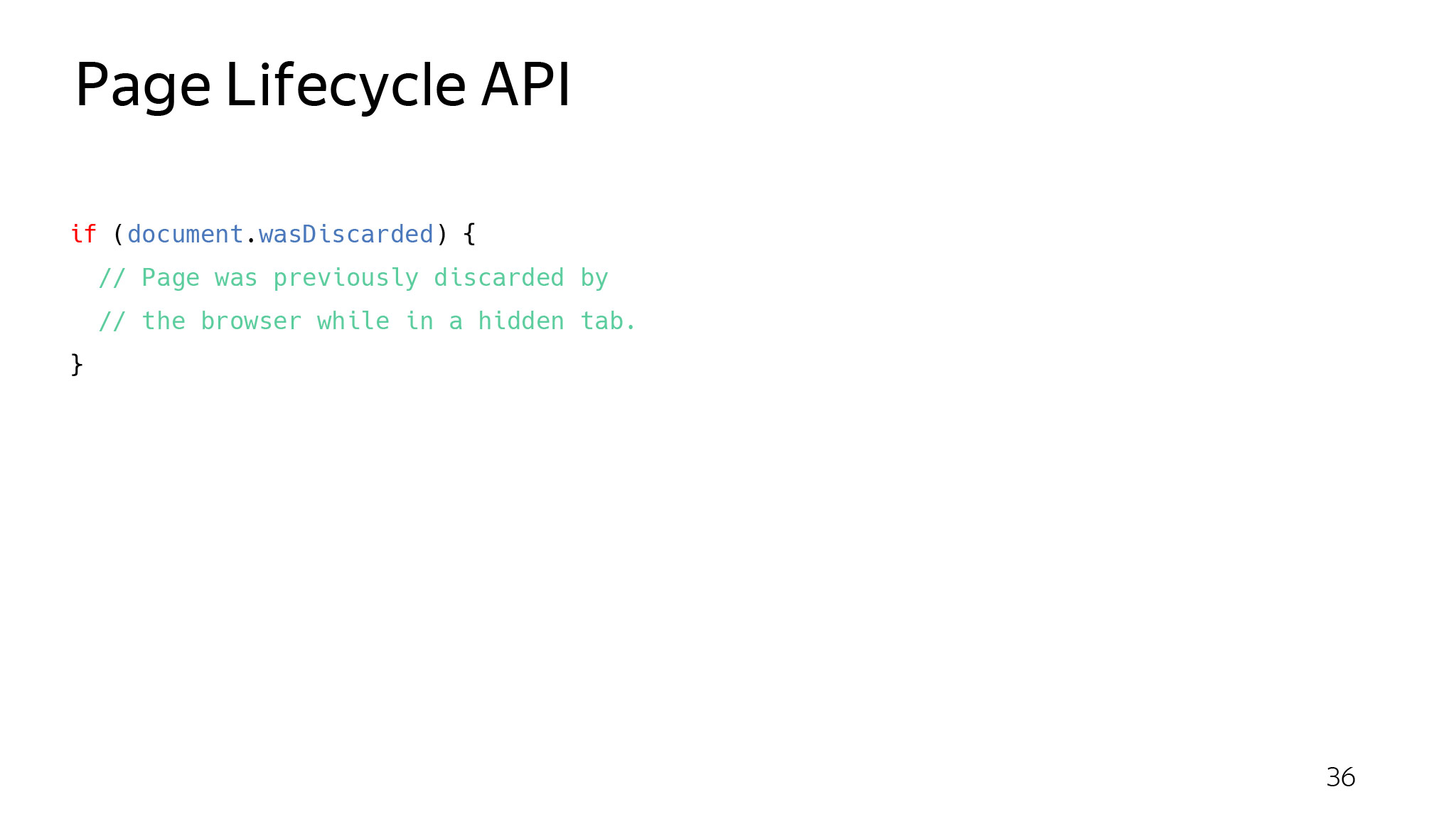

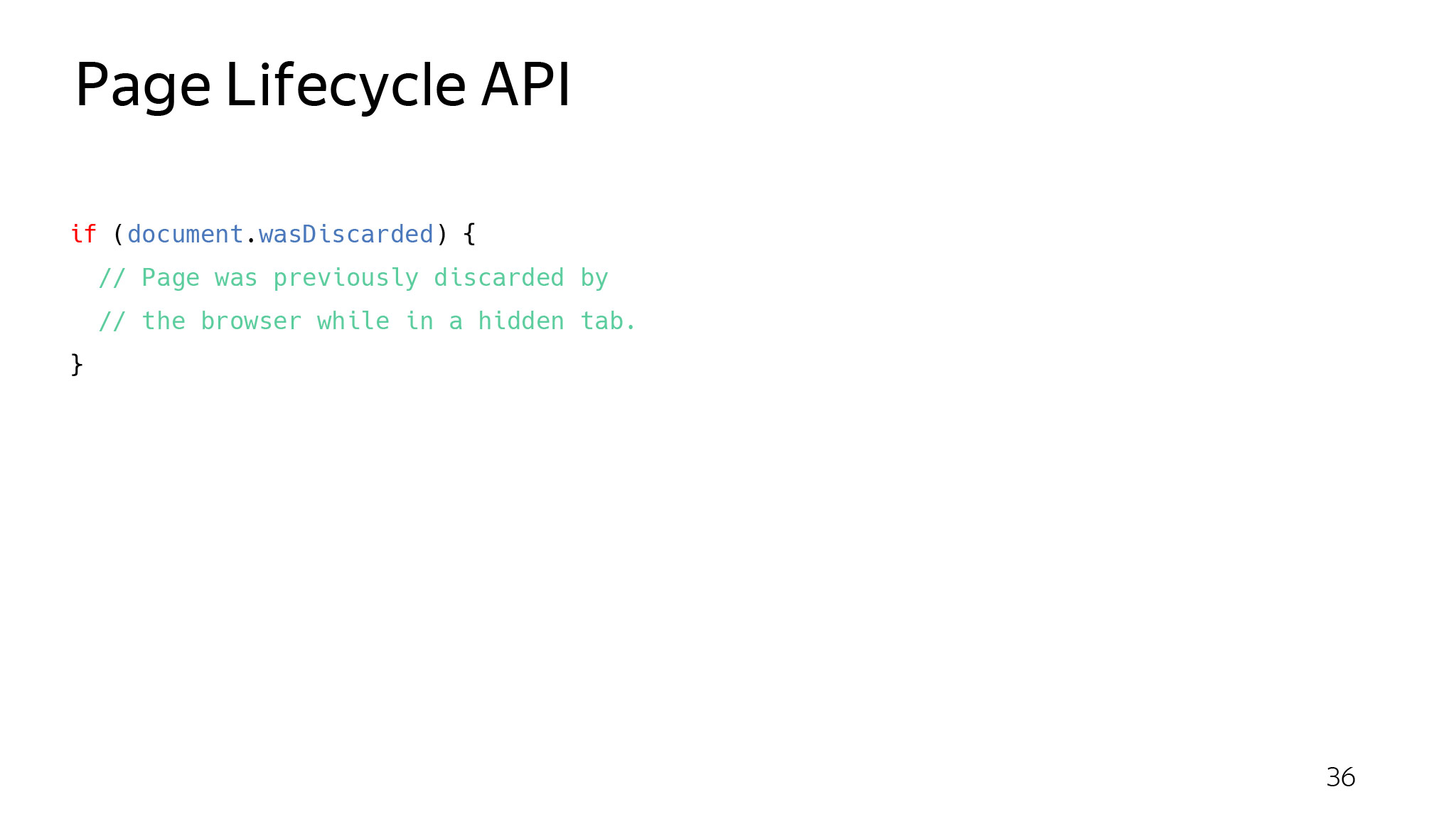

What's the idea? It is still killing tabs, only in a controlled way. It goes by the buzzword Page Lifecycle API. If the user has not looked at a tab for a long time, the tab can move to the frozen state. The browser announces this with an event: I am about to freeze you. After the event has been handled, nothing at all will run. So do what you need to do and get ready.

From the frozen state, a tab can either leave via the resume event, as if nothing had happened, or, if the browser really needs to free memory right now, simply be killed. But if the user goes back to that tab, we reload it and set the wasDiscarded field on the document.

You can already subscribe to these events today and handle them. If the tab really was killed, check the wasDiscarded field: it means the page was reloaded after a discard, and you can restore its previous state.
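A minimal sketch of handling these events. The `freeze` and `resume` events and `document.wasDiscarded` come from the Page Lifecycle API; the storage key, the state shape, and `installLifecycleHandlers` are hypothetical. `doc` and `store` are narrowed to small interfaces so the sketch runs outside a browser; in a page you would pass `document` and `sessionStorage`. Persisting state when the page becomes hidden as well is a more defensive choice.

```typescript
interface LifecycleDoc {
  readonly wasDiscarded?: boolean; // set by the browser after a discard and reload
  addEventListener(type: "freeze" | "resume", listener: () => void): void;
}

interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

const STATE_KEY = "app-state"; // hypothetical storage key

// Wires up the lifecycle events; returns previously saved state if the page
// was reloaded after a discard, otherwise undefined.
function installLifecycleHandlers<S>(
  doc: LifecycleDoc,
  store: KeyValueStore,
  getState: () => S,
  onResume: () => void,
): S | undefined {
  // "freeze": last chance to persist state; the tab may later be discarded silently.
  doc.addEventListener("freeze", () => store.setItem(STATE_KEY, JSON.stringify(getState())));
  // "resume": the page was unfrozen in place and nothing was lost.
  doc.addEventListener("resume", onResume);
  if (doc.wasDiscarded) {
    const saved = store.getItem(STATE_KEY);
    if (saved !== null) return JSON.parse(saved) as S;
  }
  return undefined;
}
```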

In Yandex.Browser, a few years ago we thought: why not take a complex, radical approach? We called it Hibernate.

What's the idea? There are several tabs running some JS and holding some state. Each tab gets a separate process: a video may be playing in one, and you may have typed something into a form in another. Hibernate kicks in, and the processes are gone; we have unloaded them all. But if you switch back to these tabs, the process comes back with the entire state intact: the video continues playing from the right moment, and all the text in the fields stays in place.

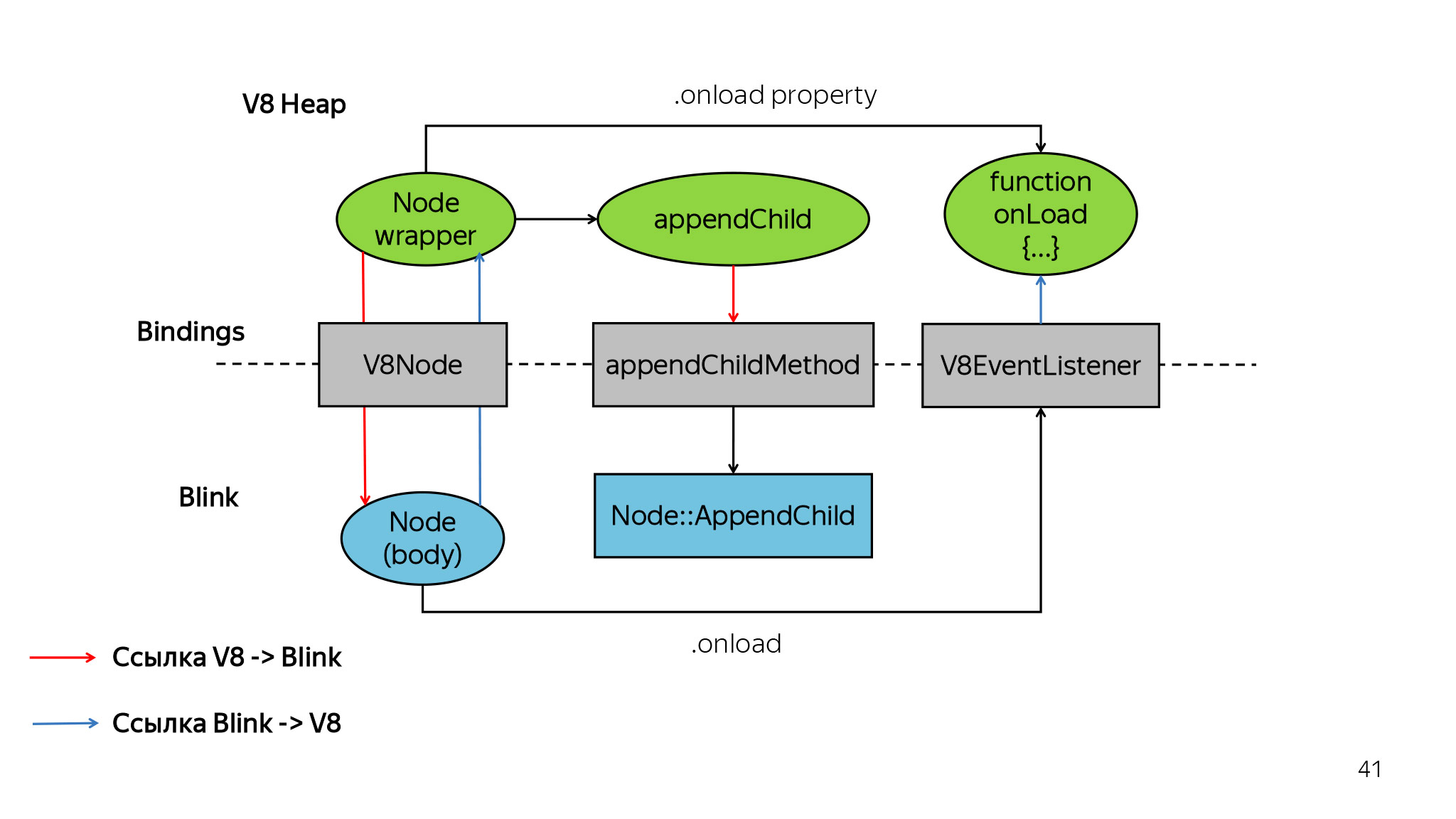

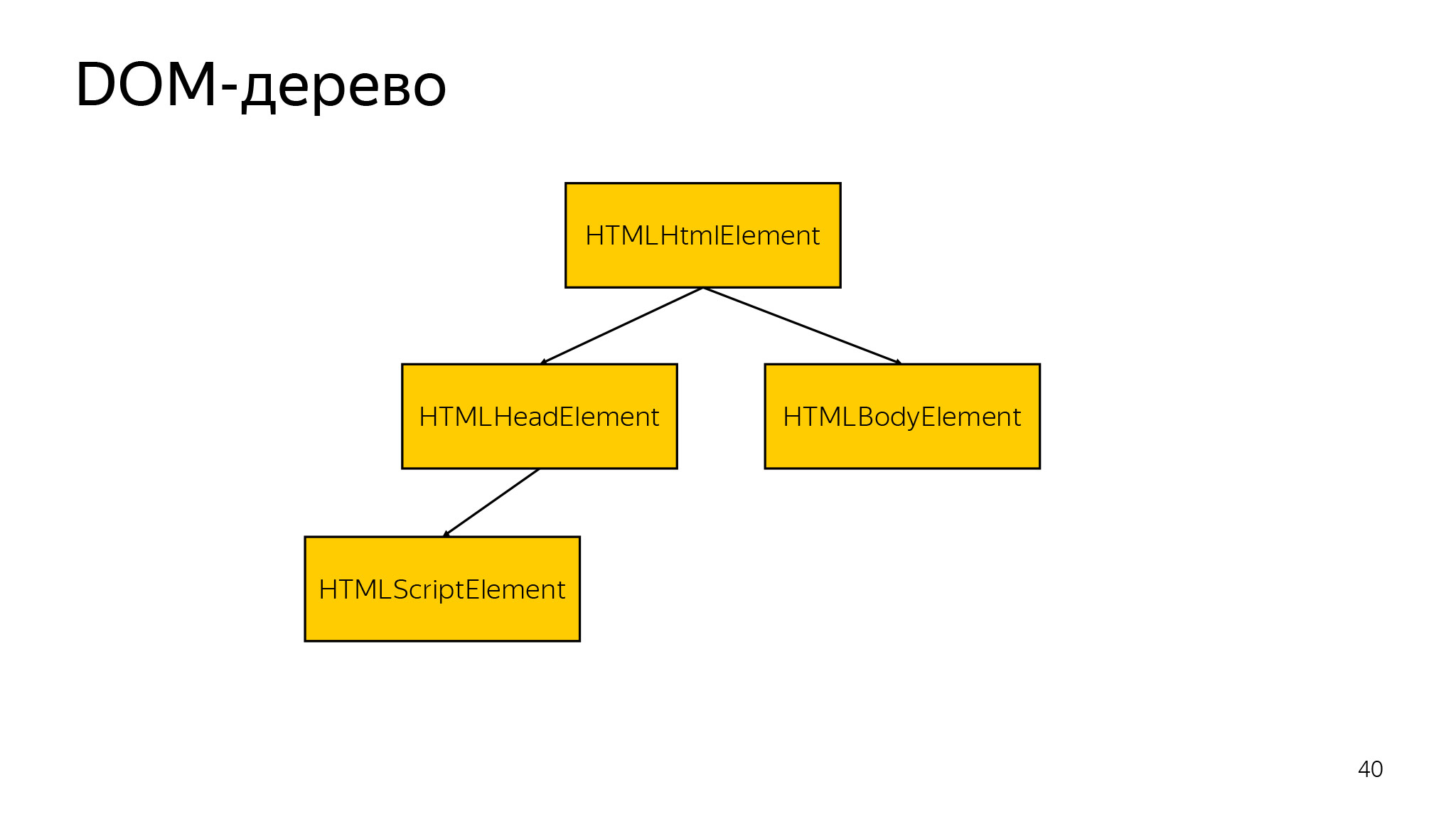

How did we do it? Three key things live inside each renderer: V8, where all the JS runs; Blink, where the whole DOM is stored; and a layer of browser bindings that ties everything together, including tabs and so on.

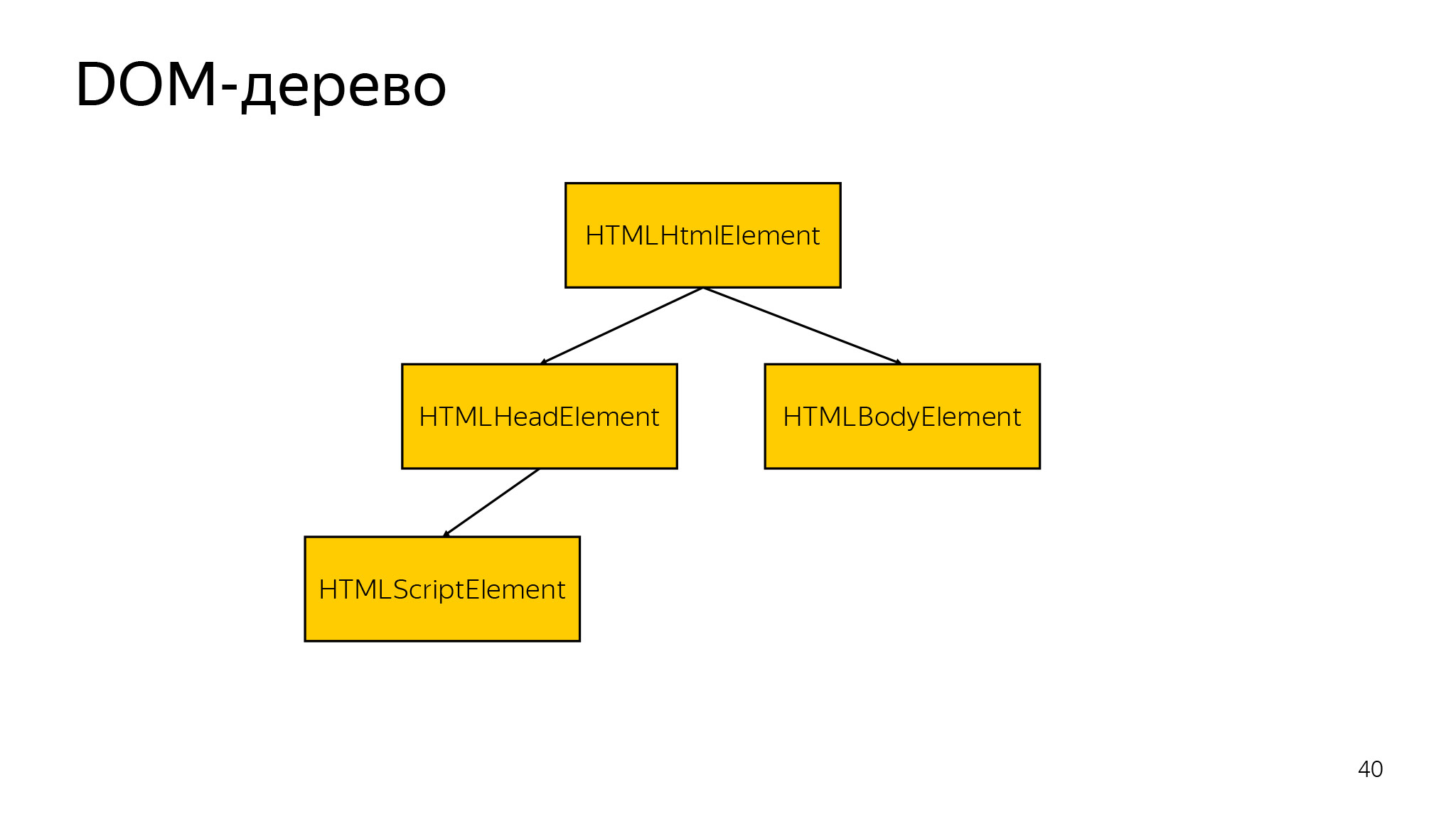

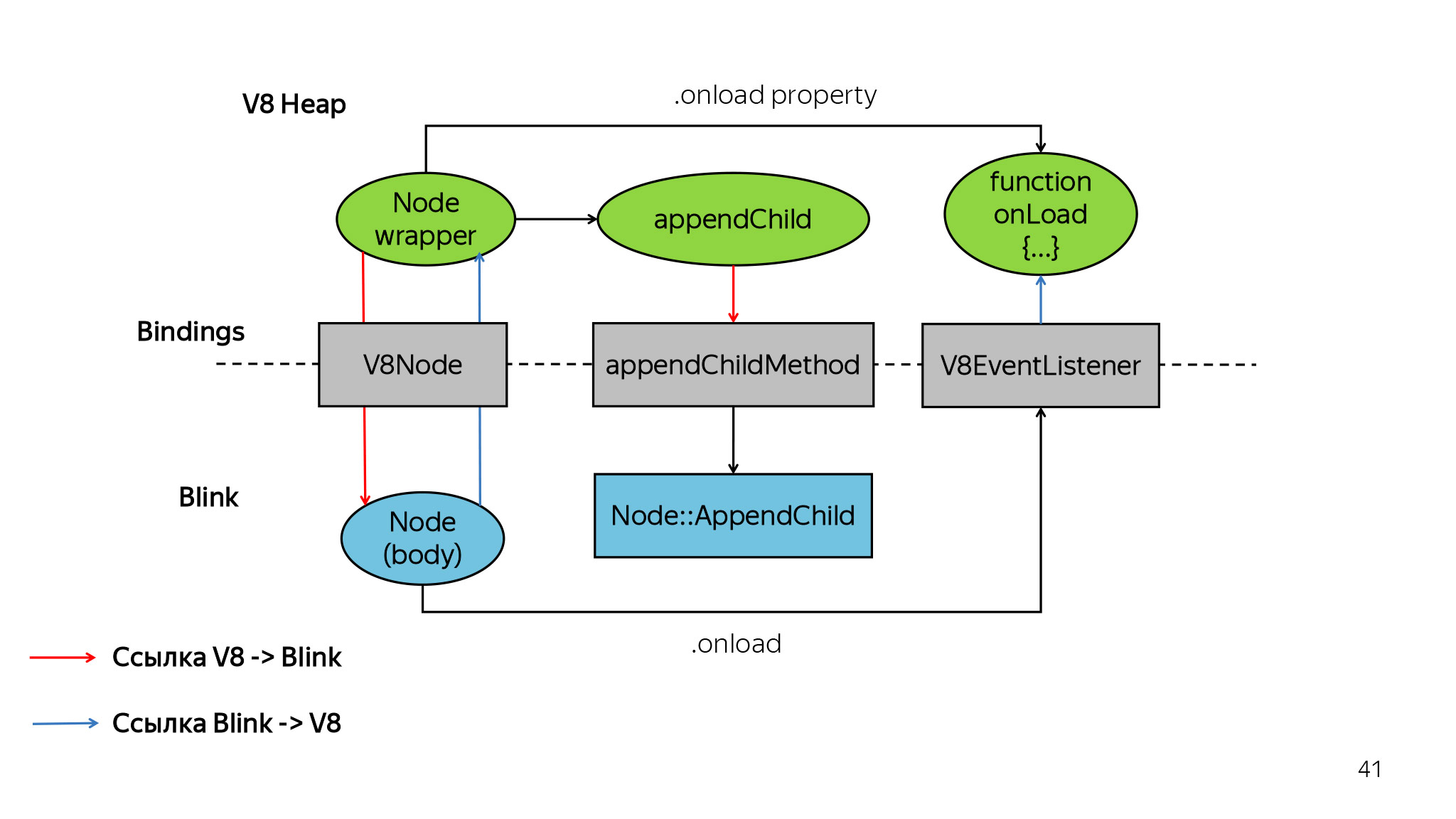

Consider a simple example: we wait for onload and add a new div element to the DOM tree. For the browser, it looks something like this.

Naturally, there is a DOM tree with its fields and associated objects, and there is this entity as well.

V8 stores the state of each node, and these nodes are linked to Blink objects through the bindings layer. What did we do? We took the V8 serializer, serialized the entire V8 state, found all the related objects in Blink, and wrote our own serializers that save the entire DOM tree. The result is compressed, encrypted, and written to disk. And we taught the browser to restore a page from such a snapshot: when the user returns to the tab, we decrypt and decompress the snapshot, fully restore the page, and show it to the user. (There is a separate post about Hibernate. Ed.)
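A toy analogue of one step described above: script state that points at DOM nodes (like a variable holding the div added on load) must be re-linked to the restored nodes. This is NOT how Yandex.Browser does it, since the real work happens on V8 and Blink internals in C++ and the snapshot is also compressed and encrypted; the node shape and all names here are hypothetical.

```typescript
interface ToyNode { id: number; tag: string; value?: string; children: ToyNode[] }

// JS-side state holding a reference into the DOM.
interface ScriptState { counter: number; highlighted: ToyNode | null }

interface Snapshot { dom: ToyNode; script: { counter: number; highlightedId: number | null } }

function hibernate(dom: ToyNode, script: ScriptState): string {
  const snap: Snapshot = {
    dom,
    // Replace the object reference with a stable node id so it survives serialization.
    script: { counter: script.counter, highlightedId: script.highlighted?.id ?? null },
  };
  return JSON.stringify(snap); // a real implementation would also compress and encrypt this
}

function findById(node: ToyNode, id: number): ToyNode | null {
  if (node.id === id) return node;
  for (const child of node.children) {
    const hit = findById(child, id);
    if (hit) return hit;
  }
  return null;
}

function wake(serialized: string): { dom: ToyNode; script: ScriptState } {
  const snap = JSON.parse(serialized) as Snapshot;
  const id = snap.script.highlightedId;
  // Re-link the script's reference to the restored node object.
  const highlighted = id === null ? null : findById(snap.dom, id);
  return { dom: snap.dom, script: { counter: snap.script.counter, highlighted } };
}
```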

Hibernate has now shipped to everyone in the stable channel. On average it saves one or two tabs per user: at least one tab is always hibernated, sometimes two. This saves memory for users with more than 10 tabs, like you and me, though we are not representative.

I have told you how the browser tries to help, but each of you can also do something to speed up your site and improve its performance. You can start today.

First, you need to find out whether you have memory problems.

There are certain symptoms: the site starts to degrade, or freezes appear. This usually means the garbage collector has kicked in, the whole world is stopped, and nothing is drawn. Or the site just slows down continuously; that happens too.

To find out whether there is a problem, look at what happens to JS memory.

If it jumps sharply back and forth or grows continuously, that is a bad sign.
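A crude heuristic for "memory keeps growing" can be sketched over periodic heap-size samples, for example values read from DevTools or from Chrome's non-standard `performance.memory.usedJSHeapSize`. The threshold and the `looksLikeLeak` name are assumptions, not a standard metric.

```typescript
// Flags steady growth: mostly rising steps plus a net increase over the window.
function looksLikeLeak(samplesBytes: readonly number[], minRiseRatio = 0.8): boolean {
  if (samplesBytes.length < 3) return false; // too few samples to judge
  let rises = 0;
  for (let i = 1; i < samplesBytes.length; i++) {
    if (samplesBytes[i] > samplesBytes[i - 1]) rises++;
  }
  const first = samplesBytes[0];
  const last = samplesBytes[samplesBytes.length - 1];
  return last > first && rises / (samplesBytes.length - 1) >= minRiseRatio;
}
```

A sawtooth that keeps returning to the same level (frequent garbage collection) is not flagged here; it points to allocation churn rather than a leak.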

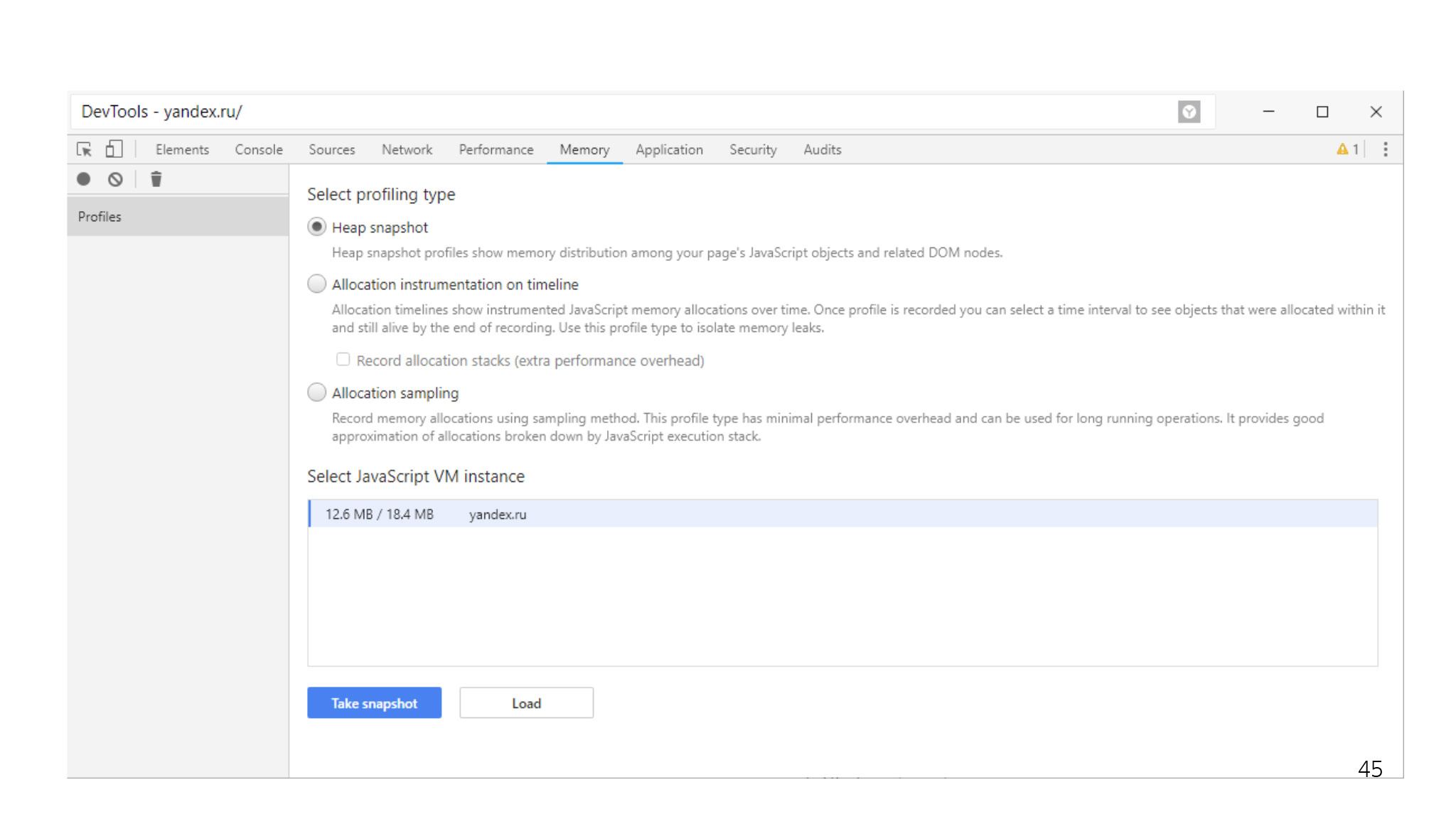

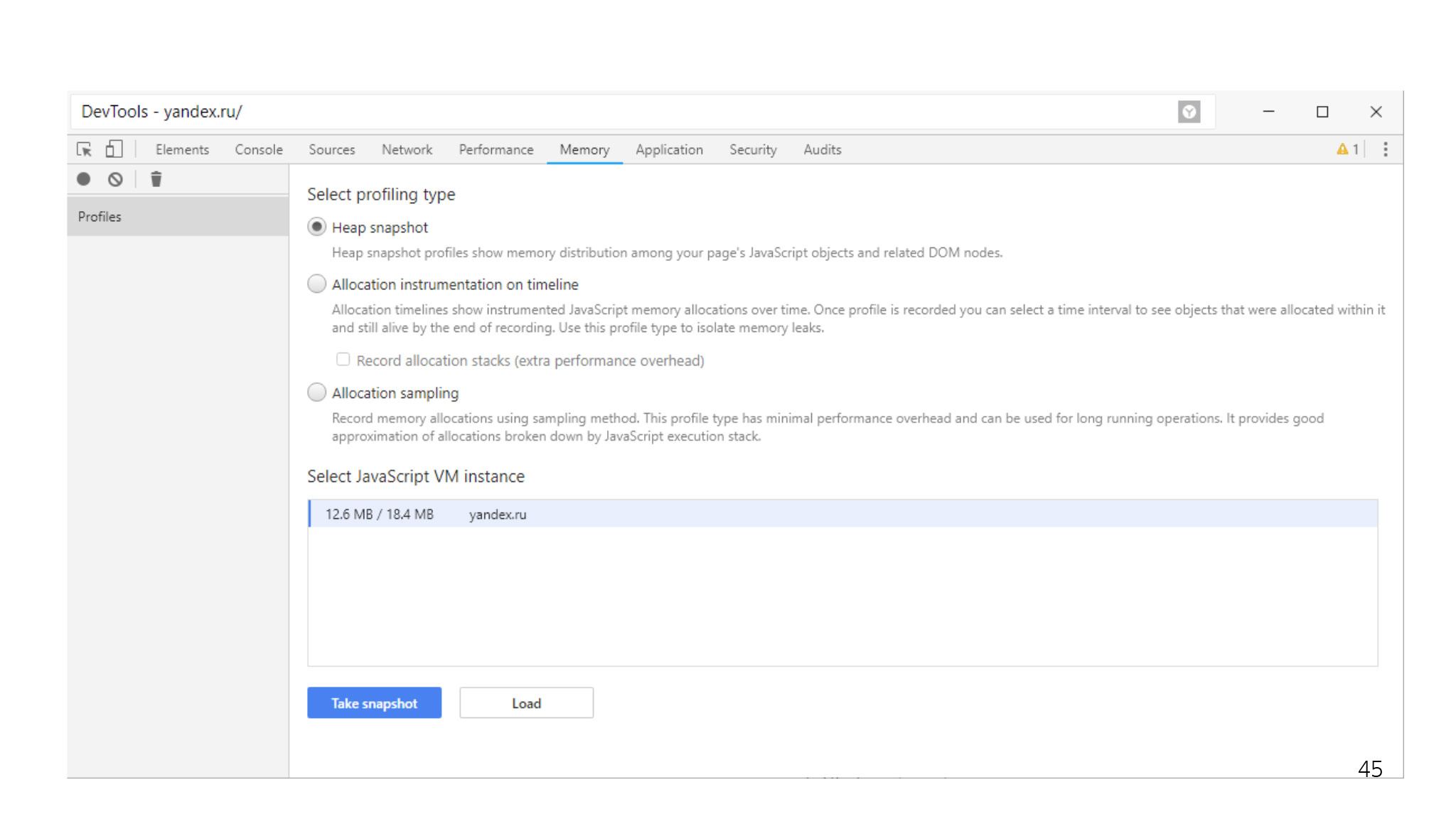

In that case, you can always take a heap snapshot in DevTools. Has anyone ever dealt with leaks in JS? In case you didn't know, it is quite possible to leak memory in JS.

For example, you have a global variable to which you add nodes that are not inserted into the tree. You forget about them, and then it turns out they are eating hundreds of kilobytes or even megabytes.

Strictly speaking, it is not always clear what to do with such detached nodes. Sometimes code pulls them out of the tree and inserts them back later, because they usually carry some state, such as an image. Either way, this is how you can find and fix the problem.
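A sketch of the leak pattern and a safer alternative. `El` is a hypothetical stand-in for a DOM element (holding, say, a decoded image) so the sketch runs outside a browser. In a Chrome heap snapshot, real nodes retained this way show up as "Detached" elements.

```typescript
interface El { tag: string; payload: Uint8Array }

// Leaky: a module-level array keeps every element ever created, even after it
// was removed from (or never inserted into) the document, so the GC cannot free it.
const createdElements: El[] = [];
function createLeaky(tag: string): El {
  const el = { tag, payload: new Uint8Array(100_000) };
  createdElements.push(el);
  return el;
}

// Safer: keep per-element metadata in a WeakMap. The entry does not keep the
// element alive; once nothing else references the element, both can be collected.
const createdAt = new WeakMap<El, number>();
function createTracked(tag: string): El {
  const el = { tag, payload: new Uint8Array(100_000) };
  createdAt.set(el, Date.now());
  return el;
}
```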

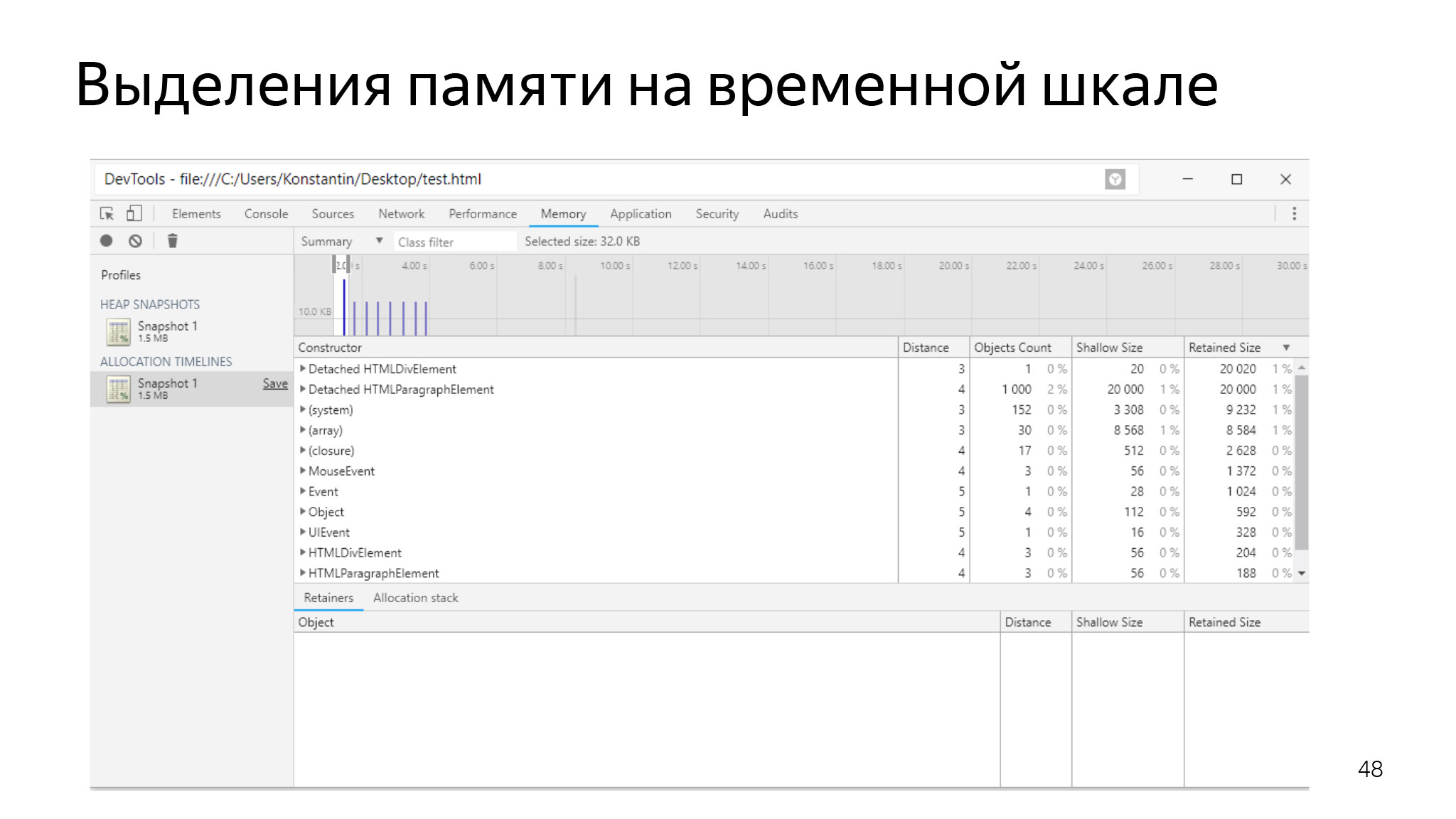

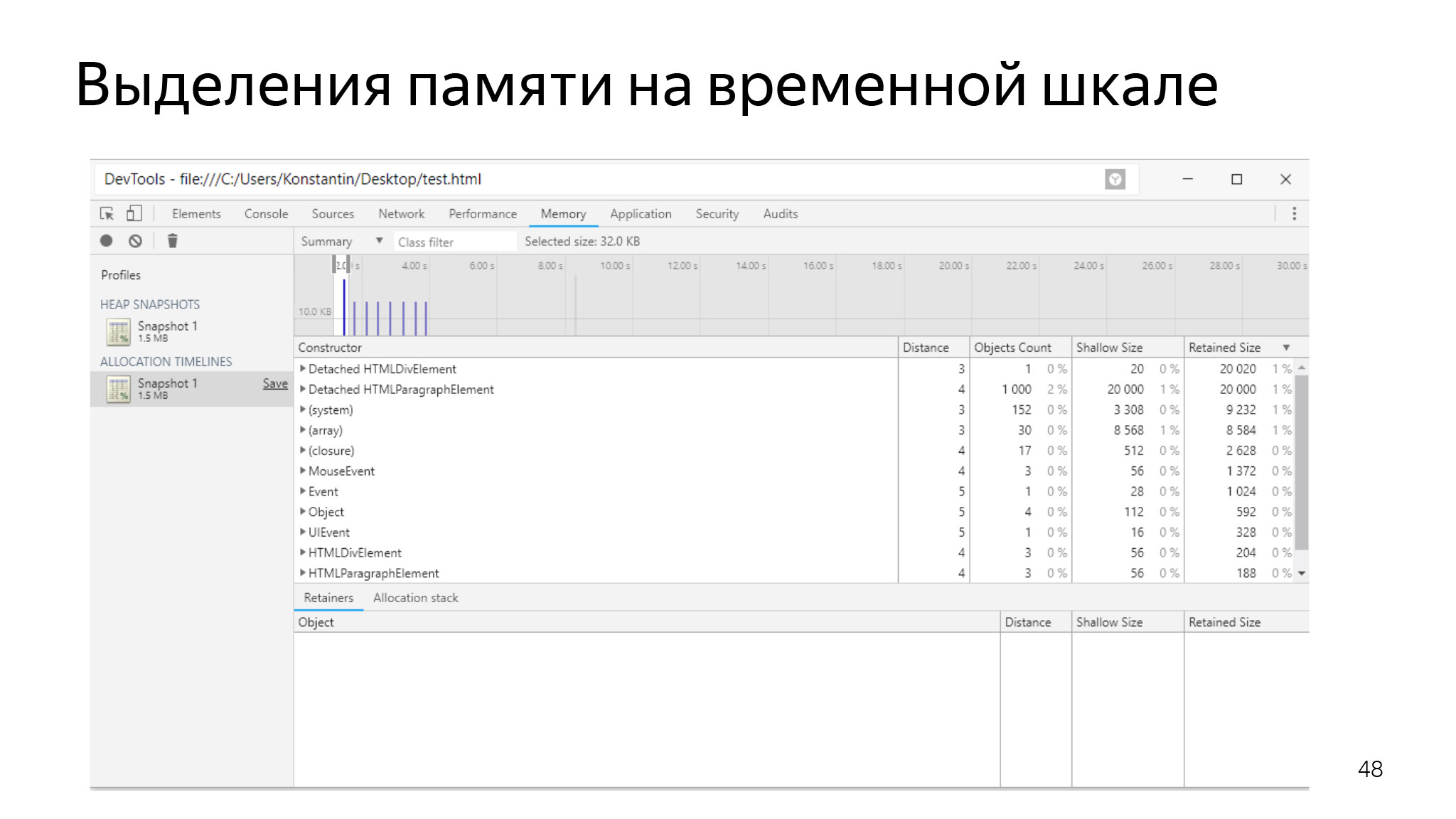

You can also look at memory allocations on the timeline. If allocations keep happening systematically and nothing is ever freed, that is also a bad sign, as I think everyone understands.

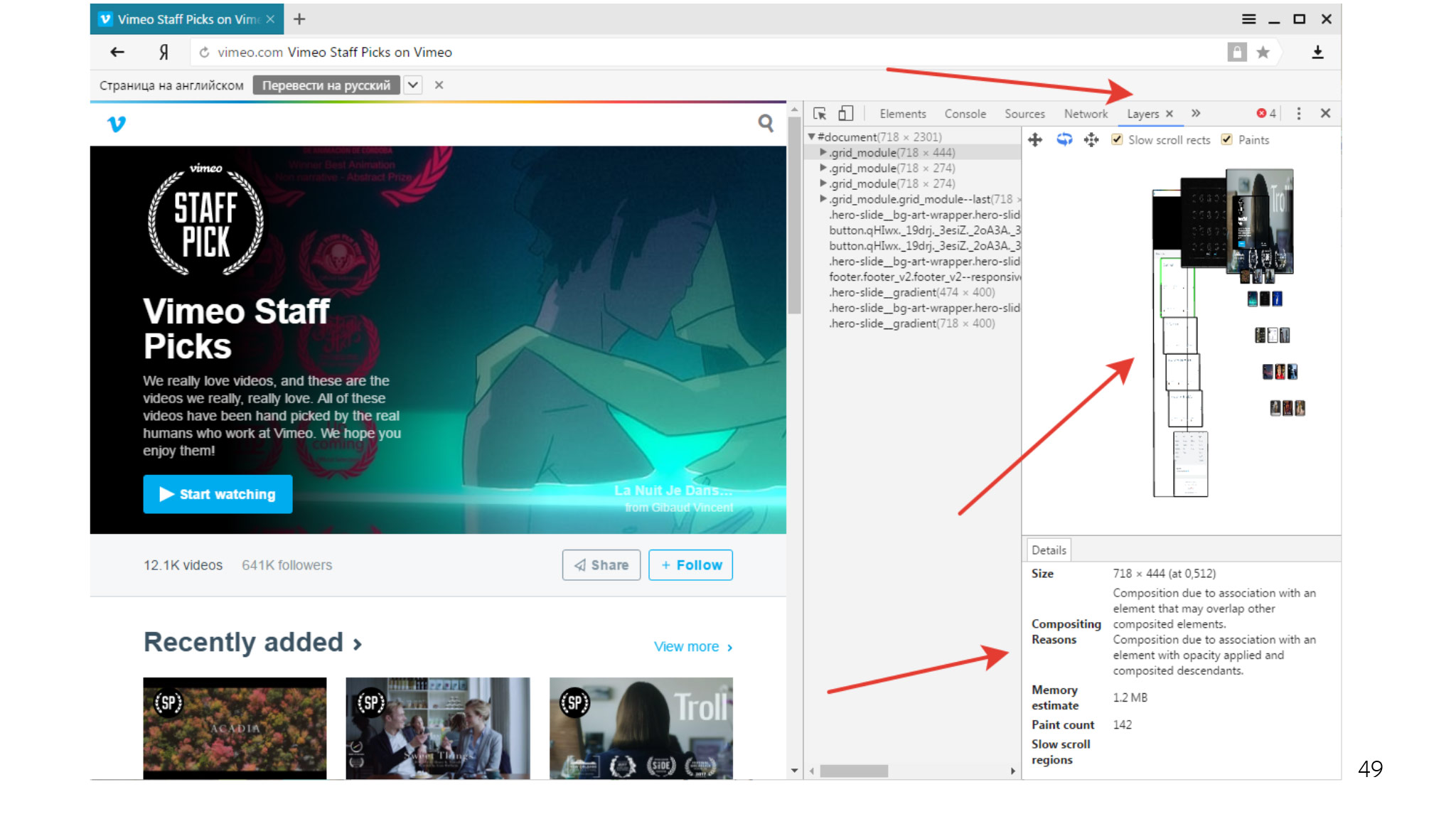

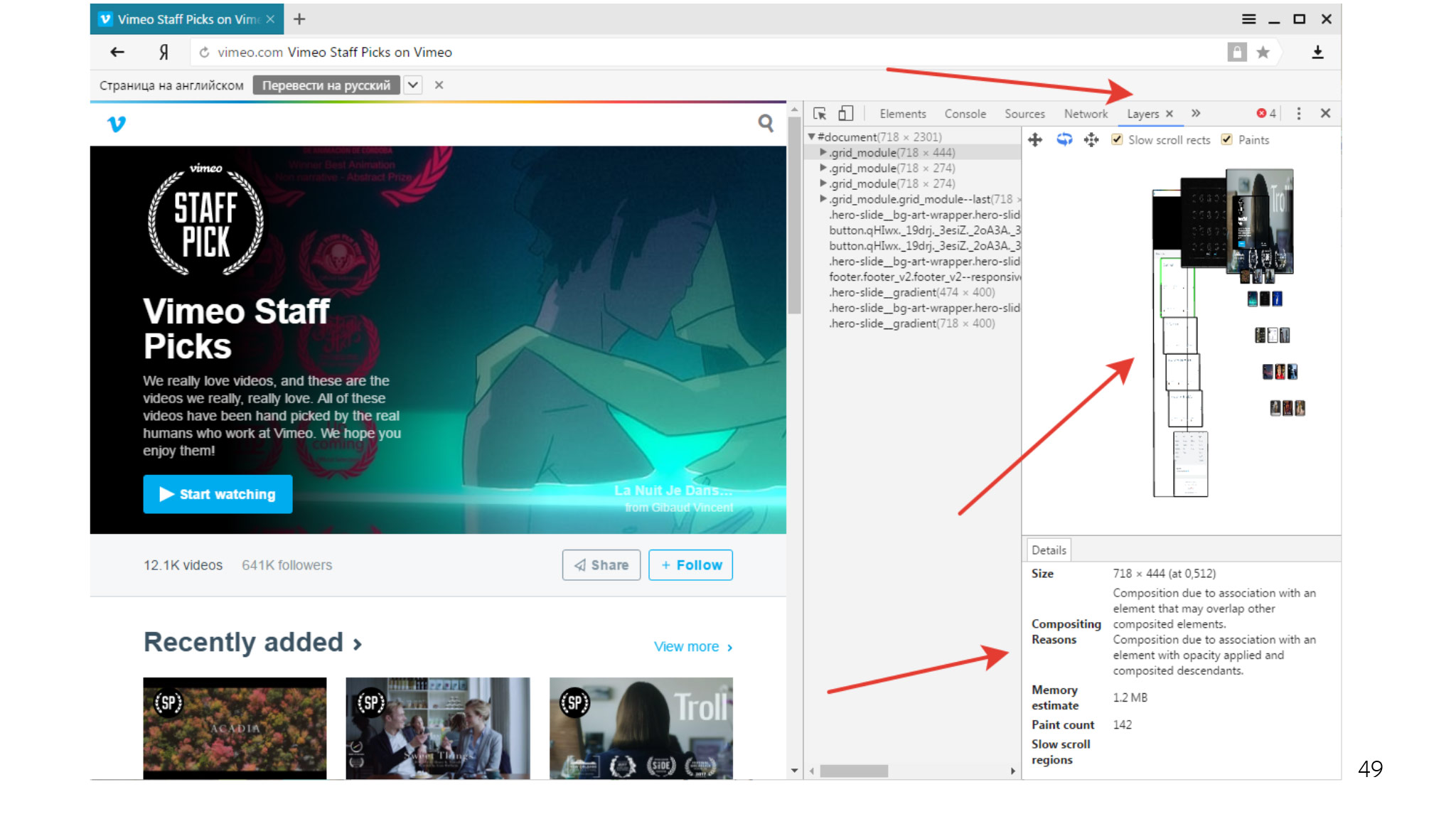

You can also experiment with the layers on your page. The browser relies on heuristics here: it tries to promote objects it considers frequently changing to separate layers. But sometimes it does this poorly, and you end up with so many layers that they consume a lot of memory. You can always check whether this is happening, remove some layers, and see how much memory each layer occupies.
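A back-of-the-envelope estimate of a layer's GPU memory, assuming RGBA8 textures (4 bytes per pixel) scaled by the device pixel ratio. It ignores tiling, solid-color tiles, and the other savings described earlier, so treat it as a rough sketch; the DevTools Layers panel shows the browser's own memory estimate. `layerBytes` is a hypothetical helper.

```typescript
// Approximate texture memory for a layer of the given CSS size.
function layerBytes(cssWidth: number, cssHeight: number, devicePixelRatio = 1): number {
  const w = Math.ceil(cssWidth * devicePixelRatio);
  const h = Math.ceil(cssHeight * devicePixelRatio);
  return w * h * 4;
}
```

By this estimate, a single full-HD layer takes about 8.3 MB, and about 31.6 MiB on a display with a device pixel ratio of 2, which is why a handful of unnecessary layers adds up quickly.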

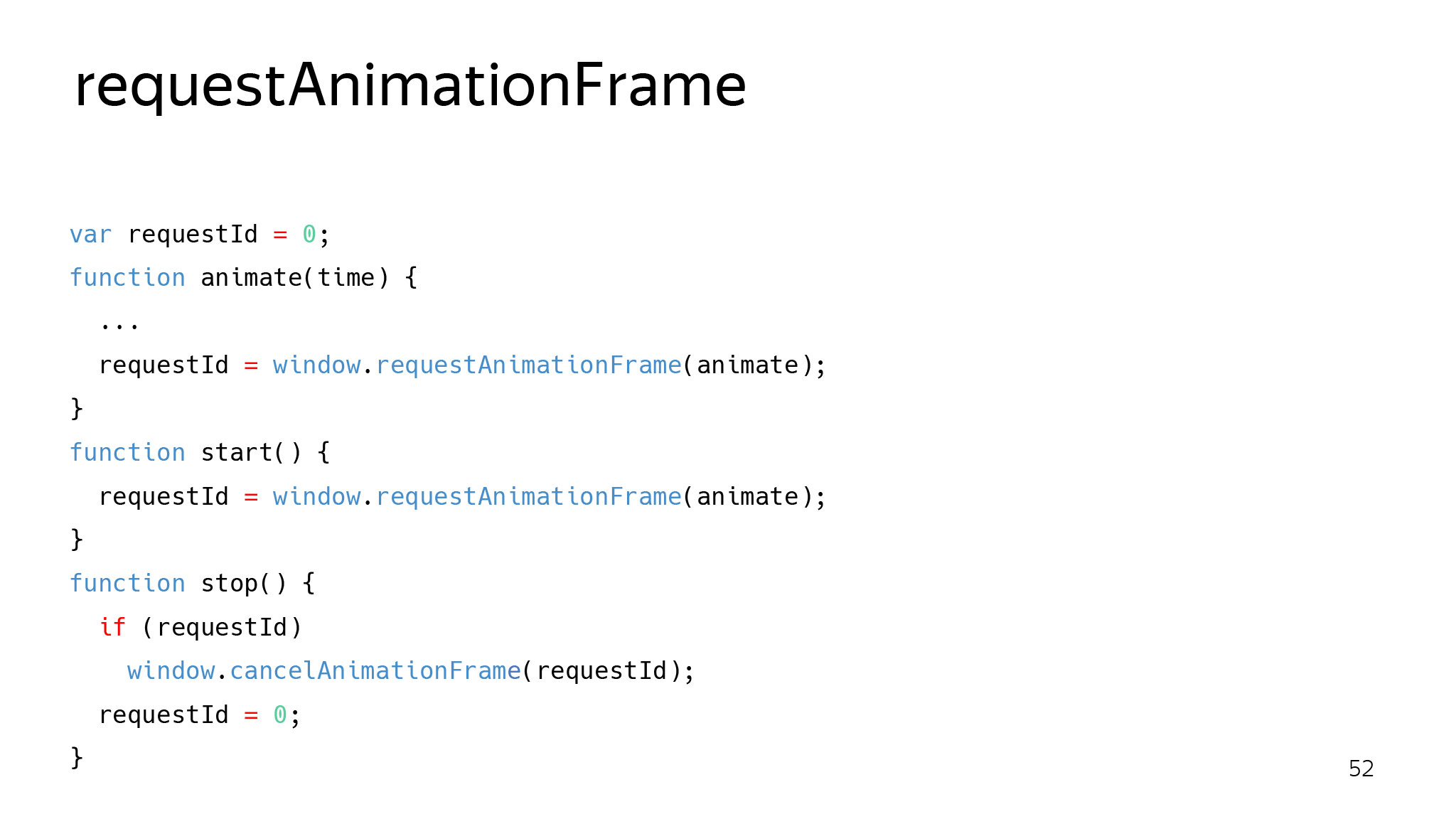

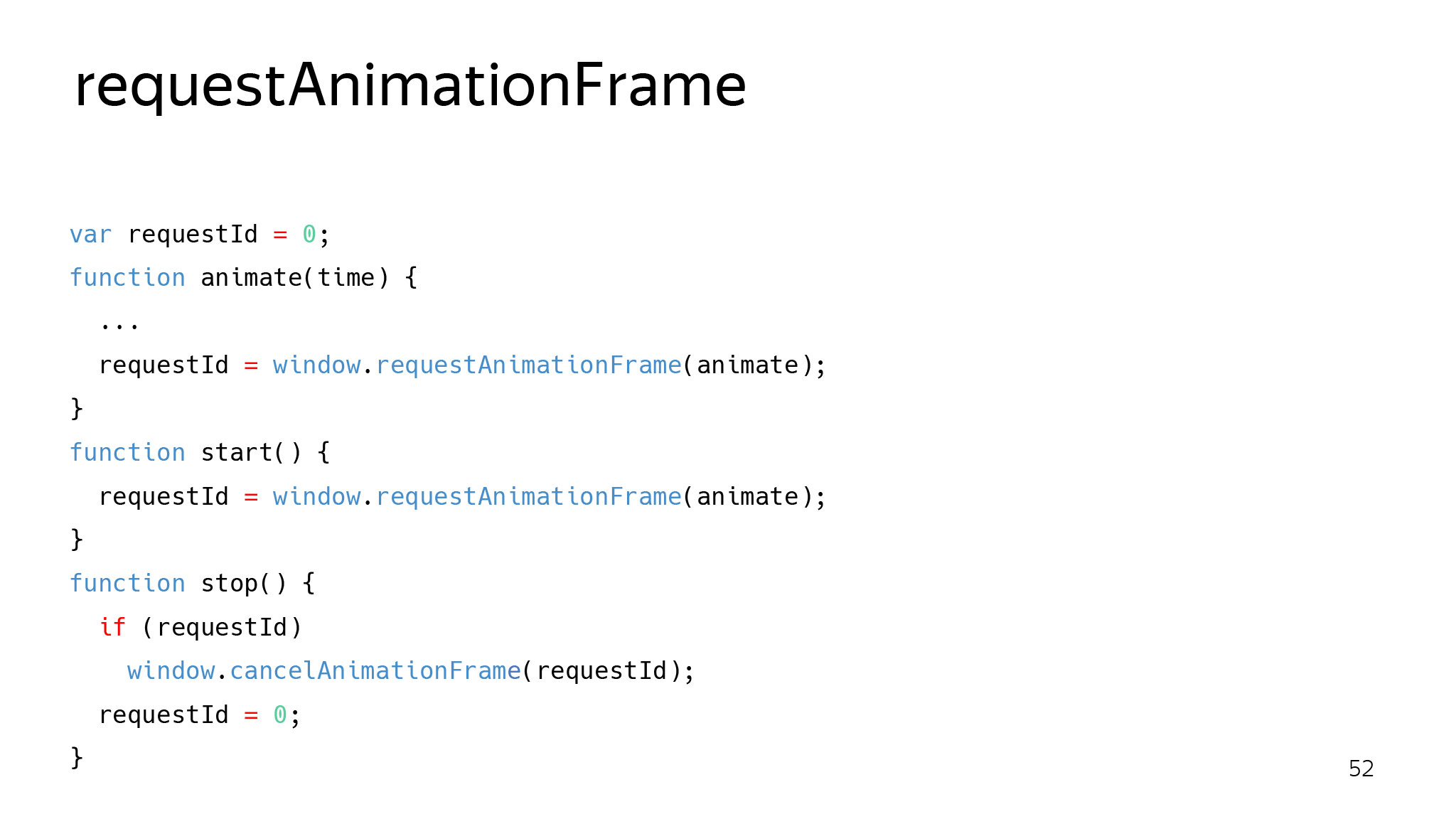

Another class of problems concerns performance. setTimeout is a bad idea for animation, because it is not synchronized with the way the browser renders the page. It can fire out of phase: the browser has requested a frame, but the animation has not updated yet because the setTimeout callback has not run. Or it can fire when nothing needs to be drawn: the user has left, but some background work keeps running through setTimeout.

Here is the correct approach, requestAnimationFrame: your callback is called only when the browser is about to draw the page.
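A minimal sketch of an animation driven by `requestAnimationFrame`. The frame scheduler is injected so the sketch runs outside a browser; in a page you would pass `requestAnimationFrame` itself. `animate` is a hypothetical helper. Progress is computed from the frame timestamp, so a late or skipped frame does not make the animation drift.

```typescript
type FrameRequest = (cb: (timeMs: number) => void) => number;

function animate(
  durationMs: number,
  draw: (progress: number) => void,
  requestFrame: FrameRequest, // in a page: requestAnimationFrame
): void {
  let start: number | undefined;
  const step = (timeMs: number): void => {
    if (start === undefined) start = timeMs;
    const progress = Math.min((timeMs - start) / durationMs, 1);
    draw(progress);
    if (progress < 1) requestFrame(step); // ask for another frame only while there is work left
  };
  requestFrame(step);
}
```

In a hidden tab the browser simply stops delivering frames, so this loop costs nothing there, unlike a setTimeout loop.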

Also remember: if the browser wants to draw 60 frames per second, it has only about 16 ms for each frame.
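For reference, the per-frame budget works out as follows. In practice script gets less than this, because style, layout, and paint have to fit into the same frame:

$$
\frac{1000\ \text{ms}}{60\ \text{frames}} \approx 16.7\ \text{ms per frame}
$$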

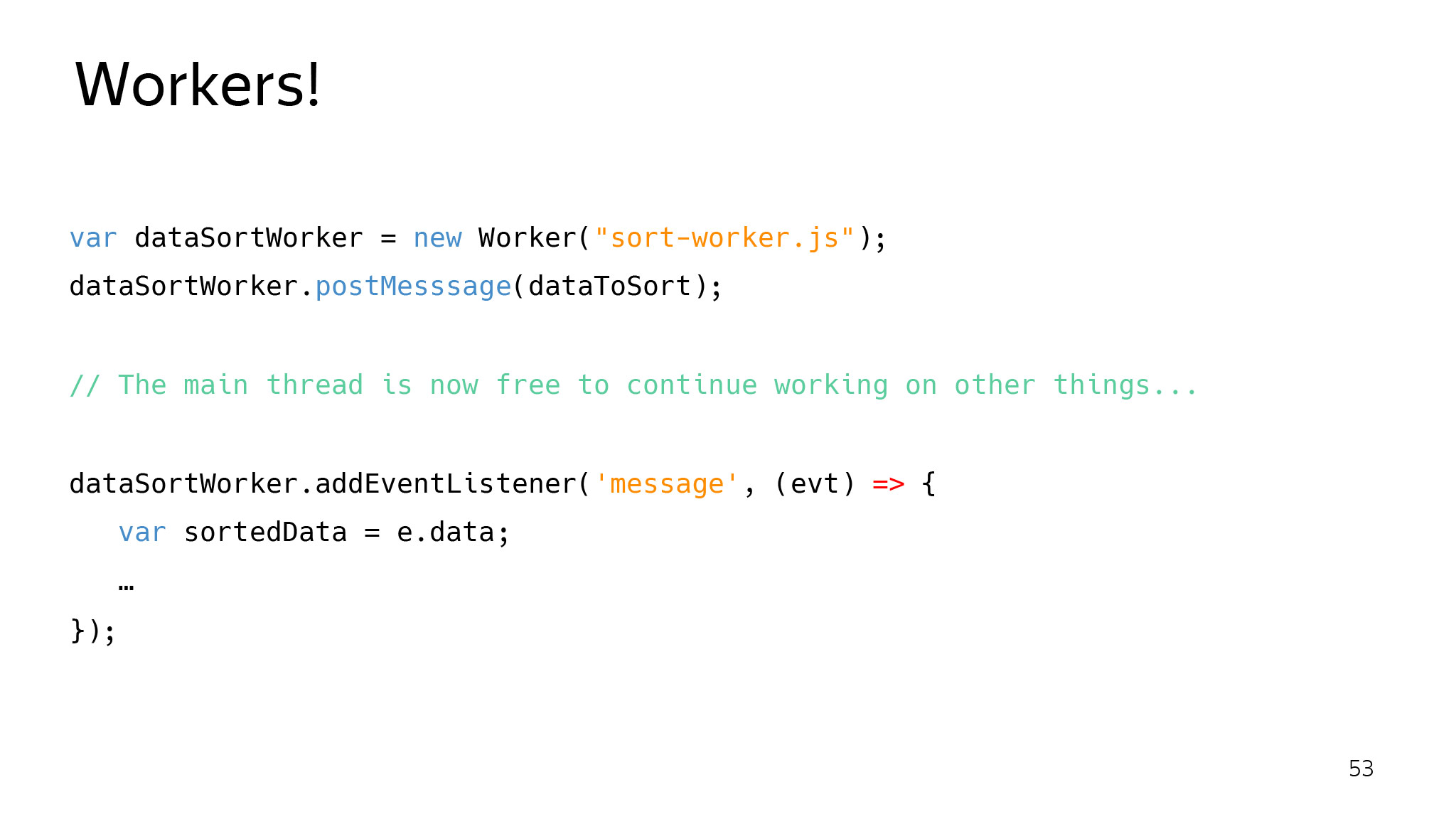

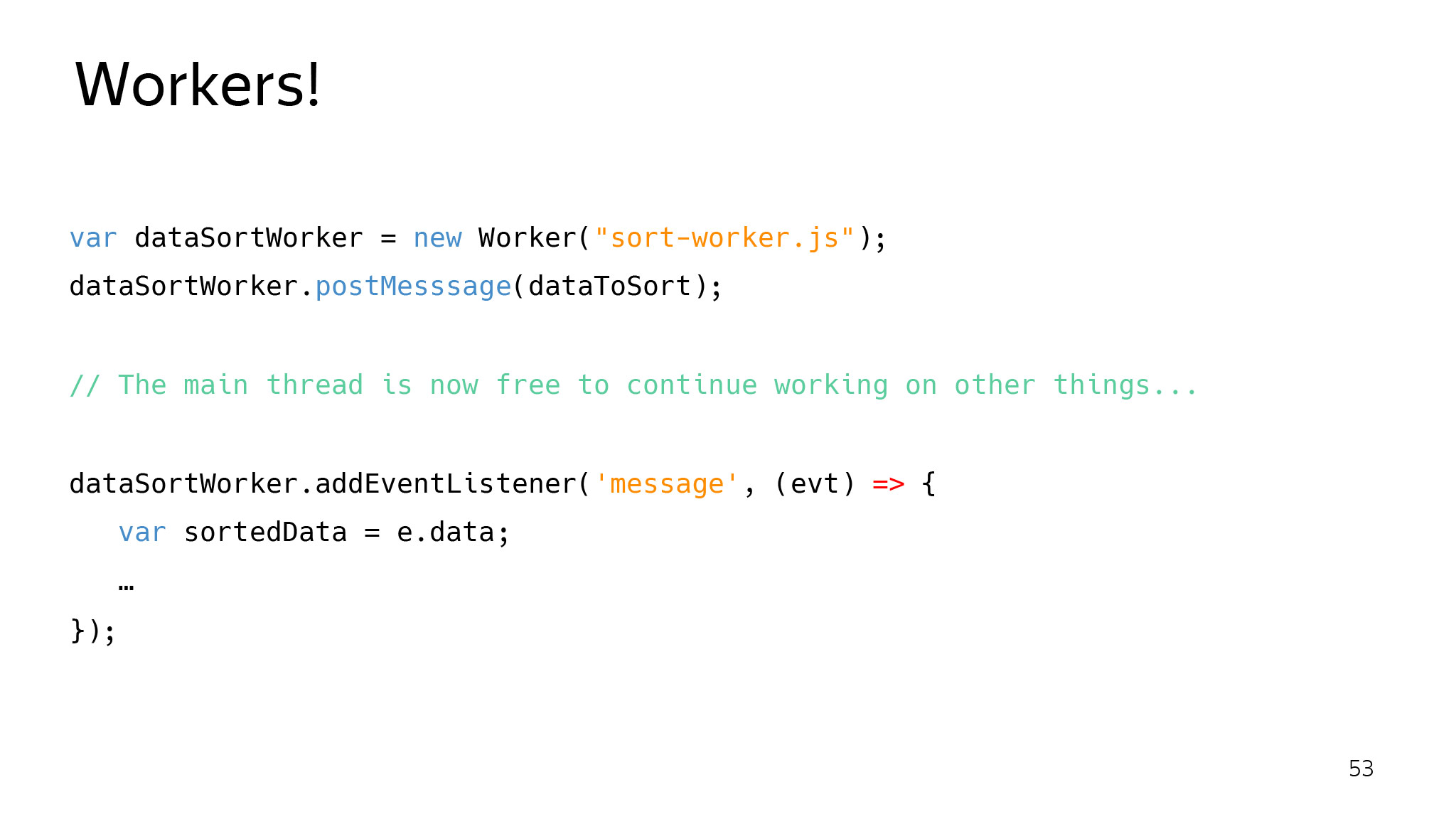

If you occupy the main thread for longer than 16 ms, a dropped frame is guaranteed, which is quite unpleasant for the user: the site starts to stutter. So the right thing is to move all heavy work to background threads and just return the result from them.

Another approach is to split the work into small tasks: keep a queue of tasks, process it until the time budget for the current slice runs out, and then let the browser draw the page in peace.
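A sketch of this time-slicing approach. `now` and `schedule` are injected; in a page you might pass `() => performance.now()` and `(fn) => setTimeout(fn, 0)`. Yielding through a separate task matters: Promise microtasks all run before the browser gets a chance to paint, so chaining them would not help here. `runSliced` is a hypothetical helper.

```typescript
function runSliced(
  tasks: Array<() => void>,
  budgetMs: number,
  now: () => number,
  schedule: (continuation: () => void) => void,
  onDone?: () => void,
): void {
  const sliceStart = now();
  while (tasks.length > 0) {
    const task = tasks.shift()!;
    task();
    if (now() - sliceStart >= budgetMs && tasks.length > 0) {
      // Budget spent: give the main thread back and continue in a later task.
      schedule(() => runSliced(tasks, budgetMs, now, schedule, onDone));
      return;
    }
  }
  onDone?.();
}
```

With an 8 ms budget and tasks that take 3 ms each, ten tasks run in four slices, and the browser can render between them.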

Naturally, you can also add layers yourself. The browser uses heuristics to decide where a separate layer is warranted. But if you know that an element is about to be animated, it is better to tell the browser explicitly to put this element into a separate layer; then it will be rendered more efficiently.
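One standard way to give that hint is the CSS `will-change` property. The sketch below sets it just before an animation and removes it afterwards, since extra layers cost memory. The `withLayerHint` helper and the minimal `Styled` type are hypothetical, so the code runs outside a browser; in a page you would pass a real `HTMLElement`.

```typescript
interface Styled { style: { willChange: string } }

async function withLayerHint(el: Styled, animation: () => Promise<void>): Promise<void> {
  el.style.willChange = "transform"; // hint: this element is about to be transformed
  try {
    await animation(); // e.g. the `finished` promise of an Element.animate(...) call
  } finally {
    el.style.willChange = "auto"; // drop the hint so the layer can be released
  }
}
```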

The last interesting approach is to complain. If you have problems, it always helps to come and talk. Maybe the problem is easy to fix, and we will help each other.

A little background: this feature is still being worked out. The search results page team came to us. They have a metric, the time to render the first snippet: the sooner the user sees the first link, the one they need to click and the one most likely to answer their question, the better, so it should be drawn as early as possible. They asked whether we could somehow speed this up, because they had already squeezed out everything they could on their side.

We investigated, ran performance tests, built a prototype, and found that we can do this if the site tells us what to draw first. Our tests show that this improves the metric. We are now testing it in production on a small audience. We'll see how it goes. Stay tuned.

The world does not stand still. Users want more and more, browsers change, the web changes, and that is normal. We try not only to build product features but also to help users in every way to get the best content experience. And you always have to be ready for changes in any popular browser. Naturally, if you have problems, come to us: we are ready to discuss them and help each other.

Here I collected various useful links:

Source: https://habr.com/ru/post/442456/
