Concurrency and error patterns hidden in code: Deadlock

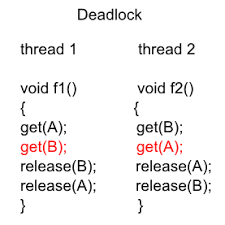

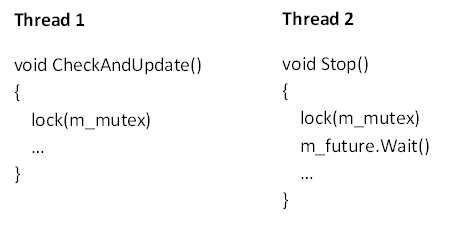

Surely, many have heard, and someone has met in practice, words such as deadlock and race condition. These concepts belong to the category of errors in the use of concurrency. If I ask you the question of what a deadlock is, you will most likely without any doubt begin to draw a classic image of the deadlock or its presentation in pseudocode. Something like that:

This information we receive at the institute, can be found in books and articles on the Internet. Such deadlock using, for example, two mutexes, in all its glory can be found in the code. But in most cases, not everything is so simple, and not everyone can see the classic error pattern in the code, if it is not presented in the usual way.

')

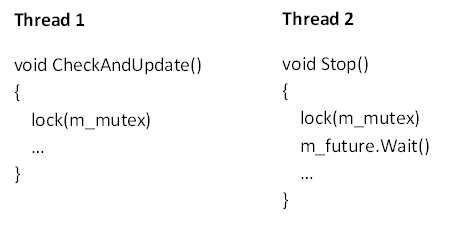

Consider the class in which we are interested in the methods StartUpdate, CheckAndUpdate and Stop, using C ++, the code is simplified as much as possible:

What you should pay attention to in the presented code:

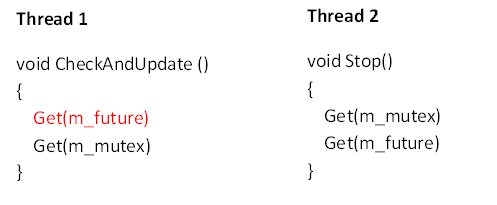

Now let's analyze all the received information and make a picture:

Taking into account the two facts presented above, it is easy to conclude that an attempt to capture a recursive mutex in one of the functions will lead to waiting for the mutex to be released if it has already been captured in another function, since the CheckAndUpdate callback is always in a separate thread.

At first glance, there is nothing suspicious about the deadlock. But to be more attentive, it all comes down to our classic picture. When a functional object starts to run, we kind of implicitly grab the m_future resource, callback directly

associated with m_future:

The order of actions leading to deadlock is as follows:

That's all: the thread making the Stop call waits for the completion of CheckAndUpdate, and another thread in turn cannot continue to work until it captures the mutex that has already been captured by the thread mentioned earlier. Quite a classic deadlock. The floor of the case is done - the cause of the problem is detected

Such a simple example with non-obvious deadlock easily comes down to the pattern of this error. Finally, I want to wish you to write reliable and thread-safe code!

This information we receive at the institute, can be found in books and articles on the Internet. Such deadlock using, for example, two mutexes, in all its glory can be found in the code. But in most cases, not everything is so simple, and not everyone can see the classic error pattern in the code, if it is not presented in the usual way.

')

Consider the class in which we are interested in the methods StartUpdate, CheckAndUpdate and Stop, using C ++, the code is simplified as much as possible:

std::recursive_mutex m_mutex; Future m_future; void Stop() { std::unique_lock scoped_lock(m_mutex); m_future.Wait(); // do something } void StartUpdate() { m_future.Wait(); m_future = Future::Schedule(std::bind(&Element::CheckAndUpdate, this), std::chrono::milliseconds(100); } void CheckAndUpdate() { std::unique_lock scoped_lock(m_mutex); //do something } What you should pay attention to in the presented code:

- recursive mutex is used. Repeated capture of a recursive mutex does not lead to waiting only if these captures occur in the same stream. At the same time, the number of mutex releases should correspond to the number of captures. If we try to capture a recursive mutex that is already captured in another thread, the thread goes into standby mode.

- the Future :: Schedule function starts (after n milliseconds) the callback passed to it in a separate thread

Now let's analyze all the received information and make a picture:

Taking into account the two facts presented above, it is easy to conclude that an attempt to capture a recursive mutex in one of the functions will lead to waiting for the mutex to be released if it has already been captured in another function, since the CheckAndUpdate callback is always in a separate thread.

At first glance, there is nothing suspicious about the deadlock. But to be more attentive, it all comes down to our classic picture. When a functional object starts to run, we kind of implicitly grab the m_future resource, callback directly

associated with m_future:

The order of actions leading to deadlock is as follows:

- CheckAndUpdate is scheduled to run, but the callback does not start immediately, after n milliseconds.

- The Stop method is called, and then it started: trying to capture the mutex - one resource is captured, we start to wait for the completion of the m_future - the object has not yet been called, we are waiting.

- CheckAndUpdate execution begins: trying to capture a mutex - we can not, the resource is already captured by another thread, we are waiting for release.

That's all: the thread making the Stop call waits for the completion of CheckAndUpdate, and another thread in turn cannot continue to work until it captures the mutex that has already been captured by the thread mentioned earlier. Quite a classic deadlock. The floor of the case is done - the cause of the problem is detected

Now a little about how to fix it.

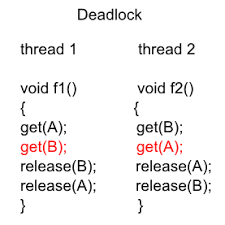

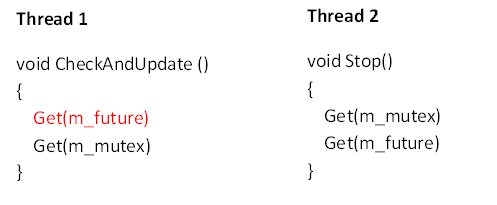

Approach 1

The order of seizure of resources should be the same, this will avoid the deadlocks. That is, you need to see if it is possible to change the order in which resources are captured in the Stop method. Since here the deadlock case is not quite obvious, and there is no obvious seizure of the m_future resource in CheckAndUpdate, we decided to think over another solution in order to avoid returning an error in the future.

Approach 2

The order of seizure of resources should be the same, this will avoid the deadlocks. That is, you need to see if it is possible to change the order in which resources are captured in the Stop method. Since here the deadlock case is not quite obvious, and there is no obvious seizure of the m_future resource in CheckAndUpdate, we decided to think over another solution in order to avoid returning an error in the future.

Approach 2

- Check if you can stop using mutex in CheckAndUpdate.

- Once we use the synchronization mechanism, we restrict access to some resources. Perhaps it will be enough for you to convert these resources into atomics (as it was with us), access to which is already thread-safe.

- It turned out that the variables, access to which was limited, can be easily converted into atomics, so the mentioned mutex is successfully removed.

Such a simple example with non-obvious deadlock easily comes down to the pattern of this error. Finally, I want to wish you to write reliable and thread-safe code!

Source: https://habr.com/ru/post/442448/

All Articles