I watch and listen wherever I want: integrating Chromecast into an Android application

When I'm out, I often listen to audiobooks and podcasts on my smartphone. When I come home, I want to keep listening to them on Android TV or Google Home. But not all applications support Chromecast, even though it would be convenient.

According to Google, over the past three years the number of Android TV devices has grown fourfold, and the number of hardware partners has passed one hundred: smart TVs, speakers, set-top boxes. All of them support Chromecast. Yet many applications on the market still clearly lack Chromecast integration.

In this article, I want to share my experience of integrating Chromecast into an Android application for playing media content.

How it works

If you are hearing the word "Chromecast" for the first time, here is a brief overview. From the user's point of view, it looks like this:

- The user is listening to music or watching a video through an application or website.

- A Chromecast device appears on the local network.

- A Cast button appears in the player interface.

- By tapping it, the user selects the desired device from a list. This could be a Nexus Player, an Android TV, or a smart speaker.

- Playback then continues on that device.

Technically, something like this happens:

- Google Play services detect Chromecast devices on the local network by listening for broadcasts.

- If your application uses MediaRouter, it receives an event about each such device.

- When the user selects a Cast device and connects to it, a new media session (CastSession) opens.

- We then pass the content to this session for playback.

It sounds very simple.
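To make the user-facing part of this flow concrete, here is a toy model in plain Kotlin. It is only an illustration under simplifying assumptions: in a real app, MediaRouter and MediaRouteButton do this bookkeeping for you, and `DiscoveredDevice` and `RouteTracker` are hypothetical names, not Cast SDK classes. The tracker records devices as they appear on and disappear from the network, shows the Cast button only while at least one device is available, and remembers the device the user picked (the point at which the real framework would open a CastSession).

```kotlin
// Toy model of the discovery flow; all names are hypothetical, not Cast SDK API.
data class DiscoveredDevice(val id: String, val name: String)

class RouteTracker {
    // Devices currently visible on the local network, in discovery order.
    private val devices = linkedMapOf<String, DiscoveredDevice>()

    // The device the user picked in the Cast dialog, if any.
    var selected: DiscoveredDevice? = null
        private set

    // The Cast button is shown only while at least one device is available.
    val isCastButtonVisible: Boolean
        get() = devices.isNotEmpty()

    // Called when a device appears on the network.
    fun onDeviceAdded(device: DiscoveredDevice) {
        devices[device.id] = device
    }

    // Called when a device disappears; a selected device is dropped too.
    fun onDeviceRemoved(id: String) {
        devices.remove(id)
        if (selected?.id == id) selected = null
    }

    // The user taps a device; the real framework would open a CastSession here.
    fun select(id: String): Boolean {
        val device = devices[id] ?: return false
        selected = device
        return true
    }

    fun availableDevices(): List<DiscoveredDevice> = devices.values.toList()
}
```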

Integration

Google has its own SDK for working with Chromecast, but it is poorly documented and its code is obfuscated, so I had to figure out many things by trial and error. Let's go through everything in order.

Initialization

First, add the Cast Application Framework and MediaRouter dependencies:

```groovy
implementation "com.google.android.gms:play-services-cast-framework:16.1.0"
implementation "androidx.mediarouter:mediarouter:1.0.0"
```

Then the Cast Framework needs to get the application ID (more on that later) and the types of supported media content. For example, if our application plays only video, casting to a Google Home speaker will be impossible, and the speaker will not appear in the device list. To provide this configuration, create an OptionsProvider implementation:

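```kotlin
import android.content.Context
import com.google.android.gms.cast.framework.CastOptions
import com.google.android.gms.cast.framework.OptionsProvider
import com.google.android.gms.cast.framework.SessionProvider

class CastOptionsProvider : OptionsProvider {

    // Tells the Cast Framework which receiver application to launch
    override fun getCastOptions(context: Context): CastOptions {
        return CastOptions.Builder()
            .setReceiverApplicationId(BuildConfig.CHROMECAST_APP_ID)
            .build()
    }

    // No session providers besides the default Cast one
    override fun getAdditionalSessionProviders(context: Context): MutableList<SessionProvider>? {
        return null
    }
}
```

Then declare it in the manifest: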

```xml
<!-- Inside the <application> element -->
<meta-data
    android:name="com.google.android.gms.cast.framework.OPTIONS_PROVIDER_CLASS_NAME"
    android:value="your.app.package.CastOptionsProvider" />
```

Registering the application

For Chromecast to work with our application, you need to register it in the Google Cast SDK Developer Console; this is where the application ID passed to setReceiverApplicationId comes from. Registration requires a Cast developer account (not to be confused with a Google Play developer account), which costs a one-time fee of $5. After publishing the Cast application, you need to wait a little while.

In the console, you can also customize the look of the Cast player on devices with a screen and view casting analytics for the application.

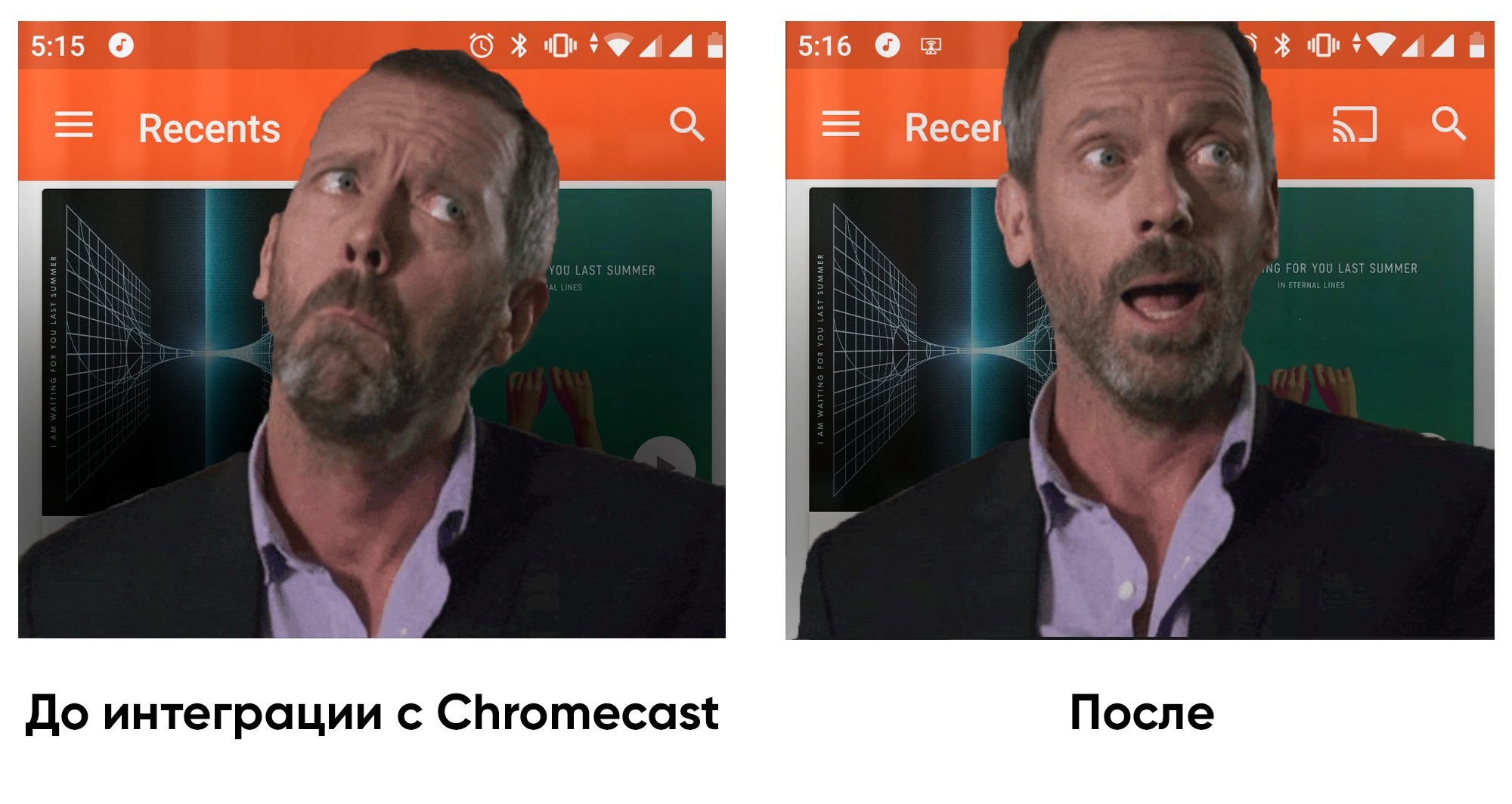

MediaRouter

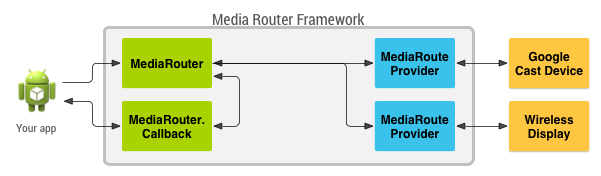

The MediaRouter framework is a mechanism for finding all remote playback devices near the user. These can be not only Chromecast devices but also remote displays and speakers that use third-party protocols. Here we are only interested in Chromecast.

The MediaRouter framework provides a View that reflects the state of the media router. There are two ways to add it:

1) Through the menu:

```xml
<?xml version="1.0" encoding="utf-8"?>
<menu xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto">
    <!-- ... -->
    <item
        android:id="@+id/menu_media_route"
        android:title="@string/cast"
        app:actionProviderClass="androidx.mediarouter.app.MediaRouteActionProvider"
        app:showAsAction="always" />
    <!-- ... -->
</menu>
```

2) Through the layout:

```xml
<androidx.mediarouter.app.MediaRouteButton
    android:id="@+id/mediaRouteButton"
    android:layout_width="wrap_content"
    android:layout_height="wrap_content"
    android:mediaRouteTypes="user" />
```

In code, all you need to do is register the button with CastButtonFactory, which then keeps it in sync with the current state of the media router:

```kotlin
// Binds the button to the current media route state
CastButtonFactory.setUpMediaRouteButton(applicationContext, view.mediaRouteButton)
```

Now that the app is registered and MediaRouter is configured, you can connect to Chromecast devices and open sessions with them.

Casting Media Content

Chromecast supports three main types of content:

- Audio;

- Video;

- Photo.

The player interface on the receiver may differ depending on the player settings, the type of media content, and the Cast device.

Cast session

So, the user has chosen a device, and the Cast Framework has opened a new session. Now our task is to respond to this and pass the media information to the device for playback.

To get the current state of the session and subscribe to its updates, use the SessionManager object:

```kotlin
private val mediaSessionListener = object : SessionManagerListener<CastSession> {
    override fun onSessionStarted(session: CastSession, sessionId: String) {
        currentSession = session
        // The session is open: start casting if there is already content to play
        checkAndStartCasting()
    }

    override fun onSessionEnding(session: CastSession) {
        stopCasting()
    }

    override fun onSessionResumed(session: CastSession, wasSuspended: Boolean) {
        currentSession = session
        checkAndStartCasting()
    }

    override fun onSessionStartFailed(session: CastSession, error: Int) {
        stopCasting()
    }

    override fun onSessionEnded(session: CastSession, error: Int) {
        // do nothing
    }

    override fun onSessionResumeFailed(session: CastSession, error: Int) {
        // do nothing
    }

    override fun onSessionSuspended(session: CastSession, reason: Int) {
        // do nothing
    }

    override fun onSessionStarting(session: CastSession) {
        // do nothing
    }

    override fun onSessionResuming(session: CastSession, sessionId: String) {
        // do nothing
    }
}

val sessionManager = CastContext.getSharedInstance(context).sessionManager
sessionManager.addSessionManagerListener(mediaSessionListener, CastSession::class.java)
```

We can also check whether a session is open at the moment:

```kotlin
val currentSession: CastSession? = sessionManager.currentCastSession
```

We can start casting once two conditions are met:

- A session is open.

- There is content to cast.

Whenever either of these events occurs, we check both conditions and, if both hold, start casting.
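The rule above can be sketched as a small coordinator that re-checks both conditions whenever either event arrives. This is a plain-Kotlin sketch, not the author's code: `CastReadiness` is a hypothetical name, and the session and content are stand-in strings rather than `CastSession` and the app's media model.

```kotlin
// Hypothetical coordinator: casting starts only when a session is open AND content exists.
class CastReadiness(private val startCasting: (session: String, content: String) -> Unit) {
    private var session: String? = null
    private var content: String? = null

    // Corresponds to onSessionStarted / onSessionResumed.
    fun onSessionAvailable(newSession: String) {
        session = newSession
        checkAndStartCasting()
    }

    // Corresponds to onSessionEnding / onSessionStartFailed.
    fun onSessionLost() {
        session = null
    }

    // Called when the player receives something to play.
    fun onContentAvailable(newContent: String) {
        content = newContent
        checkAndStartCasting()
    }

    // Both conditions must hold; otherwise wait for the other event.
    private fun checkAndStartCasting() {
        val s = session ?: return
        val c = content ?: return
        startCasting(s, c)
    }
}
```

Whichever event comes second triggers the start, so the order in which the session opens and the content arrives does not matter.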

Casting

Now that we have something to cast and somewhere to cast it, we can move on to the most important part. CastSession exposes a RemoteMediaClient object, which controls media playback on the receiver. This is the object we will work with.

First, create a MediaMetadata object that stores information such as the artist, album, and so on. It is very similar to what we pass to MediaSession during local playback.

```kotlin
val mediaMetadata = MediaMetadata(MediaMetadata.MEDIA_TYPE_MUSIC_TRACK).apply {
    putString(MediaMetadata.KEY_TITLE, "In C")
    putString(MediaMetadata.KEY_ARTIST, "Terry Riley")
    // Cover art is passed as a URL wrapped in a WebImage, not as a Bitmap
    addImage(WebImage(Uri.parse("https://habrastorage.org/webt/wk/oi/pf/wkoipfkdyy2ctoa5evnd8vhxtem.png")))
}
```

MediaMetadata has many keys, and it is best to look them up in the documentation. I was pleasantly surprised that you can add an image not as a Bitmap but simply as a link wrapped in a WebImage.

The MediaInfo object carries the content metadata and describes where the media comes from, what type it is, and how to play it:

```kotlin
val mediaInfo = MediaInfo.Builder("https://you-address.com/in_c.mp3")
    .setContentType("audio/mp3")
    .setStreamType(MediaInfo.STREAM_TYPE_BUFFERED)
    .setMetadata(mediaMetadata)
    .build()
```

Recall that contentType is the MIME type of the content.
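If the app plays files from different sources, the MIME type can be derived from the URL. Below is a hypothetical helper, `guessContentType`, with a deliberately small extension table chosen for illustration; a real app would more likely take the type from its own content model or from the server's `Content-Type` header. Note that the registered MIME type for MP3 is `audio/mpeg`.

```kotlin
// Hypothetical extension-to-MIME table; an assumption, not an exhaustive list.
val mimeByExtension = mapOf(
    "mp3" to "audio/mpeg",
    "aac" to "audio/aac",
    "mp4" to "video/mp4",
    "webm" to "video/webm",
    "jpg" to "image/jpeg",
    "jpeg" to "image/jpeg",
    "png" to "image/png"
)

// Picks a value for MediaInfo.Builder.setContentType() from the file extension.
fun guessContentType(url: String, fallback: String = "application/octet-stream"): String {
    // Drop the query string and fragment, then look at the last path segment.
    val path = url.substringBefore('?').substringBefore('#')
    val fileName = path.substringAfterLast('/')
    if (!fileName.contains('.')) return fallback
    val extension = fileName.substringAfterLast('.').lowercase()
    return mimeByExtension[extension] ?: fallback
}
```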

You can also add ad breaks to MediaInfo:

- setAdBreakClips - accepts a list of AdBreakClipInfo ad clips, each with a content link, title, duration, and the time after which the ad becomes skippable.

- setAdBreaks - describes where the ad breaks are placed in the content and when they play.
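To show how the skip offset mentioned above is usually read, here is a plain-Kotlin model of an ad clip. `AdClip` and `isSkippableAt` are hypothetical and only mirror the fields listed; they are not the SDK's `AdBreakClipInfo`, whose builder methods should be checked in the Cast documentation.

```kotlin
// Hypothetical model of an ad clip; not the Cast SDK's AdBreakClipInfo.
data class AdClip(
    val contentUrl: String,
    val title: String,
    val durationMs: Long,
    // Offset into the clip after which it may be skipped; null means never skippable.
    val whenSkippableMs: Long? = null
)

// True if a Skip button should be available at this position inside the clip.
fun AdClip.isSkippableAt(positionInClipMs: Long): Boolean {
    val offset = whenSkippableMs ?: return false
    return positionInClipMs >= offset
}
```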

In MediaLoadOptions, we describe how the media stream should be played (playback rate, start position). The documentation also says that setCredentials can be used to pass an authorization header, but in my case the requests from Chromecast did not include the expected authorization fields.

```kotlin
val mediaLoadOptions = MediaLoadOptions.Builder()
    .setPlayPosition(position!!)      // start position, in milliseconds
    .setAutoplay(true)
    .setPlaybackRate(playbackSpeed)   // Double, 1.0 = normal speed
    .setCredentials(context.getString(R.string.bearer_token, authGateway.authState.accessToken!!))
    .setCredentialsType(context.getString(R.string.authorization_header_key))
    .build()
```

Once everything is ready, we pass all the data to RemoteMediaClient, and the Chromecast starts playing. Don't forget to pause local playback at this point.

```kotlin
// Local playback should already be paused here
val remoteMediaClient = currentSession!!.remoteMediaClient
remoteMediaClient.load(mediaInfo, mediaLoadOptions)
```

Event handling

The video has started playing, and then what? What if the user pauses it on the TV? To learn about events on the Chromecast side, register a callback with RemoteMediaClient:

```kotlin
private val castStatusCallback = object : RemoteMediaClient.Callback() {
    override fun onStatusUpdated() {
        // check and update current state
    }
}

remoteMediaClient.registerCallback(castStatusCallback)
```

Tracking the current progress is just as easy:

```kotlin
val periodMills = 1000L // how often the listener is called

remoteMediaClient.addProgressListener(
    RemoteMediaClient.ProgressListener { progressMills, durationMills ->
        // show progress in your UI
    },
    periodMills
)
```

Integrating with an existing player

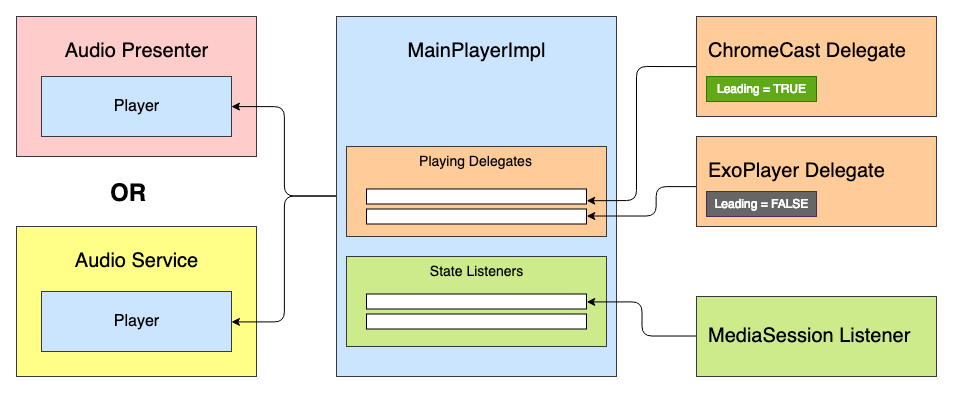

The application I was working on already had a media player, and the task was to add Chromecast support to it. The player was built around a state machine, so my first thought was to add a new state, "CastingState". I rejected this idea right away: each state of the player reflects the playback state, and it should not matter whether playback is implemented by ExoPlayer or by Chromecast.

Instead, I came up with a system of delegates with priorities and their own handling of the player's "lifecycle". All delegates receive player state events (Play, Pause, etc.), but only the leading delegate actually plays the media content.
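The core of this idea fits in a few lines. In the simplified sketch below (hypothetical names, far smaller than the real `Player` and `PlayingDelegate` interfaces), every command is delivered to every delegate, but only the one marked as leading acts on it.

```kotlin
// Hypothetical, simplified model of the delegate system.
enum class Command { PLAY, PAUSE }

abstract class SimpleDelegate(val name: String) {
    var isLeading = false
    val received = mutableListOf<Command>()

    fun onCommand(command: Command) {
        received += command              // every delegate sees the event
        if (isLeading) perform(command)  // only the leader acts on it
    }

    protected abstract fun perform(command: Command)
}

// Records what it "plays" so the behavior can be observed.
class LoggingDelegate(name: String, private val log: MutableList<String>) : SimpleDelegate(name) {
    override fun perform(command: Command) {
        log += "$name:$command"
    }
}

// The player forwards each command to all delegates, in priority order.
class DelegatingPlayer(private val delegates: List<SimpleDelegate>) {
    fun play() = delegates.forEach { it.onCommand(Command.PLAY) }
    fun pause() = delegates.forEach { it.onCommand(Command.PAUSE) }
}
```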

Our player interface looks roughly like this:

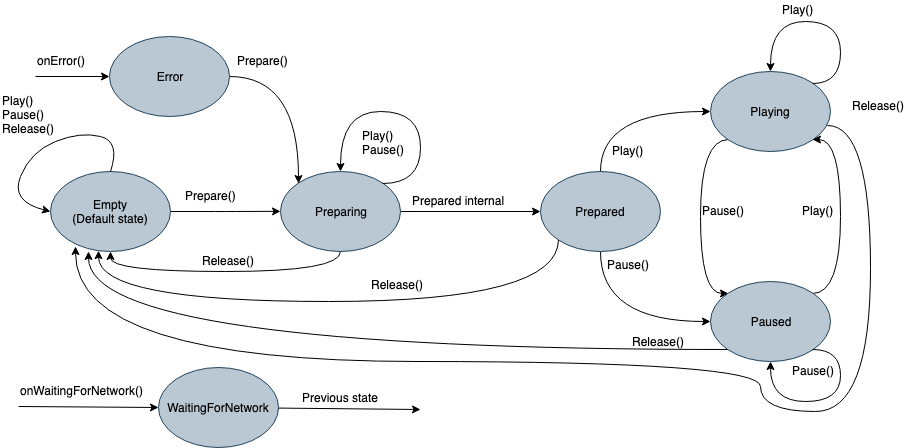

```kotlin
interface Player {
    val isPlaying: Boolean
    val isReleased: Boolean
    val duration: Long
    var positionInMillis: Long
    var speed: Float
    var volume: Float
    var loop: Boolean

    fun addListener(listener: PlayerCallback)
    fun removeListener(listener: PlayerCallback): Boolean
    fun getListeners(): MutableSet<PlayerCallback>
    fun prepare(mediaContent: MediaContent)
    fun play()
    fun pause()
    fun release()

    interface PlayerCallback {
        fun onPlaying(currentPosition: Long)
        fun onPaused(currentPosition: Long)
        fun onPreparing()
        fun onPrepared()
        fun onLoadingChanged(isLoading: Boolean)
        fun onDurationChanged(duration: Long)
        fun onSetSpeed(speed: Float)
        fun onSeekTo(fromTimeInMillis: Long, toTimeInMillis: Long)
        fun onWaitingForNetwork()
        fun onError(error: String?)
        fun onReleased()
        fun onPlayerProgress(currentPosition: Long)
    }
}
```

Internally, it has a state machine with the following states:

- Empty - the initial state before initialization.

- Preparing - the player is preparing the media content for playback.

- Prepared - media is loaded and ready to play.

- Playing

- Paused

- WaitingForNetwork

- Error

Previously, each state sent its command directly to ExoPlayer when it was entered. Now it sends the command to the list of playing delegates, and the leading delegate handles it. Since a delegate implements all the player's functions, it can also implement the Player interface and, if necessary, be used on its own. The abstract delegate then looks like this:

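```kotlin
abstract class PlayingDelegate(
    protected val playerCallback: Player.PlayerCallback,
    var isLeading: Boolean = false
) : Player {

    fun setIsLeading(isLeading: Boolean, positionMills: Long, isPlaying: Boolean) {
        this.isLeading = isLeading
        if (isLeading) {
            onLeading(positionMills, isPlaying)
        } else {
            onIdle()
        }
    }

    // Listeners belong to the player itself; delegates report through playerCallback
    final override fun addListener(listener: Player.PlayerCallback) {
        // do nothing
    }

    final override fun removeListener(listener: Player.PlayerCallback): Boolean {
        return false
    }

    final override fun getListeners(): MutableSet<Player.PlayerCallback> {
        return mutableSetOf()
    }

    /**
     * Called when the network connection is restored.
     */
    open fun networkIsRestored() {
        // do nothing
    }

    /**
     * Called when this delegate becomes the leading one and should take over
     * playback from [positionMills].
     */
    abstract fun onLeading(positionMills: Long, isPlaying: Boolean)

    /**
     * Called when this delegate stops being the leading one.
     */
    abstract fun onIdle()

    /**
     * Whether the delegate is ready to become the leading one; for example,
     * the Chromecast delegate is ready only while a Cast session is open.
     */
    abstract fun readyForLeading(): Boolean
}
```

I have simplified the interfaces for this example; in reality there are a few more events.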

There can be any number of delegates, just as there can be any number of playback sources. A delegate for Chromecast might look something like this:

```kotlin
import android.content.Context
import android.net.Uri
import com.google.android.gms.cast.MediaInfo
import com.google.android.gms.cast.MediaLoadOptions
import com.google.android.gms.cast.MediaMetadata
import com.google.android.gms.cast.MediaStatus
import com.google.android.gms.cast.framework.CastContext
import com.google.android.gms.cast.framework.CastSession
import com.google.android.gms.cast.framework.SessionManager
import com.google.android.gms.cast.framework.SessionManagerListener
import com.google.android.gms.cast.framework.media.RemoteMediaClient
import com.google.android.gms.common.images.WebImage

class ChromeCastDelegate(
    private val context: Context,
    private val castCallback: ChromeCastListener,
    playerCallback: Player.PlayerCallback
) : PlayingDelegate(playerCallback) {

    companion object {
        private const val CONTENT_TYPE_VIDEO = "video/mp4"
        private const val CONTENT_TYPE_AUDIO = "audio/mp3"
        private const val PROGRESS_DELAY_MILLS = 500L
    }

    interface ChromeCastListener {
        fun onCastStarted()
        fun onCastStopped()
    }

    private var sessionManager: SessionManager? = null
    private var currentSession: CastSession? = null
    private var mediaContent: MediaContent? = null
    private var currentPosition: Long = 0

    private val mediaSessionListener = object : SessionManagerListener<CastSession> {
        override fun onSessionStarted(session: CastSession, sessionId: String) {
            currentSession = session
            castCallback.onCastStarted()
        }

        override fun onSessionEnding(session: CastSession) {
            // Remember where the receiver stopped so another delegate can continue from there
            currentPosition = session.remoteMediaClient?.approximateStreamPosition ?: currentPosition
            stopCasting()
        }

        override fun onSessionResumed(session: CastSession, wasSuspended: Boolean) {
            currentSession = session
            castCallback.onCastStarted()
        }

        override fun onSessionStartFailed(session: CastSession, error: Int) {
            stopCasting()
        }

        // The remaining session events are not needed here
        override fun onSessionEnded(session: CastSession, error: Int) {}
        override fun onSessionResumeFailed(session: CastSession, error: Int) {}
        override fun onSessionSuspended(session: CastSession, reason: Int) {}
        override fun onSessionStarting(session: CastSession) {}
        override fun onSessionResuming(session: CastSession, sessionId: String) {}
    }

    // Reports play/pause changes made on the receiver side (e.g. from the TV remote)
    private val castStatusCallback = object : RemoteMediaClient.Callback() {
        override fun onStatusUpdated() {
            val remoteMediaClient = currentSession?.remoteMediaClient ?: return
            when (remoteMediaClient.playerState) {
                MediaStatus.PLAYER_STATE_PLAYING -> playerCallback.onPlaying(positionInMillis)
                MediaStatus.PLAYER_STATE_PAUSED -> playerCallback.onPaused(positionInMillis)
            }
        }
    }

    private val progressListener = RemoteMediaClient.ProgressListener { progressMs, _ ->
        playerCallback.onPlayerProgress(progressMs)
    }

    // Playing delegate

    override val isReleased: Boolean = false
    override var loop: Boolean = false

    override val isPlaying: Boolean
        get() = currentSession?.remoteMediaClient?.isPlaying ?: false

    override val duration: Long
        get() = currentSession?.remoteMediaClient?.streamDuration ?: 0

    override var positionInMillis: Long
        get() {
            currentPosition = currentSession?.remoteMediaClient?.approximateStreamPosition ?: currentPosition
            return currentPosition
        }
        set(value) {
            currentPosition = value
            checkAndStartCasting()
        }

    override var speed: Float = SpeedProvider.default()
        set(value) {
            field = value
            checkAndStartCasting()
        }

    override var volume: Float
        get() = currentSession?.volume?.toFloat() ?: 0F
        set(value) {
            currentSession?.volume = value.toDouble()
        }

    override fun prepare(mediaContent: MediaContent) {
        sessionManager = CastContext.getSharedInstance(context).sessionManager
        sessionManager?.addSessionManagerListener(mediaSessionListener, CastSession::class.java)
        currentSession = sessionManager?.currentCastSession
        this.mediaContent = mediaContent
        playerCallback.onPrepared()
    }

    override fun play() {
        if (isLeading) currentSession?.remoteMediaClient?.play()
    }

    override fun pause() {
        if (isLeading) currentSession?.remoteMediaClient?.pause()
    }

    override fun release() {
        stopCasting(removeListener = true)
    }

    override fun onLeading(positionMills: Long, isPlaying: Boolean) {
        currentPosition = positionMills
        checkAndStartCasting()
    }

    override fun onIdle() {
        // TODO
    }

    override fun readyForLeading(): Boolean = currentSession != null

    // Internal

    private fun checkAndStartCasting() {
        val session = currentSession ?: return
        val content = mediaContent ?: return
        if (content.metadata == null || !isLeading) return

        val mediaMetadata = MediaMetadata(getMetadataType(content.type)).apply {
            putString(MediaMetadata.KEY_TITLE, content.metadata?.title.orEmpty())
            putString(MediaMetadata.KEY_ARTIST, content.metadata?.author.orEmpty())
            content.metadata?.posterUrl?.let { poster -> addImage(WebImage(Uri.parse(poster))) }
        }
        val mediaInfo = MediaInfo.Builder(content.contentUri.toString())
            .setContentType(getContentType(content.type))
            .setStreamType(MediaInfo.STREAM_TYPE_BUFFERED)
            .setMetadata(mediaMetadata)
            .build()
        val mediaLoadOptions = MediaLoadOptions.Builder()
            .setPlayPosition(currentPosition)
            .setAutoplay(true)
            .setPlaybackRate(speed.toDouble())
            .build()

        val remoteMediaClient = session.remoteMediaClient ?: return
        // Remove listeners first so that repeated loads do not register them twice
        remoteMediaClient.unregisterCallback(castStatusCallback)
        remoteMediaClient.removeProgressListener(progressListener)
        remoteMediaClient.load(mediaInfo, mediaLoadOptions)
        remoteMediaClient.registerCallback(castStatusCallback)
        remoteMediaClient.addProgressListener(progressListener, PROGRESS_DELAY_MILLS)
    }

    private fun stopCasting(removeListener: Boolean = false) {
        if (removeListener) {
            sessionManager?.removeSessionManagerListener(mediaSessionListener, CastSession::class.java)
        }
        currentSession?.remoteMediaClient?.unregisterCallback(castStatusCallback)
        currentSession?.remoteMediaClient?.removeProgressListener(progressListener)
        currentSession?.remoteMediaClient?.stop()
        currentSession = null
        if (isLeading) {
            castCallback.onCastStopped()
        }
    }

    private fun getContentType(mediaType: MediaContent.Type) = when (mediaType) {
        MediaContent.Type.AUDIO -> CONTENT_TYPE_AUDIO
        MediaContent.Type.VIDEO -> CONTENT_TYPE_VIDEO
    }

    private fun getMetadataType(mediaType: MediaContent.Type) = when (mediaType) {
        MediaContent.Type.AUDIO -> MediaMetadata.MEDIA_TYPE_MUSIC_TRACK
        MediaContent.Type.VIDEO -> MediaMetadata.MEDIA_TYPE_MOVIE
    }
}
```

Before issuing a playback command, we need to determine the leading delegate. To do this, delegates are added to the player in order of priority, and each of them reports whether it is ready to lead via the readyForLeading() method. The full code of the example is available on GitHub.
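The selection step can be sketched as follows, assuming delegates are stored in priority order and asked for readiness via `readyForLeading()`. `LeadCandidate`, `LeaderSelector` and `SimpleCandidate` are hypothetical names, not the author's code; the handoff passes the current position and playing flag in the same way as `setIsLeading(isLeading, positionMills, isPlaying)` above, so the new leader can continue where the old one stopped.

```kotlin
// Hypothetical minimal contract mirroring PlayingDelegate's leadership methods.
interface LeadCandidate {
    val name: String
    fun readyForLeading(): Boolean
    fun setIsLeading(isLeading: Boolean, positionMills: Long, isPlaying: Boolean)
}

class LeaderSelector(private val delegatesByPriority: List<LeadCandidate>) {
    var leader: LeadCandidate? = null
        private set

    // Re-evaluate after events that change readiness, e.g. a Cast session opening or closing.
    fun updateLeader(positionMills: Long, isPlaying: Boolean): LeadCandidate? {
        val candidate = delegatesByPriority.firstOrNull { it.readyForLeading() }
        if (candidate === leader) return leader
        leader?.setIsLeading(false, positionMills, isPlaying)   // old leader goes idle
        candidate?.setIsLeading(true, positionMills, isPlaying) // new leader continues from the same position
        leader = candidate
        return leader
    }
}

// Simple implementation for trying the selector out.
class SimpleCandidate(override val name: String, var ready: Boolean) : LeadCandidate {
    var isLeading = false
        private set
    var startPosition = -1L
        private set

    override fun readyForLeading() = ready

    override fun setIsLeading(isLeading: Boolean, positionMills: Long, isPlaying: Boolean) {
        this.isLeading = isLeading
        if (isLeading) startPosition = positionMills
    }
}
```

For example, with the Chromecast delegate placed ahead of the ExoPlayer delegate, ExoPlayer leads until a Cast session opens; the next `updateLeader` call then hands playback over to Chromecast at the current position.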

Is there life after Chromecast?

After I integrated Chromecast support into the application, coming home became more pleasant: I can now enjoy audiobooks not only through headphones but also through Google Home. As for the architecture, player implementations vary from app to app, so this approach will not fit everywhere, but it worked well for ours. I hope this article was useful and that more applications will soon integrate with the digital environment around us!


Source: https://habr.com/ru/post/442300/
