Emergency Room: The secret life of Facebook moderators in the US

Warning: this article contains descriptions of racism, violence, and mental illness.

Chloe's panic attacks began after she watched a man die.


She has spent the past three and a half weeks in training, trying to harden herself against a daily onslaught of disturbing posts: hate speech, violent attacks, graphic pornography. In a few days, she will become a full-time Facebook content moderator, a position that her employer, the professional services vendor Cognizant, vaguely calls a “process executive.”

For this part of her training, Chloe has to moderate a Facebook post in front of her fellow trainees. When her turn comes, she walks to the front of the room, where a monitor displays a video that was posted to the world's largest social network. None of the trainees has seen it before, Chloe included. She presses play.

The video shows a man being murdered. Someone stabs him dozens of times while he screams and begs for his life. Chloe's task is to tell the room whether the video should be removed. She knows that section 13 of Facebook's community standards prohibits videos that depict the murder of one or more people. As Chloe explains this to the class, she hears her voice shaking.

Back at her seat, Chloe feels an overwhelming urge to sob. Another trainee goes up to evaluate the next post, but Chloe cannot concentrate. She leaves the room and begins to cry so hard that she has trouble breathing.

No one tries to comfort her. This is the job she was hired to do. And for the 1,000 people who, like Chloe, moderate Facebook content from an office in Phoenix, and for the 15,000 moderators around the world, today is just another working day.

Over the past three months, I interviewed a dozen current and former Cognizant employees in Phoenix. All of them had signed non-disclosure agreements in which they pledged not to discuss their work for Facebook, or even to acknowledge that Facebook is a Cognizant client. The secrecy is meant to protect employees from users who may be angry about a moderation decision and try to settle the matter with a known Facebook contractor. The agreements are also meant to stop the contractor's staff from sharing Facebook users' personal information, at a time when data privacy is under intense scrutiny.

But moderators told me that the secrecy also shields Cognizant and Facebook from criticism of their working conditions. Moderators are pressured not to discuss the emotional toll the job takes on them, even with loved ones, which deepens their feelings of isolation and anxiety. To protect them from retaliation by both their employers and Facebook users, I agreed to use pseudonyms for everyone named in this story except Bob Duncan, Cognizant's director of business process services, and Mark Davidson, Facebook's director of global partnership management.

Collectively, the employees describe a workplace that is perpetually on the verge of chaos. It is an environment where workers cope by telling each other dark jokes about suicide and smoking weed during breaks to numb their emotions. It is a place where an employee can be fired for making just a few mistakes a week, and where those who remain live in fear of former colleagues who may be plotting revenge.

It is a place where, in stark contrast to the perks Facebook lavishes on its own employees, team leaders micromanage moderators' every bathroom and prayer break; where workers, desperate for a hit of dopamine amid the misery, have been found having sex under the stairs and in the lactation room; where people develop anxiety while still in training and continue to struggle with trauma symptoms long after they leave; and where the counseling Cognizant offers its employees ends the moment they are let go.

The moderators told me that the conspiracy videos and memes they see every day gradually push them toward more radical views. One auditor campaigns for the idea that the Earth is flat. A former employee told me he has begun to question certain aspects of the Holocaust. Another former employee, who told me he has mapped every escape route out of his house and sleeps with a gun beside his bed, said: “I no longer believe 9/11 was a terrorist attack.”

Chloe cries for a while in the break room, and then in the bathroom, but she begins to worry that she is missing too much of the training. She had been eager for this job when she applied: she had recently graduated from college and had few other options. When she becomes a full-time moderator, she will earn $15 an hour, $4 more than the minimum wage in Arizona, where she lives, and more than she could expect from most retail jobs.

Eventually the tears stop and her breathing returns to normal. When she goes back, one of her fellow trainees is discussing another violent video. It shows a drone shooting at people from the air. Chloe watches the human figures go limp, and then die.

She leaves the room again.

Eventually a supervisor finds her in the bathroom and gives her a weak hug. Cognizant makes a counselor available to employees, but he is not on site all day and has not yet arrived at the office. Chloe waits for him for more than half an hour.

When the counselor sees her, he explains that she has had a panic attack. He tells her that once she graduates, she will have more control over the Facebook videos than she had in the training room. She will be able to pause a video, he says, or watch it without sound. Focus on your breathing, he says. Make sure you don't get too caught up in what you're watching.

“He said not to worry, and that I could probably still do this job,” Chloe says. Then she corrects herself: “He said that I should not worry and that I would be able to do this job.”

On May 3, 2017, Mark Zuckerberg announced the expansion of Facebook's “community operations” team. The new employees, who would join the existing army of 4,500 moderators, would be responsible for reviewing every post reported for violating the community standards. By the end of 2018, in response to criticism over the prevalence of violent and exploitative content on the social network, Facebook had more than 30,000 employees working on safety and security, about half of whom were content moderators.

Some moderators are full-time employees, but Facebook relies heavily on contractors. Ellen Silver, Facebook's vice president of operations, said in a blog post last year that using contractors allowed Facebook to “scale globally”: moderators can work around the clock and evaluate posts in more than 50 languages at 20 sites around the world.

Contract labor also has a practical advantage for Facebook: it is radically cheaper. The median Facebook employee earns $240,000 a year in salary, bonuses, and stock. A Cognizant moderator in Arizona, by contrast, earns just $28,800 a year. This arrangement helps Facebook maintain high profitability. In its most recent quarter, the company earned $6.9 billion in profit on $16.9 billion in revenue. And although Zuckerberg had warned investors that Facebook's investment in security would reduce its profitability, profit actually grew 61% over the previous year.

Since 2014, when Adrian Chen detailed the harsh working conditions of social network moderators for Wired, Facebook has been sensitive to the criticism that it is traumatizing some of its lowest-paid workers. In her blog post, Silver said that Facebook assesses whether potential moderators can “deal with violent imagery,” screening them for their ability to withstand it.

Bob Duncan, who oversees Cognizant's content moderation operations in North America, says recruiters carefully explain the graphic nature of the work to candidates. “We share examples of what they might see, so that they have an idea of it,” he says. “All of this is done to make sure people understand it. And if they decide the work is not right for them, they can make the appropriate decision.”

Until recently, most Facebook content moderation took place outside the United States. But as Facebook's demand for labor has grown, it has expanded its operations at home, adding sites in California, Arizona, Texas, and Florida.

The United States is the company's home country and one of the countries where it is most popular, says Davidson. American moderators are more likely to have the cultural context needed to evaluate US content involving harassment or hate speech, he says, because such posts often rely on country-specific slang.

Facebook has also worked to build what Davidson describes as “state-of-the-art facilities that replicate a Facebook office and convey its look and feel. That was important, because there is sometimes a perception in the market that our people sit in dark, dingy basements, lit only by the glow of their screens. That is really not the case.”

It is true that Cognizant's Phoenix office is neither dark nor dingy. And to the extent that it offers employees desks with computers on them, it may faintly resemble other Facebook offices. But while Facebook employees in Menlo Park work in a spacious, sunlit complex designed by Frank Gehry, its contractors in Arizona are packed into crowded rooms, where long lines for the few available bathroom stalls can eat up most of their break time. And while Facebook employees enjoy a great deal of flexibility in scheduling their work, a Cognizant employee's day is managed down to the second.

A moderator named Miguel arrives for the day shift shortly before it starts at 7 a.m. He is one of some 300 workers who will eventually filter into the office, which occupies two floors of a Phoenix business park.

Security guards watch the entrance to keep out disgruntled former employees and Facebook users who might confront moderators over deleted posts. Miguel badges into the office and heads to the lockers. There are not enough lockers to go around, so some employees leave their belongings in them overnight to make sure they still have one the next day.

The lockers line a narrow hallway that fills with people during breaks. To protect the privacy of the Facebook users whose posts they review, workers are required to store their phones in the lockers while they work.

Pens and paper are not allowed either, so that Miguel is not tempted to write down a Facebook user's personal information. The policy extends even to small scraps of paper, such as gum wrappers. Small items, such as hand lotion, must be kept in clear plastic cosmetic bags so that managers can see them.

To accommodate four daily shifts and high staff turnover, most people are not assigned a permanent desk on what Cognizant calls “the production floor.” Instead, Miguel finds a free workstation and logs in to a piece of software called the Single Review Tool, or SRT. When he is ready to work, he clicks a button labeled “resume reviewing” and dives into the queue of posts.

Last April, a year after many of the documents had already been published in the Guardian, Facebook made public the community standards by which it tries to govern its 2.3 billion monthly users. In the months that followed, Motherboard and Radiolab published detailed investigations into the challenges of moderating such an enormous volume of speech.

Those challenges include the sheer volume of posts; the need to train a global army of low-paid workers to apply a single set of rules consistently; near-daily changes and clarifications to those rules; moderators' lack of cultural or political context; missing context in posts, which makes their meaning ambiguous; and frequent disagreements among moderators about which rules apply in which cases.

Despite the difficulty of applying such a policy, Facebook has instructed Cognizant and its other contractors to prioritize a metric called “accuracy” above all else. Accuracy, in this case, means that when Facebook employees audit a subset of the contractors' decisions, they agree with them. The company has set an accuracy target of 95%, which has proved out of reach. Cognizant has never sustained that level for long: its score usually hovers a little above or below 90%, and at the time of publication it stood at about 92%.

Miguel diligently follows the policy, even though, he says, it does not always make sense to him. A post titled “my favorite n-----” is allowed to stay up, because under the policy it counts as “explicitly positive content.” The post “All autistic people should be sterilized” seems offensive to him, but it stays up too. Autism is not a “protected characteristic” like race or gender, so the post does not violate the policy. (A call to “sterilize all men” would have to be removed.)

In January, Facebook issued a policy update stating that moderators should take into account recent romantic upheaval in a user's life when evaluating posts that express hatred toward a gender. “I hate all men” has always violated the policy. But “I just broke up with my boyfriend, and I hate all men” does not.

Miguel works through the posts in his queue. They arrive in no particular order. Here is a racist joke. Here is a man having sex with a farm animal. Here is a video of a murder recorded by a drug cartel. Some of the posts Miguel reviews come from Facebook, where, he says, harassment and hate speech are more common; others come from Instagram, where users can post under pseudonyms and where violence, nudity, and sex are more common.

For each post, Miguel must pass two separate tests. First, he has to determine whether the post violates the community standards. Then he has to select the specific rule it breaks. If he correctly decides that a post should be removed but picks the “wrong” reason, it counts against his accuracy score.

Miguel is good at his job. He takes the correct action on each of these posts, striving to rid Facebook of its worst content while protecting as much legitimate (if unpleasant) speech as possible. He spends no more than 30 seconds on each post, and reviews up to 400 posts a day.

When Miguel has a question, he raises his hand, and a “subject matter expert” (SME), a contractor believed to have a fuller understanding of Facebook's policies who earns $1 an hour more than Miguel, comes over to help him. This costs Miguel time, and although he has no minimum quota of posts, managers track his productivity and ask him to explain himself when the number falls below 200.

Of the 1,500 or so decisions Miguel makes in a week, Facebook randomly selects 50 or 60 to audit. These posts are reviewed by a second Cognizant employee from the quality assurance team, known as a QA, who also earns $1 an hour more than Miguel. Full-time Facebook employees then audit a subset of the QA decisions, and an accuracy score is derived from all of these audits.

Miguel is skeptical of the resulting accuracy figure. “Accuracy is judged only by agreement. If the auditor and I both allow someone to sell cocaine, that counts as an ‘accurate’ decision, simply because we agreed,” he says. “This number is nonsense.”

Facebook's fixation on accuracy developed after years of criticism of how it handles moderation. With billions of posts appearing on the social network every day, Facebook feels pressure from all sides. In some cases, the company has been criticized for doing too little: for example, when United Nations investigators found that the social network had played a role in spreading hate speech during the persecution of the Rohingya in Myanmar. In others, it has been criticized for going too far: for example, when a moderator deleted a post that quoted the Declaration of Independence. (Thomas Jefferson was eventually granted a posthumous exception to Facebook's rule prohibiting phrases such as “Indian savages.”)

One reason moderators struggle to hit their accuracy target is that they must weigh several sources of truth for every enforcement decision.

The canonical source for enforcement is Facebook's community guidelines, which consist of two sets of documents: the published ones, and longer internal ones that go into more detail on complicated issues. These are supplemented by a 15,000-word secondary document called “Known Issues,” which offers additional commentary and guidance on thorny moderation questions, a kind of Talmud to the guidelines' Torah. “Known Issues” used to be a single long document that moderators had to cross-check every day; last year it was folded into the internal guidelines to make it easier to search.

The third source of truth is the discussion moderators have among themselves. During breaking news events such as mass shootings, moderators try to reach a consensus on whether a video meets the criteria for removal or should simply be marked as “disturbing.” But sometimes, moderators say, their consensus turns out to be wrong, and managers have to walk the floor explaining the correct decision.

The fourth source is perhaps the most problematic: Facebook's own internal tools for distributing information. While official policy changes usually arrive every other Wednesday, incremental guidance on developing issues is distributed almost daily. This guidance is often posted on Workplace, the enterprise version of Facebook that the company introduced in 2016. Like Facebook, Workplace has an algorithmic news feed that ranks posts by engagement. During breaking news events such as mass shootings, managers often post conflicting guidance on how to moderate particular pieces of content, and these posts appear on Workplace out of chronological order. Six current and former employees told me they had made moderation mistakes after seeing an outdated post at the top of their feed. At times it feels as if Facebook's own product is working against them. The irony is not lost on the moderators.

“It happened all the time,” says Diana, a former moderator. “It was terrible, one of the most frustrating things I personally had to deal with in order to do my job properly.” During national tragedies, such as the 2017 Las Vegas shooting, managers would tell moderators to remove a video and then, in a separate post a few hours later, to leave it up. Moderators made their decisions based on whichever Workplace post appeared first.

“It was a mess,” says Diana. “We were expected to make careful decisions, and all of this was messing up our numbers.”

Workplace posts about policy changes are sometimes supplemented by slide decks sent to Cognizant workers on special topics, often tied to grim anniversaries such as the Parkland shooting. But moderators told me that these presentations and other supporting materials often contain embarrassing errors. Over the past year, Facebook's materials confused members of the House of Representatives with senators, gave the wrong date for an election, and misnamed the school where the Parkland shooting took place.

And despite the constantly changing rules, moderators are given almost no margin for error. The job is like a high-stakes video game in which you start with 100 points, a perfect accuracy score, and then fight by hook or by crook to hold on to as many of them as you can. If you drop below 95, your job is at risk.

If a QA reviewer marks Miguel's decision as wrong, he can appeal it. Getting the QA to agree with you is known as “getting the point back.” In the short term, an error is whatever the QA says it is, so moderators have every reason to appeal each time. Recently, Cognizant made it even harder to get a point back by requiring moderators to get their appeal approved by an SME before it is sent to the QA.

Sometimes disputed questions are escalated to Facebook. But every moderator I interviewed said that Cognizant managers discourage employees from raising questions with the client, apparently out of fear that too many of them would annoy Facebook.

As a result, Cognizant has taken to inventing policy on the fly. For example, although the guidelines do not explicitly prohibit depictions of sexual asphyxiation, three former moderators told me that their team leader announced that such images were allowed only as long as no fingers were squeezing the neck.

Before workers are fired, they are offered coaching and placed in a remedial program meant to ensure they have mastered the guidelines. But three former moderators told me this often serves as a pretext for pushing workers out. Sometimes contractors who have lost too many points escalate their appeals to Facebook for a final decision. But the company, I was told, does not always get through the backlog of such requests before the employee in question has been fired.

Officially, moderators are prohibited from approaching QAs and lobbying them to reverse a decision. But it still happens regularly, two former QA employees told me.

One of them, Randy, would sometimes return to his car at the end of the workday to find moderators waiting for him. Five or six times a year, someone tried to intimidate him into changing his ruling. “They would confront me in the parking lot and threaten to beat the crap out of me,” he says. “Not once did anyone try to talk to me politely or respectfully. It was always: ‘You audited me wrong! That was a breast! With fully visible areolas!’”

Fearing for his safety, Randy began carrying a concealed gun to work. Fired employees regularly threatened to come back and get even with their former colleagues, and Randy believed some of them were serious. A former colleague told me he knew about the pistol Randy carried and approved of it, worrying that on-site security would not be enough in the event of an attack.

Cognizant's Duncan told me the company would investigate the various safety and management issues that moderators had described to me. He said that bringing a gun to work was against policy, and that if management had known about it, they would have intervened and taken action against the employee.

Randy quit after a year. He never had to fire the gun, but his anxiety has not let go. “Part of the reason I left was that I didn't feel safe even at home,” he says.

Before he can take a break, Miguel has to click a button in a browser extension to let the company know. (“That is standard practice in this kind of business,” Davidson told me, “so that you can track your workforce and know where everyone is.”)

Miguel is allowed two 15-minute breaks and one 30-minute lunch break. During breaks, he often runs into long lines for the restrooms. The several hundred people in the office share just one urinal and two stalls in the men's room, and three stalls in the women's. Cognizant eventually allowed workers to use restrooms on other floors, but getting there and back takes precious minutes. By the time Miguel has used the restroom and pushed through the crowd to his locker, he may have only five minutes left to check his phone before heading back to his desk.

Miguel also gets nine minutes a day of “wellness time,” which he is supposed to use if he feels traumatized and needs to step away from his desk. Several moderators told me they used these minutes to go to the restroom when the lines were shorter. But management eventually caught on and ordered staff not to use wellness time for restroom visits. (Recently, a group of Facebook moderators employed by Accenture in Austin complained about “inhumane” conditions related to breaks; Facebook attributed the problem to a misunderstanding of its policies.)

In Phoenix, Muslim workers who used their wellness time to perform one of their five daily prayers were ordered to stop and to pray during their regular breaks instead, current and former employees told me. The employees I spoke with did not understand why prayer was not considered a valid use of wellness time. Cognizant declined to comment on these incidents, but a person familiar with one case told me that an employee had requested 40 minutes for daily prayer, which the company considered excessive.

Cognizant employees are expected to cope with workplace stress by visiting counselors when they are available, by calling a hotline, and by using an employee assistance program that offers a handful of therapy sessions. More recently, yoga and other therapeutic activities have been added to the work week. But aside from the occasional visit to the counselor, six employees told me, these resources are inadequate. They said they coped with the stress of the job in other ways: sex, drugs, and offensive jokes.

Among the places where Cognizant employees have been found having sex: bathroom stalls, stairwells, the parking lot, and the lactation room. In early 2018, security sent managers a memo describing the behavior, a person familiar with the matter told me. In response, managers removed the locks from the door of the mother's room and from several other lockable rooms. (The mother's room locks again now, but anyone who wants to use it must first get a key from an administrator.)

Former moderator Sarah said that the secrecy surrounding the work, together with its difficulty, forges strong bonds between employees. “You get very close to your coworkers,” she says. “If you're not allowed to talk to your friends or family about your work, that creates distance. And you feel closer to these people. It feels like an emotional connection, when in reality you're just bonding over shared trauma.”

Employees also cope using drugs and alcohol, both on and off the premises. One former moderator, Lee, told me he smoked marijuana at work almost daily, using a vape pen. During breaks, he says, small groups of employees often went outside to smoke. (Medical marijuana is legal in Arizona.)

“I can't even count how many people I've smoked with,” says Lee. “Looking back on it, it's terrible; it breaks my heart. We'd go down, get high, and come back to work. That's not professional. Imagine the moderators of the world's largest social network getting high at work while they moderate content...” He trails off.

Lee, who worked as a moderator for about a year, was one of several workers who said the blackest kind of humor flourished there. Workers competed to send each other the most racist or offensive memes, he said, as a way of cheering each other up. As a member of an ethnic minority, Lee was a frequent target of these jokes, and he took the racist humor as good-natured. But over time, he began to worry about his mental health.

“We were doing things that darkened our souls, or whatever you want to call it,” he says. “What else can you do in that situation? The one thing that makes us laugh is actually harming us. I had to watch what jokes I told in public. I would accidentally say all sorts of offensive things, and then suddenly remember that I was in a grocery store and couldn't talk like that.”

Jokes about self-harm were also common. Sarah once heard a colleague answer a counselor's question about how he was doing with: “Drinking to forget.” (The counselor did not invite him to discuss it further.) On particularly bad days, Sarah says, people would talk about it being time to “go hang out on the roof,” the joke being that Cognizant workers might one day throw themselves off it.

Once, Sarah said, the moderators looked up from their computers and saw a man standing on the roof of a nearby office building. Most of them had seen suicides that began just this way. The moderators jumped up from their seats and rushed to the windows.

But the man didn't jump. Eventually everyone realized that he was one of their own colleagues, taking a break.

Like most of the former moderators I spoke with, Chloe quit after about a year of work.

Among other things, she had grown worried about the spread of conspiracy theories among her colleagues. One QA often discussed his belief that the Earth is flat with coworkers and “actively tried to recruit other employees” to his view, another moderator told me. One of Miguel's colleagues once mentioned the “Holohoax,” which Miguel took as a sign that the man denied the Holocaust.

Conspiracy theories were often warmly received in the office, six moderators told me. After the Parkland shooting last year, moderators were initially horrified by the attack. But the more conspiracy content people posted on Facebook and Instagram, the more often Chloe's colleagues expressed doubts.

“People started to believe the posts they were supposed to be moderating,” she says. “They were saying, ‘Oh my God, they weren't really there. Look at this CNN report on David Hogg: he's too old to be in high school.’ People started Googling things instead of working and digging into all these conspiracy theories. We were like, ‘Guys, no, this is the crazy garbage we're supposed to be moderating. What are you doing?’”

Most of all, though, Chloe worries about the job's long-term effects on mental health. Several moderators told me they had experienced secondary traumatic stress, a disorder that can result from witnessing other people's trauma. The disorder, whose symptoms can mirror those of post-traumatic stress disorder (PTSD), is often seen in physicians, psychotherapists, and social workers. People with secondary traumatic stress report anxiety, sleep loss, and dissociation.

Last year, a former Facebook moderator in California sued the company, saying that her work as a contractor with the firm Pro Unlimited had left her with PTSD. Her lawyers said she “seeks to protect herself from the dangers of psychological trauma resulting from Facebook's failure to provide a safe workplace for the thousands of contractors who are entrusted to provide a safe environment for Facebook users.” The case has not yet been resolved.

Chloe experienced trauma symptoms for months after leaving the job. She started to have a panic attack in a movie theater while watching Mother!, when a massacre on screen brought back the very first video she had faced in training. Another time, she was sleeping on the couch when she suddenly heard automatic gunfire and panicked. Someone in the house had turned on a TV show with a shootout. She says she “started freaking out” and begged them to turn it off.

The attacks make her think of her colleagues, especially those who never made it through training to start the job. “Many people simply can't get through the training,” she says. “They spend those four weeks, and at the end they are fired. They may have gone through exactly the same experience I did, but they won't have any access to counselors.”

Davidson told me that last week, FB began surveying a test group of moderators to measure what the company calls their “resilience”: their ability to bounce back after seeing traumatic content and continue doing their jobs. He says the company hopes to expand this test to all of its moderators around the world.

Randy also quit after about a year. Like Chloe, he was deeply affected by a video of a man being stabbed. The victim was about his age, and he remembers the man calling out for his mother as he died.

“I see it every day,” Randy says. “I developed a fear of knives. I like cooking, but it’s very hard for me to go back into the kitchen and be around knives.”

The job also changed the way he sees the world. After watching so many videos claiming that 9/11 was not a terrorist attack, he came to believe them. Conspiracy videos about the Las Vegas massacre were also very convincing, he says, and he now believes that multiple shooters carried out the attack. (The FBI found that it was the work of a single gunman.)

Randy now sleeps with a gun at his side. He mentally rehearses escape routes from his home in case of an attack. When he wakes up in the morning, he sweeps the whole house, gun in hand, looking for intruders.

He recently began seeing a new therapist after being diagnosed with PTSD and generalized anxiety disorder.

“I’m completely broken,” Randy says. “My mental state goes up and down. One day I’m completely happy; the next, I’m more or less a zombie. It’s not that I’m depressed. I’m just stuck.”

He adds: “I don’t think it’s possible to do this job and not come out of it with acute stress disorder or PTSD.”

Moderators I spoke with often complained that the on-site counselors were largely passive, relying on workers to recognize the signs of anxiety and depression themselves and then seek help.

“They did absolutely nothing for us,” Lee says, “other than expect us to figure out for ourselves when we were breaking down. Most of the people there are slowly deteriorating, and they don’t even notice it. That’s what kills me.”

Last week, after I told FB representatives about my conversations with moderators, the company invited me to Phoenix to see the office for myself. It was the first time FB had allowed a journalist to visit one of its American content moderation sites since the company began building dedicated facilities here two years ago. A representative who met me at the site said the stories I had been told did not reflect the day-to-day experience of most of its contractors, either in Phoenix or at its other sites around the world.

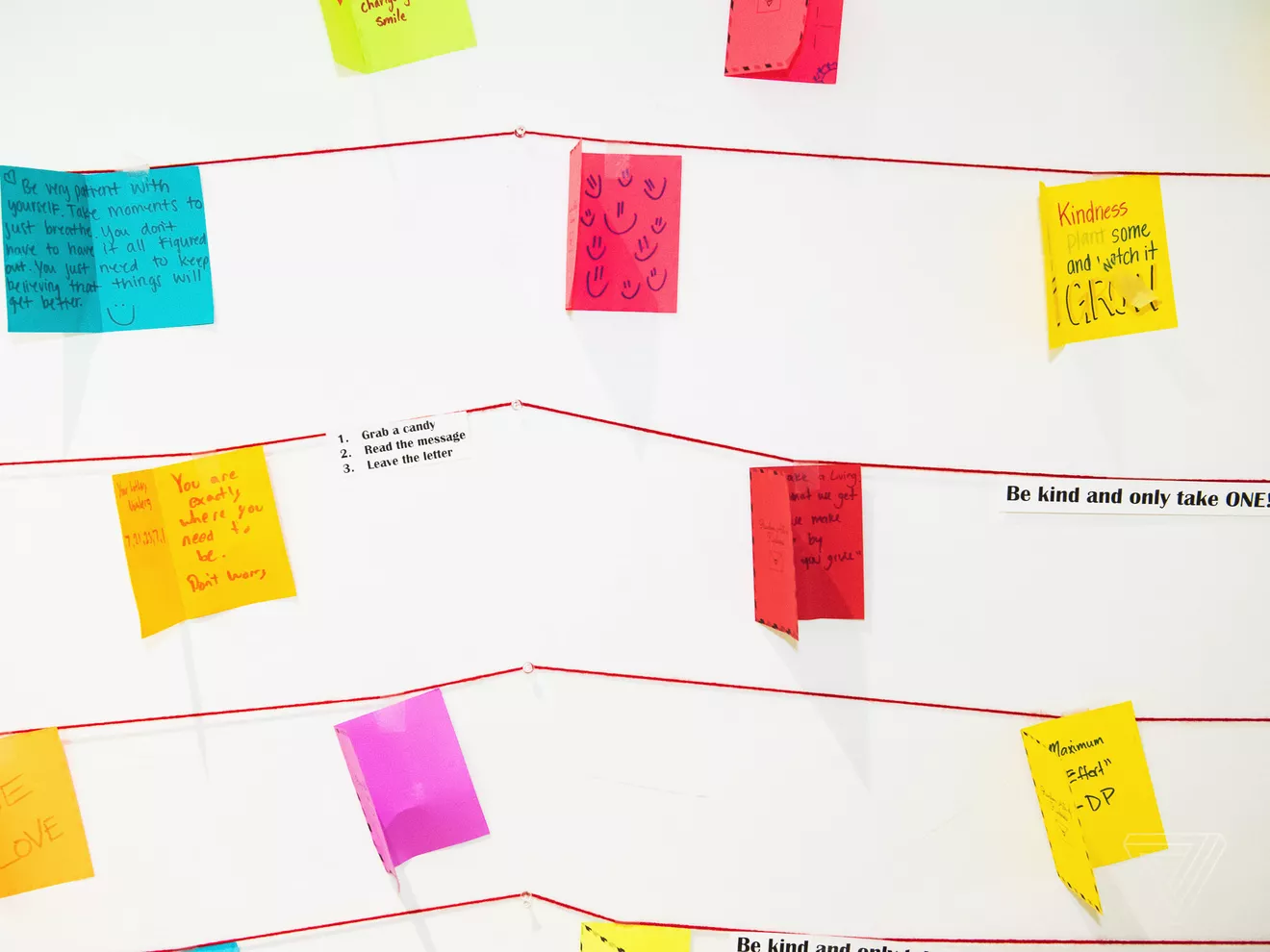

One source told me that the day before my visit to the office park where Cognizant is based, motivational posters had been hung on the walls. Overall, the place turned out to be far more colorful than I expected. A neon chart on the wall laid out the month’s activities, which read like a cross between a summer camp and a retirement home schedule: yoga, pet therapy, meditation, and a “Mean Girls”-inspired event called “On Wednesdays we wear pink.” The day of my visit was the last day of “Random Acts of Kindness Week,” during which employees were encouraged to write inspirational messages on colored cards, which were then posted on the walls with a piece of candy attached.

After meeting with managers from Cognizant and FB, I interview five employees who volunteered to speak with me. They file into a conference room together with the man who runs the office. With their boss sitting beside them, they acknowledge the difficulties of the job, but tell me they feel safe and supported, and believe the job will lead to better-paying positions, if not at FB, then at Cognizant.

Brad, a policy manager, tells me that most of the content he and his colleagues review is essentially harmless, and asks me not to overstate the psychological risks of the job.

“There’s a perception that we’re constantly bombarded with these graphic images and content, but in fact the opposite is true,” says Brad, who has worked at the office for almost two years. “Most of what we see is quite mild. It’s people ranting. It’s people reporting photos and videos simply because they don’t want to see them, not because there’s anything wrong with the content. That’s really most of what we see.”

When I ask about the difficulty of applying the policies, a moderator named Michael says he is constantly faced with tough calls. “There are infinite possibilities for what the next job will be, and that does create a kind of chaos,” he says. “But it also keeps the work interesting. You’ll never get through a whole shift already knowing the answer to every question.”

In any case, Michael says, he likes this job more than his previous one at Walmart, where customers often yelled at him. “No one yells at me here,” he says.

The moderators file out, and I am introduced to two staff counselors, one of whom started the on-site counseling program at this office. They ask me not to use their real names. They say they check in with every employee every day, and that the combination of on-site services, a hotline and an employee assistance program is enough to protect workers’ well-being.

When I ask about the risks of PTSD, a counselor I will call Logan tells me about a different psychological phenomenon: post-traumatic growth, in which people who survive traumatic events emerge stronger from the experience. He cites the example of Malala Yousafzai, the education activist who was shot in the head as a teenager by a gunman from the Tehrik-e Taliban Pakistan movement.

“It was an extremely traumatic event for her,” Logan says. “But she seems to have come out of it stronger and more resilient. She went on to win the Nobel Peace Prize. So there are many examples of people who have gone through difficult times and become stronger because of it.”

The visit ends with a tour, during which I walk the floor and talk with other employees. I am struck by how young they are: almost all of them seem to be in their twenties or early thirties. While I walk around, all work is paused so that I can’t accidentally see FB users’ personal information, so employees chat with their neighbors as I pass. I take note of the posters. One, from Cognizant, bears the enigmatic slogan “empathy at scale.” Another, made famous by FB chief operating officer Sheryl Sandberg, reads: “What would you do if you weren’t afraid?”

It makes me think of Randy and his gun.

Everyone I met at the office expressed deep concern for the employees and appeared to be doing everything they could for them, within the constraints of the system they all have to work in. FB prides itself on paying contractors at least 20% above the minimum wage at all of its moderation sites, providing full health insurance, and offering mental health support that exceeds what the wider call center industry provides.

And yet, the more moderators I spoke with, the more I came to doubt the wisdom of applying the call center model to content moderation. This model has long been standard across the tech giants: it is also used by Twitter and Google, and therefore by YouTube. Beyond saving money, outsourcing lets tech giants quickly expand their services into new markets and languages. But it also places critical questions of speech and safety in the hands of people who are paid as if they were answering customer service calls for an electronics retailer.

Every moderator I interviewed took great pride in their work and talked about it with deep seriousness. All they wanted was for FB employees to see them as colleagues, and to treat them as something close to equals.

“If we weren’t doing this job, FB would look awful,” Lee says. “We look at all of this on their behalf. And yes, damn it, sometimes we make the wrong call. But people don’t understand that there are real human beings working here.”

That people don’t understand this is, of course, by design. FB would rather talk about its advances in artificial intelligence and the prospect that, thanks to them, it will rely less on human moderators in the future.

But given the limitations of the technology and the endless variety of human speech, that day seems very far away. In the meantime, the call center model of content moderation is taking a heavy toll on many of the people who work in it. As the front line of a platform with billions of users, they perform a function that is critical to modern civil society, yet they are paid less than half of what many other front-line workers earn. They do the work for as long as they can, and then they quit, and the non-disclosure agreements they signed ensure that they retreat even further into the shadows.

To FB, it will seem as if they never worked there at all. And technically, they never did.

Source: https://habr.com/ru/post/442182/
