The book "Mastering Kubernetes. Orchestration of Container Architectures"

Hi, Habrozhiteli! We recently published a book on Kubernetes version 1.10. This post presents an excerpt, "Network Solutions for Kubernetes".

Networking is a broad topic. There are many ways to set up a network connecting devices, pods, and containers, and Kubernetes does not restrict you here. All the platform prescribes is a high-level network model with a flat address space for pods. Within that space you can implement many good solutions with different capabilities, suited to different environments. In this section we look at some of them and at how they fit into the Kubernetes network model.

Creating bridges in bare-metal clusters

The simplest environment is a bare-metal cluster on a regular physical Layer 2 network. To connect containers to such a network, you can use a standard Linux bridge. This is a rather painstaking procedure that requires experience with low-level Linux networking commands such as brctl, ip addr, ip route, ip link, nsenter, and so on. A good starting point is this guide: blog.oddbit.com/2014/08/11/four-ways-to-connect-a-docker/ (see the section With Linux Bridge devices).
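To make the bridge approach concrete, here is a minimal Python sketch that only builds (and never runs) the iproute2 and nsenter commands needed to attach one container's network namespace to a Linux bridge through a veth pair. The bridge name, veth names, PID, and addresses are hypothetical placeholders; `ip link set ... master` is used as the iproute2 equivalent of `brctl addif`.

```python
import ipaddress
from typing import List


def bridge_commands(bridge: str, pid: int, veth_host: str, veth_ctr: str,
                    bridge_cidr: str, ctr_cidr: str) -> List[str]:
    """Return (without running) the commands that attach the network namespace
    of process `pid` to a Linux bridge via a veth pair. Names are hypothetical."""
    bridge_if = ipaddress.ip_interface(bridge_cidr)
    ctr_if = ipaddress.ip_interface(ctr_cidr)
    # The container address must sit in the bridge's L2 subnet.
    if ctr_if.network != bridge_if.network:
        raise ValueError(f"{ctr_cidr} is not in the bridge subnet {bridge_if.network}")
    ns = f"nsenter -t {pid} -n"  # prefix: run a command inside the container's netns
    return [
        # Host side: create the bridge and give it the gateway address.
        f"ip link add {bridge} type bridge",
        f"ip addr add {bridge_cidr} dev {bridge}",
        f"ip link set {bridge} up",
        # veth pair: one end joins the bridge, the other moves into the netns.
        f"ip link add {veth_host} type veth peer name {veth_ctr}",
        f"ip link set {veth_host} master {bridge}",
        f"ip link set {veth_host} up",
        f"ip link set {veth_ctr} netns {pid}",
        # Container side: bring the interface up, address it, route via the bridge.
        f"{ns} ip link set {veth_ctr} up",
        f"{ns} ip addr add {ctr_cidr} dev {veth_ctr}",
        f"{ns} ip route add default via {bridge_if.ip}",
    ]


if __name__ == "__main__":
    for cmd in bridge_commands("br0", 4242, "veth-h", "veth-c",
                               "10.10.0.1/24", "10.10.0.2/24"):
        print(cmd)
```

The printed commands would have to be run as root on the node. For a second container you would reuse the existing bridge and repeat only the veth and namespace steps; outbound NAT and firewall rules are deliberately left out.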

Contiv

Contiv is a general-purpose network add-on. It is designed to connect containers via CNI and can be used with Docker (directly), Mesos, Docker Swarm, and, of course, Kubernetes. Contiv also handles network policies, partially duplicating the corresponding Kubernetes object. Here are some of the features of this add-on:


- support for the libnetwork CNM and CNI specifications;

- a feature-rich policy model for secure, predictable application deployment;

- best-in-class container performance;

- multi-tenancy, isolation, and overlapping subnets;

- integration with IPAM and service discovery;

- wide range of physical topologies:

a) Layer 2 (VLAN) protocols;

b) Layer 3 protocols (BGP);

c) overlay networks;

d) Cisco SDN (ACI);

- IPv6 support;

- scalable policy and route distribution;

- integration with application templates, including the following:

a) Docker-compose;

b) Kubernetes deployment manager;

c) built-in east-west load balancing for microservices;

d) traffic isolation for storage, control (e.g., etcd/Consul), network, and management traffic.

Contiv has many features. Because it covers such a wide range of tasks and supports so many platforms, I am not sure it is the best choice for Kubernetes.

Open vSwitch

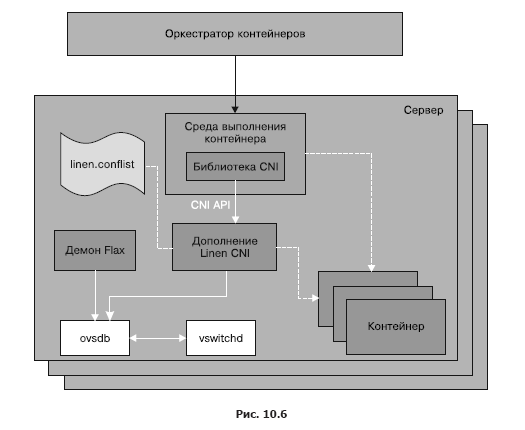

Open vSwitch is a mature solution for creating virtual (software) switches, backed by many major vendors. The Open Virtual Network (OVN) system lets you build various virtual network topologies. It has a dedicated add-on for Kubernetes, but that add-on is very difficult to configure (see the github.com/openvswitch/ovn-kubernetes manual). The Linen CNI add-on has fewer features, but its configuration is much simpler: github.com/John-Lin/linen-cni. The structure of Linen CNI is shown in Figure 10.6.

Open vSwitch can integrate physical servers, VMs, and pods/containers into a single logical network. It supports both overlay and physical modes.

Here are some of its key features:

- standard 802.1Q VLAN model with trunk and access ports;

- NIC bonding with or without LACP on the upstream switch;

- NetFlow, sFlow® and mirroring for improved visibility;

- QoS (Quality of Service) configuration plus policing;

- Geneve, GRE, VXLAN, STT, and LISP tunneling;

- 802.1ag connectivity fault management;

- OpenFlow 1.0 plus numerous extensions;

- transactional configuration database with C and Python bindings;

- high-performance forwarding using a Linux kernel module.
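The 802.1Q access/trunk model from the first item can be illustrated with a simplified Python sketch. It is a generic model (an access port carries one VLAN untagged; a trunk carries tagged traffic for an allowed set of VLANs plus an optional native VLAN sent untagged), not the exact Open vSwitch rules, which offer additional vlan_mode options. Port names and VLAN numbers are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional, Set, Tuple


@dataclass
class Port:
    name: str
    mode: str                       # "access" or "trunk"
    vlan: Optional[int] = None      # access VLAN, or native (untagged) VLAN of a trunk
    allowed: Set[int] = field(default_factory=set)  # trunk VLANs; empty set = all

    def carries(self, vlan: int) -> bool:
        if self.mode == "access":
            return vlan == self.vlan
        return not self.allowed or vlan in self.allowed


def classify(port: Port, tag: Optional[int]) -> Optional[int]:
    """VLAN of a frame entering `port` with 802.1Q VLAN ID `tag` (None = untagged).
    Returns None when the frame is dropped."""
    if port.mode == "access":
        # Access ports expect untagged frames and assign the port's VLAN.
        return port.vlan if tag is None else None
    vlan = port.vlan if tag is None else tag  # untagged trunk traffic -> native VLAN
    return vlan if vlan is not None and port.carries(vlan) else None


def forward(src: Port, tag: Optional[int], dst: Port) -> Tuple[bool, Optional[int]]:
    """Whether a frame from `src` may leave through `dst`, and the tag it leaves with."""
    vlan = classify(src, tag)
    if vlan is None or not dst.carries(vlan):
        return False, None
    if dst.mode == "access" or vlan == dst.vlan:
        return True, None   # access ports and the native VLAN send untagged frames
    return True, vlan       # every other VLAN leaves a trunk tagged


if __name__ == "__main__":
    vm1 = Port("vm1", "access", vlan=10)
    vm2 = Port("vm2", "access", vlan=20)
    uplink = Port("uplink", "trunk", allowed={10, 20})
    print(forward(vm1, None, uplink))  # VLAN 10 frame, tagged on the trunk
    print(forward(vm1, None, vm2))     # different VLANs, not delivered
```

The point of the model is isolation: two VMs or pods on the same switch only see each other's frames when their ports share a VLAN, while a single trunk can carry many VLANs to another switch.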

Nuage Networks VCS

Virtualized Cloud Services (VCS) from Nuage is a highly scalable, policy-based platform for building software-defined networks (SDN). It is an enterprise-grade solution built on the open-source Open vSwitch (for the data plane) and a feature-rich SDN controller based on open standards.

The Nuage platform joins Kubernetes and third-party environments (virtual and bare-metal) into transparent overlay networks and lets you define fine-grained policies for individual applications. Its real-time analytics engine provides visibility and security monitoring for Kubernetes applications.

In addition, all VCS components can be installed as containers. There are no specific hardware requirements.

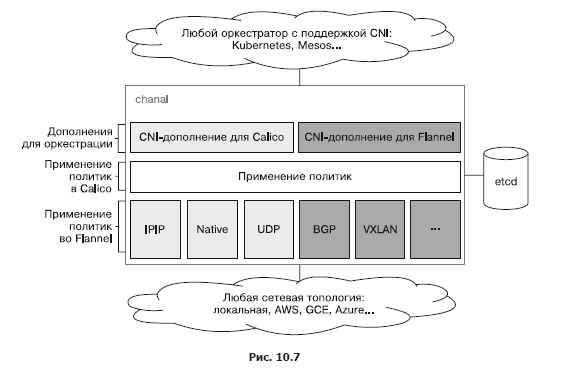

Canal

Canal is a blend of two open source projects, Calico and Flannel, hence the name. Flannel, developed by the CoreOS team, provides container networking, while Calico handles network policy. The two were originally developed independently, but users wanted to run them together. The open source Canal project is currently a deployment template that installs Calico and Flannel as separate CNI plugins. Tigera, founded by the creators of Calico, maintains both projects and had planned a tighter integration. However, since it launched its own solution for secure networking between applications in Kubernetes, the priority has shifted from building a unified product to simplifying the configuration and integration of Flannel and Calico. Figure 10.7 shows the current state of Canal and how it relates to orchestration platforms such as Kubernetes and Mesos.

Note that when Canal is integrated with Kubernetes, it does not access etcd directly; it goes through the Kubernetes API server instead.

Flannel

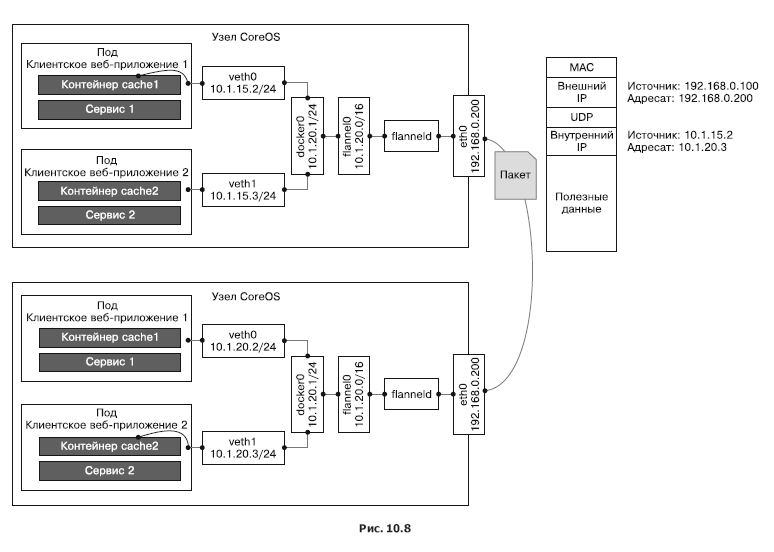

Flannel is a virtual network that gives each node a subnet for use by container runtimes. A flanneld agent runs on every node and leases a subnet from a reserved address space stored in the etcd cluster. Packets between containers, and ultimately between nodes, are forwarded by one of several backends. The most common one uses UDP over a TUN device and by default tunnels traffic through port 8285 (remember to open it in your firewall).

Figure 10.8 details the components of a Flannel network, the virtual network devices it creates, and how they communicate with the node and the pod through the docker0 bridge. It also shows how UDP packets are encapsulated and travel between nodes.
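The subnet-lease idea can be sketched with Python's standard ipaddress module: carve the reserved address space (for example, a Network of 10.244.0.0/16 with a SubnetLen of 24, the kind of values flannel reads from its network configuration) into one block per node. This is a simplified, in-memory assumption: the real flanneld keeps leases in etcd, expires them, and honors options such as SubnetMin and SubnetMax, none of which is modeled here. Node names are hypothetical.

```python
import ipaddress
from typing import Dict, List


def allocate_leases(network: str, subnet_len: int,
                    nodes: List[str]) -> Dict[str, ipaddress.IPv4Network]:
    """Give every node its own /subnet_len block of an IPv4 `network`, first-free order.
    Simplified: no etcd, no lease expiry, no SubnetMin/SubnetMax."""
    free = ipaddress.ip_network(network).subnets(new_prefix=subnet_len)
    leases = {}
    for node in nodes:
        try:
            leases[node] = next(free)
        except StopIteration:
            raise RuntimeError(f"{network} has no /{subnet_len} left for {node}") from None
    return leases


if __name__ == "__main__":
    for node, subnet in allocate_leases("10.244.0.0/16", 24,
                                        ["node-a", "node-b", "node-c"]).items():
        print(node, subnet)
```

Each node then hands out pod addresses from its own block, so pod IPs never collide across nodes without any per-pod coordination between them.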

Other backends are also supported:

- vxlan - encapsulates packets using in-kernel VXLAN;

- host-gw - creates IP routes to subnets via the IP addresses of the remote hosts. Note that this requires direct Layer 2 connectivity between the hosts running Flannel;

- aws-vpc - creates IP routes in the Amazon VPC route table;

- gce - creates IP routes in the Google Compute Engine network;

- alloc - performs only subnet allocation, with no packet forwarding;

- ali-vpc - creates IP routes in the Alicloud VPC route table.
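The host-gw backend is simple enough to sketch directly: given each node's pod subnet and host IP, every node adds one plain route per remote node, and pod packets travel unencapsulated. The Python below is a hypothetical illustration (function name, node names, and addresses are made up) that emits those ip route commands and rejects next hops outside the shared host subnet, as a rough stand-in for the Layer 2 adjacency requirement in the list.

```python
import ipaddress
from typing import Dict, List


def host_gw_routes(local: str, node_ips: Dict[str, str],
                   leases: Dict[str, str], host_net: str) -> List[str]:
    """Routes node `local` needs so that each remote node's pod subnet is
    reached directly via that node's host IP (no encapsulation)."""
    segment = ipaddress.ip_network(host_net)
    routes = []
    for node, ip in sorted(node_ips.items()):
        if node == local:
            continue  # the local pod subnet is served by the local bridge
        # host-gw needs each next hop to be directly reachable; sharing the
        # host subnet is used here as a stand-in for "same L2 segment".
        if ipaddress.ip_address(ip) not in segment:
            raise ValueError(f"{node} ({ip}) is outside {segment}; host-gw cannot route to it")
        routes.append(f"ip route add {leases[node]} via {ip}")
    return routes


if __name__ == "__main__":
    leases = {"node-a": "10.244.0.0/24", "node-b": "10.244.1.0/24", "node-c": "10.244.2.0/24"}
    ips = {"node-a": "192.168.1.10", "node-b": "192.168.1.11", "node-c": "192.168.1.12"}
    for route in host_gw_routes("node-a", ips, leases, "192.168.1.0/24"):
        print(route)
```

Because the packet leaves the node unchanged and is merely routed, this design avoids encapsulation overhead; the price is that it cannot cross a router between nodes.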

Calico Project

Calico is a complete solution for container networking and network security. It integrates with all major orchestration platforms and runtimes:

- Kubernetes (CNI plugin);

- Mesos (CNI plugin);

- Docker (libnetwork plugin);

- OpenStack (Neutron plugin).

Calico can also be deployed on premises or in a public cloud with its full feature set. Network policy enforcement can be tailored to each workload, which gives precise control over traffic and ensures that packets always reach their intended destinations. Calico can automatically import network policies from orchestration platforms; in fact, it is widely used to implement network policy in Kubernetes.

Romana

Romana is a modern solution for container networking. It was originally designed for the cloud and operates at Layer 3, relying on standard IP address management techniques. Romana lets you isolate entire networks by creating gateways and routes for them on Linux hosts. Working at Layer 3 requires no encapsulation. Network policy is applied to all endpoints and services as a distributed firewall. Romana simplifies on-premises and hybrid deployments across cloud platforms, since there is no need to configure virtual overlay networks.

The virtual IP addresses recently added to Romana let on-premises users expose their services on Layer 2 LANs through external IPs and service specifications.

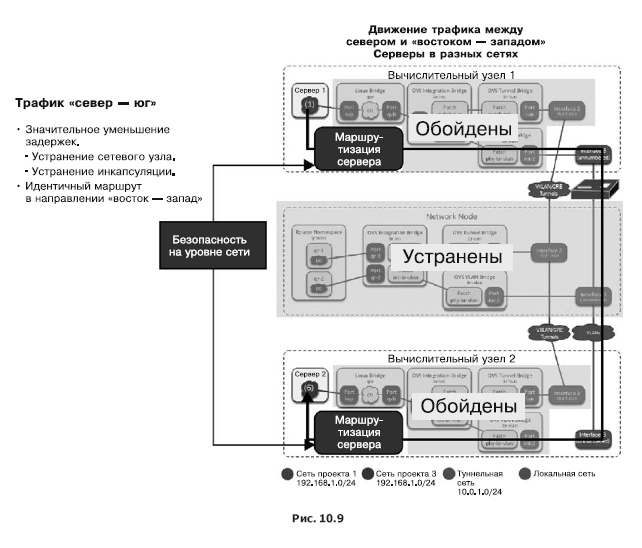

The Romana developers claim that their approach significantly improves performance. Figure 10.9 shows how dropping VXLAN encapsulation eliminates a lot of overhead.
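A rough way to see where such savings come from is header arithmetic. The Python sketch below assumes an IPv4 underlay with a 1500-byte MTU and standard header sizes (IPv4 20 bytes without options, UDP 8, VXLAN 8, Ethernet 14). It computes how much room each scheme leaves for pod packets and what share of each packet is tunnel header. It only counts bytes: the CPU cost of encapsulation and decapsulation is not modeled, and the numbers say nothing about Romana's own measurements.

```python
from typing import Dict

# Bytes added in front of the pod's own IP packet, over an IPv4 underlay.
OVERHEAD: Dict[str, int] = {
    "routed, no encapsulation": 0,
    "UDP over TUN (outer IP 20 + UDP 8)": 20 + 8,
    "VXLAN (outer IP 20 + UDP 8 + VXLAN 8 + inner Ethernet 14)": 20 + 8 + 8 + 14,
}


def pod_mtu(underlay_mtu: int, overhead: int) -> int:
    """Largest pod IP packet that still fits in one underlay packet."""
    return underlay_mtu - overhead


def header_share(pod_packet: int, overhead: int) -> float:
    """Fraction of each encapsulated packet that is pure tunnel header."""
    return overhead / (pod_packet + overhead)


if __name__ == "__main__":
    for name, extra in OVERHEAD.items():
        mtu = pod_mtu(1500, extra)
        print(f"{name:60} pod MTU {mtu:4}  "
              f"header share: full-size {header_share(mtu, extra):.1%}, "
              f"64-byte {header_share(64, extra):.1%}")
```

The share is small for full-size packets but large for small ones, which is why chatty microservice traffic feels encapsulation overhead most; the reduced pod MTU is the other visible cost of an overlay.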

Weave Net

The main selling points of Weave Net are ease of use and zero configuration. It uses VXLAN encapsulation and runs micro-DNS on each node. As a developer, you work at a high level of abstraction: once you name your containers, Weave Net lets you connect to them on standard ports and use the corresponding services. This helps when migrating existing applications to containers and microservice platforms. Weave Net provides a CNI plugin for Kubernetes and Mesos. Starting with Kubernetes 1.4, you can integrate Weave Net with a single command that deploys a DaemonSet:

kubectl apply -f https://git.io/weave-kube

The Weave Net pods on each node are responsible for attaching every other pod to the Weave network. Weave Net supports the network policy API, which makes it a complete and easy-to-configure solution.

Effective use of network policies

Kubernetes network policy is designed to control traffic directed to specific pods and namespaces. When you manage hundreds of deployed microservices (as is often the case with Kubernetes), organizing the network connections between pods becomes a priority. It is important to understand that this mechanism is only indirectly related to security. An attacker who penetrates the internal network can likely create their own pod that satisfies the network policy and then communicate freely with other pods. In the previous section we looked at various Kubernetes networking solutions, focusing on network interfaces. Here we focus on the network policy implemented on top of those solutions, although the two are closely intertwined.

Network Policy Architecture in Kubernetes

A network policy specifies how groups of pods may communicate with each other and with other network endpoints. The NetworkPolicy resource uses labels to select pods and defines a list of allow rules that permit traffic to the selected pods (in addition to what the isolation policy of the given namespace already allows).
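To make the selection semantics concrete, here is a simplified Python model of ingress rules. It assumes only matchLabels selectors and podSelector peers in the policy's own namespace (no namespaceSelector, ipBlock, ports, or egress). It follows the Kubernetes rule that a pod selected by no policy accepts all traffic, while a selected pod accepts only what at least one rule of a selecting policy allows. The class and function names are hypothetical, not part of any Kubernetes client library.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Pod:
    name: str
    namespace: str
    labels: Dict[str, str]


@dataclass
class Policy:
    namespace: str
    pod_selector: Dict[str, str]  # matchLabels of the pods the policy applies to; {} = all
    allow_from: List[Dict[str, str]] = field(default_factory=list)  # ingress podSelector peers


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """matchLabels semantics: every key/value in the selector must be present."""
    return all(labels.get(k) == v for k, v in selector.items())


def ingress_allowed(src: Pod, dst: Pod, policies: List[Policy]) -> bool:
    selecting = [p for p in policies
                 if p.namespace == dst.namespace and matches(p.pod_selector, dst.labels)]
    if not selecting:
        return True  # no policy selects dst, so it is not isolated
    # Policies are additive: one matching peer in any selecting policy suffices.
    # A bare podSelector peer only matches pods in the policy's own namespace.
    return any(src.namespace == p.namespace and matches(peer, src.labels)
               for p in selecting for peer in p.allow_from)


if __name__ == "__main__":
    web = Pod("web", "shop", {"app": "web"})
    db = Pod("db", "shop", {"app": "db"})
    batch = Pod("batch", "shop", {"app": "batch"})
    db_policy = Policy("shop", {"app": "db"}, [{"app": "web"}])
    print(ingress_allowed(web, db, [db_policy]))    # web -> db is allowed
    print(ingress_allowed(batch, db, [db_policy]))  # batch -> db is blocked
```

Note that any pod labeled app=web in the shop namespace is admitted, whoever created it. This is exactly why network policy is only indirectly a security mechanism.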

More information about the book is available on the publisher's website.


Habrozhiteli get a 20% discount with the coupon code Kubernetes.

When you buy the paper edition, an electronic copy of the book is sent to you by e-mail.

P.S.: 7% of the book's price goes toward translating new computer books; the list of books submitted to the printer is here.

Source: https://habr.com/ru/post/441910/
