A Primer on Running Istio

We have been running Istio at Namely for about a year. Back then it had only just been released. Performance in our Kubernetes cluster had dropped dramatically, we wanted distributed tracing, and we adopted Istio to run Jaeger and get to the bottom of it. The service mesh fit so well into our infrastructure that we decided to invest in the tool.

It has been a painful road, but we have studied Istio inside and out. This is the first post in a series in which I will cover how Istio integrates with Kubernetes and what we have learned about how it works. Sometimes we will wander into the technical weeds, but not too far. More posts will follow.

What is Istio?

Istio is a service mesh configuration tool. It reads the state of the Kubernetes cluster and pushes updates to L7 proxies (HTTP and gRPC) that are injected as sidecars into Kubernetes pods. These sidecars are Envoy containers that read their configuration from the Istio Pilot API (a gRPC service) and route traffic through themselves. With a powerful L7 proxy under the hood, we get metrics, traces, retry logic, circuit breaking, load balancing, and canary deployments.

Let's start from the beginning: Kubernetes

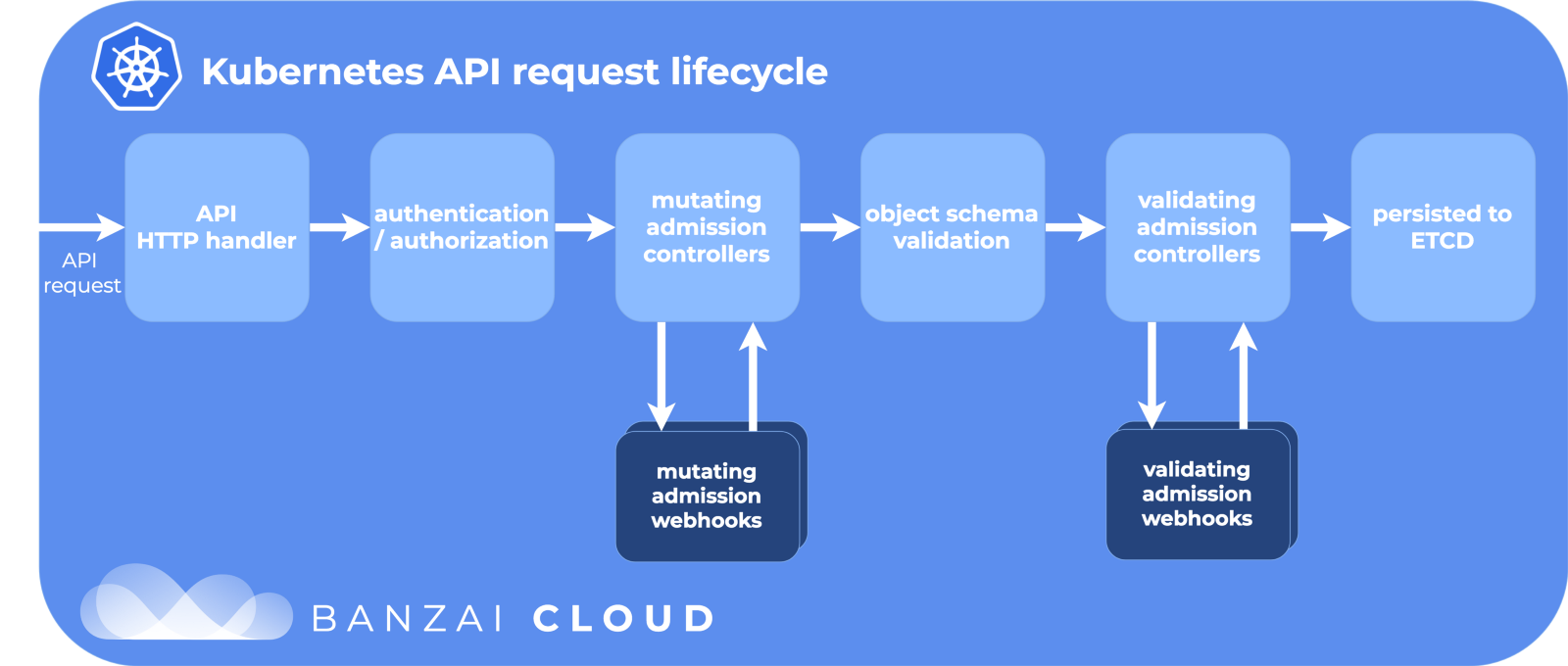

In Kubernetes, we create a pod using a Deployment or a StatefulSet. Or it may be just a "vanilla" pod without a higher-level controller. Kubernetes then works to maintain the desired state: it creates pods on nodes in the cluster and makes sure they are started and restarted. When a pod is created, Kubernetes passes it through the API lifecycle, makes sure every stage succeeds, and only then actually creates the pod in the cluster.
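"Maintaining the desired state" is a control loop: compare what should exist with what does exist, then act on the difference. Below is a toy sketch of a single reconciliation pass under simplifying assumptions: `ToyCluster` and `reconcile` are hypothetical names, pod state is a plain dict, and real controllers work through watches, ReplicaSets, and the API server rather than mutating state directly.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ToyCluster:
    """Hypothetical stand-in for cluster state: pod name -> phase ("Running" or "Failed")."""
    pods: Dict[str, str] = field(default_factory=dict)


def reconcile(desired: int, prefix: str, cluster: ToyCluster) -> List[str]:
    """One pass of a simplified control loop that converges pods to the desired count."""
    actions: List[str] = []
    # Restart any pod that has failed.
    for name in sorted(cluster.pods):
        if cluster.pods[name] == "Failed":
            cluster.pods[name] = "Running"
            actions.append(f"restart {name}")
    # Create missing pods, picking the first free name of the form <prefix>-<n>.
    while len(cluster.pods) < desired:
        n = 0
        while f"{prefix}-{n}" in cluster.pods:
            n += 1
        cluster.pods[f"{prefix}-{n}"] = "Running"
        actions.append(f"create {prefix}-{n}")
    # Delete surplus pods, lexicographically last name first.
    while len(cluster.pods) > desired:
        name = sorted(cluster.pods)[-1]
        del cluster.pods[name]
        actions.append(f"delete {name}")
    return actions


cluster = ToyCluster({"web-0": "Running", "web-1": "Failed"})
print(reconcile(3, "web", cluster))  # ['restart web-1', 'create web-2']
print(reconcile(3, "web", cluster))  # [] because the state already matches
```

Running the pass a second time changes nothing. That idempotence is what lets a controller run the loop forever.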

API lifecycle stages:

Thanks to Banzai Cloud for the great diagram.

One of these stages is mutating admission webhooks. This is a separate part of the Kubernetes lifecycle in which resources are modified before they are committed to etcd, the source of truth for Kubernetes configuration. This is where Istio works its magic.

Mutating admission webhooks

When a pod is created (via kubectl or a Deployment), it goes through this lifecycle, and mutating admission webhooks modify it before it is released into the wider world.

When Istio is installed, istio-sidecar-injector is added as a mutating webhook configuration resource:

```
$ kubectl get mutatingwebhookconfiguration
NAME                     AGE
istio-sidecar-injector   87d
```

And its configuration:

```yaml
apiVersion: admissionregistration.k8s.io/v1beta1
kind: MutatingWebhookConfiguration
metadata:
  labels:
    app: istio-sidecar-injector
    chart: sidecarInjectorWebhook-1.0.4
    heritage: Tiller
  name: istio-sidecar-injector
webhooks:
- clientConfig:
    caBundle: redacted
    service:
      name: istio-sidecar-injector
      namespace: istio-system
      path: /inject
  failurePolicy: Fail
  name: sidecar-injector.istio.io
  namespaceSelector:
    matchLabels:
      istio-injection: enabled
  rules:
  - apiGroups:
    - ""
    apiVersions:
    - v1
    operations:
    - CREATE
    resources:
    - pods
```

This tells Kubernetes to send every pod-creation event to the istio-sidecar-injector service in the istio-system namespace, as long as the namespace has the label istio-injection=enabled. The injector adds two more containers to the PodSpec: a temporary one that sets up the proxy rules and one that does the proxying itself. The sidecar injector builds these containers from a template stored in the istio-sidecar-injector ConfigMap. This process is also called sidecar injection.
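The matching rule and the mutation can be sketched in a few lines. This is a simplified model, not Istio's injector: it only handles `matchLabels` (no `matchExpressions`), and the container images are placeholders (`example/...`). The container names `istio-init` and `istio-proxy` match what Istio injects. What is real is the shape of the result: a mutating webhook answers the API server with a JSON Patch, base64-encoded in the `patch` field of the AdmissionReview response with `patchType: JSONPatch`.

```python
import base64
import json

# Values taken from the MutatingWebhookConfiguration above.
NAMESPACE_SELECTOR = {"istio-injection": "enabled"}
RULE = {"apiGroups": [""], "apiVersions": ["v1"],
        "operations": ["CREATE"], "resources": ["pods"]}


def webhook_applies(ns_labels, group, version, operation, resource):
    """True if the API server would call the injector for this request (matchLabels only)."""
    if any(ns_labels.get(k) != v for k, v in NAMESPACE_SELECTOR.items()):
        return False
    return (group in RULE["apiGroups"] and version in RULE["apiVersions"]
            and operation in RULE["operations"] and resource in RULE["resources"])


def injection_patch(pod):
    """Base64 JSON Patch adding an init container and a proxy sidecar (placeholder images)."""
    ops = []
    if "initContainers" not in pod["spec"]:
        # "/-" appends to an array, so the array must exist first.
        ops.append({"op": "add", "path": "/spec/initContainers", "value": []})
    ops.append({"op": "add", "path": "/spec/initContainers/-",
                "value": {"name": "istio-init", "image": "example/proxy-init"}})
    ops.append({"op": "add", "path": "/spec/containers/-",
                "value": {"name": "istio-proxy", "image": "example/proxy"}})
    return base64.b64encode(json.dumps(ops).encode()).decode()


pod = {"spec": {"containers": [{"name": "app", "image": "example/app"}]}}
if webhook_applies({"istio-injection": "enabled"}, "", "v1", "CREATE", "pods"):
    print(json.loads(base64.b64decode(injection_patch(pod))))
```

Because `failurePolicy` is `Fail`, a pod whose webhook call errors out is rejected rather than created without a sidecar.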

Sidecar pods

Sidecars are the tricks of our magician, Istio. Istio wires them in so cleverly that, from the outside, it looks like magic if you don't know the details. Knowing those details is useful when you suddenly need to debug network requests.

Init and Proxy Containers

Kubernetes has temporary, one-time init containers that run before the main containers. They are used to bundle resources, run database migrations, or, as in Istio's case, set up network rules.

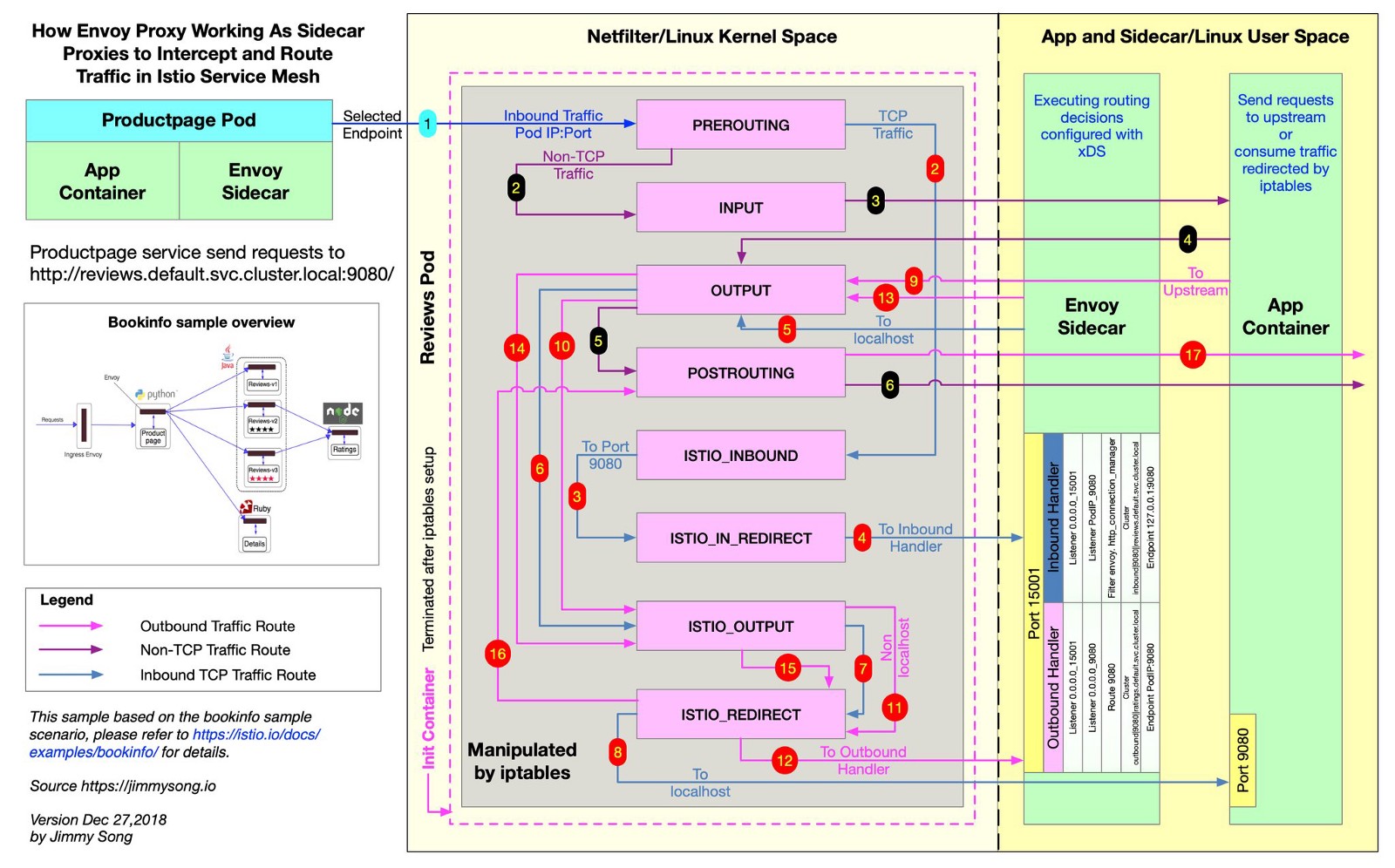

Istio uses Envoy to proxy all of a pod's requests along the right routes. To do this, Istio creates iptables rules that send inbound and outbound traffic directly to Envoy, which then proxies it to its destination. Traffic takes a small detour, but in return you get distributed tracing, request metrics, and policy enforcement. In this file from the Istio repository, you can see how Istio creates the iptables rules.
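The effect of those rules on an outbound connection can be modeled as a small decision function. This is a simplified sketch, not a translation of Istio's script. It assumes the Istio 1.0-era defaults where Envoy runs as UID 1337 and captures traffic on port 15001, and it ignores the inbound rules, port exclusions, and IP-range settings. The UID check is what keeps Envoy's own outgoing connections from being looped back into itself.

```python
import ipaddress
from typing import Tuple

ENVOY_UID = 1337            # assumption: UID the proxy runs as (Istio 1.0 default)
ENVOY_CAPTURE_PORT = 15001  # assumption: port Envoy listens on for redirected traffic


def outbound_destination(dst_ip: str, dst_port: int, owner_uid: int) -> Tuple[str, int]:
    """Where an outbound TCP connection lands under a simplified ISTIO_OUTPUT chain."""
    if owner_uid == ENVOY_UID:
        # Envoy's own upstream connections leave the pod untouched.
        return dst_ip, dst_port
    if ipaddress.ip_address(dst_ip).is_loopback:
        # Local traffic inside the pod is not captured.
        return dst_ip, dst_port
    # Everything else is REDIRECTed to the sidecar's capture port.
    return "127.0.0.1", ENVOY_CAPTURE_PORT


# An application process (hypothetical UID 1000) calling the addressvalidator ClusterIP:
print(outbound_destination("10.3.0.56", 50051, 1000))
```

Envoy then recovers the original destination from the redirected socket and applies its routing rules to it.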

@jimmysongio drew an excellent diagram of how the iptables rules and the Envoy proxy interact:

Envoy receives all inbound and outbound traffic, so all traffic effectively passes through Envoy, as in the diagram. The Istio proxy is another container added to every pod modified by the Istio sidecar injector. This container runs the Envoy process, which receives all traffic from the pod (with some exceptions, such as traffic from your Kubernetes cluster).

The Envoy process discovers all routes through the Envoy v2 API, which Istio implements.

Envoy and Pilot

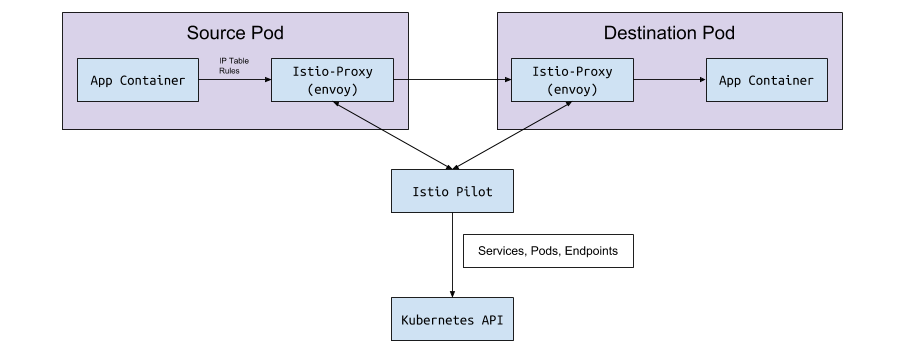

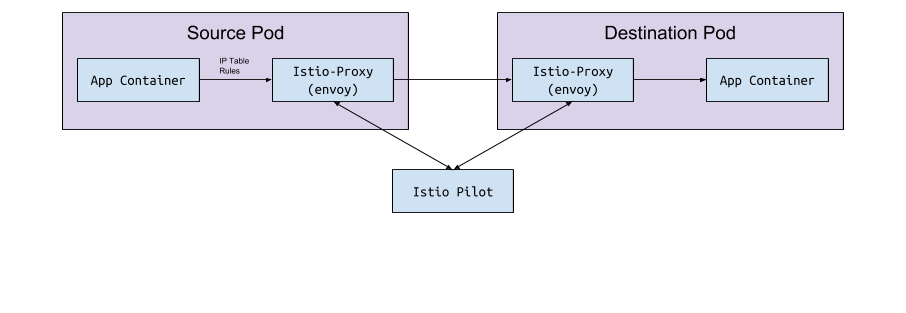

Envoy itself has no logic for discovering the pods and services in the cluster. It is a data plane and needs a control plane to direct it. Envoy's startup configuration points it at the host and port of a service that serves this configuration over a gRPC API. Istio implements this gRPC API through its Pilot service. Envoy connects to the API based on the sidecar configuration injected by the mutating webhook. The API holds all the traffic rules Envoy needs for discovery and routing in the cluster. This is the service mesh.
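In Envoy's v2 API, that configuration is split into resource types. Each type is served by its own discovery service and identified by a type URL, and Pilot serves all of them over one aggregated stream (ADS, the `ads: {}` that shows up in Envoy config dumps). The sketch below only builds the shape of a `DiscoveryRequest` as a Python dict. It does not open a gRPC stream, the Kubernetes analogies in the comments are approximate, and the node ID is a made-up value.

```python
# Envoy v2 xDS resource types and their type URLs (envoy.api.v2 package).
XDS_TYPE_URLS = {
    "CDS": "type.googleapis.com/envoy.api.v2.Cluster",                # clusters, roughly Services
    "EDS": "type.googleapis.com/envoy.api.v2.ClusterLoadAssignment",  # endpoints, roughly pod IPs
    "LDS": "type.googleapis.com/envoy.api.v2.Listener",               # listeners
    "RDS": "type.googleapis.com/envoy.api.v2.RouteConfiguration",     # HTTP routes
}


def discovery_request(node_id, service, resource_names=(), version_info="", nonce=""):
    """Plain-dict view of an envoy.api.v2.DiscoveryRequest (field names follow the proto)."""
    return {
        "version_info": version_info,  # last version this proxy accepted ("" on first request)
        "node": {"id": node_id},
        "resource_names": list(resource_names),
        "type_url": XDS_TYPE_URLS[service],
        "response_nonce": nonce,       # echoes the nonce of the response being acknowledged
    }


# Hypothetical node ID; the cluster name has the form Istio uses for outbound clusters.
req = discovery_request("example-node", "EDS",
                        ["outbound|50051||addressvalidator.applications.svc.cluster.local"])
print(req["type_url"])
```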

Data exchange "pod <-> Pilot"

Pilot connects to the Kubernetes cluster, reads the cluster state, and watches for updates. It tracks the pods, services, and endpoints in the Kubernetes cluster so that it can hand the desired configuration to every Envoy sidecar connected to it. It is the bridge between Kubernetes and Envoy.

When pods, services, or endpoints are created or updated in Kubernetes, Pilot finds out about it and sends the desired configuration to all connected Envoy instances.
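The watch-and-push cycle can be sketched with an in-memory model. `ToyPilot` is hypothetical: real Pilot watches the Kubernetes API and streams xDS responses over gRPC. The shape is similar, though. Every change to a service's endpoints bumps a version and is fanned out to every connected proxy, and a newly connected proxy first receives the current state.

```python
from typing import Callable, Dict, List

Push = Callable[[int, Dict[str, List[str]]], None]


class ToyPilot:
    """Hypothetical model: track endpoints per service and push snapshots to all proxies."""

    def __init__(self) -> None:
        self.version = 0
        self.endpoints: Dict[str, List[str]] = {}
        self.proxies: Dict[str, Push] = {}

    def connect(self, proxy_id: str, push: Push) -> None:
        self.proxies[proxy_id] = push
        push(self.version, dict(self.endpoints))  # a new proxy gets the current state

    def on_endpoints_event(self, service: str, addresses: List[str]) -> None:
        # Called whenever Kubernetes reports a change to a service's endpoints.
        self.endpoints[service] = list(addresses)
        self.version += 1
        for push in self.proxies.values():
            push(self.version, dict(self.endpoints))


received: Dict[str, list] = {"a": [], "b": []}
pilot = ToyPilot()
pilot.connect("a", lambda v, cfg: received["a"].append((v, cfg)))
pilot.on_endpoints_event("addressvalidator", ["10.2.26.243:50051"])
pilot.connect("b", lambda v, cfg: received["b"].append((v, cfg)))
```

Pushing full snapshots keeps the sketch simple; the version number is what lets a proxy tell a stale update from a fresh one.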

What configuration is sent?

What configuration does Envoy get from Istio Pilot?

By default, Kubernetes handles networking with a Service, which manages Endpoints. You can list the endpoints with:

```
kubectl get endpoints
```

This is a list of all IPs and ports in the cluster and the objects behind them (usually pods created by a Deployment). Istio needs this information to configure Envoy and send it routing information.
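An Endpoints object groups addresses and ports into subsets, and every address in a subset serves every port in that subset. Flattening it into `ip:port` pairs gives the per-instance view Istio works with. Here is a minimal sketch, using a trimmed version of the `addressvalidator` Endpoints object from our cluster as sample data; `endpoint_pairs` is our own helper, not a Kubernetes or Istio API.

```python
from typing import List

addressvalidator_ep = {
    "kind": "Endpoints",
    "metadata": {"name": "addressvalidator"},
    "subsets": [{
        "addresses": [{"ip": "10.2.26.243",
                       "targetRef": {"kind": "Pod", "name": "addressvalidator-64885ccb76-87l4d"}}],
        "ports": [{"name": "grpc", "port": 50051, "protocol": "TCP"}],
    }],
}


def endpoint_pairs(endpoints: dict) -> List[str]:
    """Every (address, port) combination in every subset, as "ip:port" strings."""
    pairs = []
    for subset in endpoints.get("subsets", []):
        # Only ready "addresses" are used; "notReadyAddresses" are skipped.
        for addr in subset.get("addresses", []):
            for port in subset.get("ports", []):
                pairs.append(f"{addr['ip']}:{port['port']}")
    return pairs


print(endpoint_pairs(addressvalidator_ep))  # ['10.2.26.243:50051']
```

The output of `kubectl get endpoints <name> -o json`, once parsed with `json.loads`, has the same structure and can be passed in directly.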

Services, listeners and routes

When you create a Service in a Kubernetes cluster, you specify labels that select all matching pods. When you send traffic to the Service's IP, Kubernetes picks a pod for that traffic. For example, the command

```
curl my-service.default.svc.cluster.local:3000
```

will first find the virtual IP assigned to my-service in the default namespace, and traffic sent to that IP is forwarded to a pod that matches the Service's label selector.
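Two pieces of that lookup can be sketched directly: how the DNS name is built from the service and namespace (assuming the default `cluster.local` cluster domain), and how an equality-based label selector decides which pods belong to the service. The pod names and labels below are hypothetical.

```python
from typing import Dict, List


def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """DNS name Kubernetes gives a Service: <service>.<namespace>.svc.<cluster-domain>."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def selects(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based selector: every key/value pair must be present in the pod's labels."""
    return all(labels.get(key) == value for key, value in selector.items())


pods = {  # hypothetical pods in the default namespace
    "my-service-abc12": {"app": "my-service", "tier": "api"},
    "my-service-def34": {"app": "my-service"},
    "other-xyz99": {"app": "other"},
}
backends: List[str] = [name for name, labels in pods.items()
                       if selects({"app": "my-service"}, labels)]
print(service_fqdn("my-service", "default"), backends)
```

Extra labels on a pod do not prevent a match; only missing or different selector keys do.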

Istio and Envoy change this logic slightly. Istio configures Envoy based on the services and endpoints in the Kubernetes cluster and uses Envoy's smart routing and load-balancing features to bypass the Kubernetes Service. Instead of proxying to a single virtual IP, Envoy connects directly to the pod IPs. To do this, Istio maps the Kubernetes configuration onto Envoy configuration.
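The difference is where the load-balancing decision is made. With a plain Service, kube-proxy's rules on the node pick a pod behind the virtual IP. With Istio, the client's sidecar holds the endpoint list and picks a pod IP itself. Here is a minimal sketch of that client-side choice, using round robin as the simplest policy; we are not claiming it matches the policy Envoy is configured with in a given installation, and the pod IPs are hypothetical.

```python
import itertools
from typing import Iterable, List


class RoundRobinBalancer:
    """Client-side load balancing across pod IPs, bypassing the Service's virtual IP."""

    def __init__(self, endpoints: Iterable[str]) -> None:
        self.update(endpoints)

    def update(self, endpoints: Iterable[str]) -> None:
        # Called when the control plane pushes a new endpoint list.
        self._endpoints: List[str] = list(endpoints)
        self._cycle = itertools.cycle(self._endpoints)

    def pick(self) -> str:
        if not self._endpoints:
            # Envoy reports this case as 503 with the UH (no healthy upstream) flag.
            raise RuntimeError("no healthy upstream")
        return next(self._cycle)


lb = RoundRobinBalancer(["10.2.26.243:50051", "10.2.31.7:50051"])
print([lb.pick() for _ in range(3)])
```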

Kubernetes, Istio, and Envoy use slightly different terminology, and it is not immediately clear how their concepts map onto one another.

Services

A Kubernetes Service maps to a cluster in Envoy. An Envoy cluster contains a list of endpoints, that is, the IPs (or hostnames) of the instances that handle requests. To see the clusters configured in a pod's Istio sidecar, run `istioctl proxy-config cluster <pod-name>`. This command shows the current state of things from that sidecar's point of view. Here is an example from our environment:

```
$ istioctl proxy-config cluster taxparams-6777cf899c-wwhr7 -n applications
SERVICE FQDN                                          PORT    SUBSET   DIRECTION   TYPE
BlackHoleCluster                                      -       -        -           STATIC
accounts-grpc-gw.applications.svc.cluster.local       80      -        outbound    EDS
accounts-grpc-public.applications.svc.cluster.local   50051   -        outbound    EDS
addressvalidator.applications.svc.cluster.local       50051   -        outbound    EDS
```

The same services exist in this namespace:

```
$ kubectl get services
NAME                   TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)
accounts-grpc-gw       ClusterIP   10.3.0.91    <none>        80/TCP
accounts-grpc-public   ClusterIP   10.3.0.202   <none>        50051/TCP
addressvalidator       ClusterIP   10.3.0.56    <none>        50051/TCP
```

How does Istio know which protocol a service uses? It derives the protocol from the name field of each port entry in the service manifest.

```
$ kubectl get service accounts-grpc-public -o yaml
apiVersion: v1
kind: Service
metadata:
  name: accounts-grpc-public
spec:
  ports:
  - name: grpc
    port: 50051
    protocol: TCP
    targetPort: 50051
```

If the port name is grpc or starts with the grpc- prefix, Istio configures the service for HTTP/2. We learned the hard way that Istio relies on the port name when we broke proxy configs by not specifying the http or grpc prefixes...
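The port-name rule fits in a tiny function. This sketch assumes the Istio 1.0-era convention `<protocol>[-<suffix>]` and the protocol list from the Istio docs of that time; newer releases changed these rules, so treat `KNOWN_PROTOCOLS` as an assumption. Anything unrecognized, including an unnamed port, falls back to plain TCP, which is exactly how a gRPC service ends up without HTTP/2 handling.

```python
# Assumed protocol names recognized in port names (Istio 1.0-era docs).
KNOWN_PROTOCOLS = {"grpc", "http", "http2", "https", "mongo", "redis", "tcp", "tls", "udp"}
HTTP2_PROTOCOLS = {"grpc", "http2"}


def port_protocol(port_name: str) -> str:
    """Protocol implied by a Service port name: "grpc" and "grpc-public" both mean gRPC."""
    prefix = port_name.split("-", 1)[0].lower()
    return prefix if prefix in KNOWN_PROTOCOLS else "tcp"


def uses_http2(port_name: str) -> bool:
    """Whether the generated Envoy cluster would get HTTP/2 protocol options."""
    return port_protocol(port_name) in HTTP2_PROTOCOLS


for name in ["grpc", "grpc-public", "http-web", "rpc", ""]:
    print(repr(name), port_protocol(name), uses_http2(name))
```

A quick audit over `kubectl get services -o json` with this function would flag ports that silently fall back to TCP.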

If you use kubectl port-forward to reach the Envoy admin page, you can see that Pilot configures the accounts-grpc-public endpoints as an Envoy cluster with the HTTP/2 protocol. This confirms our assumption:

```
$ kubectl -n applications port-forward otherpod-dc56885ff-dqc6t 15000:15000 &
$ curl http://localhost:15000/config_dump | yq r -
...
- cluster:
    circuit_breakers:
      thresholds:
      - {}
    connect_timeout: 1s
    eds_cluster_config:
      eds_config:
        ads: {}
      service_name: outbound|50051||accounts-grpc-public.applications.svc.cluster.local
    http2_protocol_options:
      max_concurrent_streams: 1073741824
    name: outbound|50051||accounts-grpc-public.applications.svc.cluster.local
    type: EDS
...
```

Port 15000 is the Envoy admin page, available on every sidecar.
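Reading the config dump by eye gets old quickly. Here is a sketch that pulls the HTTP/2 clusters out of it and splits their names, which follow the `direction|port|subset|fqdn` pattern visible above. It assumes the JSON layout of the Envoy v2 admin API of that era (`configs`, then `dynamic_active_clusters`, then `cluster`). Newer Envoy versions wrap resources in typed messages and move the HTTP/2 settings elsewhere, so treat the field names as assumptions. The fetch helper relies on the port-forward to the admin port being active.

```python
import json
import urllib.request
from typing import List, NamedTuple


class ClusterName(NamedTuple):
    direction: str  # "outbound" or "inbound"
    port: int
    subset: str     # empty when no subset applies
    fqdn: str


def parse_cluster_name(name: str) -> ClusterName:
    direction, port, subset, fqdn = name.split("|")
    return ClusterName(direction, int(port), subset, fqdn)


def http2_clusters(config_dump: dict) -> List[ClusterName]:
    """Clusters configured with http2_protocol_options in a v2-era /config_dump."""
    found = []
    for section in config_dump.get("configs", []):
        for entry in section.get("dynamic_active_clusters", []):
            cluster = entry.get("cluster", {})
            if "http2_protocol_options" in cluster and cluster.get("name", "").count("|") == 3:
                found.append(parse_cluster_name(cluster["name"]))
    return found


def fetch_config_dump(url: str = "http://localhost:15000/config_dump") -> dict:
    # Requires `kubectl port-forward <pod> 15000:15000` to be running.
    with urllib.request.urlopen(url) as resp:
        return json.load(resp)
```

Usage against a live sidecar: `for c in http2_clusters(fetch_config_dump()): print(c.fqdn, c.port)`.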

Listeners

Envoy listeners correspond to Kubernetes endpoints and are what let traffic into the pod. The address validation service has a single endpoint:

```
$ kubectl get ep addressvalidator -o yaml
apiVersion: v1
kind: Endpoints
metadata:
  name: addressvalidator
subsets:
- addresses:
  - ip: 10.2.26.243
    nodeName: ip-10-205-35-230.ec2.internal
    targetRef:
      kind: Pod
      name: addressvalidator-64885ccb76-87l4d
      namespace: applications
  ports:
  - name: grpc
    port: 50051
    protocol: TCP
```

Therefore, the address validator has one listener on port 50051:

```
$ kubectl -n applications port-forward addressvalidator-64885ccb76-87l4d 15000:15000 &
$ curl http://localhost:15000/config_dump | yq r -
...
dynamic_active_listeners:
- version_info: 2019-01-13T18:39:43Z/651
  listener:
    name: 10.2.26.243_50051
    address:
      socket_address:
        address: 10.2.26.243
        port_value: 50051
    filter_chains:
    - filter_chain_match:
        transport_protocol: raw_buffer
...
```

Routes

In Istio, instead of the standard Kubernetes Ingress object, you use a more abstract and more powerful custom resource: VirtualService. A VirtualService maps routes to upstream clusters and binds them to a gateway. This is similar to using a Kubernetes Ingress together with an Ingress Controller.

At Namely, we use the Istio ingress gateway for all internal gRPC traffic:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: grpc-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
  - hosts:
    - '*'
    port:
      name: http2
      number: 80
      protocol: HTTP2
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: grpc-gateway
spec:
  gateways:
  - grpc-gateway
  hosts:
  - '*'
  http:
  - match:
    - uri:
        prefix: /namely.address_validator.AddressValidator
    retries:
      attempts: 3
      perTryTimeout: 2s
    route:
    - destination:
        host: addressvalidator
        port:
          number: 50051
```

At first glance, this example is hard to make sense of. What is not visible here is that the Gateway is bound to the istio-ingressgateway pods through the istio: ingressgateway selector. In this example, the ingress gateway accepts traffic for all hosts on port 80 using the HTTP2 protocol. The VirtualService implements the routes for this gateway: it matches the /namely.address_validator.AddressValidator prefix and sends those requests to the upstream addressvalidator service on port 50051, retrying up to 3 times with a 2-second timeout per attempt.
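The routing and retry halves of that VirtualService can be sketched separately. This is a simplified model under our own assumptions: routes are checked in order and the first prefix match wins (as with VirtualService `http` rules), the short host `addressvalidator` expands to the `applications` namespace, the gRPC method name `Validate` is hypothetical, and the retry loop ignores timeouts and backoff. The retriable outcomes mirror the `retry_on` conditions Istio generated for this route. Note that `attempts: 3` means up to three retries, so at most four calls in total.

```python
from typing import Callable, List, Optional

# (uri prefix, destination host, port) taken from the VirtualService above.
ROUTES = [("/namely.address_validator.AddressValidator", "addressvalidator", 50051)]
RETRY_ON = {"5xx", "connect-failure", "refused-stream"}


def route(path: str, namespace: str = "applications") -> Optional[str]:
    """Envoy cluster for a request path, or None (Envoy would reply 404 with flag NR)."""
    for prefix, host, port in ROUTES:  # first matching rule wins
        if path.startswith(prefix):
            return f"outbound|{port}||{host}.{namespace}.svc.cluster.local"
    return None


def call_with_retries(attempt: Callable[[], str], attempts: int = 3) -> List[str]:
    """Call attempt() once, then retry up to `attempts` times on a retriable outcome."""
    outcomes = []
    for _ in range(1 + attempts):
        outcome = attempt()
        outcomes.append(outcome)
        if outcome not in RETRY_ON:  # success or a non-retriable error ends the loop
            break
    return outcomes


print(route("/namely.address_validator.AddressValidator/Validate"))
results = iter(["5xx", "connect-failure", "ok"])
print(call_with_retries(lambda: next(results)))  # ['5xx', 'connect-failure', 'ok']
```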

If we port-forward to the istio-ingressgateway pod and look at its Envoy configuration, we can see what the VirtualService does:

```
$ kubectl -n istio-system port-forward istio-ingressgateway-7477597868-rldb5 15000
...
- match:
    prefix: /namely.address_validator.AddressValidator
  route:
    cluster: outbound|50051||addressvalidator.applications.svc.cluster.local
    timeout: 0s
    retry_policy:
      retry_on: 5xx,connect-failure,refused-stream
      num_retries: 3
      per_try_timeout: 2s
    max_grpc_timeout: 0s
  decorator:
    operation: addressvalidator.applications.svc.cluster.local:50051/namely.address_validator.AddressValidator*
...
```

What we googled while digging into Istio

Getting 503 or 404 errors

The reasons vary, but these are the usual suspects:

- The application's sidecars cannot communicate with Pilot (check that Pilot is running).

- The wrong protocol is specified in the Kubernetes Service manifest.

- The VirtualService/Envoy configuration sends the route to the wrong upstream cluster. Start with the edge service where you expect incoming traffic and examine its Envoy logs, or use something like Jaeger to find the errors.

What do NR/UH/UF mean in Istio proxy logs?

- NR - No Route: no route is configured for the request.

- UH - Upstream Unhealthy: there are no healthy upstream hosts.

- UF - Upstream Failure: the connection to the upstream failed.

Read more in the Envoy documentation.
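When grepping proxy logs, it helps to extract the response code and flags mechanically. This sketch assumes lines that start like Envoy's default access-log format (`[%START_TIME%] "%REQ(:METHOD)% %REQ(X-ENVOY-ORIGINAL-PATH?:PATH)% %PROTOCOL%" %RESPONSE_CODE% %RESPONSE_FLAGS% ...`). If your installation uses a custom format, adjust the regular expression. The sample log line is made up.

```python
import re
from typing import List, Optional, Tuple

FLAG_MEANINGS = {
    "NR": "no route configured for the request",
    "UH": "no healthy upstream hosts in the cluster",
    "UF": "upstream connection failure",
}
# '"METHOD PATH PROTOCOL" CODE FLAGS' as in Envoy's default access-log format.
REQUEST_RE = re.compile(r'"(\S+) (\S+) (\S+)" (\d{3}) (\S+)')


def response_flags(line: str) -> Optional[Tuple[int, List[str]]]:
    """(response code, flags) from one access-log line, or None if the line does not match."""
    m = REQUEST_RE.search(line)
    if m is None:
        return None
    code, flags = int(m.group(4)), m.group(5)
    return code, ([] if flags == "-" else flags.split(","))


def explain(flags: List[str]) -> List[str]:
    return [f"{f}: {FLAG_MEANINGS.get(f, 'see the Envoy access-log docs')}" for f in flags]


line = ('[2019-01-13T18:39:43.000Z] "POST /namely.address_validator.AddressValidator/Validate HTTP/2" '
        '503 UH 0 0 0 - "-" "grpc-go" "example-request-id" "addressvalidator:50051" "-"')
code, flags = response_flags(line)
print(code, explain(flags))
```

TCP connections log a response code of 0, which this HTTP-oriented pattern deliberately does not match.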

High availability with Istio

- Add NodeAffinity to the Istio components to spread their pods evenly across availability zones, and increase the minimum number of replicas.

- Run a newer version of Kubernetes with Horizontal Pod Autoscaling, so that the most important pods scale with load.
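For reference, the Horizontal Pod Autoscaler documents its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. No change is made when the ratio is within a tolerance (0.1 by default), and the result is clamped to the configured minimum and maximum. The sketch below covers only that arithmetic; the real controller also aggregates metrics across pods, handles missing metrics, and stabilizes scale-downs. The replica counts and CPU numbers are hypothetical.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int, max_replicas: int, tolerance: float = 0.1) -> int:
    """HPA-style replica count for one metric, clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current                     # close enough to the target: do nothing
    else:
        desired = math.ceil(current * ratio)  # scale proportionally, rounding up
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical: 2 Pilot replicas averaging 90% CPU against a 60% target, min 2, max 5.
print(desired_replicas(2, 90, 60, 2, 5))  # 3
```

Keeping `min_replicas` at 2 or more is what preserves availability when load is low.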

Why doesn't my CronJob terminate?

When the main workload finishes, the sidecar container keeps running, so the job never completes. To work around the problem, disable the sidecar for CronJobs by adding the sidecar.istio.io/inject: "false" annotation to the pod template.
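The annotation has to go on the pod template inside the CronJob, not on the CronJob object itself, and its value must be the string "false", since annotation values are strings. Here is a minimal sketch that patches a CronJob manifest (parsed from YAML or JSON) accordingly. The manifest contents are hypothetical, and the `spec.jobTemplate.spec.template` path follows the `batch/v1beta1` CronJob schema.

```python
import copy


def disable_sidecar(cronjob: dict) -> dict:
    """Copy of a CronJob manifest whose pods opt out of Istio sidecar injection."""
    patched = copy.deepcopy(cronjob)
    template = (patched.setdefault("spec", {})
                .setdefault("jobTemplate", {})
                .setdefault("spec", {})
                .setdefault("template", {}))
    annotations = template.setdefault("metadata", {}).setdefault("annotations", {})
    annotations["sidecar.istio.io/inject"] = "false"  # a string, not a boolean
    return patched


nightly = {  # hypothetical CronJob
    "apiVersion": "batch/v1beta1",
    "kind": "CronJob",
    "metadata": {"name": "nightly-report"},
    "spec": {"schedule": "0 3 * * *",
             "jobTemplate": {"spec": {"template": {"spec": {
                 "restartPolicy": "OnFailure",
                 "containers": [{"name": "report", "image": "example/report"}]}}}}},
}
print(disable_sidecar(nightly)["spec"]["jobTemplate"]["spec"]["template"]["metadata"])
```

Writing `false` unquoted in YAML produces a boolean, which the API server rejects for an annotation; this is an easy mistake to make by hand.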

How do we install Istio?

We use Spinnaker for deployments, but for Istio we usually take the latest Helm charts, tweak them, render them with helm template -f values.yml, and commit the output to GitHub so we can review the changes before applying them with kubectl apply -f -. This way we don't accidentally change CRDs or APIs between versions.

Thanks to Bobby Tables and Michael Hamrah for help writing the post.


Source: https://habr.com/ru/post/441616/
