Data Warehouse Architecture: Traditional and Cloud

Hi, Habr! Much has been written on the topic of data warehousing architecture, but I have not yet met so succinctly and succinctly as in the article that I accidentally came across.

I suggest you to get acquainted with this article in my translation. Comments and additions are welcome!

(Image source)

So the data storage architecture is changing. In this article, we will look at the comparison of traditional corporate data warehouses and cloud solutions with lower initial cost, improved scalability and performance.

')

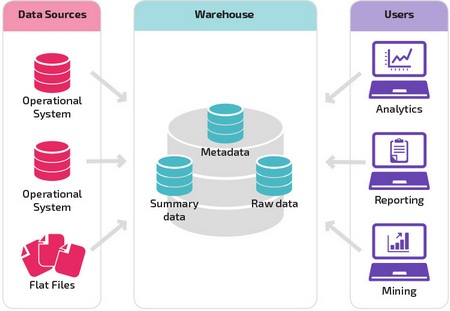

A data warehouse is a system that collects data from various sources within a company and uses this data to support management decision making.

Companies are increasingly moving to cloud storage instead of traditional local systems. Cloud storage has a number of differences from traditional storage:

The following concepts highlight some of the well-established ideas and design principles used to create traditional data warehouses.

Quite often, the traditional data warehouse architecture has a three-tier structure consisting of the following levels:

Two pioneers of data warehousing: Bill Inmon and Ralph Kimball offer different design approaches.

Ralph Kimball ’s approach is based on the importance of data marts that are repositories of data belonging to specific business areas. A data warehouse is simply a combination of different data marts that facilitate reporting and analysis. The Kimball data warehouse project uses a bottom-up approach.

Bill Inmon's approach is based on the fact that the data warehouse is the centralized storage of all corporate data. With this approach, the organization first creates a normalized data warehouse model . It then creates dimension data marts based on the storage model. This is known as a downstream approach to the data warehouse.

In the traditional architecture, there are three common data warehouse models: virtual storage, data mart, and corporate data warehouse:

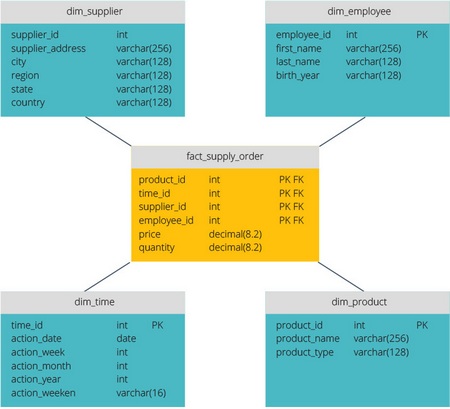

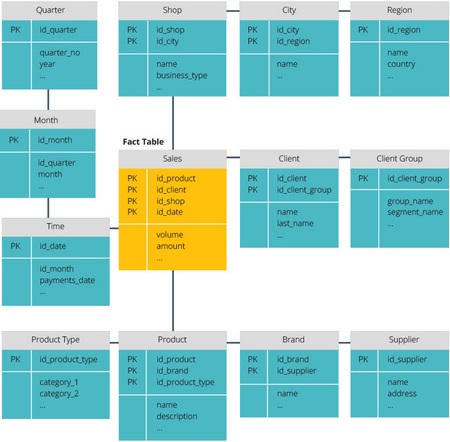

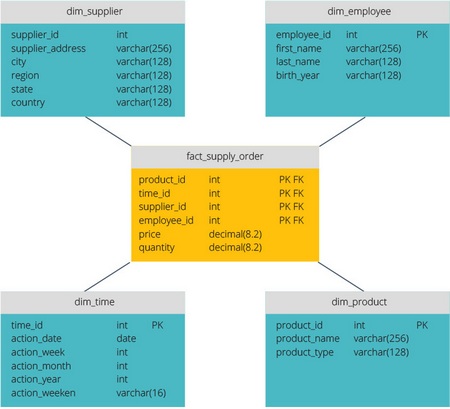

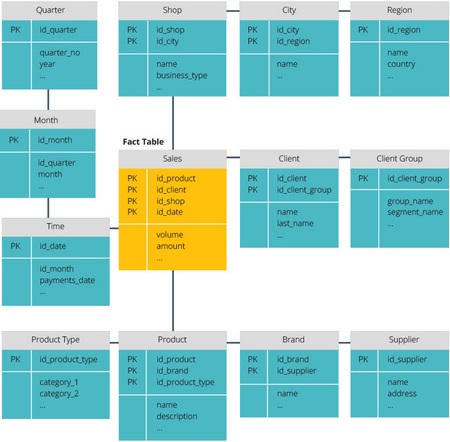

The star and snowflake schemas are two ways to structure the data warehouse.

The star schema has a centralized data repository, which is stored in a fact table. The diagram breaks the fact table into a series of denormalized dimension tables. The fact table contains the aggregated data that will be used for reporting, and the dimension table describes the stored data.

Denormalized projects are less complex because the data is grouped. The fact table uses only one reference to join each dimension table. The simpler design of the star schema greatly simplifies writing complex queries.

A snowflake pattern is different in that it uses normalized data. Normalization means effectively organizing the data so that all data dependencies are identified, and each table contains a minimum of redundancy. Thus, the individual dimension tables are branched into separate dimension tables.

The snowflake scheme uses less disk space and better maintains data integrity. The main disadvantage is the complexity of the queries required to access the data - each query must go through several table joints to get the corresponding data.

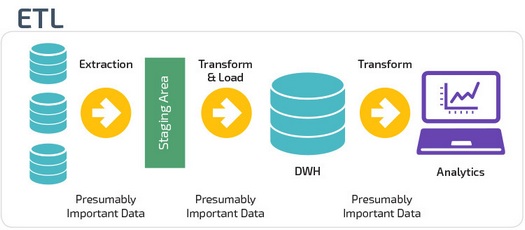

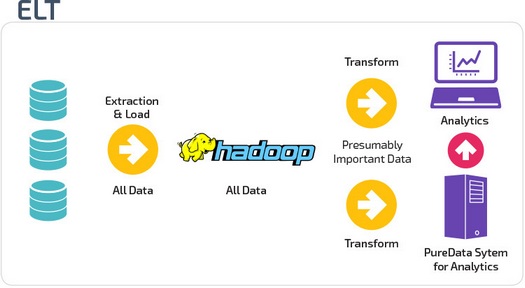

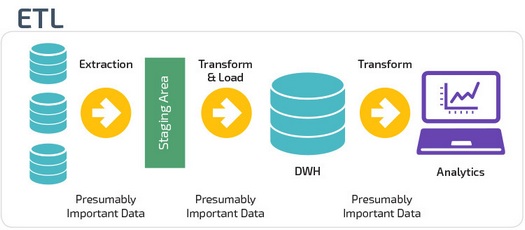

ETL and ELT are two different ways to load data into the repository.

ETL (Extract, Transform, Load) first extracts data from a pool of data sources, which are usually transactional databases. Data is stored in a temporary staging database. Then, conversion operations are performed to structure and convert the data into a suitable form for the target data storage system. Then the structured data is loaded into the repository and ready for analysis.

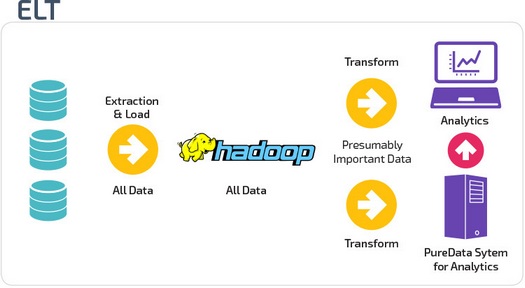

In the case of ELT (Extract, Load, Transform), the data is immediately loaded after extraction from the original data pools. There is no intermediate database, which means that data is immediately loaded into a single central repository.

Data is converted into a data warehouse system for use with business intelligence and analytics tools.

The structure of an organization’s data warehouse also depends on its current situation and needs.

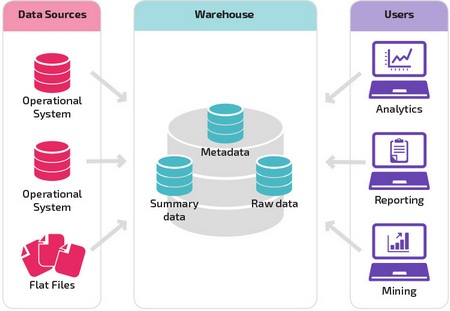

The basic structure allows end users of the repository to directly access summary data obtained from source systems, create reports and analyze this data. This structure is useful for cases when data sources come from the same types of database systems.

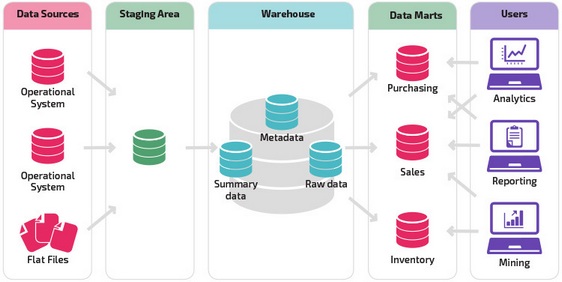

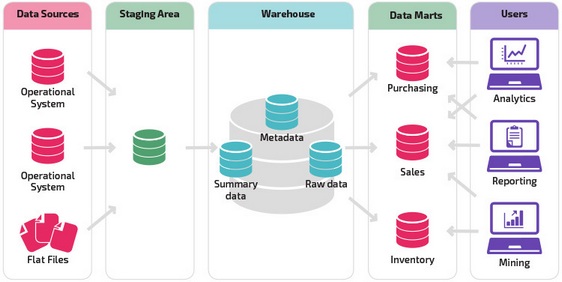

Storage with a staging area is the next logical step in an organization with disparate data sources with many different types and data formats. The staging area converts the data into a generic structured format that is easier to query using analysis and reporting tools.

One type of intermediate structure is the addition of data marts to the data warehouse. Data marts store summary data for a specific field of activity, which makes this data easily accessible for specific forms of analysis.

For example, adding data marts may allow financial analytics to more easily perform detailed queries on sales data and predict customer behavior. Data marts facilitate analysis by tailoring data specifically to meet the needs of the end user.

In recent years, data warehouses are moving to the cloud. New cloud data storage does not adhere to the traditional architecture and each of them offers its own unique architecture.

This section briefly describes the architectures used by the two most popular cloud storage: Amazon Redshift and Google BigQuery.

Amazon Redshift is a cloud-based view of traditional data storage.

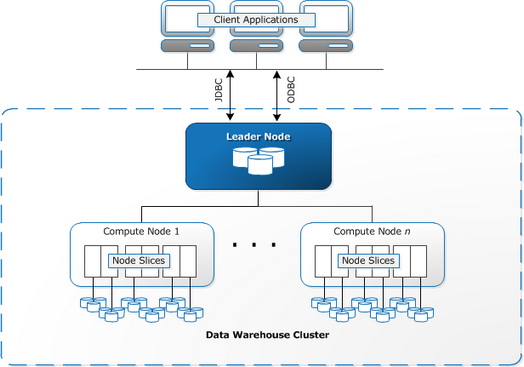

Redshift requires compute resources to be prepared and configured as clusters that contain a set of one or more nodes. Each node has its own processor, memory and RAM. Leader Node compiles requests and transfers them to compute nodes that execute requests.

At each node, data is stored in blocks called slices . Redshift uses column storage, that is, each data block contains values from one column in several rows, and not from one row with values from several columns.

Redshift uses the MPP (Massively Parallel Processing) architecture, breaking up large data sets into pieces that are assigned to the slices at each node. Requests run faster because compute nodes process requests in each slice at the same time. The Leader Node node combines the results and returns them to the client application.

Client applications, such as BI and analytical tools, can directly connect to Redshift using open source PostgreSQL JDBC and ODBC drivers. Thus, analysts can perform their tasks directly on Redshift data.

Redshift can only load structured data. You can upload data to Redshift using pre-integrated systems, including Amazon S3 and DynamoDB, by transferring data from any local host with an SSH connection or by integrating other data sources using the Redshift API.

The BigQuery architecture does not require a server, which means that Google dynamically manages the allocation of computer resources. Therefore, all resource management solutions are hidden from the user.

BigQuery allows clients to download data from Google Cloud Storage and other readable data sources. An alternative option is streaming data, which allows developers to add data to the data warehouse in real time, line by line, when they become available.

BigQuery uses a query engine called Dremel that can scan billions of data lines in just a few seconds. Dremel uses massively parallel queries to scan data in the Colossus base file management system. Colossus distributes files into pieces of 64 megabytes among a variety of computing resources, called nodes, which are grouped into clusters.

Dremel uses a column data structure similar to Redshift. The tree-like architecture sends requests to thousands of machines in seconds.

To perform queries to data using simple SQL commands.

Panoply provides comprehensive data management as a service. Its unique self-optimizing architecture uses machine learning and natural language processing (NLP) to simulate and streamline data transfer from source to analysis, reducing the time from data to as close to zero as possible.

Panoply Intelligent Data Infrastructure includes the following features:

Cloud storage is a big step forward compared to traditional approaches to architecture. However, users still face a number of problems when configuring them:

Link to original text: panoply.io/data-warehouse-guide/data-warehouse-architecture-traditional-vs-cloud

I suggest you to get acquainted with this article in my translation. Comments and additions are welcome!

(Image source)

Introduction

So the data storage architecture is changing. In this article, we will look at the comparison of traditional corporate data warehouses and cloud solutions with lower initial cost, improved scalability and performance.

')

A data warehouse is a system that collects data from various sources within a company and uses this data to support management decision making.

Companies are increasingly moving to cloud storage instead of traditional local systems. Cloud storage has a number of differences from traditional storage:

- No need to buy physical equipment;

- Cloud storage is faster and cheaper to set up and scale;

- Cloud data warehouses can usually perform complex analytical queries much faster because they use massively parallel processing.

Traditional data warehouse architecture

The following concepts highlight some of the well-established ideas and design principles used to create traditional data warehouses.

Three-tier architecture

Quite often, the traditional data warehouse architecture has a three-tier structure consisting of the following levels:

- Lower level : This level contains the database server used to extract data from many different sources, for example, from transactional databases used for interface applications.

- Middle level : The middle level contains an OLAP server that converts data into a structure better suited for analysis and complex queries. The OLAP server can work in two ways: either as an advanced relational database management system that maps operations on multidimensional data to standard relational operations (Relational OLAP), or using a multidimensional OLAP model that directly implements multidimensional data and operations.

- Top level : top level is the client level. This level contains the tools used for high-level data analysis, reporting, and data analysis.

Kimball vs. Inmon

Two pioneers of data warehousing: Bill Inmon and Ralph Kimball offer different design approaches.

Ralph Kimball ’s approach is based on the importance of data marts that are repositories of data belonging to specific business areas. A data warehouse is simply a combination of different data marts that facilitate reporting and analysis. The Kimball data warehouse project uses a bottom-up approach.

Bill Inmon's approach is based on the fact that the data warehouse is the centralized storage of all corporate data. With this approach, the organization first creates a normalized data warehouse model . It then creates dimension data marts based on the storage model. This is known as a downstream approach to the data warehouse.

Data Warehouse Models

In the traditional architecture, there are three common data warehouse models: virtual storage, data mart, and corporate data warehouse:

- Virtual data storage is a set of separate databases that can be used together so that the user can effectively access all data as if they were stored in one data storage;

- The data mart model is used for reporting and analyzing specific business lines. In this storage model, aggregated data from a number of source systems related to a specific business area, such as sales or finance;

- The corporate data warehouse model assumes the storage of aggregated data covering the entire organization. This model considers the data warehouse as the heart of an enterprise information system with integrated data from all business units.

Star vs. Snowflake

The star and snowflake schemas are two ways to structure the data warehouse.

The star schema has a centralized data repository, which is stored in a fact table. The diagram breaks the fact table into a series of denormalized dimension tables. The fact table contains the aggregated data that will be used for reporting, and the dimension table describes the stored data.

Denormalized projects are less complex because the data is grouped. The fact table uses only one reference to join each dimension table. The simpler design of the star schema greatly simplifies writing complex queries.

A snowflake pattern is different in that it uses normalized data. Normalization means effectively organizing the data so that all data dependencies are identified, and each table contains a minimum of redundancy. Thus, the individual dimension tables are branched into separate dimension tables.

The snowflake scheme uses less disk space and better maintains data integrity. The main disadvantage is the complexity of the queries required to access the data - each query must go through several table joints to get the corresponding data.

ETL vs. Elt

ETL and ELT are two different ways to load data into the repository.

ETL (Extract, Transform, Load) first extracts data from a pool of data sources, which are usually transactional databases. Data is stored in a temporary staging database. Then, conversion operations are performed to structure and convert the data into a suitable form for the target data storage system. Then the structured data is loaded into the repository and ready for analysis.

In the case of ELT (Extract, Load, Transform), the data is immediately loaded after extraction from the original data pools. There is no intermediate database, which means that data is immediately loaded into a single central repository.

Data is converted into a data warehouse system for use with business intelligence and analytics tools.

Organizational maturity

The structure of an organization’s data warehouse also depends on its current situation and needs.

The basic structure allows end users of the repository to directly access summary data obtained from source systems, create reports and analyze this data. This structure is useful for cases when data sources come from the same types of database systems.

Storage with a staging area is the next logical step in an organization with disparate data sources with many different types and data formats. The staging area converts the data into a generic structured format that is easier to query using analysis and reporting tools.

One type of intermediate structure is the addition of data marts to the data warehouse. Data marts store summary data for a specific field of activity, which makes this data easily accessible for specific forms of analysis.

For example, adding data marts may allow financial analytics to more easily perform detailed queries on sales data and predict customer behavior. Data marts facilitate analysis by tailoring data specifically to meet the needs of the end user.

New data warehouse architectures

In recent years, data warehouses are moving to the cloud. New cloud data storage does not adhere to the traditional architecture and each of them offers its own unique architecture.

This section briefly describes the architectures used by the two most popular cloud storage: Amazon Redshift and Google BigQuery.

Amazon Redshift

Amazon Redshift is a cloud-based view of traditional data storage.

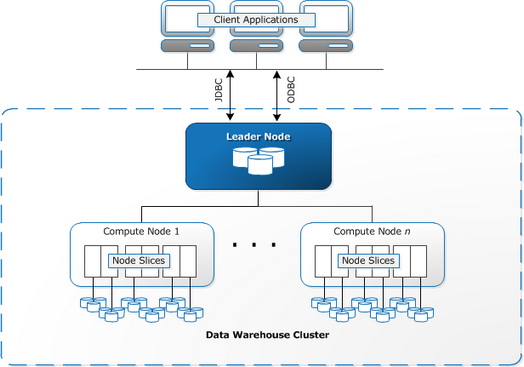

Redshift requires compute resources to be prepared and configured as clusters that contain a set of one or more nodes. Each node has its own processor, memory and RAM. Leader Node compiles requests and transfers them to compute nodes that execute requests.

At each node, data is stored in blocks called slices . Redshift uses column storage, that is, each data block contains values from one column in several rows, and not from one row with values from several columns.

Redshift uses the MPP (Massively Parallel Processing) architecture, breaking up large data sets into pieces that are assigned to the slices at each node. Requests run faster because compute nodes process requests in each slice at the same time. The Leader Node node combines the results and returns them to the client application.

Client applications, such as BI and analytical tools, can directly connect to Redshift using open source PostgreSQL JDBC and ODBC drivers. Thus, analysts can perform their tasks directly on Redshift data.

Redshift can only load structured data. You can upload data to Redshift using pre-integrated systems, including Amazon S3 and DynamoDB, by transferring data from any local host with an SSH connection or by integrating other data sources using the Redshift API.

Google bigquery

The BigQuery architecture does not require a server, which means that Google dynamically manages the allocation of computer resources. Therefore, all resource management solutions are hidden from the user.

BigQuery allows clients to download data from Google Cloud Storage and other readable data sources. An alternative option is streaming data, which allows developers to add data to the data warehouse in real time, line by line, when they become available.

BigQuery uses a query engine called Dremel that can scan billions of data lines in just a few seconds. Dremel uses massively parallel queries to scan data in the Colossus base file management system. Colossus distributes files into pieces of 64 megabytes among a variety of computing resources, called nodes, which are grouped into clusters.

Dremel uses a column data structure similar to Redshift. The tree-like architecture sends requests to thousands of machines in seconds.

To perform queries to data using simple SQL commands.

Panoply

Panoply provides comprehensive data management as a service. Its unique self-optimizing architecture uses machine learning and natural language processing (NLP) to simulate and streamline data transfer from source to analysis, reducing the time from data to as close to zero as possible.

Panoply Intelligent Data Infrastructure includes the following features:

- Query and Data Analysis — Determining the best configuration for each use case, adjusting it over time, and creating indexes, sorting keys, disk keys, data types, evacuating and splitting.

- Identify queries that do not follow advanced methods — for example, those that include nested loops or implicit casting — and rewrite them into an equivalent query that requires a fraction of the execution time or resources.

- Optimizing server configuration over time based on query patterns and exploring which server setup works best. The platform smoothly switches server types and measures the total performance.

On the other side of the cloud data storage

Cloud storage is a big step forward compared to traditional approaches to architecture. However, users still face a number of problems when configuring them:

- Uploading data to cloud data warehouses is nontrivial, and large-scale data pipelines require configuration, testing, and support for the ETL process. This part of the process is usually performed by third-party tools;

- Updates, inserts, and deletions can be complex and must be done carefully to prevent query performance;

- It is difficult to deal with semi-structured data — they need to be normalized in a relational database format, which requires automation of large data streams;

- Nested structures are usually not supported in cloud data storage. You need to convert nested tables to formats that the data store understands;

- Cluster optimization . There are various options for configuring a Redshift cluster to run your workloads. Different workloads, datasets, or even different types of queries may require different configuration. To achieve optimal performance, you must constantly review and, if necessary, further adjust the configuration;

- Query optimization - custom queries may not correspond to advanced methods and, therefore, will run much longer. You can work with users or automated client applications to optimize queries so that the data warehouse can work as expected.

- Backup and recovery - although data storage providers provide many options for backing up your data, it is nontrivial to set up and they require monitoring and close attention.

Link to original text: panoply.io/data-warehouse-guide/data-warehouse-architecture-traditional-vs-cloud

Source: https://habr.com/ru/post/441538/

All Articles