Fearless Security: Thread Safety in Rust

This is the second part of the Fearless Security series. In the first part, we discussed memory safety.


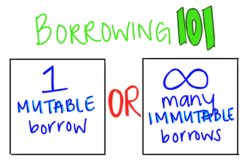

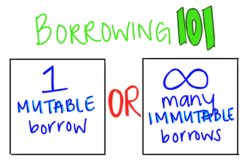


Modern applications are multi-threaded: instead of executing tasks sequentially, a program uses threads to perform several tasks simultaneously. We all see concurrency at work every day:

- Websites simultaneously serve multiple users.

- User interfaces perform background work without interrupting the user (imagine if the application froze for a spell check every time you typed a character).

- A computer can run multiple applications simultaneously.

Concurrent threads speed up work, but they introduce a set of synchronization problems, such as deadlocks and race conditions. Why do we care about thread safety from a security point of view? Because memory safety and thread safety share the same core problem: improper use of resources. Attacks on thread safety have the same consequences as attacks on memory safety, including privilege escalation, arbitrary code execution (ACE), and bypassing security checks.

Concurrency bugs, like implementation bugs, are closely tied to program correctness. While memory vulnerabilities are almost always dangerous, implementation/logic bugs do not necessarily indicate a security problem unless they occur in code that upholds security contracts (for example, code whose failure would let a security check be bypassed). Concurrency bugs, however, have a peculiarity. Security problems caused by logic errors usually appear near the faulty code, whereas concurrency bugs often surface in a different function from the one containing the mistake, which makes them hard to trace and fix. Another complication is the overlap between memory mishandling and concurrency bugs, which we see in data races.

Programming languages have developed various concurrency strategies to help developers manage the performance and security problems of multi-threaded applications.

Concurrency issues

Concurrent programming is generally considered harder than sequential programming, because our brains are better suited to sequential reasoning. Concurrent code can have unexpected and unwanted interactions between threads, including deadlocks, contention, and data races.

A deadlock occurs when several threads are each waiting for another to act before they can continue. Although this unwanted behavior could enable a denial-of-service attack, it will not cause vulnerabilities such as ACE.
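A common way deadlocks arise is when two threads acquire the same pair of locks in opposite orders, so that each holds one lock while waiting for the other. The Rust sketch below is not from the original article, and the `transfer` and `run_transfers` helpers are hypothetical. It breaks that cycle by always locking accounts in index order, so threads transferring money in opposite directions still finish.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

// Hypothetical helper: move `amount` between two accounts. Locks are always
// taken in ascending index order. Without this ordering, thread A could hold
// account 0 while waiting for 1 as thread B holds 1 while waiting for 0.
fn transfer(accounts: &[Mutex<i64>], from: usize, to: usize, amount: i64) {
    if from == to {
        return;
    }
    let (first, second) = if from < to { (from, to) } else { (to, from) };
    let mut a = accounts[first].lock().unwrap();
    let mut b = accounts[second].lock().unwrap();
    // Map the guards (taken in index order) back to source and destination.
    if from < to {
        *a -= amount;
        *b += amount;
    } else {
        *b -= amount;
        *a += amount;
    }
}

/// Runs transfers in both directions concurrently and returns the final balances.
fn run_transfers() -> Vec<i64> {
    let accounts = Arc::new(vec![Mutex::new(100), Mutex::new(100)]);
    let mut handles = Vec::new();
    for i in 0..8 {
        let accounts = Arc::clone(&accounts);
        handles.push(thread::spawn(move || {
            // Even threads move money 0 -> 1, odd threads move it 1 -> 0.
            let (from, to) = if i % 2 == 0 { (0, 1) } else { (1, 0) };
            for _ in 0..1_000 {
                transfer(&accounts, from, to, 1);
            }
        }));
    }
    for h in handles {
        h.join().unwrap();
    }
    let balances: Vec<i64> = accounts.iter().map(|m| *m.lock().unwrap()).collect();
    balances
}
```

Because the transfers cancel out, `run_transfers()` should return `[100, 100]`. The more important point is that it returns at all.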

A race condition is a situation in which the timing or ordering of tasks can affect the correctness of a program. A data race occurs when multiple threads access the same memory location concurrently and at least one of those accesses is a write. Race conditions and data races can occur independently of each other, but data races are always dangerous.
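The difference between the two can be sketched in Rust with atomics. The function names are hypothetical and chosen only for illustration. In `racy_count`, every access is atomic, so there is no data race. Even so, the separate load and store form a race condition that can lose increments. In `atomic_count`, `fetch_add` performs the whole read-modify-write as one atomic operation.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::thread;

// Race condition without a data race: each step is atomic, but another
// thread can update the counter between the load and the store.
fn racy_count(threads: usize, per_thread: usize) -> usize {
    let counter = AtomicUsize::new(0);
    thread::scope(|s| {
        for _ in 0..threads {
            s.spawn(|| {
                for _ in 0..per_thread {
                    let current = counter.load(Ordering::SeqCst);
                    counter.store(current + 1, Ordering::SeqCst);
                }
            });
        }
    });
    counter.load(Ordering::SeqCst)
}

// Correct: fetch_add makes read-modify-write a single indivisible step.
fn atomic_count(threads: usize, per_thread: usize) -> usize {
    let counter = AtomicUsize::new(0);
    thread::scope(|s| {
        for _ in 0..threads {
            s.spawn(|| {
                for _ in 0..per_thread {
                    counter.fetch_add(1, Ordering::SeqCst);
                }
            });
        }
    });
    counter.load(Ordering::SeqCst)
}
```

The racy total can vary from run to run and is never more than `threads * per_thread`. The `fetch_add` total is always exact.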

Potential consequences of concurrency errors

- Deadlock

- Loss of information: another thread overwrites information

- Loss of integrity: information from multiple threads is interlaced

- Loss of liveness: performance problems caused by uneven access to shared resources

The best-known type of concurrency attack is TOCTOU (time of check to time of use). It is essentially a race condition between checking a condition (for example, security credentials) and using the result of that check. A TOCTOU attack results in a loss of integrity.
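A TOCTOU bug can be sketched with a hypothetical bank account. The `Account` type and its methods are illustrative and do not come from the article. `withdraw_toctou` checks the balance under one lock acquisition and updates it under another, so another thread can withdraw in between. `withdraw` holds a single lock guard across both the check and the use.

```rust
use std::sync::Mutex;
use std::thread;

// Hypothetical account type used only for this sketch.
struct Account {
    balance: Mutex<u64>,
}

impl Account {
    // Vulnerable pattern, shown for contrast: check and use take the lock
    // separately, so the check may be stale by the time the money is taken.
    #[allow(dead_code)]
    fn withdraw_toctou(&self, amount: u64) -> bool {
        let enough = *self.balance.lock().unwrap() >= amount; // time of check
        if enough {
            let mut balance = self.balance.lock().unwrap(); // time of use
            let new_balance = balance.saturating_sub(amount);
            *balance = new_balance;
            return true;
        }
        false
    }

    // Safe pattern: the check and the update happen under one guard.
    fn withdraw(&self, amount: u64) -> bool {
        let mut balance = self.balance.lock().unwrap();
        if *balance >= amount {
            *balance -= amount;
            true
        } else {
            false
        }
    }
}

/// Ten threads each try to withdraw 30 from a balance of 100 using the safe
/// method. Returns (successful withdrawals, remaining balance).
fn run_withdrawals() -> (usize, u64) {
    let account = Account { balance: Mutex::new(100) };
    let successes = thread::scope(|s| {
        let handles: Vec<_> = (0..10).map(|_| s.spawn(|| account.withdraw(30))).collect();
        handles.into_iter().map(|h| h.join().unwrap()).filter(|&ok| ok).count()
    });
    let remaining = *account.balance.lock().unwrap();
    (successes, remaining)
}
```

With the locked version, exactly three withdrawals succeed and 10 remains, whatever the thread interleaving.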

Deadlocks and loss of liveness are considered performance problems rather than security issues, while information loss and integrity loss are more likely to be security-related. An article from Red Balloon Security examines some possible exploits. One example is pointer corruption followed by privilege escalation or remote code execution. In that exploit, a function that loads shared ELF (Executable and Linkable Format) libraries holds its semaphore correctly only on the first call. On later calls it fails to limit the number of threads properly, which causes kernel memory corruption. This attack is an example of information loss.

The hardest part of concurrent programming is testing and debugging, because concurrency bugs are hard to reproduce. Event timing, operating-system scheduling decisions, network traffic, and other factors can all change the program's behavior from one run to the next.

Heisenbugs: sometimes it really is easier to delete the whole program than to find the bug.

Not only does the behavior change from run to run, but even inserting print or debugging statements can change it. This is how Heisenbugs (nondeterministic, hard-to-reproduce bugs typical of concurrent programming) appear and then mysteriously disappear.

Concurrent programming is hard. It is difficult to predict how concurrent code will interact with other concurrent code, and when bugs appear, they are difficult to find and fix. Instead of relying on testers, let's look at ways of designing programs, and at languages, that make writing concurrent code easier.

First, let's define "thread safety":

"A data type or static method is thread-safe if it behaves correctly when used from multiple threads, regardless of how those threads are executed, and without demanding additional coordination from the calling code." (MIT)

How programming languages handle concurrency

In languages without static thread safety, programmers must constantly keep track of memory that is shared with another thread and can change at any time. In sequential programming, we are taught to avoid global variables in case another part of the code silently modifies them. As with manual memory management, demanding that programmers always mutate shared data safely is unrealistic.

"Constant vigilance!"

Programming languages generally take one of two approaches:

- Restricting mutability or limiting sharing

- Manual thread safety (for example, locks, semaphores)

Languages that restrict threading either confine mutable variables to a single thread or require all shared variables to be immutable. Both approaches eliminate the core problem behind data races (improperly mutated shared data), but the restrictions are too severe. To address this, languages provide low-level synchronization primitives such as mutexes, which can be used to build thread-safe data structures.
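Rust supports the first approach without forbidding threads altogether. In the sketch below, the `sum_shared` function is hypothetical. The input vector is shared read-only through `Arc`, and each worker sends its partial sum over a `std::sync::mpsc` channel instead of writing to shared mutable state. The sketch assumes `workers` is at least 1, because `step_by(0)` panics.

```rust
use std::sync::mpsc;
use std::sync::Arc;
use std::thread;

/// Sums `data` across `workers` threads without any shared mutable state.
fn sum_shared(data: Vec<u64>, workers: usize) -> u64 {
    // Immutable sharing: every worker reads the same data, nobody can mutate it.
    let data = Arc::new(data);
    let (tx, rx) = mpsc::channel();
    for w in 0..workers {
        let data = Arc::clone(&data);
        let tx = tx.clone();
        thread::spawn(move || {
            // Worker `w` sums every `workers`-th element starting at index `w`.
            let partial: u64 = data.iter().skip(w).step_by(workers).sum();
            // Limited sharing: the result is moved to the receiver, not written in place.
            tx.send(partial).unwrap();
        });
    }
    drop(tx); // drop the original sender so `rx.iter()` ends after the workers finish
    let total: u64 = rx.iter().sum();
    total
}
```

For example, `sum_shared((1..=100).collect(), 4)` adds the numbers 1 to 100 using four threads.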

Python and the global interpreter lock

The reference implementation of Python, CPython, has a kind of mutex called the Global Interpreter Lock (GIL). It allows only one thread at a time to execute Python bytecode, blocking all other threads in the meantime. Multithreaded Python is notorious for its inefficiency because of the GIL's overhead. As a result, most concurrent Python programs use several processes, so that each has its own GIL.

Java and runtime exceptions

Java supports concurrent programming through a shared-memory model. Each thread has its own execution path, but it can access any object in the program, so the programmer must synchronize access between threads using Java's built-in primitives.

Although Java provides the building blocks for thread-safe programs, the compiler does not guarantee thread safety (unlike memory safety). In some cases Java detects unsynchronized access at run time and throws an exception, for example a ConcurrentModificationException from the standard collections. This detection is best-effort, though, and programmers must still use the concurrency primitives correctly.

C++ and the programmer's brain

Python serializes thread execution with the GIL, and Java catches some misuse at run time. C++, by contrast, relies on the programmer to synchronize memory access manually. Before C++11, the standard library did not even include concurrency primitives.

Most languages provide tools for writing thread-safe code, and special techniques exist for detecting data races and race conditions. However, none of this guarantees thread safety or rules out data races.

How does Rust solve the problem?

Rust takes a multi-pronged approach to eliminating race conditions. It uses ownership rules and type safety to guarantee at compile time that there are no data races.

In the first article, we introduced ownership, one of Rust's core concepts. Every variable has a unique owner, and ownership can be transferred (moved) or borrowed. If another thread needs to modify a resource, we transfer ownership by moving the variable into the new thread.
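Here is a minimal sketch of transferring ownership to another thread. The `double_in_thread` function is hypothetical. The vector is moved into the spawned thread's closure, modified there, and handed back as the thread's return value through `JoinHandle::join`.

```rust
use std::thread;

/// Moves `v` into a new thread, which mutates it and returns ownership
/// through the join handle. At no point do two threads own `v`.
fn double_in_thread(v: Vec<i32>) -> Vec<i32> {
    let handle = thread::spawn(move || {
        let mut v = v; // this thread is now the sole owner
        for x in v.iter_mut() {
            *x *= 2;
        }
        v // ownership goes back to whoever joins the thread
    });
    // Using `v` here would not compile: it was moved into the closure.
    handle.join().unwrap()
}
```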

Moving enforces exclusivity: multiple threads can write to the same memory, but never at the same time. Since a value always has exactly one owner, what happens if another thread borrows a variable?

In Rust, you can have either one mutable borrow or several immutable ones. It is impossible to have mutable and immutable borrows at the same time (or several mutable ones). For memory safety, what matters is that resources are freed properly. For thread safety, what matters is that only one thread at a time has the right to modify a variable. Furthermore, in this situation no other thread can refer to a stale borrow: a value can be either written or shared, but never both.
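Scoped threads (`std::thread::scope`, stable since Rust 1.63) make these borrowing rules visible across threads. In this sketch, the `borrow_demo` function is hypothetical. Two threads first read the same slice through shared borrows. Afterwards, each thread receives an exclusive `&mut` borrow of its own disjoint chunk via `chunks_mut`.

```rust
use std::thread;

/// Returns the sum of `data` computed by two concurrent readers, then adds
/// 10 to every element using threads that each own a disjoint chunk.
fn borrow_demo(data: &mut [i32]) -> i32 {
    // Phase 1: many readers at once, so only shared borrows are handed out.
    let view: &[i32] = data;
    let (evens, odds) = thread::scope(|s| {
        let h1 = s.spawn(|| view.iter().filter(|&&x| x % 2 == 0).sum::<i32>());
        let h2 = s.spawn(|| view.iter().filter(|&&x| x % 2 != 0).sum::<i32>());
        (h1.join().unwrap(), h2.join().unwrap())
    });

    // Phase 2: one writer per chunk. The borrow checker accepts this only
    // because `view` is no longer used, so no shared borrow overlaps.
    thread::scope(|s| {
        for chunk in data.chunks_mut(2) {
            s.spawn(move || {
                for x in chunk.iter_mut() {
                    *x += 10;
                }
            });
        }
    });

    evens + odds
}
```

For `vec![1, 2, 3, 4, 5]`, the readers sum to 15 and the vector afterwards holds `[11, 12, 13, 14, 15]`.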

Ownership was designed to eliminate memory vulnerabilities. It turns out that it also prevents data races.

Many languages have memory-safety mechanisms (for example, reference counting and garbage collection), but they usually rely on manual synchronization or on restrictions on concurrent sharing to prevent data races. Rust's approach addresses both kinds of safety. It tackles the core problem of determining acceptable resource use and enforces that validity at compile time.

But wait! That's not all!

Ownership rules prevent multiple threads from writing to the same memory location and forbid combining sharing between threads with mutability. This alone, however, does not necessarily make data structures thread-safe. Every data structure in Rust is either thread-safe or not, and this information is conveyed to the compiler through the type system.

"Well-typed programs cannot go wrong." - Robin Milner, 1978

In programming languages, type systems describe acceptable behavior. In other words, a well-typed program is well defined. As long as our types are expressive enough to capture the intended meaning, a well-typed program will behave as intended.

Rust is a type-safe language: the compiler checks that all types are consistent. For example, the following code will not compile:

```rust
fn main() {
    let mut x = "I am a string";
    x = 6; // `x` has type &str, so an integer cannot be assigned to it
}
```

```
error[E0308]: mismatched types
 --> src/main.rs:6:5
  |
6 | x = 6; //
  | ^ expected &str, found integral variable
  |
  = note: expected type `&str`
             found type `{integer}`
```

Every variable in Rust has a type, often an implicit one. We can also define new types and describe what each type can do using the trait system. Traits provide an interface abstraction. Two important built-in traits are `Send` and `Sync`. The compiler implements them automatically for every type whose components implement them:

- `Send` indicates that a value can be safely transferred to another thread (required for transferring ownership).

- `Sync` indicates that a value can be safely shared between threads by reference.
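Because `Send` and `Sync` are ordinary traits, they can be used as bounds to check a type's thread safety at compile time. The `assert_send` and `assert_sync` helpers below are a common idiom, not standard library functions.

```rust
use std::cell::Cell;
use std::sync::{Arc, Mutex};

// Idiom, not a std API: an empty generic function whose trait bound is
// checked at compile time. A call compiles only if T implements the trait.
fn assert_send<T: Send>() {}
fn assert_sync<T: Sync>() {}

fn thread_safety_checks() {
    assert_send::<Arc<i32>>(); // atomic reference count: Send and Sync
    assert_sync::<Arc<i32>>();
    assert_send::<Mutex<Vec<u8>>>();
    assert_sync::<Mutex<Vec<u8>>>(); // the lock makes the contents shareable
    assert_send::<Cell<i32>>(); // a Cell may move to another thread...
    // assert_sync::<Cell<i32>>();          // ...but not be shared: does not compile
    // assert_send::<std::rc::Rc<i32>>();   // non-atomic refcount: does not compile
}
```

Uncommenting either of the last two lines turns the check into a compile error.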

The example below is a simplified version of the standard library code that spawns threads:

```rust
// Simplified signature: the real std::thread::spawn requires
// F: FnOnce() -> T + Send + 'static and returns a JoinHandle<T>.
fn spawn<Closure: FnOnce() + Send>(closure: Closure) {
    // ... start a new thread that runs `closure` ...
}

fn main() {
    let x = std::rc::Rc::new(6);
    spawn(move || { x; }); // rejected by the compiler with the error below
}
```

The `spawn` function takes a single argument, `closure`, whose type must implement the `Send` and `FnOnce` traits. When we try to spawn a thread with a closure that captures `x`, the compiler reports an error:

```
error[E0277]: `std::rc::Rc<i32>` cannot be sent between threads safely
 --> src/main.rs:8:1
  |
8 | spawn(move || { x; });
  | ^^^^^ `std::rc::Rc<i32>` cannot be sent between threads safely
  |
  = help: within `[closure@src/main.rs:8:7: 8:21 x:std::rc::Rc<i32>]`, the trait `std::marker::Send` is not implemented for `std::rc::Rc<i32>`
  = note: required because it appears within the type `[closure@src/main.rs:8:7: 8:21 x:std::rc::Rc<i32>]`
note: required by `spawn`
```

The `Send` and `Sync` traits let the Rust type system know which data can be shared. By encoding this information in the type system, thread safety becomes part of type safety. Instead of being a matter of documentation, thread safety is enforced by the compiler. Programmers can see exactly which objects are shared between threads, and the compiler guarantees that this arrangement is sound.
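The usual fix is to replace `Rc` with `std::sync::Arc`, whose reference count is updated atomically, so `Arc<i32>` implements both `Send` and `Sync`. Below is a minimal sketch using the real `std::thread::spawn`. The `spawn_with_arc` name is hypothetical.

```rust
use std::sync::Arc;
use std::thread;

/// Same idea as the rejected example, but with Arc, so the closure is Send.
fn spawn_with_arc() -> i32 {
    let x = Arc::new(6);
    let x_for_thread = Arc::clone(&x); // atomically bump the reference count
    let handle = thread::spawn(move || *x_for_thread * 7);
    let from_thread = handle.join().unwrap();
    // The main thread still holds its own handle to the same value.
    from_thread + *x
}
```

Here the spawned thread computes 6 * 7 = 42, and adding the main thread's view of the same value gives 48.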

Many languages offer tools for concurrent programming, but preventing race conditions is still not easy. If programmers have to reason about complex interleavings of instructions and interactions between threads, bugs are inevitable. Violations of thread safety and memory safety lead to similar consequences, yet traditional memory-safety tools such as reference counting and garbage collection do not prevent race conditions. Beyond its static memory-safety guarantees, Rust's ownership model also prevents unsafe data mutation and improper sharing of objects between threads, while the type system enforces thread safety at compile time.

Source: https://habr.com/ru/post/441370/
