Direct routing and balancing with NFT vs Nginx

When developing high-load network applications, there is a need for load balancing.

A popular L7 balancing tool is Nginx. It allows you to cache responses, choose different strategies, and even script on LUA.

Despite all the charms of Nginx, if:

')

The question may arise: why do we need Nginx? Why spend resources on balancing on L7, isn't it easier to just forward a SYN packet? (L4 Direct Routing).

A popular packet forwarding tool was IPVS. He performed the tasks of balancing through the tunnel and Direct Routing.

In the first case, for each connection, a TCP channel was established and a packet from the user went to the balancer, then to the minion, and then in the reverse order.

In this scheme, the main problem is visible: in the opposite direction, the data go first to the balancer, and then to the user (Nginx works in the same way). Unnecessary work is done, given the fact that more data is usually sent to the user, this behavior leads to some loss of performance.

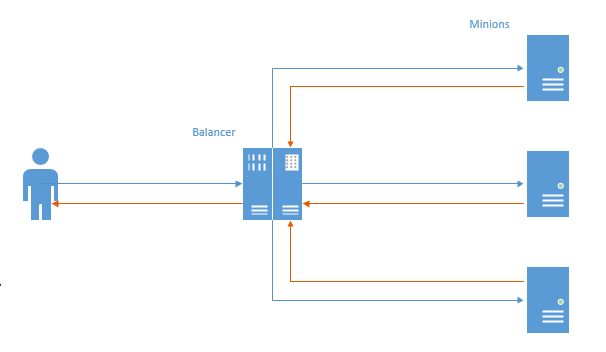

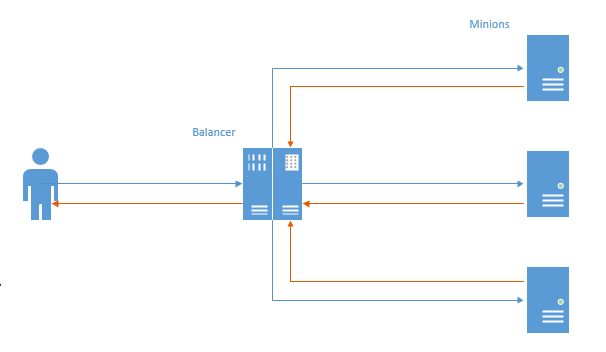

This deficiency is devoid of (but endowed with new) balancing method called Direct Routing. Schematically, it looks like this:

In the case of Direct Routing, the reverse packets go directly to the user, bypassing the balancer. Obviously, both balancer and network resources are saved. By saving network resources, it means not so much traffic saving, because the usual practice is to connect servers to a separate grid and not account traffic, but the fact that even transferring through a balancer is a loss of milliseconds.

This method imposes certain restrictions:

A few years ago, Linux began to actively promote NFTables as a replacement for IPTables, ArpTables, EBTables and all the rest [az] {1,} Tables. At the moment when we had to Adram, it became necessary to squeeze every millisecond of the response from the network, I decided to pull out the checker and look for it - or maybe ipTables learned how to do iphash forwarding and can be quickly balancing it. Then I came across nftables, which can and not only that, but iptables still doesn’t even know how to do it.

After several days of proceedings, I was finally able to start up Direct Routing and Channel Routing via NFTables in the test lab, as well as test them in comparison with nginx.

So, test lab. We have 5 cars:

All interfaces have MTU 1500.

Settings NFTables on the balancer:

A raw chain is created, on the pre-outs hook, with a priority of -300.

If a packet arrives with a target address http, then depending on the source port (made for testing from one machine, ip saddr is needed in reality), either 56.2 or 56.3 is selected and set as the target address in the packet, and then sent further along the routes. Roughly speaking, for even ports 56.2, for odd ports, respectively, 56.3 (in fact, not, for even / odd hashes, but it is easier to understand that way). After setting the target IP, the packet goes back to the network. No NAT occurs, the minions packet comes from the source IP of the client, not the balancer, which is important for Direct Routing.

NFT settings on minions:

Raw output hook is created with a priority of -300 (priority is very important here, at higher ones, the required mengling will not work for reply packages).

All outgoing traffic from the http port is signed 56.4 (ip balancer) and sent straight to the client, bypassing the balancer.

To check whether everything will work correctly, I started a client in another network and started it through a router.

I also disabled arp_filter, rp_filter (so that spoofing works) and enabled ip_forward both on the balancer and on the router.

For benches, in the case of NFT, Nginx + php7.2-FPM is used through the unix socket on each minion. There was nothing on the balancer.

In the case of Nginx used: nginx on the balancer and php7.2-FPM via TCP on the minions. As a result, I was not balancing the web server behind the balancer, but at once FPM (which will be more honest with respect to nginx, and correspond more to real life).

For NFT, only the hash strategy was used (in the table, nft dr ), for nginx: hash ( ngx eq ) and least conn ( ngx lc )

Several tests were done.

Tested ab, three times each test:

Initially, I planned to give the test time, milliseconds and the rest, eventually settled on the RPS - they are representative and correlate with time indicators.

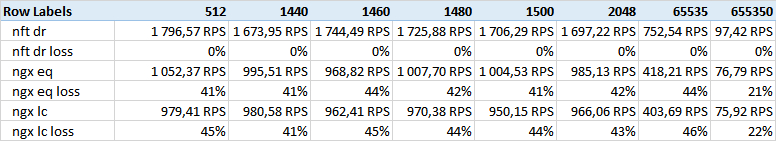

Got the following results:

Test Size - columns - the size of the data given.

As you can see, nft direct routing wins by a wide margin.

I was counting on several other results related to the size of the ethernet frame, but no correlation was found. Perhaps the 512 body doesn’t fit in 1500 MTU, although I doubt the small test will be revealing.

I noticed that on large volumes (650k) nginx reduces the gap. Perhaps this is somehow related to the buffers and TCP Windows size.

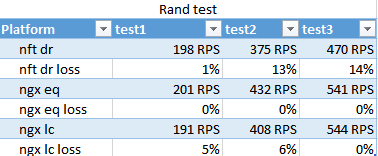

The result of the rand test. Shows how at least conn copes in terms of different speed of scripts execution on different minions.

Surprisingly, nginx hash worked faster than least conn, and only in the final pass, at least conn took the lead a little, which does not claim to be of statistical significance.

The numbers of passages are very different due to the fact that 100 threads are leaving at once, and FPM-ok loads about 10 from the start. By the third pass, they managed to roll up - which shows the applicability of the strategies for berst.

NFT is expected to lose this test. Nginx well optimizes interaction with FPMs in such situations.

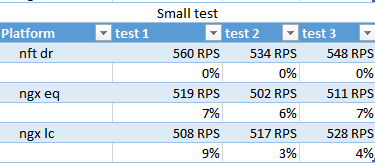

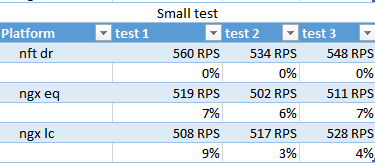

small test

nft slightly wins over RPS, at least conn again as an outsider.

By the way, in this test it is clear that 400-500RPS is being issued, although on the test with sending 512 bytes it was over 1500 - it seems that the system eats this thousand.

NFT performed well in the situation of uniform load optimization: when a lot of data is given, and the application running time is deterministic and the cluster resources are enough to test the incoming stream without spinning.

In a situation where the load on each request is chaotic and it is impossible to evenly balance the load on the servers with the primitive remainder of the hash division, the NFT will lose.

A popular L7 balancing tool is Nginx. It lets you cache responses, choose between different balancing strategies, and even script it in Lua.

Despite all the charms of Nginx, if:

- you do not need to work with HTTP(S),

- you need to squeeze the maximum out of the network,

- there is nothing to cache, because behind the balancer sit pure API servers with dynamic content,

the question may arise: why do we need Nginx at all? Why spend resources on balancing at L7 when it would be easier to just forward the SYN packet (L4 Direct Routing)?

Layer 4 balancing, or how it was done in antiquity

A popular packet forwarding tool was IPVS. It could balance either through a tunnel or via Direct Routing.

In the first case, a TCP channel was established for each connection: a packet from the user went to the balancer, then to the minion, and the reply travelled back the same way in reverse.

This scheme has one obvious problem: on the way back, the data goes first to the balancer and only then to the user (Nginx works the same way). That is unnecessary work, and since more data usually flows towards the user than away from them, it costs some performance.

The balancing method called Direct Routing is free of this drawback (though it brings drawbacks of its own). Schematically, it looks like this:

With Direct Routing, the reply packets go directly to the user, bypassing the balancer. Obviously, this saves both balancer and network resources. The network saving is not so much about traffic volume, since servers are usually connected to a separate network where traffic is not billed, as about latency: even a pass through the balancer costs milliseconds.

This method imposes certain restrictions:

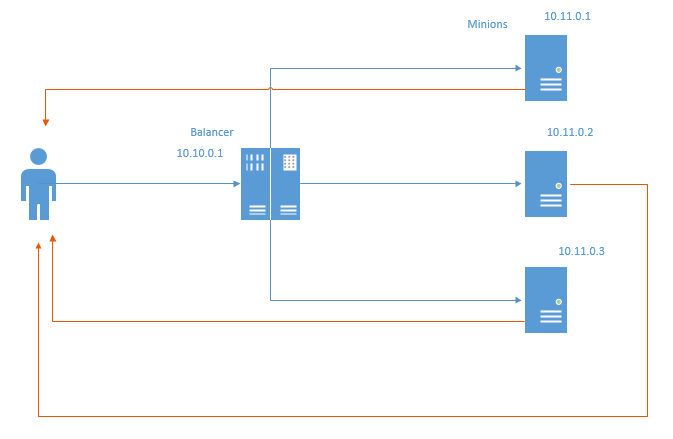

- The datacenter hosting the infrastructure must allow spoofing of local addresses. In the scheme above, each minion has to send packets back on behalf of IP 10.10.0.1, which is assigned to the balancer.

- The balancer knows nothing about the state of the minions, so the Least Conn and Least Time strategies cannot be implemented out of the box. In one of the following articles I will try to implement them and show the result.

Here Comes NFTables

A few years ago, Linux began actively promoting NFTables as a replacement for IPTables, ArpTables, EBTables and all the other [a-z]{1,}Tables. When we at Adram needed to squeeze every millisecond of response time out of the network, I decided to roll up my sleeves and check whether iptables had perhaps learned to forward by IP hash and could be used for fast balancing. That is how I came across nftables, which can do this and more, while iptables still cannot.

After several days of trial and error, I finally got Direct Routing and Channel Routing running via NFTables in the test lab and compared them with nginx.

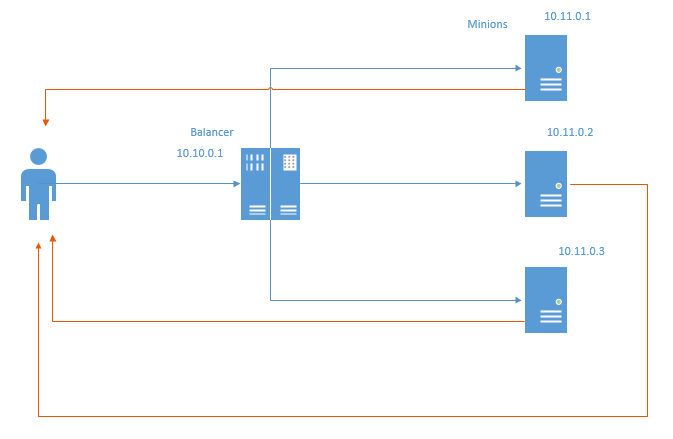

So, the test lab. We have 5 machines:

- nft-router: a router that connects the client subnet with the app server subnet. It has 2 network cards: 192.168.56.254 faces the app server network, 192.168.97.254 faces the clients. ip_forward is enabled and all routes are configured.

- nft-client: the client from which ab is run, IP 192.168.97.2.

- nft-balancer: the balancer. It has two IPs: 192.168.56.4, which clients connect to, and 192.168.13.1, on the minion subnet.

- nft-minion-a and nft-minion-b: the minions, with IPs 192.168.56.2 and 192.168.56.3, plus 192.168.13.2 and 192.168.13.3 (I tried balancing both through the same network and through different networks). For the tests I settled on the minions using their "external" IPs on the 192.168.56.0/24 subnet.

All interfaces have MTU 1500.

Direct routing

NFTables settings on the balancer:

    table ip raw {
        chain input {
            type filter hook prerouting priority -300; policy accept;
            tcp dport http ip daddr set jhash tcp sport mod 2 map { 0 : 192.168.56.2, 1 : 192.168.56.3 }
        }
    }

A raw chain is created on the prerouting hook with a priority of -300.

If a packet arrives with the destination port http, then depending on the source port (this was done for testing from a single machine; in real life you would hash ip saddr), either 56.2 or 56.3 is chosen and written into the packet as the destination address, after which the packet continues along the routes. Roughly speaking, even ports go to 56.2 and odd ports to 56.3 (strictly, it is the hash that is even or odd, not the port, but it is easier to picture this way). After the destination IP is set, the packet goes back out to the network. No NAT takes place: the packet reaches the minion with the client's source IP, not the balancer's, which is essential for Direct Routing.
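To make the selection rule more tangible, here is a minimal shell sketch of the same idea: hash a key, take the remainder modulo 2, and map it to a minion. It is only a model, not what the kernel runs. nftables uses a Jenkins hash, while this sketch substitutes the CRC printed by cksum, so the concrete port-to-minion assignments will differ from the real ones. The function name pick_minion is made up for illustration.

```shell
#!/bin/sh
# Model of "jhash <key> mod 2 map { 0 : 192.168.56.2, 1 : 192.168.56.3 }".
# Assumption: the CRC from cksum stands in for the kernel's Jenkins hash.
pick_minion() {
    key=$1
    # cksum prints "<crc> <byte count>"; keep only the CRC.
    hash=$(printf '%s' "$key" | cksum | cut -d ' ' -f 1)
    case $((hash % 2)) in
        0) echo 192.168.56.2 ;;
        1) echo 192.168.56.3 ;;
    esac
}

# The same key always lands on the same minion.
for port in 40001 40002 40003 40004; do
    echo "sport $port -> $(pick_minion "$port")"
done
```

Passing a client address instead of a port, for example pick_minion 192.168.97.2, models the ip saddr variant mentioned above: every connection from one client then goes to the same minion.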

NFT settings on the minions:

    table ip raw {
        chain output {
            type filter hook output priority -300; policy accept;
            tcp sport http ip saddr set 192.168.56.4
        }
    }

A raw chain is created on the output hook with a priority of -300 (the priority is very important here: at higher values the required mangling of reply packets will not work).

All outgoing traffic from the http port is stamped with the source address 56.4 (the balancer's IP) and sent straight to the client, bypassing the balancer.

To check that everything works correctly, I placed the client in a different network and connected it through a router.

I also disabled arp_filter and rp_filter (so that spoofing works) and enabled ip_forward on both the balancer and the router.
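For reference, the three kernel switches named above could be written down roughly as follows. This is a sketch under assumptions: the text does not say which scope was used, so the keys below use the "all" scope, and the helper name dr_sysctl_settings is hypothetical. The function only prints the settings and changes nothing on the machine.

```shell
#!/bin/sh
# Print the kernel settings mentioned in the text in sysctl.conf format.
# Assumption: the "all" scope is used. For rp_filter the kernel takes the
# maximum of the "all" and per-interface values, so per-interface keys
# may need to be set to 0 as well.
dr_sysctl_settings() {
    cat <<'EOF'
net.ipv4.conf.all.rp_filter = 0
net.ipv4.conf.all.arp_filter = 0
net.ipv4.ip_forward = 1
EOF
}

dr_sysctl_settings
```

The output could be saved to a file under /etc/sysctl.d/ (the file name is up to you) and loaded as root with sysctl --system.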

For the benchmarks, in the NFT case each minion ran Nginx + php7.2-FPM connected through a unix socket. Nothing ran on the balancer itself.

In the Nginx case, nginx ran on the balancer and talked to php7.2-FPM on the minions over TCP. So I was balancing FPM directly rather than a web server behind the balancer, which is fairer to nginx and closer to real life.

For NFT, only the hash strategy was used (nft dr in the tables); for nginx, hash (ngx eq) and least conn (ngx lc).

Several tests were done.

- Small quick script (small).

      <?php system('hostname');

- Script with a random delay (rand).

      <?php usleep(mt_rand(100000, 200000)); echo "ok";

- Script sending a large amount of data (size).

      <?php
      // Requested body size in bytes; cast to int so it is safe to pass to the shell.
      $size = (int) $_GET['size'];
      $file = '/tmp/' . $size;
      // Generate the file of random bytes once, then reuse it.
      if (!file_exists($file)) {
          $dummy = "";
          exec("dd if=/dev/urandom of=$file bs=$size count=1 2>&1", $dummy);
      }
      fpassthru(fopen($file, 'rb'));

The following sizes were used:

512, 1440, 1460, 1480, 1500, 2048, 65535, 655350 bytes.

Before the tests, I warmed up the static files on each minion.

Testing was done with ab, three passes per test:

    #!/bin/bash

    # Run ab with the arguments in $1, $3 times in a row,
    # appending the output of every pass to the file named in $2.
    function do_test() {
        rep=$3
        for i in $(seq $rep)
        do
            echo "testing $2 # $i"
            echo "$2 pass $i" >> $2
            ab $1 >> $2    # $1 is left unquoted on purpose so that it splits into separate ab arguments
            echo "--------------------------" >> $2
        done
    }

    do_test " -n 5000 -c 100 http://192.168.56.4:80/rand.php" "ngx_eq_test_rand" 3
    do_test " -n 10000 -c 100 http://192.168.56.4:80/" "ngx_eq_test_small" 3

    for size in 512 1440 1460 1480 1500 2048 65535 655350
    do
        do_test " -n 10000 -c 100 http://192.168.56.4:80/size.php?size=$size" "ngx_eq_test_size_$size" 3
    done

Initially I planned to report test time, milliseconds and so on, but in the end I settled on RPS: it is representative and correlates with the timing figures.
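Since the result files contain the full ab output of every pass, the RPS figures have to be pulled out of them somehow. A minimal sketch of one way to do it, assuming the standard "Requests per second:" summary line that ab prints; the helper names rps_per_pass and rps_mean are hypothetical and not part of the original test script.

```shell
#!/bin/sh
# Print one RPS value per pass from a result file written by do_test.
# Relies on ab's "Requests per second:  <value> [#/sec] (mean)" summary line.
rps_per_pass() {
    awk '/^Requests per second:/ { print $4 }' "$1"
}

# Average the RPS over all passes found in the file.
rps_mean() {
    rps_per_pass "$1" | awk '{ sum += $1; n++ } END { if (n) printf "%.2f\n", sum / n }'
}
```

For example, rps_per_pass ngx_eq_test_rand would list the value of each of the three passes, and rps_mean ngx_eq_test_rand would print their average.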

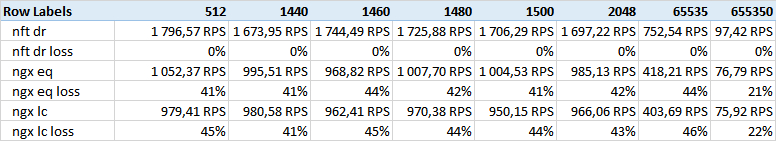

The results were as follows:

Size test: the columns are the size of the data returned.

As you can see, nft direct routing wins by a wide margin.

I expected somewhat different results tied to the Ethernet frame size, but no correlation was found. Perhaps even the 512-byte body does not fit into the 1500 MTU, though I doubt it; the small test should be telling here.
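As a rough check of the frame-size reasoning, the number of TCP segments per response body can be estimated with simple arithmetic. The sketch below assumes IPv4 and TCP headers of 20 bytes each with no options, which gives a maximum segment size of 1460 bytes for MTU 1500; TCP options such as timestamps would lower that number, and the HTTP response headers are ignored entirely. The function name segments_for is made up for illustration.

```shell
#!/bin/sh
# Assumptions: IPv4 header 20 bytes, TCP header 20 bytes, no TCP options.
MTU=1500
MSS=$((MTU - 20 - 20))

# Number of TCP segments needed for a body of $1 bytes (ceiling division).
segments_for() {
    echo $(( ($1 + MSS - 1) / MSS ))
}

for size in 512 1440 1460 1480 1500 2048 65535 655350; do
    echo "$size bytes -> $(segments_for "$size") segment(s)"
done
```

Because the HTTP response headers travel in the same stream, the real boundary between one and two segments sits lower than these body-only numbers suggest.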

I noticed that on large responses (650k) nginx narrows the gap. Perhaps this is related to buffers and the TCP window size.

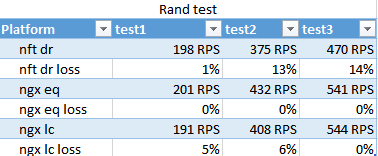

The results of the rand test. It shows how least conn copes when scripts run at different speeds on different minions.

Surprisingly, nginx hash was faster than least conn; only in the final pass did least conn pull slightly ahead, which is hardly statistically significant.

The figures differ a lot between passes because 100 concurrent requests arrive at once, while FPM starts only about 10 workers initially. By the third pass the workers had ramped up, which shows how well the strategies handle bursts.

NFT expectedly lost this test. Nginx optimizes its interaction with FPM well in such situations.

Small test

nft wins slightly on RPS; least conn is again the outsider.

By the way, this test delivers only 400-500 RPS, whereas the test sending 512 bytes gave over 1500; it seems the system() call eats that thousand.

Findings

NFT performed well under uniform load: when a lot of data is returned, the application's running time is deterministic, and the cluster has enough resources to handle the incoming stream without falling behind.

When the cost of each request is chaotic and the servers cannot be loaded evenly by a primitive remainder of a hash, NFT will lose.

Source: https://habr.com/ru/post/441348/
