Has evidence of new physics been lost at the Large Hadron Collider?

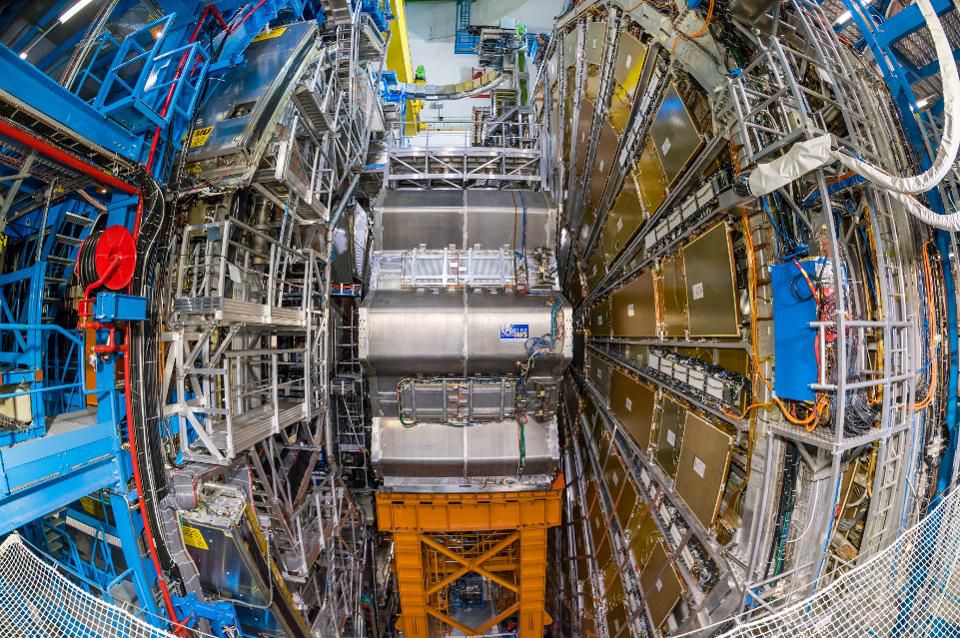

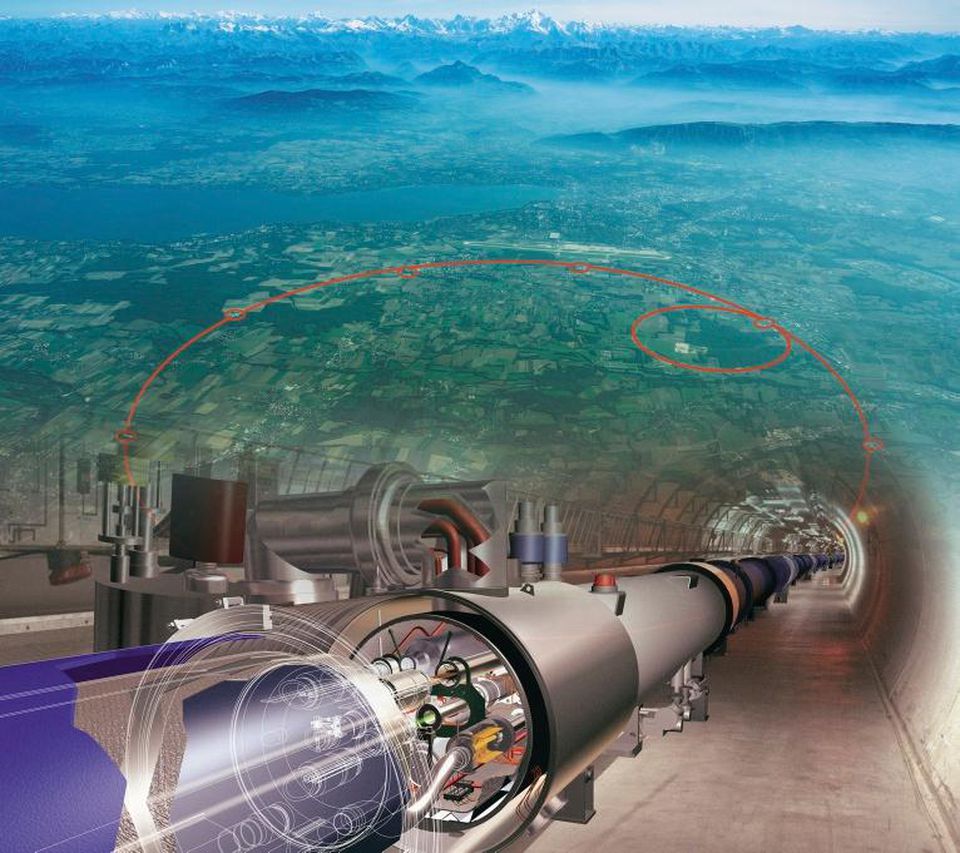

The ATLAS particle detector at the LHC, at the European Organization for Nuclear Research (CERN) in Geneva, Switzerland. The LHC, built inside an underground tunnel with a circumference of 27 km, is the largest and most powerful particle accelerator and the largest machine in the world. But it is able to record only a small fraction of the data it collects.

In the Large Hadron Collider, protons circulate simultaneously clockwise and counterclockwise and collide with one another while moving at 99.9999991% of the speed of light. At the two points where, by design, the largest number of collisions should occur, huge particle detectors were built: CMS and ATLAS. After billions upon billions of collisions at these enormous energies, the LHC has allowed us to go further in our hunt for the fundamental nature of the Universe and in our understanding of the elementary building blocks of matter.


In September of last year, the LHC celebrated 10 years of operation, with the discovery of the Higgs boson as its main achievement. But despite these successes, no new particles, interactions, decays, or new fundamental physics have been found with it. And worst of all, most of the data obtained at the LHC is lost forever.

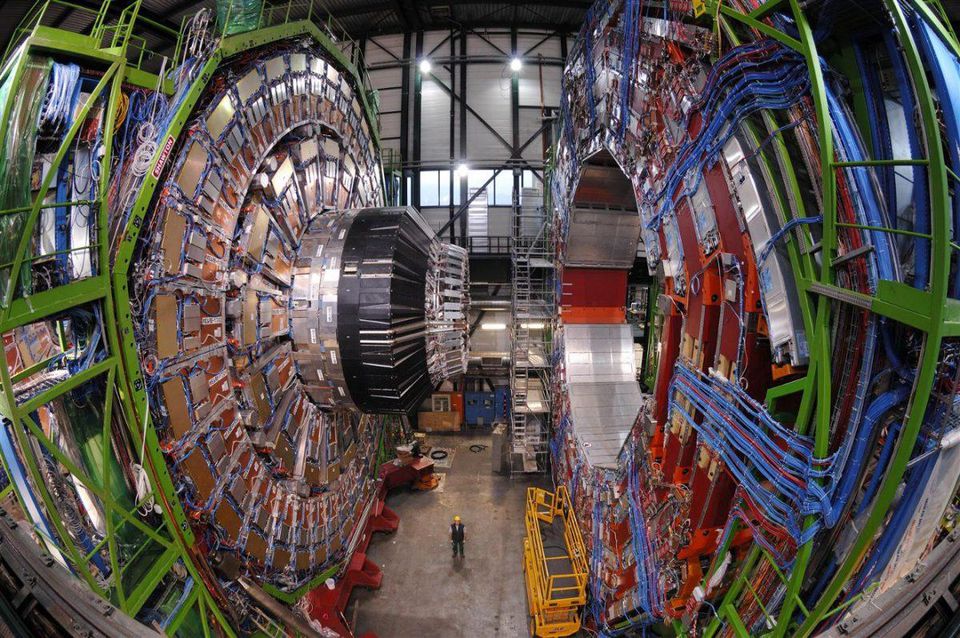

The CMS collaboration, whose detector is shown in the photo before final assembly, has released the most complete results of its work. There are no signs of physics beyond the Standard Model.

This is one of the least understood puzzles in high-energy physics, at least among the general public. The LHC did not just lose most of its data: it lost a staggering 99.997% of it. That's right: out of every million collisions occurring at the LHC, data are recorded for only about 30.

This is out of necessity, owing to the limitations imposed by the laws of nature and by the capabilities of modern technology. But the decision comes with a sense of dread, heightened by the fact that, apart from the expected Higgs boson, nothing else has been discovered. The fear is that new physics is out there waiting to be discovered, but that we missed it by discarding the very data we needed.

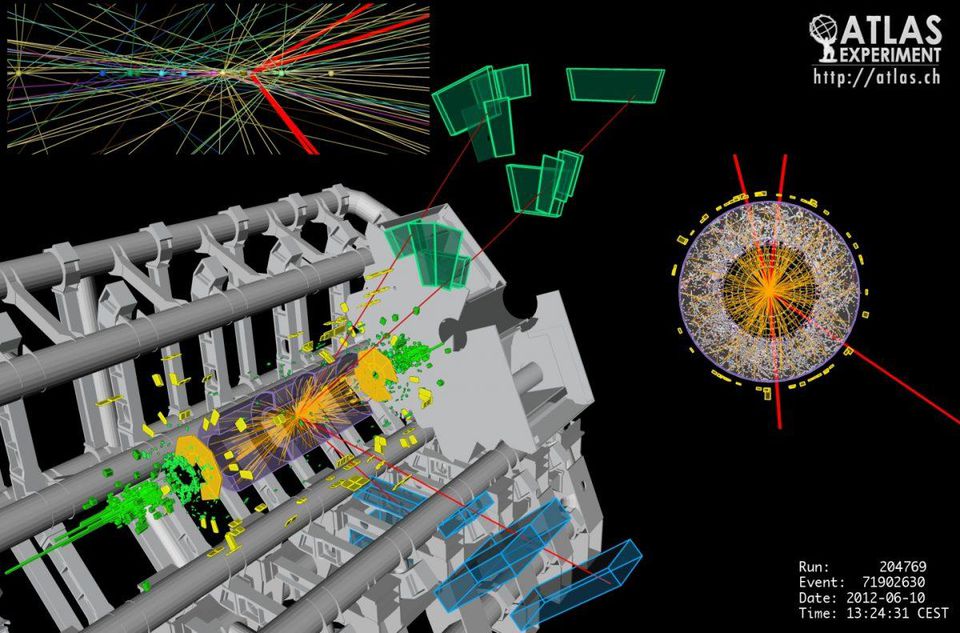

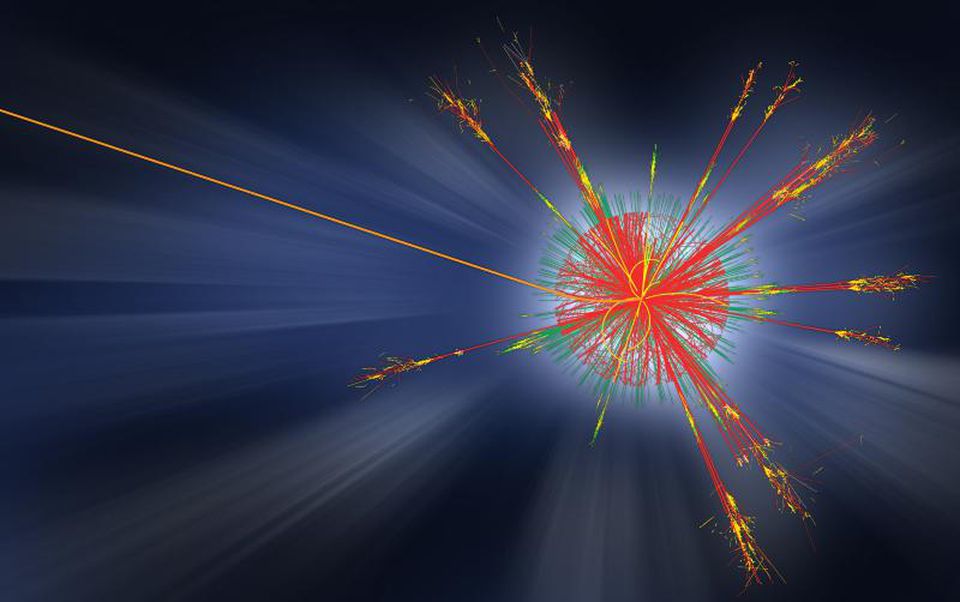

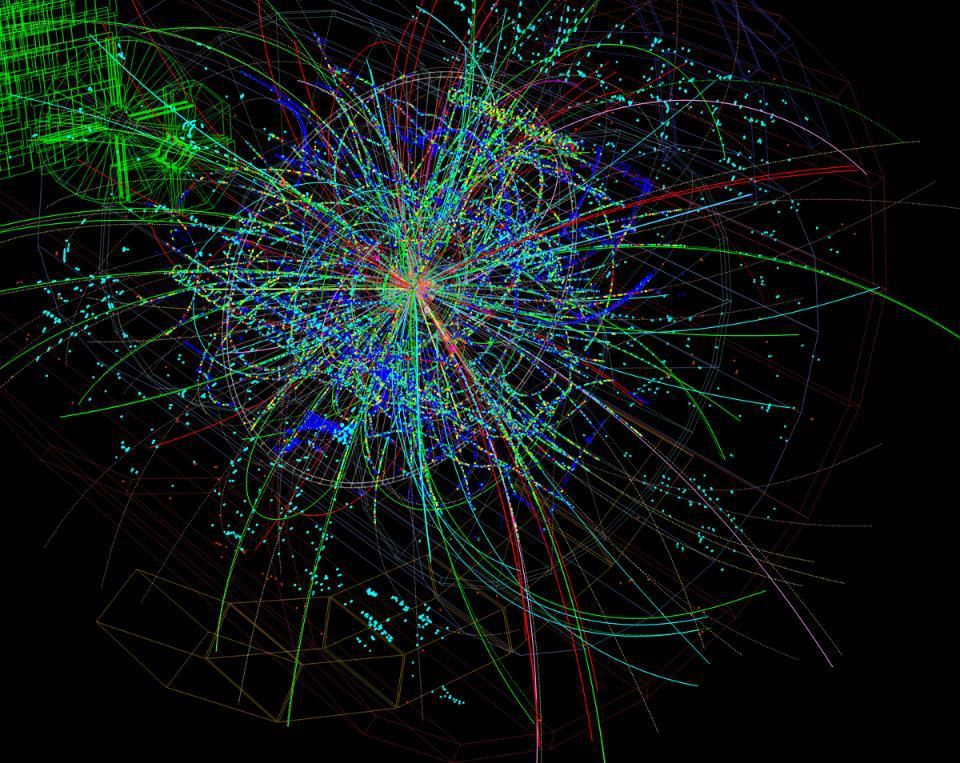

A candidate four-muon event in the ATLAS detector. The tracks of the muons and anti-muons are shown in red; the long-lived muons travel farther than any other unstable particle. This is an interesting event, but for every event recorded, a million others are discarded.

But we had no choice. Something had to be discarded. The LHC works by accelerating protons to speeds close to the speed of light, sending them in opposite directions, and smashing them together. This is how particle accelerators have worked best for generations. According to Einstein, the energy of a particle is a combination of its rest-mass energy (which you may recognize as E = mc²) and its energy of motion, also known as kinetic energy. The faster you go, or more precisely, the closer you get to the speed of light, the more energy a particle can carry.

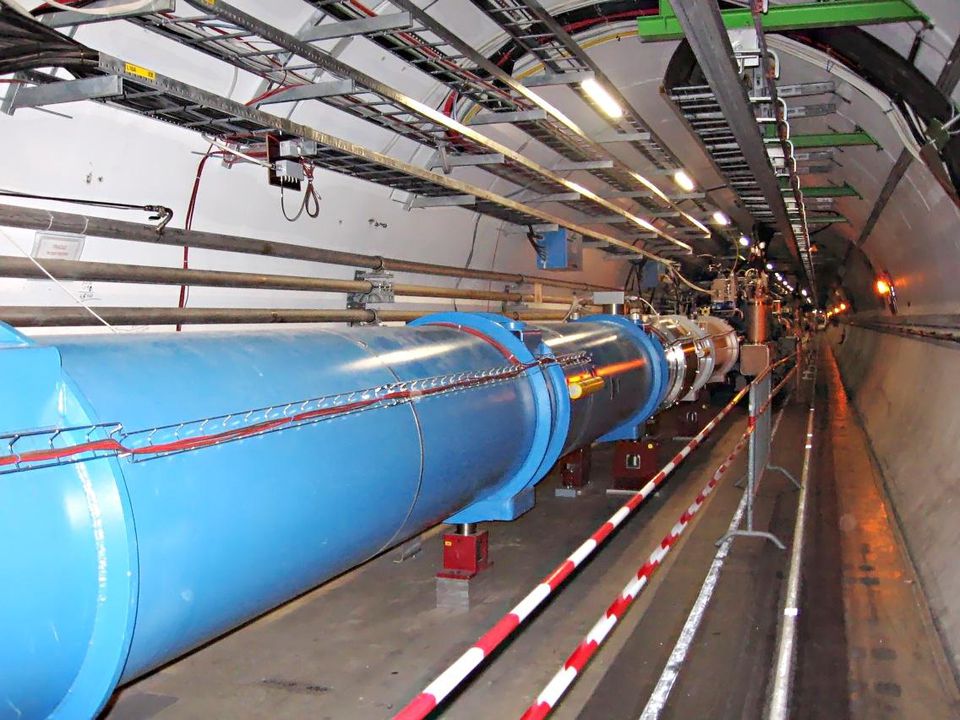

At the LHC, we collide protons at speeds of 299,792,455 m/s, only 3 m/s short of the speed of light. By smashing them together at such high speeds while they move in opposite directions, we make possible the existence of particles that could not appear under other conditions.

The inside of the LHC, where protons fly at 299,792,455 m/s, only 3 m/s short of the speed of light.

The reason is this: every particle (and antiparticle) we create has a certain amount of energy inherent to it in the form of its rest mass. When two particles collide, part of the energy must go into the individual components of those particles, both into their rest energy and into their kinetic energy (i.e., their energy of motion).

But if there is enough energy, part of it can go into the production of new particles! This is where the equation E = mc² becomes more interesting: the point is not only that every particle of mass m has an energy E, but also that with enough available energy we can create new particles. At the LHC, humanity has achieved collisions with more energy available for creating new particles than in any other laboratory in history.

Physicists have searched at the LHC for signs of a huge range of possible new physics, from extra dimensions and dark matter to supersymmetric particles and microscopic black holes. But despite all the data collected from these high-energy collisions, no evidence for any of these scenarios has been found.

Each proton carries approximately 7 TeV of energy, meaning that each proton gains a kinetic energy about 7,000 times its own rest energy. However, collisions are rare, and protons are not just tiny: they are mostly empty space. To increase the probability of a collision, you need more than one proton at a time, so protons are injected in groups (bunches).
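As a rough check on how the quoted speed and energy fit together, here is a minimal sketch that computes the Lorentz factor for a proton moving 3 m/s slower than light and multiplies it by the proton rest energy. The rest energy value (about 0.938 GeV) and the function name are assumptions added for illustration; they do not come from the article. Because the "3 m/s" figure is rounded, the result comes out near 6.6 TeV, which is consistent with "approximately 7 TeV".

```python
import math

C = 299_792_458.0                  # speed of light in m/s (exact by definition)
PROTON_REST_ENERGY_GEV = 0.938272  # proton rest energy in GeV (rounded, assumed value)


def lorentz_factor(shortfall_m_per_s: float) -> float:
    """Lorentz factor for a particle moving `shortfall_m_per_s` slower than light."""
    x = shortfall_m_per_s / C  # this is 1 - beta, where beta = v / c
    # 1 - beta^2 = (1 - beta) * (1 + beta) = x * (2 - x).
    # Writing it this way avoids subtracting two nearly equal numbers.
    return 1.0 / math.sqrt(x * (2.0 - x))


gamma = lorentz_factor(3.0)
total_energy_tev = gamma * PROTON_REST_ENERGY_GEV / 1000.0  # E = gamma * m * c^2

print(f"Lorentz factor: about {gamma:.0f}")
print(f"Total energy per proton: about {total_energy_tev:.1f} TeV")
```

The Lorentz factor of roughly 7,000 is the same "7,000 times its own rest energy" quoted in the text.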

This means that many small bunches of protons race around the LHC at full energy, clockwise and counterclockwise, whenever it is running. The LHC tunnel is approximately 27 km long, and consecutive bunches of protons are only 7.5 m apart. The proton beams are squeezed before they interact at the central point of each detector. And every 25 nanoseconds there is a chance for a collision.
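The 25-nanosecond figure follows directly from the 7.5 m spacing. A minimal sketch, assuming the protons move at essentially the speed of light (the variable names are illustrative):

```python
C = 299_792_458.0      # speed of light in m/s
BUNCH_SPACING_M = 7.5  # distance between consecutive proton bunches, from the text

# Time between two consecutive bunches passing the same point.
crossing_interval_ns = BUNCH_SPACING_M / C * 1e9

# How many such bunch crossings happen per second.
crossings_per_second = 1e9 / crossing_interval_ns

print(f"Interval between crossings: {crossing_interval_ns:.1f} ns")
print(f"Crossings per second: about {crossings_per_second / 1e6:.0f} million")
```

This gives an interval of about 25 ns, or roughly 40 million chances for a collision every second.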

The CMS detector at CERN is one of the two most powerful particle detectors ever built. On average, a new pair of proton bunches collides at its center every 25 nanoseconds.

So what should we do? Settle for a small number of collisions and record every one? That would be a huge waste of energy and of potential data.

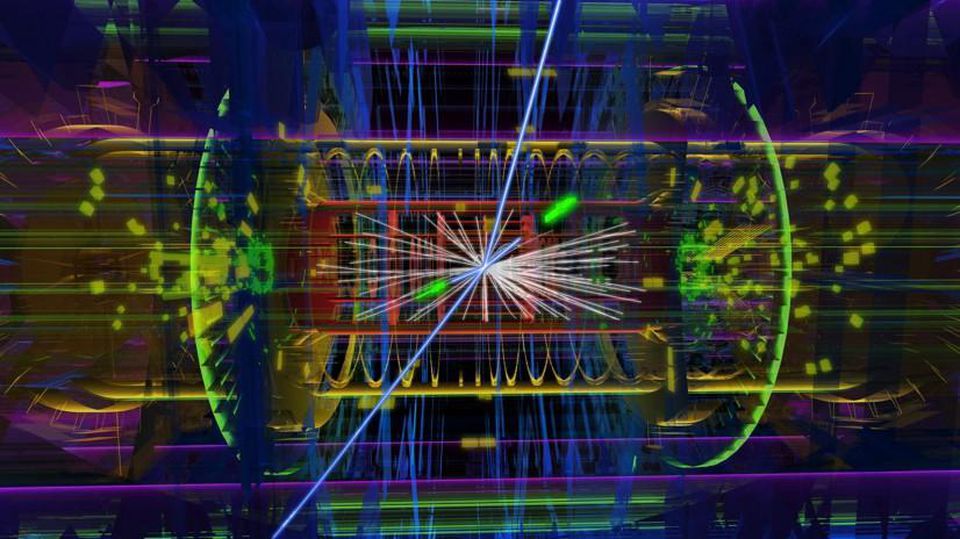

Instead, we pack a lot of protons into each bunch, so that every time the beams cross there is a good chance that particles will collide. And each time such a collision happens, particles fly off in all directions inside the detector, triggering complex electronics and circuits that allow us to reconstruct what was created, when, and where in the detector. It is like a giant explosion: only by measuring all the pieces of shrapnel thrown out can we reconstruct what happened (and what new things were created) at the moment of the blast.

A Higgs boson event in the CMS detector at the LHC. The energy of this spectacular collision is 15 orders of magnitude below the Planck energy, but it is the detector's precise measurements that allow us to reconstruct what happened at the collision point.

However, there is a problem with collecting and recording all of this data. The detectors themselves are large: CMS is 22 m long and ATLAS is 46 m long. At any given moment, particles from three different collisions are present inside CMS, and from six inside ATLAS. Recording the data takes two steps:

- The data must be moved into the detector's memory, a step limited by the speed of the electronics. Even though electrical signals travel at nearly the speed of light, we can “remember” only about one in every five hundred collisions.

- The data in memory must be written to disk (or another permanent medium), which is much slower than writing to memory. We have to decide what to keep and what to throw away.

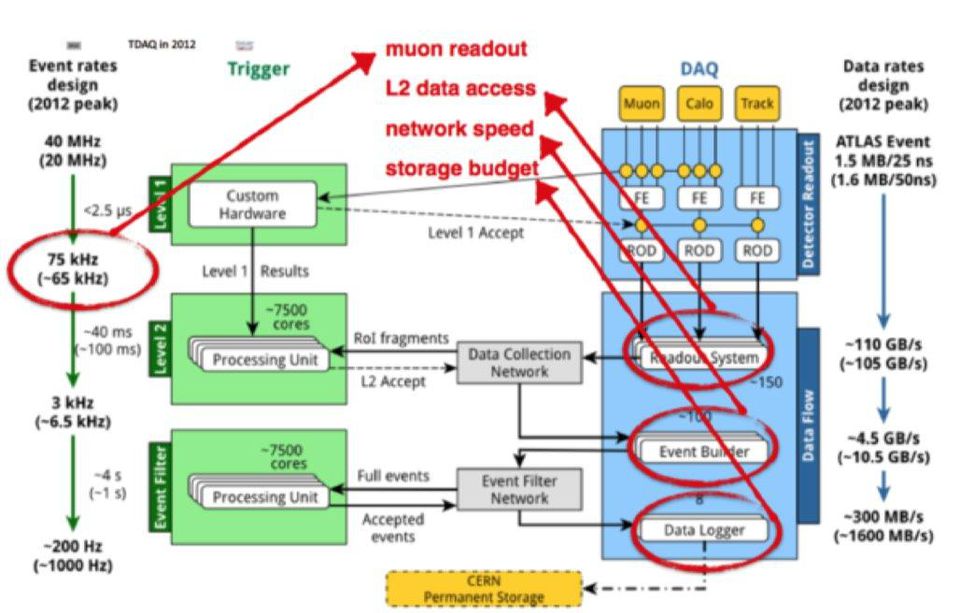

A schematic diagram of how data comes into the system, how the triggers fire, and how the data is analyzed and sent to permanent storage. This diagram is for ATLAS; the one for CMS is slightly different.

We use a few tricks to make sure we choose our events wisely. We immediately examine a number of features of each collision to decide whether it deserves a closer look: this is what we call a trigger. An event that passes the trigger moves on to the next level. (A small amount of data that did not pass the trigger is also saved, just in case there is an interesting signal that we did not think to build a trigger for.) Then a second layer of filters and triggers is applied; if an event is interesting enough to keep, it goes into a buffer to make sure it gets written to storage. We can guarantee that every event flagged as "interesting" is saved, along with a small fraction of uninteresting events.
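The selection logic described above can be sketched as a toy two-stage filter. Everything in this sketch is hypothetical: the event fields, the threshold, the second-stage criterion, and the "keep every 1000th rejected event" rule are made up for illustration and are not the actual ATLAS or CMS trigger conditions.

```python
from dataclasses import dataclass


@dataclass
class Event:
    event_id: int
    transverse_energy_gev: float  # hypothetical summary quantity used by stage 1
    has_isolated_lepton: bool     # hypothetical feature checked by stage 2


LEVEL1_THRESHOLD_GEV = 50.0  # made-up first-stage threshold
PRESCALE = 1000              # made-up: keep every 1000th event that fails stage 1


def run_trigger(events):
    """Return a list of (event_id, reason) pairs for the events that get saved."""
    saved = []
    level1_rejects = 0
    for ev in events:
        if ev.transverse_energy_gev < LEVEL1_THRESHOLD_GEV:
            # Failed the first trigger: normally dropped, but a small
            # sample is kept in case it hides something unexpected.
            level1_rejects += 1
            if level1_rejects % PRESCALE == 0:
                saved.append((ev.event_id, "pass-through sample"))
            continue
        # Second layer of filtering on events that passed stage 1.
        if ev.has_isolated_lepton:
            saved.append((ev.event_id, "triggered"))
    return saved


# Deterministic toy data: 10,000 fake events.
events = [Event(i, float(i % 100), i % 7 == 0) for i in range(10_000)]
saved = run_trigger(events)
print(f"Saved {len(saved)} of {len(events)} events")
```

In this toy version, half of the events fail the first stage, and only five of those survive as an unbiased sample; anything the two stages were not designed to recognize is otherwise gone.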

Since both of these steps are necessary, we can save only 0.003% of the total data for further analysis.

A candidate Higgs boson event in the ATLAS detector. Even with clear signatures and transverse tracks, there is a shower of other particles, because protons are composite particles. This is only the case because the Higgs gives mass to the fundamental constituents of these particles.

How do we know that we are saving the right pieces of information: the ones most likely to record the creation of new particles, to reveal new interactions, or to show signs of new physics?

When protons collide, what comes out is mostly ordinary particles, in the sense that they are made almost entirely of up and down quarks. (These are particles such as protons, neutrons, and pions.) Most collisions are glancing ones, which means that most of the particles hit the detector in the forward or backward direction, along the line of motion.

Particle accelerators on Earth, such as the LHC at CERN, can accelerate particles to speeds very close to the speed of light, but never quite reaching it. Protons are composite particles, and because they move at nearly the speed of light, the new particles produced in collisions scatter mostly forward or backward along the direction of motion rather than sideways.

Therefore, in the first step, we look for tracks of relatively high-energy particles going in the transverse direction, rather than forward or backward along the beams. We try to put into the detector's memory the events that we think had the most available energy E for creating new particles of the highest possible mass m. Then we quickly scan what made it into the detector's memory to see whether it is worth writing to disk. If so, the data is queued for permanent storage.
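To make "transverse" concrete: if the beams run along the z axis, the transverse momentum of a particle is the part of its momentum perpendicular to that axis. The sketch below, with made-up particle momenta and a made-up threshold, shows how a forward-going particle with a very large total momentum can still have almost no transverse momentum.

```python
import math


def transverse_momentum(px: float, py: float, pz: float) -> float:
    """Momentum perpendicular to the beam, assuming the beam runs along z."""
    # pz is deliberately ignored: it is the component along the beam.
    return math.hypot(px, py)


# Hypothetical particles with momentum components (px, py, pz) in GeV.
particles = {
    "forward": (0.3, 0.4, 500.0),    # huge momentum, almost all along the beam
    "transverse": (30.0, 40.0, 5.0), # smaller momentum, mostly sideways
}

PT_THRESHOLD_GEV = 20.0  # made-up selection threshold

interesting = [
    name
    for name, momentum in particles.items()
    if transverse_momentum(*momentum) >= PT_THRESHOLD_GEV
]
print(interesting)
```

Only the sideways-going particle passes the cut here, even though the forward one carries about ten times more momentum overall.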

As a result, we can save about 1,000 events every second. That may sound like a lot, but remember that about 40,000,000 bunches of protons collide every second.
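The two rates above can be turned into a saved fraction with a few lines of arithmetic. This sketch uses only the rounded numbers quoted in the text, so the result (about 25 per million) agrees with the "about 30 per million" and "0.003%" figures only to within that rounding.

```python
BUNCH_CROSSINGS_PER_SECOND = 40_000_000  # from the text
EVENTS_SAVED_PER_SECOND = 1_000          # from the text

saved_fraction = EVENTS_SAVED_PER_SECOND / BUNCH_CROSSINGS_PER_SECOND
saved_per_million = saved_fraction * 1_000_000
one_in = BUNCH_CROSSINGS_PER_SECOND // EVENTS_SAVED_PER_SECOND

print(f"Saved fraction: {saved_fraction:.4%}")
print(f"Saved per million crossings: about {saved_per_million:.0f}")
print(f"That is 1 in {one_in:,}")
```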

Particle tracks from a high-energy collision at the LHC in 2014. Only one in 30,000 such collisions is recorded and saved; most of them are lost.

We think we are acting intelligently in choosing what to keep, but we cannot be 100% sure. In 2010, the CERN data center reached an incredible milestone: 10 petabytes of data. By the end of 2013 it held 100 petabytes, and in 2017 it passed the 200-petabyte mark. Yet for all that volume, we know that we threw away, or failed to record, 30,000 times more data. We may have collected hundreds of petabytes, but we discarded and lost forever many zettabytes of data: more than the entire Internet creates in a year.
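The jump from "hundreds of petabytes" to "many zettabytes" is a one-line multiplication. A minimal sketch, assuming decimal (SI) units and using the 200-petabyte and 30,000-fold figures from the text:

```python
PETABYTE = 10**15   # bytes, SI definition
ZETTABYTE = 10**21  # bytes, SI definition

stored_bytes = 200 * PETABYTE  # data held by 2017, from the text
DISCARD_FACTOR = 30_000        # "30,000 times more data", from the text

discarded_bytes = stored_bytes * DISCARD_FACTOR
discarded_zb = discarded_bytes / ZETTABYTE

print(f"Discarded: about {discarded_zb:.0f} zettabytes")
```

With these inputs, the discarded volume comes to about 6 zettabytes.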

The total amount of data produced at the LHC far exceeds the total amount of data sent and received over the Internet in the past 10 years. But only 0.003% of this data was recorded and saved; everything else is lost forever.

It is entirely possible that the LHC created new particles, saw evidence of new interactions, and observed all the signs of new physics. It is also possible that, because we did not know what we were looking for, we threw it all away and are continuing to do so. The nightmare of finding no physics beyond the Standard Model appears to be coming true. But the real nightmare is the quite plausible possibility that new physics exists, that we built the ideal machine to look for it, that we found it, and that we never realized it because of our own decisions and assumptions. The real nightmare is that we are deceiving ourselves into believing in the Standard Model only because we studied 0.003% of the available data. We think we made a smart decision in keeping the data we selected, but we cannot be sure. Perhaps, without knowing it, we have brought this nightmare upon ourselves.


Source: https://habr.com/ru/post/441240/
