Machine-synaesthetic approach to detecting network DDoS attacks. Part 2

Hello again. Today we continue sharing material timed to the launch of the “Network Engineer” course, which starts in early March. Many of you were interested in the first part of the article “Machine-synaesthetic approach to detecting network DDoS attacks”, so today we are sharing the second and final part.

3.2 Image classification in the anomaly detection problem

The next step is to classify the resulting image. In general, detecting classes (objects) in an image involves using machine learning algorithms to build class models and then applying algorithms that search for those classes (objects) in the image.


Building a model consists of two stages:

a) Extracting the characteristic features of a class, that is, constructing feature vectors for the class elements.

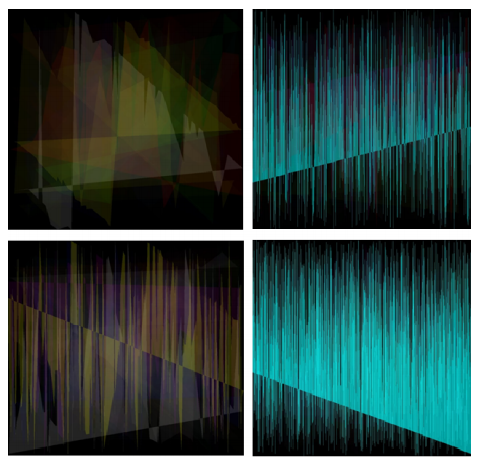

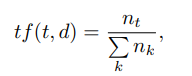

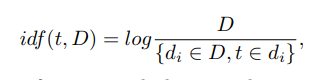

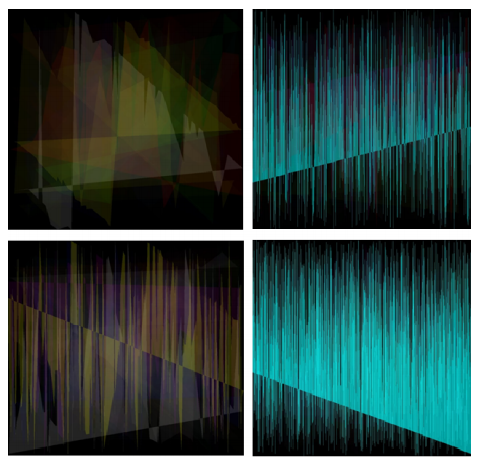

Fig. 1

b) Training the model on these features for subsequent recognition tasks.

An object of a class is described by feature vectors, which are formed from:

a) color information (histogram of oriented gradients);

b) contextual information;

c) the geometric arrangement of the object's parts.

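The classification (prediction) algorithm consists of two stages:

a) Extracting features from the image. At this stage, two tasks are performed:

- Since the image may contain objects of many classes, all of their representatives must be found. To do this, a sliding window can be passed over the image from the upper-left to the lower-right corner.

- The image is rescaled, because the scale of objects in the image may vary.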

b) Assigning the image to a specific class. The input is a formal class description, that is, the set of features extracted from the test images. Based on this information, the classifier decides whether the image belongs to the class and estimates its confidence in that decision.
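A common way to find all objects in an image is to scan it with a sliding window, moving from the upper-left to the lower-right corner, and to repeat the scan at several scales. The sketch below only enumerates the window positions. It assumes a square window and a fixed list of scale factors. The function name `sliding_windows` and its parameters (`win`, `step`, `scales`) are hypothetical and are not taken from the authors' implementation. Cropping and resizing the actual pixels is left out.

```python
from typing import Iterator, Sequence, Tuple


def sliding_windows(width: int, height: int, win: int, step: int,
                    scales: Sequence[float] = (1.0, 0.5)) -> Iterator[Tuple[float, int, int]]:
    """Yield (scale, x, y) for every position of a win x win window.

    For each scale factor the image size is rescaled, and the window moves
    left to right within a row, and row by row from top to bottom.
    """
    for s in scales:
        w, h = int(width * s), int(height * s)  # size of the rescaled image
        for y in range(0, h - win + 1, step):      # top to bottom
            for x in range(0, w - win + 1, step):  # left to right
                yield s, x, y
```

For example, a 4 x 4 image with a 2 x 2 window and step 2 gives four positions at scale 1.0, starting at the upper-left corner, plus one position at scale 0.5.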

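Classification methods. Image classification methods range from predominantly heuristic approaches to formal procedures based on mathematical statistics. There is no generally accepted taxonomy, but several approaches to image classification can be distinguished:

- part-based object modeling methods;

- bag-of-words methods;

- spatial pyramid matching methods.

For the implementation presented in this article, the authors chose the bag-of-words algorithm for the following reasons:

- Part-based modeling and spatial pyramid matching are sensitive to the positions of descriptors in space and to their relative arrangement. These classes of methods are effective for detecting objects in an image. However, the input data has specific characteristics that make them poorly suited to the image classification problem.

- The bag-of-words algorithm has been widely tested in other fields, shows good results, and is fairly simple to implement.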

To analyze the video stream projected from the traffic, we used a naive Bayes classifier [25], which is often used to classify texts with the bag-of-words model. The approach here is similar to text analysis, except that descriptors are used instead of words. The classifier works in two phases: training and prediction.
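To make the bag-of-words analogy concrete, here is a minimal multinomial naive Bayes classifier over per-image vectors of visual-word counts (or tf-idf weights). This is an illustrative sketch, not the authors' code. The class name `MultinomialNB`, Laplace smoothing with `alpha=1.0`, and the labels "normal" and "attack" are our own assumptions. The sketch also omits the AdaBoost boosting that the authors apply on top of naive Bayes.

```python
import math
from typing import Dict, List, Sequence


class MultinomialNB:
    """Minimal multinomial naive Bayes over per-image visual-word vectors."""

    def __init__(self, alpha: float = 1.0) -> None:
        self.alpha = alpha  # Laplace (additive) smoothing constant

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[str]) -> "MultinomialNB":
        n_features = len(X[0])
        self.classes: List[str] = sorted(set(y))
        self.log_prior: Dict[str, float] = {}
        self.log_lik: Dict[str, List[float]] = {}
        for c in self.classes:
            rows = [x for x, label in zip(X, y) if label == c]
            self.log_prior[c] = math.log(len(rows) / len(X))
            # Total weight of each visual word within class c.
            totals = [sum(r[j] for r in rows) for j in range(n_features)]
            denom = sum(totals) + self.alpha * n_features
            self.log_lik[c] = [math.log((t + self.alpha) / denom) for t in totals]
        return self

    def predict_proba(self, x: Sequence[float]) -> Dict[str, float]:
        # Log-posterior up to a constant: log P(c) + sum_j x_j * log P(word_j | c).
        scores = {c: self.log_prior[c] + sum(v * lp for v, lp in zip(x, self.log_lik[c]))
                  for c in self.classes}
        top = max(scores.values())  # subtract the max before exp() for stability
        exp = {c: math.exp(s - top) for c, s in scores.items()}
        z = sum(exp.values())
        return {c: e / z for c, e in exp.items()}

    def predict(self, x: Sequence[float]) -> str:
        probs = self.predict_proba(x)
        return max(probs, key=probs.get)
```

Working in log space avoids numerical underflow when the vocabulary is large. The experiments below use 1000 clusters. `predict_proba` returns the kind of per-class confidence that the prediction phase relies on.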

The training phase. Each frame (image) is fed to a descriptor extraction algorithm, in this case the scale-invariant feature transform (SIFT) [26]. Keypoints (singular points) are then matched between frames. A keypoint of an object's image is a point that is most likely to appear in other images of the same object.

To match the keypoints of an object across different images, a descriptor is used. A descriptor is a data structure that identifies a keypoint and distinguishes it from the others. It may or may not be invariant to transformations of the object's image. In our case, the descriptor is invariant to scale transformations. The descriptor makes it possible to match a keypoint of an object in one image with the same keypoint in another image of that object.
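The paragraph above does not specify how descriptors are compared. SIFT descriptors are 128-dimensional vectors, and one common way to compare them is a nearest-neighbour search in Euclidean distance combined with Lowe's ratio test. The ratio test rejects a match when the second-nearest candidate is almost as close as the nearest. The sketch below assumes that approach. The 2-D toy descriptors, the function names, and the 0.8 threshold are illustrative assumptions.

```python
import math
from typing import List, Optional, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_descriptors(query: Sequence[Sequence[float]],
                      train: Sequence[Sequence[float]],
                      ratio: float = 0.8) -> List[Optional[int]]:
    """For each query descriptor, return the index of its match in `train`, or None.

    A match is accepted only if the nearest neighbour is clearly closer than the
    second nearest (Lowe's ratio test); ambiguous points are left unmatched.
    Requires at least two descriptors in `train`.
    """
    matches: List[Optional[int]] = []
    for q in query:
        dists = sorted((euclidean(q, t), i) for i, t in enumerate(train))
        best, second = dists[0], dists[1]
        matches.append(best[1] if best[0] < ratio * second[0] else None)
    return matches
```

For example, with `train = [[0, 0], [10, 0], [0, 10]]`, the point `[1, 0]` matches index 0. The point `[5, 5]` is equidistant from all three and is left unmatched.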

The descriptors obtained from all images are then grouped by similarity using the k-means clustering method [26, 27]. This grouping is used to train the classifier, which decides whether an image represents abnormal behavior.
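The grouping step can be sketched with Lloyd's k-means algorithm in plain Python. This is an illustrative sketch that assumes Euclidean distance and random initialization from the data points. The helper names `nearest` and `kmeans` are hypothetical. The authors used 1000 clusters (Section 4); a toy run needs only k = 2.

```python
import random
from typing import List, Sequence, Tuple

Vector = List[float]


def nearest(point: Sequence[float], centroids: Sequence[Sequence[float]]) -> int:
    """Index of the centroid closest to `point` (squared Euclidean distance)."""
    return min(range(len(centroids)),
               key=lambda j: sum((p - c) ** 2 for p, c in zip(point, centroids[j])))


def kmeans(points: Sequence[Sequence[float]], k: int, iters: int = 100,
           seed: int = 0) -> Tuple[List[Vector], List[int]]:
    """Lloyd's k-means; returns (centroids, cluster index of each point)."""
    rng = random.Random(seed)
    centroids: List[Vector] = [list(p) for p in rng.sample(list(points), k)]
    labels: List[int] = []
    for _ in range(iters):
        # Assignment step: attach every descriptor to its nearest centroid.
        labels = [nearest(p, centroids) for p in points]
        # Update step: move each centroid to the mean of its members.
        updated: List[Vector] = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            updated.append([sum(col) / len(members) for col in zip(*members)]
                           if members else centroids[j])  # keep empty clusters in place
        if updated == centroids:  # converged: assignments can no longer change
            break
        centroids = updated
    return centroids, labels
```

The resulting centroids act as the visual vocabulary. Each descriptor is represented by the index of its nearest centroid, which plays the role of a word in the bag-of-words model.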

The step-by-step training algorithm for the image descriptor classifier is as follows:

Step 1. Extract all descriptors from the image sets with and without an attack.

Step 2. Cluster all descriptors into k clusters using the k-means method.

Step 3. Compute the matrix A(m, k), where m is the number of images and k is the number of clusters. Element (i, j) stores how often descriptors from the j-th cluster appear in the i-th image. We call this matrix the occurrence frequency matrix.

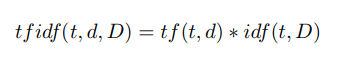

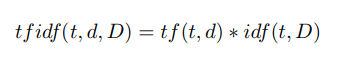

Step 4. Compute the descriptor weights using the tf-idf formula [28]:

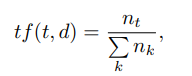

Here tf (term frequency) is the frequency with which a descriptor appears in the given image, defined as

$$\mathrm{tf}(t, d) = \frac{n_t}{\sum_k n_k},$$

where t is the descriptor and n_t is the number of occurrences of descriptor t in image d. The sum in the denominator runs over all descriptors k in the image, so it equals the total number of descriptors in the image. The idf (inverse document frequency) is the inverse of the fraction of images in the sample that contain the descriptor, defined as

$$\mathrm{idf}(t, D) = \log \frac{|D|}{|\{d_i \in D : t \in d_i\}|},$$

where |D| is the total number of images in the sample and |{d_i ∈ D : t ∈ d_i}| is the number of images in D in which t occurs (that is, n_t ≠ 0).

Step 5. Replace the descriptor counts in matrix A with the corresponding weights.

Step 6. Classification. We use boosting of naive Bayes classifiers (AdaBoost).

Step 7. Save the trained model to a file.

Step 8. The training phase is complete.
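Steps 3 to 5 can be sketched as follows. The sketch assumes that each image has already been reduced to the list of cluster indices of its descriptors, for example by a k-means step. The function names are hypothetical. `idf_vector` follows the idf definition above and assumes the natural logarithm. Clusters that occur in no image receive weight 0 to avoid division by zero.

```python
import math
from typing import List, Sequence


def frequency_matrix(labels_per_image: Sequence[Sequence[int]], k: int) -> List[List[int]]:
    """Step 3: A[i][j] = number of descriptors of image i that fall into cluster j."""
    A = [[0] * k for _ in labels_per_image]
    for i, labels in enumerate(labels_per_image):
        for j in labels:
            A[i][j] += 1
    return A


def idf_vector(A: Sequence[Sequence[int]]) -> List[float]:
    """idf_j = log(|D| / number of images containing cluster j); 0 if none contain it."""
    m, k = len(A), len(A[0])
    df = [sum(1 for row in A if row[j] > 0) for j in range(k)]
    return [math.log(m / d) if d else 0.0 for d in df]


def tfidf(A: Sequence[Sequence[int]], idf: Sequence[float]) -> List[List[float]]:
    """Steps 4-5: replace each count n by tf * idf, where tf = n / descriptors in the image."""
    weighted = []
    for row in A:
        total = sum(row)
        weighted.append([(n / total) * w if total else 0.0 for n, w in zip(row, idf)])
    return weighted
```

For example, three images whose descriptors fall into clusters `[0, 0, 1]`, `[1, 2]` and `[0]` (k = 3) give A = `[[2, 1, 0], [0, 1, 1], [1, 0, 0]]`. Cluster 2 appears in only one image, so it receives the largest idf, log 3.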

The prediction phase. The prediction phase differs little from the training phase: descriptors are extracted from the image and matched to the existing clusters. From this mapping, a vector is built in which each element is the frequency with which descriptors from the corresponding cluster appear in the image. By analyzing this vector, the classifier predicts an attack with a certain probability.

The general prediction algorithm, based on a pair of classifiers, is as follows:

Step 1. Extract all descriptors from the image;

Step 2. Assign each extracted descriptor to the nearest of the existing clusters;

Step 3. Compute the [1, k] vector of cluster occurrence frequencies;

Step 4. Compute the weight of each descriptor using the tf-idf formula given above;

Step 5. Replace the occurrence frequencies in the vector with their weights;

Step 6. Classify the resulting vector with the previously trained classifier;

Step 7. Draw a conclusion about the presence of anomalies in the observed network based on the classifier's prediction.
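Here is a sketch of the prediction steps for a single image. It assumes that the descriptors have already been extracted (Step 1) and that the centroids and idf weights from the training phase are available. It also assumes that the trained classifier is exposed as a function returning the probability of an attack. As the description of the prediction phase states, descriptors are matched to the existing clusters rather than re-clustered. The `classify` callback and the 0.5 `threshold` are hypothetical placeholders.

```python
from typing import Callable, List, Sequence


def nearest_cluster(d: Sequence[float], centroids: Sequence[Sequence[float]]) -> int:
    """Index of the saved centroid closest to descriptor d."""
    return min(range(len(centroids)),
               key=lambda j: sum((a - b) ** 2 for a, b in zip(d, centroids[j])))


def image_vector(descriptors: Sequence[Sequence[float]],
                 centroids: Sequence[Sequence[float]],
                 idf: Sequence[float]) -> List[float]:
    """Steps 2-5: map descriptors to existing clusters, count them, weight by tf-idf."""
    counts = [0] * len(centroids)  # the [1, k] frequency vector
    for d in descriptors:
        counts[nearest_cluster(d, centroids)] += 1
    total = sum(counts)
    return [(c / total) * w if total else 0.0 for c, w in zip(counts, idf)]


def detect_anomaly(descriptors: Sequence[Sequence[float]],
                   centroids: Sequence[Sequence[float]],
                   idf: Sequence[float],
                   classify: Callable[[List[float]], float],
                   threshold: float = 0.5) -> bool:
    """Steps 6-7: `classify` returns P(attack); flag an anomaly above `threshold`."""
    return classify(image_vector(descriptors, centroids, idf)) > threshold
```

For example, with centroids `[[0, 0], [10, 10]]`, idf weights `[1.0, 2.0]` and descriptors `[[1, 1], [9, 9], [11, 10], [0, 1]]`, each cluster receives two descriptors. `image_vector` then returns `[0.5, 1.0]`.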

4. Evaluation of detection efficiency

The effectiveness of the proposed method was evaluated experimentally. Several parameters of the experiment were chosen empirically: 1000 clusters were used for clustering, and the generated images were 1000 × 1000 pixels.

4.1 Experimental data set

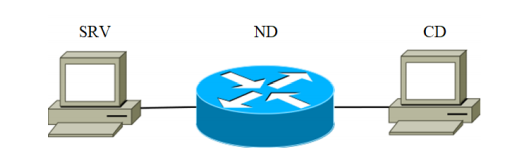

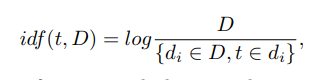

A test setup was assembled for the experiments. It consists of three devices connected by a communication channel. The block diagram of the setup is shown in Figure 2.

Fig. 2

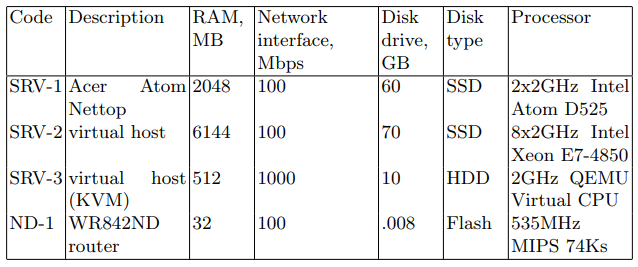

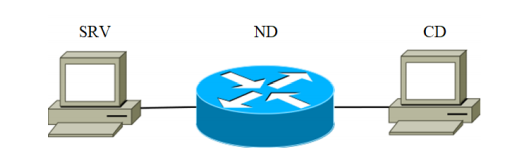

The SRV device plays the role of the attacked server (hereinafter, the target server). The devices listed in Table 1 under the SRV code were used as the target server in turn. The second device is a network device that forwards network packets. Its characteristics are given in Table 1 under the code ND-1.

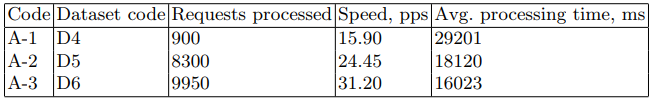

Table 1. Network device specifications

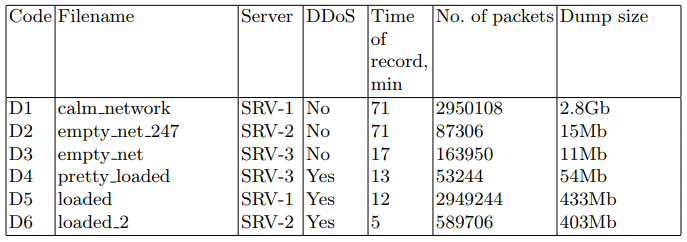

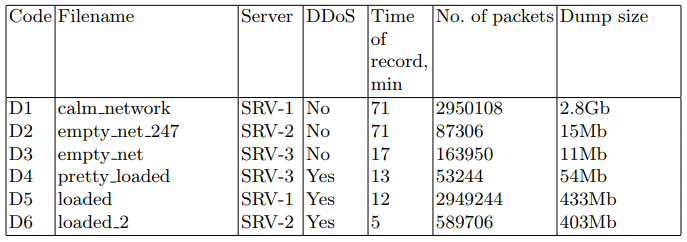

On the target servers, network packets were captured to a PCAP file with the tcpdump utility for later use by the detection algorithms. The data sets are described in Table 2.

Table 2. Intercepted Network Packets

The target servers ran the following software: a Linux distribution, the nginx 1.10.3 web server, and the PostgreSQL 9.6 DBMS. A dedicated web application was written to emulate system load. It queries a database containing a large amount of data, and the query is designed to minimize the effect of caching. During the experiments, requests were sent to this web application.

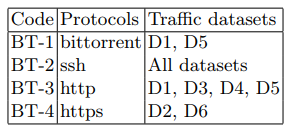

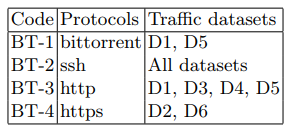

The attack was carried out from the third device, the client (Table 1), using the Apache Benchmark utility. The composition of the background traffic during the attack and at other times is given in Table 3.

Table 3. Background traffic features

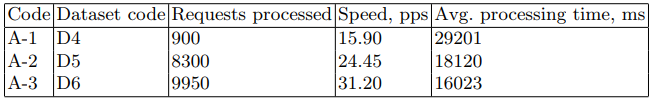

For the attack, we implemented a variant of the distributed DoS HTTP GET flood. In essence, such an attack generates a continuous stream of GET requests, in this case from the CD-1 device. To generate it, we used the ab utility from the apache-utils package. The result was a set of files containing information about the network state. Their main characteristics are given in Table 2. The main parameters of the attack scenario are given in Table 4.

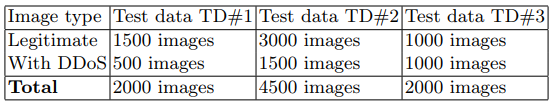

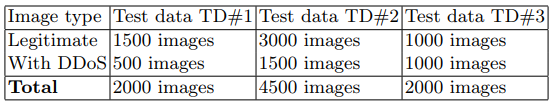

From the resulting network traffic dumps, the generated image sets TD #1 and TD #2 were obtained and used in the training phase. Set TD #3 was used in the prediction phase. A summary of the test data sets is given in Table 5.

Table 4. Features of DDoS attacks

Table 5. Test image sets

4.2 Performance criteria

The main parameters evaluated in this study were:

a) DR (detection rate): the ratio of the number of detected attacks to the total number of attacks. The higher this value, the more effective the anomaly detection system (ADS).

b) FPR (false positive rate): the ratio of the number of normal objects mistakenly classified as attacks to the total number of normal objects. The lower this value, the more effective the anomaly detection system.

c) CR (complex rate): a composite indicator that combines DR and FPR. Since DR and FPR were considered equally important in this study, it was calculated as CR = (DR + (1 - FPR)) / 2, so that a lower false positive rate raises the score.

1000 images labeled as abnormal were submitted to the classifier, and DR was calculated from the recognition results for each training set size. The values were DR = 9.5% for TD #1 and DR = 98.4% for TD #2. Next, the second half of the images (labeled normal) was classified, and FPR was calculated from the results: FPR = 3.2% for TD #1 and FPR = 4.3% for TD #2. This gives the following composite indicators: CR = 53.15% for TD #1 and CR = 97.05% for TD #2.
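The reported composite indicators can be reproduced from DR and FPR when CR is taken as the mean of DR and (1 - FPR). The sketch below computes the three metrics from raw counts. The counts in the usage example are back-calculated from the reported percentages, assuming 1000 abnormal and 1000 normal test images. They are not published figures.

```python
from typing import Tuple


def detection_metrics(tp: int, fn: int, fp: int, tn: int) -> Tuple[float, float, float]:
    """Return (DR, FPR, CR) as fractions from confusion-matrix counts."""
    dr = tp / (tp + fn)        # detected attacks / all attack images
    fpr = fp / (fp + tn)       # normal images flagged as attacks / all normal images
    cr = (dr + (1 - fpr)) / 2  # equal weight to detecting attacks and avoiding false alarms
    return dr, fpr, cr
```

For TD #2, `detection_metrics(984, 16, 43, 957)` gives DR = 0.984, FPR = 0.043 and CR ≈ 0.9705. For TD #1, `detection_metrics(95, 905, 32, 968)` gives CR ≈ 0.5315. Both values agree with the figures above.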

5. Conclusions and future research

The experimental results show that the proposed anomaly detection method detects attacks well: on the larger training set, the composite indicator CR reaches 97%. However, the method has some limitations:

1. The DR and FPR values show that the algorithm is sensitive to the size of the training set, which is a conceptual problem for machine learning algorithms in general. A larger training set improves detection rates, but it is not always possible to collect a sufficiently large training set for a particular network.

2. The developed algorithm is deterministic: the same image is always classified with the same result.

3. The effectiveness of the approach is sufficient as a proof of concept. However, the number of false positives is also large, which may complicate practical deployment.

To overcome limitation 3, the authors plan to replace the naive Bayes classifier with a convolutional neural network, which, in their opinion, should improve the accuracy of the anomaly detection algorithm.

As usual, we look forward to your comments, and we invite everyone to the open day, which will be held next Monday.


References

1. Ahmed M., Mahmood A.N., Hu J.: A survey of network anomaly detection techniques. In: Journal of Network and Computer Applications. Vol. 60, p. 21 (2016)

2. Afontsev E.: Network anomalies (2006), nag.ru/articles/reviews/15588 setevyie-anomalii.html

3. Berestov A.A.: On the Internet. In: XV All-Russian Scientific Conference on the Higher School System, pp. 180–276 (2008)

4. Galtsev A.V.: for the Candidate Degree of Technical Sciences. Samara (2013)

5. Kornienko A.A., Slyusarenko I.M.: Intrusion Detection Systems (2008), citforum.ru/security internet / ids overview /

6. Kussul N., Sokolov A.: Adaptive anomaly detection using variable ordering. Part 2: Methods of detecting anomalies and the results of experiments. In: Informatics and Control Problems. Issue 4, pp. 83–88 (2003)

7. Mirkes E.M.: Neurocomputer: draft standard. Science, Novosibirsk, pp. 150–176 (1999)

8. Tsvirko D.A.: Prediction of a network attack route using production model methods (2012), academy.kaspersky.com/downloads/academycup participants / cvirko d. ppt

9. Somayaji A.: Automated response using system-call delays. In: USENIX Security Symposium 2000, pp. 185–197 (2000)

10. Ilgun K.: USTAT: A Real-time Intrusion Detection System for UNIX. In: IEEE, University of California (1992)

11. Eskin E., Lee W., Stolfo S.J.: Modeling system calls for intrusion detection with dynamic window sizes. In: DARPA Information Survivability Conference and Exposition (DISCEX II) (June 2001)

12. Ye N., Xu M., Emran S.M.: Probabilistic networks with undirected links for anomaly detection. In: 2000 IEEE Workshop on Information Assurance and Security, West Point, NY (2000)

13. Michael C.C., Ghosh A.: Two state-based approaches to program-based anomaly detection. In: ACM Transactions on Information and System Security. Vol. 5, no. 2 (2002)

14. Garvey T.D., Lunt T.F.: Model-based Intrusion Detection. In: 14th National Computer Security Conference, Baltimore, MD (1991)

15. Theus M., Schonlau M.: Intrusion detection based on structural zeroes. In: Statistical Computing and Graphics Newsletter. Vol. 9, no. 1, pp. 12–17 (1998)

16. Tan K.: The application of neural networks to computer security. In: IEEE International Conference on Neural Networks. Vol. 1, pp. 476–481, Perth, Australia (1995)

17. Ilgun K., Kemmerer R.A., Porras P.A.: State Transition Analysis: A Rule-Based Intrusion Detection System. In: IEEE Trans. Software Eng. Vol. 21, no. 3 (1995)

18. Eskin E.: Anomaly detection over noisy data using learned probability distributions. In: 17th International Conf. on Machine Learning, pp. 255–262. Morgan Kaufmann, San Francisco, CA (2000)

19. Ghosh K., Schwartzbard A., Schatz M.: Learning program behavior profiles for intrusion detection. In: 1st USENIX Workshop on Intrusion Detection and Network Monitoring, pp. 51–62, Santa Clara, California (1999)

20. Ye N.: A Markov chain model for anomaly detection. In: 2000 IEEE Systems, Man, and Cybernetics, Information Assurance and Security Workshop (2000)

21. Axelsson S.: The base-rate fallacy and its implications for the difficulty of intrusion detection. In: ACM Conference on Computer and Communications Security, pp. 1–7 (1999)

22. Chikalov I., Moshkov M., Zielosko B.: Optimization of dynamic programming. In: Vestnik of Lobachevsky State University of Nizhni Novgorod, no. 6, pp. 195–200

23. Chen C.H.: Handbook of pattern recognition and computer vision. University of Massachusetts Dartmouth, USA (2015)

24. Gantmacher F.R.: Theory of matrices, p. 227. Science, Moscow (1968)

25. Murty M.N., Devi V.S.: Pattern Recognition: An Algorithmic Approach, pp. 93–94 (2011)


Source: https://habr.com/ru/post/441182/
