Changing the schema of PostgreSQL tables without long locks. Yandex lecture

If many operations are performed at the same time to change the database schema, the service cannot work correctly on writing. Developer Vladimir Kolyasinsky explained which operations in PostgreSQL require long locks and how the Yandex.Connect team provides almost 100% availability of the service for writing during the execution of such operations. In addition, you will learn about the library for Django, which is designed to automate part of the described processes.

- Good evening everyone! My name is Vladimir, I have been working at Yandex for five years. For the last two years I have been developing internal services and services for organizations.

It is a little about what services for the organizations are. We have been using a large number of internal services for quite a long time: Wikis for storing and exchanging data, a messenger for quick communication with colleagues, a tracker for organizing the work process, forms for conducting interviews inside and outside, as well as many other services.

')

Some time ago we decided that our services were great and that they could be useful not only inside Yandex, but also to people outside. We began to display them on a single platform Yandex.Connect, adding existing external services, such as Mail for the domain.

I am currently developing a Form Designer and a wiki. The stack used is basically services written in Python second and third versions; Django 1.9-1.11. Like a DB for the most part PostgreSQL. It is also Celery with MongoDB and SQS as brokers. It all works in Docker.

Let us turn to the problem we are facing. Services are popular, they are used by hundreds of thousands of people every day, data is accumulated, tables become more and more, and over time, many DB schema change operations that were performed unnoticed by users yesterday begin to interfere with the normal operation of services.

We will talk today about how we cope with such situations and how we achieve high availability of services for reading and writing.

First, consider what operations with PostgreSQL require long locks on the table. By lock, I mean any type of lock that prevents normal work with the table - be it access exclusive, which prevents both writing and reading, or weaker lock levels that prevent only writing.

Then we will see how locks can be avoided during the execution of such operations. Then we will talk about which operations with PostgreSQL are initially fast and do not require long locks. And at the end, let's talk about our zero_downtime_migrations library, which we use to automate some of the previously described techniques for avoiding long locks.

Operations that require a long lock:

Create index. By default, it does not block read operations in the table, but all write operations will be blocked for the entire time the index is created, respectively, the service will be read only.

Also, adding a new column with a default value is one of such operations, since PostgreSQL will overwrite the entire table under the hood, and for this time it will be blocked for both reading and writing. In addition, all its indexes will be overwritten.

About changing the type of the column - a similar thing happens, the plate will also be overwritten again. It should be noted that this not only takes a long time on large tables, but also briefly requires up to twice the amount of free memory from the occupied table.

Also, the VACUUM FULL operation requires a similar level of blocking, like the previous operations - this is access exclusive. VACUUM FULL will also block all read and write operations to the table.

The last two operations are the addition of the uniqueness properties column and, in general, the addition of CONSTRAINT. They also require locking at the time of data verification, although they take significantly less time than those discussed earlier, since they do not overwrite tables under the hood.

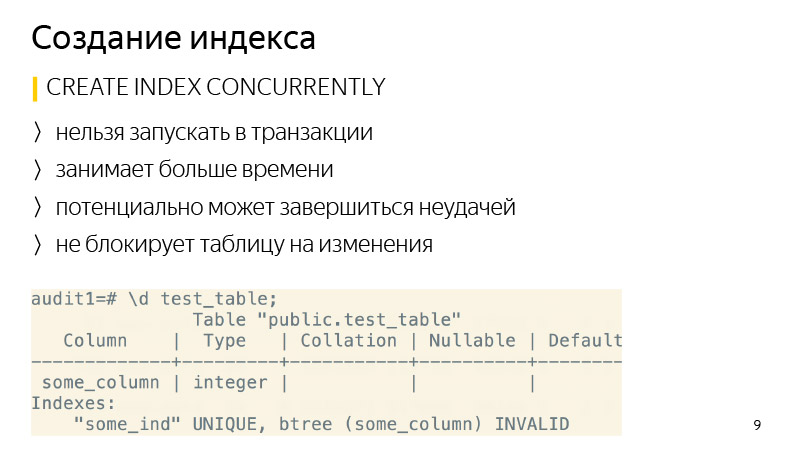

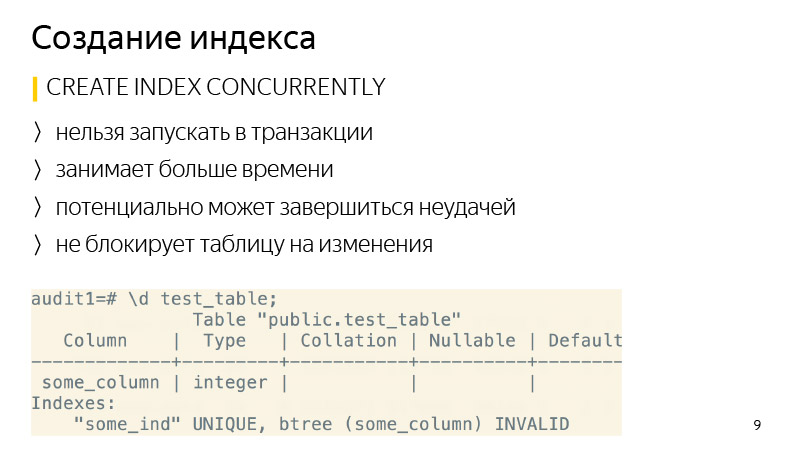

Create index. It's quite simple, you can create it using the CONCURRENTLY keyword. What's the Difference? This operation will take more time, since not one, but several passes through the table will be performed, and it will also wait until all current operations that can potentially change the index are completed. And it can also end in failure — for example, if creating a unique index reveals a violation of the conditions of uniqueness. Then the index will be marked as invalid, and it will have to be deleted and re-created. The REINDEX command is not recommended, as it works in the same way as the usual CREATE INDEX, that is, blocks the table for writing.

Regarding the removal of the index - starting from version 9.3, the index can also be deleted CONCURRENTLY to avoid locks during its deletion, although in general this is a quick operation.

Let's look at adding a new column with a default value. Here is the standard operation that is performed when we want to execute such a command, including Django performing such an operation.

How can I rewrite it to avoid rewriting a table? First, we add a new column with no default value in one transaction, and add a default value with a separate query. What is the difference? When we add a default value to an existing column, it does not change the existing data in the table. Only metadata changes. That is, for all new lines this default value will already be guaranteed. It remains for us to update all existing lines that were in the table at the time of the execution of this command. What we will do in batches of several thousand copies in order not to block a large amount of data for a long time.

After all the data we have updated, it remains only to perform SET NOT NULL, if we create a NOT NULL-column. If you do not create, then do not. In this way, it is possible to avoid rewriting the table when making such changes.

Such a sequence of commands takes more time than the execution of a normal command, since it depends on the size of the table and the number of indexes in it, and the usual command simply blocks all operations and rewrites the table regardless of the load, since there is no load at this moment. But it does not matter so much, because during the operation the table is available both for reading and writing. Takes a long time, just need to follow this and that's it.

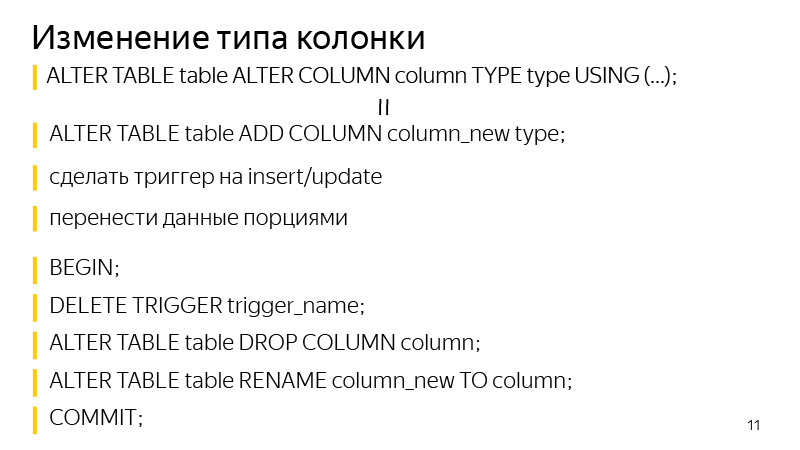

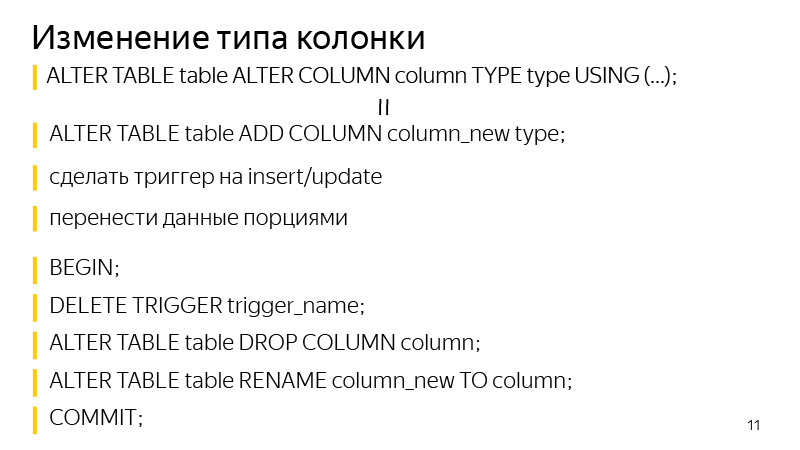

About changing the type of column. The approach is similar to adding a column with a default value. We first add a separate column of the type we need, then we add triggers to change the data in the original column in order to write at once to both columns, to a new one with the data type we need. For all new entries, they will immediately go to both of these columns. We need to update all existing ones. What we are doing in portions, just as it was on the previous slide, is similar.

After that, it remains to delete the trigger in one transaction, delete the old column and rename the old column to a new one. Thus, we achieved the same result: we changed the type of the column, while the table lock was not long.

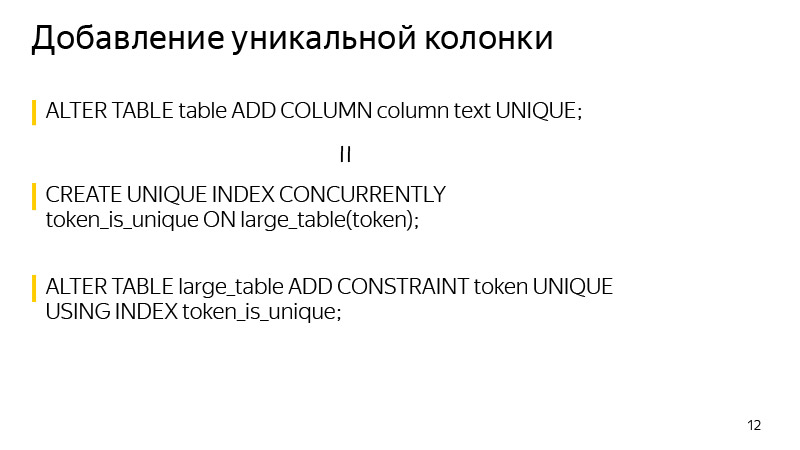

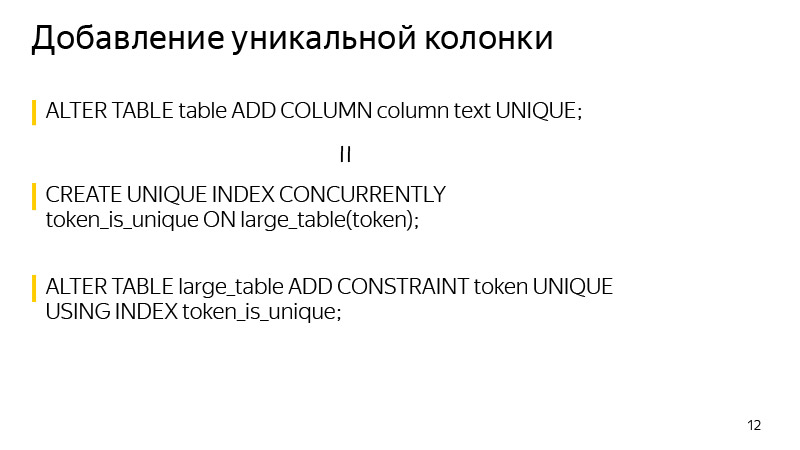

About adding a unique column. A lock is taken at the time of creation. It can be avoided if you know that the uniqueness in PostgreSQL is guaranteed by building a unique index. We can build the required unique index ourselves using CONCURRENTLY. And after constructing this index, create a CONSTRAINT using this index. After this, the definition of the initial index from the table will disappear, and the result, which will show us the definition of the table, will be no different after performing these two operations.

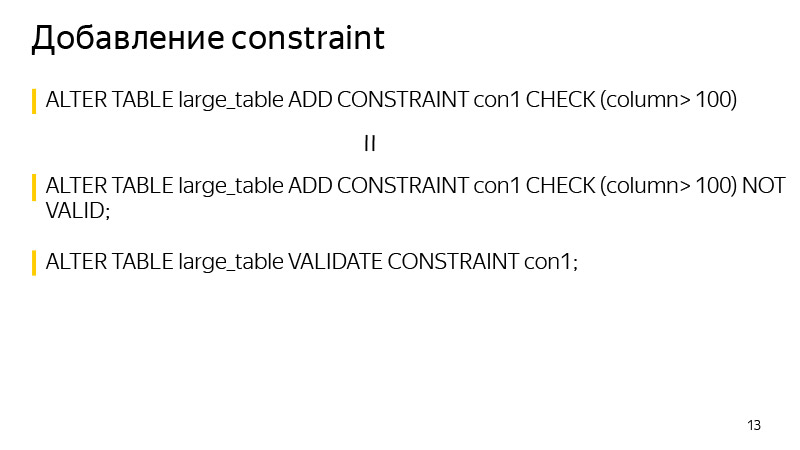

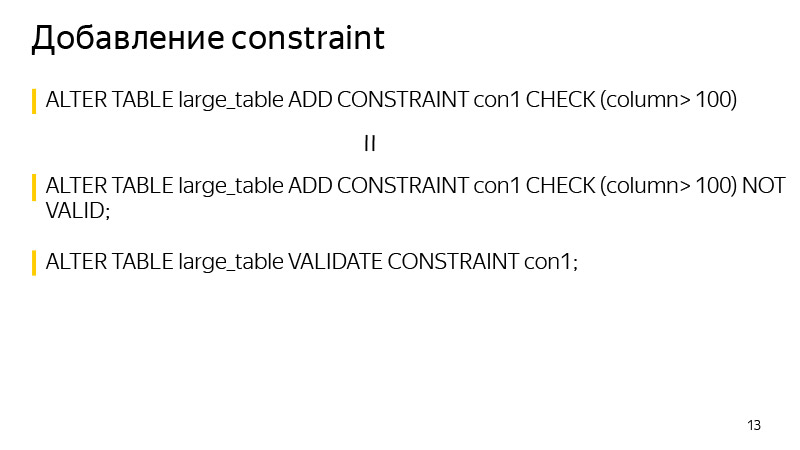

And in general when adding CONSTRAINT. You can use this technique to avoid blocking at the time of data verification. We first add CONSTRAINT with the keyword NOT VALID. This means that the execution of this CONSTRAINT for all lines in the table is not guaranteed. But at the same time for all new lines this CONSTRAINT will already be applied, and the corresponding exceptions will be thrown out, if it is not performed.

We can only check all existing values, which can be done by a separate VALIDATE CONSTRAINT command, and at the same time this command does not interfere either with reading or writing to the table. A table for this time will be available.

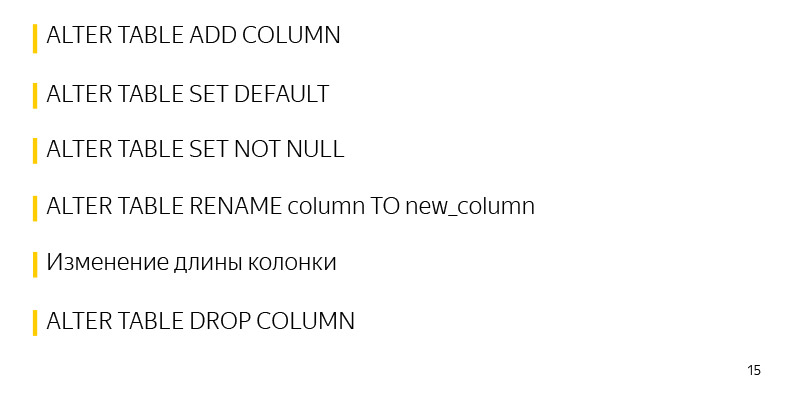

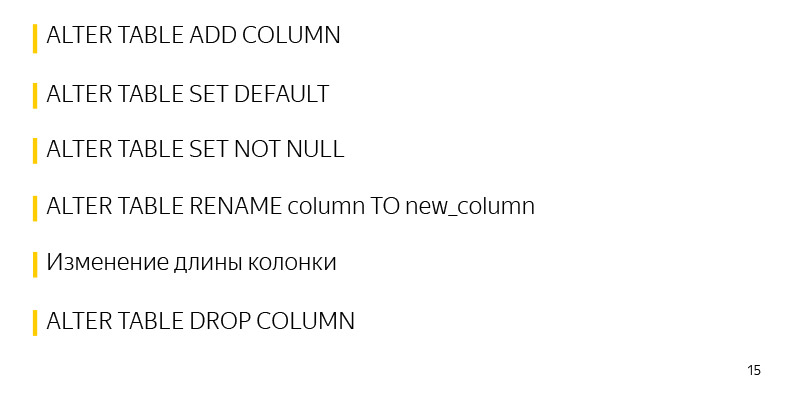

Operations that initially work quickly in PostgreSQL and do not require long locks:

One of these operations is the addition of a column with no default values and no restrictions. Because no changes are made to the table itself, only its meta data is changed. And all the NULL values that we see as a result of a SELECT are mixed in just at the output.

Also adding default values to an already existing nameplate is a quick operation, because only the meta data is changing. The table and lock is taken literally for the several milliseconds required to enter this information.

Also, the quick operation of setting SET NOT NULL, here it takes a little longer than described earlier, about a few seconds per table of 30 million records. This time can also be avoided if it matters.

Also fast operations include renaming a column, changing the length of a column, also does not lead to a rewriting of a column. Deleting a column and generally many entities in PostgreSQL is also a quick operation.

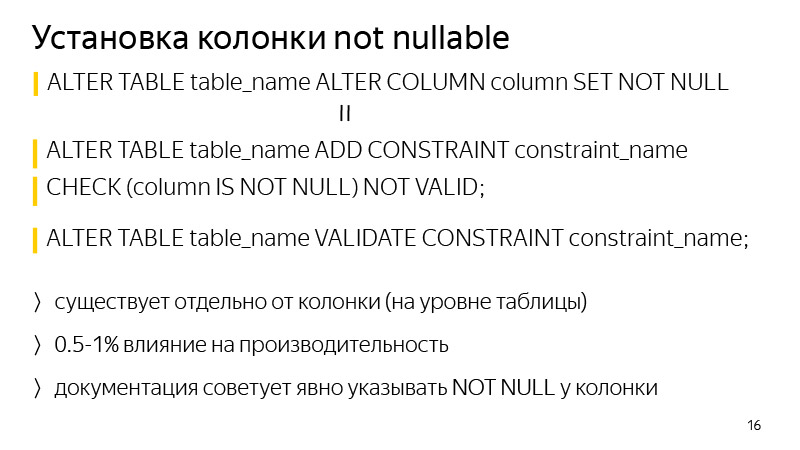

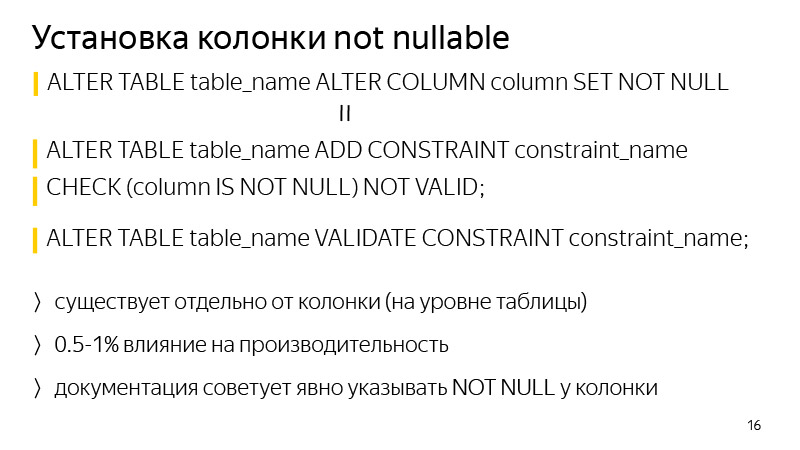

Regarding the addition of a NOT NULL column. To avoid blocking at the time of validation, you can perform the technique mentioned earlier - add a CONSTRAINT that corresponds to the CHECK (column IS NOT NULL) NOT VALID, and validate it with a separate command.

The difference in general is that this restriction will exist at the table level, and not at the column level in the table definition. Another difference is that this can affect performance, about one percent. In this case, there will be no blocking, if the service is high-loaded, even a few seconds of blocking may result in a huge transaction queue and a problem on the service.

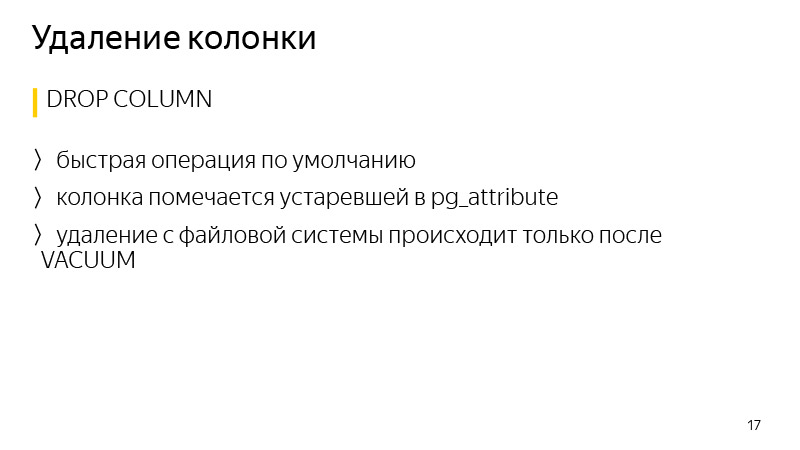

Deleting data in PostgreSQL is, in general, a quick operation, since the data is not deleted immediately, just the column is marked obsolete in the attributes of the table, and the data will actually be deleted only after the next vacuum is started.

Let's talk about the library . I'm talking about Django, migration. In general, Django is a library for Python, a web framework, which was originally created to quickly create news-type websites, since it has been greatly improved. There is an ORM system that allows you to communicate with database entries, with tables, as if they were objects or Python classes. That is, each table has its own class in Python. And when we make changes to our Python code, that is, we add new attributes like columns to a table, Django, during the procedure for creating a migration, notices these changes, and creates migration files to make mirror changes to the database itself so that they do not diverge.

The library was written to automate some of the previously discussed techniques for avoiding long locks on a table while performing such migrations. It works with Django from version 1.8 to 2.1 inclusive, and Python from 2.7 to 3.7 inclusive.

Regarding the current features of the library, this is adding a column with a default value of no locks, nullable or not, this is creating a CONCURRENTLY index, as well as the ability to restart if it falls. In the standard Django implementation, if we add a column with a default value, block the table, and if it is large, it could be 40 minutes of blocking in my experience. The table is locked, and everything is waiting until the changes are copied and made. It took 30 minutes - they caught the connection error to the database, the migration drops, the changes are not committed, and you have to start again, wait 40 minutes again, again blocking the table for this time.

The library also allows you to resume migration from the place where it was interrupted. When crashing and restarting, a dialog box is displayed, where there are various options for actions, that is, we can say continue to update the data. This is usually an update of the data, because it is the longest process. Migration will just continue from where it left off. Such an operation also takes more time than the standard with table locking, but the service remains in working condition at this time.

Pro connection in general. There is documentation; In short, you need to replace the engine in the Django database settings with the engine from the library. Also there are various mixins, if you use your engines to connect.

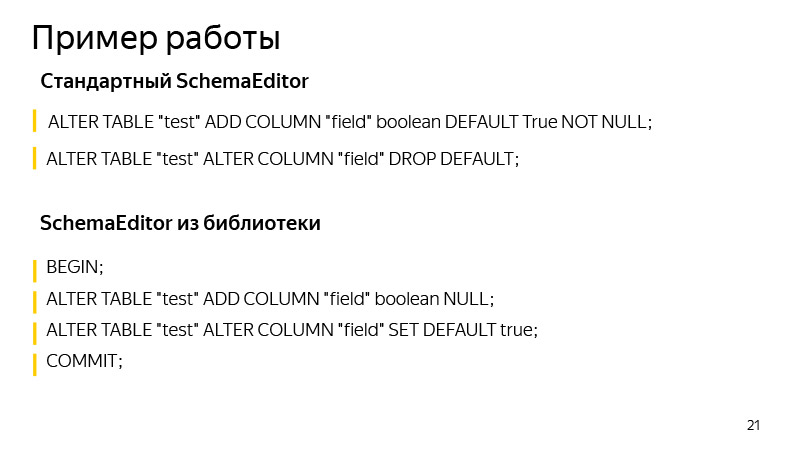

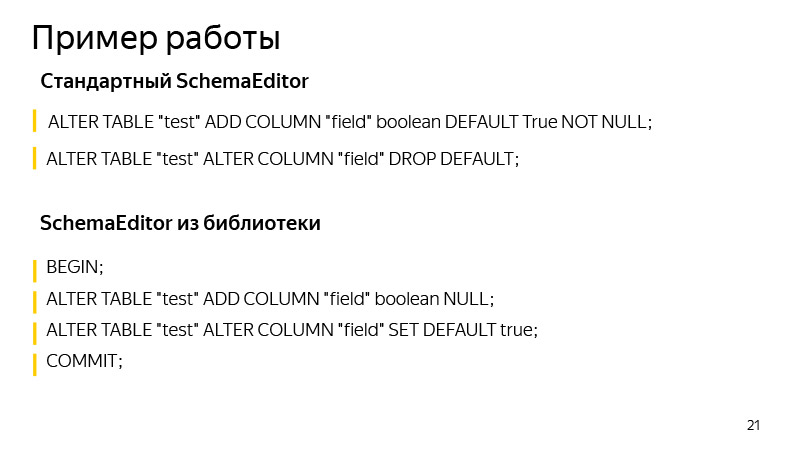

Example of work, about adding a column with a default value. Here we add columns with boolean value, True by default. What operations are performed by the standard SchemaEditor? Performed operations can be viewed if you start SQL migrate. This is quite useful, by the very type of migration it is not always clear what Django can actually change. And it is useful to run and see if the operations we are expecting will be carried out and whether there is something unnecessary and unnecessary there.

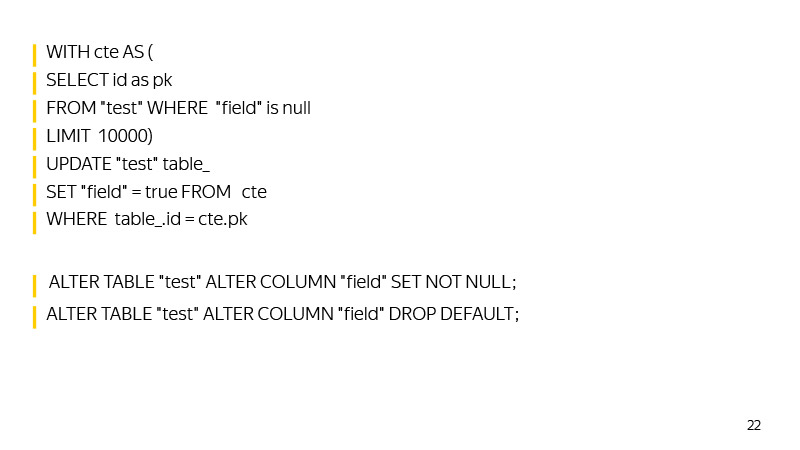

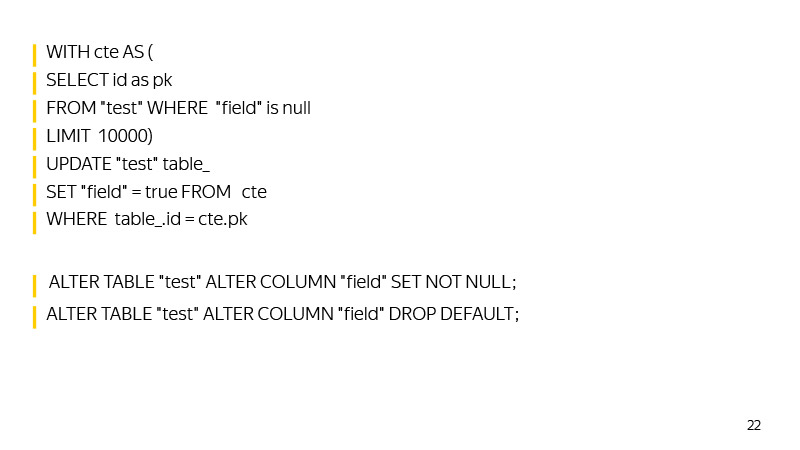

What commands will SchemaEditor perform? First, a new column will be added to one transaction, and a default value will be added. Then, until such an Update returns that it has updated zero, the data will be updated.

Then SET NOT NULL will be set at the column, and the default value will be removed by repeating the behavior of Django, which stores the default value not in the database, but at the logic level in the code.

Here, in general, there is also where to grow. For example, you can build an auxiliary index to quickly find such rows with a NULL value as you approach the update of the entire table.

You can also record the maximum id at the time of the update, when we started the migration, so that id can quickly find the values that we have not yet updated.

In general, the library is developing, we accept pool requests. Who cares - join.

It is worth paying attention that with the growth of the database, migrations have an inevitable tendency to slow down. You need to keep track of which lock'i and table takes, run SQL migrations, to see what operations apply. We, on our part, in Yandex. Connect, use this library where its capabilities allow. And where they don’t allow it, we are already on our own, with the help of fake Django migrations, we run our SQL queries.

Thus, we achieve high availability of services for reading and writing. We have large loads, thousands of RPS, and idle time in a few minutes, not to mention more time, is unacceptable. It is necessary that migrations occur unnoticed by the user. And with such loads it will not be possible to get up at four o'clock in the morning, to roll something when there is no load, and again go to bed - because the load goes round the clock.

It is worth noting that even fast operations in PostgreSQL can still cause the service to slow down and errors due to how the lock queue works in PostgreSQL.

Imagine that an operation is started, which - even for a few milliseconds - requires access exclusive. An example of such an operation is adding a column with no default value. Imagine that at the time of its launch in another transaction, there is some other long operation - say, SELECT with aggregation. In this case, our operation will stand in line with her. This happens because access exclusive conflicts with all other types of locks.

While our operation of adding a column waits for a lock, all the others will stand behind it in the queue and will not be executed until its completion. At the same time, the performed operation — SELECT with aggregation — may not conflict with the others, and if it were not for our creation of the column, they would not be queued, but would be executed in parallel.

This situation can create big problems on the service. Therefore, before launching ALTER TABLE or any other operation that requires access exclusive locking, you need to take care not to send long queries to the database. Or you can just insert a very small log timeout. Then, if it were not possible to quickly take the lock, the operation would fall. We could just restart it, and not block the table for a long time, while the operation will wait for the grant of a lock on it. That's all, thank you.

We have large loads, thousands of RPS, and idle time in a few minutes, not to mention more time, is unacceptable. It is necessary that migrations occur unnoticed by the user. And with such loads it will not be possible to get up at four in the morning, to roll something when there is no load, and again go to bed - because the load goes round the clock.

- Good evening everyone! My name is Vladimir, I have been working at Yandex for five years. For the last two years I have been developing internal services and services for organizations.

It is a little about what services for the organizations are. We have been using a large number of internal services for quite a long time: Wikis for storing and exchanging data, a messenger for quick communication with colleagues, a tracker for organizing the work process, forms for conducting interviews inside and outside, as well as many other services.

')

Some time ago we decided that our services were great and that they could be useful not only inside Yandex, but also to people outside. We began to display them on a single platform Yandex.Connect, adding existing external services, such as Mail for the domain.

I am currently developing a Form Designer and a wiki. The stack used is basically services written in Python second and third versions; Django 1.9-1.11. Like a DB for the most part PostgreSQL. It is also Celery with MongoDB and SQS as brokers. It all works in Docker.

Let us turn to the problem we are facing. Services are popular, they are used by hundreds of thousands of people every day, data is accumulated, tables become more and more, and over time, many DB schema change operations that were performed unnoticed by users yesterday begin to interfere with the normal operation of services.

We will talk today about how we cope with such situations and how we achieve high availability of services for reading and writing.

First, consider what operations with PostgreSQL require long locks on the table. By lock, I mean any type of lock that prevents normal work with the table - be it access exclusive, which prevents both writing and reading, or weaker lock levels that prevent only writing.

Then we will see how locks can be avoided during the execution of such operations. Then we will talk about which operations with PostgreSQL are initially fast and do not require long locks. And at the end, let's talk about our zero_downtime_migrations library, which we use to automate some of the previously described techniques for avoiding long locks.

Operations that require a long lock:

Create index. By default, it does not block read operations in the table, but all write operations will be blocked for the entire time the index is created, respectively, the service will be read only.

Also, adding a new column with a default value is one of such operations, since PostgreSQL will overwrite the entire table under the hood, and for this time it will be blocked for both reading and writing. In addition, all its indexes will be overwritten.

About changing the type of the column - a similar thing happens, the plate will also be overwritten again. It should be noted that this not only takes a long time on large tables, but also briefly requires up to twice the amount of free memory from the occupied table.

Also, the VACUUM FULL operation requires a similar level of blocking, like the previous operations - this is access exclusive. VACUUM FULL will also block all read and write operations to the table.

The last two operations are the addition of the uniqueness properties column and, in general, the addition of CONSTRAINT. They also require locking at the time of data verification, although they take significantly less time than those discussed earlier, since they do not overwrite tables under the hood.

Create index. It's quite simple, you can create it using the CONCURRENTLY keyword. What's the Difference? This operation will take more time, since not one, but several passes through the table will be performed, and it will also wait until all current operations that can potentially change the index are completed. And it can also end in failure — for example, if creating a unique index reveals a violation of the conditions of uniqueness. Then the index will be marked as invalid, and it will have to be deleted and re-created. The REINDEX command is not recommended, as it works in the same way as the usual CREATE INDEX, that is, blocks the table for writing.

Regarding the removal of the index - starting from version 9.3, the index can also be deleted CONCURRENTLY to avoid locks during its deletion, although in general this is a quick operation.

Let's look at adding a new column with a default value. Here is the standard operation that is performed when we want to execute such a command, including Django performing such an operation.

How can I rewrite it to avoid rewriting a table? First, we add a new column with no default value in one transaction, and add a default value with a separate query. What is the difference? When we add a default value to an existing column, it does not change the existing data in the table. Only metadata changes. That is, for all new lines this default value will already be guaranteed. It remains for us to update all existing lines that were in the table at the time of the execution of this command. What we will do in batches of several thousand copies in order not to block a large amount of data for a long time.

After all the data we have updated, it remains only to perform SET NOT NULL, if we create a NOT NULL-column. If you do not create, then do not. In this way, it is possible to avoid rewriting the table when making such changes.

Such a sequence of commands takes more time than the execution of a normal command, since it depends on the size of the table and the number of indexes in it, and the usual command simply blocks all operations and rewrites the table regardless of the load, since there is no load at this moment. But it does not matter so much, because during the operation the table is available both for reading and writing. Takes a long time, just need to follow this and that's it.

About changing the type of column. The approach is similar to adding a column with a default value. We first add a separate column of the type we need, then we add triggers to change the data in the original column in order to write at once to both columns, to a new one with the data type we need. For all new entries, they will immediately go to both of these columns. We need to update all existing ones. What we are doing in portions, just as it was on the previous slide, is similar.

After that, it remains to delete the trigger in one transaction, delete the old column and rename the old column to a new one. Thus, we achieved the same result: we changed the type of the column, while the table lock was not long.

About adding a unique column. A lock is taken at the time of creation. It can be avoided if you know that the uniqueness in PostgreSQL is guaranteed by building a unique index. We can build the required unique index ourselves using CONCURRENTLY. And after constructing this index, create a CONSTRAINT using this index. After this, the definition of the initial index from the table will disappear, and the result, which will show us the definition of the table, will be no different after performing these two operations.

And in general when adding CONSTRAINT. You can use this technique to avoid blocking at the time of data verification. We first add CONSTRAINT with the keyword NOT VALID. This means that the execution of this CONSTRAINT for all lines in the table is not guaranteed. But at the same time for all new lines this CONSTRAINT will already be applied, and the corresponding exceptions will be thrown out, if it is not performed.

We can only check all existing values, which can be done by a separate VALIDATE CONSTRAINT command, and at the same time this command does not interfere either with reading or writing to the table. A table for this time will be available.

Operations that initially work quickly in PostgreSQL and do not require long locks:

One of these operations is the addition of a column with no default values and no restrictions. Because no changes are made to the table itself, only its meta data is changed. And all the NULL values that we see as a result of a SELECT are mixed in just at the output.

Also adding default values to an already existing nameplate is a quick operation, because only the meta data is changing. The table and lock is taken literally for the several milliseconds required to enter this information.

Also, the quick operation of setting SET NOT NULL, here it takes a little longer than described earlier, about a few seconds per table of 30 million records. This time can also be avoided if it matters.

Also fast operations include renaming a column, changing the length of a column, also does not lead to a rewriting of a column. Deleting a column and generally many entities in PostgreSQL is also a quick operation.

Regarding the addition of a NOT NULL column. To avoid blocking at the time of validation, you can perform the technique mentioned earlier - add a CONSTRAINT that corresponds to the CHECK (column IS NOT NULL) NOT VALID, and validate it with a separate command.

The difference in general is that this restriction will exist at the table level, and not at the column level in the table definition. Another difference is that this can affect performance, about one percent. In this case, there will be no blocking, if the service is high-loaded, even a few seconds of blocking may result in a huge transaction queue and a problem on the service.

Deleting data in PostgreSQL is, in general, a quick operation, since the data is not deleted immediately, just the column is marked obsolete in the attributes of the table, and the data will actually be deleted only after the next vacuum is started.

Let's talk about the library . I'm talking about Django, migration. In general, Django is a library for Python, a web framework, which was originally created to quickly create news-type websites, since it has been greatly improved. There is an ORM system that allows you to communicate with database entries, with tables, as if they were objects or Python classes. That is, each table has its own class in Python. And when we make changes to our Python code, that is, we add new attributes like columns to a table, Django, during the procedure for creating a migration, notices these changes, and creates migration files to make mirror changes to the database itself so that they do not diverge.

The library was written to automate some of the previously discussed techniques for avoiding long locks on a table while performing such migrations. It works with Django from version 1.8 to 2.1 inclusive, and Python from 2.7 to 3.7 inclusive.

Regarding the current features of the library, this is adding a column with a default value of no locks, nullable or not, this is creating a CONCURRENTLY index, as well as the ability to restart if it falls. In the standard Django implementation, if we add a column with a default value, block the table, and if it is large, it could be 40 minutes of blocking in my experience. The table is locked, and everything is waiting until the changes are copied and made. It took 30 minutes - they caught the connection error to the database, the migration drops, the changes are not committed, and you have to start again, wait 40 minutes again, again blocking the table for this time.

GitHub link

The library also allows you to resume migration from the place where it was interrupted. When crashing and restarting, a dialog box is displayed, where there are various options for actions, that is, we can say continue to update the data. This is usually an update of the data, because it is the longest process. Migration will just continue from where it left off. Such an operation also takes more time than the standard with table locking, but the service remains in working condition at this time.

Pro connection in general. There is documentation; In short, you need to replace the engine in the Django database settings with the engine from the library. Also there are various mixins, if you use your engines to connect.

Example of work, about adding a column with a default value. Here we add columns with boolean value, True by default. What operations are performed by the standard SchemaEditor? Performed operations can be viewed if you start SQL migrate. This is quite useful, by the very type of migration it is not always clear what Django can actually change. And it is useful to run and see if the operations we are expecting will be carried out and whether there is something unnecessary and unnecessary there.

What commands will SchemaEditor perform? First, a new column will be added to one transaction, and a default value will be added. Then, until such an Update returns that it has updated zero, the data will be updated.

Then SET NOT NULL will be set at the column, and the default value will be removed by repeating the behavior of Django, which stores the default value not in the database, but at the logic level in the code.

Here, in general, there is also where to grow. For example, you can build an auxiliary index to quickly find such rows with a NULL value as you approach the update of the entire table.

You can also record the maximum id at the time of the update, when we started the migration, so that id can quickly find the values that we have not yet updated.

In general, the library is developing, we accept pool requests. Who cares - join.

It is worth paying attention that with the growth of the database, migrations have an inevitable tendency to slow down. You need to keep track of which lock'i and table takes, run SQL migrations, to see what operations apply. We, on our part, in Yandex. Connect, use this library where its capabilities allow. And where they don’t allow it, we are already on our own, with the help of fake Django migrations, we run our SQL queries.

Thus, we achieve high availability of services for reading and writing. We have large loads, thousands of RPS, and idle time in a few minutes, not to mention more time, is unacceptable. It is necessary that migrations occur unnoticed by the user. And with such loads it will not be possible to get up at four o'clock in the morning, to roll something when there is no load, and again go to bed - because the load goes round the clock.

It is worth noting that even fast operations in PostgreSQL can still cause the service to slow down and errors due to how the lock queue works in PostgreSQL.

Imagine that an operation is started, which - even for a few milliseconds - requires access exclusive. An example of such an operation is adding a column with no default value. Imagine that at the time of its launch in another transaction, there is some other long operation - say, SELECT with aggregation. In this case, our operation will stand in line with her. This happens because access exclusive conflicts with all other types of locks.

While our operation of adding a column waits for a lock, all the others will stand behind it in the queue and will not be executed until its completion. At the same time, the performed operation — SELECT with aggregation — may not conflict with the others, and if it were not for our creation of the column, they would not be queued, but would be executed in parallel.

This situation can create big problems on the service. Therefore, before launching ALTER TABLE or any other operation that requires access exclusive locking, you need to take care not to send long queries to the database. Or you can just insert a very small log timeout. Then, if it were not possible to quickly take the lock, the operation would fall. We could just restart it, and not block the table for a long time, while the operation will wait for the grant of a lock on it. That's all, thank you.

Source: https://habr.com/ru/post/435880/

All Articles