Massachusetts General Hospital and DeepMind independently open the "black box" of AI in medicine

The use of artificial intelligence to make diagnoses is not far off, and may be even closer than it seems: two research teams on opposite sides of the Atlantic have each managed to solve the “black box” problem of AI in medicine.

The “black box” problem is that when an AI system issues its results (in medicine, a diagnosis and recommendations for further therapy), it does not provide the rationale behind them, which the US Food and Drug Administration (FDA), among others, requires.

Last December, Massachusetts General Hospital reported that it had taught an AI system to “explain” its diagnosis of intracranial hemorrhage. Four months earlier, the British company DeepMind, acquired by Google in 2014, announced a similar breakthrough in diagnosing eye diseases.

The main task of both teams was to teach the system to evaluate scan images and make decisions the way a specialist physician does.

Atlas of features

Radiologists at Massachusetts General Hospital, together with graduate students from the Harvard School of Engineering and Applied Sciences, developed an AI model that can classify intracranial hemorrhage, according to a press release on the hospital's website. To train the system, the team used 904 head CT (computed tomography) scans, each consisting of about 40 individual images (slices). A team of five neuroradiologists labeled each image as showing one of five hemorrhage subtypes, defined by location, or as showing no hemorrhage. To improve the accuracy of this deep learning system, the team built in steps that mimic how a radiologist analyzes images: adjusting parameters such as contrast and brightness to reveal subtle differences, and scrolling through adjacent CT slices to determine whether something seen on one image reflects a real problem or is meaningless noise.
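Adjusting contrast and brightness on CT is usually done by windowing: Hounsfield-unit (HU) values are clipped to a window defined by a level (centre) and a width, then rescaled. The sketch below shows one way to feed a network several windowed views of a slice together with its neighbors. It assumes NumPy, three commonly used head CT windows (the exact settings of the MGH model are not given here), and that adjacent slices are stacked as extra input channels; all function names are hypothetical.

```python
import numpy as np

# Assumed, commonly used head CT windows in Hounsfield units: (level, width).
WINDOWS = {
    "brain": (40, 80),
    "subdural": (80, 200),
    "bone": (600, 2800),
}


def apply_window(hu: np.ndarray, level: float, width: float) -> np.ndarray:
    """Clip HU values to [level - width/2, level + width/2] and rescale to [0, 1]."""
    low, high = level - width / 2, level + width / 2
    return (np.clip(hu, low, high) - low) / (high - low)


def multi_window(hu_slice: np.ndarray) -> np.ndarray:
    """Stack every windowed view of one slice as channels: shape (n_windows, H, W)."""
    return np.stack([apply_window(hu_slice, level, width)
                     for level, width in WINDOWS.values()])


def with_neighbors(volume: np.ndarray, index: int) -> np.ndarray:
    """Return the slice at `index` and its two neighbors; edge slices are repeated."""
    n = volume.shape[0]
    idx = [max(index - 1, 0), index, min(index + 1, n - 1)]
    return volume[idx]


def build_input(volume: np.ndarray, index: int) -> np.ndarray:
    """Hypothetical model input: 3 slices x 3 windows = 9 channels, shape (9, H, W)."""
    neighbors = with_neighbors(volume, index)
    return np.concatenate([multi_window(s) for s in neighbors], axis=0)
```

Showing the model the same slice under several windows, plus its neighbors, is a rough analogue of a radiologist toggling display settings and scrolling up and down to check whether a finding persists across slices.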

Once the model was built, the researchers tested it on two separate sets of CT scans: 100 scans with intracranial hemorrhage and 100 without, collected before the system was developed, and 79 scans with hemorrhage and 117 without, collected after the model was created. On the first set, the model detected and classified intracranial hemorrhage as accurately as radiologists. On the second set, it performed even better than human readers who were not experts in this field.

To solve the black box problem, the team had the system identify and save the images from the training dataset that most clearly represent the characteristic features of each of the five hemorrhage subtypes. Using this atlas of distinctive features, the system can display a group of images similar to those it relied on when analyzing a CT scan, showing the grounds on which its decision was made.
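One simple way to realize such an atlas is to keep, for each subtype, the training images the model scores most confidently, and then explain a new prediction by retrieving the atlas images whose feature vectors are closest to the query. This is a hypothetical nearest-neighbor sketch using cosine similarity, assuming NumPy and that the trained network supplies a feature vector (embedding) and a confidence score for each image; it is not the MGH team's exact procedure, and all names are illustrative.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class AtlasEntry:
    image_id: str        # hypothetical identifier of a training image
    label: str           # hemorrhage subtype assigned by the neuroradiologists
    feature: np.ndarray  # embedding produced by the trained network (assumed)


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two feature vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def build_atlas(ids, labels, features, scores, per_class=2):
    """For each label, keep the `per_class` training images with the highest model score."""
    atlas = []
    for label in sorted(set(labels)):
        idx = [i for i, lab in enumerate(labels) if lab == label]
        idx.sort(key=lambda i: scores[i], reverse=True)
        atlas += [AtlasEntry(ids[i], labels[i], features[i]) for i in idx[:per_class]]
    return atlas


def explain(query, predicted_label, atlas, k=3):
    """Return IDs of the k atlas images of the predicted subtype most similar to the query."""
    candidates = [e for e in atlas if e.label == predicted_label]
    candidates.sort(key=lambda e: cosine(query, e.feature), reverse=True)
    return [e.image_id for e in candidates[:k]]
```

Restricting retrieval to the predicted subtype keeps the explanation focused on "why this label", which matches the illustration's pairing of a diagnosis with similar atlas images.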

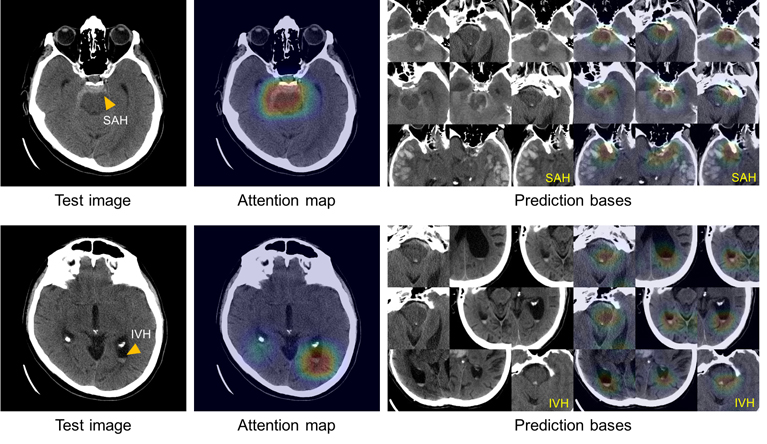

The illustration shows how the system explains its diagnosis of subarachnoid (upper left) and intraventricular (lower left) hemorrhage by displaying images with similar features (right) from the atlas built from the training images.

“Rapid recognition of intracranial hemorrhage, followed by prompt and appropriate treatment of patients with symptoms of acute stroke, can minimize serious health consequences and prevent death,” says Michael Lev, a radiologist involved in the study. “Many facilities have no specially trained neuroradiologists, especially at night or on weekends, which forces non-specialists to decide whether a patient's symptoms are caused by hemorrhage. A reliable ‘virtual second opinion’ trained by neuroradiologists could improve the performance of non-specialists and help ensure that patients receive the right treatment.”

Tissue segmentation map

In August 2018, DeepMind published a study in Nature Medicine stating that it had solved the black box problem by developing an AI model that performs at the level of medical professionals yet does not exclude people from the treatment process; instead, as in the case above, it helps doctors work more effectively.

According to the published study, the DeepMind team worked on eye diseases together with Moorfields Eye Hospital and developed a model that makes diagnoses from 3D optical coherence tomography (OCT) images. The team opened the “black box” by building two separate neural networks that work together. The first, a segmentation network based on a three-dimensional convolutional U-Net architecture, transforms raw OCT scans into a segmentation map of the eye tissue. It was trained on 877 clinical OCT scans; of the 128 slices in each scan, only three representative slices were segmented by hand. The segmentation network finds various signs of disease (hemorrhages, lesions, and so on) and marks them on the map. According to Mustafa Suleyman, DeepMind's head of applied AI, writing on the company's blog, this gives ophthalmologists insight into how the system “thinks”.

The second, a classification network, analyzes this map and gives the treating clinicians diagnoses and treatment recommendations. The developers consider it fundamentally important that the network expresses its recommendations as percentages, which lets physicians judge the system's “confidence” in its analysis. “This functionality is critical, since ophthalmologists play a key role in making treatment decisions for patients,” says Mustafa Suleyman. According to him, the key feature that makes the system useful in practice is that it lets doctors carefully scrutinize the AI's recommendations. The system is designed to help prevent complete loss of vision through faster diagnosis of diseases such as diabetic retinopathy, age-related macular degeneration, and several dozen other conditions.
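Percentages like these are typically obtained by passing the classifier's raw output scores (logits) through a softmax, which turns them into probabilities that sum to 1. A minimal sketch, assuming a single-choice output over the four referral categories described in the Nature Medicine paper (urgent, semi-urgent, routine, observation only); the logit values and function names are hypothetical.

```python
import math

# Referral categories assumed for this sketch.
REFERRALS = ["urgent", "semi-urgent", "routine", "observation only"]


def softmax(logits):
    """Convert raw scores into probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def as_percentages(logits, labels=REFERRALS):
    """Map each label to its probability, expressed as a percentage (one decimal)."""
    probs = softmax(logits)
    return {label: round(100 * p, 1) for label, p in zip(labels, probs)}
```

For example, `as_percentages([2.0, 1.0, 0.1, -1.0])` ranks "urgent" highest while still showing how much probability the model assigns to the alternatives, which is the kind of "confidence" signal a physician can weigh.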

Illustration: obtaining predictions with an ensemble of segmentation and classification networks. Five instances of the segmentation network and five instances of the classification network are combined to produce 25 predictions for a single scan. Each segmentation instance first produces a hypothetical segmentation map of the OCT scan under study; for each of these five hypotheses, the classification instances output a probability for every label. The label for atrophy location is shown in detail.
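The 5 × 5 ensemble in the caption can be sketched as two nested loops: each segmentation instance produces one map, and every classification instance scores every map, giving 5 × 5 = 25 probability vectors. A minimal sketch, assuming NumPy, networks represented as plain callables, and that the 25 predictions are combined by simple averaging; the function and type names are hypothetical.

```python
from typing import Callable, Sequence

import numpy as np

SegNet = Callable[[np.ndarray], np.ndarray]  # OCT volume -> segmentation map (assumed)
ClfNet = Callable[[np.ndarray], np.ndarray]  # segmentation map -> per-label probabilities


def ensemble_predict(scan: np.ndarray,
                     seg_nets: Sequence[SegNet],
                     clf_nets: Sequence[ClfNet]):
    """Run every classifier on every segmentation hypothesis and average the results."""
    predictions = []
    for seg in seg_nets:
        seg_map = seg(scan)  # one segmentation hypothesis per segmentation instance
        for clf in clf_nets:
            predictions.append(clf(seg_map))
    stacked = np.stack(predictions)  # shape: (len(seg_nets) * len(clf_nets), n_labels)
    return stacked.mean(axis=0), stacked
```

Besides the averaged probabilities, the second returned array holds all 25 individual predictions; their spread (for example, the standard deviation per label) gives a rough sense of how much the ensemble members disagree about a scan.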

Both research teams hope that their systems will not replace doctors but will help them make decisions more effectively, and therefore help more patients in less time. The next step is to integrate these developments directly into hospital scanners.


Source: https://habr.com/ru/post/435816/
