Testing Node.js projects. Part 1. Anatomy of tests and types of tests

The author of this material, the first part of which we are publishing today, says that as an independent Node.js consultant he reviews more than 10 projects every year. His clients, quite reasonably, ask him to pay special attention to testing. A few months ago he began taking notes on valuable testing techniques and on the mistakes he came across. The result is a collection of three dozen testing recommendations.

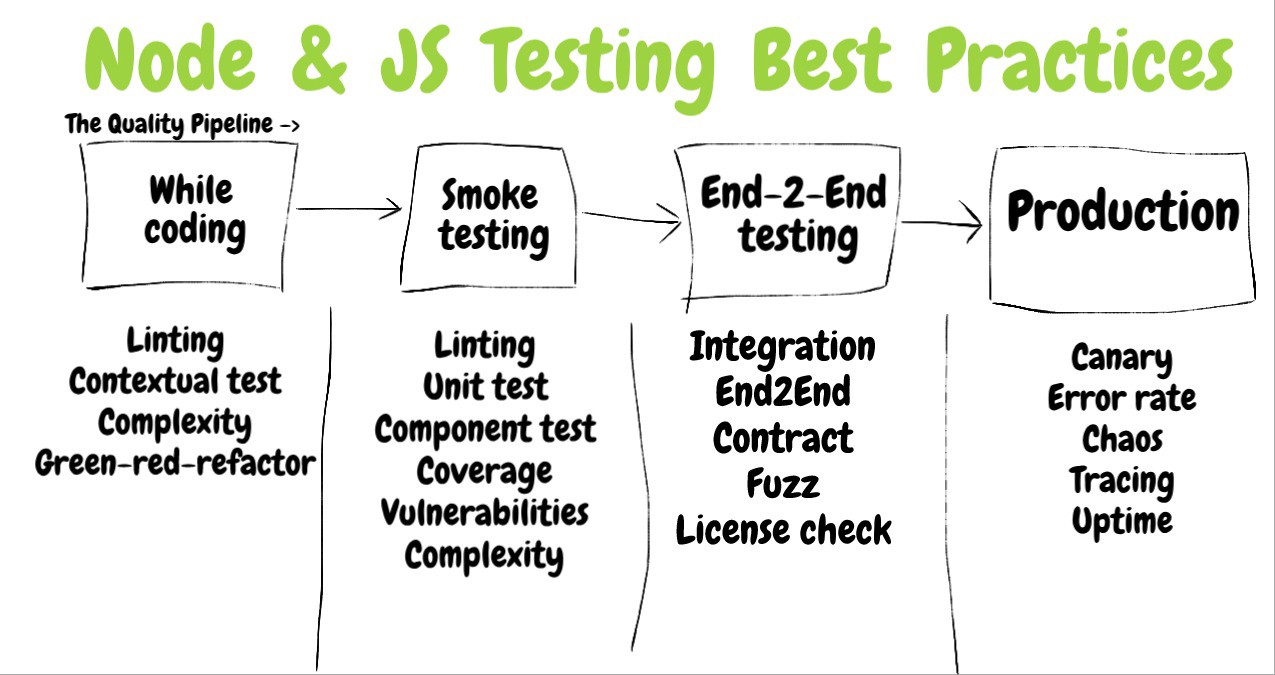

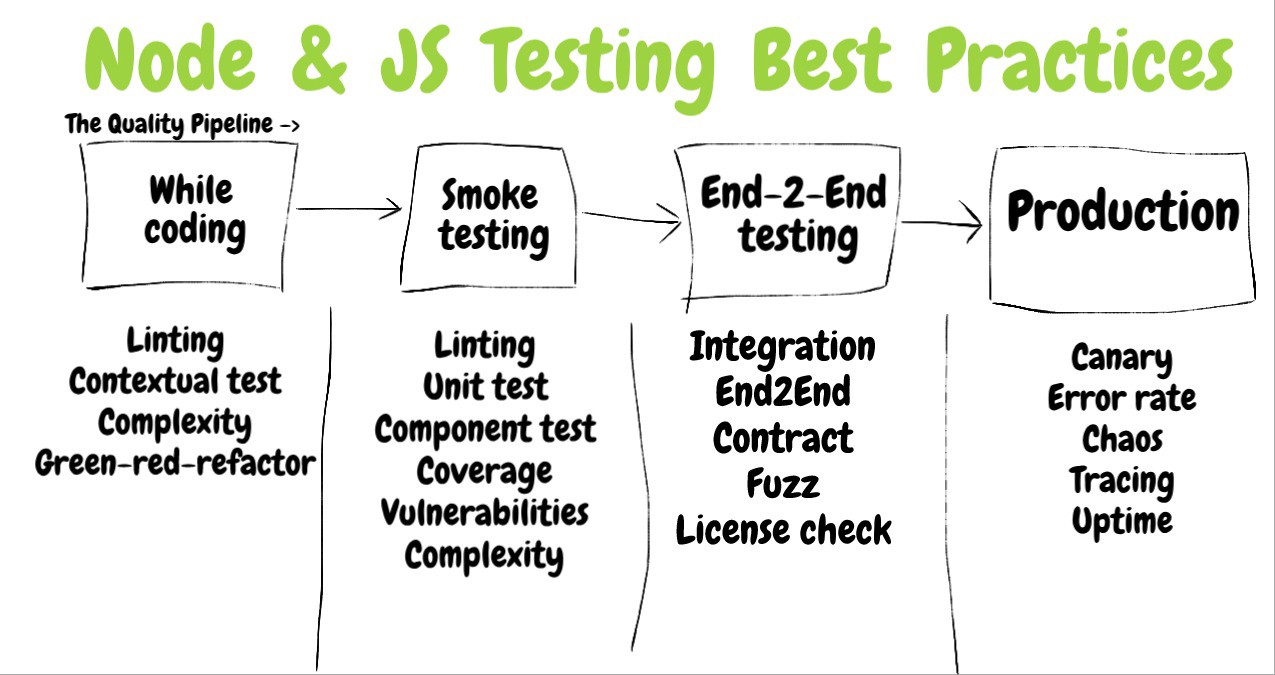

In particular, this material covers how to choose the types of tests that suit a given situation, how to design them properly, how to evaluate their effectiveness, and where exactly to place them in CI/CD pipelines. Some examples use Jest, others use Mocha. The material focuses on testing methodology rather than on tools.


→ Testing Node.js projects. Part 2. Evaluation of the effectiveness of tests, continuous integration and analysis of code quality


. ?

, .

, , , , . . , ( ) . — , ( , ). — , , , , . , , . — , , , , ( , , , ).

, . . . . , , , .

. , .

, , .

, ,

Chai,

, , , .

,

. , (smoke test), -, , . - . , , ,

, , , , , , . .

, Node.js-. , , Node.js .

TDD — , , . , . , , Red-Green-Refactor . , - , , , , , . , . ( — , , ).

— , . .

, , 10 , . , . , . , (, ), , , , ? - ?

, . , 2019 , , TDD, — , . , , , . , IoT-, , , - Kafka RabbitMQ, . - , , , . , , , , ? (, , Alexa) , , .

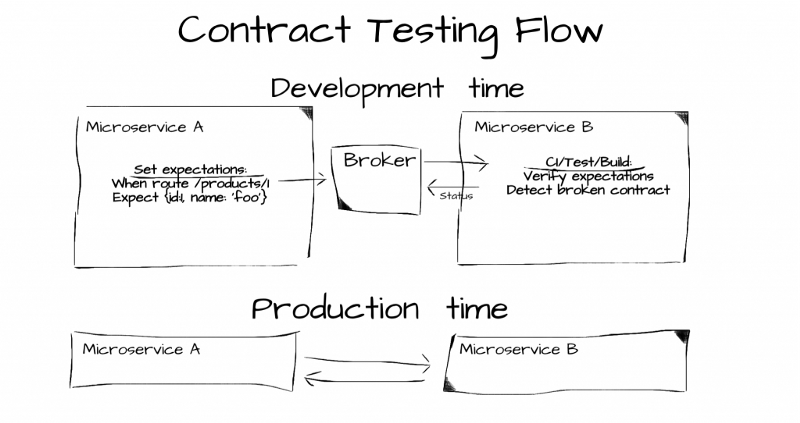

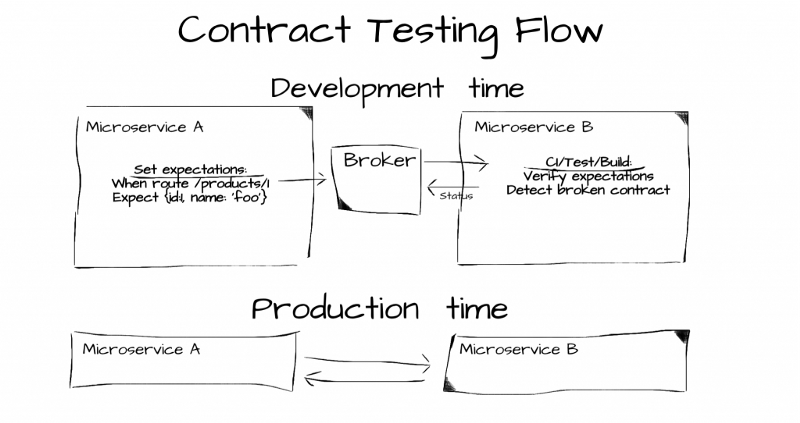

, ( ). , , , , , , . , , - API — Consumer-Driven Contracts . , , , , . , , , , , . , , .

, TDD . , TDD , . , , , .

— ( ), .

. . , , Node.js, .

. — . , , . , , - , , - ? , . , : TDD, — .

«». API, - , (, , , , , ). , , , (, ). , , , , , , .

, , , , 20.

supertest , API, Express, .

API, Express

, , , , . , , - , , , - . «-22» : . , , . , Consumer-Driven Contracts PACT .

. . PACT , ( «»). , , PACT, , , . , , , , .

.

Consumer-Driven Contracts

, , B , . B .

(middleware) - , , - , Express-. . , , . , , JS-

Express .

Express-.

, , , , , , . , , - . , , , ( , , ), , ( — ) . , : SonarQube ( 2600 GitHub) Code Climate ( 1500 ).

, , . . , .

Code Climate.

Code Climate

, , . , , , - , . , - , , , , ? , , ? , API ?

Netflix - . , , , , . , - — Chaos Monkey . , , , . Kubernetes — kube-monkey . , Node.js? , , , V8 1.7 . . node-chaos , -.

, , , .

npm- chaos-monkey , Node.js.

chaos-monkey

, Node.js-. , . .

Dear readers! - ?


▍0. The golden rule: tests should be very simple and straightforward.

Do you know someone (a friend, a family member, a movie hero) who is always in a good mood and always ready to lend a hand without asking for anything in return? That is how good tests should be. They should be simple, useful and pleasant to work with. You get there by carefully choosing testing methods, tools and targets, so that the results justify the time and effort spent writing and running the tests. Test only what needs testing, keep tests simple and flexible, and sometimes even drop some tests, deliberately trading a bit of the project's reliability for simplicity and development speed.

Tests should not be treated like ordinary application code. A typical development team already does everything it can to keep the project working, that is, to make sure that, say, a commercial product behaves the way its users expect. Such a team may not fully realize that it will also have to maintain another complex “project”: the test suite. If the tests keep growing, demand more and more attention and become a constant source of worry, the team either abandons them or, trying to keep them in decent shape, spends so much time and effort on them that work on the main project slows down.

Therefore, test code should be as simple as possible, with a minimum of dependencies and levels of abstraction. A test should be understandable at a glance. Most of the recommendations below stem from this principle.

Section 1. Anatomy of tests

▍1. Design tests so that the report shows what is being tested, under what scenario, and what result is expected.

Recommendations

The test report should show whether the current version of the application meets its requirements. It should do so in a form that is clear to people who are not necessarily familiar with the application code: a tester, a DevOps engineer deploying the project, or the developer who wrote the code and comes back to it some time later. This is achievable if you write tests with the product requirements in mind. With this approach, each test can be thought of as consisting of three parts:

- What exactly is being tested? For example, the ProductsService.addNewProduct method.
- What is the scenario, that is, under what circumstances is the test run? For example, checking how the system responds when no price is passed to the method.
- What result is expected? For example, in that situation the system does not approve the new product.

Consequences of ignoring this recommendation

Suppose a deployment failed, and all the test report tells you is that a test named "Add product", which checks adding a product to the system, did not pass. Does that tell you what exactly went wrong?

The right approach

The test description is made up of three pieces of information.

```javascript
// 1. What is being tested
describe('Products Service', function() {
  // 2. Scenario
  describe('Add new product', function() {
    // 3. Expected result
    it('When no price is specified, then the product status is pending approval', () => {
      const newProduct = new ProductService().add(...);
      expect(newProduct.status).to.equal('pendingApproval');
    });
  });
});
```

The right approach

The test report reads like a product requirements document.

Here is how it looks at different levels.

Product requirements document, test naming, test results

- The product requirements document can be a dedicated document or something as informal as an email.

- When naming tests (describing what is tested, the scenario and the expected result), use the same language in which the product requirements are written. This makes it easy to match the test code to the requirements.

- Test results should be clear even to people who are not familiar with the application code or have long forgotten it: testers, DevOps engineers, and developers returning to the code a few months after writing it.
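To see why the three-part name pays off, here is a dependency-free sketch of how nested describe()/it() titles combine into the single line a report shows. The describe, it and recordedTitles definitions below are simplified stand-ins written for illustration, not how Mocha or Jest actually implement them (real runners also choose their own separator); they only record titles and run nothing.

```javascript
// Simplified stand-ins for describe()/it() that only record test titles
const titleStack = [];
const recordedTitles = [];

function describe(title, body) {
  titleStack.push(title);
  body();
  titleStack.pop();
}

function it(title /*, testFn is ignored in this sketch */) {
  // A report line is the chain of enclosing describe() titles plus the test title
  recordedTitles.push([...titleStack, title].join(' > '));
}

// 1. What is being tested
describe('Products Service', () => {
  // 2. Scenario
  describe('Add new product', () => {
    // 3. Expected result
    it('When no price is specified, then the product status is pending approval');
  });
});

console.log(recordedTitles.join('\n'));
// Products Service > Add new product > When no price is specified, then the product status is pending approval
```

The resulting line can be read without opening the code: it names the unit, the circumstances and the expectation.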

▍2. Describe expectations in the language of the product: use BDD-style assertions

Recommendations

Writing tests in a declarative style lets anyone who works with them grasp their essence instantly. Tests written imperatively fill up with conditional logic that makes them much harder to understand. Following this principle, describe expectations in language close to natural speech. In the declarative BDD style, use expect or should constructs rather than custom code of your own design. If Chai or Jest lacks an assertion you need, and you find yourself needing it often, consider adding a custom matcher to Jest or writing your own Chai plugin.

Consequences of ignoring this recommendation

Otherwise, team members will end up writing fewer tests and marking especially annoying ones with the .skip() method.

Wrong approach

To understand what this test checks, the reader has to wade through a fairly long piece of imperative code.

```javascript
it("When asking for an admin, ensure only ordered admins in results", () => {
  // Assume the system has the users "admin1", "admin2" and "user1"
  const allAdmins = getUsers({ adminOnly: true });

  let admin1Found = false;
  let admin2Found = false;

  allAdmins.forEach(aSingleUser => {
    if (aSingleUser === "user1") {
      assert.notEqual(aSingleUser, "user1", "A user was found and not admin");
    }
    if (aSingleUser === "admin1") {
      admin1Found = true;
    }
    if (aSingleUser === "admin2") {
      admin2Found = true;
    }
  });

  if (!admin1Found || !admin2Found) {
    throw new Error("Not all admins were returned");
  }
});
```

The right approach

You can understand this test literally at a glance.

```javascript
it("When asking for an admin, ensure only ordered admins in results", () => {
  // Assume the system has the users "admin1", "admin2" and "user1"
  const allAdmins = getUsers({ adminOnly: true });

  expect(allAdmins).to.include.ordered.members(["admin1", "admin2"])
    .but.not.include.ordered.members(["user1"]);
});
```

▍3. Lint test code with dedicated plugins

Recommendations

There is a set of ESLint plugins designed specifically to analyze test code and find problems in it. For example, eslint-plugin-mocha warns when a test is written at the global level (not nested inside describe()) or when tests are skipped, which can create the false impression that all tests pass. eslint-plugin-jest works in a similar way; for example, it warns about tests that contain no assertions, that is, tests that check nothing.

Consequences of ignoring this recommendation

A developer will be pleased to see 90% code coverage and 100% of tests passing, but only until it turns out that many tests actually check nothing and some test cases are simply skipped. One can only hope that nobody deploys projects "tested" this way to production.
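As a minimal sketch of turning these checks on, here is a Mocha-oriented configuration. It assumes the legacy .eslintrc.js format and eslint-plugin-mocha installed as a dev dependency; the rule names match the errors flagged in the example that follows. For Jest, eslint-plugin-jest provides comparable rules, for example jest/expect-expect for tests without assertions and jest/no-disabled-tests for skipped tests.

```javascript
// .eslintrc.js: lint rules aimed specifically at test code (legacy config format)
const eslintConfig = {
  plugins: ['mocha'],
  env: { mocha: true },
  rules: {
    // Tests must live inside describe() blocks
    'mocha/no-global-tests': 'error',
    // it.skip()/describe.skip() create the illusion that everything passes
    'mocha/no-skipped-tests': 'error',
    // Duplicate titles make the report ambiguous
    'mocha/no-identical-title': 'error',
    // Setup code belongs in hooks, not directly in the describe() body
    'mocha/no-setup-in-describe': 'error',
    // Test titles must match a pattern (configurable)
    'mocha/valid-test-description': 'warn',
  },
};

module.exports = eslintConfig;
```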

Wrong approach

This test scenario is full of mistakes that, fortunately, a linter can catch.

```javascript
describe("Too short description", () => {
  const userToken = userService.getDefaultToken(); // *error: no-setup-in-describe, use hooks (sparingly) instead
  it("Some description", () => {}); // *error: valid-test-description. Must include the word "Should" + at least 5 words
});

it.skip("Test name", () => { // *error: no-skipped-tests, error: no-global-tests. Put tests only under describe or suite
  expect("somevalue"); // error: no-assert
});

it("Test name", () => { // *error: no-identical-title. Assign unique titles to tests
});
```

▍4. Stick to black-box testing: test only public methods

Recommendations

Testing a module's internals significantly increases the maintenance burden on developers and brings almost no benefit. If an API returns correct results, is it worth spending hours testing its internals and then keeping those fragile tests up to date? Testing public methods also verifies their internal implementation, albeit implicitly: such a test fails whenever a problem in the system shows up as incorrect output. This approach is also called behavioral testing. In contrast, when testing an API's internals (the “white box” technique), the developer focuses on small implementation details rather than on the final result. Tests that check such details may start failing after, for example, a minor refactoring, even though the system still produces correct results. As a result, this approach significantly increases the effort needed to maintain the test code.

Consequences of ignoring this recommendation

Tests that try to cover a system's internals behave like the shepherd boy from the fable who kept shouting “Help! Wolf!” when there was no wolf nearby. People came running to help, only to find that they had been fooled. And when the wolf really did appear, nobody came. Such tests produce false positives, for example when some internal variables are renamed. It is no surprise that whoever runs these tests soon starts ignoring their “cries”, and eventually a real, serious bug may go unnoticed.

Wrong approach

This test checks the internals of the class without any real reason to do so.

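```javascript
class ProductService {
  // This method is used only internally
  // Renaming it will make the test fail
  calculateVAT(priceWithoutVAT) {
    return { finalPrice: priceWithoutVAT * 1.2 };
    // Changing the result format or key name will make the test fail
  }

  // Public method
  getPrice(productId) {
    const desiredProduct = DB.getProduct(productId);
    return this.calculateVAT(desiredProduct.price).finalPrice;
  }
}

it("White-box test: When the internal methods get 0 vat, it returns 0 response", async () => {
  // There is no requirement to let users calculate VAT, only to show them the final price.
  // Nevertheless, this test insists on checking the class internals
  expect(new ProductService().calculateVAT(0).finalPrice).to.equal(0);
});
```

▍5. Choose the right test doubles: avoid mocks in favor of stubs and spies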

Recommendations

Test doubles are a necessary evil: they are coupled to the application's internals, yet some of them are simply indispensable. Here is useful material on this topic. However, not all kinds of test doubles are equal. Some of them, stubs and spies, are aimed at testing the product's requirements, but as an unavoidable side effect they touch the product's internals slightly. Mocks, on the other hand, are aimed at testing the project's internals. Using them therefore creates the large, unnecessary maintenance burden discussed above, where we recommended black-box testing.

Before using test doubles, ask yourself a simple question: “Am I using them to test functionality that is, or could be, described in the project's requirements?” If the answer is no, you may be about to test the product with the “white box” approach, whose drawbacks we have already discussed.

For example, to find out whether your application behaves correctly when the payment service is unavailable, you can stub that service so that the application receives a response indicating there is no answer. This checks how the system reacts to that situation: the test verifies the application's behavior, response or result under given conditions. Here you can also use a spy to check that a certain email was sent once the payment service failure was detected. Again, this tests the system's behavior in a specific situation, one that is probably captured in its requirements, for example as “Send an email to the administrator if the payment fails”. On the other hand, if you use a mock to represent the payment service and verify that it is called with exactly the arguments it expects, you are testing internals that are not directly related to the application's functionality and may well change often.

Consequences of ignoring this recommendation

With every refactoring, you have to hunt down all the mocks and refactor their code too. As a result, maintaining the tests becomes a heavy burden, turning them into the developer's enemies rather than friends.

Wrong approach

This example uses a mock whose main purpose is to test the application's internals.

```javascript
it("When a valid product is about to be deleted, ensure data access DAL was called once, with the right product and right config", async () => {
  // Assume we have already added a product
  const dataAccessMock = sinon.mock(DAL);
  // Bad: testing the internals is the main goal here, not just a side effect
  dataAccessMock.expects("deleteProduct").once().withArgs(DBConfig, theProductWeJustAdded, true, false);
  new ProductService().deletePrice(theProductWeJustAdded);
  dataAccessMock.verify();
});
```

The right approach

Spies are aimed at checking that a system meets its requirements, but as a side effect they inevitably touch its internals.

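```javascript
it("When a valid product is about to be deleted, ensure an email is sent", async () => {
  // Assume we have already added a product
  const spy = sinon.spy(Emailer.prototype, "sendEmail");
  new ProductService().deletePrice(theProductWeJustAdded);
  // Are we touching internals? Yes, but only as a side effect of testing a requirement (sending an email)
  expect(spy.calledOnce).to.be.true;
  spy.restore();
});
```

▍6. Use realistic input data, not just something like "foo"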

Recommendations

Production bugs often show up under a very specific and sometimes surprising set of circumstances. So the closer your test inputs are to reality, the more likely you are to catch bugs early. To generate realistic-looking data, use dedicated libraries such as Faker. Such libraries generate random but realistic phone numbers, user names, credit card numbers, company names and even "lorem ipsum"-style text. You can also consider using data from the production environment in tests. To take such tests to an even higher level, see the next recommendation, on property-based testing.
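This sketch shows the effect without installing Faker. A tiny hand-written generator builds multi-word names such as "Sleek Cotton Computer", in the spirit of faker.commerce.productName() used in the example below. The word lists and the realisticProductName() helper are hypothetical stand-ins. Fed to the same addProduct() logic as in the "Wrong approach" example, a realistic name immediately exposes the no-spaces bug that "Foo" never triggers.

```javascript
// Same logic as addProduct() in the "Wrong approach" example: rejects names with whitespace
const addProduct = (name, price) => {
  const productNameRegexNoSpace = /^\S*$/;
  if (!productNameRegexNoSpace.test(name)) return false;
  return true;
};

// Hypothetical stand-in for a realistic-data library: "Adjective Material Noun" names
const adjectives = ['Sleek', 'Rustic', 'Ergonomic'];
const materials = ['Cotton', 'Steel', 'Wooden'];
const nouns = ['Computer', 'Chair', 'Keyboard'];

function realisticProductName(random = Math.random) {
  const pick = (list) => list[Math.floor(random() * list.length)];
  return `${pick(adjectives)} ${pick(materials)} ${pick(nouns)}`;
}

const name = realisticProductName();
const price = Math.floor(Math.random() * 100000);

console.log(addProduct('Foo', 5)); // true: the dull input hides the bug
console.log(name, addProduct(name, price)); // false: realistic names contain spaces
```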

Consequences of ignoring this recommendation

During development, all tests may pass simply because they use unrealistic data such as "foo" strings. Yet in production the system fails as soon as a hacker feeds it something like @3e2ddsf . ##' 1 fdsfds . fds432 AAAA.

Wrong approach

The system successfully passes these tests only because they use unrealistic data.

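```javascript
const addProduct = (name, price) => {
  const productNameRegexNoSpace = /^\S*$/; // No whitespace allowed
  if (!productNameRegexNoSpace.test(name)) return false; // Never reached because of the dull input
  // Some logic here
  return true;
};

it("Wrong: When adding new product with valid properties, get successful confirmation", async () => {
  // The string "Foo", used in all tests, never triggers a false result
  const addProductResult = addProduct("Foo", 5);
  expect(addProductResult).to.be.true;
  // False positive: the operation succeeded only because we never tried a long product name containing spaces
});
```

The right approach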

This test uses randomized, realistic-looking data.

```javascript
it("Better: When adding new valid product, get successful confirmation", async () => {
  const addProductResult = addProduct(faker.commerce.productName(), faker.random.number());
  // Generated random input: {'Sleek Cotton Computer', 85481}
  expect(addProductResult).to.be.true;
  // The test fails: the random input triggered a code path we never planned for.
  // We found a bug early!
});
```

▍7. Test many combinations of input data with property-based testing

Recommendations

Typically, tests use small sets of input data. Even when that data resembles real data (as discussed in the previous section), such tests cover only a tiny fraction of the possible input combinations of the unit under test, for example method('', true, 1) and method("string", false, 0). The problem is that in production an API that takes five parameters may be called with thousands of different combinations of arguments, and one of them may crash it (this is a good moment to recall fuzzing). What if you could write a single test that automatically calls a method with 1,000 combinations of inputs and finds out which ones it handles incorrectly? This is exactly where property-based testing helps: the test calls the unit with many different combinations of inputs, which increases the chance of finding bugs. Suppose we have a method addNewProduct(id, name, isDiscount), and the testing library calls it with many combinations of numeric, string and boolean arguments, for example (1, "iPhone", false) and (2, "Galaxy", true). Property-based testing can be done with a regular test runner (Mocha, Jest and so on) together with specialized libraries such as js-verify or testcheck (the latter has very good documentation).

Consequences of ignoring this recommendation

Without realizing it, the developer picks test inputs that exercise only the code paths that already work. This reduces the effectiveness of tests as a way of finding bugs.
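To make the idea concrete without a library, here is a minimal sketch of what a property-based testing tool does: generate many random inputs from a seeded generator, check a property on each, and report the first counterexample. It is not the API of mocha-testcheck, js-verify, or testcheck; forAll, makeRandom, genProductName, and genPrice are hypothetical names, and real libraries also shrink a failing input down to a minimal one. The unit under test copies the flawed addProduct from the realistic-data example (it rejects names that contain spaces).

```javascript
// Minimal property-based testing sketch: hypothetical helpers, not a real library API.

// Seeded pseudo-random generator (mulberry32), so that runs are reproducible.
function makeRandom(seed) {
  let state = seed >>> 0;
  return function next() {
    state = (state + 0x6d2b79f5) >>> 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296; // float in [0, 1)
  };
}

// Generator: 1 to 3 lowercase words joined by single spaces, e.g. "sleek cotton".
function genProductName(rand) {
  const wordCount = 1 + Math.floor(rand() * 3);
  const words = [];
  for (let w = 0; w < wordCount; w++) {
    const length = 1 + Math.floor(rand() * 8);
    let word = "";
    for (let i = 0; i < length; i++) {
      word += String.fromCharCode(97 + Math.floor(rand() * 26)); // 'a'..'z'
    }
    words.push(word);
  }
  return words.join(" ");
}

// Generator: integer price from 0 to 99999.
function genPrice(rand) {
  return Math.floor(rand() * 100000);
}

// Calls the property with `runs` generated argument lists and stops at the
// first one for which the property returns false.
function forAll(generators, property, { runs = 100, seed = 42 } = {}) {
  const rand = makeRandom(seed);
  for (let run = 0; run < runs; run++) {
    const args = generators.map((generate) => generate(rand));
    if (!property(...args)) {
      return { ok: false, run, counterexample: args };
    }
  }
  return { ok: true, runs };
}

// Unit under test: same flaw as the addProduct example (no whitespace allowed).
function addProduct(name, price) {
  const productNameRegexNoSpace = /^\S*$/;
  if (!productNameRegexNoSpace.test(name)) return false;
  return true;
}

// Property: every valid product name is accepted.
const result = forAll([genProductName, genPrice], (name, price) => addProduct(name, price) === true);
console.log(result); // expected: ok: false, with a counterexample name containing a space
```

With a fixed seed, every run explores the same inputs, so a reported counterexample can be replayed while debugging; changing the seed explores different inputs.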

The right approach

Testing a variety of input options using the mocha-testcheck library.

```javascript
require('mocha-testcheck').install(); // installs the global check and gen helpers
const { expect } = require('chai');
const faker = require('faker');

describe('Product service', () => {
  describe('Adding new', () => {
    // this runs 100 times with different random properties
    check.it('Add new product with random yet valid properties, always successful',
      gen.int, gen.string, (id, name) => {
        expect(addNewProduct(id, name).status).to.equal('approved');
      });
  });
});
```

▍8. Keep the test code self-contained, minimizing external helpers and abstractions

Recommendations

By now it is probably obvious that I advocate extremely simple tests. Otherwise, the team effectively has to maintain one more project, and understanding its code takes valuable time that the team rarely has. This has been put very well: “Good production code is well thought out; good test code is obvious... When you write a test, think about the person who will see its failure message. They don't want to read the code of your entire test suite, or the inheritance tree of your testing utilities, to figure out what went wrong.”

To let the reader understand a test without leaving its code, minimize the use of utilities, hooks, and other external mechanisms in it. If avoiding them means resorting to copy-and-paste too often, you can settle on one external helper, as long as it does not hurt the test's readability. But as the number of such helpers grows, the test code loses clarity.

Implications of derogation

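Suddenly you find yourself with 4 helpers per test suite, 2 of which inherit from a base utility, plus a lot of setup and teardown hooks? Congratulations! You have just won another challenging project to maintain.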

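An intricate, indirect test structure. Can you understand this test without looking at its external dependencies?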

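```javascript
test("When getting orders report, get the existing orders", () => {
  const queryObject = QueryHelpers.getQueryObject(config.DBInstanceURL);
  const reportConfiguration = ReportHelpers.getReportConfig(); // what report config did we get? we have to leave the test and read
  userHelpers.prepareQueryPermissions(reportConfiguration); // what does this one do? we have to leave the test and read
  const result = queryObject.query(reportConfiguration);
  assertThatReportIsValid(); // I wonder what this one does; again, we have to leave the test and read
  expect(result).to.be.an('array').that.deep.includes({ id: 1, productId: 2, orderStatus: "approved" });
  // how do we know this order exists? we have to check externally
});
```

The right approach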

A test that you can understand without jumping between files.

```javascript
it("When getting orders report, get the existing orders", () => {
  // This one is better: the reader can see what happens without leaving the test
  const orderWeJustAdded = ordersTestHelpers.addRandomNewOrder();
  const queryObject = newQueryObject(config.DBInstanceURL, queryOptions.deep, { useCache: false });
  const result = queryObject.query(config.adminUserToken, reports.orders, { pageSize: 200 });
  expect(result).to.be.an('array').that.deep.includes(orderWeJustAdded);
});
```

▍9. Avoid global seed data: add data in each test

Recommendations

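Each test should add and act on its own set of database records. This prevents coupling between tests and makes the test flow easy to reason about. In reality, testers often violate this rule by seeding the database with data before running the tests (this is also known as a “test fixture”) in order to improve performance. Performance is indeed a valid concern, but it can be mitigated (see the section on component testing). Test complexity, however, is a much more painful problem that should govern other considerations most of the time. In practice, make each test case explicitly add the database records it needs and act only on those records. If performance becomes critical, a balanced compromise may be to seed data only for the suites of tests that do not modify data (for example, queries).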

Implications of derogation

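A few tests fail, the deployment is aborted, and the team now has to spend precious time figuring out why. Is there a bug? Let's investigate... Oh no, it turns out that two tests were modifying the same seed data.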

Tests that are not independent. They rely on a global hook that loads shared data into the database.

```javascript
before(async () => {
  // Adding sites and admins data to our DB. Where is the data? Outside the test,
  // in some external JSON file or migration framework
  await DB.AddSeedDataFromJson('seed.json');
});

it("When updating site name, get successful confirmation", async () => {
  // I know that the site name "Portal" exists: I saw it in the seed files
  const siteToUpdate = await SiteService.getSiteByName("Portal");
  const updateNameResult = await SiteService.changeName(siteToUpdate, "newName");
  expect(updateNameResult).to.be.true;
});

it("When querying by site name, get the right site", async () => {
  // I know that the site name "Portal" exists: I saw it in the seed files
  const siteToCheck = await SiteService.getSiteByName("Portal");
  expect(siteToCheck.name).to.be.equal("Portal"); // Failure! The previous test changed the name :[
});
```

The right approach

Each test stays within itself and works only with its own set of data.

```javascript
it("When updating site name, get successful confirmation", async () => {
  // The test adds a fresh record and acts only on that record
  const siteUnderTest = await SiteService.addSite({ name: "siteForUpdateTest" });
  const updateNameResult = await SiteService.changeName(siteUnderTest, "newName");
  expect(updateNameResult).to.be.true;
});
```

▍10. Don't catch errors, expect them: use expect

Recommendations

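When you need to check that some input makes a function throw an error, it may seem right to wrap the call in a try-catch-finally construct and assert that the catch block was entered. The result is an awkward and verbose test that hides its simple intent and the expected result. A more elegant alternative is the dedicated one-line Chai assertion expect(method).to.throw (in Jest: expect(method).toThrow()). For functions that return promises, the equivalents are the rejectedWith assertion of the chai-as-promised plugin and Jest's expect(promise).rejects.toThrow(). It is also essential to make sure the exception contains a property that tells the error type; otherwise, given a generic error, the application can do little more than show the user a disappointing message.

Implications of derogation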

It will be hard to infer from test reports (for example, CI reports) what went wrong.

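A long test that uses try-catch to check whether an error was thrown.

```javascript
it("When no product name, it throws error 400", async () => {
  let errorWeExpectFor = null;
  try {
    await addNewProduct({}); // no product name
  } catch (error) {
    expect(error.code).to.equal('InvalidInput');
    errorWeExpectFor = error;
  }
  expect(errorWeExpectFor).not.to.be.null;
  // If this assertion fails, the test report will only show that some value
  // is null; there won't be a word about the missing exception
});
```

The right approach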

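A human-readable expectation that is easy to understand, perhaps even for QA or a technical PM. The assertion relies on the chai-as-promised plugin, because addNewProduct returns a promise.

```javascript
const chai = require('chai');
chai.use(require('chai-as-promised')); // adds promise assertions such as rejectedWith
const { expect } = chai;

it("When no product name, it throws error 400", async () => {
  await expect(addNewProduct({})).to.be.rejectedWith(AppError).and.eventually.have.property('code', 'InvalidInput');
});
```

▍11. Tag your tests to run them selectively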

Recommendations

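Different tests should run in different scenarios. Quick smoke tests and tests without I/O should run whenever a developer saves or commits a file, while full end-to-end tests usually run when a new pull request is submitted, and so on. This can be achieved by tagging tests with keywords such as #cold, #api, or #sanity, so that the test runner can pick out the desired subset. For example, with Mocha you can run only the sanity group by passing the -g (--grep) flag: mocha -g "#sanity".

Implications of derogation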

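Running all the tests, including those that perform dozens of database queries, every time a developer makes a small change can be extremely slow, and it keeps developers from running tests at all.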
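Mocha matches the --grep pattern against each test's full title, that is, the describe() titles and the it() title joined with spaces, so tags embedded in titles work as selectors. The sketch below mimics that filtering step in plain JavaScript for illustration only; selectTests and the titles are hypothetical, and Mocha's own implementation is not reproduced here.

```javascript
// Mimics tag-based selection: keep the titles that match the pattern,
// or the ones that don't when invert is true (like Mocha's --invert).
function selectTests(fullTitles, pattern, { invert = false } = {}) {
  const regex = new RegExp(pattern);
  return fullTitles.filter((title) => regex.test(title) !== invert);
}

// Hypothetical full titles: describe() titles + it() title, tags included.
const fullTitles = [
  "Order service Add new order #cold-test #sanity Scenario - no currency was supplied. Expectation - Use the default currency #sanity",
  "Order service Add new order #cold-test Scenario - negative quantity. Expectation - reject the order",
  "Order service Persisting orders #api Scenario - valid order. Expectation - the order is saved to the DB",
];

// While typing: only the fast tests without I/O.
const coldTests = selectTests(fullTitles, "#cold-test");
// Quick confidence check before a commit.
const sanityTests = selectTests(fullTitles, "#sanity");
// The slower tests that touch I/O, e.g. for the CI run of a pull request.
const ioTests = selectTests(fullTitles, "#cold-test", { invert: true });

console.log(coldTests.length, sanityTests.length, ioTests.length); // 2 1 1
```

On the command line, the corresponding selections would be mocha --grep "#cold-test" and, for everything else, mocha --grep "#cold-test" --invert.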

The right approach

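Tagging tests as #cold-test lets the test runner execute only the fast tests. Cold tests are quick tests that do no I/O and can be run frequently, even while the developer is typing.

```javascript
// This test is fast (no DB), and we tag it accordingly,
// so that the user or the CI can run it frequently
describe('Order service', function() {
  describe('Add new order #cold-test #sanity', function() {
    it('Scenario - no currency was supplied. Expectation - Use the default currency #sanity', function() {
      // code logic here
    });
  });
});
```

▍12. General good testing practices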

Recommendations

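This material focuses on testing advice that is related to Node.js, or at least can be illustrated with Node.js examples. This section, however, groups a few well-known tips that are not specific to Node.js.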

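Learn and practice TDD principles. They are extremely valuable for many developers, but don't be intimidated if they don't fit your style; you're not the only one. Consider writing tests before the code in the Red-Green-Refactor style. Make sure each test checks exactly one thing. When you find a bug, write a test that detects it before fixing it, so that it will be caught in the future. Let each test fail at least once before it turns green. Start a module by writing quick and simple code that satisfies the test, then refactor gradually and bring it to production quality. Avoid any dependence on the environment (paths, the operating system, and so on).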

Implications of derogation

You will miss out on pearls of wisdom collected over decades.

Section 2. Types of tests

▍13. Enrich your testing portfolio, looking beyond unit tests and the pyramid

Recommendations

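The test pyramid, although it is more than 10 years old, is a great and still relevant model. It suggests three types of tests and influences the testing strategy of most developers. At the same time, more than a handful of shiny new testing techniques have emerged and are hiding in the shadow of the pyramid. Given all the dramatic changes of the last 10 years (microservices, cloud, serverless), is it even possible that one rather old model suits all types of applications? Shouldn't the testing world consider welcoming new techniques?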

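Don't get me wrong: in 2019, the test pyramid, TDD, and unit tests are still a powerful technique and probably the best match for many applications. But like any other model, despite its usefulness, it must sometimes be wrong. For example, consider an IoT application that ingests many events into a message bus like Kafka or RabbitMQ; the events then flow into a data warehouse and are eventually queried by some analytics UI. Should we really spend a large part of our testing budget on unit tests for an application that is integration-centric and has almost no logic? As the diversity of application types grows (bots, crypto, Alexa skills), so do the chances of finding scenarios where the test pyramid is not the best match.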

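It's time to enrich your testing portfolio and get familiar with more types of tests (the following sections suggest a few ideas). Keep models like the test pyramid in mind, but also match test types to the real problems you face (for example: “Hey, our API is broken, let's write Consumer-Driven Contracts tests!”). Diversify your tests the way an investor builds a portfolio based on risk analysis: assess where problems might arise and match prevention measures to those potential risks.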

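A word of caution: in the software world, the debate around TDD often takes the form of a false dichotomy. Some preach using it everywhere, others consider it the devil. Everyone who speaks in absolutes is wrong.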

Implications of derogation

You will miss tools with an excellent return on investment, such as fuzzing, linting, and mutation testing.

The right approach

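Cindy Sridharan suggests a rich testing portfolio in her excellent post “Testing Microservices, the sane way”. The author has also covered Node.js test types that go beyond unit tests in a separate talk.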

▍14. Consider component testing: it may be your best choice

Recommendations

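Each unit test covers a tiny portion of the application, and covering all of it this way is expensive. End-to-end tests, on the other hand, easily cover a lot of ground, but they are flaky and slow. So why not take a balanced approach and write tests that are bigger than unit tests but smaller than end-to-end tests? Component testing is the unsung hero of the testing world: it offers the best of both worlds, namely reasonable performance and the ability to apply TDD patterns, along with realistic and broad coverage.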

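Component tests focus on the microservice “unit”. They work against the API and don't mock anything that belongs to the microservice itself (for example, they use a real database, or at least its in-memory version), but they stub everything external, such as calls to other microservices. This way, we test what we deploy, approach the application from the outside in, and gain high confidence in a reasonable amount of time.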
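A dependency-free sketch of a component test, assuming a hypothetical product microservice: the service's own routing and storage are real and are exercised through real HTTP calls to an in-process server, while its only external dependency (a pricing service) is stubbed. The article's example uses supertest with Express; this sketch uses Node's built-in http module instead so that it runs as is, and createApp, pricingClient, and the /api/products route are made up for illustration.

```javascript
const http = require("http");

// Hypothetical microservice. Its routing and in-memory storage are real;
// the external pricing service is injected so that a test can stub it.
function createApp({ pricingClient }) {
  const products = new Map([["1", { id: "1", name: "iPhone", basePrice: 100 }]]);
  return http.createServer(async (req, res) => {
    const match = /^\/api\/products\/(\w+)$/.exec(req.url);
    const product = req.method === "GET" && match ? products.get(match[1]) : undefined;
    res.setHeader("Content-Type", "application/json");
    if (!product) {
      res.statusCode = 404;
      res.end(JSON.stringify({ error: "NotFound" }));
      return;
    }
    const discount = await pricingClient.getDiscount(product.id); // external call
    res.statusCode = 200;
    res.end(JSON.stringify({ ...product, price: product.basePrice - discount }));
  });
}

// Sends a real HTTP GET to the in-process server and parses the JSON reply.
function getJson(port, path) {
  return new Promise((resolve, reject) => {
    http
      .get({ host: "127.0.0.1", port, path }, (res) => {
        let body = "";
        res.setEncoding("utf8");
        res.on("data", (chunk) => (body += chunk));
        res.on("end", () => resolve({ status: res.statusCode, body: JSON.parse(body) }));
      })
      .on("error", reject);
  });
}

// Component test: start the service on a free port, stub only the external
// dependency, call the API from the outside, then shut the server down.
async function runComponentTest() {
  const pricingStub = { getDiscount: async () => 10 };
  const server = createApp({ pricingClient: pricingStub });
  await new Promise((resolve) => server.listen(0, "127.0.0.1", resolve));
  const { port } = server.address();
  try {
    const found = await getJson(port, "/api/products/1");
    const missing = await getJson(port, "/api/products/999");
    return { found, missing };
  } finally {
    await new Promise((resolve) => server.close(resolve));
  }
}

runComponentTest()
  .then(({ found, missing }) => console.log(found.status, found.body.price, missing.status)) // 200 90 404
  .catch((error) => {
    console.error(error);
    process.exitCode = 1;
  });
```

Listening on port 0 lets the operating system pick a free port, so several such test files can run in parallel without clashing.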

Implications of derogation

You may spend long days writing unit tests only to find that you have covered just 20% of the system.

The right approach

Supertest lets you call an Express API in-process, which is fast and covers many layers.

Testing an Express API with supertest

▍15. Make sure new releases don't break the API by using Consumer-Driven Contracts

Recommendations

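Suppose your microservice has multiple clients, and for compatibility reasons you run several versions of the service to keep everyone happy. Then you change some field and, boom, an important client that relies on this field is angry. This is the Catch-22 of the integration world: it is very hard for the server side to account for all the expectations of its many clients, while the clients cannot perform any testing because the server controls the release dates. Consumer-Driven Contracts and the PACT framework were created to formalize this process.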

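They take a very disruptive approach: the server does not define its own test plan; instead, the client defines the tests of the server! PACT can record the client's expectations and put them in a shared location, the “broker”. The server can then pull these expectations and, on every build, use the PACT library to detect broken contracts, that is, client expectations that are not met. This way, all server-client API mismatches are caught early, during the build or CI, which can save you a great deal of frustration.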
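To illustrate the idea only (this is not PACT's API or contract file format), here is a toy sketch: a consumer publishes the request it makes and the response fields it relies on, and the provider's build checks its actual handler against that contract. All names are hypothetical; real tools such as PACT also take care of the broker, versioning, and a mock provider for the consumer's own tests.

```javascript
// Toy consumer-driven contract check: NOT the PACT API; all names are hypothetical.

// Contract published by one consumer: the request it sends and the response
// fields (with their types) that it actually relies on.
const mobileAppContract = {
  consumer: "mobile-app",
  request: { method: "GET", path: "/api/products/1" },
  response: { status: 200, body: { id: "string", name: "string", price: "number" } },
};

// Provider-side handler; in a real service this would be the actual API.
function providerHandler(request) {
  if (request.method === "GET" && request.path === "/api/products/1") {
    return { status: 200, body: { id: "1", name: "iPhone", price: 90, currency: "USD" } };
  }
  return { status: 404, body: {} };
}

// Run in the provider's build: replays the consumer's request and checks
// every field the consumer relies on. Extra fields do not break the contract.
function verifyContract(contract, handler) {
  const actual = handler(contract.request);
  const problems = [];
  if (actual.status !== contract.response.status) {
    problems.push(`status: expected ${contract.response.status}, got ${actual.status}`);
  }
  for (const [field, expectedType] of Object.entries(contract.response.body)) {
    const actualType = typeof actual.body[field];
    if (actualType !== expectedType) {
      problems.push(`${field}: expected ${expectedType}, got ${actualType}`);
    }
  }
  return { consumer: contract.consumer, ok: problems.length === 0, problems };
}

console.log(verifyContract(mobileAppContract, providerHandler).ok); // true

// A provider change that renames "price" to "cost" breaks this consumer,
// and the provider's build finds out before the release.
const renamedFieldHandler = () => ({ status: 200, body: { id: "1", name: "iPhone", cost: 90 } });
console.log(verifyContract(mobileAppContract, renamedFieldHandler).problems);
// [ 'price: expected number, got undefined' ]
```

Checking only the fields a consumer declares is what lets the provider keep adding data freely while still catching the changes that would actually break someone.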

Implications of derogation

The alternatives are exhausting manual testing or fear of deploying.

The right approach

Consumer-Driven Contracts

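The expectations that clients have of service B are recorded as contracts, and service B verifies these contracts during its build.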

▍16. Test middleware in isolation

Recommendations

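Many developers avoid testing middleware because it represents a small portion of the system and seems to require a live Express server. Both reasons are wrong. Middleware functions are small, but they affect all or most requests, and they can easily be tested as pure functions that receive {req,res} JS objects. To test a middleware function, just invoke it and spy (using Sinon, for example) on its interaction with the {req,res} objects to make sure the function performed the right action. The node-mocks-http library goes even further: it creates the {req,res} objects and tracks their behavior. For example, it lets you check whether the HTTP status set on the res object matches the expected one.

Implications of derogation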

A bug in Express middleware is a bug in all or most requests.

The right approach

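Testing Express middleware in isolation, without network calls and without starting the whole Express machinery.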

```javascript
// The middleware we want to test
const unitUnderTest = require('./middleware');
const httpMocks = require('node-mocks-http');

// Jest syntax; in Mocha, describe() and it() are used the same way
test('A request without authentication header, should return http status 403', () => {
  const request = httpMocks.createRequest({
    method: 'GET',
    url: '/user/42',
    headers: {
      authentication: ''
    }
  });
  const response = httpMocks.createResponse();
  unitUnderTest(request, response);
  expect(response.statusCode).toBe(403);
});
```

▍17. Measure and refactor code using static analysis tools

Recommendations

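Static analysis tools give you objective ways to improve code quality and keep your code maintainable. You can add static analysis to your CI build so that the build is aborted when code smells are found. The main advantages of such tools over plain linting are the ability to inspect quality in the context of multiple files (for example, to detect duplication), to perform advanced analysis (for example, of code complexity), and to track the history and progress of code issues. Two examples of such tools are SonarQube (more than 2,600 stars on GitHub) and Code Climate (more than 1,500 stars).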

Implications of derogation

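With poor code quality, bugs and performance problems will always be an issue that no shiny new library or state-of-the-art feature can fix.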

The right approach

Code Climate, a commercial tool that can identify complex methods.

Code Climate

▍18. Check how ready your system is for Node.js-related chaos

Recommendations

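Oddly enough, most software testing is about logic and data only, yet some of the worst things that happen (and that are really hard to mitigate) are infrastructure issues. For example, have you ever tested what happens when your process's memory is overloaded? Or when the server or the process dies? Does your monitoring system notice when the API becomes significantly slower?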

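Chaos engineering was born at Netflix to test for and mitigate failures like these. It aims to provide awareness, frameworks, and tools for testing how resilient an application is to chaotic issues. For example, one of its famous tools, Chaos Monkey, randomly kills servers to make sure the service can still serve users and does not depend on a single server. There is also a Kubernetes version, kube-monkey, which kills pods. All these tools work at the hosting or platform level, but what if you want to generate pure Node.js chaos? For example, you may want to check how your Node.js process copes with uncaught errors, unhandled promise rejections, or V8 memory overload at the maximum allowed 1.7 GB, or whether the user experience stays acceptable when the event loop is often blocked. To address this, the author wrote node-chaos, which provides all sorts of Node.js-related chaotic acts.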

Implications of derogation

There's no escaping it: Murphy's law will hit your production without mercy.

The right approach

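The chaos-monkey npm module can generate various Node.js-related failures, so you can test how resilient your application is to chaos.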

chaos-monkey

Results

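In this part, we covered the anatomy of tests and the types of tests that are useful in Node.js projects. The next part deals with evaluating the effectiveness of tests, continuous integration, and code quality analysis.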

Dear readers! How do you test your projects?

Source: https://habr.com/ru/post/435462/
