Covering A/B tests with UI tests: how not to get lost in your own code

Hi, Habr!

My name is Vitaliy Kotov, I work for Badoo and most of the time I work on testing automation. The solution of one such issue I want to share in this article.

It’s about how we organized the process of working with A / B tests for UI tests, of which we have a lot. I will tell you about the problems we faced and which flow came in the end. Welcome under the cut!

')

In this article, the word "test" is very common. That's because we are talking simultaneously about UI tests and A / B tests. I tried to always separate these two concepts and formulate thoughts so that the text was easy to read. If somewhere, I still missed the first part of the word and wrote just a “test”, I meant a UI test.

Enjoy reading!

So let's first define the concept of A / B-test. Here is a quote from Wikipedia:

"A / B testing (born A / B testing, Split testing) is a marketing research method, the essence of which is that the control group of elements is compared with a set of test groups in which one or several indicators have been changed, in order to find out which of the changes improve the target indicator ” Ref .

In terms of our project, the presence of an A / B test implies that some functionality is different for different users. I would highlight several options:

In order for all this logic to work, we have a tool in our company called the UserSplit Tool , and our developer Rinat Akhmadeev described it in detail in this article .

We now talk about what it means to have A / B tests for the testing department and for automators in particular.

When we talk about coverage with UI tests, we are not talking about the number of lines of code we tested. This is understandable, because even just opening the page can use many components, while we have not yet tested anything.

For many years of work in the field of test automation, I have seen many ways to measure the coverage of UI tests. I will not list them all, just to say that we prefer to evaluate this indicator by the number of features that are covered by UI tests. This is not an ideal way (I personally don’t know the ideal one), but in our case it works.

And here we return directly to the topic of the article. How to measure and maintain a good level of coverage of UI-tests, when each feature can behave differently depending on the user who uses it?

Even before the UserSplit Tool appeared in the company and there were really a lot of A / B tests, we followed the following strategy of covering UI tests with features: covering only those features that have been on production for some time and are well-established.

And all because earlier, when the feature only got into production, it was still “tyunil” for a while - its behavior and appearance could change. And she could not establish herself at all and rather quickly disappear from the eyes of users. Writing UI tests for unstable features is expensive and has not been practiced.

With the introduction of the A / B tests into the development process, at first nothing has changed. Each A / B test had a so-called “control group,” that is, a group that saw some default behavior of the feature. It was on him and wrote UI-tests. All that had to be done when writing UI tests for such a feature was to remember to include the user with default behavior. We call this process Force A / B-group (from the English. Force).

I will dwell on the description of the force as it will play a role in my story.

We have already told about QaAPI more than once in our articles and in reports. Nevertheless, oddly enough, so far we have not written a full article about this tool. Probably one day this space will be filled. In the meantime, you can watch a video of the speech of my colleague Dmitry Marushchenko: " 4. The concept of QaAPI: a look at testing from the other side of the barricades ."

In a nutshell, QaAPI allows you to make requests from a test to the application server through a special backdoor in order to manipulate any data. With this tool, for example, we prepare users for specific test cases, send them messages, upload photos, and so on.

With the help of the same QaAPI, we are able to force the A / B test group; you only need to specify the name of the test and the name of the desired group. The challenge in the test looks like this:

In the last parameter, we specify the user_id or device_id for which this force should start working. We specify the device_id parameter in the case of an unauthorized user, since there is still no user_id parameter. That's right, for unauthorized pages, we also have A / B tests.

After calling this QaAPI method, an authorized user or owner of the device will be guaranteed to see the version of the feature that we have uploaded. We wrote such calls in UI tests that covered features under A / B testing.

And so we lived for a long time. UI tests covered only control groups of A / B tests. Then they were not very many, and it worked. But time passed; The number of A / B tests began to increase, and almost all new features began to run under A / B tests. The approach with coverage of only control versions of features stopped us. And that's why…

Problem one - coverage

As I wrote above, over time, almost all new features began to come out under the A / B tests. In addition to the control, each feature has one more, two or three other options. It turns out that for such a feature, at best, the coverage will not exceed 50%, and at worst - it will be about 25%. Previously, when there were few such features, this did not have a significant impact on the total coverage indicator. Now - began to render.

Problem two - long A / B tests

Some A / B tests now take quite a long time. And we continue to be released twice a day (you can read about this in the article of our QA-engineer Ilya Kudinov “ As we have been surviving for 4 years in conditions of two releases per day ”).

Thus, the probability of breaking some of the versions of the A / B test during this time is incredibly large. And this will certainly affect the user experience and nullify the whole point of the A / B testing feature: after all, a feature can show bad results on one of the versions, not because the users do not like it, but because it does not work as expected.

If we want to be confident in the result of A / B testing, we should not allow any of the versions of the feature to work differently from what is expected of it.

Problem three - the relevance of UI tests

There is such a thing as an A / B test release. This means that the A / B test collected a sufficient amount of statistics and the product manager is ready to open the winning option for all users. The A / B test release occurs asynchronously with the release of the code, since it depends on the configuration of the config, and not on the code.

Suppose won and unreleased not control version. What will happen to the UI tests that covered only him? That's right: they will break. And what if they break an hour before the release of the build? Can we do regression testing of this very build? Not. As you know, with broken tests will not go far.

So, you need to be ready to close any A / B test in advance so that it does not interfere with the performance of the UI tests and, as a result, the next release of the build.

Conclusion

The conclusion from the above suggests an obvious one: it is necessary to cover all of the A / B tests with UI tests. Is it logical Yes! Thank you all, disagree!

... Joke! Not so simple.

The first thing that seemed inconvenient is control over which A / B tests and options of features are already covered, and which ones are not yet. Historically, we call UI tests according to the following principle:

For example, ChatBlockedUserTest, RegistrationViaFacebookTest, and so on. To shove here also the name of the split test seemed inconvenient. First, the names would be incredibly long. Secondly, tests would have to be renamed upon completion of the A / B test, and this would have a bad effect on collecting statistics, which takes into account the name of the UI test.

To get the code all the time to call the QaAPI method is still a pleasure.

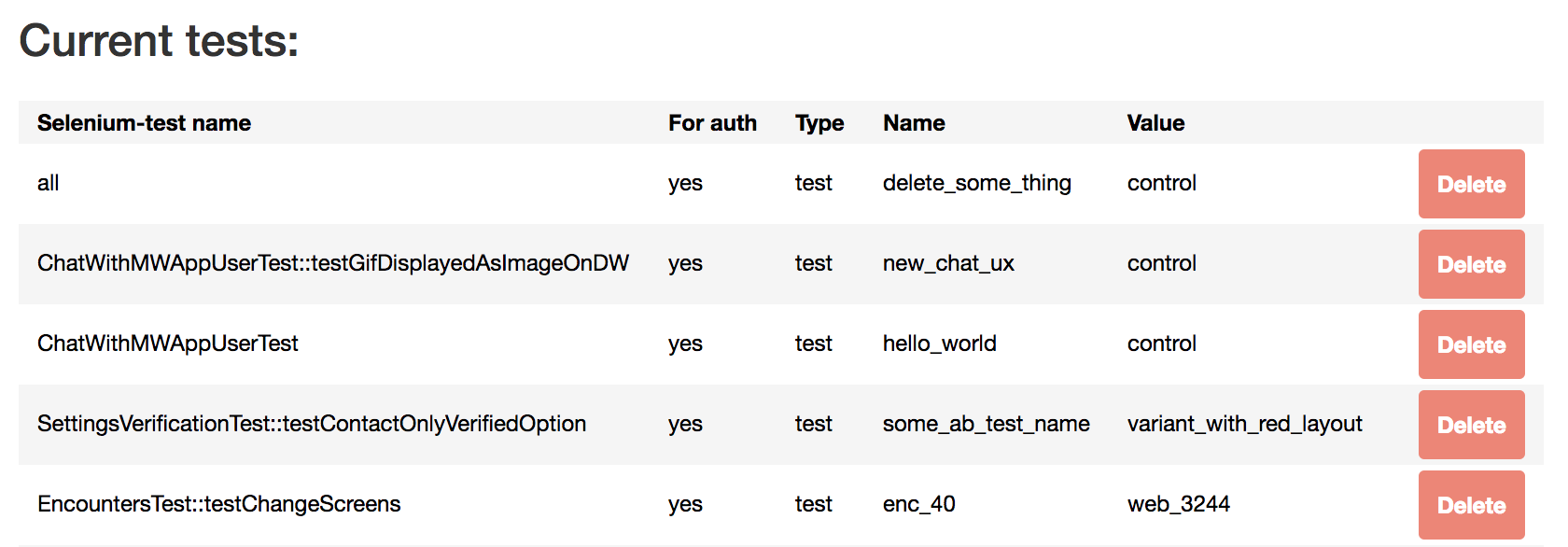

So we decided to remove all the calls to QaApi :: forceSplitTest () from the UI test code and transfer the data on which forces are needed to the MySQL table. For her, we did a UI view on the Selenium Manager (I told about it here ).

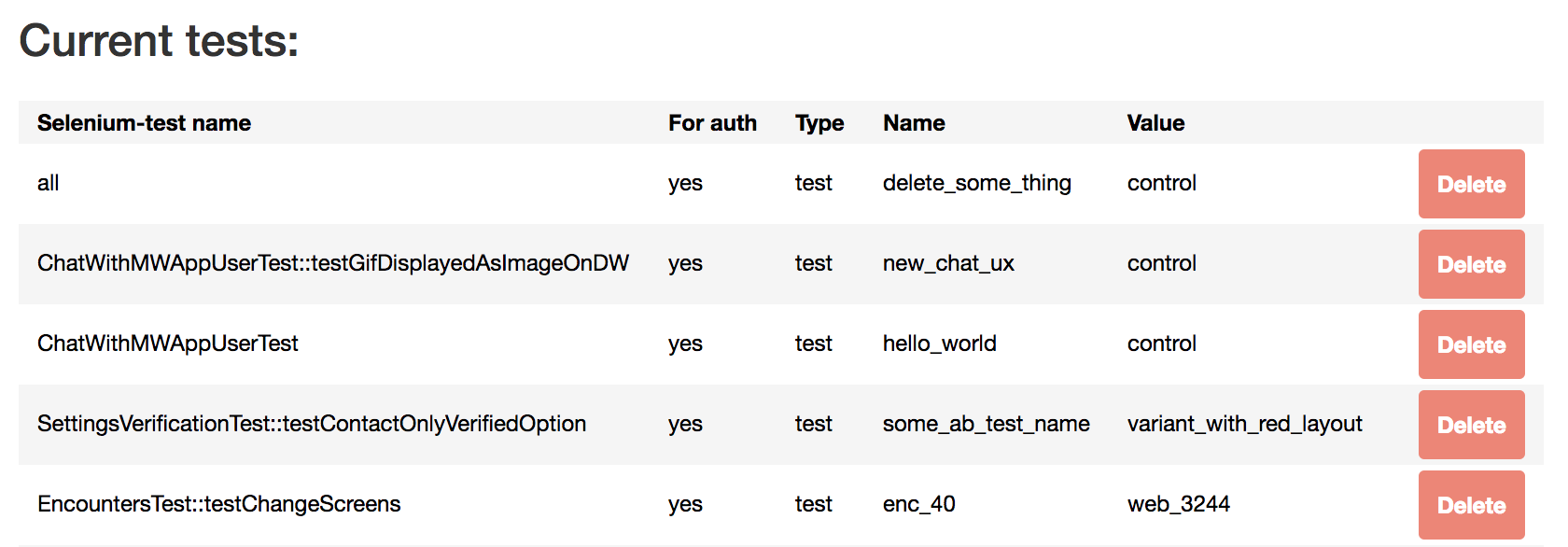

It looks like this:

In the table, you can specify for which UI test the force of which A / B test and in which group we want to apply. You can specify the name of the UI test itself, test class or All.

In addition, we can indicate whether this force applies to authorized or unauthorized users.

Next, we taught UI tests to get data from this table at startup and force those that are directly related to the running test or to all (all) tests.

Thus, we managed to collect all A / B test manipulations in one place. Now the list of covered A / B tests is convenient for viewing.

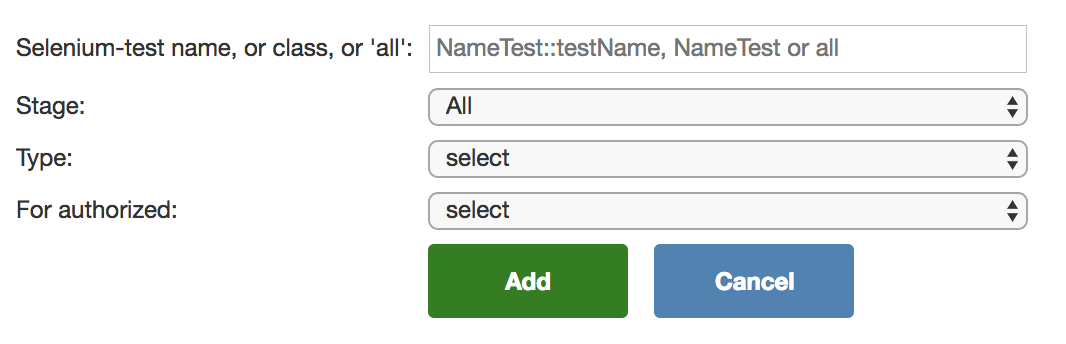

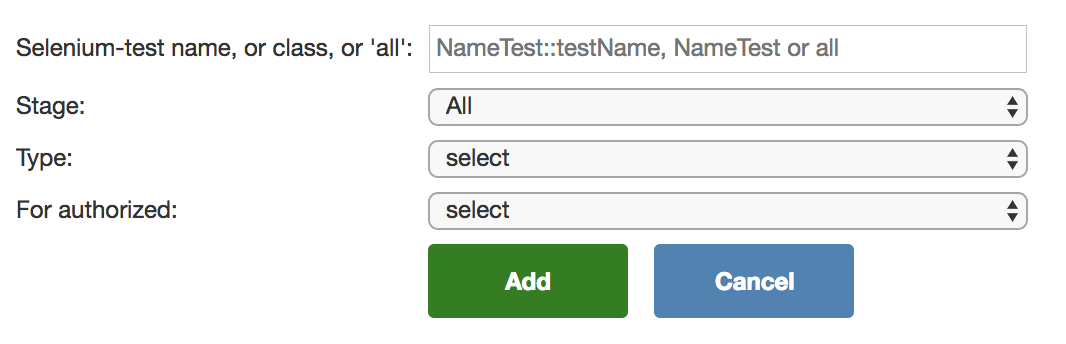

We also created a form for adding new A / B tests:

All this allows you to easily and quickly add and remove the necessary forces without creating a commit, waiting for it to decompose into all the keys, where UI tests are run, etc.

The second thing we decided to pay attention to is a revision of the approach to writing UI tests for A / B tests.

In a nutshell, I’ll tell you how we write normal UI tests. The architecture is quite simple and familiar:

In general, this architecture suits us completely. We know that if the UI has changed, only the PageObject classes need to be changed (and the tests themselves should not be affected). If the business logic of the feature has changed, change the script.

As I wrote in one of the previous articles , everything works with UI tests: the guys from the manual testing department and the developers. The simpler and clearer this process is, the more often tests will be run by people who have no direct relation to them.

But, as I wrote above, unlike the well-established features, the A / B tests come and go. If we write them in the same format as regular UI tests, we will have to permanently delete the code from many different places after completing the A / B tests. You understand that refactoring, especially when everything works without it, is not always possible to allocate time.

Nevertheless, we don’t want to allow our classes to overgrow with unused methods and locators, this will make the same PageObjects difficult to use. How to make your life easier?

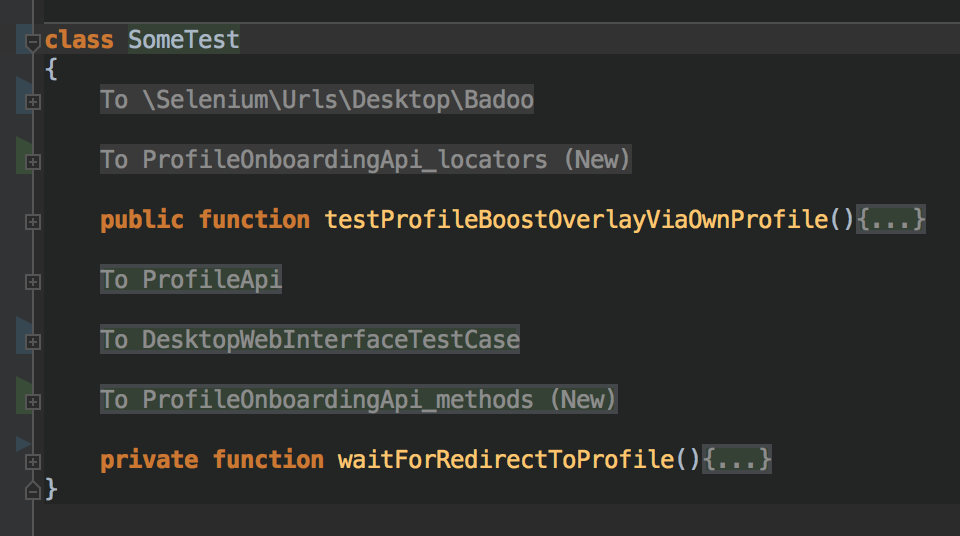

Then PhpStorm came to our rescue (thanks to the guys from JetBrains for the convenient IDE), namely this feature .

In short, it allows using special tags to divide the code into so-called regions. We tried - and we liked it. We started writing temporary UI tests for active A / B tests in one file, dividing code zones into regions indicating the class in which this code should be put in the future.

As a result, the test code looked like this:

In each region there is a code that belongs to a particular class. Surely in other IDE there is something similar.

Thus, we covered all the variants of the A / B test with a single test class, placing the PageObject methods and the locators there as well. And after its completion, we first removed the losers from the class, and then rather easily spread the remaining code to the desired classes in accordance with what is indicated in the region.

You can't just take all the A / B tests with UI tests at once. On the other hand, there is no such task. The task from the point of view of automation is to quickly cover only the important and long-playing tests.

Nevertheless, before the release of any, even the tiniest, A / B test, I want to be able to run all the UI tests on the winning version and make sure that everything works as it should and we are replicating high-quality working functionality for 100% of users.

The MySQL table solution mentioned above is not suitable for this purpose. The fact is that if you add a force there, it will immediately start to turn on for all UI tests. In addition to styling (our pre-production environment, where we run a full set of tests), this will also affect UI tests running against branches of individual tasks. The results of those launches will work colleagues from the manual testing department. And if a tired A / B test has a bug, the tests for their tasks will also fall and the guys may decide that the problem is in their task, and not in the A / B test. Because of this, testing and trial can take a long time (no one will be satisfied).

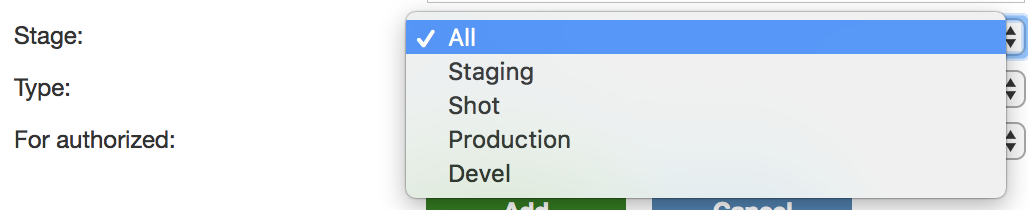

We have so far managed minimal changes, adding to the table the ability to specify the target environment:

This environment can be changed on the fly in an existing record. Thus, we can add force only for staging, without affecting the results of passing tests on individual tasks.

So, before the start of this whole story, our UI tests covered only the main (control) groups of A / B tests. But we realized that we want more, and came to the conclusion that it is also necessary to cover other variants of A / B tests.

Eventually:

All this allowed us to adapt test automation to constantly changing features, to easily control and increase the level of coverage and not to overgrow with legacy code.

Do you have the experience of bringing at first glance a chaotic situation to some controlled order and simplifying the life of yourself and your colleagues? Share it in the comments. :)

Thanks for attention! And happy New Year!

My name is Vitaliy Kotov. I work at Badoo, and most of my time goes into test automation. In this article I want to share how we solved one problem in that area.

It is about how we organized work with A/B tests in our UI tests, of which we have a lot. I will describe the problems we ran into and the flow we arrived at in the end. Read on for the details!


Before we begin

The word "test" appears very often in this article, because we are talking about UI tests and A/B tests at the same time. I have tried to keep the two concepts apart and to phrase things so that the text stays easy to read. If I slipped somewhere and wrote just "test", I meant a UI test.

Enjoy reading!

What are A/B tests

Let's start by defining an A/B test. Here is a quote from Wikipedia:

"A/B testing (also split testing) is a marketing research method in which a control group of elements is compared with a set of test groups in which one or several indicators have been changed, in order to find out which of the changes improve the target indicator."

For our project, the presence of an A/B test means that some functionality differs between users. I would highlight several cases:

- the feature is available to one group of users but not to another;

- the feature is available to all users but works differently;

- the feature is available to all users and works the same but looks different;

- any combination of the previous three options.

To make all this logic work, our company has a tool called the UserSplit Tool; our developer Rinat Akhmadeev described it in detail in a separate article.

Now let's talk about what the presence of A/B tests means for the testing department, and for automation engineers in particular.

UI test coverage

When we talk about UI test coverage, we do not mean the number of lines of code exercised. That makes sense: merely opening a page can already touch many components, even though we have not yet verified anything.

Over many years in test automation I have seen many ways to measure UI test coverage. I will not list them all; I will just say that we prefer to measure it by the number of features covered by UI tests. It is not an ideal method (I personally do not know an ideal one), but it works for us.

And here we come back to the topic of the article. How do you measure and maintain a good level of UI test coverage when each feature can behave differently depending on the user?

How features were covered with UI tests initially

Even before the UserSplit Tool appeared in the company and A/B tests became really numerous, we followed this strategy: cover with UI tests only those features that had been in production for some time and had settled down.

The reason is that when a feature first reached production, it was still tuned for a while: its behavior and appearance could change. It could also fail to prove itself and quickly disappear from users' view. Writing UI tests for unstable features is expensive, so we did not do it.

When A/B tests were introduced into the development process, nothing changed at first. Each A/B test had a so-called control group, that is, a group that saw some default behavior of the feature. UI tests were written for that behavior. All we had to do when writing UI tests for such a feature was to remember to enable the default behavior for the test user. We call this forcing an A/B group (from the English "force").

I will dwell on how the force works, since it plays a role later in the story.

Force for A/B tests and QaAPI

We have mentioned QaAPI more than once in our articles and talks. Oddly enough, though, we have not yet written a full article about the tool. That gap will probably be filled one day. In the meantime, you can watch the talk by my colleague Dmitry Marushchenko: "4. The concept of QaAPI: a look at testing from the other side of the barricades".

In a nutshell, QaAPI lets a test send requests to the application server through a special backdoor in order to manipulate data. We use it, for example, to prepare users for specific test cases, send them messages, upload photos, and so on.

With the same QaAPI we can force an A/B test group; you only need to specify the name of the A/B test and the name of the desired group. The call in a UI test looks like this:

QaApi::forceSplitTest("Test name", "Test group name", {USER_ID or DEVICE_ID});

The last parameter is the user_id or device_id for which the force should take effect. We pass device_id for an unauthorized user, since there is no user_id yet. That's right: we have A/B tests for unauthorized pages too.

After this QaAPI method is called, the authorized user or the owner of the device is guaranteed to see the variant of the feature that we forced. We wrote such calls in the UI tests that covered features under A/B testing.
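To illustrate the choice between user_id and device_id, here is a minimal sketch. The real QaAPI client is internal and is not shown in this article, so `QaApiStub` and `forceGroupFor` are hypothetical names invented for the example, and the instance-method signature is an assumption.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical stand-in for the internal QaAPI client: it only records
 * the forces it receives, so the example can run on its own.
 */
final class QaApiStub
{
    /** @var array<int, array{test: string, group: string, id: string}> */
    public array $forced = [];

    public function forceSplitTest(string $test, string $group, string $id): void
    {
        $this->forced[] = ['test' => $test, 'group' => $group, 'id' => $id];
    }
}

/**
 * Forces a group for an authorized user (user_id) or, when there is
 * no user yet, for the device (device_id). Returns the id that was used.
 */
function forceGroupFor(
    QaApiStub $api,
    string $test,
    string $group,
    ?string $userId,
    ?string $deviceId
): string {
    // Prefer the user id; fall back to the device id for unauthorized pages.
    $id = $userId ?? $deviceId;
    if ($id === null) {
        throw new InvalidArgumentException('Either user_id or device_id is required');
    }
    $api->forceSplitTest($test, $group, $id);

    return $id;
}
```

A test for an unauthorized page would pass `null` as the user id, and the force would then be bound to the device.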

That is how we lived for a long time: UI tests covered only the control groups of A/B tests. There were not many A/B tests then, and it worked. But time passed, the number of A/B tests grew, and almost all new features began to launch under A/B tests. Covering only the control variants stopped being enough. Here is why.

Why cover A/B tests

Problem one - coverage

As I wrote above, over time almost all new features began to ship under A/B tests. Besides the control variant, each feature has one, two or three other variants. So if only the control is covered, coverage for such a feature is 50% at best and about 25% at worst. Earlier, when there were few such features, this had no significant effect on the overall coverage figure. Now it does.
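The arithmetic behind those figures can be sketched as follows, assuming every variant of a feature counts equally towards coverage (the function name is made up for the example):

```php
<?php
declare(strict_types=1);

/**
 * Share of a feature's variants that is covered when UI tests exist
 * only for the control group.
 *
 * @param int $extraVariants number of non-control variants (1, 2, 3, ...)
 */
function controlOnlyCoverage(int $extraVariants): float
{
    if ($extraVariants < 0) {
        throw new InvalidArgumentException('Number of variants cannot be negative');
    }

    // One covered variant (the control) out of all variants.
    return 1.0 / (1 + $extraVariants);
}

foreach ([1, 2, 3] as $extra) {
    printf("%d extra variant(s): %.0f%%\n", $extra, controlOnlyCoverage($extra) * 100);
}
```

For one, two and three extra variants this prints 50%, 33% and 25% respectively.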

Problem two - long A/B tests

Some A/B tests now run for quite a long time, while we still release twice a day (you can read about that in the article by our QA engineer Ilya Kudinov, "How we have been surviving for 4 years with two releases per day").

So the probability of breaking one of the variants of an A/B test during that time is very high. That certainly affects the user experience and defeats the whole point of A/B testing the feature: a variant can show poor results not because users dislike it, but because it does not work as intended.

If we want to trust the results of A/B testing, we must not let any variant of the feature behave differently from what is expected.

Problem three - keeping UI tests up to date

There is such a thing as releasing an A/B test. It means that the A/B test has collected enough statistics and the product manager is ready to open the winning variant to all users. An A/B test is released asynchronously with code releases, because it depends on configuration rather than on code.

Suppose a non-control variant wins and is released. What happens to the UI tests that covered only the control? That's right: they break. And what if they break an hour before a build release? Can we run regression testing on that build? No. As you know, you will not get far with broken tests.

So we need to be prepared in advance for any A/B test to be closed, so that it does not disrupt the UI tests and, as a result, the next build release.

Conclusion

The conclusion from all of the above is obvious: we need to cover every A/B test with UI tests. Logical? Yes! Thank you all, we are done here!

...Just kidding! It is not that simple.

Interface for A/B tests

The first thing that seemed inconvenient was keeping track of which A/B tests and feature variants were already covered and which were not. Historically, we name UI tests according to the following pattern:

- name of the feature or page;

- description of the case;

- Test.

For example, ChatBlockedUserTest, RegistrationViaFacebookTest, and so on. Squeezing the name of the split test in there as well seemed awkward. First, the names would become incredibly long. Second, the tests would have to be renamed when the A/B test finished, which would hurt our statistics, since they are collected by UI test name.

And digging through the code every time to find the calls to the QaAPI method is no great pleasure either.

So we decided to remove all calls to QaApi::forceSplitTest() from the UI test code and move the data about which forces are needed into a MySQL table. We built a UI for it in Selenium Manager (which I described in an earlier article).

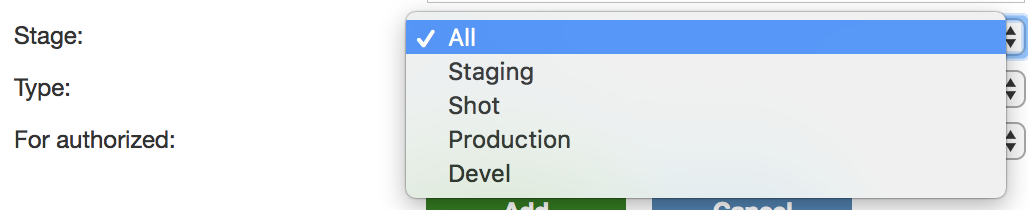

It looks like this:

In the table you can specify which A/B test and which group to force for which UI test. The target can be the name of a single UI test, a test class, or All.

In addition, we can indicate whether the force applies to authorized or unauthorized users.

Next, we taught the UI tests to read this table at startup and apply the forces that relate directly to the running test or to all tests.
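The lookup described above could be sketched like this. The row layout, the field names and the `forcesForTest` function are assumptions made for illustration; the article does not show the real table schema.

```php
<?php
declare(strict_types=1);

/**
 * Picks the force rows that apply to one running UI test.
 *
 * Each row is assumed to look like:
 *   ['target' => 'All' | test class | 'Class::method',
 *    'split_test' => string, 'group' => string, 'authorized' => bool]
 *
 * @param array<int, array<string, mixed>> $rows rows loaded from the table
 * @return array<int, array<string, mixed>> matching rows, original order kept
 */
function forcesForTest(array $rows, string $class, string $method, bool $authorized): array
{
    // A row applies if it targets everything, the test class, or this exact test.
    $targets = ['All', $class, $class . '::' . $method];

    return array_values(array_filter(
        $rows,
        static fn (array $row): bool =>
            in_array($row['target'], $targets, true) && $row['authorized'] === $authorized
    ));
}

// Illustrative rows; in a real run they would be read from the MySQL table.
$rows = [
    ['target' => 'All', 'split_test' => 'new_header', 'group' => 'control', 'authorized' => true],
    ['target' => 'ChatBlockedUserTest', 'split_test' => 'chat_redesign', 'group' => 'variant_b', 'authorized' => true],
    ['target' => 'RegistrationViaFacebookTest', 'split_test' => 'signup_flow', 'group' => 'variant_a', 'authorized' => false],
];
```

Each matching row would then be passed to the force call before the scenario starts, so the test code itself contains no A/B-specific calls.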

This way we gathered all A/B test manipulations in one place. The list of covered A/B tests is now easy to browse.

We also created a form for adding new A/B tests:

All this lets us add and remove forces quickly and easily, without creating a commit and waiting for it to be deployed everywhere UI tests are run.

UI Test Architecture

The second thing we decided to address was our approach to writing UI tests for A/B tests.

Briefly, here is how we write ordinary UI tests. The architecture is simple and familiar:

- test classes: they describe the business logic of the covered feature (in effect, these are our test scenarios: I did this, I saw that);

- PageObject classes: they describe all interactions with the UI, plus the locators;

- TestCase classes: they hold common methods that are not directly related to the UI but are useful in several test classes (for example, interactions with QaAPI);

- core classes: they contain the logic for starting a session, logging and other things you do not need to touch when writing an ordinary test.

Overall, this architecture suits us well. We know that if the UI changes, only the PageObject classes need to change (the tests themselves should not be affected). If the business logic of a feature changes, we change the scenario.

As I wrote in one of my previous articles, everyone works with UI tests: people from the manual testing department and developers alike. The simpler and clearer the process, the more often the tests will be run by people who do not work on them directly.

But, as I wrote above, unlike settled features, A/B tests come and go. If we write UI tests for them in the same format as regular ones, we will constantly have to delete code from many different places once the A/B tests finish. And as you know, it is not always possible to find time for refactoring, especially when everything works without it.

Still, we do not want our classes to become overgrown with unused methods and locators; that would make the PageObjects hard to use. How can we make life easier?

This is where PhpStorm came to the rescue (thanks to the folks at JetBrains for a convenient IDE), specifically its feature for folding code into regions.

In short, it lets you split code into so-called regions using special tags. We tried it and liked it. We started writing temporary UI tests for active A/B tests in a single file, dividing the code into regions named after the class the code should eventually move to.

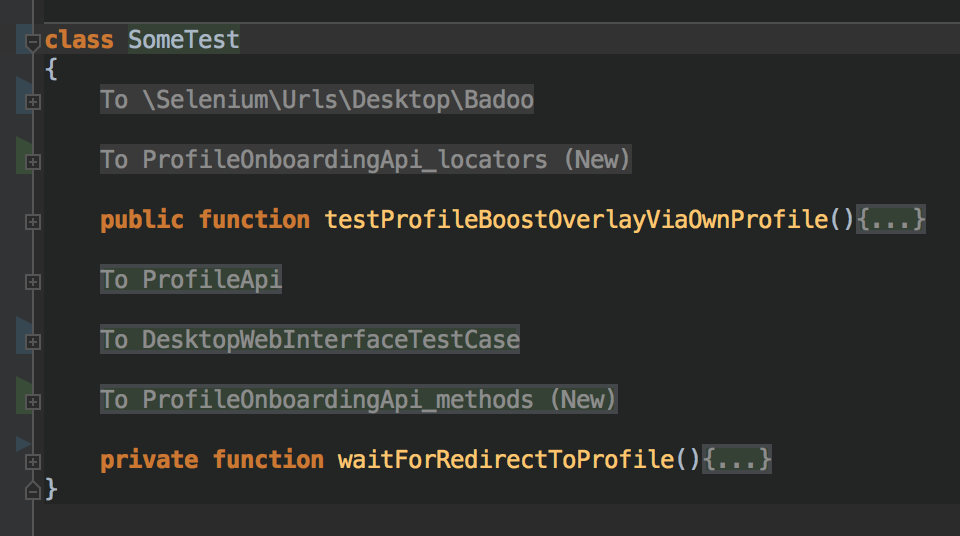

As a result, the test code looked like this:

Each region contains code that belongs to a particular class. Other IDEs surely have something similar.

This way we covered all variants of an A/B test with a single test class, keeping the PageObject methods and locators there as well. Once the A/B test finished, we first removed the losing variants from the class and then moved the remaining code to the appropriate classes, as indicated by the region names.
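A file laid out along those lines might look roughly like the sketch below. It is not the original test: the class, method, group and locator names are all invented, and the browser interaction is left out so that the example stays self-contained. The `//region` and `//endregion` comments are the tags PhpStorm uses for custom folding regions.

```php
<?php
declare(strict_types=1);

/**
 * Temporary UI test for one A/B test, kept in a single file.
 * All names here are illustrative. Each region is labelled with the class
 * its code should move to once the A/B test is finished.
 */
final class ChatRedesignSplitTest
{
    //region ChatPage (PageObject): locators and UI interactions
    private const SEND_BUTTON = [
        'control'   => '.chat__send',
        'variant_b' => '.chat__send--round',
    ];

    public function sendButtonLocator(string $group): string
    {
        return self::SEND_BUTTON[$group];
    }
    //endregion

    //region ChatTestCase: helpers not tied to the UI
    /** @return string[] groups of the A/B test covered by this class */
    public function groups(): array
    {
        return array_keys(self::SEND_BUTTON);
    }
    //endregion

    //region ChatTest: scenarios, one pass per group
    /** @return array<string, string> locator each group's scenario would click */
    public function planSendMessageScenario(): array
    {
        $plan = [];
        foreach ($this->groups() as $group) {
            // A real test would force $group here and drive the browser.
            $plan[$group] = $this->sendButtonLocator($group);
        }

        return $plan;
    }
    //endregion
}
```

When the A/B test ends, the losing group's entries are deleted and each region is cut and pasted into the class named in its label.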

How we now close A/B tests

You cannot simply cover all A/B tests with UI tests at once. Then again, that is not the goal. From the automation point of view, the goal is to quickly cover only the important and long-running A/B tests.

Nevertheless, before releasing any A/B test, even the smallest one, I want to be able to run all UI tests against the winning variant and make sure that everything works as it should and that we are rolling out high-quality, working functionality to 100% of users.

The MySQL table solution described above does not suit this purpose. If you add a force there, it immediately takes effect for all UI test runs. Besides staging (our pre-production environment, where we run the full test suite), it also affects UI tests run against the branches of individual tasks. Our colleagues from the manual testing department work with the results of those runs. If the forced A/B test has a bug, the tests for their tasks will fail too, and they may decide that the problem is in their task rather than in the A/B test. Testing and investigation can then drag on, and nobody will be happy.

So far we have got by with a minimal change: we added to the table the ability to specify the target environment:

The environment can be changed on the fly in an existing record. This way we can add a force for staging only, without affecting the results of test runs for individual tasks.
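The environment check can be sketched as one more filter over the same rows. The `environment` field, the `any` value and the environment names are assumptions for the example, not the actual schema.

```php
<?php
declare(strict_types=1);

/**
 * Keeps only the forces whose target environment matches the current run.
 * Rows marked 'any' apply everywhere (an assumed convention).
 *
 * @param array<int, array<string, string>> $rows
 * @return array<int, array<string, string>>
 */
function forcesForEnvironment(array $rows, string $environment): array
{
    return array_values(array_filter(
        $rows,
        static fn (array $row): bool => in_array($row['environment'], ['any', $environment], true)
    ));
}

// Illustrative rows: the second force is being checked before its release,
// so it is limited to staging.
$rows = [
    ['split_test' => 'chat_redesign', 'group' => 'control', 'environment' => 'any'],
    ['split_test' => 'signup_flow', 'group' => 'variant_a', 'environment' => 'staging'],
];
```

With these rows a staging run gets both forces, while a run against a task branch gets only the first one, so a bug in the variant under check cannot fail tests for unrelated tasks.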

Summing up

So, before this whole story began, our UI tests covered only the main (control) groups of A/B tests. But we realized we wanted more and concluded that the other variants of A/B tests need coverage too.

As a result:

- we created an interface for convenient control over A/B test coverage, so we now have complete information about how UI tests work with A/B tests;

- we worked out a way to write temporary UI tests, with a simple and effective flow for later deleting them or promoting them to permanent ones;

- we learned to test A/B test releases easily, without interfering with other running UI tests and without extra commits in Git.

All this let us adapt test automation to constantly changing features, easily monitor and increase coverage, and avoid accumulating legacy code.

Have you ever brought a seemingly chaotic situation into some kind of controlled order and made life easier for yourself and your colleagues? Share your experience in the comments. :)

Thanks for your attention! And happy New Year!

Source: https://habr.com/ru/post/434448/
