MIT course "Computer Systems Security". Lecture 22: MIT Information Security, Part 2

Massachusetts Institute of Technology. Lecture course #6.858, "Computer Systems Security". Nickolai Zeldovich, James Mickens. 2014.

Computer Systems Security is a course on the design and implementation of secure computer systems. Lectures cover threat models, attacks that compromise security, and security techniques drawn from recent research. Topics include operating system (OS) security, capabilities, information flow control, language-based security, network protocols, hardware protection, and web application security.

Lecture 1: "Introduction: threat models" Part 1 / Part 2 / Part 3

Lecture 2: "Control hijacking attacks" Part 1 / Part 2 / Part 3

Lecture 3: "Buffer overflow: exploits and protection" Part 1 / Part 2 / Part 3

Lecture 4: "Separation of privileges" Part 1 / Part 2 / Part 3

Lecture 5: "Where Security Errors Come From" Part 1 / Part 2

Lecture 6: "Capabilities" Part 1 / Part 2 / Part 3

Lecture 7: "Sandbox Native Client" Part 1 / Part 2 / Part 3

Lecture 8: "Web security model" Part 1 / Part 2 / Part 3

Lecture 9: "Web Application Security" Part 1 / Part 2 / Part 3

Lecture 10: "Symbolic execution" Part 1 / Part 2 / Part 3

Lecture 11: "Ur / Web programming language" Part 1 / Part 2 / Part 3

Lecture 12: "Network Security" Part 1 / Part 2 / Part 3

Lecture 13: "Network Protocols" Part 1 / Part 2 / Part 3

Lecture 14: "SSL and HTTPS" Part 1 / Part 2 / Part 3

Lecture 15: "Medical Software" Part 1 / Part 2 / Part 3

Lecture 16: "Side-channel attacks" Part 1 / Part 2 / Part 3

Lecture 17: "User Authentication" Part 1 / Part 2 / Part 3

Lecture 18: "Private Internet browsing" Part 1 / Part 2 / Part 3

Lecture 19: "Anonymous Networks" Part 1 / Part 2 / Part 3

Lecture 20: "Mobile Phone Security" Part 1 / Part 2 / Part 3

Lecture 21: "Data Tracking" Part 1 / Part 2 / Part 3

Lecture 22: "MIT Information Security" Part 1 / Part 2 / Part 3

So, all of this is to say that we are a team of just four people at a very large institution with an enormous number of devices. That is why the federated model Mark talked about is really necessary if we are to even try to secure a network of this size.


We reach out to people on campus and help them. Our portfolio consists of consulting, services that we provide to the community, and the tools that we use. The services we provide are quite diverse.

Abuse reporting: we handle reports of network abuse. These are usually responses to complaints from the outside world, the vast majority of which concern Tor nodes running on the institute's network. We have them; what else can I say? (audience laughter)

Endpoint protection: we have a number of tools and products that we install on "big" computers, and you can use them on your work machines if you wish. If your computer is part of the MIT domain, which is mostly an administrative resource, these products are installed on it automatically.

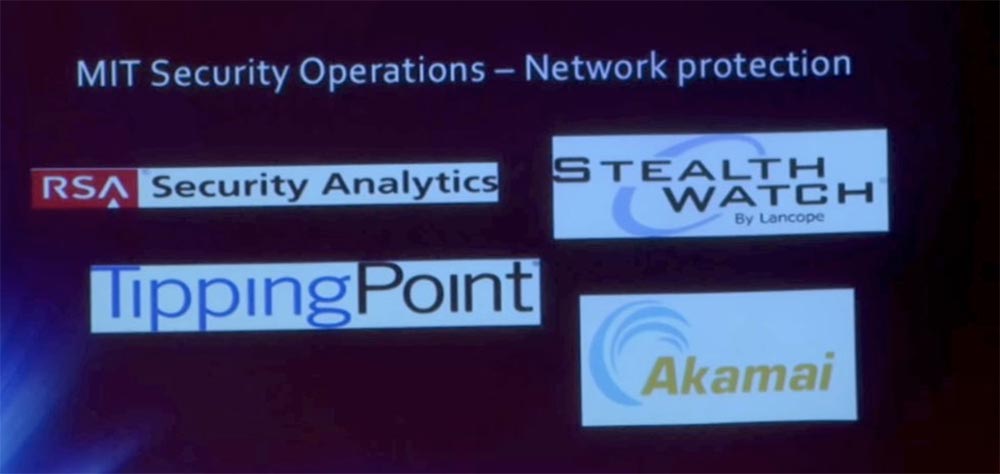

Network protection is a set of tools deployed both throughout the mit.net network and at its border. They detect anomalies or collect traffic flow data for analysis. Data analytics helps us correlate all of this and try to extract some useful information.

Forensics: computer forensics, which we'll talk about in a second.

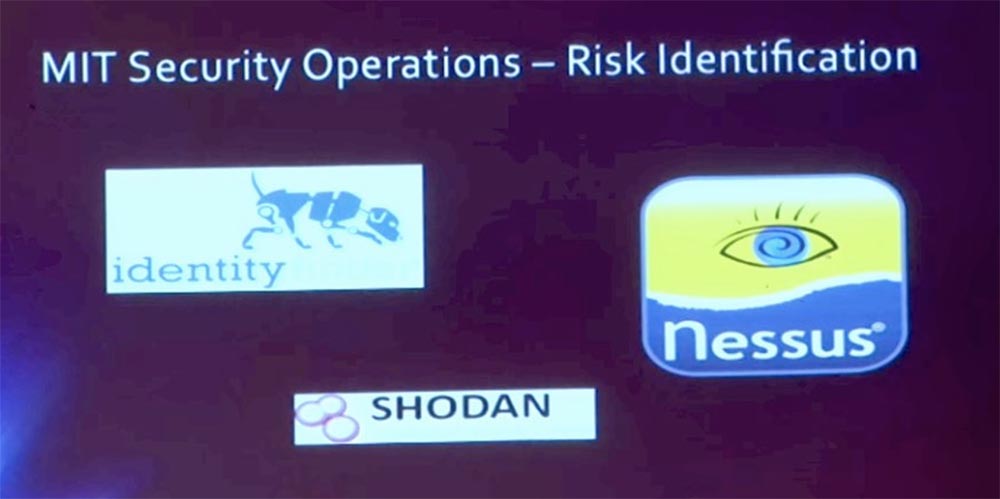

Risk identification is mainly network scanning and assessment tools such as Nessus. It also includes tools that look for PII, personally identifiable information, because, being in Massachusetts, we have to comply with the state regulation 201 CMR 17.00. It requires agencies and companies that store or use personal information about Massachusetts residents to develop a plan to protect that information and to check its effectiveness regularly. Therefore, we need to know where on our network users' personal information is stored.

Outreach / Awareness / Training: outreach, awareness, and training, which I just talked about.

Compliance needs: this is primarily PCI DSS. PCI is the Payment Card Industry, which has the DSS, the Data Security Standard. Believe it or not, MIT processes credit cards. We have several payment card merchants on campus, and we need to make sure their infrastructure is PCI DSS compliant. So security management and compliance work to meet these needs is also part of our team's job.

PCI DSS 3.0, the sixth major update of the standard, takes effect on January 1, so we are in the process of bringing our entire infrastructure into compliance.

Reporting / Metrics / Alerting: producing reports and metrics and issuing alerts is also part of our work.

For endpoint protection we use a number of products, shown on the next slide. At the top is what seems to be an eagle: that is a tool called CrowdStrike, which our IS&T department is currently testing. Basically, it tracks anomalous behavior at the level of system calls.

For example, if you are using MS Word and the program suddenly starts doing something it shouldn't, such as trying to read the system's account database or a bunch of passwords, CrowdStrike detects this and raises an alarm. It is a cloud-based tool, which we'll talk about later. All of this data is sent to a central console, and if machines try to do something that looks malicious from a heuristic, behavioral point of view, they get red-flagged.
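CrowdStrike's detection logic is proprietary and far richer than this. As a rough illustration of the idea of flagging a process that touches resources it has no business touching, the Python sketch below checks file-access events against a watch list and an allowlist. The event format, the watched paths, and the allowlist are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical watch list: files holding account data or password hashes.
SENSITIVE_PREFIXES = (
    r"c:\windows\system32\config\sam",
    r"c:\windows\system32\config\security",
)
# Hypothetical allowlist of processes expected to read those files.
EXPECTED_READERS = {"system", "backup_agent.exe"}


@dataclass(frozen=True)
class FileAccessEvent:
    process: str  # image name of the process that made the call
    path: str     # file the process tried to open


def is_suspicious(event: FileAccessEvent) -> bool:
    """Flag a sensitive-file access by a process that is not on the allowlist."""
    path = event.path.lower()
    touches_sensitive = any(path.startswith(p) for p in SENSITIVE_PREFIXES)
    return touches_sensitive and event.process.lower() not in EXPECTED_READERS


def red_flags(events):
    """Return the events a central console would mark with a red flag."""
    return [e for e in events if is_suspicious(e)]
```

A real behavioral engine learns what is normal per application rather than relying on a fixed list. The allowlist here just makes the "Word should not read the account database" rule explicit.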

GPO, Group Policy Objects, is the mechanism we use to enforce security policies across groups of Windows machines. The "S" is Sophos, with all its anti-malware and antivirus products: everything you expect to get when you buy endpoint protection.

PGP provides full-disk encryption for campus systems that hold sensitive data.

Some of these tools are being replaced with more advanced ones. The industry is increasingly moving toward the operating systems' native solutions, such as BitLocker on Windows or FileVault on the Mac, so we are exploring those options. Casper is mainly used to manage security policy on computers running Mac OS.

As for network protection, we work in this area with Akamai, a company that actually spun out of MIT; many of our alumni work there. They provide very good service, so we use many of their offerings.

We'll talk about them in more detail later.

TippingPoint is an intrusion detection system. As I said, some of these tools keep being replaced with newer ones, and TippingPoint is an example. It gives us an intrusion prevention system at our border, but in practice we don't prevent intrusions, we just detect them. We block almost nothing at the MIT network border, apart from a few very simple and widely used anti-spoofing rules.
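Here is a minimal Python sketch of the logic behind simple anti-spoofing rules, assuming MIT's 18.0.0.0/8 address block. Packets arriving from outside must not claim an internal or private source address, and packets leaving must carry an internal one. The bogon list is an illustrative subset. Real border routers enforce this with ACLs or unicast reverse path forwarding (uRPF), not Python.

```python
import ipaddress

# Assumed internal address block (MIT's 18/8).
INTERNAL_NET = ipaddress.ip_network("18.0.0.0/8")

# Illustrative subset of source ranges that should never arrive from the
# Internet: RFC 1918 private space plus loopback. Not MIT's actual rule set.
BOGONS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
    ipaddress.ip_network("127.0.0.0/8"),
]


def drop_at_border(src: str, from_outside: bool) -> bool:
    """Return True if a simple anti-spoofing rule would drop this packet."""
    addr = ipaddress.ip_address(src)
    if from_outside:
        # Inbound: an outside packet claiming an internal or bogon source is spoofed.
        return addr in INTERNAL_NET or any(addr in net for net in BOGONS)
    # Outbound (egress filtering in the spirit of BCP 38): only our own
    # addresses may leave, so hosts inside cannot spoof outsiders.
    return addr not in INTERNAL_NET
```
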

StealthWatch is a tool that generates NetFlow data, or, more precisely, collects it. We use Cisco devices, but essentially all network devices export some details about the flows they carry: source port, destination port, source IP address, destination IP address, protocol, and so on. StealthWatch collects this data, performs basic security analysis on it, and also provides an API that we can use to do smarter things.
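To make the flow metadata concrete, here is a hedged Python sketch of a simplified flow record and two analyses of the kind a flow collector might run on it. The first ranks top talkers by bytes. The second finds sources sending large volumes from a given UDP port. The record is a simplified subset of NetFlow. The field names, functions, and threshold are our own, not StealthWatch's API.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowRecord:
    # Simplified subset of a NetFlow record; field names are our own.
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: str  # "TCP", "UDP", ...
    octets: int    # bytes carried by the flow


def top_talkers(flows, n=3):
    """Total bytes per source IP, largest first."""
    totals = Counter()
    for f in flows:
        totals[f.src_ip] += f.octets
    return totals.most_common(n)


def heavy_udp_senders(flows, src_port, threshold):
    """Sources sending more than `threshold` bytes from one UDP source port.

    A large volume leaving from port 123 (NTP) or 161 (SNMP), for example,
    can indicate a host being used as a reflector.
    """
    totals = Counter()
    for f in flows:
        if f.protocol == "UDP" and f.src_port == src_port:
            totals[f.src_ip] += f.octets
    return {ip: total for ip, total in totals.items() if total > threshold}
```
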

RSA Security Analytics is another tool that acts in many ways as an IDS on steroids. It performs full packet capture, so when something is red-flagged you can see the actual packet contents.

In the area of risk identification, Nessus is the de facto vulnerability assessment tool. We usually run it on demand rather than deploying it across the whole 18/8 network. But when DLCs (departments, labs, and centers) on campus come to us, we use Nessus to assess their systems for vulnerabilities.

Shodan is known as a search engine for computers. Basically, it scans the entire Internet and provides a lot of useful security data. We have a subscription to this "engine", so we can make use of that information.

Identity Finder is a tool we use wherever PII, sensitive personal information, may be stored. We use it to comply with data protection regulations and simply to make sure we know where important data is located.
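Identity Finder's detection methods are its own. As a rough illustration of PII discovery, the Python sketch below flags US Social Security number patterns. It also flags candidate payment card numbers that pass the Luhn checksum, the check-digit scheme card numbers use. The patterns are simplified assumptions and would produce both false positives and false negatives on real data.

```python
import re

# Simplified, hypothetical patterns. US SSNs are written as AAA-GG-SSSS.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# Candidate card numbers: 13 to 19 digits, optionally separated by spaces or dashes.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(number: str) -> bool:
    """Luhn checksum: double every second digit from the right, sum, test mod 10."""
    digits = [int(c) for c in number if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_pii(text: str) -> dict:
    """Return SSN-like strings and Luhn-valid card-number candidates."""
    ssns = SSN_RE.findall(text)
    cards = [m for m in CARD_RE.findall(text) if luhn_valid(m)]
    return {"ssn": ssns, "card": cards}
```

The Luhn filter discards most random digit runs, such as order numbers, that merely look like card numbers.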

Computer forensics is something we deal with periodically... I can't find the right word, because we don't do it on a regular basis. Sometimes these investigations take a lot of time, sometimes not so much. We have a set of tools for this as well.

EnCase is a tool that lets us capture disk images and examine the contents of hard drives through them.

FTK, the Forensic Toolkit, is a suite of tools for carrying out computer forensic investigations. People come to us when disks need to be imaged, for example in intellectual property disputes or in other matters handled by the OGC, the Office of the General Counsel. We have all the necessary tools for this. Honestly, this is not constant work; it comes up periodically.
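Whatever tool captures the image, a standard forensic practice is to record a cryptographic hash at acquisition time so that later copies can be shown to be unaltered. A minimal Python sketch of that check follows. SHA-256 and the function names are our choices, not EnCase's or FTK's.

```python
import hashlib


def hash_stream(stream, chunk_size=1 << 20):
    """SHA-256 of a binary stream, read in chunks so huge images fit in memory."""
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def verify_image(path, recorded_hash):
    """True if the image file still matches the hash recorded at acquisition."""
    with open(path, "rb") as f:
        return hash_stream(f) == recorded_hash.lower()
```
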

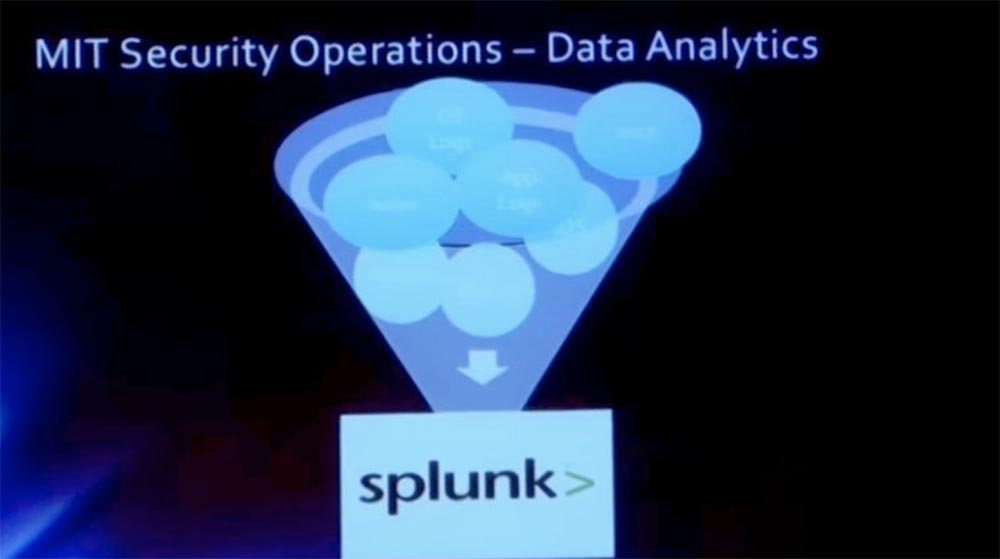

So how do we bring all of this data together? Mark mentioned correlation. We aggregate system logs, and you can see that we have NetFlow logs, DHCP logs, IDS logs, and Touchstone (MIT's web single sign-on) logs.

Splunk is the tool that does most of the correlation work. It takes data that is not necessarily normalized, normalizes it, and lets us correlate data from different sources to get more "intelligence". For example, we can display a page recording all of your logins and overlay GeoIP data on it to show where each login came from.
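Splunk does this kind of join with its own search language. The Python sketch below does not use Splunk and is only a hedged illustration of one common correlation: attributing an IDS alert to a device. It does this by finding the DHCP lease that held the alerted IP address at the alert's timestamp. The record formats are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class DhcpLease:
    ip: str
    mac: str
    start: datetime  # lease granted
    end: datetime    # lease expired or released


@dataclass(frozen=True)
class IdsAlert:
    ip: str
    time: datetime
    signature: str


def attribute_alerts(alerts, leases):
    """Map each IDS alert to the MAC address that held its IP at alert time."""
    result = []
    for alert in alerts:
        mac = next(
            (lease.mac for lease in leases
             if lease.ip == alert.ip and lease.start <= alert.time < lease.end),
            None,  # no matching lease: static address or missing log data
        )
        result.append((alert.signature, alert.ip, mac))
    return result
```

The same time-window join works for the login example: match Touchstone login records against a GeoIP table instead of DHCP leases.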

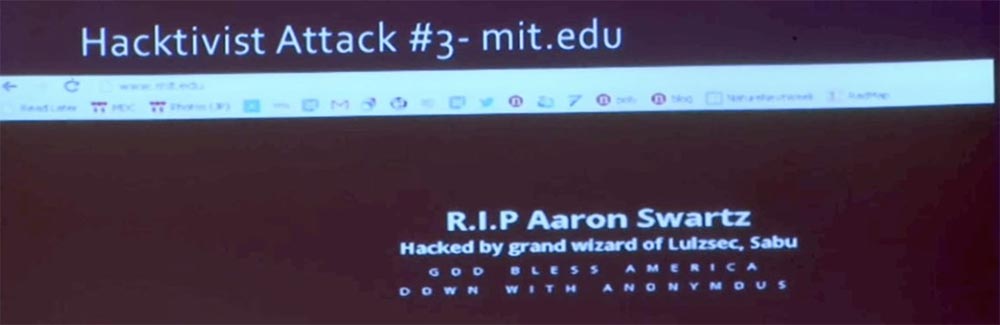

Let's move on to attacks, which are more interesting than the software we use. First, we'll talk about the denial-of-service attacks that have been most common in recent years. We'll also talk about specific attacks that followed the Aaron Swartz tragedy a couple of years ago, which also involved distributed DoS.

Here is a quick DoS primer. First of all, a DoS attack targets the A in the CIA triad of computer security. CIA stands for Confidentiality, Integrity, and Availability, and a DoS attack is aimed primarily at availability: the attacker wants to take a resource down so that legitimate users cannot use it.

It can be page defacement. That's very simple, right? Digital graffiti just spoils the page so that nobody can see the real content. It can be massive resource consumption, where the attack eats up all of the system's computing power or all of the network bandwidth. Such an attack can be carried out by one person, but more likely the attacker will invite friends to a whole DDoS party, that is, a distributed denial-of-service attack.

Current DDoS trends are described in the Arbor Networks report. The attack surface keeps expanding. Hacktivism is the most common motivation, accounting for up to 40% of attacks, while the motivation behind another 39% remains unknown. Attack sizes reached 100 Gbit/s, at least as of last year's report, and in 2013 an attack on the Spamhaus organization reached 300 Gbit/s.

DDoS attacks are also getting longer. For example, Operation Ababil, aimed at the US financial sector, went on for several months, periodically peaking at 65 Gbit/s. I heard about it, and you can confirm on Google that this attack went on almost continuously and could not be stopped. We'll talk later about how they did it.

But frankly, whether it is 65 Gbit/s or 100 Gbit/s makes little difference to the victim, because very few systems in the world can withstand a sustained attack of that magnitude; it simply cannot be stopped. Recently there has been a shift toward reflection and amplification attacks, in which you take a small input and turn it into a large output. This is nothing new. It goes back to the ICMP Smurf attack. There you would ping a network's broadcast address, and every machine on that network would reply to the apparent sender of the packet, whose address was, of course, spoofed. For example, I pretend to be Mark and send a packet to the broadcast address of everyone in this room. You all start sending replies to Mark, thinking he sent the packet, while I sit in the corner and laugh. So there is nothing new here; I read about this technique back when I was a student.

So the next kind of DDoS attack is UDP amplification. UDP, the User Datagram Protocol, is "fire and forget", right? Unlike TCP, it is connectionless and unreliable, with no handshake, so the source address is very easy to spoof. What we have seen over the last year is amplification exploits against three protocols. The first is DNS, on UDP port 53. If you send a 64-byte ANY query to a misconfigured server, it generates a 512-byte response that goes to the victim of the attack. That is an 8-fold amplification, which is quite good for a DoS attack.

As attacks of this type were on the rise, we saw them here on mit.net before they were used on a massive scale against commercial victims. We saw a 12 Gbit/s DNS amplification attack that significantly affected our outbound bandwidth. We have quite a lot of bandwidth, but adding that much traffic on top of our legitimate traffic caused a problem, and Mark and I had to deal with it.

SNMP, on UDP port 161, is a very useful management protocol. It lets you read and modify device data remotely with get and set operations. Many devices, such as network printers, allow get access without any authentication. If you send a 64-byte GetBulkRequest to a misconfigured device, it sends the victim a response that can be up to 1000 times larger than the request. So from the attacker's point of view it is even better than DNS.
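The amplification arithmetic is simple enough to write down. Below is a hedged Python sketch using the figures quoted in the lecture: a 64-byte DNS ANY query that draws a 512-byte response, and SNMP GetBulkRequest responses up to 1000 times the request. It counts payload bytes only and ignores packet headers.

```python
def amplification_factor(request_bytes: int, response_bytes: int) -> float:
    """Bytes delivered to the victim per byte the attacker sends."""
    return response_bytes / request_bytes


def attacker_rate_needed(victim_bps: float, factor: float) -> float:
    """Spoofed-request rate needed to hit the victim with victim_bps."""
    return victim_bps / factor


# Figures quoted in the lecture (payload sizes only, headers ignored).
DNS_ANY = amplification_factor(64, 512)          # 8x
SNMP_GETBULK = amplification_factor(64, 64_000)  # "up to 1000 times"

for name, factor in [("DNS ANY", DNS_ANY), ("SNMP GetBulk", SNMP_GETBULK)]:
    need = attacker_rate_needed(12e9, factor)  # a 12 Gbit/s flood
    print(f"{name}: {factor:.0f}x, attacker needs {need / 1e6:,.0f} Mbit/s")
```

With an 8x factor, a 12 Gbit/s flood like the DNS attack on mit.net needs about 1.5 Gbit/s of spoofed queries spread across reflectors. At 1000x, about 12 Mbit/s would do.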

Attackers choose their targets, and on our campus network they went after printers on a massive scale. The attacker sent spoofed packets to a printer with an open SNMP agent, and the response, a thousand times larger, went out across the Internet to the victim.

Next comes NTP, the Network Time Protocol. A misconfigured NTP server will respond to the MONLIST command, and here the amplification comes from the response itself: a list of the last 600 clients that contacted the server. So a small spoofed request from an infected computer sends a large volume of UDP traffic to the victim.

This is a very popular type of attack, and we got into a real mess because of NTP servers misconfigured with monlist enabled. In the end, we did a few things to mitigate these attacks. On the NTP side, we disabled the monlist command on our NTP servers, which let us stop the attack in its tracks. But there are systems we have no right to touch, so we disabled monlist only as far as our authority allowed. Finally, we simply rate-limited NTP at the MIT network border to reduce the impact of the systems still responding. We have now gone a year without any negative consequences. A rate limit of a few megabits is certainly better than the gigabits we were previously sending out to the Internet. So this is a solvable problem.
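The lecture doesn't say how the NTP rate limit was configured on MIT's border routers, and on real routers it would be a vendor-specific policer rather than Python. As a hedged illustration of the underlying mechanism, here is a classic token bucket. Tokens (bits) refill at a fixed rate up to a burst limit, and a packet is forwarded only if enough tokens are available.

```python
class TokenBucket:
    """Classic token-bucket rate limiter (policer): excess packets are dropped."""

    def __init__(self, rate_bps: float, burst_bits: float):
        self.rate = rate_bps        # refill rate, bits per second
        self.capacity = burst_bits  # maximum burst size, bits
        self.tokens = burst_bits    # start with a full bucket
        self.last = 0.0             # time of the previous update, seconds

    def allow(self, packet_bytes: int, now: float) -> bool:
        """Return True to forward the packet, False to drop it."""
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        bits = packet_bytes * 8
        if bits <= self.tokens:
            self.tokens -= bits
            return True
        return False
```

A policer applied only to UDP port 123 traffic might be created as `TokenBucket(rate_bps=3e6, burst_bits=1e6)`. These values are hypothetical. They would cap NTP at about 3 Mbit/s while leaving other traffic alone.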

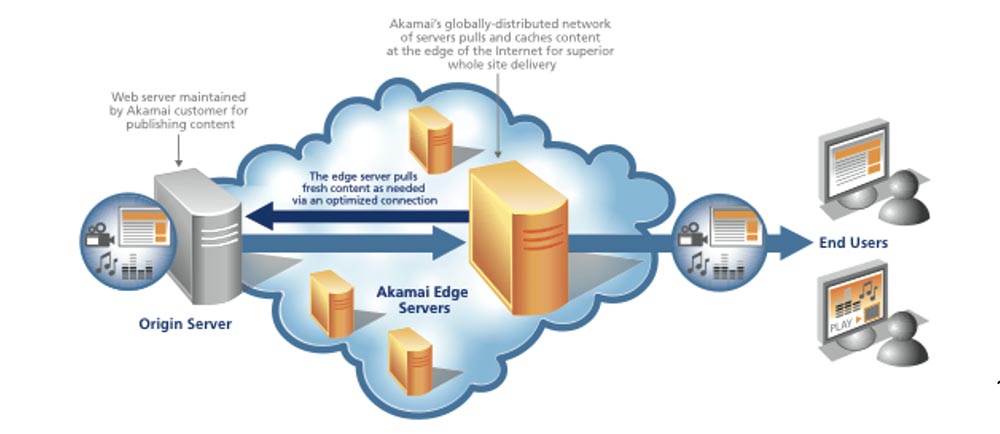

Protecting DNS was a bit more complicated. We started using an Akamai service called eDNS. Akamai offers it as a hosted zone service, so we used their eDNS and split our DNS namespace into two tiers. We gave Akamai the external view, while the internal view stayed on the servers that have always served MIT. We locked down the internal servers so that only MIT clients could reach them, and left the rest of the world to Akamai.
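The two-tier arrangement is a form of split-horizon DNS: which answers a client gets depends on where it queries from. Below is a minimal Python sketch of the view-selection logic, assuming internal clients are those in 18.0.0.0/8. The example.edu names and addresses are placeholders. In the lecture's setup the split comes from which servers a client can reach: internal servers are firewalled to MIT clients, and Akamai serves everyone else. A single server with two views, as sketched here, is the other common way to implement the same idea.

```python
import ipaddress

# Assumption: clients inside 18.0.0.0/8 get the internal view.
INTERNAL_CLIENTS = [ipaddress.ip_network("18.0.0.0/8")]

# Hypothetical zone contents; names and addresses are placeholders.
VIEWS = {
    "internal": {
        "www.example.edu": "18.0.0.10",
        "printer1.example.edu": "18.0.0.20",  # never published externally
    },
    "external": {
        "www.example.edu": "203.0.113.10",
    },
}


def select_view(client_ip: str) -> str:
    """Pick the DNS view based on where the query comes from."""
    addr = ipaddress.ip_address(client_ip)
    if any(addr in net for net in INTERNAL_CLIENTS):
        return "internal"
    return "external"


def resolve(name: str, client_ip: str):
    """Answer from the selected view; None stands in for NXDOMAIN."""
    return VIEWS[select_view(client_ip)].get(name)
```

In BIND, for example, the same idea is expressed with `view` clauses and `match-clients`.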

The advantage of Akamai's eDNS is that it is distributed across the Internet worldwide. It is a geographically distributed infrastructure that serves content from Asia, Europe, and North America, both East and West. Attackers can't take Akamai down, so we don't need to worry about our DNS ever going offline. That's how we solved this problem.

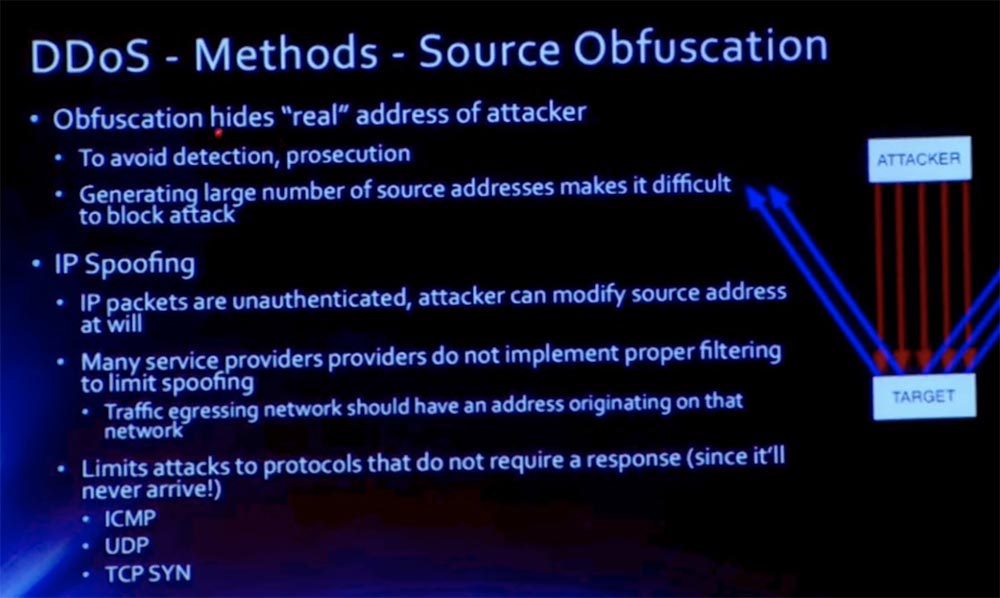

DDoS–. , Source Obfuscation, . , . .

DDoS — – . , .

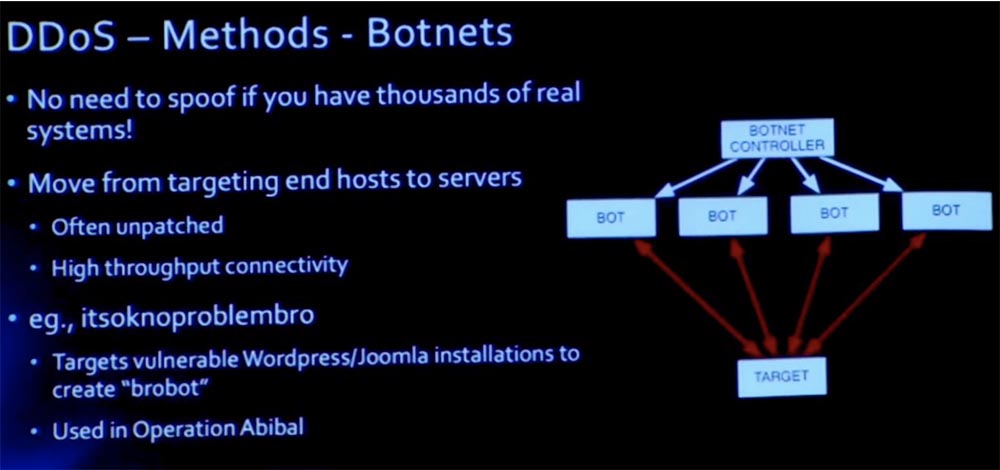

. «itsoknoproblembro» ( , , ) Ababil, . «brobot» Wordpress Joomla.

, , , , . , . , , , TCP SYN-ACK, , HTTP- GET POST.

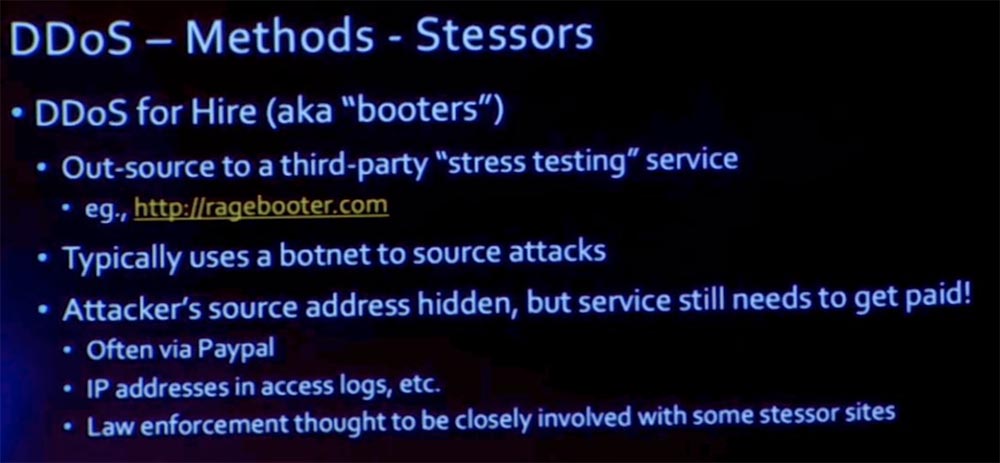

DDoS- . – , .

, -, , Ragebooter. , , DDoS- . , , PayPal.

DDoS-. DNS- , Akamai. .

-, . , , MIT. «» -. , DNS. MIT. MIT Akamai, DNS, Akamai.

, MIT, CDN- Akamai, . - , , MIT Akamai, Akamai , . , , - - Akamai.

, NTP, . , brute-force, . , . , ?

, , ? , , , . ? , , .

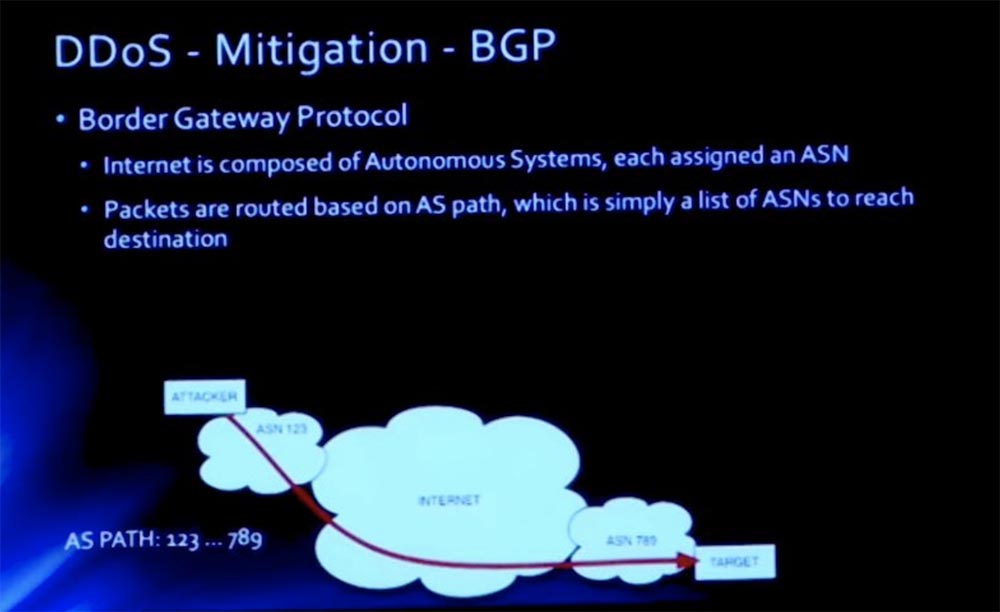

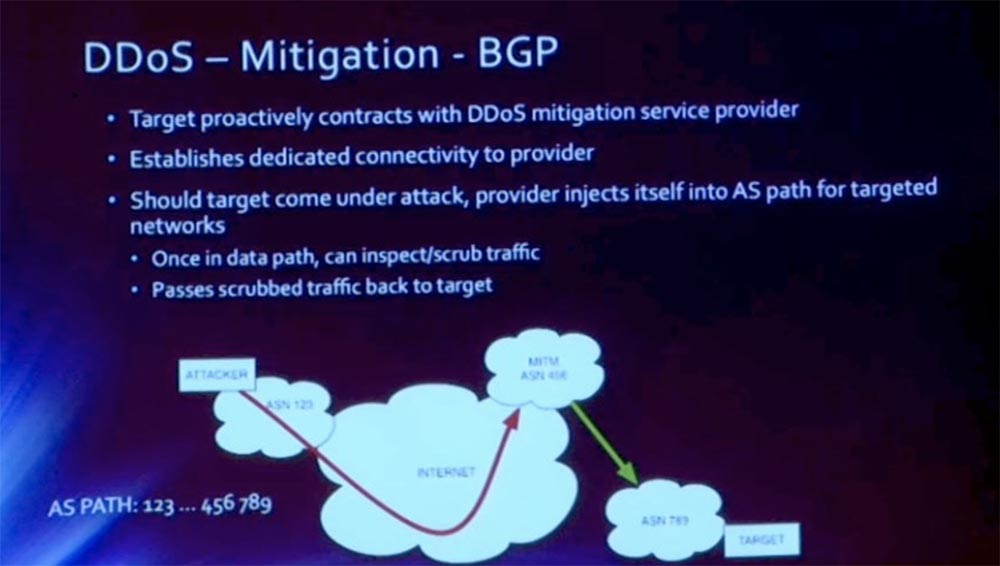

BGP. BGP, , , . , ASN. ASN, BGP , , ASN.

123, . , , 11 , .

, , ASN123, – ASN789. ASE, .

BGP ASN, MIT. ASN456. « », . , 18.1.2.3.0/24 255 MIT , ASN456 .

, . AS Akamai. , .

, «», . , .

, , ?

: MIT, , .

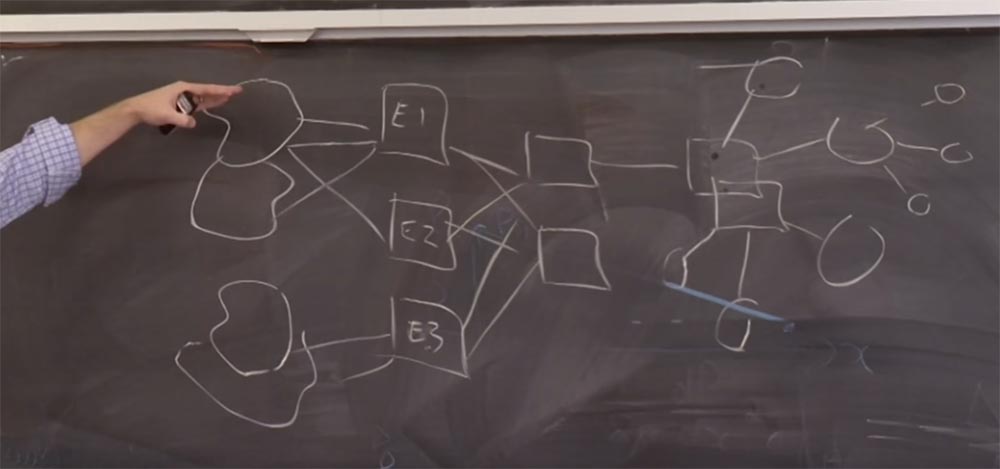

: . MIT : External 1, External 2 External 3. MIT. , , . , 3 E1,2,3, . , . 3, , . .

, , BGP, . – , .

, , , 2 , MIT, . , .

. . , . , .

, E1,2,3, , , « ».

, 100 /, , . , «» . , . mit.net, .

. , , Patriots -. , - , . , .

, , ? . , IP- VPN. , . , .

: , ?

: , , . web.mit.edu. , DNS-. web.mit.edu -, . HTTP- GET/POST, , GET POST. , -, . , , . , , IP-. , , , , , «».

, . , - . , , , Akamai CDN. , MIT web.mit, 18.09.22 . C, , IP- Akamai.

mit.net, . www.mit web.mit – . -. , . .

, who_is DNS . , « » — « – » – DESTROYED, MA 02139-4307.

, - CloudFlare.

58:30

MIT course "Computer Systems Security". Lecture 22: "MIT Information Security", Part 3



Source: https://habr.com/ru/post/434344/
