Introducing automation into the manual testing process: the experience of an Android application

A good tester with critical thinking skills cannot be completely replaced by automation, but automation can easily make their work more efficient. With this conviction, I came to our testing department with a new task, and Pavel and I set about implementing it. Let's see what came of it.

Together with our partners, we actively develop, test and support a family of applications for different platforms: Android, iOS, Windows. Applications are actively developing, along with which increases the amount of testing, in the first place - regression.

We decided to try to simplify and speed up testing by automating most of the test scripts. At the same time, we did not want to completely abandon the manual testing process, but rather modify it.

')

The implementation of this approach began with one of the Android applications, which I will tell you about. The article will be interesting to novice authors of UI-tests, primarily for mobile applications, as well as those who want to automate the manual testing process to some extent.

Go!

For each platform, we have several similar applications running the same basic business process. However, they differ from each other in a set of small auxiliary functionalities, are implemented under different brands depending on the customer (which is why the interface changes from application to application), and the business process can be customized by adding additional steps.

We are faced with certain problems that need to be addressed. Similar difficulties may arise in a situation other than ours. For example, if you have one bulky application with difficult business logic, overgrown with many tests.

The sets of test scenarios for each application are both similar and different, which increases the regression and makes it even more boring. However, all applications need to be tested separately.

Given that already running applications are updated regularly, and in the future there will only be more, the total number of tests will inexorably grow.

An important requirement is the performance of our mobile applications on a wide range of operating system versions. For example, in the case of Android at the time of writing, these are API levels from 17 to 28.

Ideally, we should test on each version of Android, which further “weights” our regression. The process of direct testing of an application acquires an additional routine, multiplied by the number of devices: installing and launching the application, resetting it to its original state after each individual test, and deleting. At the same time, to maintain your own device farm rather laboriously.

A typical test automation task is to automate regression tests. So we want to improve the efficiency of the testing process today and prevent the possible consequences of growth tomorrow.

At the same time, we are well aware that it is impossible and unnecessary to try to completely eradicate manual testing by automation. Critical thinking and the human eye is difficult to replace. On this topic there is a good article in the blog of Michael Bolton The End of Manual Testing (or translation from Anna Rodionova).

We thought that it would be useful to have a set of automated tests that cover the stable parts of the application, and in the future - to write tests for found bugs and new functionality. At the same time, we want to link autotests with existing test suites in our test management system (we use TestRail), and also give testers the ability to easily run auto tests on cloud physical devices (we chose Firebase Test Lab as the cloud infrastructure).

To start and sample, we took one of our Android applications. It was important to take into account that if the solution was successful, its best practices could be applied to the rest of our applications, including on other platforms.

What we want to get in the end:

Further, I will separately tell about the implementation of each of these points with a slight immersion in the technical component.

But first - the general scheme of what we have done:

Auto tests run in two ways:

In the case of a manual launch, the tester needs to either indicate the number of the corresponding build, or download 2 APK from your computer: with the application and with the tests. This method is needed so that you can run the necessary tests at any time on any available devices.

During the execution of tests, their results are sent to TestRail. This happens in the same way as if the tester did manual testing and recorded the results in the way he was used to.

Thus, we left the well-established process of manual testing, but added automation to it, which performs a specific set of tests. The tester "picks up" what is done automatically, and:

We now turn to the promised description of the implementation.

We used 3 tools to interact with the user interface:

The main tool and the one with which we started is Espresso. The main argument in favor of his choice was the fact that Espresso allows you to test the white box method, providing access to Android Instrumentation. The test code is in the same project as the application code.

Access to the Android application code is needed in order to call its methods in tests. We can prepare our application in advance for a specific test by running it in the desired state. Otherwise, we need to reach this state through the interface, which deprives the tests of atomicity, making them dependent on each other, and simply eats up a lot of time.

During the implementation, another tool was added to Espresso - UI Automator. Both frameworks are part of Google's Android Testing Support Library . Using the UI Automator, we can interact with various system dialogs or, for example, with Notification Drawer.

And the latest in our arsenal was the Barista framework. It is a wrapper around Espresso, saving you the boilerplate code when implementing common user actions.

Bearing in mind the desire to be able to reuse the solution in other applications, it is important to note that the listed tools are intended exclusively for Android applications. If you do not need access to the code of the application under test, then you will probably prefer to use another framework. For example, very popular today Appium. Although with him you can try to get to the application code using backdoors, which is a good article on the Badoo blog. The choice is yours.

As a design pattern, we chose Testing Robots, proposed by Jack Wharton in the report of the same name. The idea of this approach is similar to the common Page Object design pattern, which we use when testing web systems. Programming language - Java.

For each independent fragment of the application, a special robot class is created in which business logic is implemented. Interaction with each element of the fragment is described in a separate method. In addition, all the assertions performed in this fragment are described here.

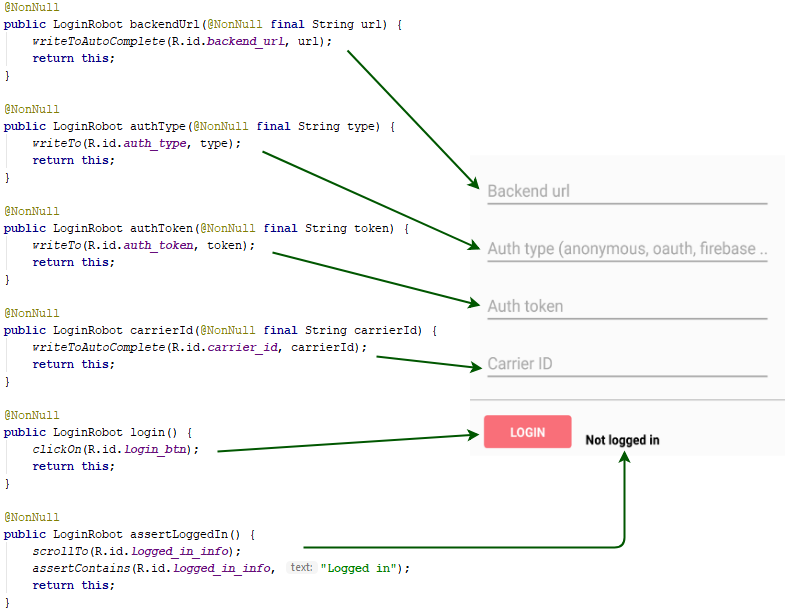

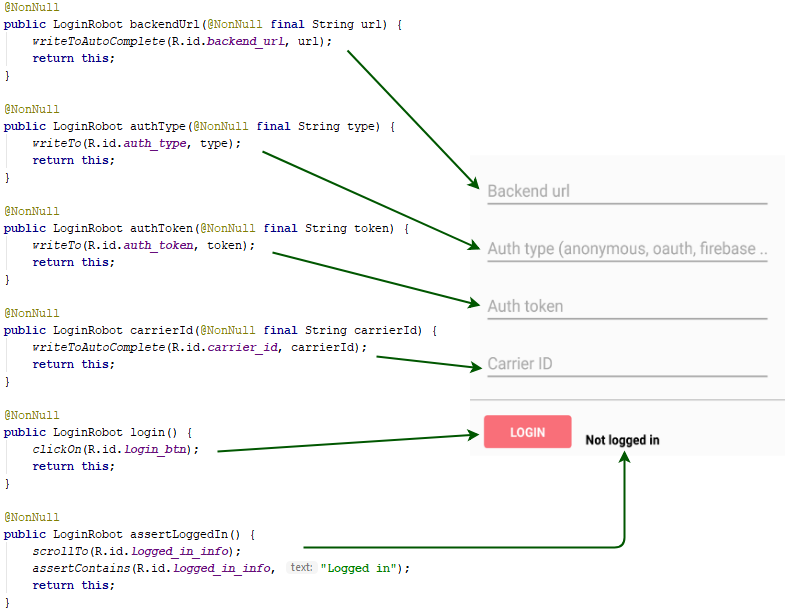

Consider a simple example. The described fragment contains several data entry fields and an action button:

The code of the login functionality test itself:

Here we check the positive scenario when the authentication data entered is correct. The data itself is submitted to the entrance tests, or default values are used. Thus, the tester has the possibility of parameterization in terms of test data.

Such a structure first of all gives the tests excellent readability, when the whole script is broken down into basic execution steps. We also liked the idea of carrying out asserts into separate methods of the corresponding robot. An assert becomes the same step, without breaking the overall chain, and your tests still do not know anything about the application.

In the aforementioned report, Jake Wharton gives an implementation in the Kotlin language, where finite. We already tried it on another project and we really liked it.

Prior to the introduction of automation, we conducted all of our testing in the TestRail test management system. The good news is that there is a fairly good TestRail API , with which we were able to connect test cases already instituted in the system with autotests.

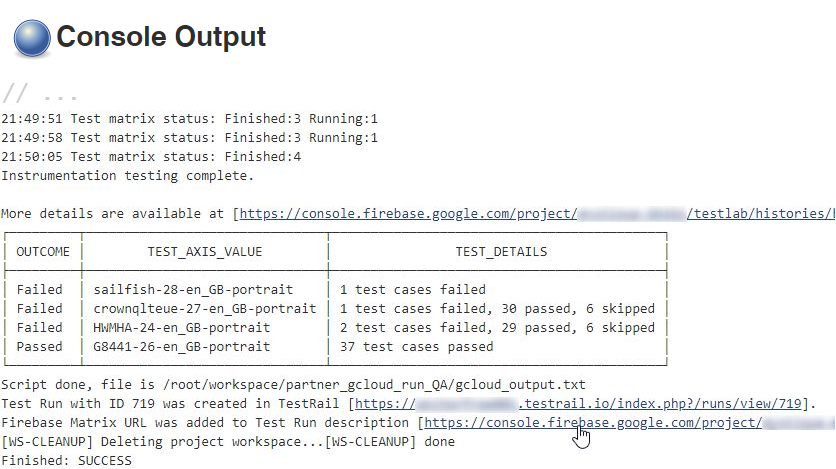

During a test run using JUnit RunListener , various events are captured, such as

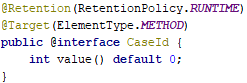

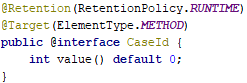

You also need to establish a connection with various TestRail entities by their ID. So, to link the test with the corresponding test case, we created a simple

The code for the implementation of the annotation itself:

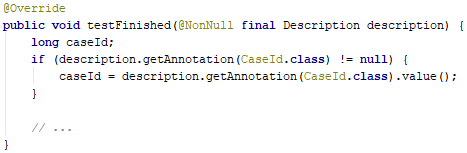

It remains only to get its value in the right place from Description:

To organize the manual start of autotests, we use the Free-style Jenkins Job . This option was chosen because the company already had some experience of similar work with Jenkins Job in other areas, in particular, with DevOps-engineers, whom they happily shared.

Jenkins Job runs a script based on the data transferred from the web interface. Thus, the parameterization of test runs is implemented. In our case, the Bash script initiates the launch of tests on Firebase cloud devices.

Parameterization includes:

Consider the part of the web page of our Jenkins Job on the example of the device selection interface and test cases:

Each element, where you can enter or select any data, is implemented by special Jenkins-plugins. For example, the device selection interface is made using the Active Choices Plugin . Using the groovy script from Firebase, you get a list of available devices, which is then displayed in the desired form on a web page.

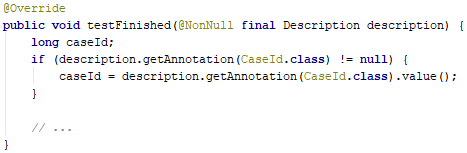

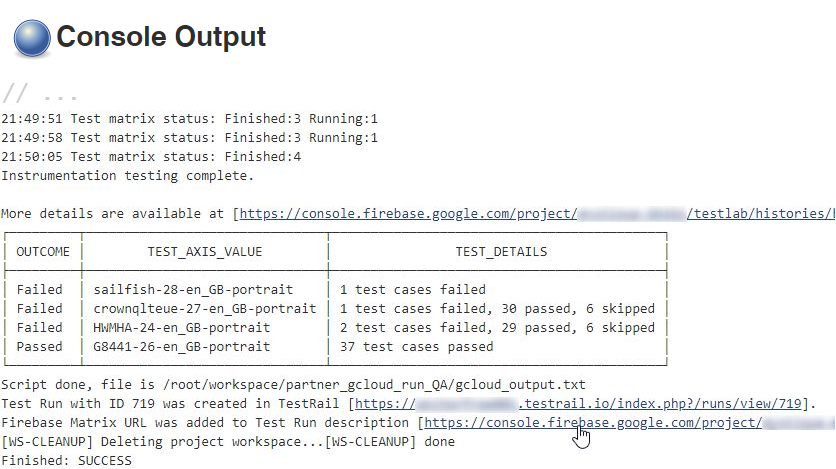

After all the necessary data is entered, the corresponding script is launched, the progress of which we can observe in the Console Output section:

From here, the tester, who initiated the test run, can get to the TestRail or Firebase console, which contains a lot of useful information about running tests on each of the selected devices, at the received URLs.

The device matrix in Firebase contains the distribution of tests according to the devices on which they were run:

For each device, you can view the full log, video test run, various performance indicators. In addition, you can access all the files that may have been created during the execution of tests. We use this in order to unload from the device indicators of code coverage tests.

We chose Firebase, as we already used this service to solve other problems, and we are satisfied with the pricing policy. If you meet the 30 minutes of net time for testing per day, you do not need to pay at all. This may be an additional reason why it is important to be able to run only certain tests.

You might prefer a different cloud infrastructure that also fits well with your testing process.

How can all this be used in the future? From the point of view of the code base, this solution is applicable only for Android applications. For example, during the implementation we have auxiliary classes

If we talk about other platforms, the experience gained can be very useful in order to build and implement similar processes. We are now actively engaged in this on the iOS-direction and are thinking about Windows.

There are many options for the implementation and use of test automation. We are of the opinion that automation is primarily a tool that is designed to facilitate the traditional process of “human” testing, and not to eradicate it.

Together with our partners, we actively develop, test and support a family of applications for different platforms: Android, iOS and Windows. The applications are evolving rapidly, and the amount of testing, primarily regression testing, grows along with them.

We decided to simplify and speed up testing by automating most of the test scenarios. At the same time, we did not want to abandon manual testing completely, but rather to modify it.


We started implementing this approach with one of our Android applications, and that is what this article is about. It should interest those who are new to writing UI tests, primarily for mobile applications, as well as anyone who wants to partially automate a manual testing process.

Let's go!

A starting point

For each platform, we have several similar applications that implement the same basic business process. However, they differ in a set of small auxiliary features, are branded differently depending on the customer (which is why the interface varies from application to application), and the business process can be customized with additional steps.

This setup creates certain problems that need to be addressed. Similar difficulties can arise in other situations too, for example, if you have one bulky application with complex business logic and a large number of tests.

Problem number 1: many regression tests

The test scenario sets for the applications overlap but are not identical, which inflates regression and makes it even more tedious. Yet each application still has to be tested separately.

Since the existing applications are updated regularly and more of them will appear in the future, the total number of tests will inevitably grow.

Problem number 2: you need to test on all versions of the mobile OS

An important requirement is that our mobile applications work on a wide range of operating system versions. For Android, at the time of writing, this means API levels 17 to 28.

Ideally, we should test on every Android version, which makes our regression even heavier. Testing itself acquires extra routine multiplied by the number of devices: installing and launching the application, resetting it to its initial state after each test, and uninstalling it. Maintaining your own device farm is also quite laborious.

Solution: implement automation in the manual testing process

Automating regression tests is a typical test automation task. By doing so, we want to make testing more efficient today and head off the consequences of growth tomorrow.

At the same time, we are well aware that trying to eliminate manual testing completely through automation is both impossible and unnecessary. Critical thinking and the human eye are hard to replace. Michael Bolton's blog has a good article on this topic, "The End of Manual Testing" (also available in a Russian translation by Anna Rodionova).

We decided it would be useful to have a set of automated tests covering the stable parts of the application and, going forward, to write tests for discovered bugs and new functionality. We also wanted to link the autotests to the existing test suites in our test management system (we use TestRail) and to give testers an easy way to run autotests on physical devices in the cloud (we chose Firebase Test Lab as the cloud infrastructure).

As a starting point and pilot, we took one of our Android applications. It was important to keep in mind that, if the solution succeeded, its best practices should be applicable to the rest of our applications, including those on other platforms.

What we wanted to achieve in the end:

- Automation of regression testing.

- Integration with the test management system.

- Parameterized manual launch of autotests on cloud devices.

- The ability to reuse the solution in the future.

Below, I describe the implementation of each of these points separately, with a brief dive into the technical details.

General scheme for implementing the solution

But first, the general scheme of what we have built.

Autotests are run in two ways:

- From CI, after a merge or pull request to master.

- Manually by a tester, from the Jenkins Job web interface.

For a manual launch, the tester either specifies the number of the corresponding build or uploads two APKs from their computer: one with the application and one with the tests. This makes it possible to run the required tests at any time on any available devices.

As the tests run, their results are sent to TestRail, just as if the tester had performed the tests manually and recorded the results in the usual way.

Thus, we kept the well-established manual testing process but added automation that performs a specific set of tests. The tester "picks up" what has been done automatically and:

- sees the results of the test cases on each of the selected devices;

- can recheck any test case manually;

- runs the test cases that are not yet automated or cannot be automated for some reason;

- makes the final decision on the current test run.

We now turn to the promised description of the implementation.

1. Autotests

Tools

We used 3 tools to interact with the user interface:

- Espresso.

- Barista.

- UI Automator.

Our main tool, and the one we started with, is Espresso. The main argument in its favor was that Espresso allows white-box testing by providing access to Android Instrumentation: the test code lives in the same project as the application code.

Access to the application code lets tests call its methods directly. This way we can prepare the application for a specific test in advance by launching it straight into the desired state. Otherwise, we would have to reach that state through the interface, which deprives tests of atomicity, makes them depend on each other and simply eats up a lot of time.
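To illustrate the difference, here is a minimal plain-Java sketch. `SessionStore` and `Preconditions` are hypothetical stand-ins for whatever application object a real Espresso test would call through Instrumentation; the point is only that the precondition is set with one direct call instead of a walk through the login screens.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical application-side store that the app reads on startup to
// decide which screen to show. In a real Espresso test this would be an
// object from the application code, reachable because tests and app live
// in the same project.
final class SessionStore {
    private final Map<String, String> values = new HashMap<>();

    void saveAuthToken(String token) { values.put("auth_token", token); }

    void clear() { values.clear(); }

    boolean isLoggedIn() { return values.containsKey("auth_token"); }
}

// Hypothetical preconditions: each puts the app into a known state directly,
// so a test never depends on another test having gone through the login UI.
final class Preconditions {
    private Preconditions() {}

    static void givenLoggedInUser(SessionStore store) {
        store.clear();                      // start from a clean state
        store.saveAuthToken("test-token");  // skip the login screens entirely
    }

    static void givenLoggedOutUser(SessionStore store) {
        store.clear();
    }
}
```

In an instrumented test, a call like `givenLoggedInUser(...)` would typically run in a setup method before the screen under test is launched.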

During implementation, we added UI Automator alongside Espresso. Both frameworks are part of Google's Android Testing Support Library. UI Automator lets us interact with various system dialogs or, for example, with the notification drawer.

The last addition to our arsenal was the Barista framework, a wrapper around Espresso that saves you boilerplate code when implementing common user actions.

Since we wanted to reuse the solution in other applications, note that these tools are intended exclusively for Android applications. If you do not need access to the code of the application under test, you will probably prefer another framework, such as Appium, which is very popular today. Even with Appium you can try to reach the application code through backdoors; there is a good article about this on the Badoo blog. The choice is yours.

Implementation

As the design pattern, we chose Testing Robots, proposed by Jake Wharton in his talk of the same name. The idea is similar to the common Page Object pattern, which we use when testing web systems. The programming language is Java.

For each independent fragment of the application, we create a dedicated robot class that implements its business logic. Interaction with each element of the fragment is described in a separate method, and all the assertions performed on this fragment live in the same class.

Consider a simple example: a login fragment with several input fields and an action button. The login test itself is then written as a chain of calls to the robot for this fragment.

Here we check the positive scenario in which the entered credentials are correct. The data itself is passed to the tests as input, or default values are used, so the tester can parameterize runs with test data.

Above all, this structure makes the tests very readable: the whole scenario is broken down into basic execution steps. We also liked the idea of moving assertions into separate methods of the corresponding robot. An assertion becomes just another step that does not break the overall chain, and the tests still know nothing about the application's internals.
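The robot structure described above might look like the following plain-Java sketch. So that it runs without a device, the robot drives a hypothetical `Screen` interface instead of Espresso's `onView(withId(...)).perform(...)`. All class names, view IDs and default credentials are made up, and the `args` map stands in for instrumentation arguments such as those returned by `InstrumentationRegistry.getArguments()`.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical minimal UI driver; in real tests Espresso plays this role.
interface Screen {
    void typeText(String fieldId, String text);
    void click(String buttonId);
    String textOf(String viewId);
}

// Fake login fragment that accepts one hard-coded account.
final class FakeLoginScreen implements Screen {
    private final Map<String, String> fields = new HashMap<>();
    private String status = "";

    @Override public void typeText(String fieldId, String text) { fields.put(fieldId, text); }

    @Override public void click(String buttonId) {
        if ("login_button".equals(buttonId)) {
            boolean ok = "user".equals(fields.get("login_field"))
                    && "secret".equals(fields.get("password_field"));
            status = ok ? "Welcome" : "Invalid credentials";
        }
    }

    @Override public String textOf(String viewId) {
        return "status_text".equals(viewId) ? status : fields.getOrDefault(viewId, "");
    }
}

// Robot for the login fragment: one method per element interaction plus the
// fragment's assertions. Every method returns the robot, so a test reads as
// a chain of steps and an assertion is just another link in it.
final class LoginRobot {
    private final Screen screen;

    LoginRobot(Screen screen) { this.screen = screen; }

    LoginRobot enterLogin(String login) { screen.typeText("login_field", login); return this; }

    LoginRobot enterPassword(String password) { screen.typeText("password_field", password); return this; }

    LoginRobot clickLogin() { screen.click("login_button"); return this; }

    LoginRobot assertWelcomeShown() {
        String status = screen.textOf("status_text");
        if (!"Welcome".equals(status)) {
            throw new AssertionError("Expected welcome message, got: " + status);
        }
        return this;
    }
}

// Hypothetical test: credentials come from run arguments when provided,
// otherwise defaults are used.
final class LoginTest {
    private final Map<String, String> args;

    LoginTest(Map<String, String> args) { this.args = args; }

    void loginWithValidCredentials(Screen screen) {
        new LoginRobot(screen)
                .enterLogin(args.getOrDefault("login", "user"))
                .enterPassword(args.getOrDefault("password", "secret"))
                .clickLogin()
                .assertWelcomeShown();
    }
}
```

Because the test only talks to the robot, a change in the fragment's layout touches one robot class rather than every test that logs in.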

In the talk mentioned above, Jake Wharton gives an implementation in Kotlin, which takes the idea further. We have already tried it on another project and really liked it.

2. Integration with test management system

Before introducing automation, we ran all of our testing in the TestRail test management system. The good news is that TestRail has a fairly good API, which let us connect the test cases already defined in the system with autotests.
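One TestRail API v2 endpoint that fits this job is `add_result_for_case` (an assumption; the article does not say which endpoints were used). The sketch below only builds the HTTP request with Java's `java.net.http` API (Java 11+) and does not send it. The class name, base URL, user and key are placeholders. Status IDs 1 (Passed) and 5 (Failed) are TestRail's default statuses; custom statuses would have other IDs.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper that builds (but does not send) a TestRail API v2
// request recording the result of one test case in an existing test run.
final class TestRailRequests {
    // TestRail's default status IDs.
    static final int STATUS_PASSED = 1;
    static final int STATUS_FAILED = 5;

    private final String baseUrl;     // e.g. "https://example.testrail.io" (placeholder)
    private final String authHeader;  // HTTP Basic auth with user and API key

    TestRailRequests(String baseUrl, String user, String apiKey) {
        this.baseUrl = baseUrl;
        String credentials = user + ":" + apiKey;
        this.authHeader = "Basic " + Base64.getEncoder()
                .encodeToString(credentials.getBytes(StandardCharsets.UTF_8));
    }

    HttpRequest addResultForCase(long runId, long caseId, int statusId, String comment) {
        String url = baseUrl + "/index.php?/api/v2/add_result_for_case/" + runId + "/" + caseId;
        String body = "{\"status_id\":" + statusId + ",\"comment\":\"" + escape(comment) + "\"}";
        return HttpRequest.newBuilder(URI.create(url))
                .header("Content-Type", "application/json")
                .header("Authorization", authHeader)
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    // Minimal JSON string escaping for backslashes, quotes and newlines.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }
}
```

Sending it would then be `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())`. The hand-built JSON keeps the sketch dependency-free; a real listener would more likely use a JSON library.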

During a test run, a JUnit RunListener captures various events, such as testRunStarted, testFailure and testFinished, and in these callbacks we send the results to TestRail. If you are using AndroidJUnitRunner, your RunListener has to be registered with it in a specific way, described in the official documentation.

You also need to link tests to various TestRail entities by their IDs. For example, to link a test to its test case, we created a simple @CaseId annotation, which is placed on each automated test method. The annotation itself is an ordinary Java annotation retained at runtime, so it can be read while the tests are running.

It then only remains to read its value from the JUnit Description in the appropriate listener callback.
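A minimal sketch of both pieces follows. In JUnit 4, listener callbacks receive a `Description`, and `description.getAnnotation(CaseId.class)` returns the annotation. To keep the example runnable without JUnit, it reads the annotation from a `java.lang.reflect.Method` instead. `SampleTests`, `CaseResultCollector` and the case ID are hypothetical, and the status IDs are TestRail's defaults.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.OptionalLong;

// Links a test method to a TestRail test case. RUNTIME retention is
// required so the annotation is visible while the tests are running.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface CaseId {
    long value();
}

// Hypothetical test class: one linked test, one unlinked.
final class SampleTests {
    @CaseId(1042)
    public void loginWithValidCredentials() {}

    public void notLinkedToTestRail() {}
}

// Plain-Java stand-in for a RunListener: collects a TestRail status per case ID.
final class CaseResultCollector {
    static final int STATUS_PASSED = 1;
    static final int STATUS_FAILED = 5;

    private final Map<Long, Integer> statusByCase = new LinkedHashMap<>();

    static OptionalLong caseIdOf(Method testMethod) {
        CaseId id = testMethod.getAnnotation(CaseId.class);
        return id == null ? OptionalLong.empty() : OptionalLong.of(id.value());
    }

    // Analogue of testFailure: mark the linked case as failed.
    void failed(Method testMethod) {
        caseIdOf(testMethod).ifPresent(id -> statusByCase.put(id, STATUS_FAILED));
    }

    // Analogue of testFinished: keep an earlier failure, otherwise mark passed.
    void finished(Method testMethod) {
        caseIdOf(testMethod).ifPresent(id -> statusByCase.putIfAbsent(id, STATUS_PASSED));
    }

    Map<Long, Integer> results() { return statusByCase; }
}
```

The `putIfAbsent` in `finished` matters because JUnit 4 calls `testFinished` for failed tests as well, after `testFailure`. Tests without the annotation are simply not reported.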

3. Manual start of autotests on cloud devices

Launch parameterization in the Jenkins job

To organize manual launches of autotests, we use a freestyle Jenkins job. We chose this option because the company already had experience with similar Jenkins jobs in other areas, in particular among DevOps engineers, who were happy to share it.

The Jenkins job runs a script with the data entered in its web interface; this is how test runs are parameterized. In our case, a Bash script starts the tests on Firebase cloud devices.

Parameterization includes:

- Selecting the APKs, either by specifying the number of the corresponding build or by uploading them manually.

- Entering various test data.

- Entering additional custom data for TestRail.

- Selecting the cloud physical devices to run the tests on from the list available in Firebase Test Lab.

- Selecting the test cases to be executed.
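In the job itself this is done by a Bash script. For consistency with the other examples, here is a Java sketch of how those parameters could map onto a `gcloud firebase test android run` invocation. The flags used (`--type`, `--app`, `--test`, `--device`, `--environment-variables`, `--test-targets`) are real gcloud flags. The parameter class and example values are hypothetical, and test data values containing commas would need gcloud's escaping syntax (see `gcloud topic escaping`), which the sketch ignores.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical holder for the parameters a tester enters in the Jenkins job.
final class TestLabRunParams {
    final String appApk;
    final String testApk;
    final List<String> devices;          // e.g. "model=MODEL_ID,version=28"
    final Map<String, String> testArgs;  // test data for the instrumentation
    final List<String> testTargets;      // e.g. "class com.example.LoginTest"

    TestLabRunParams(String appApk, String testApk, List<String> devices,
                     Map<String, String> testArgs, List<String> testTargets) {
        this.appApk = appApk;
        this.testApk = testApk;
        this.devices = devices;
        this.testArgs = testArgs;
        this.testTargets = testTargets;
    }
}

final class TestLabCommand {
    private TestLabCommand() {}

    // Builds the argv for `gcloud firebase test android run`. Each element is
    // one argument, so no shell quoting is needed with ProcessBuilder.
    static List<String> build(TestLabRunParams p) {
        List<String> cmd = new ArrayList<>(List.of(
                "gcloud", "firebase", "test", "android", "run",
                "--type", "instrumentation",
                "--app", p.appApk,
                "--test", p.testApk));
        for (String device : p.devices) {
            cmd.add("--device");  // repeated once per selected device
            cmd.add(device);
        }
        if (!p.testArgs.isEmpty()) {
            // Passed to the test runner as instrumentation arguments.
            String env = p.testArgs.entrySet().stream()
                    .map(e -> e.getKey() + "=" + e.getValue())
                    .collect(Collectors.joining(","));
            cmd.add("--environment-variables");
            cmd.add(env);
        }
        if (!p.testTargets.isEmpty()) {
            cmd.add("--test-targets");
            cmd.add(String.join(",", p.testTargets));
        }
        return cmd;
    }
}
```

The resulting list can be passed to `new ProcessBuilder(cmd).inheritIO().start()`. gcloud also offers `--directories-to-pull`, one way to retrieve files such as coverage data from the devices.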

Consider the part of our Jenkins job's web page that contains the device and test case selection interface.

Each element for entering or selecting data is implemented with dedicated Jenkins plugins. For example, the device selection interface is built with the Active Choices Plugin: a Groovy script fetches the list of available devices from Firebase, and the list is then displayed in the desired form on the web page.
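The job does this with a Groovy script. As a Java sketch of the same transformation, assume the model catalogue has already been fetched (for instance with `gcloud firebase test android models list --format=json`) and parsed into objects with an ID, a form factor and supported API versions. The `DeviceModel` shape is an assumption modelled loosely on that output, not its exact schema.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified view of one device model from the catalogue.
final class DeviceModel {
    final String id;
    final String form;                       // e.g. "PHYSICAL" or "VIRTUAL"
    final List<String> supportedVersionIds;  // Android API levels

    DeviceModel(String id, String form, List<String> supportedVersionIds) {
        this.id = id;
        this.form = form;
        this.supportedVersionIds = supportedVersionIds;
    }
}

final class DeviceChoices {
    private DeviceChoices() {}

    // Turns the catalogue into choice values in the format the --device flag
    // accepts, keeping only physical devices.
    static List<String> physicalDeviceChoices(List<DeviceModel> models) {
        List<String> choices = new ArrayList<>();
        for (DeviceModel m : models) {
            if (!"PHYSICAL".equals(m.form)) {
                continue;
            }
            for (String version : m.supportedVersionIds) {
                choices.add("model=" + m.id + ",version=" + version);
            }
        }
        return choices;
    }
}
```

Producing values that are already in `--device` format means the selected checkboxes can be handed to the launch script unchanged.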

After all the necessary data is entered, the corresponding script is launched, and its progress can be followed in the Console Output section.

From there, the tester who started the run can follow the printed URLs to TestRail or to the Firebase console, which contains a lot of useful information about the tests run on each of the selected devices.

Resulting test matrix in Firebase Test Lab

The device matrix in Firebase shows how the tests were distributed across the devices they ran on.

For each device, you can view the full log, a video of the test run and various performance metrics. You can also access any files created during test execution; we use this to pull code coverage data from the devices.

We chose Firebase because we already used this service for other tasks and are satisfied with its pricing: if you stay within 30 minutes of net testing time per day, you do not have to pay at all. This is one more reason why it is important to be able to run only selected tests.
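Here is a rough way to check a selection against that free allowance. It assumes that each device's run time counts separately and ignores install time and any rounding, so totals scale with tests × average duration × devices. The class is hypothetical and the numbers are illustrative only.

```java
// Hypothetical back-of-the-envelope estimate of daily Test Lab usage.
final class TestLabBudget {
    private TestLabBudget() {}

    // Device-minutes consumed by running the selected tests on every device.
    static double deviceMinutes(int selectedTests, double avgMinutesPerTest, int devices) {
        return selectedTests * avgMinutesPerTest * devices;
    }

    // Largest number of tests that fits into the daily allowance.
    static int maxTestsWithinBudget(double budgetMinutes, double avgMinutesPerTest, int devices) {
        return (int) Math.floor(budgetMinutes / (avgMinutesPerTest * devices));
    }
}
```

For example, 20 tests of about 30 seconds each on 3 devices already add up to 30 device-minutes, and on 4 devices only 15 such tests fit into 30 minutes.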

You might prefer a different cloud infrastructure that also fits well with your testing process.

4. Reuse

How can all this be used in the future? In terms of the code base, this solution applies only to Android applications. For example, during implementation we created the helper classes EspressoExtensions and UiAutomatorExtensions, which encapsulate various ways of interacting with the interface and of waiting for elements to become ready. The RunListener class responsible for the TestRail integration belongs here too. We have already moved these classes into separate modules and use them to automate other applications.

As for other platforms, the experience gained can be very useful for building and introducing similar processes. We are now actively working on this for iOS and are considering Windows.

Conclusion

There are many ways to implement and use test automation. In our view, automation is primarily a tool for making the traditional process of “human” testing easier, not for eliminating it.

Source: https://habr.com/ru/post/434252/
