Operation of rabbits (RabbitMQ) in the "Survive at any cost"

" Company " - communication operator of PJSC "Megaphone"

Noda is the RabbitMQ server.

“ Cluster ” is a set, in our case of three, RabbitMQ nodes working as a whole.

“ Contour ” is a collection of RabbitMQ clusters, the rules for working with which are determined by the balancer in front of them.

" Balancer ", " Hap " - Haproxy - balancer, performing the function of switching the load on the clusters within the contour. A pair of Haproxy servers running in parallel is used for each loop.

“ Subsystem ” - publisher and / or consumer of messages transmitted through a rabbit

“ SYSTEM ” is a set of Subsystems, which is a unified software and hardware solution used in the Company, characterized by distribution throughout Russia, but having several centers where all information flows and where the main calculations and calculations take place.

SYSTEM - a geographically distributed system - from Khabarovsk and Vladivostok to St. Petersburg and Krasnodar. Architecturally, these are several central contours, divided by the characteristics of the subsystems connected to them.

In a nutshell: for each subscriber action follows the reaction of the Subsystems, which in turn informs the other Subsystems of events and subsequent changes. Messages are generated by any actions with the SYSTEM, not only on the part of subscribers, but also on the part of the Company's employees, and on the part of the Subsystems (a very large number of tasks are performed automatically).

Features of transport in telecom: large, no, not so, BIG stream of various data transmitted through asynchronous transport.

Some Subsystems live on separate Clusters due to the heaviness of message flows — there is simply no other resources left on the cluster, for example, with a message flow of 5-6 thousand messages / second, the volume of transmitted data can reach 170-190 Megabytes / second. With such a load profile, someone else will land on this cluster will lead to sad consequences: since there are not enough resources to process all data at the same time, the rabbit will start to drive incoming connections during the flow - simple publishers will start, with all the consequences for all Subsystems and SYSTEMS in whole

')

Basic requirements for transport:

For example, the very fact of the call, through the asynchronous transport flies several different messages. some messages are intended for subsystems that live in the same circuit, and some are intended for transmission to central nodes. The same message can be claimed by several subsystems, therefore, at the stage of publishing a message in a rabbit, it is copied and sent to different consumers. And in some cases, copying messages is forcedly implemented on the intermediate circuit - when information must be delivered from the circuit in Khabarovsk, to the circuit in Krasnodar. The transfer is performed through one of the central Circuits, where copies of the messages are made, for central recipients.

In addition to events caused by subscriber actions, service messages go through the transport that Subsystems exchange. This results in several thousand different message passing routes, some overlap, some exist in isolation. It is enough to name the number of queues involved in routes on different Contours to understand the approximate scale of the transport map: On central contours 600, 200, 260, 15 ... and on remote Contours 80-100 ...

With such transport involvement, the requirements of 100% availability of all transport hubs no longer seem excessive. We turn to the implementation of these requirements.

In addition to RabbitMQ itself , Haproxy is used for load balancing and providing automatic response to emergency situations.

A few words about the software and hardware environment in which our rabbits exist:

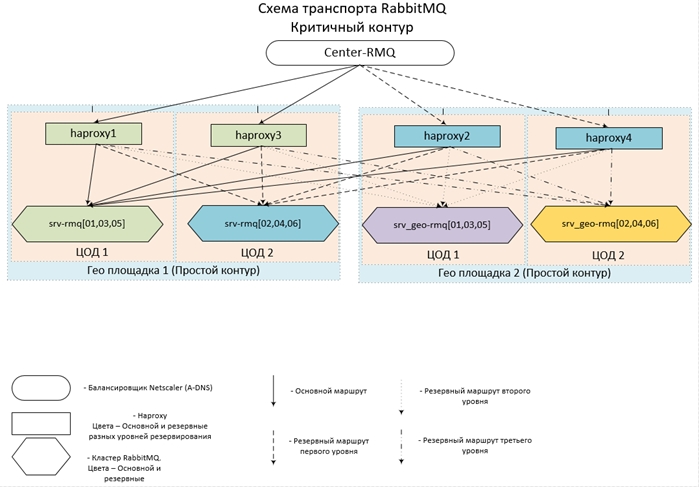

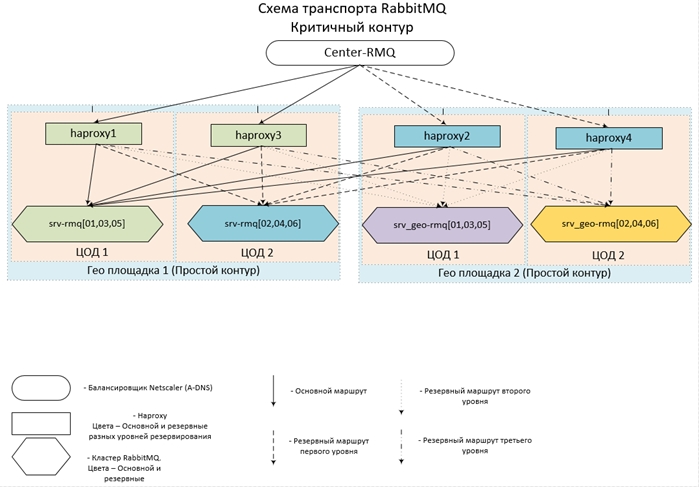

It looks conceived (and realized) all this about like this:

Now some configs.

The first section of the front describes the entry point - leading to the main cluster, the second section is designed for balancing the reserve level. If you simply describe in the backend section all backup servers of rabbits (backup instruction), then it will work the same way - if the main cluster is completely unavailable, the connections will go to the backup, however, all connections will go to FIRST in the backup server list. To ensure load balancing on all backup nodes, we are introducing one more front, which we make available only from localhost and assign it as the backup server.

The given example describes the balancing of the remote Circuit - which operates within two data centers: the server srv-rmq {01.03.05} - live in data center No. 1, srv-rmq {02.04.06} - in data center No. 2. Thus, to implement the four-zod solution, we only need to add two more local fronts and two backend sections of the corresponding rabbit servers.

The balancer behavior with this configuration is the following: While at least one primary server is alive, we use it. If the main servers are not available, we work with the reserve. If at least one primary server becomes available, all connections to the backup servers are broken and when the connection is restored, they already fall on the main cluster.

Operating experience of this configuration shows almost 100% availability of each of the circuits. This solution requires the Subsystems to be completely legitimate and simple: to be able to reconnect with the rabbit after breaking the connection.

So, we have provided load balancing for an arbitrary number of Clusters and automatic switching between them, it's time to go directly to the rabbits.

Each Cluster is created from three nodes, as practice shows - the most optimal number of nodes, which ensures the optimal balance of availability / resiliency / speed. Since the rabbit does not scale horizontally (cluster performance is equal to the performance of the slowest server), we create all nodes with the same, optimal parameters using CPU / Mem / Hdd. We arrange the servers as close as possible to each other - in our case, we write down virtual machines within the same farm.

As for the preconditions, following which by the Subsystems will ensure the most stable operation and fulfillment of the requirement to preserve incoming messages:

We get a configured (at the level of the configuration file and settings in the rabbit itself) cluster, which maximally ensures the availability and safety of data. By this we implement the requirement - ensuring the availability and security of data ... in most cases.

There are several points that should be taken into account when operating such highly loaded systems:

What no setting can protect against is a collapsed mnesia base - unfortunately, it is happening with a non-zero probability. Not global failures (for example, complete failure of the entire data center - the load will simply switch to another cluster) lead to this deplorable result, but failures are more local - within the same network segment.

And it is terrible local network failures, because emergency shutdown of one or two nodes will not lead to fatal consequences - just all requests will go to one node, and as we remember, performance depends on the performance of the node itself. Network failures (we do not take into account small interruptions of communication - they are experienced without serious consequences), lead to a situation where the nodes start the synchronization process between themselves and then the connection breaks again and again for a few seconds.

For example, multiple blinking of the network, and with a periodicity of more than 5 seconds (this is exactly the timeout set in the Hap settings, you can of course play around, but to check the effectiveness you will need to repeat the failure, which nobody wants).

One - two such iterations a cluster can still withstand, but more - the chances are already minimal. In such a situation, stopping a dropped node can save, but it's almost impossible to do it manually. More often, the result is not just a dropout of the node from the cluster with the “Network Partition” message, but also a picture when the data on the part of the queues lived just this node and did not have time to synchronize for the others. Visually - in the data on the queues is NaN .

And this is already an unambiguous signal - to switch to the backup cluster. Switching will provide hap, you only need to stop the rabbits on the main cluster - a matter of a few minutes. As a result, we get the restoration of transport performance and we can safely proceed to the analysis of the accident and its elimination.

In order to remove a damaged cluster from under load, in order to prevent further degradation, the simplest thing is to make the rabbit work on ports other than 5672. Since we have Hapa who monitor the rabbits in the regular port, its offset, for example, at 5673 in the settings of the rabbit, it will allow the cluster to run completely painlessly and try to restore its working capacity and the messages remaining on it.

We do in a few steps:

At startup, there will be a restructuring of the indexes and in the overwhelming majority of cases all data is restored in full. Unfortunately, failures occur as a result of which one has to physically delete all messages from the disk, leaving only the configuration — the directories msg_store_persistent , msg_store_transient , queues (for version 3.6) or msg_stores (for version 3.7) are deleted in the database folder.

After such radical therapy, the cluster is launched with preservation of the internal structure, but without messages.

And the most unpleasant option (it was observed once): The damage to the base was such that it was necessary to completely remove the entire base and rebuild the cluster from scratch.

For the convenience of managing and updating rabbits, not a ready-made assembly in rpm is used, but a rabbit disassembled using cpio and reconfigured (changed paths in scripts). The main difference: it does not require root rights for installation / configuration, is not installed on the system (the reassembled rabbit is perfectly packaged in tgz) and runs from any user. This approach allows flexibility to update versions (if it does not require a complete cluster shutdown - in this case, simply switch to the backup cluster and update, not forgetting to specify the offset port for operation). It is even possible to launch several instances of RabbitMQ on one machine — the test is very convenient for tests — you can deploy a reduced architectural copy of a combat zoo.

As a result, shamanism with cpio and paths in scripts received an assembly option: two rabbitmq-base folders (in the original assembly - mnesia folder) and rabbimq-main - put all the necessary scripts for both the rabbit and erlang.

In rabbimq-main / bin - symlinks to the rabbit and erlang scripts and the rabbit tracking script (description below).

In rabbimq-main / init.d - the rabbitmq-server script through which the logs start / stop / rotate; in lib, the rabbit itself; in lib64 - erlang (a truncated version is used, only for rabbit operation, erlang version).

It is extremely easy to update the resulting assembly when new versions are released - add the contents of rabbimq-main / lib and rabbimq-main / lib64 from new versions and replace the symlinks in the bin. If the update also affects control scripts, simply changing the paths to ours in them.

The solid advantage of this approach is the complete continuity of versions - all paths, scripts, control commands remain unchanged, which allows you to use any self-written service scripts without finishing each version.

Since the fall of rabbits, an event, though rare, is happening, it was necessary to embody a mechanism for tracking their well-being - raising in case of a fall (while maintaining the logs of the reasons for the fall). The fall of the node in 99% of cases is accompanied by a log entry, even kill and it leaves traces; this allowed monitoring the state of the crawl with a simple script.

For versions 3.6 and 3.7, the script is slightly different due to the differences in the log entries.

We start in the crontab account under which the rabbit will work (by default rabbitmq) the execution of this script (script name: check_and_run) every minute (for a start we ask the admin to give the account the rights to use crontab, we do it ourselves):

* / 1 * * * * ~ / rabbitmq-main / bin / check_and_run

The second point in the use of the reassembled crawl is the logging of logs.

Since we are not tied to the logrotate of the system - we use the functionality provided by the developer: rabbitmq-server script from init.d (for version 3.6)

Making minor changes to rotate_logs_rabbitmq ()

Add:

The result of running the rabbitmq-server script with the rotate-logs key: logs are compressed with gzip and stored only for the last 30 days. http_api - the path where the rabbit adds http logs - is configured in the configuration file: {rabbitmq_management, [{rates_mode, detailed}, {http_log_dir, path_to_logs / http_api "}]}

At the same time I pay attention to {rates_mode, detailed } - the option somewhat increases the load, but it allows you to see on the WEB interface (and thus get through the API) information about who publishes the messages in the exchanges. Information is extremely necessary, because all connections go through the balancer - we will see only the IP of the balancers themselves. And if all the Subsystems that work with the rabbit are puzzled to fill in the “Client properties” parameters in the properties of their connections to rabbits, then it will be possible at the connection level to get detailed information about who exactly where and with what intensity publishes messages.

With the release of new versions 3.7, there was a complete rejection of the rabbimq-server script in init.d. In order to facilitate operation (uniformity of control commands regardless of the rabbit version) and smoother transition between versions, we continue to use this script in the reassembled rabbit. It is true again: we ’ll change rotate_logs_rabbitmq () a bit, since in 3.7 the log naming mechanism after rotation was changed:

Now it remains only to add a task to rotate logs in crontab - for example, every day at 23-00:

00 23 * * * ~ / rabbitmq-main / init.d / rabbitmq-server rotate-logs

Let us turn to the tasks that need to be addressed in the framework of the operation of the "rabbit farm":

The operation of our zoo and the solution of the voiced tasks by means of the supplied rabbitmq_management staff plug-in is possible, but extremely inconvenient, which is why the shell was developed and implemented to control the entire diversity of rabbits .

Noda is the RabbitMQ server.

“ Cluster ” is a set, in our case of three, RabbitMQ nodes working as a whole.

“ Contour ” is a collection of RabbitMQ clusters, the rules for working with which are determined by the balancer in front of them.

" Balancer ", " Hap " - Haproxy - balancer, performing the function of switching the load on the clusters within the contour. A pair of Haproxy servers running in parallel is used for each loop.

“ Subsystem ” - publisher and / or consumer of messages transmitted through a rabbit

“ SYSTEM ” is a set of Subsystems, which is a unified software and hardware solution used in the Company, characterized by distribution throughout Russia, but having several centers where all information flows and where the main calculations and calculations take place.

SYSTEM - a geographically distributed system - from Khabarovsk and Vladivostok to St. Petersburg and Krasnodar. Architecturally, these are several central contours, divided by the characteristics of the subsystems connected to them.

What is the task of transport in the realities of telecom?

In a nutshell: for each subscriber action follows the reaction of the Subsystems, which in turn informs the other Subsystems of events and subsequent changes. Messages are generated by any actions with the SYSTEM, not only on the part of subscribers, but also on the part of the Company's employees, and on the part of the Subsystems (a very large number of tasks are performed automatically).

Features of transport in telecom: large, no, not so, BIG stream of various data transmitted through asynchronous transport.

Some Subsystems live on separate Clusters due to the heaviness of message flows — there is simply no other resources left on the cluster, for example, with a message flow of 5-6 thousand messages / second, the volume of transmitted data can reach 170-190 Megabytes / second. With such a load profile, someone else will land on this cluster will lead to sad consequences: since there are not enough resources to process all data at the same time, the rabbit will start to drive incoming connections during the flow - simple publishers will start, with all the consequences for all Subsystems and SYSTEMS in whole

')

Basic requirements for transport:

- Availability of transport should be 99.99%. In practice, this translates into a 24/7 job requirement and the ability to automatically respond to any emergencies.

- Data security:% of lost messages on the transport should strive to 0.

For example, the very fact of the call, through the asynchronous transport flies several different messages. some messages are intended for subsystems that live in the same circuit, and some are intended for transmission to central nodes. The same message can be claimed by several subsystems, therefore, at the stage of publishing a message in a rabbit, it is copied and sent to different consumers. And in some cases, copying messages is forcedly implemented on the intermediate circuit - when information must be delivered from the circuit in Khabarovsk, to the circuit in Krasnodar. The transfer is performed through one of the central Circuits, where copies of the messages are made, for central recipients.

In addition to events caused by subscriber actions, service messages go through the transport that Subsystems exchange. This results in several thousand different message passing routes, some overlap, some exist in isolation. It is enough to name the number of queues involved in routes on different Contours to understand the approximate scale of the transport map: On central contours 600, 200, 260, 15 ... and on remote Contours 80-100 ...

With such transport involvement, the requirements of 100% availability of all transport hubs no longer seem excessive. We turn to the implementation of these requirements.

How we solve the tasks

In addition to RabbitMQ itself , Haproxy is used for load balancing and providing automatic response to emergency situations.

A few words about the software and hardware environment in which our rabbits exist:

- All rabbit servers are virtual, with parameters of 8-12 CPU, 16 Gb Mem, 200 Gb HDD. As experience has shown, even the use of creepy non-virtual servers for 90 cores and a bunch of RAM provides a small performance boost at a significantly higher cost. Versions used: 3.6.6 (in practice - the most stable of 3.6) with an erlang of 18.3, 3.7.6 with an erlang of 20.1.

- For Haproxy, the requirements are much lower: 2 CPU, 4 Gb Mem, haproxy version - 1.8 stable. Resource load on all haproxy servers does not exceed 15% CPU / Mem.

- The entire zoo is located in 14 data centers at 7 sites throughout the country, united in a single network. In each of the data centers there is a cluster of three nods and one hap.

- For remote circuits, 2 data centers are used; for each of the central circuits, 4 each.

- Central Contours interact both with each other and with remote Contours, in turn, remote Contours work only with central ones, they do not have a direct connection between each other.

- The configurations of Hapov and Clusters within one Contour are completely identical. The entry point for each Contour is a pseudonym for several A-DNS records. Thus, in order not to happen, at least one hap and at least one of the clusters (at least one node in the cluster) will be available in each circuit. Since the case of failure of even 6 servers in two data centers at the same time is extremely unlikely, the availability is close to 100%.

It looks conceived (and realized) all this about like this:

Now some configs.

Haproxy configuration

| frontend center-rmq_5672 | ||

| bind | *: 5672 | |

| mode | tcp | |

| maxconn | 10,000 | |

| timeout client | 3h | |

| option | tcpka | |

| option | tcplog | |

| default_backend | center-rmq_5672 | |

| frontend center-rmq_5672_lvl_1 | ||

| bind | localhost: 56721 | |

| mode | tcp | |

| maxconn | 10,000 | |

| timeout client | 3h | |

| option | tcpka | |

| option | tcplog | |

| default_backend | center-rmq_5672_lvl_1 | |

| backend center-rmq_5672 | ||

| balance | leastconn | |

| mode | tcp | |

| fullconn | 10,000 | |

| timeout | server 3h | |

| server | srv-rmq01 10.10.10.10II672 check inter 5s rise 2 fall 3 on-marked-up shutdown-backup-sessions | |

| server | srv-rmq03 10.10.10.11 Lower672 check inter 5s rise 2 fall 3 on-marked-up shutdown-backup-sessions | |

| server | srv-rmq05 10.10.10.12 Low672 check inter 5s rise 2 fall 3 on-marked-up shutdown-backup-sessions | |

| server | localhost 127.0.0.1 Redu6721 check inter 5s rise 2 fall 3 backup on-marked-down shutdown sessions | |

| backend center-rmq_5672_lvl_1 | ||

| balance | leastconn | |

| mode | tcp | |

| fullconn | 10,000 | |

| timeout | server 3h | |

| server | srv-rmq02 10.10.10.13 Low672 check inter 5s rise 2 fall 3 on-marked-up shutdown-backup-sessions | |

| server | srv-rmq04 10.10.10.14II672 check inter 5s rise 2 fall 3 on-marked-up shutdown-backup-sessions | |

| server | srv-rmq06 10.10.10.5.56767 check inter 5s rise 2 fall 3 on-marked-up shutdown-backup-sessions |

The first section of the front describes the entry point - leading to the main cluster, the second section is designed for balancing the reserve level. If you simply describe in the backend section all backup servers of rabbits (backup instruction), then it will work the same way - if the main cluster is completely unavailable, the connections will go to the backup, however, all connections will go to FIRST in the backup server list. To ensure load balancing on all backup nodes, we are introducing one more front, which we make available only from localhost and assign it as the backup server.

The given example describes the balancing of the remote Circuit - which operates within two data centers: the server srv-rmq {01.03.05} - live in data center No. 1, srv-rmq {02.04.06} - in data center No. 2. Thus, to implement the four-zod solution, we only need to add two more local fronts and two backend sections of the corresponding rabbit servers.

The balancer behavior with this configuration is the following: While at least one primary server is alive, we use it. If the main servers are not available, we work with the reserve. If at least one primary server becomes available, all connections to the backup servers are broken and when the connection is restored, they already fall on the main cluster.

Operating experience of this configuration shows almost 100% availability of each of the circuits. This solution requires the Subsystems to be completely legitimate and simple: to be able to reconnect with the rabbit after breaking the connection.

So, we have provided load balancing for an arbitrary number of Clusters and automatic switching between them, it's time to go directly to the rabbits.

Each Cluster is created from three nodes, as practice shows - the most optimal number of nodes, which ensures the optimal balance of availability / resiliency / speed. Since the rabbit does not scale horizontally (cluster performance is equal to the performance of the slowest server), we create all nodes with the same, optimal parameters using CPU / Mem / Hdd. We arrange the servers as close as possible to each other - in our case, we write down virtual machines within the same farm.

As for the preconditions, following which by the Subsystems will ensure the most stable operation and fulfillment of the requirement to preserve incoming messages:

- Work with the rabbit is only under the protocol amqp / amqps - through balancing. Authorization under local accounts - within each Cluster (and of the Outline as a whole)

- Subsystems are connected to the rabbit in the passive mode: No manipulation of the entities of the rabbits (creation of queues / exchanges / binds) is allowed and limited to the level of account rights - we simply do not give permission to configure.

- All necessary entities are created centrally, not by means of Subsystems, and on all Contour Clusters are done in the same way - to ensure automatic switching to the backup Cluster and back. Otherwise, we can get a picture: they have switched to the reserve, but there is no queue or bind there, and we can get either a connection error or a message loss to choose from.

Now directly settings on rabbits:

- Local KMs do not have access to the Web interface.

- Access to the Web is organized through LDAP - we integrate with AD and we get logging who and where on the webcam went. At the configuration level, we restrict the rights of the AD accounts, not only do we require being in a certain group, so we give only the rights to “look”. Monitoring groups are more than enough. And we assign the rights of the administrator to another group in AD, thus the range of influence on transport is strongly limited.

- To facilitate administration and tracking:

At all VHOST, we immediately hang a level 0 policy with application to all queues (pattern:. *):- ha-mode: all - store all data on all nodes of the cluster, slows down the processing of messages, but ensures their safety and availability.

- ha-sync-mode: automatic - we instruct the crawler to automatically synchronize data on all nodes of the cluster: the safety and availability of data also increases.

- queue-mode: lazy - perhaps one of the most useful options that appeared in rabbits from version 3.6 - the immediate recording of messages on the HDD. This option drastically reduces the consumption of RAM and increases the data integrity when the node stops or falls or the cluster as a whole.

- Settings in the configuration file ( rabbitmq-main / conf / rabbitmq.config ):

- Section rabbit : {vm_memory_high_watermark_paging_ratio, 0.5} - the threshold for downloading messages to disk is 50%. When lazy is on, it serves more like insurance when we draw a policy, for example, level 1, in which we forget to include lazy .

- {vm_memory_high_watermark, 0.95} - we limit the crawl to 95% of all RAM, since only the rabbit lives on the servers, there is no point in imposing more stringent restrictions. 5% “broad gesture” so be it - leave the OS, monitoring and other useful trifles. Since this value is the upper limit - there is enough resources for everyone.

- {cluster_partition_handling, pause_minority} - describes the behavior of the cluster when a Network Partition occurs, for three or more node clusters, it is recommended that this flag - allows the cluster to recover itself.

- {disk_free_limit, "500MB"} - everything is simple when there is 500 MB of free disk space - the publication of messages will be stopped, only reading will be available.

- {auth_backends, [rabbit_auth_backend_internal, rabbit_auth_backend_ldap]} - authorization order in rabbits: First, check for the presence of KM in the local database and if not found - go to the LDAP server.

- Section rabbitmq_auth_backend_ldap - configuration of interaction with AD: {servers, ["srv_dc1", "srv_dc2"]} - list of domain controllers on which authentication will take place.

- The parameters that directly describe the user in AD, the LDAP port, etc., are very individual and are described in detail in the documentation.

- The most important thing for us is a description of the rights and restrictions on the administration and access to the web page interface: tag_queries:

[{administrator, {in_group, "cn = rabbitmq-admins, ou = GRP, ou = GRP_MAIN, dc = My_domain, dc = ru"}},

{monitoring,

{in_group, "cn = rabbitmq-web, ou = GRP, ou = GRP_MAIN, dc = My_domain, dc = en"}

}] - this construction provides administrative privileges to all users of the rabbitmq-admins group and monitoring rights (minimum sufficient for access to view) for the rabbitmq-web group. - resource_access_query :

{for,

[{permission, configure, {in_group, "cn = rabbitmq-admins, ou = GRP, ou = GRP_MAIN, dc = My_domain, dc = ru"}},

{permission, write, {in_group, “cn = rabbitmq-admins, ou = GRP, ou = GRP_MAIN, dc = My_domain, dc = ru”}},

{permission, read, {constant, true}}

]

} - we provide configuration and write permissions only to a group of administrators, all others who successfully authorize permissions are read-only - may well read messages via the Web interface.

We get a configured (at the level of the configuration file and settings in the rabbit itself) cluster, which maximally ensures the availability and safety of data. By this we implement the requirement - ensuring the availability and security of data ... in most cases.

There are several points that should be taken into account when operating such highly loaded systems:

- All additional properties of queues (TTL, expire, max-length, etc.) are better organized by politicians, and not hung with parameters when creating queues. It turns out a flexibly customizable structure that can be customized on the fly to changing realities.

- Using TTL. The longer the queue, the higher the load on the CPU. In order to prevent the “punching of the ceiling”, it is better to limit the queue length in max-length.

- In addition to the rabbit itself, a certain number of service applications are spinning on the server, which, oddly enough, also requires CPU resources. A greedy rabbit, by default, occupies all the available cores ... It may turn out to be an unpleasant situation: the struggle for resources, which will easily lead to brakes on a rabbit. To avoid the occurrence of such a situation, you can, for example, like this: Change the erlang launch parameters — enter a forced limit on the number of used cores. We do this as follows: find the rabbitmq-env file, look for the SERVER_ERL_ARGS = parameter and add the + sct L0-Xc0-X + SY: Y to it. Where X is the number of 1 cores (counting starts from 0), Y is the Number of cores -1 (counting from 1). + sct L0-Xc0-X - changes the binding to the cores, + SY: Y - changes the number of shearlers triggered by the Erlang. So for a system of 8 cores, the added parameters will take the form: + sct L0-6c0-6 + S 7: 7. By this, we give the rabbit only 7 cores and expect that the OS will launch other processes optimally and hang them on an unloaded core.

The nuances of operating the resulting zoo

What no setting can protect against is a collapsed mnesia base - unfortunately, it is happening with a non-zero probability. Not global failures (for example, complete failure of the entire data center - the load will simply switch to another cluster) lead to this deplorable result, but failures are more local - within the same network segment.

And it is terrible local network failures, because emergency shutdown of one or two nodes will not lead to fatal consequences - just all requests will go to one node, and as we remember, performance depends on the performance of the node itself. Network failures (we do not take into account small interruptions of communication - they are experienced without serious consequences), lead to a situation where the nodes start the synchronization process between themselves and then the connection breaks again and again for a few seconds.

For example, multiple blinking of the network, and with a periodicity of more than 5 seconds (this is exactly the timeout set in the Hap settings, you can of course play around, but to check the effectiveness you will need to repeat the failure, which nobody wants).

One - two such iterations a cluster can still withstand, but more - the chances are already minimal. In such a situation, stopping a dropped node can save, but it's almost impossible to do it manually. More often, the result is not just a dropout of the node from the cluster with the “Network Partition” message, but also a picture when the data on the part of the queues lived just this node and did not have time to synchronize for the others. Visually - in the data on the queues is NaN .

And this is already an unambiguous signal - to switch to the backup cluster. Switching will provide hap, you only need to stop the rabbits on the main cluster - a matter of a few minutes. As a result, we get the restoration of transport performance and we can safely proceed to the analysis of the accident and its elimination.

In order to remove a damaged cluster from under load, in order to prevent further degradation, the simplest thing is to make the rabbit work on ports other than 5672. Since we have Hapa who monitor the rabbits in the regular port, its offset, for example, at 5673 in the settings of the rabbit, it will allow the cluster to run completely painlessly and try to restore its working capacity and the messages remaining on it.

We do in a few steps:

- We stop all the nodes of the failed cluster - hap will switch the load to the backup cluster

- Add RABBITMQ_NODE_PORT = 5673 to the file rabbitmq-env - when the rabbit is started, these settings will be pulled, and the Web interface will still work on 15672.

- We specify the new port on all nodes of the untimely deceased cluster and launch them.

At startup, there will be a restructuring of the indexes and in the overwhelming majority of cases all data is restored in full. Unfortunately, failures occur as a result of which one has to physically delete all messages from the disk, leaving only the configuration — the directories msg_store_persistent , msg_store_transient , queues (for version 3.6) or msg_stores (for version 3.7) are deleted in the database folder.

After such radical therapy, the cluster is launched with preservation of the internal structure, but without messages.

And the most unpleasant option (it was observed once): The damage to the base was such that it was necessary to completely remove the entire base and rebuild the cluster from scratch.

For the convenience of managing and updating rabbits, not a ready-made assembly in rpm is used, but a rabbit disassembled using cpio and reconfigured (changed paths in scripts). The main difference: it does not require root rights for installation / configuration, is not installed on the system (the reassembled rabbit is perfectly packaged in tgz) and runs from any user. This approach allows flexibility to update versions (if it does not require a complete cluster shutdown - in this case, simply switch to the backup cluster and update, not forgetting to specify the offset port for operation). It is even possible to launch several instances of RabbitMQ on one machine — the test is very convenient for tests — you can deploy a reduced architectural copy of a combat zoo.

As a result, shamanism with cpio and paths in scripts received an assembly option: two rabbitmq-base folders (in the original assembly - mnesia folder) and rabbimq-main - put all the necessary scripts for both the rabbit and erlang.

In rabbimq-main / bin - symlinks to the rabbit and erlang scripts and the rabbit tracking script (description below).

In rabbimq-main / init.d - the rabbitmq-server script through which the logs start / stop / rotate; in lib, the rabbit itself; in lib64 - erlang (a truncated version is used, only for rabbit operation, erlang version).

It is extremely easy to update the resulting assembly when new versions are released - add the contents of rabbimq-main / lib and rabbimq-main / lib64 from new versions and replace the symlinks in the bin. If the update also affects control scripts, simply changing the paths to ours in them.

The solid advantage of this approach is the complete continuity of versions - all paths, scripts, control commands remain unchanged, which allows you to use any self-written service scripts without finishing each version.

Since the fall of rabbits, an event, though rare, is happening, it was necessary to embody a mechanism for tracking their well-being - raising in case of a fall (while maintaining the logs of the reasons for the fall). The fall of the node in 99% of cases is accompanied by a log entry, even kill and it leaves traces; this allowed monitoring the state of the crawl with a simple script.

For versions 3.6 and 3.7, the script is slightly different due to the differences in the log entries.

For version 3.6

#!/usr/bin/python import subprocess import os import datetime import zipfile def LastRow(fileName,MAX_ROW=200): with open(fileName,'rb') as f: f.seek(-min(os.path.getsize(fileName),MAX_ROW),2) return (f.read().splitlines())[-1] if os.path.isfile('/data/logs/rabbitmq/startup_log'): if b'FAILED' in LastRow('/data/logs/rabbitmq/startup_log'): proc = subprocess.Popen("ps x|grep rabbitmq-server|grep -v 'grep'", shell=True, stdout=subprocess.PIPE) out = proc.stdout.readlines() if str(out) == '[]': cur_dt=datetime.datetime.now() try: os.stat('/data/logs/rabbitmq/after_crush') except: os.mkdir('/data/logs/rabbitmq/after_crush') z=zipfile.ZipFile('/data/logs/rabbitmq/after_crush/repair_log'+'-'+str(cur_dt.day).zfill(2)+str(cur_dt.month).zfill(2)+str(cur_dt.year)+'_'+str(cur_dt.hour).zfill(2)+'-'+str(cur_dt.minute).zfill(2)+'-'+str(cur_dt.second).zfill(2)+'.zip','a') z.write('/data/logs/rabbitmq/startup_err','startup_err') proc = subprocess.Popen("~/rabbitmq-main/init.d/rabbitmq-server start", shell=True, stdout=subprocess.PIPE) out = proc.stdout.readlines() z.writestr('res_restart.log',str(out)) z.close() my_file = open("/data/logs/rabbitmq/run.time", "a") my_file.write(str(cur_dt)+"\n") my_file.close() For 3.7, only two lines change

if (os.path.isfile('/data/logs/rabbitmq/startup_log')) and (os.path.isfile('/data/logs/rabbitmq/startup_err')): if ((b' OK ' in LastRow('/data/logs/rabbitmq/startup_log')) or (b'FAILED' in LastRow('/data/logs/rabbitmq/startup_log'))) and not (b'Gracefully halting Erlang VM' in LastRow('/data/logs/rabbitmq/startup_err')): We start in the crontab account under which the rabbit will work (by default rabbitmq) the execution of this script (script name: check_and_run) every minute (for a start we ask the admin to give the account the rights to use crontab, we do it ourselves):

* / 1 * * * * ~ / rabbitmq-main / bin / check_and_run

The second point in the use of the reassembled crawl is the logging of logs.

Since we are not tied to the logrotate of the system - we use the functionality provided by the developer: rabbitmq-server script from init.d (for version 3.6)

Making minor changes to rotate_logs_rabbitmq ()

Add:

find ${RABBITMQ_LOG_BASE}/http_api/*.log.* -maxdepth 0 -type f ! -name "*.gz" | xargs -i gzip --force {} find ${RABBITMQ_LOG_BASE}/*.log.*.back -maxdepth 0 -type f | xargs -i gzip {} find ${RABBITMQ_LOG_BASE}/*.gz -type f -mtime +30 -delete find ${RABBITMQ_LOG_BASE}/http_api/*.gz -type f -mtime +30 -delete The result of running the rabbitmq-server script with the rotate-logs key: logs are compressed with gzip and stored only for the last 30 days. http_api - the path where the rabbit adds http logs - is configured in the configuration file: {rabbitmq_management, [{rates_mode, detailed}, {http_log_dir, path_to_logs / http_api "}]}

At the same time I pay attention to {rates_mode, detailed } - the option somewhat increases the load, but it allows you to see on the WEB interface (and thus get through the API) information about who publishes the messages in the exchanges. Information is extremely necessary, because all connections go through the balancer - we will see only the IP of the balancers themselves. And if all the Subsystems that work with the rabbit are puzzled to fill in the “Client properties” parameters in the properties of their connections to rabbits, then it will be possible at the connection level to get detailed information about who exactly where and with what intensity publishes messages.

With the release of new versions 3.7, there was a complete rejection of the rabbimq-server script in init.d. In order to facilitate operation (uniformity of control commands regardless of the rabbit version) and smoother transition between versions, we continue to use this script in the reassembled rabbit. It is true again: we ’ll change rotate_logs_rabbitmq () a bit, since in 3.7 the log naming mechanism after rotation was changed:

mv ${RABBITMQ_LOG_BASE}/$NODENAME.log.0 ${RABBITMQ_LOG_BASE}/$NODENAME.log.$(date +%Y%m%d-%H%M%S).back mv ${RABBITMQ_LOG_BASE}/$(echo $NODENAME)_upgrade.log.0 ${RABBITMQ_LOG_BASE}/$(echo $NODENAME)_upgrade.log.$(date +%Y%m%d-%H%M%S).back find ${RABBITMQ_LOG_BASE}/http_api/*.log.* -maxdepth 0 -type f ! -name "*.gz" | xargs -i gzip --force {} find ${RABBITMQ_LOG_BASE}/*.log.* -maxdepth 0 -type f ! -name "*.gz" | xargs -i gzip --force {} find ${RABBITMQ_LOG_BASE}/*.gz -type f -mtime +30 -delete find ${RABBITMQ_LOG_BASE}/http_api/*.gz -type f -mtime +30 -delete Now it remains only to add a task to rotate logs in crontab - for example, every day at 23-00:

00 23 * * * ~ / rabbitmq-main / init.d / rabbitmq-server rotate-logs

Let us turn to the tasks that need to be addressed in the framework of the operation of the "rabbit farm":

- Manipulations with entities of rabbits - creation / removal of entities of a rabbit: ekschendzhey, turns, binds, chauvelov, users, the politician. And to do this is absolutely identical on all Contour Clusters.

- After switching to / from the backup Cluster, it is required to transfer messages that remain on it to the current Cluster.

- Backup configurations of all Clusters of all Contours

- Full synchronization of Cluster configurations within the contour

- Stop / start rabbits

- Analyze current data streams: do all messages go and, if they do, go where they should or…

- Find and catch passing messages by any criteria.

The operation of our zoo and the solution of the voiced tasks by means of the supplied rabbitmq_management staff plug-in is possible, but extremely inconvenient, which is why the shell was developed and implemented to control the entire diversity of rabbits .

Source: https://habr.com/ru/post/434016/

All Articles