[Illustrated] Networking Guide for Kubernetes. Part 3

Translator's note: This article continues the series on the basics of networking in Kubernetes, explained in an accessible way and with helpful illustrations (although this particular part has practically none). When translating the two preceding parts of the series, we merged them into one publication, which described the K8s network model (communication within and between nodes) and overlay networks. Reading it first is advisable (the author himself recommends it). This continuation covers Kubernetes services and the handling of outgoing and incoming traffic.

NB : For the convenience of the author, the text is supplemented with references (mainly to the official K8s documentation).

Due to the constantly changing, dynamic nature of Kubernetes and distributed systems in general, pods (and, as a result, their IP addresses) are also constantly changing. The reasons for this vary from incoming updates to achieve the desired state and events leading to scaling to unexpected drops of the pod or node. Therefore, pod's IP addresses cannot be directly used for communication.

')

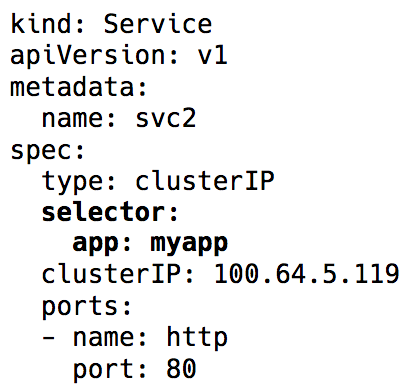

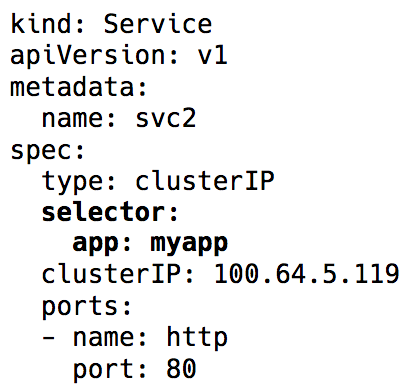

The service enters the service in Kubernetes - a virtual IP with a group of pod's IP addresses used as endpoints and identifiable labels using labels ( select selectors ). This service works as a virtual load balancer, the IP address of which remains constant, and at the same time, the IP addresses of the pod presented to them can change constantly.

Label selector in the Kubernetes Service object

Behind the entire implementation of this virtual IP are iptables rules (the latest versions of Kubernetes also have the option of using IPVS, but this is a topic for a separate discussion), which are controlled by the Kubernetes component called kube-proxy . However, his name in today's reality is misleading. Kube-proxy was actually used as a proxy in the days before Kubernetes v1.0 release, but this led to a large resource consumption and slowdown due to constant copying operations between the kernel space and user space. Now, this is just a controller - like many other controllers in Kubernetes. It monitors the API server for changes to endpoints and updates the iptables rules accordingly.

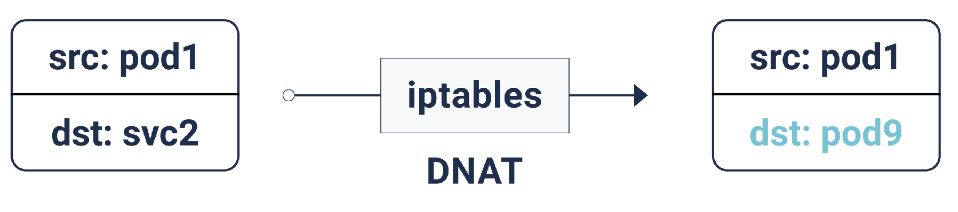

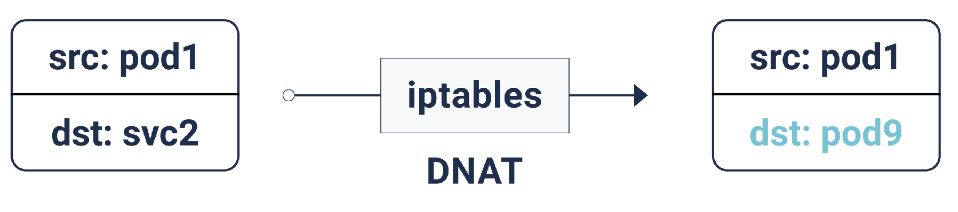

According to these rules, iptables, if the packet is intended for the IP address of the service, DNAT (Destination Network Address Translation) is done for it: this means that its IP address will change from the IP service to one of the endpoints, i.e. One of the pod's IP addresses, which is randomly selected by iptables. This ensures that the load is evenly distributed between the pods.

DNAT in iptables

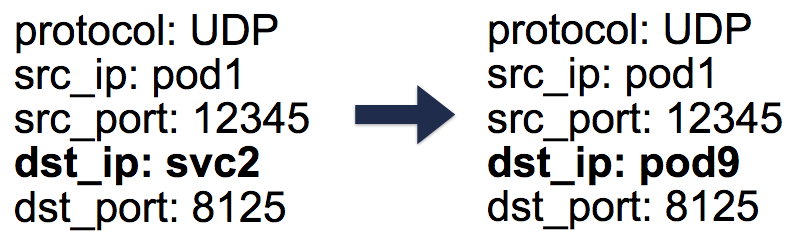

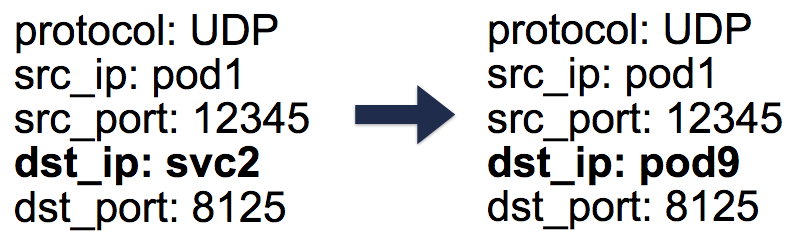

In the case of such a DNAT, the necessary information is stored in the conntrack - connection accounting table in Linux (it stores five-pair translations made by iptables:

Five-way (5-tuple) entries in the conntrack table

So, using Kubernetes services, we can work with the same ports without any conflicts (because it is possible to reassign ports to endpoints). Thanks to this, service discovery is made very simple. It is enough to use the internal DNS and for the hard-code-it hosts services. You can even use variables that are pre-configured in Kubernetes with the host and port of the service.

Tip : Choosing the second path will save you a lot of unnecessary DNS calls!

The Kubernetes services described above operate within a cluster. In practice, applications usually need access to some external API / sites.

In general, nodes can have both private and public IP addresses. For Internet access, a one-to-one NAT is provided for these private and public IP addresses - especially for cloud environments.

For normal communication, the source from the source IP address of the source changes from a private IP node to a public IP for outgoing packets, and for incoming packets in the opposite direction. However, in cases where the connection to the external IP is initiated by the pod, the source IP address is the Pod IP, which the cloud provider’s NAT provider does not know about. Therefore, it simply discards packets with source IP addresses that differ from host IP addresses.

And then, you guessed it, iptables will need us even more! This time, the rules, which are also added by kube-proxy, are performed by SNAT (Source Network Address Translation), also known as IP MASQUERADE (masquerading). The kernel is told to use the IP interface from which the packet comes from instead of the source IP address. A record also appears in conntrack to further perform the inverse operation (un-SNAT) on the response.

Until now, everything was fine. Pods can communicate with each other and with the Internet. However, we still lack the main thing - maintenance of user traffic. Currently there are two ways to implement:

Setting

The type of service

Many other implementations — for example, nginx, traefik, HAProxy, etc. — perform the matching of HTTP hosts / paths with the corresponding backends. With them, LoadBalancer and NodePort are again the entry point for traffic, however there is an advantage here that we only need one Ingress to service the incoming traffic of all services instead of numerous NodePort / LoadBalancers.

Network policies can be thought of as access control lists (security groups / ACLs) for pods.

That's all. In previous installments, we learned the basics of the network at Kubernetes and how overlays work. We now know how Service Abstraction helps in a dynamic cluster and makes the discovery of services truly simple. We also looked at how outgoing / inbound traffic flows and what network policies might be useful for securing a cluster.

Read also in our blog:

NB: For the reader's convenience, the author's text is supplemented with links (mainly to the official K8s documentation).

Cluster dynamics

Because Kubernetes, like distributed systems in general, is dynamic and constantly changing, pods (and therefore their IP addresses) change all the time as well. The reasons range from rolling updates that bring the cluster to its desired state and scaling events to unexpected crashes of a pod or node. Pod IP addresses therefore cannot be relied on directly for communication.


This is where the Kubernetes Service comes in: a virtual IP backed by a group of pod IP addresses that serve as endpoints and are identified by label selectors. A Service acts as a virtual load balancer whose IP address stays constant while the IP addresses of the pods behind it can change at any time.

Label selector in the Kubernetes Service object
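To make the selector idea concrete, here is a minimal sketch of how a label selector could pick a Service's endpoints. It is an illustration only, not the Kubernetes implementation: the `Pod` class, the `select_endpoints` function, and all names and IP addresses are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    """Hypothetical stand-in for a pod: a name, an IP, and its labels."""
    name: str
    ip: str
    labels: dict[str, str] = field(default_factory=dict)


def select_endpoints(selector: dict[str, str], pods: list[Pod]) -> list[str]:
    """Return the IPs of pods whose labels contain every selector key/value pair."""
    if not selector:
        # A Service without a selector gets no automatically managed endpoints.
        return []
    return [
        pod.ip
        for pod in pods
        if all(pod.labels.get(key) == value for key, value in selector.items())
    ]


pods = [
    Pod("web-1", "10.0.1.5", {"app": "web", "tier": "frontend"}),
    Pod("web-2", "10.0.2.7", {"app": "web", "tier": "frontend"}),
    Pod("db-1", "10.0.3.9", {"app": "db"}),
]

# The Service keeps its own virtual IP; only this endpoint list changes
# when pods come and go.
endpoints = select_endpoints({"app": "web"}, pods)
print(endpoints)
```

If `web-2` is replaced by a pod with a new IP but the same labels, rerunning the selection yields the new address while the selector itself stays untouched.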

The whole virtual IP is implemented with iptables rules (recent versions of Kubernetes can also use IPVS, but that is a topic for a separate discussion), which are managed by a Kubernetes component called kube-proxy. Its name is misleading today, however. kube-proxy really did act as a proxy in the days before the Kubernetes v1.0 release, but that consumed a lot of resources and slowed things down because data was constantly copied between kernel space and user space. Now it is just a controller, like many other controllers in Kubernetes: it watches the API server for changes to endpoints and updates the iptables rules accordingly.

According to these iptables rules, a packet destined for the Service IP address undergoes DNAT (Destination Network Address Translation): its destination IP address is changed from the Service IP to one of the endpoints, that is, to one of the pod IP addresses, which iptables picks at random. This spreads the load evenly across the pods.

DNAT in iptables
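The random choice can be sketched as a chain of rules in which rule number i matches with probability 1 / (number of rules still left), which is how a sequential rule list can still produce a uniform pick. The code below is a simulation under that assumption, not real iptables output; `pick_endpoint`, `dnat`, the packet dictionary, and the addresses are hypothetical.

```python
import random
from collections import Counter


def pick_endpoint(endpoints: list[str], rng: random.Random) -> str:
    """Walk the rules in order; rule i matches with probability 1 / remaining."""
    if not endpoints:
        raise ValueError("a Service with no endpoints cannot be DNATed")
    total = len(endpoints)
    for index, endpoint in enumerate(endpoints):
        remaining = total - index
        # The last rule has remaining == 1, so it always matches.
        if rng.random() < 1.0 / remaining:
            return endpoint
    raise AssertionError("unreachable: the last rule always matches")


def dnat(packet: dict, service_ip: str, endpoints: list[str], rng: random.Random) -> dict:
    """Rewrite the destination only for packets addressed to the Service IP."""
    if packet["dst_ip"] != service_ip:
        return packet
    return {**packet, "dst_ip": pick_endpoint(endpoints, rng)}


rng = random.Random(42)
endpoints = ["10.0.1.5", "10.0.2.7", "10.0.3.9"]
packet = {"src_ip": "10.0.1.2", "dst_ip": "10.96.0.10", "dst_port": 80}

# Count where 30,000 simulated packets end up.
hits = Counter(
    dnat(packet, "10.96.0.10", endpoints, rng)["dst_ip"] for _ in range(30_000)
)
print(hits)
```

With three endpoints the rule probabilities are 1/3, 1/2, and 1, which gives each pod the same overall chance of 1/3.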

When such a DNAT takes place, the necessary information is stored in conntrack, the Linux connection tracking table (it stores the 5-tuple translations made by iptables: protocol, srcIP, srcPort, dstIP, dstPort). Everything is arranged so that when the reply comes back, the reverse operation (un-DNAT) can take place, i.e. the source IP is changed back from the Pod IP to the Service IP. Thanks to this, the client does not need to know anything about how packets are handled behind the scenes.

5-tuple entries in the conntrack table
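As a rough illustration of the bookkeeping, the sketch below models a connection table keyed by 5-tuples. It is a toy model, not the kernel's conntrack: the `FiveTuple` and `Conntrack` names and all addresses are hypothetical.

```python
from typing import NamedTuple


class FiveTuple(NamedTuple):
    protocol: str
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


class Conntrack:
    """Toy connection table: remembers how to undo a DNAT on the reply."""

    def __init__(self) -> None:
        # Expected reply tuple (pod -> client) mapped to what the client
        # should see (service -> client).
        self._reply_map: dict[FiveTuple, FiveTuple] = {}

    def dnat(self, original: FiveTuple, pod_ip: str, pod_port: int) -> FiveTuple:
        """Rewrite the destination to the pod and record the reverse mapping."""
        translated = original._replace(dst_ip=pod_ip, dst_port=pod_port)
        expected_reply = FiveTuple(
            original.protocol, pod_ip, pod_port, original.src_ip, original.src_port
        )
        restored_reply = FiveTuple(
            original.protocol,
            original.dst_ip,
            original.dst_port,
            original.src_ip,
            original.src_port,
        )
        self._reply_map[expected_reply] = restored_reply
        return translated

    def un_dnat(self, reply: FiveTuple) -> FiveTuple:
        """Restore the Service IP as the source; untracked packets pass through."""
        return self._reply_map.get(reply, reply)


table = Conntrack()
request = FiveTuple("tcp", "10.0.1.5", 34567, "10.96.0.10", 80)
to_pod = table.dnat(request, "10.0.2.7", 8080)

pod_reply = FiveTuple("tcp", "10.0.2.7", 8080, "10.0.1.5", 34567)
to_client = table.un_dnat(pod_reply)
print(to_pod)
print(to_client)
```

The client sent its request to `10.96.0.10:80` and receives the reply from `10.96.0.10:80`, so the pod address never becomes visible to it.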

So, with Kubernetes services we can use the same ports without any conflicts (because ports can be remapped to the endpoints). This makes service discovery very simple. It is enough to use the internal DNS and hard-code the service hostnames. You can even use the environment variables that Kubernetes preconfigures with the service host and port.

Tip: Taking the second route saves you a lot of unnecessary DNS lookups!
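Both discovery routes can be sketched in a few lines. Kubernetes injects `<NAME>_SERVICE_HOST` and `<NAME>_SERVICE_PORT` variables (service name upper-cased, dashes turned into underscores) for services that already existed when the pod started. The helper names, the `default_port` fallback, and the `cluster.local` cluster domain below are assumptions made for illustration.

```python
import os
from typing import Mapping, Optional


def service_env_prefix(service_name: str) -> str:
    """Turn a service name such as 'redis-primary' into 'REDIS_PRIMARY'."""
    return service_name.upper().replace("-", "_")


def resolve_service(
    service_name: str,
    namespace: str = "default",
    default_port: int = 80,
    environ: Optional[Mapping[str, str]] = None,
) -> tuple[str, int]:
    """Prefer the injected environment variables, fall back to the DNS name."""
    env = os.environ if environ is None else environ
    prefix = service_env_prefix(service_name)
    host = env.get(f"{prefix}_SERVICE_HOST")
    port = env.get(f"{prefix}_SERVICE_PORT")
    if host and port:
        # No DNS lookup needed: this is the Service's virtual IP.
        return host, int(port)
    # Assumed default cluster domain; the port must then be known up front.
    return f"{service_name}.{namespace}.svc.cluster.local", default_port


fake_env = {
    "REDIS_PRIMARY_SERVICE_HOST": "10.96.0.11",
    "REDIS_PRIMARY_SERVICE_PORT": "6379",
}
print(resolve_service("redis-primary", environ=fake_env))
print(resolve_service("my-api", namespace="prod", environ={}))
```

Passing `environ` explicitly keeps the function testable outside a cluster; inside a pod it can simply be omitted.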

Outbound traffic

The Kubernetes services described above work within a cluster. In practice, applications usually also need access to external APIs and websites.

In general, nodes can have both private and public IP addresses. For Internet access, these private and public IP addresses are mapped by a one-to-one NAT, especially in cloud environments.

For normal communication from a node to an external IP, the source IP address is changed from the node's private IP to its public IP for outgoing packets, and the reverse is done for incoming packets. However, when a connection to an external IP is initiated by a pod, the source IP address is the Pod IP, which the cloud provider's NAT mechanism knows nothing about. It therefore simply drops packets whose source IP addresses differ from the node IP addresses.

And here, you guessed it, we need iptables even more! This time the rules, which are also added by kube-proxy, perform SNAT (Source Network Address Translation), also known as IP MASQUERADE. The kernel is told to use the IP of the interface through which the packet leaves instead of the original source IP address. An entry is also added to conntrack so that the reverse operation (un-SNAT) can be performed on the reply.
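A simplified model of masquerading might look like the sketch below. It is a toy, not the kernel's behavior: the `Packet` and `Masquerade` names are hypothetical, and the port handling is deliberately simplified (each connection gets a fresh node port, whereas a real NAT usually tries to keep the original source port).

```python
from typing import NamedTuple


class Packet(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


class Masquerade:
    """Toy SNAT: hide pod addresses behind the node IP and undo it on replies."""

    def __init__(self, node_ip: str, first_port: int = 40000) -> None:
        self.node_ip = node_ip
        self._next_port = first_port
        # (node port, remote IP, remote port) -> (pod IP, pod port)
        self._table: dict[tuple[int, str, int], tuple[str, int]] = {}

    def snat(self, packet: Packet) -> Packet:
        """Replace the pod source address with the node IP and track the mapping."""
        node_port = self._next_port
        self._next_port += 1
        self._table[(node_port, packet.dst_ip, packet.dst_port)] = (
            packet.src_ip,
            packet.src_port,
        )
        return packet._replace(src_ip=self.node_ip, src_port=node_port)

    def un_snat(self, reply: Packet) -> Packet:
        """Send a reply back to the pod that opened the connection."""
        original = self._table.get((reply.dst_port, reply.src_ip, reply.src_port))
        if original is None:
            return reply  # not a tracked connection, leave it untouched
        pod_ip, pod_port = original
        return reply._replace(dst_ip=pod_ip, dst_port=pod_port)


nat = Masquerade(node_ip="192.168.1.10")
outgoing = nat.snat(Packet("10.0.1.5", 5000, "93.184.216.34", 443))
incoming = nat.un_snat(Packet("93.184.216.34", 443, outgoing.src_ip, outgoing.src_port))
print(outgoing)
print(incoming)
```

From the outside, the connection appears to come from the node, so the provider's NAT accepts it; the tracked entry is what lets the reply find its way back to the pod.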

Incoming traffic

So far, so good. Pods can talk to each other and to the Internet. However, we are still missing the main thing: serving user traffic. There are currently two ways to do it:

1. NodePort / Cloud Load Balancer (L4 level: IP and port)

Setting NodePort as the service type assigns the service a nodePort in the range 30000-32767 (the default). This nodePort is open on every node, even when no pod is running on that node. Incoming traffic on this nodePort is sent to one of the pods (which may even be on another node!), again using iptables.

The LoadBalancer service type in cloud environments creates a cloud load balancer (for example, ELB) in front of all nodes, which then works with the same nodePort.

2. Ingress (L7 level: HTTP / TCP)

Many different implementations (for example, nginx, traefik, HAProxy) keep a mapping of HTTP hosts / paths to the corresponding backends. With them, LoadBalancer and NodePort again serve as the entry point for traffic, but the advantage is that a single Ingress can handle the incoming traffic of all services instead of numerous NodePorts / LoadBalancers.
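The host / path matching that such an Ingress implementation performs can be sketched as follows. This is only an illustration of the idea, assuming prefix matching on whole path segments with the longest prefix winning; the `Rule` class, the function names, hosts, and backends are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    host: str
    path_prefix: str
    backend: str  # hypothetical "service:port" label


def path_matches(prefix: str, path: str) -> bool:
    """Match on whole path segments: '/api' covers '/api/v1' but not '/apiary'."""
    if prefix == "/":
        return True
    prefix = prefix.rstrip("/")
    return path == prefix or path.startswith(prefix + "/")


def route(rules: list[Rule], host: str, path: str) -> Optional[str]:
    """Pick the backend of the longest matching prefix for the given host."""
    candidates = [
        rule
        for rule in rules
        if rule.host == host and path_matches(rule.path_prefix, path)
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda rule: len(rule.path_prefix)).backend


rules = [
    Rule("shop.example.com", "/", "web:80"),
    Rule("shop.example.com", "/api", "api:8080"),
    Rule("blog.example.com", "/", "blog:80"),
]

print(route(rules, "shop.example.com", "/api/v1/items"))
print(route(rules, "shop.example.com", "/cart"))
print(route(rules, "unknown.example.com", "/"))
```

One entry point therefore serves three different backends, which is the advantage over exposing each service through its own NodePort or load balancer.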

Network policies

Network policies can be thought of as security groups / access control lists (ACLs) for pods.

NetworkPolicy rules let you allow or deny traffic between pods. Their exact implementation depends on the network layer / CNI, but most of them simply use iptables.
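The ACL analogy can be sketched for ingress traffic as shown below. The model assumes the usual semantics: a pod that no policy selects accepts all traffic, while a selected pod accepts only what at least one of its policies allows. The `IngressPolicy` class and function names are hypothetical, and real policies also cover namespaces, ports, and egress, which are left out here.

```python
from dataclasses import dataclass, field


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """An empty selector matches every pod."""
    return all(labels.get(key) == value for key, value in selector.items())


@dataclass
class IngressPolicy:
    pod_selector: dict[str, str]  # which pods the policy applies to
    allowed_from: list[dict[str, str]] = field(default_factory=list)  # peer selectors


def ingress_allowed(
    policies: list[IngressPolicy],
    src_labels: dict[str, str],
    dst_labels: dict[str, str],
) -> bool:
    """Decide whether traffic from the source pod may reach the destination pod."""
    selecting = [p for p in policies if matches(p.pod_selector, dst_labels)]
    if not selecting:
        return True  # no policy selects the destination: it is not isolated
    return any(
        matches(peer, src_labels)
        for policy in selecting
        for peer in policy.allowed_from
    )


policies = [IngressPolicy({"app": "db"}, [{"app": "api"}])]

print(ingress_allowed(policies, {"app": "api"}, {"app": "db"}))
print(ingress_allowed(policies, {"app": "web"}, {"app": "db"}))
print(ingress_allowed(policies, {"app": "web"}, {"app": "api"}))
```

A policy with an empty `pod_selector` and an empty `allowed_from` list selects every pod and allows nothing, which corresponds to a default-deny setup in this model.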

That's all. In the previous parts we learned the basics of Kubernetes networking and how overlays work. We now know how the Service abstraction helps in a dynamic cluster and makes service discovery truly simple. We also looked at how outgoing and incoming traffic flows and how network policies can help secure a cluster.

P.S. from the translator

Read also in our blog:

- "An illustrative guide to networking in Kubernetes. Parts 1 and 2";

- "Behind the scenes of the network in Kubernetes";

- "Experiments with kube-proxy and unavailability of a node in Kubernetes";

- "Comparison of the performance of network solutions for Kubernetes";

- "Container Networking Interface (CNI): network interface and standard for Linux containers";

- "Conduit is a lightweight service mesh for Kubernetes".

Source: https://habr.com/ru/post/433382/
