Wanted: the perfect babysitter. Must pass an AI scan for respect and good manners

Jesse Batalha holds her son Bennett at their home in Rancho Mirage, California. While searching for a new nanny, Batalha began using Predictim, an online service that claims to use "advanced artificial intelligence" to assess the risk that a nanny will abuse drugs, behave aggressively or show "bad manners."

When Jesse Batalha began looking for a new nanny for her one-year-old son, she wanted more information about the candidate than a clean criminal record, references from other parents and a face-to-face interview could provide. So she turned to the online service Predictim, which uses “advanced artificial intelligence” to assess a nanny’s personality, and had it scan thousands of Facebook, Twitter and Instagram posts by one of the candidates.

The system produced an automated “risk assessment” of the 24-year-old woman, stating that the risk of her abusing drugs was “very low”. However, it rated the risk of bullying, aggression, disrespect and bad manners slightly higher: 2 out of 5.

The system does not explain its decisions. Yet Batalha, who had considered the nanny entirely trustworthy, suddenly began to have doubts. “Social media shows who a person really is,” said Batalha, 29, who lives in a suburb of Los Angeles. “Why did the score turn out to be 2, not 1?”


Predictim offers parents the same kind of service that dozens of other technology companies around the world sell to employers: AI systems that analyze a person's speech, facial expressions and online history, promising to reveal hidden aspects of their private lives.

The technology is changing how some companies recruit, hire and evaluate employees, offering employers an unprecedented view of candidates through a new wave of invasive psychological assessment and surveillance.

The tech company Fama says it uses AI to monitor employees' social media for “toxic behavior” and alert their bosses. HireVue, a recruitment technology company that works with firms such as Geico, Hilton and Unilever, offers a system that automatically analyzes a candidate’s tone, word choice and facial expressions during a video interview and predicts their skills and demeanor (for better results, candidates are advised to smile more).

Critics, however, argue that systems like Predictim carry dangers of their own, making automated decisions that can change a person’s life without any oversight.

These systems depend on black-box algorithms that reveal almost nothing about how they reduce the complexities of a person’s inner life to a tally of pluses and minuses. And although Predictim's technology influences parents' thinking, it remains entirely unproven, largely unexplained and vulnerable to biases about how a suitable nanny should behave on social media, look and speak.

There is “an insane race to seize the power of AI and make all sorts of decisions without any accountability to people,” said Jeff Chester, executive director of the Center for Digital Democracy, a technology advocacy group. “The impression is that people got drunk on digital soda, and they think that this is a normal way to govern our lives.”

Predictim's scan analyzes a nanny's entire social media history, which for many of the youngest nannies can cover most of their lives. At the same time, nannies are told that refusing the scan will put them at a serious disadvantage in the competition for jobs.

Sal Parsa, Predictim's chief executive and co-founder, said that the company, which launched last month as part of the SkyDeck tech incubator at the University of California at Berkeley, takes ethical questions about its technology seriously. He said that parents should treat the rating as an advisor that “may or may not reflect the real characteristics of the nanny.”

He added that the danger of hiring a troubled or violent nanny makes AI an indispensable tool for any parent trying to keep their child safe.

“Search Google for child abuse cases and you’ll find hundreds of results,” he said. “There are people who are mentally ill or just plain angry. Our goal is to do everything in our power to stop them.”

A Predictim scan starts at $24.99 and requires the prospective nanny's name and e-mail address, as well as her consent to broad access to her social media accounts. The nanny can refuse, in which case the parents are notified and the nanny herself receives a message stating that "the interested parent cannot hire you until you comply with the request."

Predictim's executives say they use language-processing and image-processing (“computer vision”) algorithms to evaluate a nanny's posts on Facebook, Twitter and Instagram, looking for clues about her offline life. Only the parent receives the report, and the parent is not obliged to share the results with the nanny.

Parents could, of course, look through a nanny's public social media accounts themselves. But the computer-generated reports promise an in-depth assessment of years of online activity, boiled down to a single number: a temptingly simple solution to an almost impossible task.

The risk ratings fall into several categories, including explicit content and drug abuse. The startup also claims in its advertising that the system can evaluate other aspects of a nanny’s personality, such as politeness, ability to work with others and “positivity”.

The company hopes to upend the multibillion-dollar industry of “outsourcing parental responsibilities” and has already begun advertising by sponsoring parenting blogs and websites for moms. Its marketing focuses on the promised ability to uncover secrets and prevent “every parent's nightmare”, and its ads cite criminal cases, including that of a Kentucky nanny convicted of seriously injuring an eight-month-old child.

“If the parents of the little girl injured by this nanny had been able to use Predictim when vetting the candidate,” the company’s marketing statement says, “they would never have left her alone with their precious child.”

However, technology experts say the system raises red flags of its own: it plays on parents' fears in order to sell personality scans of unverified accuracy.

They also question how such systems are trained and how vulnerable they are to mistakes arising from the ambiguities in how nannies use social media. Parents receive only a warning about questionable behavior, with no specific phrases, links or other details on which to base their decision.

When one nanny's scan flagged possible bullying on her part, the unnerved mother who had requested the assessment said she could not find out whether the program had picked up a quote from an old film, a song lyric or some other phrase, or language that was genuinely dangerous.

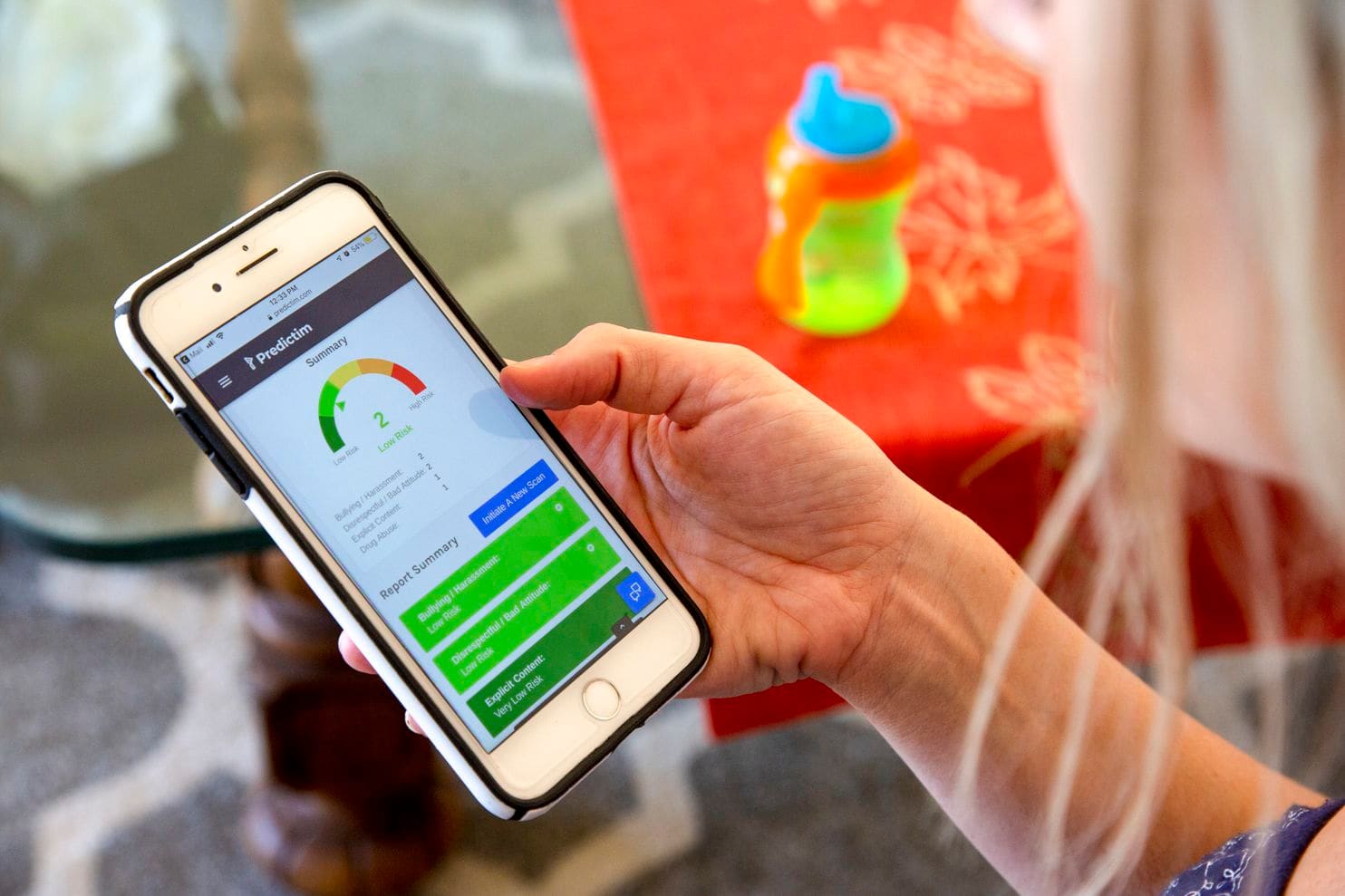

Jesse's phone shows the results from the Predictim app

Jamie Williams, an attorney at the Electronic Frontier Foundation, a civil rights advocacy group, said that most of the algorithms used today to assess the meaning of words and photographs are known to lack human context and common sense. Even tech giants like Facebook have struggled to build algorithms that can tell harmless comments from hateful ones.

“How can you use this system to evaluate teenagers? They're kids!” said Williams. “Kids have their own inside jokes, and they're famously sarcastic. What looks like ‘bad manners’ to the algorithm may look to a person like a political statement or legitimate criticism.”

And when the system gets it wrong, say by reporting that a nanny abuses drugs when she does not, parents will have no way of learning about the error. The precise-looking ratings and the system's claims of accuracy can lead parents to expect far more accuracy and authority from it than a human in its place could promise. As a result, parents will tend to hire nannies they would otherwise have avoided, or turn away from people who have already earned their trust.

“There is no metric that could tell us exactly whether these methods are as effective in their predictions as their creators say,” said Miranda Bogen, principal analyst at Upturn, a Washington think tank that studies the use of algorithms in automated decision-making and judicial decisions. “The appeal of these technologies is very likely outpacing their real capabilities.”

Malissa Nielsen, a 24-year-old nanny who works for Batalha, recently gave two other families access to her social media accounts for a Predictim evaluation. She said she has always been careful on social media and decided she could share the additional information without harming herself. She goes to church every week, does not swear and is finishing her degree in early childhood education, after which she hopes to open a kindergarten.

However, she was stunned to learn that the system had given her less-than-perfect marks for disrespect and bullying. She had believed she was allowing the parents to look over her social media presence, not allowing an algorithm to critically analyze her personality. Nor was she told the results of a test that could potentially damage her only source of income.

“I would like to look into this a bit. Why did the program think that about me?” said Nielsen. “A computer has no feelings, it cannot determine anything like that.”

Americans remain distrustful of algorithms whose decisions may affect their daily lives. In a Pew Research Center survey released this month, 57% of respondents rated the automated screening of job candidates' resumes as "unacceptable."

Nevertheless, Predictim says it is preparing to expand nationwide. Executives at Sittercity, an online babysitter-matching service used by millions of parents, say they are launching a pilot program that will add Predictim's automated ratings to the service's existing set of automated ratings and checks.

“Searching for a nanny is fraught with uncertainty,” said Sandra Dynor, head of product at Sittercity, who believes such tools could soon become standard in online searches for caregivers. “Parents are constantly looking for the best solution, the most thorough research and the most reliable facts.”

Predictim's executives also believe they can significantly expand the system's capabilities and offer even more personal assessments of a nanny’s private life. Joel Simonov, the company's technology director, said the team is interested in obtaining "useful psychometric data" by analyzing nannies' social media activity and running their histories through a personality test, such as the Myers-Briggs typology, in order to give the results to parents.

Predictim's social media mining and its interest in mass psychological analysis resemble the ambitions of Cambridge Analytica, the political consulting firm that worked for Trump's election campaign and then drew Facebook into a global personal data scandal. However, Predictim's leaders say they have put internal safeguards in place and are working to protect nannies' personal data. "If we had a leak of nannies' data, that would not be cool," Simonov said.

Experts worry that AI-based rating systems portend a future in which getting any job, not only one related to childcare, will depend on a machine. Many hiring and recruiting companies are already building or investing in systems that can analyze candidates' resumes at scale and issue automated candidate ratings. Similar AI systems, including one from Jigsaw, a tech incubator created by Google, are used to monitor online comments for harassment, threats and cruelty.

However, it has been shown more than once that hiring algorithms harbor flaws that can ruin a person’s career. Amazon.com stopped developing a hiring algorithm after learning that it unfairly downgraded women: the company’s hiring history, which followed the tech industry’s hiring trends, had taught the system to prefer male candidates. The company said it never used the program to evaluate candidates.

Some AI experts believe such systems could seriously amplify biases related to age or race, for example by flagging the words or photographs of certain groups of people more often than those of others. They also worry that Predictim will force young nannies to hand over their personal data just to get a job.

However, Diane Werner, a mother of two who lives in a suburb of San Francisco, believes that babysitters should voluntarily share personal information to help reassure parents. “A background check is a good thing, but Predictim goes into depth, dissecting the person: their social and intellectual status,” she said. Where she lives, “100% of parents will want to use such a service,” she added. “We all want to get the perfect nanny.”

Source: https://habr.com/ru/post/433234/
